这篇是第6章,并发。

go中的concurrency是什么?

A:

Concurrency in Go is the ability for functions to run independent of each other. When a function is created as a goroutine, it’s treated as an independent unit of work that gets scheduled and then executed on an available logical processor.

go语言中的Concurrency就是指函数能够独立于彼此运行的能力。当一个函数被创建成一个goroutine,其就被当成了一个独立的工作单元,并被调度到空闲的逻辑处理器上执行。

什么是CSP?

A: CSP,就是communication sequence processes,是一种消息传递机制,即通过消息传递去同步对资源的访问,而不是锁定资源。

并发和并行

简单来说,并发就是看起来像是同时执行的,并行就是真的是同时执行的。后者靠的是多个cpu核同时工作,前者可以是单个cpu核切换进行多个任务。

进程和线程

关于进程:

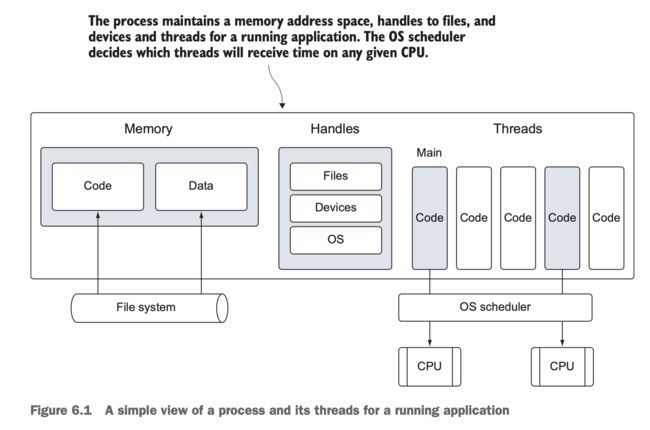

When you run an application, such as an IDE or editor, the operating system starts a process for the application. You can think of a process like a container that holds all the resources an application uses and maintains as it runs.These resources include but are not limited to a memory address space, handles to files, devices, and threads.

当你执行一个应用的时候,比如IDE或者编辑器,操作系统会为该应用创建一个进程。你可以认为进程是一个包含了所有该应用运行时使用的资源的容器。这些资源包括了内存地址空间、文件句柄、设备及线程等。

关于线程:

A thread is a path of execution that’s scheduled by the operating system to run the code that you write in your functions.

一个线程是经过操作系统调度的一系列指令,这些指令执行了你写在函数中的那些代码。

Each process contains at least one thread, and the initial thread for each process is called the main thread. When the main thread terminates, the application terminates, because this path of the execution is the origin for the application

每个进程至少包含一个线程,每个进程的第一个线程叫做主线程(main thread)。当主线程结束的时候,应用也就结束了,因为主线程是应用的这一些列指令的源头。

The operating system schedules threads to run against processors regardless of the process they belong to.

操作系统将多个不同进程的线程调度到不同的处理器中。注意,其调度的单位是线程不是进程。

看两个图:

上图表示:

- 一个进程包含了Memory,Handles,Threads

- Thread就是一段代码

- 一段代码被OS scheduler调度到不同的cpu上执行

几点:

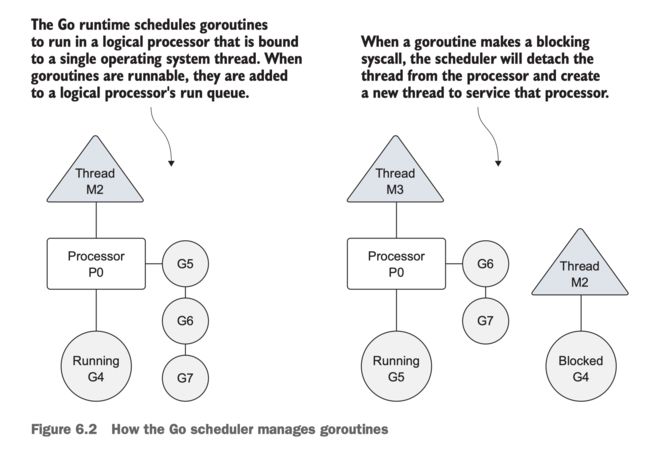

- 当goroutines已经创建好了准备执行的时候,就会被go的runtime的全局执行队列(global run queue)

- 之后,goroutines会被分配一个逻辑处理器(logic processor),并进入该逻辑处理器的本地执行队列(local run queue)

- 然后,goroutine等待自己被该逻辑处理器执行

- 逻辑处理器执行goroutine的时候会创建一个操作系统线程

- 当一个运行中的goroutine执行了一个阻塞式系统调用时(blocking syscall),比如打开文件,goroutine以及正在运行的线程就会同逻辑处理器分离(detach),然后该线程继续阻塞,等待系统调用返回

- 此时,该逻辑处理器就没有一个对应的线程了,go调度器就会创建一个新的线程给该逻辑处理器

- 然后调度器从该逻辑处理器的本地执行队列中选一个goroutine放到该线程中执行

- 当系统调用返回时,等待的goroutine就被放入到本地运行队列,然后之前的线程被留作未来使用

If a goroutine needs to make a network I/O call, the process is a bit different. In this case, the goroutine is detached from the logical processor and moved to the runtime integrated network poller. Once the poller indicates a read or write operation is ready, the goroutine is assigned back to a logical processor to handle the operation.

当一个goroutine需要执行一个网络I/O时,流程有些不同。goroutine首先同逻辑处理器分离,然后进行runtime integrated network poller。当那个poller表示读或者写准备好时,goroutine才会被放回逻辑处理器去处理该操作。

go的运行时并没有限制逻辑处理器的个数,但是默认设置了最大线程数为10000。

注意,logic processor并非指操作系统的逻辑处理器,而是go运行时中的逻辑处理器。

Goroutines

先看一段代码:

pacakge main

imort (

"fmt"

"runtime"

"sync"

)

func main() {

runtime.GOMACPROCS(1) // 设置logic processor的数量

var wg sync.WaitGroup

wg.Add(2)

fmt.Println("Start go routines")

go func() {

defer wg.Done()

for count := 0; count < 3; count++ {

for char := 'a'; char < 'a' + 26; char++ {

fmt.Printf("%c ", char)

}

}

}()

go func() {

defer wg.Done()

for count := 0; count < 3; count++ {

for char := 'A'; char < 'A' + 26; char++ {

fmt.Printf("%c ", char)

}

}

}()

fmt.Println("Waiting to Finish")

wg.Wait()

fmt.Println("\n Terminating Program")

}

程序的输出结果是首先打印了大写字母,然后打印了小写字母。由于工作量小,因此没有出现交替执行的情况。

waitGroup的行为是,通过Add函数设置一个数值,通过Done对该数值减一,Wait()函数会阻塞直到该数值被减为0.

defer即其后的函数会在其中的函数返回时执行。

Based on the internal algorithms of the scheduler, a running goroutine can be stopped and rescheduled to run again before it finishes its work. The scheduler does this to prevent any single goroutine from holding the logical processor hostage. It will stop the currently running goroutine and give another runnable goroutine a chance to run.

根据调度器的算法,一个goroutine会被暂停,然后执行另一个goroutine,然后再重新执行之前那个goroutine,这样做是为了避免一个goroutine一直占用logic processor,调度器会给另一个goroutine执行的机会。(避免被饿死)。

It’s important to note that using more than one logical processor doesn’t necessarily mean better performance. Benchmarking is required to understand how your program performs when changing any runtime configuration parameters.

使用大于一个的logical process并不一定意味着更好的性能.Bechmarking才是判断程序性能的正确打开方式。

Race Condition

Race condition,竞争态的场景。

首先来看看定义:

When two or more goroutines have unsynchronized access to a shared resource and attempt to read and write to that resource at the same time, you have what’s called a race condition.

当两个或以上的多个goroutines对一个共享资源在同一时间进行未经同步的读或者写,此时我们成为race condition。

几个关键点:

- 两个及以上

- 同一个共享资源

- 同时读或者写

- 未进行同步

来看个例子:

package main

import (

"fmt"

"runtime"

"sync"

)

var (

counter int

wg sync.WaitGroup

)

func main() {

wg.Add(2)

go incCounter(2)

go incCounter(2)

wg.Wait()

fmt.Println("Final Counter: ", connter)

}

func incCounter(val int) {

defer wg.Done()

for count := 0; count < val; count++ {

value := counter

// Yield the thread and be placed back in queue

runtime.Gosched()

value++

counter = value

}

}

首先我们来对一下race condition的条件:

- 两个或多个go routine。这里是有连个

- 共享资源。这里就是counter

- 同一时间读写。incCounter函数里面读取了counter,然后写入了counter

- 没有任何同步措施

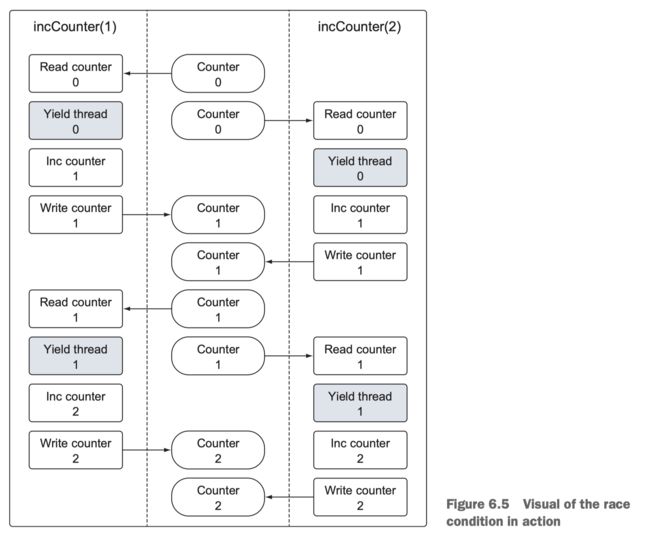

整个代码对counter增加了4次,但是结果确不一定是4。原因是:

Each goroutine overwrites the work of the other. This happens when the goroutine swap is taking place. Each goroutine makes its own copy of the counter variable and then is swapped out for the other goroutine. When the goroutine is given time to execute again, the value of the counter variable has changed, but the goroutine doesn’t update its copy. Instead it continues to increment the copy it has and set the value back to the counter variable, replacing the work the other goroutine performed.

简单来说,value = counter这句话会把counter的值赋给value,如果此时发生了swap,即当前goroutine被放入队列,开始执行另一个goroutine,那么另外的goroutine里面的value的值也是counter,然后对counter进行了增加。此时之前那个goroutine swap回来继续执行,还是从最初的counter的值进行增加,并赋值。此时也就覆盖了goroutine的现有值。

关键点是:

- 每个goroutine都持有了一个counter的副本,此时是value

- 然后goroutine里使用value对counter进行了赋值。后执行的goroutine或覆盖先前执行的那个goroutine给counter赋的值

看个图:

golang具有检测race condition的工具

使用go build -race可以检测代码中的race condition

race condition应该怎么处理呢?

两种方式吧:

- lock。即锁。通过锁让多个goroutine对资源进行顺序访问

- channel机制。

锁

先看一下上面的代码,使用锁的情况:

import "sync/atomic"

func incCounter(id int) {

defer wg.Done()

for count := 0; count < id; count++ {

atomic.AddInt64(&counter, 1)

runtime.Gosched()

}

}

上述代码同之前一样的地方就省略了。不一样的是:

- 引入了sync/atomic库

- 对counter进行修改的地方使用了

atomic.AddInt64方法。该方法保证了对counter改变的原子性。

mutex

先来看看什么是mutex:

Another way to synchronize access to a shared resource is by using a mutex. A mutex is named after the concept of mutual exclusion. A mutex is used to create a critical section around code that ensures only one goroutine at a time can execute that code section

mutex是一种对共享资源做同步访问的方式。其全称为"mutual exclusion"。mutex用于创建一个critical section,该section里面的代码在同一时间仅能被一个goroutine执行。

package main

import (

"fmt"

"runtime"

"sync"

)

var (

counter int

wg sync.WaitGroup

mutex sync.Mutex

)

func main() {

wg.Add(2)

go incCounter(1)

go incCounter(2)

wg.Wait()

fmt.Printf("Final Counter: %d\n", counter

}

func incCounter(id int) {

defer wg.Done()

for count := 0; count < id; count++ {

mutex.Lock()

{

value := counter

runtiem.Gosched()

value++

counter = value

}

mutex.Unlock()

}

}

简单来说就是使用mutex的两个方法Lock和Unlock对一段代码进行了加锁和解锁操作。

注意大括号不是必须的。

channels

channel,可以认为就是一根管道,里面有数据。可以往管道里面发送数据,也可以从管道里面获取数据。

看看channel的创建和使用:

unbufferd := make(chan int)

bufferd := make(chan string, 10)

channel有两种,一种是buffered,另一种是unbufferd。

使用make进行创建。传入channel里的数据的类型。

往channel传入值:

bufferd := make(chan string, 10)

bufferd <- "Gopher"

这里使用<-传入值。

从channel里接收值:

value := <- bufferd

注意使用了两个操作符::=和<-。第二个是在bufferd这个channel的左边。

所以<-出现在channel的左边是读取,出现在右边是传入。

unbufferd channel

先来看一下定义:

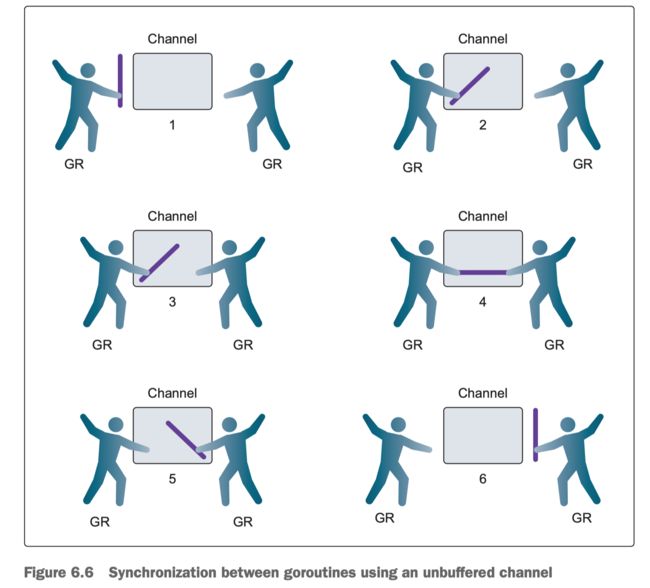

An unbuffered channel is a channel with no capacity to hold any value before it’s received. These types of channels require both a sending and receiving goroutine to be ready at the same instant before any send or receive operation can complete. If the two goroutines aren’t ready at the same instant, the channel makes the goroutine that performs its respective send or receive operation first wait.

简单来说,unbufferd channel就是一个没有任何容量(capacity)的channel。只有接收和发送的两个goroutine在同一时间都准备好时,接收和发送操作才能完成。否则准备好的一方将等待另一方准备好。

看图说话:

1,两个goroutine准备通过channel通信

2,左边的往channel里面发送了一个数据。此时该goroutine被锁住,直到数据交换完成

3,右边的goroutine发起接收操作。该goroutien被锁住,直到数据交换完成

4,开始数据交换

5,数据交换完成

6,两个goroutine的锁被释放

所以,上图里面,谁的手伸进去了表示,发起了发送或者读取操作,然后就被lock了。

bufferd channel

先看解释:

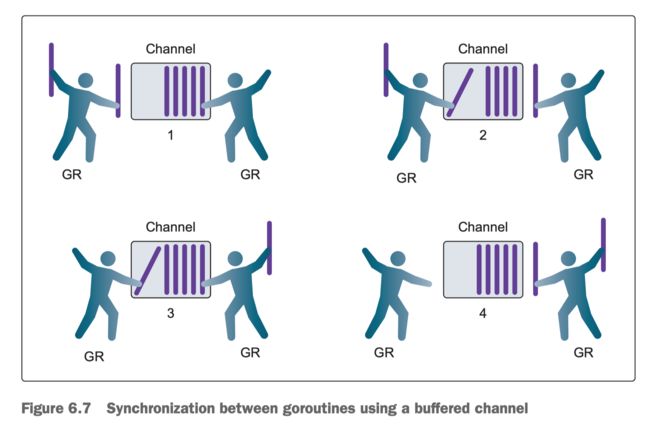

A buffered channel is a channel with capacity to hold one or more values before they’re received. These types of channels don’t force goroutines to be ready at the same instant to perform sends and receives. There are also different conditions for when a send or receive does block. A receive will block only if there’s no value in the channel to receive. A send will block only if there’s no available buffer to place the value being sent.

简单来说:

- bufferd channel就是具备容量(capacity)的channel

- 并不要求接收方和发送方在同一时间ready

- 当channel里面没有值的时候,接收方阻塞(block)

- 当channel里面没有空间的时候,发送方阻塞(block)

看图:

对于bufferd channel:

When a channel is closed, goroutines can still perform receives on the channel but can no longer send on the channel.

当channel被关闭后,接收方依然可以从channel中读取数据,但发送方不能往channel里面发送数据了。

两者的区别

原文:

This leads to the one big difference between unbuffered and buffered channels: An unbuffered channel provides a guarantee that an exchange between two goroutines is performed at the instant the send and receive take place. A buffered channel has no such guarantee.

即unbufferd channel要求发送方和接收方同时ready,而bufferd channel没有这个要求。