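AI Practice with TensorFlow 2.0, Chapter 5 (Part 2): Building the LeNet5 Network with the Template Method (Peking University MOOC)

Chapter 5: Convolutional Neural Network Basics: Building LeNet5 with the Template Method

Goal of this lecture:

Introduce the workflow for building the LeNet5 network with the template method. See the accompanying course video.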

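Convolutional Neural Network Basics

- 1. Introduction to LeNet5
  - 1.1 Network analysis
  - 1.2 Building the convolutional layers
- 2. Training LeNet5 with the six-step method
  - 2.1 Review of the six-step method
  - 2.2 Complete code
  - 2.3 Output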

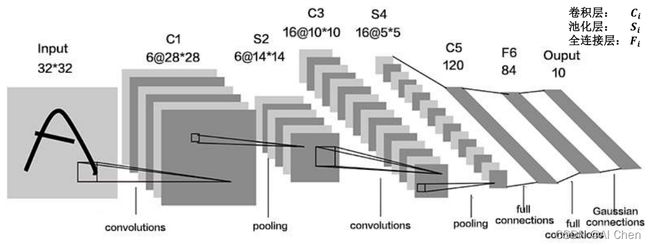

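1. Introduction to LeNet5

Key takeaway: shared convolution kernels reduce the number of network parameters. Because the same kernel weights are reused at every position of the input, the network avoids the huge parameter counts of fully connected layers.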
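To make the saving concrete, here is a back-of-the-envelope count. It is a sketch assuming a 28x28x1 MNIST input and the C1 layer described in 1.1 (6 kernels of 5x5, stride 1, valid padding); the "equivalent Dense" layer is a hypothetical fully connected layer that connects every input pixel to every one of the same 24x24x6 output values. The helper names are illustrative, not part of any library.

```python
def conv_params(kernel_h, kernel_w, in_channels, filters):
    """Conv2D weights: one shared kernel per filter plus one bias per filter."""
    return kernel_h * kernel_w * in_channels * filters + filters


def dense_params(in_units, out_units):
    """Dense weights: a full in x out matrix plus one bias per output unit."""
    return in_units * out_units + out_units


# Assumed input: one 28x28 grayscale image (MNIST)
in_h, in_w, in_c = 28, 28, 1
k, filters = 5, 6
# Valid padding, stride 1: each spatial side shrinks by k - 1
out_h, out_w = in_h - k + 1, in_w - k + 1

conv = conv_params(k, k, in_c, filters)
dense = dense_params(in_h * in_w * in_c, out_h * out_w * filters)

print(f"output shape: {out_h}x{out_w}x{filters}")  # 24x24x6
print(f"Conv2D parameters: {conv}")                 # 156
print(f"equivalent Dense parameters: {dense}")      # 2712960
```

Both layers produce a 24x24x6 output, but the convolution needs more than 17,000 times fewer parameters because its 5x5 kernels are shared across all positions.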

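LeNet was proposed by Yann LeCun in 1998 and is the founding work of convolutional networks. After LeNet-5 was published, convolutional neural networks were successfully deployed commercially and widely used for tasks such as recognizing postal codes and check numbers.

This section uses the LeNet network to recognize handwritten digits from the MNIST dataset.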

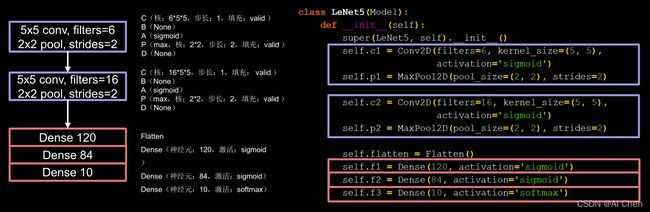

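1.1 Network analysis

When counting the layers of a convolutional neural network, usually only the convolutional layers and the fully connected layers are counted; the other operations are treated as attachments of the convolutional layers.

LeNet therefore has 5 layers (see the figure): two convolutional layers (C1 and C2, the first two layers) and three fully connected layers (D1, D2 and D3, the last three Dense layers).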

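The convolutional part is the feature extractor, described as CBAPD: Convolution, Batch normalization, Activation, Pooling, Dropout. "None" means that step is not used.

Feature extractor (convolutional layers):

C1:
- C (kernels: 6x5x5, stride: 1, padding: valid)
- B (None)
- A (sigmoid)
- P (max, kernel: 2x2, stride: 2, padding: valid)
- D (None)

C2:
- C (kernels: 16x5x5, stride: 1, padding: valid)
- B (None)
- A (sigmoid)
- P (max, kernel: 2x2, stride: 2, padding: valid)
- D (None)

Classifier (fully connected layers):

- D1: Dense (neurons: 120, activation: sigmoid)
- D2: Dense (neurons: 84, activation: sigmoid)
- D3: Dense (neurons: 10, activation: softmax)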
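A quick way to check this spec is to trace the tensor shape through the feature extractor. The sketch below uses plain Python, assumes the 28x28x1 MNIST input loaded later in this section, and uses hypothetical helper names; the flattened length it ends with is the input size of D1.

```python
def valid_out(size, kernel, stride):
    """Output side length with 'valid' padding: floor((size - kernel) / stride) + 1."""
    return (size - kernel) // stride + 1


def lenet5_shapes(h, w, c):
    """Trace (height, width, channels) through C1 -> P1 -> C2 -> P2 -> flatten."""
    shapes = [("input", (h, w, c))]
    for name, filters in (("C1", 6), ("C2", 16)):
        # 5x5 convolution, stride 1, valid padding
        h, w, c = valid_out(h, 5, 1), valid_out(w, 5, 1), filters
        shapes.append((name, (h, w, c)))
        # 2x2 max pooling, stride 2, valid padding (channels unchanged)
        h, w = valid_out(h, 2, 2), valid_out(w, 2, 2)
        shapes.append((name.replace("C", "P"), (h, w, c)))
    shapes.append(("flatten", (h * w * c,)))
    return shapes


for name, shape in lenet5_shapes(28, 28, 1):
    print(name, shape)
```

For the MNIST input the shape goes 28x28x1 -> 24x24x6 -> 12x12x6 -> 8x8x16 -> 4x4x16, so D1 receives 256 features. Calling `lenet5_shapes(32, 32, 3)` instead ends with 5x5x16 = 400 features.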

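1.2 Building the convolutional layers

In the figure above, the purple part is the convolutional layers (the CBAPD feature extractor) and the red part is the fully connected layers (the classifier). Overall, LeNet5 is a simple network. Its innovation was to solve handwritten digit recognition with the now-classic pipeline "convolutional feature extractor -> fully connected classifier", which was an important step in the development of neural network research.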

2. Training LeNet5 with the six-step method

2.1 Review of the six-step method

1. import
2. train, test
3. model = tf.keras.Sequential() / class
4. model.compile
5. model.fit
6. model.summary
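Step 3 allows either `tf.keras.Sequential` or a `Model` subclass, and the complete code in 2.2 uses a subclass. For comparison, here is a minimal sketch of the same layer stack written with `tf.keras.Sequential`, assuming a 28x28x1 MNIST input declared through `input_shape` on the first layer (the subclass version infers the input shape on its first call instead).

```python
import tensorflow as tf
from tensorflow.keras.layers import Conv2D, Dense, Flatten, MaxPool2D

# Same layers as the LeNet5 class in 2.2, expressed as a Sequential stack.
# Assumption: MNIST-shaped input (28x28 grayscale), declared on the first layer.
model = tf.keras.Sequential([
    Conv2D(filters=6, kernel_size=(5, 5), activation='sigmoid', input_shape=(28, 28, 1)),  # C1
    MaxPool2D(pool_size=(2, 2), strides=2),                                                # P1
    Conv2D(filters=16, kernel_size=(5, 5), activation='sigmoid'),                          # C2
    MaxPool2D(pool_size=(2, 2), strides=2),                                                # P2
    Flatten(),
    Dense(120, activation='sigmoid'),  # D1
    Dense(84, activation='sigmoid'),   # D2
    Dense(10, activation='softmax'),   # D3
])

model.compile(
    optimizer='adam',
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
    metrics=['sparse_categorical_accuracy'],
)
# The input shape is known up front, so summary() lists concrete output shapes
# instead of "multiple" and can be called before fit().
model.summary()
```

Training then proceeds exactly as in steps 5 and 6, e.g. `model.fit(x_train, y_train, batch_size=32, epochs=5, ...)` on the reshaped 28x28x1 data.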

2.2 Complete code

```python
# Six-step method, step 1 -> import
import os

import matplotlib.pyplot as plt
import tensorflow as tf
from tensorflow.keras import Model, datasets
from tensorflow.keras.layers import Conv2D, BatchNormalization, Activation, MaxPool2D, Dropout, Dense, Flatten

# Six-step method, step 2 -> train, test
(x_train, y_train), (x_test, y_test) = datasets.mnist.load_data()
# Scale pixel values from 0-255 to 0-1
x_train, x_test = x_train / 255., x_test / 255.
print(x_train.shape)  # (60000, 28, 28)
print(x_test.shape)   # (10000, 28, 28)
# Conv2D expects a channel axis: (batch, height, width, channels)
x_train = x_train.reshape(-1, 28, 28, 1)
x_test = x_test.reshape(-1, 28, 28, 1)
print(x_train.shape)  # (60000, 28, 28, 1)
print(x_test.shape)   # (10000, 28, 28, 1)


# Six-step method, step 3 -> model = tf.keras.Sequential() / class
class LeNet5(Model):
    def __init__(self):
        super(LeNet5, self).__init__()
        # C1: 6 kernels of 5x5, stride 1, valid padding, sigmoid activation
        self.c1 = Conv2D(filters=6, kernel_size=(5, 5), activation='sigmoid')
        # P1: 2x2 max pooling, stride 2
        self.p1 = MaxPool2D(pool_size=(2, 2), strides=2)
        # C2: 16 kernels of 5x5, then P2
        self.c2 = Conv2D(filters=16, kernel_size=(5, 5), activation='sigmoid')
        self.p2 = MaxPool2D(pool_size=(2, 2), strides=2)
        # Classifier: flatten the feature maps, then D1, D2, D3
        self.flatten = Flatten()
        self.f1 = Dense(120, activation='sigmoid')
        self.f2 = Dense(84, activation='sigmoid')
        self.f3 = Dense(10, activation='softmax')

    def call(self, inputs):
        x = self.c1(inputs)
        x = self.p1(x)
        x = self.c2(x)
        x = self.p2(x)
        x = self.flatten(x)
        x = self.f1(x)
        x = self.f2(x)
        y = self.f3(x)
        return y


model = LeNet5()

# Six-step method, step 4 -> model.compile
model.compile(
    optimizer='adam',
    # The last layer already applies softmax, so the outputs are probabilities, not logits
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
    metrics=['sparse_categorical_accuracy']
)

# Resume from previously saved weights if a checkpoint exists
checkpoint_save_path = './checkpoint/mnist.ckpt'
if os.path.exists(checkpoint_save_path + '.index'):
    print('load the weights')
    model.load_weights(checkpoint_save_path)

# Save only the weights, and only when the monitored value (val_loss by default) improves
cp_callback = tf.keras.callbacks.ModelCheckpoint(
    filepath=checkpoint_save_path,
    save_weights_only=True,
    save_best_only=True
)

# Six-step method, step 5 -> model.fit
history = model.fit(
    x_train, y_train,
    batch_size=32, epochs=5,
    validation_data=(x_test, y_test),
    validation_freq=1,
    callbacks=[cp_callback]
)

# Six-step method, step 6 -> model.summary
model.summary()

# Write the name, shape and values of every trainable variable to a text file
with open('./weight.txt', 'w') as file:
    for v in model.trainable_variables:
        file.write(str(v.name) + '\n')
        file.write(str(v.shape) + '\n')
        file.write(str(v.numpy()) + '\n')
```

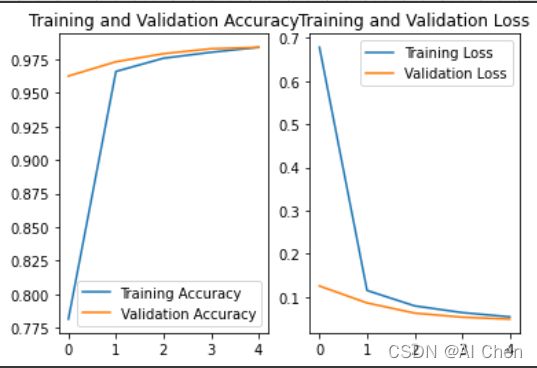

```python
# Plot accuracy and loss curves for the training and validation sets
acc = history.history['sparse_categorical_accuracy']
val_acc = history.history['val_sparse_categorical_accuracy']
loss = history.history['loss']
val_loss = history.history['val_loss']

# Left panel: accuracy per epoch
plt.subplot(121)
plt.plot(acc, label='Training Accuracy')
plt.plot(val_acc, label='Validation Accuracy')
plt.title('Training and Validation Accuracy')
plt.legend()

# Right panel: loss per epoch
plt.subplot(122)
plt.plot(loss, label='Training Loss')
plt.plot(val_loss, label='Validation Loss')
plt.title('Training and Validation Loss')
plt.legend()
plt.show()
```

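2.3 Output

Note: the log and summary below do not match the MNIST code above. At batch_size=32, 1563 steps per epoch means 50,000 training images, and the first Conv2D's 456 parameters (5x5x3x6 + 6) imply 3 input channels; both fit a 32x32x3 dataset such as CIFAR-10. Run on MNIST (60,000 images of 28x28x1), the same code takes 1875 steps per epoch and has 44,426 parameters in total.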

```text
Epoch 1/5
This message will be only logged once.
1563/1563 [==============================] - 6s 4ms/step - loss: 2.0373 - sparse_categorical_accuracy: 0.2286 - val_loss: 1.8006 - val_sparse_categorical_accuracy: 0.3408
Epoch 2/5
1563/1563 [==============================] - 3s 2ms/step - loss: 1.7326 - sparse_categorical_accuracy: 0.3612 - val_loss: 1.6614 - val_sparse_categorical_accuracy: 0.3838
Epoch 3/5
1563/1563 [==============================] - 3s 2ms/step - loss: 1.6291 - sparse_categorical_accuracy: 0.3993 - val_loss: 1.5625 - val_sparse_categorical_accuracy: 0.4165
Epoch 4/5
1563/1563 [==============================] - 3s 2ms/step - loss: 1.5444 - sparse_categorical_accuracy: 0.4314 - val_loss: 1.5026 - val_sparse_categorical_accuracy: 0.4473
Epoch 5/5
1563/1563 [==============================] - 3s 2ms/step - loss: 1.4757 - sparse_categorical_accuracy: 0.4602 - val_loss: 1.4320 - val_sparse_categorical_accuracy: 0.4782
```

```text
Model: "le_net5"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d (Conv2D)              multiple                  456
_________________________________________________________________
max_pooling2d (MaxPooling2D) multiple                  0
_________________________________________________________________
conv2d_1 (Conv2D)            multiple                  2416
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 multiple                  0
_________________________________________________________________
flatten (Flatten)            multiple                  0
_________________________________________________________________
dense (Dense)                multiple                  48120
_________________________________________________________________
dense_1 (Dense)              multiple                  10164
_________________________________________________________________
dense_2 (Dense)              multiple                  850
=================================================================
Total params: 62,006
Trainable params: 62,006
Non-trainable params: 0
_________________________________________________________________
```
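The Param # column can be reproduced by hand: a Conv2D layer has k x k x in_channels x filters weights plus one bias per filter, and a Dense layer has in x out weights plus one bias per output. The sketch below assumes the layer sizes of the LeNet5 class in 2.2 and compares two input shapes, since only the first convolution and the first Dense layer depend on the input. The helper names are hypothetical.

```python
import math


def conv_params(k, in_c, filters):
    # one k x k kernel per (input channel, filter) pair, plus one bias per filter
    return k * k * in_c * filters + filters


def dense_params(n_in, n_out):
    # full weight matrix plus one bias per output unit
    return n_in * n_out + n_out


def lenet5_param_counts(in_c, flat_len):
    """Per-layer trainable parameters of the LeNet5 class, keyed by Keras layer name."""
    return {
        "conv2d": conv_params(5, in_c, 6),
        "conv2d_1": conv_params(5, 6, 16),
        "dense": dense_params(flat_len, 120),
        "dense_1": dense_params(120, 84),
        "dense_2": dense_params(84, 10),
    }


# 32x32x3 input -> 5x5x16 = 400 features after P2; 28x28x1 input -> 4x4x16 = 256
for label, in_c, flat_len in (("32x32x3", 3, 400), ("28x28x1 (MNIST)", 1, 256)):
    counts = lenet5_param_counts(in_c, flat_len)
    print(label, counts, "total:", sum(counts.values()))

# Steps per epoch at batch_size=32 (a final partial batch still counts as one step)
print(math.ceil(50_000 / 32), math.ceil(60_000 / 32))  # 1563 1875
```

With a 32x32x3 input the per-layer counts and the 62,006 total match the summary above; with the 28x28x1 MNIST input only conv2d (156) and dense (30,840) change, giving 44,426 in total.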