Machine Learning Assignment 2: Logistic Regression

Contents

- 1. Introduction

- 2. Preparing the Data

- 3. The sigmoid Function

- 4. Cost Function

- 5. Gradient Descent

- 6. Fitting the Parameters

- 7. Prediction and Validation on the Training Set

- 8. Finding the Decision Boundary

- 9. Regularized Logistic Regression

- 10. Feature Mapping

- 11. Regularized Cost

- 12. Regularized Gradient

- 13. Fitting the Regularized Parameters

- 14. Prediction after Regularization

- 15. Trying Different Values of λ (a constant)

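1. Introduction

- The task is to classify the datasets in ex2data1.txt and ex2data2.txt with logistic regression. ex2data1.txt contains students' historical scores on two exams and whether each student was admitted. ex2data2.txt contains two test results for microchips and whether each chip passed. The goal is to learn which students are admitted and which chips are good.

- This requires implementing the sigmoid function, the cost function, the gradient descent function, regularization, and feature mapping, and plotting the decision boundary.

Importing the packages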

import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns
from sklearn.metrics import classification_report  # evaluation report

# Plot style; plt.style.available lists the installed styles.
# Note: Matplotlib 3.6+ names this style 'seaborn-v0_8-dark-palette'.
plt.style.use('seaborn-dark-palette')

2. Preparing the Data

data = pd.read_csv('ex2data1.txt', names=['exam1', 'exam2', 'admitted'])

data.head()  # show the first five rows

| | exam1 | exam2 | admitted |

|---|---|---|---|

| 0 | 34.623660 | 78.024693 | 0 |

| 1 | 30.286711 | 43.894998 | 0 |

| 2 | 35.847409 | 72.902198 | 0 |

| 3 | 60.182599 | 86.308552 | 1 |

| 4 | 79.032736 | 75.344376 | 1 |

- A look at the summary statistics

data.describe()

| | exam1 | exam2 | admitted |

|---|---|---|---|

| count | 100.000000 | 100.000000 | 100.000000 |

| mean | 65.644274 | 66.221998 | 0.600000 |

| std | 19.458222 | 18.582783 | 0.492366 |

| min | 30.058822 | 30.603263 | 0.000000 |

| 25% | 50.919511 | 48.179205 | 0.000000 |

| 50% | 67.032988 | 67.682381 | 1.000000 |

| 75% | 80.212529 | 79.360605 | 1.000000 |

| max | 99.827858 | 98.869436 | 1.000000 |

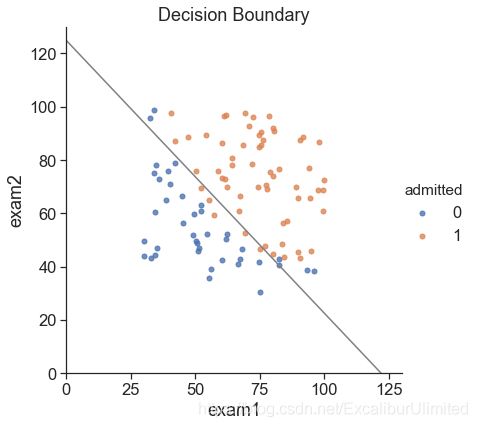

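sns.set(context="notebook", style="darkgrid")

# x and y are passed by keyword, which recent seaborn versions require
sns.lmplot(x='exam1', y='exam2', hue='admitted', data=data,  # hue colors the points by class
           height=6,
           fit_reg=False,
           scatter_kws={"s": 30}  # marker size of the scatter points
           )

plt.show()  # see what the data looks like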

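- Helper functions for reading the data

def get_X(df):  # read the features
    # Use concat to prepend the x_0 column of ones to the feature matrix X
    ones = pd.DataFrame({'ones': np.ones(len(df))})  # ones is an m x 1 DataFrame
    data = pd.concat([ones, df], axis=1)  # merge column-wise
    return data.iloc[:, :-1].values  # drop the label column and return an ndarray

def get_y(df):  # read the labels
    # The last column is the target value, i.e. the dependent variable
    return np.array(df.iloc[:, -1])  # df.iloc[:, -1] is the last column of df

def normalize_feature(df):
    # Feature scaling: bring every column to the same scale (zero mean, unit std)
    return df.apply(lambda column: (column - column.mean()) / column.std())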

X = get_X(data)

print(X.shape)

y = get_y(data)

print(y.shape)

(100, 3)

(100,)
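normalize_feature is defined above but never called in this write-up. As a minimal sketch of what it does, the block below applies it to a small made-up DataFrame (the values are illustrative, not taken from ex2data1.txt) and checks that each column ends up with mean 0 and standard deviation 1.

```python
import numpy as np
import pandas as pd

def normalize_feature(df):
    # z-score each column: subtract the column mean, divide by the column std
    return df.apply(lambda column: (column - column.mean()) / column.std())

# Toy scores, made up for illustration only
toy = pd.DataFrame({'exam1': [30.0, 60.0, 90.0], 'exam2': [40.0, 50.0, 90.0]})
scaled = normalize_feature(toy)

col_means = scaled.mean().to_numpy()  # each close to 0
col_stds = scaled.std().to_numpy()    # each close to 1 (pandas std uses ddof=1)
print(col_means, col_stds)
```

Scaling like this mainly matters for hand-written gradient descent, where features on very different scales slow convergence.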

3. The sigmoid Function

In classification, and binary classification in particular, the answer is black or white: we want to know whether the result is 0 or 1, and with what probability. The output of linear regression is unbounded, so it cannot be read as a probability, and classification problems are often nonlinear as well. The sigmoid function is a natural hypothesis for these needs: given a large positive number it returns a value close to 1, given a large negative number it returns a value close to 0, so any combination of features is squashed into a number between 0 and 1.

It is usually denoted $g$ and defined as:

$$g(z) = \frac{1}{1+e^{-z}}$$

Combined with the linear regression hypothesis, this gives the hypothesis of the logistic regression model:

$$h_\theta(x) = \frac{1}{1+e^{-\theta^T x}} = \frac{1}{1+e^{-(\theta_0 x_0+\theta_1 x_1+\theta_2 x_2+\dots+\theta_n x_n)}}$$

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

fig, ax = plt.subplots(figsize=(8, 6))

ax.plot(np.arange(-10, 10, step=0.01),

sigmoid(np.arange(-10, 10, step=0.01)))

ax.set_ylim((-0.1,1.1))

ax.set_xlabel('z', fontsize=18)

ax.set_ylabel('g(z)', fontsize=18)

ax.set_title('Sigmoid Function', fontsize=18)

plt.show()
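A few values make the shape of the curve concrete. The sketch below evaluates the same sigmoid at some arbitrary points and compares it with scipy.special.expit, SciPy's built-in logistic function. Using expit is a suggestion of this sketch, not something the original code does; it avoids the overflow warning that np.exp(-z) can raise for very negative z.

```python
import numpy as np
from scipy.special import expit  # SciPy's logistic sigmoid

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

z = np.array([-10.0, -1.0, 0.0, 1.0, 10.0])  # arbitrary sample points
g = sigmoid(z)
print(g)

same_as_expit = np.allclose(g, expit(z))
symmetric = np.allclose(sigmoid(-z), 1 - g)  # g(-z) = 1 - g(z)
```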

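4. Cost Function

The cost function (that is, the objective) of logistic regression cannot reuse the sum-of-squares objective of linear regression. Because the hypothesis now squeezes its output into the range 0 to 1, the squared-error cost becomes non-convex in $\theta$ and has local minima, so the algorithm may fail to converge or fail to reach the global optimum.

A different function is therefore needed, and its requirements follow from the task. Since this is classification: when the true label is 1, the cost should keep decreasing as $h_\theta(x)$ grows toward 1; when the true label is 0, the cost should keep increasing as $h_\theta(x)$ grows toward 1. Plotted against $h_\theta(x)$, the cost is a falling curve for $y=1$ and a rising curve for $y=0$.

What do curves like these bring to mind? Besides power functions, logarithms have this shape, and a little study shows that the function below meets the requirements exactly.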

The function can be defined as

$$\operatorname{cost}\left(h_\theta(x), y\right) = \begin{cases} -\log\left(h_\theta(x)\right) & \text{if } y=1 \\ -\log\left(1-h_\theta(x)\right) & \text{if } y=0 \end{cases}$$

which simplifies to:

$$\operatorname{cost}\left(h_\theta(x), y\right) = -y \log\left(h_\theta(x)\right) - (1-y) \log\left(1-h_\theta(x)\right)$$

Substituting $y=1$ and $y=0$ in turn confirms that this agrees with the piecewise definition.
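The check described above can also be done numerically. This is a small sketch with made-up probabilities and labels; the function names cost_piecewise and cost_combined are introduced here for illustration only.

```python
import numpy as np

def cost_piecewise(h, y):
    # -log(h) when y == 1, -log(1 - h) when y == 0
    return np.where(y == 1, -np.log(h), -np.log(1 - h))

def cost_combined(h, y):
    # single-expression form of the same cost
    return -y * np.log(h) - (1 - y) * np.log(1 - h)

h = np.array([0.1, 0.5, 0.9, 0.1, 0.5, 0.9])  # example predicted probabilities
y = np.array([1, 1, 1, 0, 0, 0])              # example labels
piecewise = cost_piecewise(h, y)
combined = cost_combined(h, y)
print(combined)
```

For the first three entries (y = 1) the cost falls as h rises; for the last three (y = 0) it rises with h, which is the behavior required of the cost.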

This gives the new cost function $J(\theta)$:

$$\begin{aligned} J(\theta) &= \frac{1}{m} \sum_{i=1}^{m} \operatorname{cost}\left(h_\theta(x^{(i)}), y^{(i)}\right) \\ &= -\frac{1}{m} \sum_{i=1}^{m}\left[y^{(i)} \log\left(h_\theta(x^{(i)})\right) + \left(1-y^{(i)}\right) \log\left(1-h_\theta(x^{(i)})\right)\right] \end{aligned}$$

theta = np.zeros(3)  # θ is a length-3 vector, one entry each for x0, x1, x2

theta

array([0., 0., 0.])

def cost(theta, X, y):
    # The cost function J(θ) defined above, averaged over all samples
    return np.mean(-y * np.log(sigmoid(X @ theta)) - (1 - y) * np.log(1 - sigmoid(X @ theta)))

# X @ theta is equivalent to X.dot(theta)

cost(theta, X, y)

0.6931471805599458
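The value 0.6931… is not specific to this dataset. With $\theta = 0$ every prediction is $g(0) = 0.5$, so each sample contributes $-\log(0.5) = \log 2$ whatever its label. The sketch below reproduces this on arbitrary stand-in data (X_demo and y_demo are made up, not the exam scores).

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def cost(theta, X, y):
    h = sigmoid(X @ theta)
    return np.mean(-y * np.log(h) - (1 - y) * np.log(1 - h))

# Arbitrary stand-in data: any X and any 0/1 labels give the same value at theta = 0
rng = np.random.default_rng(0)
X_demo = np.column_stack([np.ones(5), rng.normal(size=(5, 2))])
y_demo = np.array([0, 1, 1, 0, 1])

initial_cost = cost(np.zeros(3), X_demo, y_demo)
print(initial_cost, np.log(2))
```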

5. Gradient Descent

Batch gradient descent

The generic gradient descent update is

$$\theta_j := \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta)$$

Applied to the $J(\theta)$ just obtained:

$$\theta_j := \theta_j - \alpha \frac{1}{m} \sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right) x_j^{(i)}$$

The derivation is as follows. Start from

$$J(\theta) = -\frac{1}{m} \sum_{i=1}^{m}\left[y^{(i)} \log\left(h_\theta(x^{(i)})\right) + \left(1-y^{(i)}\right) \log\left(1-h_\theta(x^{(i)})\right)\right]$$

Given that

$$h_\theta(x^{(i)}) = \frac{1}{1+e^{-\theta^T x^{(i)}}}$$

each summand becomes:

$$\begin{aligned} & y^{(i)} \log\left(h_\theta(x^{(i)})\right) + \left(1-y^{(i)}\right) \log\left(1-h_\theta(x^{(i)})\right) \\ &= y^{(i)} \log\left(\frac{1}{1+e^{-\theta^T x^{(i)}}}\right) + \left(1-y^{(i)}\right) \log\left(1-\frac{1}{1+e^{-\theta^T x^{(i)}}}\right) \\ &= -y^{(i)} \log\left(1+e^{-\theta^T x^{(i)}}\right) - \left(1-y^{(i)}\right) \log\left(1+e^{\theta^T x^{(i)}}\right) \end{aligned}$$

Therefore:

$$\begin{aligned} \frac{\partial}{\partial \theta_j} J(\theta) &= \frac{\partial}{\partial \theta_j}\left[-\frac{1}{m} \sum_{i=1}^{m}\left[-y^{(i)} \log\left(1+e^{-\theta^T x^{(i)}}\right) - \left(1-y^{(i)}\right) \log\left(1+e^{\theta^T x^{(i)}}\right)\right]\right] \\ &= -\frac{1}{m} \sum_{i=1}^{m}\left[-y^{(i)} \frac{-x_j^{(i)} e^{-\theta^T x^{(i)}}}{1+e^{-\theta^T x^{(i)}}} - \left(1-y^{(i)}\right) \frac{x_j^{(i)} e^{\theta^T x^{(i)}}}{1+e^{\theta^T x^{(i)}}}\right] \\ &= -\frac{1}{m} \sum_{i=1}^{m}\left[y^{(i)} \frac{x_j^{(i)}}{1+e^{\theta^T x^{(i)}}} - \left(1-y^{(i)}\right) \frac{x_j^{(i)} e^{\theta^T x^{(i)}}}{1+e^{\theta^T x^{(i)}}}\right] \\ &= -\frac{1}{m} \sum_{i=1}^{m} \frac{y^{(i)} x_j^{(i)} - x_j^{(i)} e^{\theta^T x^{(i)}} + y^{(i)} x_j^{(i)} e^{\theta^T x^{(i)}}}{1+e^{\theta^T x^{(i)}}} \\ &= -\frac{1}{m} \sum_{i=1}^{m} \frac{y^{(i)}\left(1+e^{\theta^T x^{(i)}}\right) - e^{\theta^T x^{(i)}}}{1+e^{\theta^T x^{(i)}}}\, x_j^{(i)} \\ &= -\frac{1}{m} \sum_{i=1}^{m}\left(y^{(i)} - \frac{e^{\theta^T x^{(i)}}}{1+e^{\theta^T x^{(i)}}}\right) x_j^{(i)} \\ &= -\frac{1}{m} \sum_{i=1}^{m}\left(y^{(i)} - \frac{1}{1+e^{-\theta^T x^{(i)}}}\right) x_j^{(i)} \\ &= -\frac{1}{m} \sum_{i=1}^{m}\left[y^{(i)} - h_\theta(x^{(i)})\right] x_j^{(i)} \\ &= \frac{1}{m} \sum_{i=1}^{m}\left[h_\theta(x^{(i)}) - y^{(i)}\right] x_j^{(i)} \end{aligned}$$

- In vectorized form: $\frac{1}{m} X^T\left(\operatorname{sigmoid}(X\theta) - y\right)$

$$\frac{\partial J(\theta)}{\partial \theta_j} = \frac{1}{m} \sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right) x_j^{(i)}$$

def gradient(theta, X, y):
    # Gradient of J(θ) over the whole batch, as used in one step of batch gradient descent
    return (1 / len(X)) * X.T @ (sigmoid(X @ theta) - y)

gradient(theta, X, y)

ones -0.100000

exam1 -12.009217

exam2 -11.262842

dtype: float64
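One way to gain confidence in the derived gradient is to compare it with a finite-difference approximation of the cost. The sketch below does this on synthetic data; numerical_gradient, X_demo, y_demo and theta_demo are names introduced here for illustration and are not part of the original notebook.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def cost(theta, X, y):
    h = sigmoid(X @ theta)
    return np.mean(-y * np.log(h) - (1 - y) * np.log(1 - h))

def gradient(theta, X, y):
    return (1 / len(X)) * X.T @ (sigmoid(X @ theta) - y)

def numerical_gradient(theta, X, y, eps=1e-6):
    # Central differences, one coordinate at a time
    grad = np.zeros_like(theta)
    for j in range(len(theta)):
        step = np.zeros_like(theta)
        step[j] = eps
        grad[j] = (cost(theta + step, X, y) - cost(theta - step, X, y)) / (2 * eps)
    return grad

# Synthetic data, used only to exercise the two functions
rng = np.random.default_rng(1)
X_demo = np.column_stack([np.ones(20), rng.normal(size=(20, 2))])
y_demo = (rng.random(20) < 0.5).astype(float)
theta_demo = rng.normal(size=3)

analytic = gradient(theta_demo, X_demo, y_demo)
numeric = numerical_gradient(theta_demo, X_demo, y_demo)
max_diff = np.max(np.abs(analytic - numeric))
print(max_diff)
```

If the analytic formula were wrong (for example a flipped sign), max_diff would be of the same order as the gradient itself rather than close to zero.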

6. Fitting the Parameters

- Here I use scipy.optimize.minimize to find the parameters

import scipy.optimize as opt

res = opt.minimize(fun=cost, x0=theta, args=(X, y), method='Newton-CG', jac=gradient)

print(res)

fun: 0.2034977030343232

jac: ones -3.908000e-09

exam1 -6.926737e-05

exam2 -4.659433e-05

dtype: float64

message: 'Optimization terminated successfully.'

nfev: 75

nhev: 0

nit: 31

njev: 287

status: 0

success: True

x: ones -25.158217

exam1 0.206207

exam2 0.201446

dtype: float64
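The fit above delegates the optimization to SciPy. For comparison, the update rule from section 5 can be run directly as a loop. The sketch below is an illustration on synthetic, already standardized data, not the author's method; batch_gradient_descent and its alpha and epochs defaults are assumptions chosen for this example. On the raw exam scores, plain gradient descent would generally need scaled features (for example via normalize_feature) or a much smaller learning rate.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def cost(theta, X, y):
    h = sigmoid(X @ theta)
    return np.mean(-y * np.log(h) - (1 - y) * np.log(1 - h))

def gradient(theta, X, y):
    return (1 / len(X)) * X.T @ (sigmoid(X @ theta) - y)

def batch_gradient_descent(X, y, alpha=0.1, epochs=2000):
    # Repeatedly apply theta := theta - alpha * gradient, starting from zeros
    theta = np.zeros(X.shape[1])
    for _ in range(epochs):
        theta = theta - alpha * gradient(theta, X, y)
    return theta

# Synthetic two-feature data with noisy labels (not the exam scores)
rng = np.random.default_rng(2)
features = rng.normal(size=(200, 2))
labels = (features[:, 0] + features[:, 1] + 0.5 * rng.normal(size=200) > 0).astype(float)
X_demo = np.column_stack([np.ones(200), features])

theta_gd = batch_gradient_descent(X_demo, labels)
start_cost = cost(np.zeros(3), X_demo, labels)
end_cost = cost(theta_gd, X_demo, labels)
print(start_cost, end_cost)
```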

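7. Prediction and Validation on the Training Set

def predict(x, theta):
    prob = sigmoid(x @ theta)
    return (prob >= 0.5).astype(int)  # classify as 1 when the probability is at least 0.5

final_theta = res.x

y_pred = predict(X, final_theta)

print(classification_report(y, y_pred))  # compare the true labels with the model's predictions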

precision recall f1-score support

0 0.87 0.85 0.86 40

1 0.90 0.92 0.91 60

accuracy 0.89 100

macro avg 0.89 0.88 0.88 100

weighted avg 0.89 0.89 0.89 100

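- Precision = $\frac{TP}{TP+FP}$ (of everything predicted positive, the fraction that is actually positive), or $\frac{TN}{TN+FN}$ for the negative class (of everything predicted negative, the fraction that is actually negative)

- Recall = $\frac{TP}{TP+FN}$ (of everything actually positive, the fraction predicted positive), or $\frac{TN}{TN+FP}$ for the negative class (of everything actually negative, the fraction predicted negative)

TP: True Positive, predicted positive and actually positive

TN: True Negative, predicted negative and actually negative

FP: False Positive, predicted positive but actually negative

FN: False Negative, predicted negative but actually positive

F1-score: the harmonic mean of precision and recall

$$\frac{2}{F_1} = \frac{1}{P} + \frac{1}{R}$$

The best F1 value is 1, meaning perfect precision and recall; the worst is 0.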
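As a worked example, the formulas can be applied to confusion counts for the admitted class. The counts below are not printed anywhere in the original; they are inferred here as the counts that reproduce the rounded report above (support 60 and 40, recall 0.92 and 0.85).

```python
# Confusion counts for the positive class (admitted = 1).
# Inferred from the rounded report, not taken from the original output.
TP, FN = 55, 5  # 60 actual positives
TN, FP = 34, 6  # 40 actual negatives

precision = TP / (TP + FP)
recall = TP / (TP + FN)
f1 = 2 * precision * recall / (precision + recall)  # same as 2/F1 = 1/P + 1/R
accuracy = (TP + TN) / (TP + TN + FP + FN)
print(round(precision, 2), round(recall, 2), round(f1, 2), round(accuracy, 2))
```

Rounded to two decimals these give 0.9, 0.92, 0.91 and 0.89, matching the row for class 1 and the accuracy line of the report.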

8. Finding the Decision Boundary

http://stats.stackexchange.com/questions/93569/why-is-logistic-regression-a-linear-classifier

From the discussion above, the boundary is where $h_\theta(x) = g(X\theta) = 0.5$, that is, where

$$X\theta = 0$$

print(res.x)  # the final fitted θ parameters

ones -25.158217

exam1 0.206207

exam2 0.201446

dtype: float64

coef = -(res.x / res.x[2])  # divide by θ2 to solve for exam2, giving a line in exam1

print(coef)

x = np.arange(130, step=0.1)

y = coef[0] + coef[1]*x

ones 124.887907

exam1 -1.023631

exam2 -1.000000

dtype: float64
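The coefficients printed above come from solving $\theta_0 + \theta_1 x_1 + \theta_2 x_2 = 0$ for $x_2$. The sketch below repeats that step with the fitted values copied (rounded) from the printed result and confirms that points on the line get a predicted probability of 0.5. The exam-1 scores are arbitrary illustration values.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

# Fitted parameters copied (rounded) from the printed result above
theta_fit = np.array([-25.158217, 0.206207, 0.201446])

# theta0 + theta1*x1 + theta2*x2 = 0  =>  x2 = -(theta0 + theta1*x1) / theta2
intercept = -theta_fit[0] / theta_fit[2]
slope = -theta_fit[1] / theta_fit[2]

exam1 = np.array([30.0, 60.0, 90.0])  # arbitrary exam-1 scores
exam2_on_boundary = intercept + slope * exam1
points = np.column_stack([np.ones(3), exam1, exam2_on_boundary])
probs = sigmoid(points @ theta_fit)   # each is 0.5 up to floating-point error
print(intercept, slope, probs)
```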

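sns.set(context="notebook", style="ticks", font_scale=1.5)

# x and y are passed by keyword; height replaces the older size argument
sns.lmplot(x='exam1', y='exam2', hue='admitted', data=data,
           height=6,
           fit_reg=False,
           scatter_kws={"s": 25}
           )

plt.plot(x, y, 'grey')

plt.xlim(0, 130)

plt.ylim(0, 130)

plt.title('Decision Boundary')

plt.show()

This concludes the first task.

9. Regularized Logistic Regression

Regularized logistic regression is now applied to the second dataset.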

df = pd.read_csv('ex2data2.txt', names=['test1', 'test2', 'accepted'])

df.head()

| | test1 | test2 | accepted |

|---|---|---|---|

| 0 | 0.051267 | 0.69956 | 1 |

| 1 | -0.092742 | 0.68494 | 1 |

| 2 | -0.213710 | 0.69225 | 1 |

| 3 | -0.375000 | 0.50219 | 1 |

| 4 | -0.513250 | 0.46564 | 1 |

sns.set(context="notebook", style="ticks", font_scale=1.5)

# x and y are passed by keyword; height replaces the older size argument
sns.lmplot(x='test1', y='test2', hue='accepted', data=df,
           height=6,
           fit_reg=False,
           scatter_kws={"s": 50}
           )

plt.title('Regularized Logistic Regression - Data Preview')

plt.show()

10. Feature Mapping

To fit the data better we need more features, but they cannot be invented from nothing; they have to be generated from the existing data. The concrete method is polynomial expansion of the two original features.

$$\text{mapFeature}(x) = \begin{bmatrix} 1 \\ x_1 \\ x_2 \\ x_1^2 \\ x_1 x_2 \\ x_2^2 \\ x_1^3 \\ \vdots \\ x_1 x_2^5 \\ x_2^6 \end{bmatrix}$$

def feature_mapping(x, y, power, as_ndarray=False):
    # Return the mapped features as an ndarray or as a DataFrame.
    # Column f{a}{b} holds x**a * y**b for every a + b <= power.
    data = {"f{}{}".format(i - p, p): np.power(x, i - p) * np.power(y, p)
            for i in np.arange(power + 1)
            for p in np.arange(i + 1)
            }

    if as_ndarray:
        return pd.DataFrame(data).values
    else:
        return pd.DataFrame(data)

x1 = np.array(df.test1)

x2 = np.array(df.test2)

data = feature_mapping(x1, x2, power=6)

print(data.shape)

data.head()

(118, 28)
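The 28 columns follow from counting the exponent pairs: degree $i$ contributes $i+1$ terms, so degrees 0 through 6 give $1+2+\dots+7 = 28$. The short sketch below enumerates the same pairs that feature_mapping uses for its column names.

```python
power = 6
# (i - p, p) are the exponents of x1 and x2, matching the f{i-p}{p} column names
exponents = [(i - p, p) for i in range(power + 1) for p in range(i + 1)]
n_features = len(exponents)
print(n_features, exponents[:6])
```

In general a mapping of degree `power` yields `(power + 1) * (power + 2) // 2` features, including the constant column f00.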

| | f00 | f10 | f01 | f20 | f11 | f02 | f30 | f21 | f12 | f03 | ... | f23 | f14 | f05 | f60 | f51 | f42 | f33 | f24 | f15 | f06 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1.0 | 0.051267 | 0.69956 | 0.002628 | 0.035864 | 0.489384 | 0.000135 | 0.001839 | 0.025089 | 0.342354 | ... | 0.000900 | 0.012278 | 0.167542 | 1.815630e-08 | 2.477505e-07 | 0.000003 | 0.000046 | 0.000629 | 0.008589 | 0.117206 |

| 1 | 1.0 | -0.092742 | 0.68494 | 0.008601 | -0.063523 | 0.469143 | -0.000798 | 0.005891 | -0.043509 | 0.321335 | ... | 0.002764 | -0.020412 | 0.150752 | 6.362953e-07 | -4.699318e-06 | 0.000035 | -0.000256 | 0.001893 | -0.013981 | 0.103256 |

| 2 | 1.0 | -0.213710 | 0.69225 | 0.045672 | -0.147941 | 0.479210 | -0.009761 | 0.031616 | -0.102412 | 0.331733 | ... | 0.015151 | -0.049077 | 0.158970 | 9.526844e-05 | -3.085938e-04 | 0.001000 | -0.003238 | 0.010488 | -0.033973 | 0.110047 |

| 3 | 1.0 | -0.375000 | 0.50219 | 0.140625 | -0.188321 | 0.252195 | -0.052734 | 0.070620 | -0.094573 | 0.126650 | ... | 0.017810 | -0.023851 | 0.031940 | 2.780914e-03 | -3.724126e-03 | 0.004987 | -0.006679 | 0.008944 | -0.011978 | 0.016040 |

| 4 | 1.0 | -0.513250 | 0.46564 | 0.263426 | -0.238990 | 0.216821 | -0.135203 | 0.122661 | -0.111283 | 0.100960 | ... | 0.026596 | -0.024128 | 0.021890 | 1.827990e-02 | -1.658422e-02 | 0.015046 | -0.013650 | 0.012384 | -0.011235 | 0.010193 |

5 rows × 28 columns

data.describe()

| | f00 | f10 | f01 | f20 | f11 | f02 | f30 | f21 | f12 | f03 | ... | f23 | f14 | f05 | f60 | f51 | f42 | f33 | f24 | f15 | f06 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| count | 118.0 | 118.000000 | 118.000000 | 118.000000 | 118.000000 | 118.000000 | 1.180000e+02 | 118.000000 | 118.000000 | 118.000000 | ... | 118.000000 | 1.180000e+02 | 118.000000 | 1.180000e+02 | 118.000000 | 1.180000e+02 | 118.000000 | 1.180000e+02 | 118.000000 | 1.180000e+02 |

| mean | 1.0 | 0.054779 | 0.183102 | 0.247575 | -0.025472 | 0.301370 | 5.983333e-02 | 0.030682 | 0.015483 | 0.142350 | ... | 0.018278 | 4.089084e-03 | 0.115710 | 7.837118e-02 | -0.000703 | 1.893340e-02 | -0.001705 | 2.259170e-02 | -0.006302 | 1.257256e-01 |

| std | 0.0 | 0.496654 | 0.519743 | 0.248532 | 0.224075 | 0.284536 | 2.746459e-01 | 0.134706 | 0.150143 | 0.326134 | ... | 0.058513 | 9.993907e-02 | 0.299092 | 1.938621e-01 | 0.058271 | 3.430092e-02 | 0.037443 | 4.346935e-02 | 0.090621 | 2.964416e-01 |

| min | 1.0 | -0.830070 | -0.769740 | 0.000040 | -0.484096 | 0.000026 | -5.719317e-01 | -0.358121 | -0.483743 | -0.456071 | ... | -0.142660 | -4.830370e-01 | -0.270222 | 6.472253e-14 | -0.203971 | 2.577297e-10 | -0.113448 | 2.418097e-10 | -0.482684 | 1.795116e-14 |

| 25% | 1.0 | -0.372120 | -0.254385 | 0.043243 | -0.178209 | 0.061086 | -5.155632e-02 | -0.023672 | -0.042980 | -0.016492 | ... | -0.001400 | -7.449462e-03 | -0.001072 | 8.086369e-05 | -0.006381 | 1.258285e-04 | -0.005749 | 3.528590e-04 | -0.016662 | 2.298277e-04 |

| 50% | 1.0 | -0.006336 | 0.213455 | 0.165397 | -0.016521 | 0.252195 | -2.544062e-07 | 0.006603 | -0.000039 | 0.009734 | ... | 0.001026 | -8.972096e-09 | 0.000444 | 4.527344e-03 | -0.000004 | 3.387050e-03 | -0.000005 | 3.921378e-03 | -0.000020 | 1.604015e-02 |

| 75% | 1.0 | 0.478970 | 0.646562 | 0.389925 | 0.100795 | 0.464189 | 1.099616e-01 | 0.086392 | 0.079510 | 0.270310 | ... | 0.021148 | 2.751341e-02 | 0.113020 | 5.932959e-02 | 0.002104 | 2.090875e-02 | 0.001024 | 2.103622e-02 | 0.001289 | 1.001215e-01 |

| max | 1.0 | 1.070900 | 1.108900 | 1.146827 | 0.568307 | 1.229659 | 1.228137e+00 | 0.449251 | 0.505577 | 1.363569 | ... | 0.287323 | 4.012965e-01 | 1.676725 | 1.508320e+00 | 0.250577 | 2.018260e-01 | 0.183548 | 2.556084e-01 | 0.436209 | 1.859321e+00 |

8 rows × 28 columns

11. Regularized Cost

Sometimes a linear function cannot separate the data well, so a nonlinear one is used. The curve can then be too wiggly, which is overfitting, or not flexible enough, which is underfitting. To prevent overfitting, the key is to shrink the parameters of the high-order features: terms that swing the hypothesis strongly are given smaller weights by penalizing them more.

For example, for a hypothesis like this:

$$h_\theta(x) = \theta_0 + \theta_1 x_1 + \theta_2 x_2^2 + \theta_3 x_3^3 + \theta_4 x_4^4$$

we could regularize as follows:

$$\min_\theta \frac{1}{2m}\left[\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2 + 1000\,\theta_3^2 + 10000\,\theta_4^2\right]$$

Here $\theta_3$ and $\theta_4$ are penalized heavily to reduce their influence. In general, though, we do not know in advance which parameters to penalize, so the algorithm has to decide: we sum the squares of all the parameters and scale the sum by a constant $\lambda$. The choice of $\lambda$ relies on experience. This gives the general regularized cost:

$$J(\theta) = \frac{1}{m}\sum_{i=1}^{m}\left[-y^{(i)} \log\left(h_\theta(x^{(i)})\right) - \left(1-y^{(i)}\right) \log\left(1-h_\theta(x^{(i)})\right)\right] + \frac{\lambda}{2m}\sum_{j=1}^{n}\theta_j^2$$

theta = np.zeros(data.shape[1])

X = feature_mapping(x1, x2, power=6, as_ndarray=True)

print(X.shape)

y = get_y(df)

print(y.shape)

(118, 28)

(118,)

def regularized_cost(theta, X, y, l=1):
    # theta_0 (the x0 term) is not regularized
    theta_j1_to_n = theta[1:]
    regularized_term = (l / (2 * len(X))) * np.power(theta_j1_to_n, 2).sum()
    return cost(theta, X, y) + regularized_term

# the regularized cost function

regularized_cost(theta, X, y, l=1)

0.6931471805599454

Because θ is initialized to 0, the penalty term vanishes and the regularized cost equals the unregularized cost here.
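To see the penalty on its own, the sketch below evaluates only the $\frac{\lambda}{2m}\sum_{j\ge 1}\theta_j^2$ term. The helper name penalty and the example vector are made up for illustration; m = 118 is the sample count of ex2data2.txt shown above.

```python
import numpy as np

def penalty(theta, m, l=1):
    # lambda / (2m) * sum of theta_j^2 for j >= 1; theta_0 is left out
    return (l / (2 * m)) * np.sum(theta[1:] ** 2)

m = 118                                      # number of samples in ex2data2.txt
zero_penalty = penalty(np.zeros(28), m)      # no penalty when theta is all zeros

theta_example = np.array([5.0, 1.0, -2.0])   # made-up values
example_penalty = penalty(theta_example, m)  # (1 / 236) * (1 + 4); the 5.0 is ignored
print(zero_penalty, example_penalty)
```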

12. Regularized Gradient

$$\frac{\partial J(\theta)}{\partial \theta_j} = \left(\frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right) x_j^{(i)}\right) + \frac{\lambda}{m}\theta_j \quad \text{for } j \ge 1$$

If we minimize this cost function with gradient descent, the update splits into two cases because $\theta_0$ is not regularized:

$$\begin{aligned} \theta_0 &:= \theta_0 - \alpha \frac{1}{m}\sum_{i=1}^{m}\left[h_\theta(x^{(i)}) - y^{(i)}\right] x_0^{(i)} \\ \theta_j &:= \theta_j - \alpha\left[\frac{1}{m}\sum_{i=1}^{m}\left[h_\theta(x^{(i)}) - y^{(i)}\right] x_j^{(i)} + \frac{\lambda}{m}\theta_j\right] \end{aligned}$$

Rearranging the update for $j = 1, 2, \dots, n$ gives:

$$\theta_j := \theta_j\left(1 - \alpha\frac{\lambda}{m}\right) - \alpha\frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right) x_j^{(i)}$$

So the effect of the regularization term is that every iteration also shrinks $\theta_j$ a little.
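The two ways of writing the update for $j \ge 1$ can be checked against each other numerically. The sketch below performs one step in both forms on synthetic data; alpha, lam and the data are arbitrary values chosen for this illustration.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def gradient(theta, X, y):
    return (1 / len(X)) * X.T @ (sigmoid(X @ theta) - y)

# Synthetic data and an arbitrary starting point
rng = np.random.default_rng(3)
X_demo = np.column_stack([np.ones(50), rng.normal(size=(50, 2))])
y_demo = (rng.random(50) < 0.5).astype(float)
theta = rng.normal(size=3)

alpha, lam = 0.1, 1.0
m = len(X_demo)
grad = gradient(theta, X_demo, y_demo)

# Form 1: subtract alpha * (gradient + (lambda / m) * theta_j) for j >= 1
reg = np.concatenate([[0.0], (lam / m) * theta[1:]])
update_a = theta - alpha * (grad + reg)

# Form 2: shrink theta_j by (1 - alpha * lambda / m), then take the plain gradient step
shrink = np.concatenate([[1.0], np.full(2, 1 - alpha * lam / m)])
update_b = theta * shrink - alpha * grad
print(update_a, update_b)
```

The shrink factor for $\theta_0$ is 1, which is how the unregularized bias term appears in the second form.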

def regularized_gradient(theta, X, y, l=1):
    theta_j1_to_n = theta[1:]
    regularized_theta = (l / len(X)) * theta_j1_to_n

    # by doing this, no offset is on theta_0
    regularized_term = np.concatenate([np.array([0]), regularized_theta])

    return gradient(theta, X, y) + regularized_term

regularized_gradient(theta, X, y)

array([8.47457627e-03, 1.87880932e-02, 7.77711864e-05, 5.03446395e-02,

1.15013308e-02, 3.76648474e-02, 1.83559872e-02, 7.32393391e-03,

8.19244468e-03, 2.34764889e-02, 3.93486234e-02, 2.23923907e-03,

1.28600503e-02, 3.09593720e-03, 3.93028171e-02, 1.99707467e-02,

4.32983232e-03, 3.38643902e-03, 5.83822078e-03, 4.47629067e-03,

3.10079849e-02, 3.10312442e-02, 1.09740238e-03, 6.31570797e-03,

4.08503006e-04, 7.26504316e-03, 1.37646175e-03, 3.87936363e-02])

13. Fitting the Regularized Parameters

import scipy.optimize as opt

print('init cost = {}'.format(regularized_cost(theta, X, y)))

res = opt.minimize(fun=regularized_cost, x0=theta, args=(X, y), method='Newton-CG', jac=regularized_gradient)

res

init cost = 0.6931471805599454

fun: 0.5290027297127226

jac: array([-5.59000348e-08, -1.28838122e-08, -6.36410934e-08, 1.48052446e-08,

-2.17946454e-09, -3.76148722e-08, 9.78876709e-09, -1.82798254e-08,

1.13128886e-08, -1.07496536e-08, 9.72446799e-09, -3.07080137e-09,

-8.99944467e-09, 3.95483551e-09, -2.61273742e-08, 4.27780929e-10,

-1.11055205e-08, -6.79817860e-10, -5.00207423e-09, 2.66918207e-09,

-1.42573657e-08, 2.66682830e-09, -3.70874575e-09, -1.41882519e-10,

-1.24101649e-09, -1.53332708e-09, 3.89033012e-10, -2.18628962e-08])

message: 'Optimization terminated successfully.'

nfev: 7

nhev: 0

nit: 6

njev: 68

status: 0

success: True

x: array([ 1.27273933, 0.62527083, 1.18108774, -2.01995945, -0.91742379,

-1.43166442, 0.12400731, -0.36553516, -0.35723847, -0.17512854,

-1.45815594, -0.05098912, -0.61555563, -0.27470594, -1.19281681,

-0.24218847, -0.20600683, -0.04473089, -0.27778458, -0.29537795,

-0.45635707, -1.04320269, 0.02777149, -0.29243126, 0.01556672,

-0.32737949, -0.14388703, -0.92465318])

14. Prediction after Regularization

final_theta = res.x

y_pred = predict(X, final_theta)

print(classification_report(y, y_pred))

precision recall f1-score support

0 0.90 0.75 0.82 60

1 0.78 0.91 0.84 58

accuracy 0.83 118

macro avg 0.84 0.83 0.83 118

weighted avg 0.84 0.83 0.83 118

15. 使用不同的 λ \lambda λ (这个是常数)

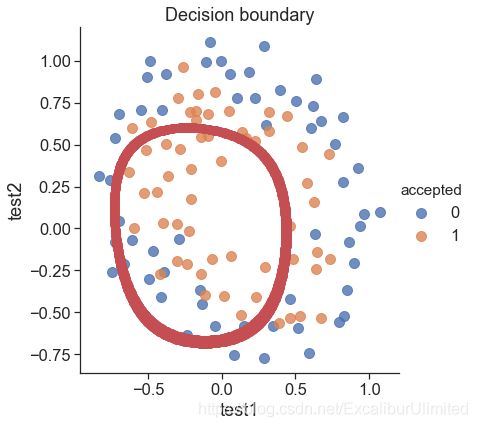

- 画出决策边界

我们找到所有满足 X × θ = 0 X\times \theta = 0 X×θ=0 的 x x x

def feature_mapped_logistic_regression(power, l):
    """Fit regularized logistic regression on polynomial features.

    power: int, highest polynomial degree generated from x1 and x2
    l: lambda, the regularization constant
    """
    df = pd.read_csv('ex2data2.txt', names=['test1', 'test2', 'accepted'])
    x1 = np.array(df.test1)
    x2 = np.array(df.test2)
    y = get_y(df)

    X = feature_mapping(x1, x2, power, as_ndarray=True)
    theta = np.zeros(X.shape[1])

    res = opt.minimize(fun=regularized_cost,
                       x0=theta,
                       args=(X, y, l),
                       method='TNC',
                       jac=regularized_gradient)
    final_theta = res.x

    return final_theta

def find_decision_boundary(density, power, theta, threshhold):
    # Evaluate X @ theta on a dense grid and keep the points where it is close to 0
    t1 = np.linspace(-1, 1.5, density)
    t2 = np.linspace(-1, 1.5, density)

    cordinates = [(x, y) for x in t1 for y in t2]
    x_cord, y_cord = zip(*cordinates)  # zip(*...) unzips the pairs
    # e.g. zip([1,2,3], [4,5,6]) gives (1,4), (2,5), (3,6); zip(*) of those gives (1,2,3), (4,5,6)
    mapped_cord = feature_mapping(x_cord, y_cord, power)  # this is a DataFrame

    inner_product = mapped_cord.values @ theta

    # The product is never exactly 0, so accept anything below the threshold
    decision = mapped_cord[np.abs(inner_product) < threshhold]

    return decision.f10, decision.f01

# function that finds the decision boundary

def draw_boundary(power, l):
    """Fit the model and plot its decision boundary over the data.

    power: polynomial degree of the mapped features
    l: lambda, the regularization constant
    """
    density = 1000
    threshhold = 2 * 10**-3

    final_theta = feature_mapped_logistic_regression(power, l)
    x, y = find_decision_boundary(density, power, final_theta, threshhold)

    df = pd.read_csv('ex2data2.txt', names=['test1', 'test2', 'accepted'])
    sns.lmplot(x='test1', y='test2', hue='accepted', data=df, height=6, fit_reg=False, scatter_kws={"s": 100})

    plt.scatter(x, y, c='r', s=10)  # lowercase 'r'; uppercase single-letter colors are rejected by newer Matplotlib
    plt.title('Decision boundary')
    plt.show()

draw_boundary(power=6, l=1)  # lambda = 1

draw_boundary(power=6, l=0)  # lambda = 0: no regularization, overfits

draw_boundary(power=6, l=100)  # lambda = 100: underfits
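The three plots differ because a larger λ pulls the parameters toward zero, which flattens the boundary. The sketch below illustrates that shrinkage numerically on synthetic data rather than on ex2data2.txt. The data, the λ values and the clipping guard inside the cost are assumptions of this example; the optimizer call mirrors the TNC call used above.

```python
import numpy as np
import scipy.optimize as opt

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def regularized_cost(theta, X, y, l):
    # Clip h as a guard against log(0) during line searches (an addition of this sketch)
    h = np.clip(sigmoid(X @ theta), 1e-12, 1 - 1e-12)
    data_term = np.mean(-y * np.log(h) - (1 - y) * np.log(1 - h))
    return data_term + (l / (2 * len(X))) * np.sum(theta[1:] ** 2)

def regularized_gradient(theta, X, y, l):
    grad = X.T @ (sigmoid(X @ theta) - y) / len(X)
    grad[1:] += (l / len(X)) * theta[1:]  # theta_0 is not regularized
    return grad

# Synthetic stand-in data with noisy labels
rng = np.random.default_rng(4)
features = rng.normal(size=(100, 2))
labels = (features[:, 0] - features[:, 1] + rng.normal(size=100) > 0).astype(float)
X_demo = np.column_stack([np.ones(100), features])

norms = []
for l in (0.01, 1, 100):
    res = opt.minimize(fun=regularized_cost, x0=np.zeros(3),
                       args=(X_demo, labels, l), method='TNC',
                       jac=regularized_gradient)
    norms.append(np.linalg.norm(res.x[1:]))  # size of the regularized parameters
print(norms)
```

The norm of the fitted parameters drops as λ grows. With λ = 100 they are driven close to zero, which corresponds to the underfitting case.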