imx219-83 ros使用

1. jetson_nano_csi_cam_ros中译

Jetson Nano DevKit B01 + 双 CSI 摄像头 ROS 驱动程序。

1.1安装方法

系统:L4T R32.4.2 + ROS Melodic

-

下载此此存储库

cd ~/catkin_ws/src git clone https://github.com/rt-net/jetson_nano_csi_cam_ros.git -

gscam和对应的GStreamer-1.0支持包下载

sudo apt-get install gstreamer1.0-tools libgstreamer1.0-dev libgstreamer-plugins-base1.0-dev libgstreamer-plugins-good1.0-devcd ~/catkin_ws/src git clone https://github.com/ros-drivers/gscam.git下载后,编辑

./gscam/Makefile,添加-DGSTREAMER_VERSION_1_x=On:EXTRA_CMAKE_FLAGS = -DUSE_ROSBUILD:BOOL=1 -DGSTREAMER_VERSION_1_x=On -

构建

jetson_nano_csi_cam和gscamcd ~/catkin_ws catkin build source devel/setup.bash

1.2 使用方法

1.2.1 快速开始

要将CAM0连接的相机流数据在ROS主题/csi_cam_0/image_raw下分发,在终端执行以下命令:

roslaunch jetson_nano_csi_cam jetson_csi_cam.launch sensor_id:=0 width:=<image width> height:=<image height> fps:=<desired framerate>

要将连接到CAM0和CAM1的相机流数据在ROS主题/csi_cam_0/image_raw和/csi_cam_1/image_raw下同时分发,在终端执行以下命令:

roslaunch jetson_nano_csi_cam jetson_dual_csi_cam.launch width:=<image width> height:=<image height> fps:=<desired framerate>

1.2.2 视频采集/分发

视频分发

执行以下命令以将相机图像作为 ROS 主题传送。

roslaunch jetson_csi_cam jetson_csi_cam.launch

这次发布只是启动了一个用于分发的节点。还可以为视频分发添加一个选项:

roslaunch jetson_csi_cam jetson_csi_cam.launch width:=1920 height:=1080 fps:=15

对于其它参数,可以在使用roslaunch时以

jetson_csi_cam.launch的其它参数

sensor_id(default:0) – Camera IDwidth(default:640) – 要传送的视频的宽度height(default:480) – 要传送的视频的高度fps(default:30) – 传送的帧率(根据分辨率可能低于这个帧率)cam_name(default:csi_cam_$(arg sensor_id)) – camera info对应的相机名称frame_id(default:/$(arg cam_name)_link) – 用于tf的相机帧名sync_sink(default:true) – appsink的同步设置

(如果你设置了低帧率并且有问题,将此选项设置为false可能会解决问题。)flip_method(default:0) – 传送视频时的图像翻转选项

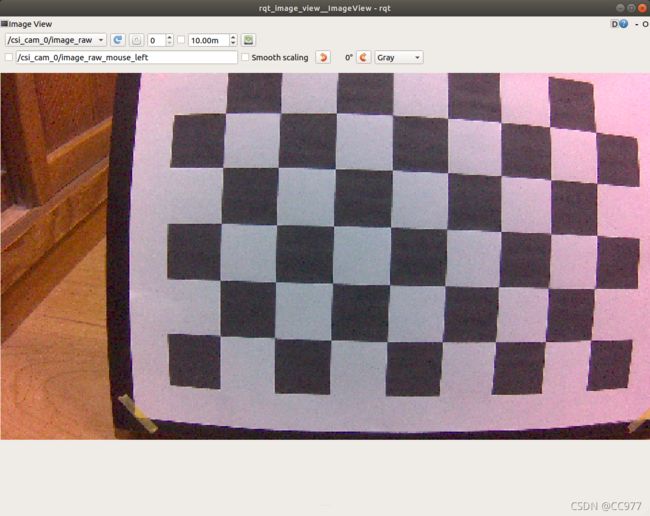

1.2.3 视频分发测试

检查相机图像

为了方便查看摄像头图像,请在桌面环境(例如 GNOME)中启动终端并执行 rqt_img_view。

rosrun rqt_iamge_view rqt_iamge_view

从启动的图像查看器左上角的下拉菜单中选择相机图像主题。 jetson_csi_cam.launch 的默认设置是/csi_cam_0/image_raw。

帧率测量

您可以使用以下命令检查 ROS 主题的更新频率:

rostopic hz /csi_cam_0/image_raw

相机图像ROS主题更新频率不是指定的图像帧率,但几乎匹配。 如果低于设置的帧率,可能是以下原因。

- Jetson Nano 电源管理处于性能限制模式

- Jetson Nano 与电脑接收视频网络不稳定

- 您指定的值大于或等于所连接摄像头模块的最大帧速率。

1.2.4 相机校准

jetson_nano_csi_cam还将相机信息作为 ROS 主题分发,以便更轻松地校准相机。

要将相机信息与您实际使用的相机相匹配,请根据 ROS Wiki 上的单目相机校准指南/双目相机校准指南进行校准。但无需校准即可分发相机图像。 若需进行校准,请参阅以下信息:

- 您需要按照 ROS Wiki 上的说明打印棋盘格。

- 使用视频分发中解释的 roslaunch 命令分发相机图像。

- 指定图像和相机选项以及棋盘的大小,如下面的命令所示,并进行校准。

#单目 rosrun camera_calibration cameracalibrator.py --size 8x6 --square <square size in meters> image:=/csi_cam_0/image_raw camera:=/csi_cam_0 #双目 rosrun camera_calibration cameracalibrator.py --approximate 0.1 --size 8x6 --square 0.027 --no-service-check right:=/csi_cam_1/image_raw left:=/csi_cam_0/image_raw right_camera:=/csi_cam_1 left_camera:=/csi_cam_0

如果在相机可以看到的范围内将棋盘移动到一定程度,您将可以按下“校准”按钮,从而得到校准文件。

2. IMU的使用

2.1 相机+IMU ROS包

2.2 ICM20948介绍

10 DOF IMU Sensor (D)

IMU —— Mahony滤波算法原理及实现源码

- 使用demo发现fps只有25hz,查看源码发现是磁力计读取占用了较多时间,而源码计算四元数时又使用了磁力计数据以确定方位。第三讲 AHRS姿态解算

- 关于标定文件的使用可用函数:

ICM20948_Calibration()

ICM20948通过软I2C IIC的读取三轴原始数据方法(含源代码)

ICM-20948 9-Axis Sensor Part I - 驱动

原来的demo磁力计读取速度慢,要换个驱动改ros版本,以下备用

Qwiic_9DoF_IMU_ICM20948_Py

SparkFun Qwiic 9DoF IMU Breakout

Playground for experimenting with ICM20948

ICM20948 9-DOF Accelerometer, Magnetometer and Gyroscope

C Driver for the ICM-20948 9-Axis Telemetry sensor

2.3 IMU 标定

VIO 中 IMU 的标定流程 (3/3) - imu_tk 使用备忘

总结:单独标定IMU的工具包(kalibr_allan,imu_tk,imu_utils)

IMU-TK: Inertial Measurement Unit ToolKit

#launch

>

>

>

>

-d $(find little_stereo_camera)/rviz/stereo_pcl.rviz" />

-0.5 0.5 -0.5 map stereo_image 100" output = "screen"/>

>

>

<!-- > -->

>

>

>

#! /usr/bin/env python

import numpy as np

import cv2

import os

import rospy

import shlex

import subprocess

import yaml

from std_msgs.msg import String

from std_msgs.msg import Header

from sensor_msgs.msg import Image

from sensor_msgs.msg import CameraInfo

from cv_bridge import CvBridge, CvBridgeError

import thread

# from pub_info import *

class LittleStereoCam():

def __init__(self):

rospy.init_node("little_stereo_camera", anonymous = True)

self.bridge = CvBridge()

self.left_image_pub = rospy.Publisher("stereo/left/image_raw", Image, queue_size=1)

self.left_image_info_pub = rospy.Publisher("stereo/left/camera_info", CameraInfo, queue_size=1)

self.right_image_pub = rospy.Publisher("stereo/right/image_raw", Image, queue_size=1)

self.right_image_info_pub = rospy.Publisher("stereo/right/camera_info", CameraInfo, queue_size=1)

self.camera_info = CameraInfo()

self.msg_header = Header()

self.ros_image = Image()

self.ros_image.height = 240

self.ros_image.width = 320

cam_id= rospy.get_param("cam_id", 0)

dir_path = os.path.dirname(os.path.realpath(__file__))

self.left_yaml_file = dir_path+"/../calibration/left.yaml"

self.right_yaml_file = dir_path+"/../calibration/right.yaml"

self.cam=cv2.VideoCapture(cam_id)

cam_mode_dict={

'LEFT_EYE_MODE':1,

'RIGHT_EYE_MODE':2,

'RED_BLUE_MODE':3,

'BINOCULAR_MODE':4,

}

cam_mode=cam_mode_dict['BINOCULAR_MODE']

command_list=[

"uvcdynctrl -d /dev/video{cam_id} -S 6:8 '(LE)0x50ff'",

"uvcdynctrl -d /dev/video{cam_id} -S 6:15 '(LE)0x00f6'",

"uvcdynctrl -d /dev/video{cam_id} -S 6:8 '(LE)0x2500'",

"uvcdynctrl -d /dev/video{cam_id} -S 6:8 '(LE)0x5ffe'",

"uvcdynctrl -d /dev/video{cam_id} -S 6:15 '(LE)0x0003'",

"uvcdynctrl -d /dev/video{cam_id} -S 6:15 '(LE)0x0002'",

"uvcdynctrl -d /dev/video{cam_id} -S 6:15 '(LE)0x0012'",

"uvcdynctrl -d /dev/video{cam_id} -S 6:15 '(LE)0x0004'",

"uvcdynctrl -d /dev/video{cam_id} -S 6:8 '(LE)0x76c3'",

"uvcdynctrl -d /dev/video{cam_id} -S 6:10 '(LE)0x0{cam_mode}00'",

]

for command in command_list:

subprocess.Popen(shlex.split(command.format(cam_id=cam_id,cam_mode=cam_mode)))

def pub_image(self, publisher, image, header):

try:

self.ros_image = self.bridge.cv2_to_imgmsg(image, "bgr8")

self.ros_image.header = header

publisher.publish(self.ros_image)

except CvBridgeError as e:

print(e)

def yaml_to_camera_info(self,yaml_file):

with open(yaml_file, "r") as f :

calib_data = yaml.load(f)

camera_info_msg = CameraInfo()

camera_info_msg.width = calib_data["image_width"]

camera_info_msg.height = calib_data["image_height"]

camera_info_msg.K = calib_data["camera_matrix"]["data"]

camera_info_msg.D = calib_data["distortion_coefficients"]["data"]

camera_info_msg.R = calib_data["rectification_matrix"]["data"]

camera_info_msg.P = calib_data["projection_matrix"]["data"]

camera_info_msg.distortion_model = calib_data["distortion_model"]

return camera_info_msg

def run(self):

# left_cam_info = self.yaml_to_camera_info(self.left_yaml_file)

# right_cam_info = self.yaml_to_camera_info(self.right_yaml_file)

left_cam_info = CameraInfo()

left_cam_info.width = 640

left_cam_info.height = 480

left_cam_info.K = [883.998642, 0.000000, 349.540253, 0.000000, 887.969815, 247.902874, 0.000000, 0.000000, 1.000000]

left_cam_info.D = [0.125962, -0.905045, 0.006512, 0.007531, 0.000000]

left_cam_info.R = [0.985389, 0.006639, 0.170189, -0.004920, 0.999933, -0.010521, -0.170248, 0.009530, 0.985355]

left_cam_info.P = [1022.167889, 0.000000, 150.220785, 0.000000, 0.000000, 1022.167889, 249.024044, 0.000000, 0.000000, 0.000000, 1.000000, 0.000000]

left_cam_info.distortion_model = 'plumb_bob'

right_cam_info = CameraInfo()

right_cam_info.width = 640

right_cam_info.height = 480

right_cam_info.K = [874.019843, 0.000000, 331.121922, 0.000000, 875.918758, 244.867443, 0.000000, 0.000000, 1.000000]

right_cam_info.D = [0.041385, -0.059698, 0.005392, 0.009075, 0.000000]

right_cam_info.R = [0.976610, 0.003803, 0.214985, -0.005979, 0.999937, 0.009472, -0.214936, -0.010535, 0.976571]

right_cam_info.P = [1022.167889, 0.000000, 150.220785, -41.006903, 0.000000, 1022.167889, 249.024044, 0.000000, 0.000000, 0.000000, 1.000000, 0.000000]

right_cam_info.distortion_model = 'plumb_bob'

rate = rospy.Rate(20)

while not rospy.is_shutdown():

ret,frame=self.cam.read()

if not ret:

print('[ERROR]: frame error')

break

expand_frame=cv2.resize(frame,None,fx=2,fy=1)

left_image = expand_frame[0:480,0:640]

right_image = expand_frame[0:480,640:1280]

self.msg_header.frame_id = 'stereo_image'

self.msg_header.stamp = rospy.Time.now()

left_cam_info.header = self.msg_header

right_cam_info.header = self.msg_header

self.left_image_info_pub.publish(left_cam_info)

self.right_image_info_pub.publish(right_cam_info)

# self.pub_image(self.left_image_pub, left_image, self.msg_header )

# self.pub_image(self.right_image_pub, right_image, self.msg_header )

try:

thread.start_new_thread( self.pub_image, (self.left_image_pub, left_image, self.msg_header, ))

thread.start_new_thread( self.pub_image, (self.right_image_pub, right_image, self.msg_header, ))

except:

print("[ERROR]: unable to start thread")

rate.sleep()

if __name__ == '__main__':

lsc = LittleStereoCam()

try:

lsc.run()

except rospy.ROSInterruptException:

pass

#!/usr/bin/env python

import time

import serial

import binascii

import rospy

from geometry_msgs.msg import Quaternion, Vector3

from sensor_msgs.msg import Imu

import codecs

s=serial.Serial("/dev/ttyUSB0",115200)

rospy.init_node("imu")

imuPub = rospy.Publisher("imu", Imu, queue_size=1)

rate=rospy.Rate(100)

Hex_str=codecs.decode('770500560560','hex')

s.write(Hex_str)

data= str(binascii.b2a_hex(s.read(6)))

#print(data)

print('AUTO PUT DATA TYPE SETTING SUCCESS')

Hex_str=codecs.decode('7705000C0617','hex')

s.write(Hex_str)

data=str(binascii.b2a_hex(s.read(6)))

#print(data)

print('SET 100Hz OUTPUT FREQUNSE')

try:

while not rospy.is_shutdown():

data= str(binascii.b2a_hex(s.read(48)))

g_value=data[26:44]

a_value=data[44:62]

q_value=data[62:95]

#x_acc

x_acc=9.87933*int(g_value[1:6])/10000.0

if int(g_value[0]):

x_acc=-1*x_acc

#y_acc

y_acc=9.87933*int(g_value[7:12])/10000.0

if int(g_value[6]) :

y_acc=-1*y_acc

#z_acc

z_acc=9.87933*int(g_value[13:])/10000.0

if int(g_value[12]):

z_acc=-1*z_acc

#an_x

an_x=int(a_value[1:6])/100.0/57.29578

if int(a_value[0]):

an_x=-1*an_x

#an_y

an_y=int(a_value[7:12])/100.0/57.29578

if int(a_value[6]):

an_y=-1*an_y

#an_z

an_z=int(a_value[13:])/100.0/57.29578

if int(a_value[12]):

an_z=-1*an_z

#print('acc:x,y,z:',x_acc,y_acc,z_acc)

#print('an_acc:x,y,z:',an_x,an_y,an_z)

q_0=int(q_value[1:8])/1000000.0

if int(q_value[0]):

q_0=-1*q_0

q_1=int(q_value[9:15])/1000000.0

if int(q_value[8]):

q_1=-1*q_1

q_2=int(q_value[16:23])/1000000.0

if int(q_value[15]):

q_2=-1*q_2

q_3=int(q_value[24:31])/1000000.0

if int(q_value[23]):

q_3=-1*q_3

imuMsg = Imu()

stamp = rospy.get_rostime()

imuMsg.header.stamp, imuMsg.header.frame_id = stamp, "imu_link"

imuMsg.orientation.x=0.707107*q_0

imuMsg.orientation.y=0.707107*q_3

imuMsg.orientation.z=-0.707107*q_2

imuMsg.orientation.w=-0.707107*q_1

#imuMsg.orientation_covariance = cov_orientation

imuMsg.angular_velocity.x, imuMsg.angular_velocity.y, imuMsg.angular_velocity.z = an_y,-1.0*an_x,-1.0*an_z

#imuMsg.angular_velocity_covariance = cov_angular_velocity

imuMsg.linear_acceleration.x, imuMsg.linear_acceleration.y, imuMsg.linear_acceleration.z = -1.0*x_acc,-1.0*y_acc,-1.0*z_acc

#imuMsg.linear_acceleration_covariance = cov_linear_acceleration

imuPub.publish(imuMsg)

rate.sleep()

except:

Hex_str=codecs.decode('7705000C0011','hex')

s.write(Hex_str)

data= str(binascii.b2a_hex(s.read(6)))

print('ANSER TYPE SETTING SUCCESS')

pass