卷积神经网络分类实战:疫情期间戴口罩识别

今天在云创大数据人工智能课程学习了用alexnet做了一个是否戴口罩的二分类问题 ,笔记如下

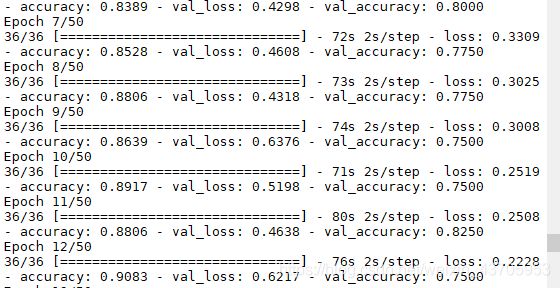

首先总结几个重要的知识点:

图片的数据格式

工业显示系统均通过RGB(red, green, blue)三色合成色彩, 计算机图像数据的存储也是保存RGB三通道的像素图像, 图像即三通道的像素值矩阵,矩阵的大小即图像的分辨率 。比如分辨率为500x400的图像,储存为500x400x3的三阶数组 。

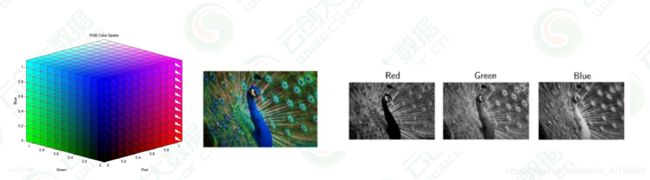

卷积

卷积就是一种算子,卷积运算将一个矩阵与其同样的大小的卷积核执行矩阵点乘 。可以实现图片的提取核压缩, 本质上也是一个滤波器 。看这个图就很非常清楚了

补零(pading)和步长可以控制映射后图片的尺寸 。补零就是四周补充全为0的值,步长控制每次移动的跨度。

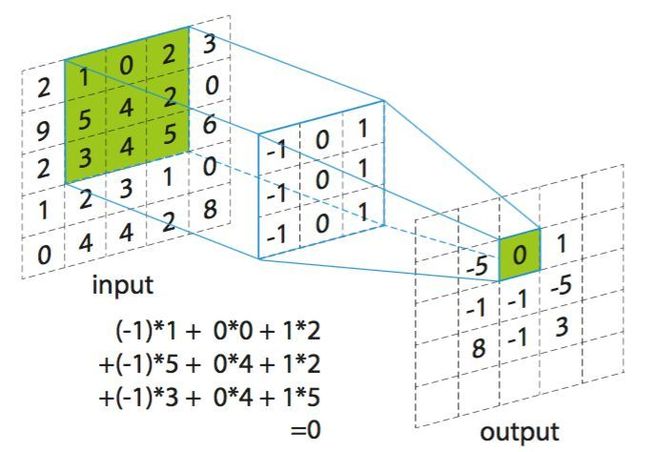

池化

池化(Pooling)是卷积神经网络中的一个重要的概念,它实际上是一种形式的降采样。有多种不同形式的非线性池化函数,而其中“最大池化(Max pooling)”是最为常见的。它是将输入的图像划分为若干个矩形区域,对每个子区域输出最大值。直觉上,这种机制能够有效的原因在于,在发现一个特征之后,它的精确位置远不及它和其他特征的相对位置的关系重要。池化层会不断地减小数据的空间大小,因此参数的数量和计算量也会下降,这在一定程度上也控制了过拟合。

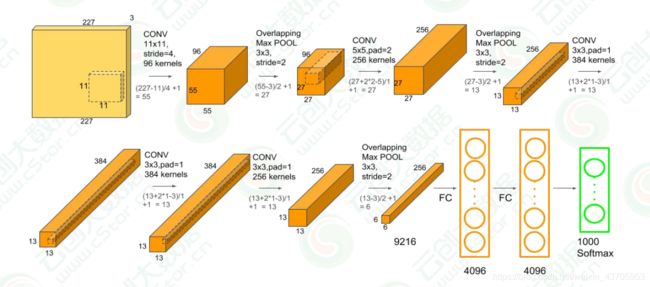

Alexnet

Alexnet共有八层, 包括五层卷积层和三层全连接层。

Data->

(layer1) conv1->relu1->LRN1->pooling1->

(layer2)conv2->relu2->LRN2->pooling2->

(layer3)conv3-relu3->

(layer4)conv4-relu4->

(layer5)conv5->relu5->pooling5->

(layer6)fc6->relu6->drop6->

(layer7)fc7->relu7->drop7->

(layer8)fc8

数据集

数据集分类两类,一类是戴口罩的图像,一类是不带口罩的图像 。分别如下

代码展示

import os

import glob

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers

# -----------------------------------------------------------------------------

resize = 224 # 重设大小

total_num = 200

train_num = 160

epochs = 50

batch_size = 10

# -----------------------------------------------------------------------------

dir_data = './01_classify/train/' # 数据路径

dir_mask = os.path.join(dir_data, 'mask')

dir_nomask = os.path.join(dir_data, 'nomask')

assert os.path.exists(dir_mask), 'Could not find ' + dir_mask

assert os.path.exists(dir_nomask), 'Could not find ' + dir_nomask

fpath_mask = [os.path.abspath(fp) for fp in glob.glob(os.path.join(dir_mask, '*.jpg'))]

fpath_nomask = [os.path.abspath(fp) for fp in glob.glob(os.path.join(dir_nomask, '*.jpg'))]

num_mask = len(fpath_mask) # 样本量

num_nomask = len(fpath_nomask)

label_mask = [0] * num_mask # 标签

label_nomask = [1] * num_nomask

print('#mask: ', num_mask)

print('#nomask: ', num_nomask)

RATIO_TEST = 0.1 # 测试样本比列

num_mask_test = int(num_mask * RATIO_TEST)

num_nomask_test = int(num_nomask * RATIO_TEST)

# train

fpath_train = fpath_mask[num_mask_test:] + fpath_nomask[num_nomask_test:]

label_train = label_mask[num_mask_test:] + label_nomask[num_nomask_test:]

# test

fpath_test = fpath_mask[:num_mask_test] + fpath_nomask[:num_nomask_test]

label_test = label_mask[:num_mask_test] + label_nomask[:num_nomask_test]

num_train = len(fpath_train)

num_test = len(fpath_test)

print(num_train)

print(num_test)

def preproc(fpath, label): # 读取图片

image_byte = tf.io.read_file(fpath)

image = tf.io.decode_image(image_byte)

image_resize = tf.image.resize_with_pad(image, 224, 224)

image_norm = tf.cast(image_resize, tf.float32) / 255.

label_onehot = tf.one_hot(label, 2)

return image_norm, label_onehot

dataset_train = tf.data.Dataset.from_tensor_slices((fpath_train, label_train))

dataset_train = dataset_train.shuffle(num_train).repeat()

dataset_train = dataset_train.map(preproc, num_parallel_calls=tf.data.experimental.AUTOTUNE)

dataset_train = dataset_train.batch(batch_size).prefetch(tf.data.experimental.AUTOTUNE)

dataset_test = tf.data.Dataset.from_tensor_slices((fpath_test, label_test))

dataset_test = dataset_test.shuffle(num_test).repeat()

dataset_test = dataset_test.map(preproc, num_parallel_calls=tf.data.experimental.AUTOTUNE)

dataset_test = dataset_test.batch(batch_size).prefetch(tf.data.experimental.AUTOTUNE)

# -----------------------------------------------------------------------------

# AlexNet

model = keras.Sequential(name='Alexnet') # 搭建模型

model.add(layers.Conv2D(filters=96, kernel_size=(11,11),

strides=(4,4), padding='valid',

input_shape=(resize,resize,3),

activation='relu'))

model.add(layers.BatchNormalization())

model.add(layers.MaxPooling2D(pool_size=(3,3),

strides=(2,2),

padding='valid'))

model.add(layers.Conv2D(filters=256, kernel_size=(5,5),

strides=(1,1), padding='same',

activation='relu'))

model.add(layers.BatchNormalization())

model.add(layers.MaxPooling2D(pool_size=(3,3),

strides=(2,2),

padding='valid'))

model.add(layers.Conv2D(filters=384, kernel_size=(3,3),

strides=(1,1), padding='same',

activation='relu'))

model.add(layers.Conv2D(filters=384, kernel_size=(3,3),

strides=(1,1), padding='same',

activation='relu'))

model.add(layers.Conv2D(filters=256, kernel_size=(3,3),

strides=(1,1), padding='same',

activation='relu'))

model.add(layers.MaxPooling2D(pool_size=(3,3),

strides=(2,2), padding='valid'))

model.add(layers.Flatten())

model.add(layers.Dense(4096, activation='relu'))

model.add(layers.Dropout(0.5))

model.add(layers.Dense(4096, activation='relu'))

model.add(layers.Dropout(0.5))

model.add(layers.Dense(1000, activation='relu'))

model.add(layers.Dropout(0.5))

# Output Layer

model.add(layers.Dense(2, activation='softmax'))

# Training

model.compile(loss='categorical_crossentropy', # loss

optimizer='sgd',

metrics=['accuracy'])

history = model.fit(dataset_train,

steps_per_epoch = num_train//batch_size,

epochs = epochs,

validation_data = dataset_test,

validation_steps = num_test//batch_size,

verbose = 1)

# scores = model.evaluate(train_data, train_label, verbose=1)

scores = model.evaluate(dataset_train, steps=num_train//batch_size, verbose=1)

print(scores)

# scores = model.evaluate(test_data, test_label, verbose=1)

scores = model.evaluate(dataset_test, steps=num_test//batch_size, verbose=1)

print(scores)

model.save('./model/mask.h5')

# Record loss and acc

history_dict = history.history

train_loss = history_dict['loss']

train_accuracy = history_dict['accuracy']

val_loss = history_dict['val_loss']

val_accuracy = history_dict['val_accuracy']

预测

import cv2

#import tensorflow as tf

#from tensorflow import keras

from tensorflow.keras.models import load_model

resize = 224

label = ('带口罩!', '没戴口罩了')

image = cv2.resize(cv2.imread('./01_classify/test/test.jpg'),(resize,resize))

image = image.astype("float") / 255.0

image = image.reshape((1, image.shape[0], image.shape[1], image.shape[2]))

model = load_model('./01_classify/model/ready_mask.h5')

predict = model.predict(image)

i = predict.argmax(axis=1)[0]

print(label[i])

>>>戴口罩了

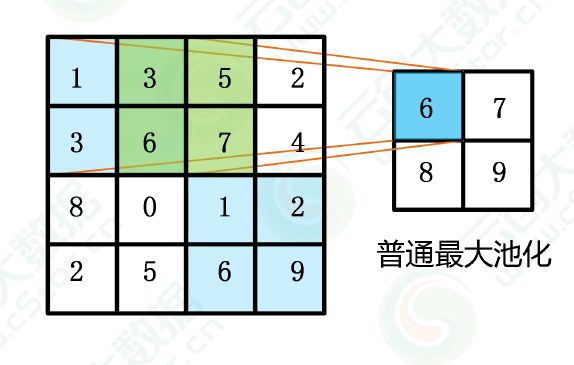

但是如果用测试集测试,模型效果并不好, 对于这个问题而言,可能是训练数据质量不高,有的是全身图,有的是侧脸图, 而且数据量较小。