Image Segmentation: the Mean-Shift Algorithm (C++ & GDAL)


- I. Principle of Mean-Shift Segmentation

- II. Segmentation Workflow

- III. Code Example

  - 3.1 Implementation with C++ & GDAL

  - 3.2 Segmentation Results

  - 3.3 Analysis of Results

I. Principle of Mean-Shift Segmentation

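Mean-Shift is a non-parametric, multi-modal segmentation method. Its basic computational module is a conventional pattern-recognition procedure: the image is segmented by analyzing its feature space and clustering in that space. By directly estimating the local maxima of the probability density function of the feature space, Mean-Shift obtains the density modes of the unknown classes and locates them, and then assigns each point to the cluster associated with its mode.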

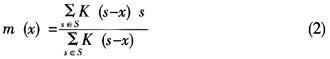

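Let S be a finite set in the n-dimensional space X, and let K be the characteristic function of the λ-ball in X, i.e. the flat kernel

$$K(x)=\begin{cases}1, & \|x\|\le\lambda\\ 0, & \|x\|>\lambda\end{cases}$$

The sample mean at a point x ∈ X is then

$$m(x)=\frac{\sum_{s\in S}K(s-x)\,s}{\sum_{s\in S}K(s-x)}$$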

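Fukunaga and Hostetler, in their paper, called the difference m(x) - x the mean shift. The Mean-Shift algorithm repeatedly moves data points to their sample means, and in every iteration the update s ← m(s) is applied to all s ∈ S simultaneously. Fuzzy clustering algorithms, including maximum-entropy clustering, as well as the widely used k-means algorithm, are limiting special cases of Mean-Shift. As a clustering method, Mean-Shift moves each point in the direction of increasing estimated density, and its convergence points are the local maxima of the density, where the density gradient vanishes; each such local maximum corresponds to a mode of the feature space.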
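The update s ← m(s), applied to every point at once, can be sketched for one-dimensional data with the flat kernel K. This is a minimal illustration, not part of the original program; `flatKernel`, `sampleMean`, `blurringStep` and `blurringMeanShift`, as well as the tolerance and iteration cap, are hypothetical choices made for the example.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Illustrative sketch; all names here are hypothetical, not from the original listing.

// Flat kernel: characteristic function of the lambda-ball (an interval in 1-D).
double flatKernel(double x, double lambda) {
    return std::fabs(x) <= lambda ? 1.0 : 0.0;
}

// Sample mean m(x): average of the points of S lying within lambda of x.
double sampleMean(const std::vector<double>& S, double x, double lambda) {
    double num = 0.0, den = 0.0;
    for (double s : S) {
        double k = flatKernel(s - x, lambda);
        num += k * s;
        den += k;
    }
    assert(den > 0.0); // holds when x is itself a member of S
    return num / den;
}

// One iteration: s <- m(s) for all s simultaneously, i.e. every mean is
// computed from the old set before any point moves.
std::vector<double> blurringStep(const std::vector<double>& S, double lambda) {
    std::vector<double> next(S.size());
    for (std::size_t i = 0; i < S.size(); ++i) next[i] = sampleMean(S, S[i], lambda);
    return next;
}

// Repeat until no point moves by more than tol, or maxIter is reached.
std::vector<double> blurringMeanShift(std::vector<double> S, double lambda,
                                      double tol = 1e-9, int maxIter = 100) {
    for (int it = 0; it < maxIter; ++it) {
        std::vector<double> next = blurringStep(S, lambda);
        double maxMove = 0.0;
        for (std::size_t i = 0; i < S.size(); ++i)
            maxMove = std::fmax(maxMove, std::fabs(next[i] - S[i]));
        S = next;
        if (maxMove <= tol) break;
    }
    return S;
}
```

With S = {0, 0.5, 1, 10, 10.5} and λ = 1, the first iteration already moves the points onto two values, 0.5 and 10.25, one per cluster, and the second iteration leaves them unchanged.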

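To estimate the probability density function, Mean-Shift usually uses the Parzen-window method, i.e. a kernel density estimator. Given n data points $x_i$, i = 1, 2, …, n, in the d-dimensional space $\mathbb{R}^d$, the multivariate kernel density estimator at a point x, built from a kernel K(x) and a symmetric positive-definite d×d bandwidth matrix H, is

$$\hat f(x)=\frac{1}{n}\sum_{i=1}^{n}K_H(x-x_i) \tag{3}$$

where

$$K_H(x)=|H|^{-1/2}\,K\!\left(H^{-1/2}x\right) \tag{4}$$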

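In general, the d-variate kernel K(x) is a bounded function satisfying

$$\int_{\mathbb{R}^d}K(x)\,dx=1,\qquad \lim_{\|x\|\to\infty}\|x\|^{d}K(x)=0,\qquad \int_{\mathbb{R}^d}x\,K(x)\,dx=0,\qquad \int_{\mathbb{R}^d}x\,x^{\top}K(x)\,dx=c_K I \tag{5}$$

where $c_K$ is a constant. For image segmentation, the multivariate kernel K(x) is taken to be the radially symmetric kernel $K^S(x)=a_{k,d}K_1(\|x\|)$, where $K_1$ is a symmetric univariate kernel, and K(x) satisfies

$$K(x)=c_{k,d}\,k\!\left(\|x\|^{2}\right) \tag{6}$$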

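where k(x), defined for x ≥ 0, is called the profile of the kernel, and $c_{k,d}$ is the normalization constant that makes K(x) integrate to 1. The bandwidth matrix H is usually chosen either diagonal, $H=\operatorname{diag}[h_1^2,\dots,h_d^2]$, or proportional to the identity matrix, $H=h^2I$. The clear advantage of $H=h^2I$ is that only a single bandwidth parameter h > 0 is needed; however, as Eq. (4) shows, the validity of a Euclidean metric for the feature space must then be confirmed first. With a single bandwidth parameter h, Eq. (3) becomes the well-known expression: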

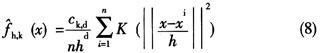

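Substituting Eq. (6) into the expression above gives the general kernel density estimator in profile notation:

$$\hat f_{h,K}(x)=\frac{c_{k,d}}{nh^{d}}\sum_{i=1}^{n}k\!\left(\left\|\frac{x-x_i}{h}\right\|^{2}\right) \tag{8}$$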
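The profile-notation estimator can be evaluated directly. The d-dimensional sketch below assumes the Epanechnikov profile k(x) = 1 - x for 0 ≤ x ≤ 1 (the text does not prescribe a profile); for this profile the normalization constant is $c_{k,d}=(d+2)/(2c_d)$, where $c_d$ is the volume of the unit d-ball. The names `epanechnikovProfile`, `epanechnikovConstant` and `kernelDensity` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Illustrative sketch; names are hypothetical, not from the original listing.
using Point = std::vector<double>;

// Epanechnikov profile: k(x) = 1 - x for 0 <= x <= 1, otherwise 0.
double epanechnikovProfile(double x) {
    return (x >= 0.0 && x <= 1.0) ? 1.0 - x : 0.0;
}

// c_{k,d} = (d + 2) / (2 c_d), with c_d the volume of the unit d-ball,
// so that K(x) = c_{k,d} k(||x||^2) integrates to 1.
double epanechnikovConstant(int d) {
    const double pi = std::acos(-1.0);
    double unitBallVolume = std::pow(pi, d / 2.0) / std::tgamma(d / 2.0 + 1.0);
    return (d + 2) / (2.0 * unitBallVolume);
}

// f_hat(x) = c_{k,d} / (n h^d) * sum_i k(||(x - x_i) / h||^2)
double kernelDensity(const Point& x, const std::vector<Point>& data, double h) {
    assert(!data.empty() && h > 0.0);
    const int d = static_cast<int>(x.size());
    double sum = 0.0;
    for (const Point& xi : data) {
        double sq = 0.0; // squared, bandwidth-scaled distance ||(x - x_i) / h||^2
        for (int j = 0; j < d; ++j) {
            double u = (x[j] - xi[j]) / h;
            sq += u * u;
        }
        sum += epanechnikovProfile(sq);
    }
    return epanechnikovConstant(d) * sum / (data.size() * std::pow(h, d));
}
```

For a single data point at the origin and h = 1, the estimate at the origin is 3/4 when d = 1 and 2/π when d = 2, which are the peak heights of the normalized Epanechnikov kernel in those dimensions.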

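For a feature space with underlying density function f(x), the first step of Mean-Shift analysis is to find the modes of this density and then cluster the data around them. The modes lie among the zeros of the gradient, ∇f(x) = 0, and the Mean-Shift procedure locates these zeros by estimating the density gradient directly, without estimating the density itself.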
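In profile notation, the gradient of the estimator is proportional to the mean-shift vector $\sum_i x_i\,g(\|(x-x_i)/h\|^2)/\sum_i g(\|(x-x_i)/h\|^2) - x$ with $g(x)=-k'(x)$, so repeatedly moving to the weighted mean climbs to a zero of the gradient. The 1-D sketch below assumes the normal profile $k(x)=e^{-x/2}$, for which g is proportional to k; the constant cancels in the ratio. `meanShiftMode`, the tolerance and the iteration cap are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Illustrative sketch; the name and parameters are hypothetical.
// Mean-shift mode seeking on 1-D data with the normal profile k(x) = exp(-x/2).
// g(x) = -k'(x) = exp(-x/2) / 2; the factor 1/2 cancels in the weighted mean,
// so the weights below use exp(-x/2) directly.
double meanShiftMode(const std::vector<double>& data, double start, double h,
                     double tol = 1e-10, int maxIter = 1000) {
    assert(!data.empty() && h > 0.0);
    double y = start;
    for (int it = 0; it < maxIter; ++it) {
        double num = 0.0, den = 0.0;
        for (double xi : data) {
            double u = (y - xi) / h;
            double w = std::exp(-0.5 * u * u); // g(||(y - x_i) / h||^2)
            num += w * xi;
            den += w;
        }
        if (den == 0.0) break;            // all weights underflowed: y is far from the data
        double next = num / den;          // y plus the mean-shift vector
        bool converged = std::fabs(next - y) < tol;
        y = next;
        if (converged) break;             // the mean-shift vector has (numerically) vanished
    }
    return y;
}
```

Started at 4.8 on the data {0, 0.1, 5, 5.1} with h = 0.5, the iteration settles at 5.05, the mode of the right-hand pair; the two points near 0 receive negligible weight.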

II. Segmentation Workflow

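To apply Mean-Shift to segmentation, let $x_i$ and $z_i$ (i = 1, 2, …, n) be the d-dimensional input pixels and the filtered-image pixels in the joint spatial-range domain, respectively, and let $L_i$ be the label of the i-th pixel in the segmented image. The procedure then consists of the following steps: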

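- Run the mean-shift filtering procedure on the image and store, for every pixel, the d-dimensional convergence point in $z_i$, i.e. $z_i = y_{i,c}$.

- Delineate the clusters $\{C_p\}_{p=1\dots m}$ in the joint domain by grouping together all $z_i$ that are closer than $h_s$ in the spatial domain and closer than $h_r$ in the range domain.

- For each i = 1, …, n, assign the label $L_i = \{p \mid z_i \in C_p\}$.

- Eliminate spatial regions containing fewer than M pixels.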
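Steps 2 and 3 can be sketched as follows, assuming each $z_i$ is stored as a spatial position plus a three-band color and that Euclidean distances are used in both domains. The brute-force pairwise comparison and the union-find structure are illustrative choices, not the author's implementation; `JointPoint`, `findRoot` and `labelClusters` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <numeric>
#include <vector>

// Illustrative sketch; names are hypothetical, not from the original listing.
// Convergence point z_i in the joint spatial-range domain.
struct JointPoint {
    double row, col;             // spatial part
    std::array<double, 3> color; // range part (three bands)
};

// Root of i in a union-find forest, with path halving.
std::size_t findRoot(std::vector<std::size_t>& parent, std::size_t i) {
    while (parent[i] != i) {
        parent[i] = parent[parent[i]];
        i = parent[i];
    }
    return i;
}

// Join every pair closer than hs in space and closer than hr in color, then give
// point i the index p of its cluster C_p (numbered 0..m-1 by first appearance).
std::vector<int> labelClusters(const std::vector<JointPoint>& z, double hs, double hr) {
    assert(hs > 0.0 && hr > 0.0);
    const std::size_t n = z.size();
    std::vector<std::size_t> parent(n);
    std::iota(parent.begin(), parent.end(), std::size_t{0});
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t j = i + 1; j < n; ++j) {
            double ds = std::hypot(z[i].row - z[j].row, z[i].col - z[j].col);
            double dr2 = 0.0;
            for (int b = 0; b < 3; ++b) {
                double d = z[i].color[b] - z[j].color[b];
                dr2 += d * d;
            }
            if (ds < hs && std::sqrt(dr2) < hr) {
                std::size_t ri = findRoot(parent, i), rj = findRoot(parent, j);
                if (ri != rj) parent[ri] = rj; // merge the two clusters
            }
        }
    }
    std::vector<int> rootLabel(n, -1), labels(n);
    int nextLabel = 0;
    for (std::size_t i = 0; i < n; ++i) {
        std::size_t r = findRoot(parent, i);
        if (rootLabel[r] < 0) rootLabel[r] = nextLabel++;
        labels[i] = rootLabel[r];
    }
    return labels;
}
```

The grouping is transitive: two convergence points farther apart than $h_s$ still share a cluster when a chain of sufficiently close points links them.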

Input: a remote-sensing image. Output: the segmented patches.
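The optional last step can be sketched on a row-major label image. The text only says that regions smaller than M pixels are eliminated; absorbing each small region into the label of an adjacent region, the use of 4-connectivity, and the name `eliminateSmallRegions` are assumptions made for this example.

```cpp
#include <cassert>
#include <queue>
#include <vector>

// Illustrative sketch; the name and the merge policy are hypothetical.
// A region is a 4-connected set of pixels with the same label; a region with
// fewer than M pixels takes the label of the first adjacent pixel found outside it.
void eliminateSmallRegions(std::vector<int>& labels, int rows, int cols, int M) {
    assert(static_cast<int>(labels.size()) == rows * cols);
    std::vector<bool> visited(labels.size(), false);
    const int dr[4] = {-1, 1, 0, 0};
    const int dc[4] = {0, 0, -1, 1};
    for (int start = 0; start < rows * cols; ++start) {
        if (visited[start]) continue;
        const int label = labels[start];
        std::vector<int> region;   // pixel indices of this region
        std::queue<int> q;         // breadth-first search frontier
        bool hasNeighbour = false; // found an adjacent pixel with another label?
        int neighbourLabel = 0;
        q.push(start);
        visited[start] = true;
        while (!q.empty()) {
            int idx = q.front();
            q.pop();
            region.push_back(idx);
            int r = idx / cols, c = idx % cols;
            for (int k = 0; k < 4; ++k) {
                int nr = r + dr[k], nc = c + dc[k];
                if (nr < 0 || nr >= rows || nc < 0 || nc >= cols) continue;
                int nidx = nr * cols + nc;
                if (labels[nidx] != label) {
                    if (!hasNeighbour) { hasNeighbour = true; neighbourLabel = labels[nidx]; }
                } else if (!visited[nidx]) {
                    visited[nidx] = true;
                    q.push(nidx);
                }
            }
        }
        // Relabel the region if it is too small and has a neighbour to join.
        if (static_cast<int>(region.size()) < M && hasNeighbour)
            for (int idx : region) labels[idx] = neighbourLabel;
    }
}
```

This is a single pass: a region's size is measured once, so a region that grows by absorbing a small neighbour is not re-examined.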

III. Code Example

3.1 Implementation with C++ & GDAL

```cpp
#include "gdal_priv.h"
#include "cpl_conv.h"
#include <opencv2/opencv.hpp>
#include <cmath>
#include <cstdlib>
#include <iostream>

using namespace cv;
using namespace std;

// [...] The source listing is truncated here: the aboutmat struct, Initialization()
// and the beginning of SingleMeanShift() were lost. The surviving fragment resumes
// in the middle of SingleMeanShift():
        ...(coreYCoordinate, coreXCoordinate)[0] = corePointNum;
        coreIteration++;
    }
    else
    {
        coreVector[1] = 0;
        coreVector[0] = 0;
        newCoreYCoordinate = coreYCoordinate; // new coordinates
        newCoreXCoordinate = coreXCoordinate;
    } // prevent overflow

    if (newDataXY.at<Vec2i>(newCoreYCoordinate, newCoreXCoordinate)[0] >= 0) // the target position already has a mode
    {
        // inherit the mode coordinates of the target position
        newDataXY.at<Vec2i>(coreYCoordinate, coreXCoordinate)[0] = newDataXY.at<Vec2i>(newCoreYCoordinate, newCoreXCoordinate)[0]; // row
        newDataXY.at<Vec2i>(coreYCoordinate, coreXCoordinate)[1] = newDataXY.at<Vec2i>(newCoreYCoordinate, newCoreXCoordinate)[1]; // column
    }
    else if ((abs(coreVector[0]) < 1 && abs(coreVector[1]) < 1) || (coreIteration >= 100)) // center found, or 100 steps reached (also bounds the recursion depth)
    {
        // this position becomes a new mode
        corePointXY.at<int>(0, corePointNum) = coreYCoordinate;
        corePointXY.at<int>(1, corePointNum) = coreXCoordinate;
        newDataXY.at<Vec2i>(coreYCoordinate, coreXCoordinate)[0] = coreYCoordinate; // row
        newDataXY.at<Vec2i>(coreYCoordinate, coreXCoordinate)[1] = coreXCoordinate; // column
        corePointNum++; // one more mode
    }
    else
    {
        // keep moving from the new position, then inherit the mode reached there
        SingleMeanShift(h, w, newCoreYCoordinate, newCoreXCoordinate,
            spacialH, colorRadiusSpace, newDataXY, corePointXY, dataColorSpatial, coreIteration, corePointNum);
        newDataXY.at<Vec2i>(coreYCoordinate, coreXCoordinate)[0] = newDataXY.at<Vec2i>(newCoreYCoordinate, newCoreXCoordinate)[0]; // row
        newDataXY.at<Vec2i>(coreYCoordinate, coreXCoordinate)[1] = newDataXY.at<Vec2i>(newCoreYCoordinate, newCoreXCoordinate)[1]; // column
    }
    /*delete xGaussNumerator;
    delete yGaussNumerator;
    delete xGaussDenominator;
    delete yGaussDenominator;
    delete []coreVector;*/
}

Mat MeanShift(aboutmat data, int spacialH, int colorR, Mat &corePointXY)
{
    Mat dataColorSpatial = data.mat;
    int iWidth = data.width;
    int iHeight = data.height;
    int colorRadiusSpace = pow(colorR, 2) + pow(colorR, 2) + pow(colorR, 2); // squared color radius summed over three bands
    Mat newDataXY(iHeight, iWidth, CV_32SC2, Scalar::all(-1)); // mode coordinates reached by each pixel; -1 = not yet assigned
    int corePointNum = 0; // number of modes found so far
    for (int h = 0; h < iHeight; h++)
    {
        for (int w = 0; w < iWidth; w++)
        {
            // run mean shift from every pixel
            int coreIteration = 0; // number of steps this pixel has moved
            if (newDataXY.at<Vec2i>(h, w)[0] >= 0)
                continue; // already assigned while tracing another pixel
            // single mean-shift trajectory
            SingleMeanShift(h, w, h, w, spacialH, colorRadiusSpace, newDataXY, corePointXY, dataColorSpatial, coreIteration, corePointNum);
        }
    }
    dataColorSpatial.release(); // drop this header's reference (data.mat still holds the pixels)
    return newDataXY;
}

// OpenCV's pyramid-based iterative approach: fast, but hard to post-process.
// Pick seed points at a fixed interval (systematic sampling; random sampling also works),
// then run mean-shift iterations from each seed to find the final mode positions.
Mat randomPointmeanShift(aboutmat data, int spacialH, int colorR)
{
    int coreInterval = 500; // sampling interval (pixels)
    Mat dataColor = data.mat;
    int iWidth = data.width;
    int iHeight = data.height;
    int pointNum = int(iWidth / coreInterval) * int(iHeight / coreInterval);
    // systematic sampling of the seed points
    Mat soursePoint(iHeight, iWidth, CV_8U, Scalar::all(0)); // seed mask (1 = seed)
    Mat point_XY(2, pointNum, CV_32S, Scalar::all(0));
    int n = 0;
    for (int i = coreInterval - 1; i < iHeight; i += coreInterval) // start at coreInterval-1 so that n never exceeds pointNum
    {
        for (int j = coreInterval - 1; j < iWidth; j += coreInterval)
        {
            soursePoint.at<uchar>(i, j) = 1;
            point_XY.at<int>(0, n) = i; // store the seed's row and column
            point_XY.at<int>(1, n) = j;
            n++;
        }
    }
    /* check that the coordinates are correct
    cout << "point_XY.at<int>(0, n)" << point_XY.at<int>(0, 4000) << endl;
    cout << "point_XY.at<int>(1, n)" << point_XY.at<int>(1, 4000) << endl;*/
    int colorRadiusSpace = pow(colorR, 2) + pow(colorR, 2) + pow(colorR, 2);
    // mean-shift iterations
    for (int p = 0; p < pointNum; p++) // loop over the seeds
    {
        int coreIteration = 0;
        do {
            int coreVector[2] = { 0, 0 };
            int coreNeiborNum = 0;
            for (int i = point_XY.at<int>(0, p) - spacialH; i <= point_XY.at<int>(0, p) + spacialH; ++i)
            {
                if (i < iHeight && i >= 0)
                {
                    for (int j = point_XY.at<int>(1, p) - spacialH; j <= point_XY.at<int>(1, p) + spacialH; ++j)
                    {
                        if (j >= 0 && j < iWidth)
                        {
                            // color difference between the neighbor and the current center, band by band
                            Vec3b centre = dataColor.at<Vec3b>(point_XY.at<int>(0, p), point_XY.at<int>(1, p));
                            int rDistance = pow(dataColor.at<Vec3b>(i, j)[0] - centre[0], 2);
                            int gDistance = pow(dataColor.at<Vec3b>(i, j)[1] - centre[1], 2);
                            int bDistance = pow(dataColor.at<Vec3b>(i, j)[2] - centre[2], 2);
                            int colorDistance = rDistance + gDistance + bDistance;
                            if (colorDistance <= colorRadiusSpace) // within the color radius
                            {
                                // accumulate the offset (all neighbors are weighted equally here)
                                coreVector[0] = coreVector[0] + i - point_XY.at<int>(0, p);
                                coreVector[1] = coreVector[1] + j - point_XY.at<int>(1, p);
                                coreNeiborNum += 1;
                            }
                        }
                    }
                }
            }
            if (coreNeiborNum != 0)
            {
                // mean-shift vector: move the seed by the average offset, clamped to the image
                coreVector[0] = coreVector[0] / coreNeiborNum;
                coreVector[1] = coreVector[1] / coreNeiborNum;
                point_XY.at<int>(0, p) = point_XY.at<int>(0, p) + coreVector[0];
                if (point_XY.at<int>(0, p) < 0) point_XY.at<int>(0, p) = 0;
                if (point_XY.at<int>(0, p) >= iHeight) point_XY.at<int>(0, p) = iHeight - 1;
                point_XY.at<int>(1, p) = point_XY.at<int>(1, p) + coreVector[1];
                if (point_XY.at<int>(1, p) < 0) point_XY.at<int>(1, p) = 0;
                if (point_XY.at<int>(1, p) >= iWidth) point_XY.at<int>(1, p) = iWidth - 1;
                coreIteration++;
                if (abs(coreVector[0]) <= 5 && abs(coreVector[1]) <= 5) // stop once the shift is small in both directions
                {
                    break;
                }
            }
            else
            {
                break;
            }
        } while (coreIteration <= 10);
        std::cout << "seed " << p << ": moved " << coreIteration << " times" << endl;
    }
    //cout << "seed coordinates" << point_XY << endl;
    return point_XY;
}

// Screen the modes and merge those with similar colors
Mat MergeCorePoint(Mat data, Mat sCorePoint_XY, Mat newDataXY, int colorDisparity)
{
    int colorDisparitySpace = pow(colorDisparity, 2) + pow(colorDisparity, 2) + pow(colorDisparity, 2);
    int mergeTimes = 0;
    for (int p1 = 0; p1 < sCorePoint_XY.cols; p1++)
    {
        if (sCorePoint_XY.at<int>(0, p1) != 0) // 0 marks an empty or already merged slot (this also skips modes in row 0)
        {
            for (int p2 = 0; p2 < sCorePoint_XY.cols; p2++) //p2=p1+1
            {
                if ((sCorePoint_XY.at<int>(0, p2) != 0) && (p1 != p2))
                {
                    // Method 1: merge when the summed squared difference over the three bands is small
                    /*int rPoColorDisparity = pow(data.at<Vec3b>(sCorePoint_XY.at<int>(0, p1), sCorePoint_XY.at<int>(1, p1))[0]
                        - data.at<Vec3b>(sCorePoint_XY.at<int>(0, p2), sCorePoint_XY.at<int>(1, p2))[0], 2);
                    int gPoColorDisparity = pow(data.at<Vec3b>(sCorePoint_XY.at<int>(0, p1), sCorePoint_XY.at<int>(1, p1))[1]
                        - data.at<Vec3b>(sCorePoint_XY.at<int>(0, p2), sCorePoint_XY.at<int>(1, p2))[1], 2);
                    int bPoColorDisparity = pow(data.at<Vec3b>(sCorePoint_XY.at<int>(0, p1), sCorePoint_XY.at<int>(1, p1))[2]
                        - data.at<Vec3b>(sCorePoint_XY.at<int>(0, p2), sCorePoint_XY.at<int>(1, p2))[2], 2);
                    int pointColorDisparity = rPoColorDisparity + gPoColorDisparity + bPoColorDisparity;
                    if (pointColorDisparity < colorDisparitySpace)
                    {
                        newDataXY.at<Vec2i>(sCorePoint_XY.at<int>(0, p2), sCorePoint_XY.at<int>(1, p2))[0] =
                            newDataXY.at<Vec2i>(sCorePoint_XY.at<int>(0, p1), sCorePoint_XY.at<int>(1, p1))[0];
                        newDataXY.at<Vec2i>(sCorePoint_XY.at<int>(0, p2), sCorePoint_XY.at<int>(1, p2))[1] =
                            newDataXY.at<Vec2i>(sCorePoint_XY.at<int>(0, p1), sCorePoint_XY.at<int>(1, p1))[1];
                        sCorePoint_XY.at<int>(0, p2) = 0;
                        sCorePoint_XY.at<int>(1, p2) = 0;
                    }*/

                    // Method 2: merge when every band differs by less than colorDisparity
                    int rPoDisparity = abs(data.at<Vec3b>(sCorePoint_XY.at<int>(0, p1), sCorePoint_XY.at<int>(1, p1))[0]
                        - data.at<Vec3b>(sCorePoint_XY.at<int>(0, p2), sCorePoint_XY.at<int>(1, p2))[0]);
                    int gPoDisparity = abs(data.at<Vec3b>(sCorePoint_XY.at<int>(0, p1), sCorePoint_XY.at<int>(1, p1))[1]
                        - data.at<Vec3b>(sCorePoint_XY.at<int>(0, p2), sCorePoint_XY.at<int>(1, p2))[1]);
                    int bPoDisparity = abs(data.at<Vec3b>(sCorePoint_XY.at<int>(0, p1), sCorePoint_XY.at<int>(1, p1))[2]
                        - data.at<Vec3b>(sCorePoint_XY.at<int>(0, p2), sCorePoint_XY.at<int>(1, p2))[2]);
                    if ((rPoDisparity < colorDisparity) && (gPoDisparity < colorDisparity) && (bPoDisparity < colorDisparity))
                    {
                        // redirect mode p2 to mode p1 and retire p2
                        newDataXY.at<Vec2i>(sCorePoint_XY.at<int>(0, p2), sCorePoint_XY.at<int>(1, p2))[0] =
                            newDataXY.at<Vec2i>(sCorePoint_XY.at<int>(0, p1), sCorePoint_XY.at<int>(1, p1))[0];
                        newDataXY.at<Vec2i>(sCorePoint_XY.at<int>(0, p2), sCorePoint_XY.at<int>(1, p2))[1] =
                            newDataXY.at<Vec2i>(sCorePoint_XY.at<int>(0, p1), sCorePoint_XY.at<int>(1, p1))[1];
                        sCorePoint_XY.at<int>(0, p2) = 0;
                        sCorePoint_XY.at<int>(1, p2) = 0;
                    }
                }
            }
        }
    }
    // collect the remaining modes
    int newCorePointNum = 0;
    for (int n = 0; n < sCorePoint_XY.cols; n++)
    {
        if (sCorePoint_XY.at<int>(0, n) != 0)
        {
            newCorePointNum++;
        }
    }
    Mat newCorePoint_XY(2, newCorePointNum, CV_32S, Scalar::all(0));
    int n1 = 0;
    for (int n = 0; n < sCorePoint_XY.cols; n++)
    {
        if (sCorePoint_XY.at<int>(0, n) != 0)
        {
            newCorePoint_XY.at<int>(0, n1) = sCorePoint_XY.at<int>(0, n);
            newCorePoint_XY.at<int>(1, n1) = sCorePoint_XY.at<int>(1, n);
            n1++;
        }
    }
    // print the color of each remaining mode (for checking)
    for (int i = 0; i < newCorePointNum; i++)
    {
        cout << "mode " << i + 1 << " color:";
        for (int c = 0; c < data.channels(); c++)
        {
            cout << " " << int(data.at<Vec3b>(newCorePoint_XY.at<int>(0, i), newCorePoint_XY.at<int>(1, i))[c]);
        }
        cout << endl;
    }
    Mat newDataColorSpa(data.rows, data.cols, CV_8UC3, Scalar::all(0)); // segmented output image
    for (int r = 0; r < newDataXY.rows; r++)
    {
        for (int c = 0; c < newDataXY.cols; c++)
        {
            // follow the chain of merged modes until reaching a mode that points to itself
            Vec2i target = newDataXY.at<Vec2i>(r, c);
            Vec2i next = newDataXY.at<Vec2i>(target[0], target[1]);
            while (next[0] != target[0] || next[1] != target[1])
            {
                target = next;
                next = newDataXY.at<Vec2i>(target[0], target[1]);
            }
            newDataXY.at<Vec2i>(r, c) = target;
            for (int ch = 0; ch < data.channels(); ch++)
            {
                // paint the pixel with the color of its final mode
                newDataColorSpa.at<Vec3b>(r, c)[ch] = data.at<Vec3b>(target[0], target[1])[ch];
            }
        }
    }
    return newDataColorSpa;
}

// Write the segmented image to a GeoTIFF file
void SaveImage(const char* pszRasterFile, Mat newData, const char* path)
{
    CPLSetConfigOption("GDAL_FILENAME_IS_UTF8", "NO"); // treat file names in the local code page (e.g. Chinese paths)
    // copy the pixel-interleaved Mat into a band-sequential buffer
    unsigned char *pBuf = new unsigned char[newData.cols * newData.rows * newData.channels()];
    for (int r = 1; r <= newData.channels(); ++r)
    {
        for (int i = 0; i < newData.rows; i++)
        {
            for (int j = 0; j < newData.cols; j++)
            {
                pBuf[(r - 1) * newData.rows * newData.cols + i * newData.cols + j] = newData.at<Vec3b>(i, j)[r - 1];
            }
        }
    }
    GDALAllRegister(); // register all drivers
    GDALDriver *poDriver = GetGDALDriverManager()->GetDriverByName("GTiff");
    if (poDriver == NULL) { delete[] pBuf; return; }
    GDALDataset *BiomassDataset = poDriver->Create(pszRasterFile, newData.cols, newData.rows, 3, GDT_Byte, NULL);
    if (BiomassDataset == NULL) { delete[] pBuf; return; }
    int panBandMap[3] = { 1, 2, 3 };
    // spacing arguments of 0 tell GDAL the buffer is packed band-sequentially
    BiomassDataset->RasterIO(GF_Write, 0, 0, newData.cols, newData.rows, pBuf, newData.cols, newData.rows, GDT_Byte, 3, panBandMap, 0, 0, 0);
    GDALClose(BiomassDataset);
    BiomassDataset = NULL;
    delete[] pBuf;
    pBuf = NULL;
}

int main()
{
    const char* path = "G:/ER03634.tif";                 // input image
    const char* pszRasterFile = "G:/result/Mean8_8.tif"; // output file
    aboutmat sourseData = Initialization(path);
    int spaceRadius = 8;    // spatial search radius
    int colorRadius = 2;    // color-space radius
    int colorDisparity = 4; // color threshold for merging modes
    Mat corePointXY(2, sourseData.width * sourseData.height / 2, CV_32S, Scalar::all(0)); // mode coordinates
    Mat newDataXY = MeanShift(sourseData, spaceRadius, colorRadius, corePointXY);
    //Mat soursePointXY = randomPointmeanShift(sourseData, spaceRadius, colorRadius);
    Mat newData = MergeCorePoint(sourseData.mat, corePointXY, newDataXY, colorDisparity);
    cout << newDataXY.col(2) << endl; // debug output: mode coordinates of column 2
    SaveImage(pszRasterFile, newData, path);
    return 0;
}
```
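`SaveImage` passes 0 for the pixel, line and band spacing of `RasterIO`, so GDAL expects a band-sequential buffer (all of band 1, then band 2, then band 3), whereas a `CV_8UC3` `Mat` stores the bands interleaved per pixel. The reordering can be isolated in a small standard-library function; `toBandSequential` is a hypothetical name, and the interleaved input is assumed to be a contiguous row-major buffer.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Illustrative sketch; the name is hypothetical, not from the original listing.
// Reorder a pixel-interleaved buffer (b0 b1 b2 | b0 b1 b2 | ...) into
// band-sequential order (all of band 0, then all of band 1, ...).
std::vector<unsigned char> toBandSequential(const std::vector<unsigned char>& interleaved,
                                            int rows, int cols, int bands) {
    const std::size_t plane = static_cast<std::size_t>(rows) * cols; // pixels per band
    assert(interleaved.size() == plane * bands);
    std::vector<unsigned char> out(interleaved.size());
    for (int b = 0; b < bands; ++b)
        for (std::size_t p = 0; p < plane; ++p) // p = row * cols + col
            out[b * plane + p] = interleaved[p * bands + b];
    return out;
}
```

This is the same index mapping as the triple loop in `SaveImage`. GDAL's `RasterIO` can also describe a pixel-interleaved buffer through non-zero pixel, line and band spacing arguments, which would avoid the extra copy.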

3.2 Segmentation Results

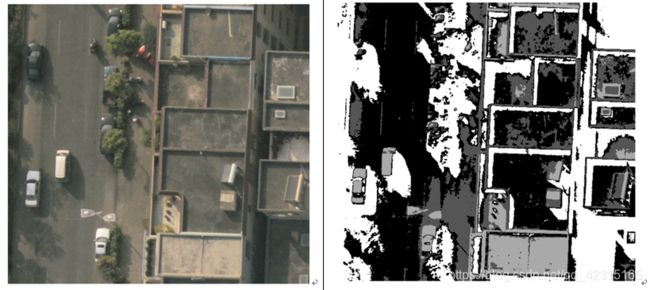

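First, the image was segmented with the OpenCV library function as a baseline; the comparison is shown in the figure (left: original image; right: result of the library function).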

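From left to right: 8-8-4, 8-8-16 and 16-4-4. In each triple, the first parameter is the spatial radius (pixels), the second is the color radius (gray levels), and the third is the minimum radius for merging classes. Unless stated otherwise, classes are merged when the total difference over the three bands is small.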

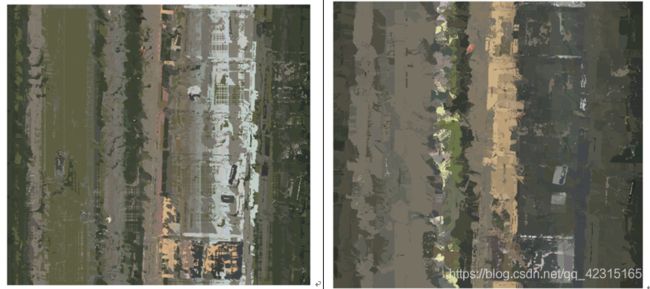

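Segmentation results for different spatial radii (left: 8; right: 16)

Segmentation results for different merging methods

Note: both use the 8-8-8 setting. Left: merging when the total difference over the three bands is small; right: merging when the difference in a single band is small.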
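`MergeCorePoint` contains two merge conditions: a summed squared difference over the three bands compared with 3·colorDisparity² (commented out in the listing), and a per-band test that requires every band to differ by less than colorDisparity (active in the listing). A standalone sketch shows how the two can disagree; `Color`, `mergeBySummedDifference` and `mergeByEachBand` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cstdlib>

// Illustrative sketch; names are hypothetical, not from the original listing.
using Color = std::array<int, 3>;

// Summed criterion: squared band differences added up and compared with 3 * d^2.
bool mergeBySummedDifference(const Color& a, const Color& b, int d) {
    assert(d >= 0);
    int sum = 0;
    for (int c = 0; c < 3; ++c) sum += (a[c] - b[c]) * (a[c] - b[c]);
    return sum < 3 * d * d;
}

// Per-band criterion: every band must differ by less than d.
bool mergeByEachBand(const Color& a, const Color& b, int d) {
    assert(d >= 0);
    for (int c = 0; c < 3; ++c)
        if (std::abs(a[c] - b[c]) >= d) return false;
    return true;
}
```

With d = 4, the colors (0, 0, 0) and (5, 0, 0) merge under the summed criterion (25 < 48) but not under the per-band one (5 ≥ 4): the summed test tolerates one large band difference when the other bands agree, while the per-band test does not.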

3.3 Analysis of Results

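Overall, the segmentation quality is poor, and because the code is not well optimized, segmentation takes a long time. Compared with OpenCV's Mean-Shift segmentation function, the code makes several improvements:

- 1. The library function searches for modes (points of maximum density) starting from randomly selected points; this code traverses every pixel instead, which is closer to the Mean-Shift principle but takes longer.

- 2. The kernel used in the density computation is changed from a uniform kernel to a Gaussian kernel, so points closer to the center contribute more to its density, which better reflects reality.

- 3. For smoothing, the library function applies a flood-fill algorithm to the modes it has found; this code smooths the image while searching for the modes, which avoids a second pass over the image and is also closer to the Mean-Shift principle.

- 4. Two methods are provided for merging classes, and either one can be selected.

The results for different parameters lead to the following conclusions: within a certain range, a larger spatial radius gives worse segmentation and a longer run time, and the same holds for the color radius; merging when the difference in a single band is small works better than merging when the total difference over the three bands is small.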
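Improvements 1 and 2 (every pixel used as a starting point, Gaussian weighting toward the center) can be illustrated with a standalone per-pixel iteration on a small image. This is not the author's `SingleMeanShift`, whose body is only partly preserved; the Gaussian width σ = spacialH / 2, the stopping rule of half a pixel, and the names `Image` and `meanShiftFromPixel` are assumptions. Like the listing, it shifts only the spatial position and uses the color at the current position as the reference, with the same 3·colorR² color threshold.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <utility>
#include <vector>

// Illustrative sketch; names and the sigma choice are hypothetical.
// A three-band image stored row-major.
struct Image {
    int rows, cols;
    std::vector<std::array<int, 3>> px;
    const std::array<int, 3>& at(int r, int c) const { return px[r * cols + c]; }
};

// Follow one pixel's mean-shift trajectory in the spatial domain. Neighbors in the
// (2*spacialH+1)^2 window whose squared color difference from the current position
// is at most 3*colorR^2 contribute with Gaussian weight exp(-dist^2 / (2 sigma^2)).
std::pair<int, int> meanShiftFromPixel(const Image& img, int r, int c,
                                       int spacialH, int colorR, int maxIter = 100) {
    assert(spacialH > 0 && colorR >= 0);
    const double sigma = spacialH / 2.0; // assumed Gaussian width
    for (int it = 0; it < maxIter; ++it) {
        const auto& centre = img.at(r, c);
        double sumW = 0.0, sumR = 0.0, sumC = 0.0;
        for (int i = r - spacialH; i <= r + spacialH; ++i) {
            for (int j = c - spacialH; j <= c + spacialH; ++j) {
                if (i < 0 || i >= img.rows || j < 0 || j >= img.cols) continue;
                const auto& p = img.at(i, j);
                int colorDist2 = 0;
                for (int b = 0; b < 3; ++b) colorDist2 += (p[b] - centre[b]) * (p[b] - centre[b]);
                if (colorDist2 > 3 * colorR * colorR) continue; // outside the color window
                double d2 = double(i - r) * (i - r) + double(j - c) * (j - c);
                double w = std::exp(-d2 / (2.0 * sigma * sigma)); // Gaussian spatial weight
                sumW += w;
                sumR += w * (i - r);
                sumC += w * (j - c);
            }
        }
        // The current pixel always passes the color test, so sumW > 0.
        double shiftR = sumR / sumW, shiftC = sumC / sumW;
        if (std::fabs(shiftR) < 0.5 && std::fabs(shiftC) < 0.5) break; // converged
        r += static_cast<int>(std::lround(shiftR)); // stays inside the image: the shift
        c += static_cast<int>(std::lround(shiftC)); // is a weighted mean of in-image offsets
    }
    return {r, c};
}
```

On a 1×7 strip whose first three pixels are black and the rest bright, starting at column 0 with spacialH = 2 and colorR = 10, the pixel moves once to column 1, where the weights of its black neighbors balance, and stops there.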