Kubernetes Cluster Setup and Application Deployment Operations Manual


Prepared for a big data platform

Note: some supplementary commands and scripts are provided for reference only and do not need to be executed.

Kubernetes Cluster Setup

- Environment configuration

Prepare three machines:

| No. | Server | Node |
| --- | --- | --- |
| 1 | 10.1.219.11 | master |
| 2 | 10.1.219.12 | node01 |
| 3 | 10.1.219.13 | node02 |

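Set the hostname on each machine (run the matching command on that machine) and configure the hosts file so that the machines can resolve each other's names: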

hostnamectl set-hostname master

hostnamectl set-hostname node01

hostnamectl set-hostname node02
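The hostname commands above do not show the hosts-file part of this step. A minimal sketch, assuming the three IP/hostname pairs from the table and /etc/hosts as the target (add_cluster_hosts is an illustrative name), that appends each entry only if the hostname is not already present, so it is safe to rerun on every machine:

```shell
#!/bin/bash
# Append the cluster's IP/hostname pairs to a hosts file (default /etc/hosts),
# skipping any hostname that already appears as a whole word in the file.
add_cluster_hosts() {
    local hosts_file="${1:-/etc/hosts}"
    local entry ip name
    for entry in "10.1.219.11 master" "10.1.219.12 node01" "10.1.219.13 node02"; do
        ip="${entry%% *}"     # text before the first space
        name="${entry##* }"   # text after the last space
        # -q: quiet, -s: no error if the file is missing, -w: whole-word match
        if ! grep -qsw "$name" "$hosts_file"; then
            echo "$ip $name" >> "$hosts_file"
        fi
    done
}
```

Run `add_cluster_hosts` as root on each of the three machines, then check resolution with `ping -c 1 node01` from the master.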

The following installation steps must be executed on both the master and the worker nodes.

- Install dependency packages

yum install -y conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git

- Switch the firewall to iptables with an empty rule set

systemctl stop firewalld && systemctl disable firewalld

yum -y install iptables-services && systemctl start iptables && systemctl enable iptables && iptables -F && service iptables save

- Disable swap and SELinux

swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

setenforce 0 && sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

- Tune kernel parameters for Kubernetes

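cat > kubernetes.conf <<EOF
# Pass bridged traffic through iptables (needed by kube-proxy and the CNI plugin)
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1
net.ipv4.ip_forward=1
# tcp_tw_recycle was removed in kernel 4.12; delete this line on newer kernels
net.ipv4.tcp_tw_recycle=0
# Avoid using swap
vm.swappiness=0
vm.overcommit_memory=1
vm.panic_on_oom=0
fs.inotify.max_user_instances=8192
fs.inotify.max_user_watches=1048576
fs.file-max=52706963
fs.nr_open=52706963
net.ipv6.conf.all.disable_ipv6=1
net.netfilter.nf_conntrack_max=2310720
EOF
cp kubernetes.conf /etc/sysctl.d/kubernetes.conf
# The net.bridge.* keys exist only after the br_netfilter module is loaded
modprobe br_netfilter
sysctl -p /etc/sysctl.d/kubernetes.conf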

- Stop unneeded system services

systemctl stop postfix && systemctl disable postfix

systemd-journald is the logging system built into systemd and collects the systemd logs.

Configure rsyslogd and systemd-journald:

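mkdir -p /var/log/journal
mkdir -p /etc/systemd/journald.conf.d
cat > /etc/systemd/journald.conf.d/99-prophet.conf << EOF
[Journal]
# Persist logs to disk
Storage=persistent
# Compress archived logs
Compress=yes
SyncIntervalSec=5m
RateLimitInterval=30s
RateLimitBurst=1000
# Maximum total disk usage
SystemMaxUse=10G
# Maximum size of a single journal file
SystemMaxFileSize=200M
# Keep logs for two weeks
MaxRetentionSec=2week
# Do not forward logs to syslog
ForwardToSyslog=no
EOF
systemctl restart systemd-journald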

Check the kernel version. The kernel must be upgraded to 4.4 because older kernels cause problems; if it is already 4.4, no upgrade is needed.

cat /proc/version

Example kernel versions after the upgrade (the exact number depends on when the upgrade is done):

4.4.237-1.el7.elrepo

4.4.247-1.el7.elrepo.x86_64
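The version check can also be scripted instead of read by eye. A minimal sketch, assuming GNU coreutils `sort -V` for version ordering (available on CentOS 7); kernel_at_least is an illustrative helper name, and it ignores everything after the first hyphen of the release string:

```shell
#!/bin/bash
# Succeed (return 0) if the kernel release in $1 is at least the version in $2.
kernel_at_least() {
    local current="${1%%-*}" minimum="$2"   # "4.4.237-1.el7..." -> "4.4.237"
    # sort -V puts the lower version first; if that is the minimum, current >= minimum
    [ "$(printf '%s\n%s\n' "$minimum" "$current" | sort -V | head -n 1)" = "$minimum" ]
}
```

For example, `kernel_at_least "$(uname -r)" 4.4 && echo "kernel is 4.4 or newer, no upgrade needed"`.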

- Upgrade the kernel to 4.4

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

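Install the long-term kernel (kernel-lt) and make it the default boot entry after restart. The version in the grub2-set-default command below was the newest kernel at the time of writing and will differ depending on when you upgrade; check the installed version and substitute it in the command.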

yum --enablerepo=elrepo-kernel install -y kernel-lt

grub2-set-default 'CentOS Linux (4.4.237-1.el7.elrepo.x86_64) 7 (Core)'
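To find the exact menu title to pass to grub2-set-default, the entries can be listed from the generated GRUB configuration. A minimal sketch, assuming the CentOS 7 BIOS path /etc/grub2.cfg (UEFI systems use /etc/grub2-efi.cfg); list_grub_entries is an illustrative name:

```shell
#!/bin/bash
# Print the numbered top-level menu entries of a GRUB2 config file.
list_grub_entries() {
    local cfg="${1:-/etc/grub2.cfg}"
    # Split each line on single quotes: field 1 is "menuentry ", field 2 is the title
    awk -F"'" '$1 == "menuentry " { print i++ " : " $2 }' "$cfg"
}
```

Copy the title of the 4.4 entry into grub2-set-default, then confirm the saved default with `grub2-editenv list`.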

Reboot the server. If the kernel was already 4.4, no reboot is needed.

reboot

Prerequisites for enabling IPVS in kube-proxy:

modprobe br_netfilter

Note: on Linux kernel 4.19 or later, use 'nf_conntrack' instead of 'nf_conntrack_ipv4'.

cat > /etc/sysconfig/modules/ipvs.modules << EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4
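Because the module name depends on the kernel version, the choice described in the note above can be made automatically. A minimal sketch, again assuming GNU `sort -V`; conntrack_module is an illustrative helper that prints the module to load for a given kernel release:

```shell
#!/bin/bash
# Print nf_conntrack for kernels >= 4.19, otherwise nf_conntrack_ipv4.
conntrack_module() {
    local release="${1%%-*}"   # strip the distro suffix, e.g. "-1.el7.elrepo.x86_64"
    if [ "$(printf '%s\n%s\n' 4.19 "$release" | sort -V | head -n 1)" = "4.19" ]; then
        echo nf_conntrack
    else
        echo nf_conntrack_ipv4
    fi
}
```

For example, `modprobe -- "$(conntrack_module "$(uname -r)")"`, or use its output in place of nf_conntrack_ipv4 when writing ipvs.modules.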

- Install Docker

Install the prerequisite packages:

yum install -y yum-utils device-mapper-persistent-data lvm2

- Add the Aliyun Docker CE repository

yum-config-manager \
  --add-repo \
  http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

- Install docker-ce

yum update -y && yum install -y docker-ce

After installing docker-ce and its components, reboot the server:

reboot

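After this reboot the default kernel can revert to the original one, so set the 4.4 kernel as the default again and reboot once more. If the kernel is already 4.4, skip this step.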

grub2-set-default 'CentOS Linux (4.4.237-1.el7.elrepo.x86_64) 7 (Core)' && reboot

- Start Docker and enable it at boot

systemctl start docker

systemctl enable docker

- Create the Docker configuration file

mkdir -p /etc/docker
# Use the systemd cgroup driver, cap json-file logs at 100m, and pull through the USTC mirror
cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "registry-mirrors": ["https://docker.mirrors.ustc.edu.cn"]
}
EOF

Create the docker.service.d directory, which holds drop-in configuration for the Docker service:

mkdir -p /etc/systemd/system/docker.service.d

Reload systemd, restart Docker and enable it at boot:

systemctl daemon-reload && systemctl restart docker && systemctl enable docker

Install kubeadm (master and worker nodes): configure the Kubernetes yum repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

- Install kubeadm, kubectl and kubelet

Version 1.18.17, a relatively stable release, is used here.

yum -y install kubeadm-1.18.17 kubectl-1.18.17 kubelet-1.18.17

Enable the kubelet service at boot:

systemctl enable kubelet.service

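Supplementary script (reference only, does not need to be run): if the servers cannot download images, or you already have packaged images, use this script to import them.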

Create load-images.sh to batch-import the kubeadm-basic images, with the following content:

#!/bin/bash
# Load every image archive in /root/kubeadm-basic.images into the local Docker daemon
cd /root/kubeadm-basic.images || exit 1
for i in *; do
    docker load -i "$i"
done

Make the script executable:

chmod a+x load-images.sh

Run it:

./load-images.sh

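Supplementary Linux command: copy files to remote machines with scp (node5 and node7 are example hostnames; use your worker nodes, e.g. node01 and node02):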

scp -r kubeadm-basic.images load-images.sh root@node5:/root/

scp -r kubeadm-basic.images load-images.sh root@node7:/root/

The following commands only need to be run on the master node.

Write the default kubeadm configuration to kubeadm-config.yaml:

kubeadm config print init-defaults > kubeadm-config.yaml

Open kubeadm-config.yaml and modify the following settings:

vi kubeadm-config.yaml

Change the IP to the master node's address: advertiseAddress: 192.168.20.69

Change the Kubernetes version: kubernetesVersion: v1.18.17

Add the following line directly below dnsDomain: cluster.local, at the same indentation (use spaces, not tabs; usually 2 or 4 spaces):

podSubnet: "10.244.0.0/16"

Change imageRepository to the Aliyun mirror below, which avoids failures when pulling images from registries outside China:

imageRepository: registry.aliyuncs.com/google_containers

Append the following to the end of the file:

---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs

Note: advertiseAddress above must be the master node's IP address.

Save and exit:

:wq
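Instead of editing the file in vi, a minimal config carrying the same changes can be written directly. This is a sketch, not the full init-defaults output: it assumes the kubeadm.k8s.io/v1beta2 config API (used by kubeadm 1.15 to 1.21), keeps the default serviceSubnet 10.96.0.0/12, omits the bootstrap token and nodeRegistration sections (kubeadm fills in defaults), and leaves out the SupportIPVSProxyMode feature gate because IPVS mode has been GA since 1.11. write_kubeadm_config is an illustrative name.

```shell
#!/bin/bash
# Write a kubeadm config with the advertise address, version, image mirror,
# Pod subnet and IPVS proxy mode used in this manual.
write_kubeadm_config() {
    local out="$1" advertise_ip="$2"
    cat > "$out" <<EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: ${advertise_ip}
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.17
imageRepository: registry.aliyuncs.com/google_containers
networking:
  dnsDomain: cluster.local
  podSubnet: "10.244.0.0/16"
  serviceSubnet: 10.96.0.0/12
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
}
```

For example, `write_kubeadm_config kubeadm-config.yaml 10.1.219.11` followed by `kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log`.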

Run kubeadm initialization:

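# For v1.15.1, use the following command

kubeadm init --config=kubeadm-config.yaml --experimental-upload-certs | tee kubeadm-init.log

# For versions above v1.15.1 (the version used here is v1.18.17), use the following command

kubeadm init --image-repository=registry.aliyuncs.com/google_containers --pod-network-cidr=10.244.0.0/16 --kubernetes-version=v1.18.17 --upload-certs | tee kubeadm-init.log

# Note: this form does not read kubeadm-config.yaml, so the advertiseAddress and IPVS settings edited above are not applied.
# To apply them, run instead: kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log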

Following the instructions in the init output, run:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Supplementary kubeadm commands

Reset a node (not needed during installation; use it if a node's installation fails, or to clean up a worker node):

kubeadm reset -f

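Delete a node (not needed during installation; replace <node-name> with a name shown by kubectl get node):

kubectl delete node <node-name>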

Create an install-k8s directory to collect the files produced during installation:

mkdir install-k8s

mv kubeadm-init.log kubeadm-config.yaml install-k8s/

cd install-k8s/

mkdir core

Move kubeadm-init.log and kubeadm-config.yaml into the core directory:

mv kubeadm-init.log kubeadm-config.yaml core/

Finally, move the installation files to /usr/local:

mv install-k8s/ /usr/local/

View the kubeadm init log:

vi /usr/local/install-k8s/core/kubeadm-init.log

Deploy the Flannel network

Flannel is an overlay network tool designed by the CoreOS team for Kubernetes. Its purpose is to give every CoreOS host running Kubernetes a complete subnet of its own.

Create a plugin directory under install-k8s, and a flannel directory under plugin:

cd /usr/local/install-k8s

mkdir plugin

cd plugin

mkdir flannel

cd flannel

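Create Flannel. The default Pod network in kube-flannel.yml (net-conf.json) is 10.244.0.0/16, which matches the podSubnet configured above; if you change one, change the other.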

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl create -f kube-flannel.yml

Join the worker nodes

cd /usr/local/install-k8s/core

vi kubeadm-init.log

Find a command like the following and run it on each worker node:

kubeadm join 192.168.20.69:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:97d96ca8e4b393481213dec699f1d772dcbf4e81dbf51c568be61250ffea57e3
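The join command can also be pulled out of the log with a script instead of searching for it in vi. A minimal sketch; extract_join_command is an illustrative name, and it assumes the join command is printed as backslash-continued lines, as in the example above, returning the last such command in the log:

```shell
#!/bin/bash
# Print the last "kubeadm join" command in a kubeadm init log as a single line.
extract_join_command() {
    local log="$1"
    awk '
        /^[[:space:]]*kubeadm join/ { cmd = ""; capturing = 1 }
        capturing {
            line = $0
            sub(/^[[:space:]]+/, "", line)           # drop leading indentation
            cont = sub(/[[:space:]]*\\$/, "", line)  # drop trailing backslash, remember it
            cmd = (cmd == "" ? line : cmd " " line)
            if (!cont) { capturing = 0; last = cmd }
        }
        END { print last }
    ' "$log"
}
```

Run `extract_join_command /usr/local/install-k8s/core/kubeadm-init.log` and execute the printed command on each worker. The bootstrap token in the log expires after 24 hours by default; after that, print a fresh join command on the master with `kubeadm token create --print-join-command`.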

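At this point Kubernetes is installed. Check the node status with the command below; if every node is Ready, the installation succeeded.

kubectl get node -o wide

(-o wide shows additional details; it can be added to other kubectl get commands as well.)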
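To check all nodes at once rather than scanning the table, the STATUS column can be tested in a script. A minimal sketch; check_nodes_ready is an illustrative name that reads `kubectl get nodes --no-headers` output on standard input and treats any status other than exactly Ready (including Ready,SchedulingDisabled for cordoned nodes) as a failure:

```shell
#!/bin/bash
# Report every node whose STATUS (second column) is not "Ready"; return 1 if any.
check_nodes_ready() {
    awk '
        NF && $2 != "Ready" { print "not ready: " $1 " (" $2 ")"; bad = 1 }
        END { exit bad }
    '
}
```

For example, `kubectl get nodes --no-headers | check_nodes_ready && echo "all nodes Ready"`. Nodes normally stay NotReady until the Flannel pods are running.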

- Install Helm

Download Helm, for example version 3.6.2:

wget https://get.helm.sh/helm-v3.6.2-linux-amd64.tar.gz

Create a helm directory under install-k8s, move the downloaded tarball into it, extract it, install the binary and make it executable:

cd /usr/local/install-k8s/

mkdir helm

mv helm-v3.6.2-linux-amd64.tar.gz helm

cd helm

tar -zxvf helm-v3.6.2-linux-amd64.tar.gz

mv linux-amd64/helm /usr/local/bin/helm

chmod a+x /usr/local/bin/helm

Helm application hub, where you can search for charts to install:

https://artifacthub.io/

List the Helm repositories:

helm repo list

Update the Helm repositories:

helm repo update

Check the Helm version:

helm version

Output similar to the following means Helm is installed correctly:

# helm version

version.BuildInfo{Version:"v3.5.2", GitCommit:"167aac70832d3a384f65f9745335e9fb40169dc2", GitTreeState:"dirty", GoVersion:"go1.15.7"}

Supplementary Helm commands (Helm 3 syntax; Helm 2's --purge and --name flags no longer exist):

helm list -a

helm uninstall els1

helm install elasticsearch-master . -f values.yaml --namespace elastic --version 7.10.1

- Install the Dashboard with Helm

Create a dashboard directory under install-k8s/plugin:

cd /usr/local/install-k8s/plugin

mkdir dashboard

cd dashboard

Add the kubernetes-dashboard chart repository to Helm:

helm repo add k8s-dashboard https://kubernetes.github.io/dashboard

Fetch the dashboard chart, for example kubernetes-dashboard version 4.2.0:

helm fetch k8s-dashboard/kubernetes-dashboard --version 4.2.0

Extract kubernetes-dashboard-4.2.0.tgz:

tar -zxvf kubernetes-dashboard-4.2.0.tgz

cd kubernetes-dashboard

Create a kubernetes-dashboard.yaml file with the following content:

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
- apiGroups: ["*"]
  resources: ["*"]
  verbs: ["*"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

Install the dashboard with Helm (Helm 3 syntax: release name first, then the chart path):

helm install kubernetes-dashboard . --namespace kube-system -f kubernetes-dashboard.yaml

Supplementary command: delete a pod in the kube-system namespace (the pod name below is an example):

kubectl delete pod kubernetes-dashboard-79599d7b8d-bnpp2 -n kube-system

Edit the dashboard service and change its type to NodePort:

kubectl edit svc kubernetes-dashboard -n kube-system

Check the pods (optional during installation):

kubectl get pod -n kube-system -o wide

List the services in the kube-system namespace to check their status and the assigned NodePort:

kubectl get svc -n kube-system

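Get the login token. The first command lists the token secret; replace the secret name in the second command (kubernetes-dashboard-token-llqjx here) with the name it returns: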

kubectl -n kube-system get secret |grep kubernetes-dashboard-token

kubectl describe secret kubernetes-dashboard-token-llqjx -n kube-system

If you run into permission errors after logging in, add the following role binding:

kubectl create clusterrolebinding kubernetes-dashboard --clusterrole=cluster-admin --serviceaccount=kube-system:kubernetes-dashboard
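The two token commands can be combined so the secret name does not have to be copied by hand. A minimal sketch; dashboard_token_secret and print_dashboard_token are illustrative names, and it assumes the service account token secret is named kubernetes-dashboard-token-<suffix> as in the example above (Kubernetes 1.18 still creates these secrets automatically):

```shell
#!/bin/bash
# Read "kubectl get secret" output on stdin and print the first secret whose
# name starts with kubernetes-dashboard-token-.
dashboard_token_secret() {
    awk '$1 ~ /^kubernetes-dashboard-token-/ { print $1; exit }'
}

# Print the decoded login token of the dashboard service account.
print_dashboard_token() {
    local ns="${1:-kube-system}" secret
    secret=$(kubectl -n "$ns" get secret | dashboard_token_secret)
    # The token is stored base64-encoded in the secret's data.token field
    kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
}
```

`print_dashboard_token kube-system` prints the token to paste into the Dashboard login page.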

- Install Logstash with Helm

Create an elk directory under install-k8s/plugin:

cd /usr/local/install-k8s/plugin

mkdir elk

cd elk

Add the Elastic chart repository to Helm:

helm repo add elastic https://helm.elastic.co

Fetch the logstash chart, for example version 7.12.1:

helm fetch elastic/logstash --version 7.12.1

Extract logstash-7.12.1.tgz:

tar -zxvf logstash-7.12.1.tgz

cd logstash

Enter the configuration directory:

cd logstash-7.12.1/config

Create logstash.conf

vi logstash.conf

with the following content:

# Logstash pipeline: log files -> Logstash (Ruby parsing) -> Elasticsearch
input {
  file {
    path => ["/home/dmtd/log/*.log"]
    type => "system"
    start_position => "beginning"
  }
}
filter {
  # Split each '|'-delimited line into the fields named in @log. The byte length of the
  # raw line is appended as the last field (log_size); lines with the wrong field count are cancelled.
  ruby {
    init => "@log = ['logtime','ip','loglevel','interface','className','methodName','thread','response_time','lines','log_status','log_message','user_id','org_id','log_size']"
    code => "
      byteArray=event.get('message').bytes.to_a
      length=byteArray.length
      message=event.get('message')+'|'+length.to_s
      event.set('message',message)
      messageArray=event.get('message').split('|')
      event.cancel if @log.length != messageArray.length
      new_event = LogStash::Event.new(Hash[@log.zip(messageArray)])
      new_event.remove('@timestamp')
      event.append(new_event) "
  }
  mutate {
    convert => {
      "user_id" => "integer"
      "response_time" => "integer"
      "log_size" => "integer"
      "lines" => "integer"
    }
  }
}
output {
  elasticsearch {
    hosts => ["10.1.219.31:9200"]
    index => "swbdp_logs_test"
    document_type => "logs"
  }
}

Note: hosts is the Elasticsearch address (10.1.219.31:9200 here); change it to match your environment.

Create the elk namespace:

kubectl create namespace elk

Install logstash with Helm (Helm 3 syntax: release name first, then the chart path):

helm install logstash . --namespace elk -f values.yaml
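The steps above do not show how logstash.conf reaches the chart. In the elastic/logstash chart, pipeline files are normally supplied through the `logstashPipeline` map in values.yaml, each key becoming a file under /usr/share/logstash/pipeline/. A minimal sketch, assuming the fetched chart version exposes that key (check its values.yaml); write_logstash_values and pipeline-values.yaml are illustrative names:

```shell
#!/bin/bash
# Build a Helm values override that places logstash.conf under the chart's
# logstashPipeline map (assumed key). Each pipeline line is indented by four
# spaces so that it sits inside the YAML block scalar.
write_logstash_values() {
    local conf="$1" out="$2"
    {
        echo "logstashPipeline:"
        echo "  logstash.conf: |"
        sed 's/^/    /' "$conf"
    } > "$out"
}
```

For example, `write_logstash_values logstash.conf pipeline-values.yaml`, then `helm install logstash . --namespace elk -f pipeline-values.yaml`. The file input reads /home/dmtd/log inside the Logstash pod, so that directory must also be mounted into the pod (for example from the NFS export described later); check the chart's values.yaml for its volume options.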

Packaging and Deploying the Front-end and Back-end Applications

- Containerize the applications in IntelliJ IDEA

Install the Docker plugin in IDEA and create a Dockerfile, as shown in the figure below:

After creating it, run it to build the project image into the local registry at https://192.168.1.69 (admin/Harbor12345). The Dockerfile configuration for each service follows.

- Service provider (API) swbdp-js

Create the Dockerfile:

FROM openjdk:8-jdk-alpine

ADD *.jar service-provider-2.0.1-SNAPSHOT.jar

ENTRYPOINT ["java","-Djava.security.egd=file:/dev/./urandom","-jar","/service-provider-2.0.1-SNAPSHOT.jar"]

Image: hub.dmtd.com/swbdp/swbdp-js:v2.0.1

- Third-party service third-provider-js

Create the Dockerfile:

FROM openjdk:8-jdk-alpine

ADD *.jar third-provider-1.0.1-SNAPSHOT.jar

ENTRYPOINT ["java","-Djava.security.egd=file:/dev/./urandom","-jar","/third-provider-1.0.1-SNAPSHOT.jar"]

Image: hub.dmtd.com/swbdp/third-provider-js:v2.0.1

- Consumer service swbd-js

Create the Dockerfile:

FROM openjdk:8-jdk-alpine

VOLUME ["/home/swbdp2.0/log"]

ADD *.jar swbd-2.0.1-SNAPSHOT.jar

ENTRYPOINT ["java","-Djava.security.egd=file:/dev/./urandom","-jar","/swbd-2.0.1-SNAPSHOT.jar"]

Image: hub.dmtd.com/swbdp/swbd-js:v2.0.1

- Front-end service

Create the Dockerfile:

FROM node:lts-alpine as build-stage

WORKDIR /app

COPY package*.json ./

RUN npm install -g cnpm --registry=https://registry.npm.taobao.org

RUN cnpm install

COPY . .

RUN npm run build

# production stage

FROM nginx:stable-alpine as production-stage

COPY nginx/nginx.conf /etc/nginx/nginx.conf

COPY nginx/default.conf /etc/nginx/conf.d/default.conf

COPY nginx/rtsoms.conf /etc/nginx/conf.d/rtsoms.conf

COPY --from=build-stage /app/dist /usr/share/nginx/html

EXPOSE 8081

CMD ["nginx", "-g", "daemon off;"]

Image: hub.dmtd.com/swbdp/swweb:v1.0.1

- Export the images to tar archives

Log in to the local build server (192.168.20.69) and export the images with the commands below.

- Service provider (API)

docker save -o swbdp-js.tar hub.dmtd.com/swbdp/swbdp-js:v2.0.1

- Third-party service third-provider-js

docker save -o third-provider-js.tar hub.dmtd.com/swbdp/third-provider-js:v2.0.1

- Consumer service swbd-js

docker save -o swbd-js.tar hub.dmtd.com/swbdp/swbd-js:v2.0.1

- Front-end application

docker save -o swweb.tar hub.dmtd.com/swbdp/swweb:v1.0.1
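The four save commands follow one pattern, so they can be written as a loop. A minimal sketch; image_tar_name and save_images are illustrative names, the archive name is derived from the image name with registry, project and tag stripped (matching the names above), and DOCKER can be set to echo for a dry run:

```shell
#!/bin/bash
# Derive the archive name from an image reference,
# e.g. hub.dmtd.com/swbdp/swbdp-js:v2.0.1 -> swbdp-js.tar
image_tar_name() {
    local ref="${1##*/}"    # drop registry and project: swbdp-js:v2.0.1
    echo "${ref%%:*}.tar"   # drop the tag and add .tar
}

# Save each image given as an argument to its own archive in the current directory.
save_images() {
    local img
    for img in "$@"; do
        ${DOCKER:-docker} save -o "$(image_tar_name "$img")" "$img"
    done
}
```

For example, `save_images hub.dmtd.com/swbdp/swbdp-js:v2.0.1 hub.dmtd.com/swbdp/third-provider-js:v2.0.1 hub.dmtd.com/swbdp/swbd-js:v2.0.1 hub.dmtd.com/swbdp/swweb:v1.0.1`. Alternatively, `docker save` accepts several images at once and writes them into a single archive.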

- Copy the tar archives to the servers

Copy swbdp-js.tar, third-provider-js.tar, swbd-js.tar and swweb.tar to the Kubernetes nodes, then load the images on each node with the following commands.

- Service provider (API)

docker load -i swbdp-js.tar

- Third-party service third-provider-js

docker load -i third-provider-js.tar

- Consumer service swbd-js

docker load -i swbd-js.tar

- Front-end application

docker load -i swweb.tar
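Likewise, the archives can be loaded on each node in one loop. A minimal sketch; load_images is an illustrative name and assumes the .tar files were copied into a single directory (DOCKER=echo gives a dry run):

```shell
#!/bin/bash
# Load every *.tar image archive in a directory (default: current directory).
load_images() {
    local dir="${1:-.}" f
    for f in "$dir"/*.tar; do
        [ -e "$f" ] || continue   # no match: the glob stays literal, so skip it
        ${DOCKER:-docker} load -i "$f"
    done
}
```

For example, `load_images /root` if the archives were copied to /root. Because these image tags are not latest, Kubernetes defaults to imagePullPolicy IfNotPresent and uses the locally loaded images; load them on every node that may run the pods.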

- Mount the log directory

Install NFS (Network File System) on the master and worker machines.

Step 1: choose a server to act as the NFS server; 10.1.219.11 is used here.

- Install NFS

yum install -y nfs-utils

- Configure the export and its permissions

vi /etc/exports

Add the following line, exporting the log directory that the Deployment below mounts:

/home/dmtd/log *(rw,no_root_squash)

- Create the directory to be mounted

Create /home/dmtd/log:

mkdir -p /home/dmtd/log

Step 2: install nfs-utils on the Kubernetes worker nodes (it is needed to mount NFS volumes)

yum install -y nfs-utils

Step 3: start the NFS service on the NFS server

systemctl start nfs
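The export line can be added idempotently and then activated without restarting NFS. A minimal sketch; add_nfs_export is an illustrative name, and its default options `*(rw,no_root_squash)` let any host mount the directory with root access (a client list such as `10.1.219.0/24(rw,no_root_squash)` would restrict it):

```shell
#!/bin/bash
# Append "<dir> <options>" to an exports file unless <dir> is already exported.
add_nfs_export() {
    local exports_file="$1" dir="$2" opts="${3:-*(rw,no_root_squash)}"
    if ! grep -qs "^${dir}[[:space:]]" "$exports_file"; then
        echo "${dir} ${opts}" >> "$exports_file"
    fi
}
```

For example, `add_nfs_export /etc/exports /home/dmtd/log`, then `exportfs -ra` to re-export, `systemctl enable nfs` so the service starts at boot, and `showmount -e 10.1.219.11` from a worker node to confirm the export is visible.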

Step 4: use NFS as persistent network storage for applications deployed in the cluster

The volumeMounts and volumes sections in the example below (highlighted in the original) are what mount the log directory:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-dep1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          volumeMounts:
            - name: swlog
              mountPath: /home/swbdp2.0/log
          ports:
            - containerPort: 80
      volumes:
        - name: swlog
          nfs:
            server: 10.1.219.11
            path: /home/dmtd/log

- Create and start the applications in Kubernetes

- Service provider (API)

kubectl create deployment swbdp-js --image=hub.dmtd.com/swbdp/swbdp-js:v2.0.1

# Scale the swbdp-js deployment to 3 replicas

kubectl scale deployment swbdp-js --replicas=3

- Third-party service third-provider-js

kubectl create deployment third-provider-js --image=hub.dmtd.com/swbdp/third-provider-js:v2.0.1

- Consumer service swbd-js

kubectl create deployment swbd-js --image=hub.dmtd.com/swbdp/swbd-js:v2.0.1

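# Expose on NodePort 30000. --target-port is the container port (assumed to be 9089 here), not the NodePort;
# kubectl expose cannot set the nodePort, so it is patched afterwards.
kubectl expose deployment swbd-js --port=9089 --target-port=9089 --type=NodePort
kubectl patch svc swbd-js -p '{"spec":{"ports":[{"port":9089,"nodePort":30000}]}}'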

- Front-end application

kubectl create deployment swweb --image=hub.dmtd.com/swbdp/swweb:v1.0.1

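# Expose on NodePort 30001. --target-port is the container port (assumed to be 8081, the port EXPOSEd in the front-end Dockerfile);
# kubectl expose cannot set the nodePort, so it is patched afterwards.
kubectl expose deployment swweb --port=8667 --target-port=8081 --type=NodePort
kubectl patch svc swweb -p '{"spec":{"ports":[{"port":8667,"nodePort":30001}]}}'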

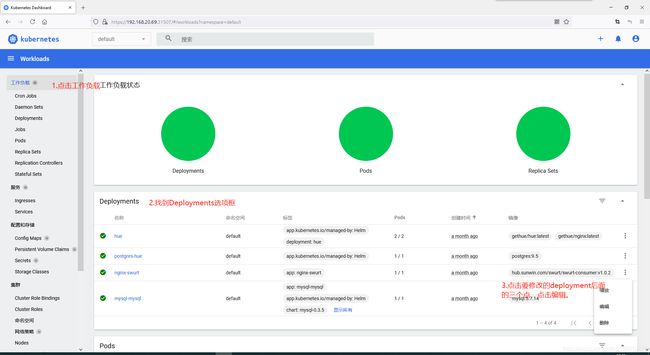

- Operations in the Dashboard

- Scale the number of server-side replicas

- Add the log mount

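Open the editor in the same way, locate the position in the YAML that was marked with a red box in the original screenshot, and add the mount configuration there.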

The lines to add (marked in yellow in the original) are the volumes entry and the volumeMounts entry below.

spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-swbd
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx-swbd
    spec:
      volumes:
        - name: swbdlog
          nfs:
            server: 10.1.219.11
            path: /home/dmtd/log
      containers:
        - name: swbd-js
          image: hub.dmtd.com/swbdp/swbd-js:v2.0.1
          resources: {}
          volumeMounts:
            - name: swbdlog
              mountPath: /home/swbdp2.0/log
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          imagePullPolicy: IfNotPresent
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      dnsPolicy: ClusterFirst
      securityContext: {}
      schedulerName: default-scheduler

- View the logs

Open Workloads, go to Pods, click the three-dot menu next to the pod you want to inspect, and choose Logs to view that pod's logs.