Fall Detection + YOLOv5

Fall detection

- Dataset sources

- Data processing

- Model training

Dataset sources

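At first I used object detection to find people and then judged whether someone had fallen from changes in the width-to-height ratio of their bounding box. That approach breaks down in crowded scenes, so I looked for a fall dataset to train a detector that recognizes falls directly. The datasets I used: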

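China Hualu Cup, targeted algorithm competition (human fall posture recognition): I could not find the training set for this one; the official site only offers the unlabeled test set.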

Le2i Fall detection Dataset

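Annotations:

- Labels are stored as .txt files.

- The first two lines give the start and end frame of the fall; some files do not have these two lines.

- Each following line has six comma-separated fields: the frame number, an unidentified field (XX), and the person bounding box (xmin, ymin, xmax, ymax).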
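The annotation layout described above can be parsed into a fall start frame, a fall end frame and a per-frame box table. The sketch below is a hypothetical helper (`parse_le2i_annotation` is my own name, not part of the dataset tools); it assumes the layout exactly as listed and keys each box by the frame number in the first field rather than by line position, which avoids off-by-one mistakes when the two header lines are present.

```python
from typing import Dict, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (xmin, ymin, xmax, ymax)


def parse_le2i_annotation(lines: List[str]) -> Tuple[Optional[int], Optional[int], Dict[int, Box]]:
    """Parse the lines of one Le2i annotation file (assumed layout, see above).

    Returns (fall_start, fall_end, {frame_number: box}); start/end are None
    when the file has no header lines.
    """
    rows = [ln.strip() for ln in lines if ln.strip()]
    start = end = None
    # Header lines hold a single integer each, so they contain no comma
    if len(rows) >= 2 and ',' not in rows[0] and ',' not in rows[1]:
        start, end = int(rows[0]), int(rows[1])
        rows = rows[2:]
    boxes: Dict[int, Box] = {}
    for row in rows:
        fields = row.split(',')
        if len(fields) != 6:
            continue  # skip malformed lines
        frame = int(fields[0])
        # fields[1] is the unidentified "XX" field and is ignored here
        xmin, ymin, xmax, ymax = (int(float(v)) for v in fields[2:])
        boxes[frame] = (xmin, ymin, xmax, ymax)
    return start, end, boxes
```

For example, `parse_le2i_annotation(open(path).readlines())` on a file with a fall returns the two header values plus the box of every annotated frame.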

Downloading Le2i Fall detection Dataset from the original source is painfully slow, so here is a Baidu Cloud download link (extraction code: qghr).

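China Hualu Cup, targeted algorithm competition (human fall posture recognition) test set: it ships without labels, so I annotated it with some algorithms I found. The labels are reasonably good; take them if you need them: Baidu Cloud download link (extraction code: asdh).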

If either of these datasets infringes anyone's rights, I will take it down.

Data processing

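Convert the Le2i Fall detection Dataset into VOC-format XML files (processVideo.py). Only annotation files that contain the fall start/end lines are used; from each such video, the frames from 8 frames before the fall up to the end of the fall are saved as images, and the person box in each is labeled `fall`: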

```python
# coding=utf-8
"""
@Time: 2021/3/9 10:23
@IDE: PyCharm
@Author: chengzhang
@File: processVideo.py
"""
import os
from xml.dom.minidom import Document

import cv2
from tqdm import tqdm

count = 0  # running index used to name the output .jpg/.xml pairs


def process(fileDir, save):
    # fileDir holds one folder per scene, each with Annotation_files/ and Videos/
    for scene in [os.path.join(fileDir, i) for i in os.listdir(fileDir)]:
        annDir = os.path.join(scene, "Annotation_files")
        for ann in tqdm([os.path.join(annDir, i) for i in os.listdir(annDir)]):
            with open(ann) as f:
                txt = f.readlines()
            # Only files that start with the fall start/end frame lines (no comma) are used
            if len(txt) < 2 or len(txt[0].split(',')) >= 2:
                continue
            videoPath = ann.replace('Annotation_files', 'Videos').replace('.txt', '.avi')
            start, end = int(txt[0].strip()), int(txt[1].strip())
            video(videoPath, txt, start, end, save)


def video(videoPath, txt, start, end, save):
    global count
    # Map frame number (first field) -> [xmin, ymin, xmax, ymax]; looking boxes up by
    # frame number avoids the off-by-one of indexing txt[ind] after the two header lines
    boxes = {}
    for line in txt[2:]:
        info = line.strip().split(',')
        if len(info) == 6:
            boxes[int(info[0])] = [int(v) for v in info[2:]]
    vs = cv2.VideoCapture(videoPath)
    ind = 1  # current frame number (1-based, like the annotation files)
    while True:
        success, frame = vs.read()
        if not success:
            break
        bbox = boxes.get(ind)
        # Keep frames from 8 frames before the fall starts up to the end of the fall,
        # skipping frames without a valid person box (e.g. all zeros)
        if start - 8 <= ind <= end and bbox and bbox[2] > bbox[0] and bbox[3] > bbox[1]:
            Jpg = '{:06d}.jpg'.format(count)
            outPutJpg = os.path.join(save, Jpg)
            outPutXML = os.path.splitext(outPutJpg)[0] + '.xml'
            cv2.imwrite(outPutJpg, frame)
            count += 1
            bbox2xml([bbox], frame.shape, Jpg, outPutXML)
        ind += 1
    vs.release()


def add_text(doc, parent, tag, text):
    # Append <tag>text</tag> to parent
    node = doc.createElement(tag)
    node.appendChild(doc.createTextNode(str(text)))
    parent.appendChild(node)
    return node


def bbox2xml(bboxsInfo, imgShape, outPutJpg, outPutXML, label="fall"):
    xmlBuilder = Document()
    annotation = xmlBuilder.createElement("annotation")  # root <annotation> element
    xmlBuilder.appendChild(annotation)
    Pheight, Pwidth, Pdepth = imgShape
    add_text(xmlBuilder, annotation, "folder", "VOC2007")
    add_text(xmlBuilder, annotation, "filename", outPutJpg)
    size = xmlBuilder.createElement("size")  # <size> with width/height/depth children
    for tag, value in (("width", Pwidth), ("height", Pheight), ("depth", Pdepth)):
        add_text(xmlBuilder, size, tag, value)
    annotation.appendChild(size)
    for box_xmin, box_ymin, box_xmax, box_ymax in bboxsInfo:
        obj = xmlBuilder.createElement("object")
        add_text(xmlBuilder, obj, "name", label)  # every box gets the single class "fall"
        add_text(xmlBuilder, obj, "pose", "Unspecified")
        add_text(xmlBuilder, obj, "truncated", 0)
        add_text(xmlBuilder, obj, "difficult", 0)
        bndbox = xmlBuilder.createElement("bndbox")
        for tag, value in (("xmin", box_xmin), ("ymin", box_ymin),
                           ("xmax", box_xmax), ("ymax", box_ymax)):
            add_text(xmlBuilder, bndbox, tag, value)
        obj.appendChild(bndbox)
        annotation.appendChild(obj)
    with open(outPutXML, 'w', encoding='utf-8') as f:
        xmlBuilder.writexml(f, indent='\t', newl='\n', addindent='\t', encoding='utf-8')


if __name__ == '__main__':
    fileDir = r"E:\数据集\FallDataset\dataset"  # source dataset path
    save = r"E:\数据集\FallDataset\FallDataset"  # output path for the VOC-format files
    os.makedirs(save, exist_ok=True)
    process(fileDir, save)
```
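Before converting to YOLO format, it is worth spot-checking some of the generated XML files. A minimal sketch using only the standard library's `xml.etree.ElementTree`; the helper name `check_voc_xml` is hypothetical, and it assumes the tag layout written by `bbox2xml` above (`size/width`, `size/height`, `object/name`, `object/bndbox`).

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple


def check_voc_xml(xml_path: str) -> List[Tuple[str, int, int, int, int]]:
    """Return the (name, xmin, ymin, xmax, ymax) boxes of one VOC XML file.

    Raises ValueError if a box is empty or lies outside the image size
    recorded in <size>.
    """
    root = ET.parse(xml_path).getroot()
    width = int(root.findtext("size/width"))
    height = int(root.findtext("size/height"))
    boxes = []
    for obj in root.iter("object"):
        bb = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (int(float(bb.findtext(k)))
                                  for k in ("xmin", "ymin", "xmax", "ymax"))
        # A valid box must be non-empty and inside the image
        if not (0 <= xmin < xmax <= width and 0 <= ymin < ymax <= height):
            raise ValueError(f"{xml_path}: bad box {(xmin, ymin, xmax, ymax)} "
                             f"for a {width}x{height} image")
        boxes.append((obj.findtext("name"), xmin, ymin, xmax, ymax))
    return boxes
```

Running it over every `.xml` in the output folder and collecting the files that raise `ValueError` gives a quick list of frames to inspect by hand.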

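Model training

First convert the VOC XML files into YOLOv5 label files and split them 9:1 into training and validation lists (voc2v5.py):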

```python
# coding=utf-8
"""
@Time: 2020/9/17 9:07
@IDE: PyCharm
@Author: chengzhang
@File: voc2v5.py
"""
import argparse
import os
import shutil
import xml.etree.ElementTree as ET
from random import shuffle

import cv2
from tqdm import tqdm


def check_x_y_w_h(norm_x, norm_y, norm_w, norm_h):
    # Clamp each normalized value into (0, 1): <= 0 -> 0.00001, >= 1 -> 0.99999
    return tuple(0.00001 if v <= 0 else 0.99999 if v >= 1 else v
                 for v in (norm_x, norm_y, norm_w, norm_h))


def convert(size, box):
    # size = (image width, image height); box = (xmin, xmax, ymin, ymax) in pixels.
    # Returns the YOLO (x_center, y_center, width, height), normalized by image size.
    dw, dh = 1. / size[0], 1. / size[1]
    x = (box[0] + box[1]) / 2.0 - 1  # "- 1" carried over from darknet's voc_label.py
    y = (box[2] + box[3]) / 2.0 - 1
    w = box[1] - box[0]
    h = box[3] - box[2]
    return check_x_y_w_h(x * dw, y * dh, w * dw, h * dh)


def write_txt(train_xml, dataset, classes):
    # Write one image path per line to `dataset`, plus a YOLO label .txt next to each image
    with open(dataset, "w", encoding="utf-8") as f:
        for xml in tqdm(train_xml):
            base = os.path.splitext(xml)[0]
            img = cv2.imread(base + '.jpg')
            if img is None:  # skip annotations without a readable image
                continue
            f.write(base + '.jpg\n')
            h, w = img.shape[:2]
            root = ET.parse(xml).getroot()  # input VOC XML
            with open(base + '.txt', 'w') as out_file:  # output YOLO label txt
                for obj in root.iter('object'):
                    name = obj.find('name')
                    if name is None or name.text not in classes:
                        continue
                    xmlbox = obj.find('bndbox')
                    b = tuple(float(xmlbox.find(k).text) for k in ('xmin', 'xmax', 'ymin', 'ymax'))
                    bb = convert((w, h), b)
                    cls_id = classes.index(name.text)
                    out_file.write(str(cls_id) + " " + " ".join(str(a) for a in bb) + '\n')


def check_dir(dir):
    # Recreate `dir` as an empty directory
    shutil.rmtree(dir, ignore_errors=True)
    os.makedirs(dir)


def parse_annotation(img_dir):
    # Recursively collect the full paths of all .xml files under the given directories
    all_xmls = []
    for tmpdir in img_dir:
        for home, dirs, files in os.walk(tmpdir):
            all_xmls += [os.path.join(home, name) for name in files if name.endswith(".xml")]
    return all_xmls


if __name__ == '__main__':
    classes = ['fall']
    parser = argparse.ArgumentParser()
    parser.add_argument("--train_txt", default="/data/share/imageAlgorithm/zhangcheng/code/yolov5/data/train_fall.txt")
    parser.add_argument("--test_txt", default="/data/share/imageAlgorithm/zhangcheng/code/yolov5/data/val_fall.txt")
    flags = parser.parse_args()
    imgPathList = [
        "/data/share/imageAlgorithm/zhangcheng/dataset/falldataset/FallDataset/",
        "/data/share/imageAlgorithm/zhangcheng/dataset/falldataset/FallDataset_A/",
    ]
    dataset = parse_annotation(imgPathList)
    shuffle(dataset)
    trainNum = int(0.9 * len(dataset))  # 90% train / 10% validation
    write_txt(dataset[:trainNum], flags.train_txt, classes)
    write_txt(dataset[trainNum:], flags.test_txt, classes)
```
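voc2v5.py writes one line per box in YOLOv5's label format, `class x_center y_center width height`, with the four coordinates normalized by the image size. To spot-check a label against its image, the conversion can be inverted. A minimal sketch; `yolo_to_voc` is a hypothetical helper, it assumes the `- 1` center shift used in `convert()` above, and it cannot undo the clamping done by `check_x_y_w_h`.

```python
from typing import Tuple


def yolo_to_voc(line: str, img_w: int, img_h: int) -> Tuple[int, float, float, float, float]:
    """Map one YOLO label line back to (class_id, xmin, ymin, xmax, ymax) in pixels."""
    cls, x, y, w, h = line.split()
    # Undo the normalization, then the "- 1" shift applied to the box center in convert()
    cx = float(x) * img_w + 1
    cy = float(y) * img_h + 1
    bw = float(w) * img_w
    bh = float(h) * img_h
    return int(cls), cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2
```

For a 320x240 image, `convert((320, 240), (10, 110, 20, 220))` gives roughly `(0.184375, 0.4958, 0.3125, 0.8333)`, and feeding the corresponding label line back through `yolo_to_voc` recovers approximately `(0, 10, 20, 110, 220)`.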

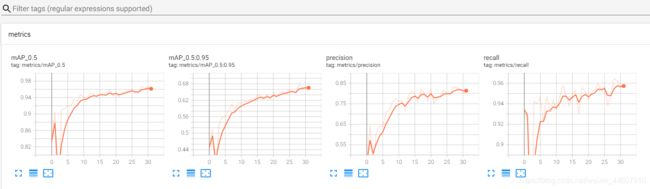

- Train on the data prepared above:

```shell
CUDA_VISIBLE_DEVICES=4,5,6,7 nohup python train.py --img 640 --batch 16 --epochs 600 --data ./data/fallDown.yaml --weights ./weights/yolov5l.pt > train_fall.log 2>&1 &
```
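The training command reads `./data/fallDown.yaml`, whose contents are not shown here. A minimal sketch of generating it, assuming YOLOv5's dataset-config keys `train`, `val`, `nc` and `names`, with `train`/`val` pointing at the image-list files produced by voc2v5.py; `write_data_yaml` is a hypothetical helper that writes plain text so no YAML library is needed.

```python
from pathlib import Path
from typing import List


def write_data_yaml(path: str, train_txt: str, val_txt: str, names: List[str]) -> None:
    """Write a minimal YOLOv5 dataset config (assumed layout, see lead-in)."""
    lines = [
        f"train: {train_txt}",  # txt file listing training image paths
        f"val: {val_txt}",      # txt file listing validation image paths
        f"nc: {len(names)}",    # number of classes
        "names: [" + ", ".join(f"'{n}'" for n in names) + "]",  # class names, index = class id
    ]
    Path(path).write_text("\n".join(lines) + "\n", encoding="utf-8")
```

Run from the yolov5 root as, for example, `write_data_yaml("./data/fallDown.yaml", "/data/share/imageAlgorithm/zhangcheng/code/yolov5/data/train_fall.txt", "/data/share/imageAlgorithm/zhangcheng/code/yolov5/data/val_fall.txt", ["fall"])`. Keeping `names` in the same order as `classes` in voc2v5.py keeps the class ids in the label files consistent with the config.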