k3s,k9s harbor https

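1 Introduction to k3s: "5 less than K8s"

k3s[1] is an open-source Kubernetes distribution from Rancher. As the name suggests, k3s is a trimmed and optimized k8s: the binary is under 50 MB, it uses fewer resources, and it runs with as little as 512 MB of memory.

It is called k3s because five things were removed relative to k8s:

- Legacy and non-default features
- Alpha features
- In-tree cloud provider plugins
- In-tree storage drivers
- Docker

The main optimizations over k8s are:

- An embedded lightweight SQLite database replaces etcd as the default datastore; etcd is still supported.
- A local storage provider, service load balancer, Helm controller, and Traefik ingress controller are built in and work out of the box.
- All Kubernetes control-plane components (api-server, scheduler, and so on) are packaged into a single slim binary, so the control plane runs as one process.
- In-tree plugins (such as cloud provider and storage plugins) are removed.
- External dependencies are reduced: the operating system only needs a reasonably recent kernel with cgroup support. The k3s package already includes containerd, Flannel, and CoreDNS, so installation is a single step with no need to install Docker, Flannel, or other components separately.

The four main use cases for k3s are:

- Edge
- IoT
- CI
- ARM

If you want to learn k8s without wrestling with its installation and deployment, k3s is a good substitute: it includes all the basic k8s features, and the extras it drops are rarely needed.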

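I. Master node

As the quick-start guide mentions, you can use the install script at https://get.k3s.io to install K3s as a service on systemd- and openrc-based systems.

The simplest form of the command is:

curl -sfL https://get.k3s.io | sh -s - [arguments]

Other arguments are listed in the official flag reference, for example: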

--docker # use Docker as the container runtime
--kube-apiserver-arg feature-gates=ServerSideApply=false # turn off ServerSideApply: I would rather not see a pile of managed fields in my YAML, and it also saves some disk space and memory
--disable servicelb # I only have one Raspberry Pi, so no load balancer for now
--disable traefik # K3s bundles version 1.8; I deploy 2.x myself
--disable-cloud-controller # not needed

[root@jettoloader k3s-ansible-master]# k3s server --help | grep disable
--etcd-disable-snapshots (db) Disable automatic etcd snapshots
--disable value (components) Do not deploy packaged components and delete any deployed components (valid items: coredns, servicelb, traefik, local-storage, metrics-server)
--disable-scheduler (components) Disable Kubernetes default scheduler
--disable-cloud-controller (components) Disable k3s default cloud controller manager
--disable-kube-proxy (components) Disable running kube-proxy
--disable-network-policy (components) Disable k3s default network policy controller
--disable-helm-controller (components) Disable Helm controller

Static pod path (the manifests that K3s deploys automatically for its packaged components):

[root@localhost metrics-server]# ls /var/lib/rancher/k3s/server/manifests/
ccm.yaml coredns.yaml local-storage.yaml metrics-server rolebindings.yaml traefik.yaml

Users in mainland China can speed up installation as follows:

export INSTALL_K3S_SKIP_DOWNLOAD=true
export INSTALL_K3S_EXEC="--docker --write-kubeconfig ~/.kube/config --write-kubeconfig-mode 666"

- INSTALL_K3S_SKIP_DOWNLOAD=true: do not download the k3s binary (it must already be in place, for example at /usr/local/bin/k3s).
- INSTALL_K3S_EXEC="(omitted)": extra arguments used when the k3s service starts.
- --docker: use Docker instead of the default containerd.
- --write-kubeconfig-mode 666: make the kubeconfig readable and writable by non-owners, so kubectl works without root or sudo.
- --write-kubeconfig ~/.kube/config: write the kubeconfig to the location k8s tools use by default rather than the k3s default, /etc/rancher/k3s/k3s.yaml. With the k3s default, Istio and Helm need extra configuration or fail to run.
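As a rough sketch of how these variables fit together, the snippet below assembles the flags described above and only prints the install command, so it can be reviewed before anything runs. The installer path ./k3s-install.sh and the INSTALLER variable are assumptions for illustration and do not come from the text.

```shell
#!/bin/sh
# Dry-run sketch: assemble the install-script environment and print the
# resulting command line instead of executing it.
set -eu

# Flags described above. HOME is expanded explicitly so the printed
# kubeconfig path is unambiguous.
K3S_FLAGS="--docker --write-kubeconfig ${HOME:-/root}/.kube/config --write-kubeconfig-mode 666"

# Hypothetical path to an already-downloaded install script (assumption).
INSTALLER="${INSTALLER:-./k3s-install.sh}"

# Print, do not run: review the line, then paste it into a root shell.
INSTALL_CMD="INSTALL_K3S_SKIP_DOWNLOAD=true INSTALL_K3S_EXEC='${K3S_FLAGS}' sh ${INSTALLER}"
echo "$INSTALL_CMD"
```

Printing first is a deliberate choice here: the install script registers a system service, so a quick look at the final flag string is cheap insurance.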

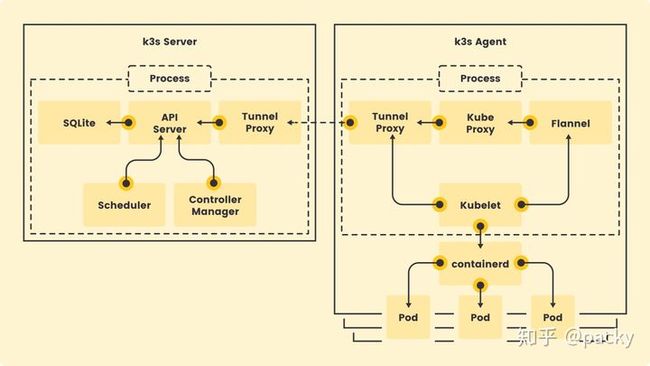

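Although K3s is K8s at its core, Rancher has heavily reworked its architecture and runtime behavior. The main differences from K8s are:

- The etcd datastore is replaced by embedded SQLite, though an external etcd can still be used
- apiserver, scheduler, and the other components are all simplified and run as processes on the node
- Flannel is the network plugin, and Traefik replaces ingress-nginx as the reverse-proxy entry point
- local-path-provisioner provides local storage volumes by default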

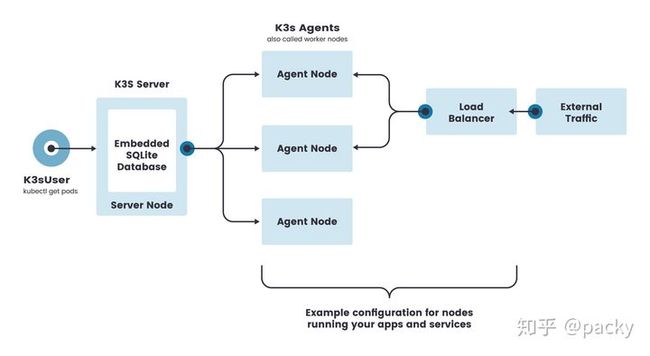

Before actually using K3s, it helps to understand its overall architecture and how it runs. Here is the architecture diagram:

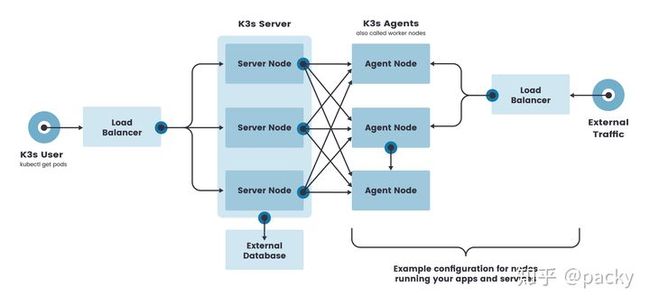

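High availability

The architecture diagram shows two deployment modes: single-server (for testing) and high availability. In single-server mode there is one control node (called a server node in K3s, equivalent to a K8s master node), and the datastore is SQLite embedded in that node. High-availability mode scales out to three server nodes and requires an external datastore (etcd, MySQL, and so on), which is what makes the cluster highly available.

This also shows that all K8s control-plane components run as processes on the server node rather than as static pods. The datastore is SQLite, which is lighter than etcd. As a result, after K3s is installed, kubectl get po -n kube-system lists no apiserver or scheduler pods.

On the agent side, kubelet and kube-proxy also run as processes, and the container runtime changes from Docker to containerd.

Server nodes and agent nodes are connected through a dedicated proxy tunnel.

This runtime model makes it clear how much lighter K3s is than K8s.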

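Networking

The default ports that must be open are:

| PROTOCOL | PORT | SOURCE | DESCRIPTION |
|---|---|---|---|
| TCP | 6443 | K3s agent nodes | Kubernetes API |
| UDP | 8472 | K3s server and agent nodes | Required only for Flannel VXLAN |
| TCP | 10250 | K3s server and agent nodes | kubelet |

Network configuration

K3s uses Flannel VXLAN as the default CNI for container networking. To change it, pass one of these flags:

| CLI FLAG AND VALUE | DESCRIPTION |
|---|---|
| --flannel-backend=vxlan | Use VXLAN (default). |
| --flannel-backend=ipsec | Use the IPSEC backend to encrypt network traffic. |
| --flannel-backend=host-gw | Use host-gw mode. |
| --flannel-backend=wireguard | Use the WireGuard backend to encrypt network traffic. May require additional kernel modules and configuration. |

To use a standalone CNI, pass --flannel-backend=none at install time and then install your own CNI separately.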

HA deployment (external database)

- 1. Deploy an external database

- 2. Start the server nodes

With an external database you must set datastore-endpoint; MySQL, PostgreSQL, and etcd are supported.

Using MySQL:

curl -sfL https://get.k3s.io | sh -s - server \
  --datastore-endpoint="mysql://username:password@tcp(hostname:3306)/database-name"

Using PostgreSQL:

curl -sfL https://get.k3s.io | sh -s - server \
  --datastore-endpoint="postgres://username:password@hostname:port/database-name"

Using etcd:

curl -sfL https://get.k3s.io | sh -s - server \
  --datastore-endpoint="https://etcd-host-1:2379,https://etcd-host-2:2379,https://etcd-host-3:2379"

If the datastore requires certificate authentication, set the following flags (or the matching environment variables):

--datastore-cafile K3S_DATASTORE_CAFILE
--datastore-certfile K3S_DATASTORE_CERTFILE
--datastore-keyfile K3S_DATASTORE_KEYFILE
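A minimal sketch of how the MySQL endpoint string is put together, assuming placeholder credentials and a hypothetical host db.example.internal. The helper name mysql_endpoint is made up for this example; K3S_DATASTORE_ENDPOINT is the environment variable shown in the k3s server help output. The script prints commands rather than running them.

```shell
#!/bin/sh
# Sketch: build a MySQL datastore endpoint and print the matching
# server start-up lines (dry run). All values are placeholders.
set -eu

# mysql_endpoint USER PASSWORD HOST PORT DATABASE
mysql_endpoint() {
  printf 'mysql://%s:%s@tcp(%s:%s)/%s\n' "$1" "$2" "$3" "$4" "$5"
}

ENDPOINT="$(mysql_endpoint k3s s3cret db.example.internal 3306 k3s)"

# Using the environment variable instead of the flag keeps the
# password off the k3s command line.
echo "export K3S_DATASTORE_ENDPOINT='${ENDPOINT}'"
echo "k3s server"
```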

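- 3. Configure a fixed registration address (VIP)

A K3s agent needs the URL of a K3s server when it registers. In HA mode any server's IP works, but a fixed address (a load balancer, DNS name, or VIP) is recommended.

- 4. Start the agent nodes

K3S_TOKEN=SECRET k3s agent --server https://fixed-registration-address:6443

HA deployment (embedded database)

In this mode the number of server nodes must be odd; three server nodes are recommended.

Start the first server node with the --cluster-init flag and K3S_TOKEN:

K3S_TOKEN=SECRET k3s server --cluster-init

Then start the other server nodes:

K3S_TOKEN=SECRET k3s server --server https://:6443

========================================================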

[root@localhost k3s]# curl -sfL http://rancher-mirror.cnrancher.com/k3s/k3s-install.sh | INSTALL_K3S_MIRROR=cn INSTALL_K3S_EXEC='--service-node-port-range=1024-65535 --bind-address=0.0.0.0 --https-listen-port=6443 --cluster-cidr=10.42.0.0/16 --service-cidr=10.43.0.0/16 --cluster-dns=10.43.0.254 --cluster-domain=jettech.com' K3S_NODE_NAME=172.16.10.5 sh -

That single command deploys an all-in-one, single-node k3s environment. Unlike k8s, none of the following need to be installed separately:

- kubelet
- kube-proxy
- Docker
- etcd
- an ingress controller, such as nginx

You can add more worker nodes with k3s agent. Just supply K3S_URL and K3S_TOKEN, where K3S_URL is the api-server URL and K3S_TOKEN is the node registration token, stored on the master node at /var/lib/rancher/k3s/server/node-token.
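The join step can be sketched as a small helper that reads the token and prints the command to run on the new node. The function name join_command and the TOKEN_FILE and SERVER_IP variables are hypothetical; the default IP is the server address used in this article. Nothing is executed against a cluster.

```shell
#!/bin/sh
# Sketch: print the agent join command for a given server address.
set -eu

# Token path named in the text; overridable for testing (assumption).
TOKEN_FILE="${TOKEN_FILE:-/var/lib/rancher/k3s/server/node-token}"

# join_command SERVER_IP TOKEN -> prints the command for the new node
join_command() {
  printf 'curl -sfL https://get.k3s.io | K3S_URL=https://%s:6443 K3S_TOKEN=%s sh -\n' "$1" "$2"
}

if [ -r "$TOKEN_FILE" ]; then
  join_command "${SERVER_IP:-172.16.10.5}" "$(cat "$TOKEN_FILE")"
else
  echo "token file not readable: $TOKEN_FILE (run this on the server as root)" >&2
fi
```

Run it on the server node as root, then paste the printed line into a shell on the worker.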

Using k3s just like k8s

Using the k3s command-line tools just like k8s

k3s ships a built-in kubectl, invoked as k3s kubectl. To match the k8s kubectl command you can set an alias:

# This step is optional: /usr/local/bin already contains a kubectl symlink to k3s
alias kubectl='k3s kubectl'

# Enable kubectl command completion
source <(kubectl completion bash)

[root@localhost bin]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

metrics-server-9cf544f65-4p277 1/1 Running 0 22m

coredns-85cb69466-j6xtx 1/1 Running 0 22m

local-path-provisioner-64ffb68fd-5bt6v 1/1 Running 0 22m

helm-install-traefik-crd--1-5hfpf 0/1 Completed 0 22m

helm-install-traefik--1-c42qv 0/1 Completed 1 22m

svclb-traefik-qvsks 2/2 Running 0 19m

traefik-786ff64748-vdn6v 1/1 Running 0 19m

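svclb-traefik-ql97k 2/2 Running 0 14m

Note that apiserver, controller-manager, scheduler, kube-proxy, and flannel do not appear as pods, because they are all embedded in the k3s process. k3s has also deployed Traefik ingress, metrics-server, and other components by default, so nothing extra needs installing.

k3s does not use Docker as the container runtime by default. It uses the built-in containerd, and you can talk to the CRI with the crictl subcommand.

If you are used to the docker command line, you can set this alias:

alias docker='k3s crictl'

# Enable docker command completion
source <(docker completion)
complete -F _cli_bash_autocomplete docker

This only emulates the docker command with crictl. Compared with real docker, the output has extra ATTEMPT and POD ID columns, which are specific to CRI.

Note: INSTALL_K3S_EXEC passes arguments to k3s. Any of the options below can be used, including kube-apiserver-arg, kube-controller-manager-arg, and kube-scheduler-arg to pass arguments through to those components.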

OPTIONS:

--config FILE, -c FILE (config) Load configuration from FILE (default: "/etc/rancher/k3s/config.yaml") [$K3S_CONFIG_FILE]

--debug (logging) Turn on debug logs [$K3S_DEBUG]

-v value (logging) Number for the log level verbosity (default: 0)

--vmodule value (logging) Comma-separated list of pattern=N settings for file-filtered logging

--log value, -l value (logging) Log to file

--alsologtostderr (logging) Log to standard error as well as file (if set)

--bind-address value (listener) k3s bind address (default: 0.0.0.0)

--https-listen-port value (listener) HTTPS listen port (default: 6443)

--advertise-address value (listener) IPv4 address that apiserver uses to advertise to members of the cluster (default: node-external-ip/node-ip)

--advertise-port value (listener) Port that apiserver uses to advertise to members of the cluster (default: listen-port) (default: 0)

--tls-san value (listener) Add additional hostnames or IPv4/IPv6 addresses as Subject Alternative Names on the server TLS cert

--data-dir value, -d value (data) Folder to hold state default /var/lib/rancher/k3s or ${HOME}/.rancher/k3s if not root

--cluster-cidr value (networking) IPv4/IPv6 network CIDRs to use for pod IPs (default: 10.42.0.0/16)

--service-cidr value (networking) IPv4/IPv6 network CIDRs to use for service IPs (default: 10.43.0.0/16)

--service-node-port-range value (networking) Port range to reserve for services with NodePort visibility (default: "30000-32767")

--cluster-dns value (networking) IPv4 Cluster IP for coredns service. Should be in your service-cidr range (default: 10.43.0.10)

--cluster-domain value (networking) Cluster Domain (default: "cluster.local")

--flannel-backend value (networking) One of 'none', 'vxlan', 'ipsec', 'host-gw', or 'wireguard' (default: "vxlan")

--token value, -t value (cluster) Shared secret used to join a server or agent to a cluster [$K3S_TOKEN]

--token-file value (cluster) File containing the cluster-secret/token [$K3S_TOKEN_FILE]

--write-kubeconfig value, -o value (client) Write kubeconfig for admin client to this file [$K3S_KUBECONFIG_OUTPUT]

--write-kubeconfig-mode value (client) Write kubeconfig with this mode [$K3S_KUBECONFIG_MODE]

--kube-apiserver-arg value (flags) Customized flag for kube-apiserver process

--etcd-arg value (flags) Customized flag for etcd process

--kube-controller-manager-arg value (flags) Customized flag for kube-controller-manager process

--kube-scheduler-arg value (flags) Customized flag for kube-scheduler process

--kube-cloud-controller-manager-arg value (flags) Customized flag for kube-cloud-controller-manager process

--datastore-endpoint value (db) Specify etcd, Mysql, Postgres, or Sqlite (default) data source name [$K3S_DATASTORE_ENDPOINT]

--datastore-cafile value (db) TLS Certificate Authority file used to secure datastore backend communication [$K3S_DATASTORE_CAFILE]

--datastore-certfile value (db) TLS certification file used to secure datastore backend communication [$K3S_DATASTORE_CERTFILE]

--datastore-keyfile value (db) TLS key file used to secure datastore backend communication [$K3S_DATASTORE_KEYFILE]

--etcd-expose-metrics (db) Expose etcd metrics to client interface. (Default false)

--etcd-disable-snapshots (db) Disable automatic etcd snapshots

--etcd-snapshot-name value (db) Set the base name of etcd snapshots. Default: etcd-snapshot- (default: "etcd-snapshot")

--etcd-snapshot-schedule-cron value (db) Snapshot interval time in cron spec. eg. every 5 hours '* */5 * * *' (default: "0 */12 * * *")

--etcd-snapshot-retention value (db) Number of snapshots to retain (default: 5)

--etcd-snapshot-dir value (db) Directory to save db snapshots. (Default location: ${data-dir}/db/snapshots)

--etcd-s3 (db) Enable backup to S3

--etcd-s3-endpoint value (db) S3 endpoint url (default: "s3.amazonaws.com")

--etcd-s3-endpoint-ca value (db) S3 custom CA cert to connect to S3 endpoint

--etcd-s3-skip-ssl-verify (db) Disables S3 SSL certificate validation

--etcd-s3-access-key value (db) S3 access key [$AWS_ACCESS_KEY_ID]

--etcd-s3-secret-key value (db) S3 secret key [$AWS_SECRET_ACCESS_KEY]

--etcd-s3-bucket value (db) S3 bucket name

--etcd-s3-region value (db) S3 region / bucket location (optional) (default: "us-east-1")

--etcd-s3-folder value (db) S3 folder

--etcd-s3-insecure (db) Disables S3 over HTTPS

--etcd-s3-timeout value (db) S3 timeout (default: 30s)

--default-local-storage-path value (storage) Default local storage path for local provisioner storage class

--disable value (components) Do not deploy packaged components and delete any deployed components (valid items: coredns, servicelb, traefik, local-storage, metrics-server)

--disable-scheduler (components) Disable Kubernetes default scheduler

--disable-cloud-controller (components) Disable k3s default cloud controller manager

--disable-kube-proxy (components) Disable running kube-proxy

--disable-network-policy (components) Disable k3s default network policy controller

--disable-helm-controller (components) Disable Helm controller

--node-name value (agent/node) Node name [$K3S_NODE_NAME]

--with-node-id (agent/node) Append id to node name

--node-label value (agent/node) Registering and starting kubelet with set of labels

--node-taint value (agent/node) Registering kubelet with set of taints

--image-credential-provider-bin-dir value (agent/node) The path to the directory where credential provider plugin binaries are located (default: "/var/lib/rancher/credentialprovider/bin")

--image-credential-provider-config value (agent/node) The path to the credential provider plugin config file (default: "/var/lib/rancher/credentialprovider/config.yaml")

--docker (agent/runtime) Use docker instead of containerd

--container-runtime-endpoint value (agent/runtime) Disable embedded containerd and use alternative CRI implementation

--pause-image value (agent/runtime) Customized pause image for containerd or docker sandbox (default: "rancher/mirrored-pause:3.1")

--snapshotter value (agent/runtime) Override default containerd snapshotter (default: "overlayfs")

--private-registry value (agent/runtime) Private registry configuration file (default: "/etc/rancher/k3s/registries.yaml")

--node-ip value, -i value (agent/networking) IPv4/IPv6 addresses to advertise for node

--node-external-ip value (agent/networking) IPv4/IPv6 external IP addresses to advertise for node

--resolv-conf value (agent/networking) Kubelet resolv.conf file [$K3S_RESOLV_CONF]

--flannel-iface value (agent/networking) Override default flannel interface

--flannel-conf value (agent/networking) Override default flannel config file

--kubelet-arg value (agent/flags) Customized flag for kubelet process

--kube-proxy-arg value (agent/flags) Customized flag for kube-proxy process

--protect-kernel-defaults (agent/node) Kernel tuning behavior. If set, error if kernel tunables are different than kubelet defaults.

--rootless (experimental) Run rootless

--agent-token value (cluster) Shared secret used to join agents to the cluster, but not servers [$K3S_AGENT_TOKEN]

--agent-token-file value (cluster) File containing the agent secret [$K3S_AGENT_TOKEN_FILE]

--server value, -s value (cluster) Server to connect to, used to join a cluster [$K3S_URL]

--cluster-init (cluster) Initialize a new cluster using embedded Etcd [$K3S_CLUSTER_INIT]

--cluster-reset (cluster) Forget all peers and become sole member of a new cluster [$K3S_CLUSTER_RESET]

--cluster-reset-restore-path value (db) Path to snapshot file to be restored

--secrets-encryption (experimental) Enable Secret encryption at rest

--system-default-registry value (image) Private registry to be used for all system images [$K3S_SYSTEM_DEFAULT_REGISTRY]

--selinux (agent/node) Enable SELinux in containerd [$K3S_SELINUX]

--lb-server-port value (agent/node) Local port for supervisor client load-balancer. If the supervisor and apiserver are not colocated an additional port 1 less than this port will also be used for the apiserver client load-balancer. (default: 6444) [$K3S_LB_SERVER_PORT]

--no-flannel (deprecated) use --flannel-backend=none

--no-deploy value (deprecated) Do not deploy packaged components (valid items: coredns, servicelb, traefik, local-storage, metrics-server)

--cluster-secret value (deprecated) use --token [$K3S_CLUSTER_SECRET]

How to use flags and environment variables

Example A: K3S_KUBECONFIG_MODE

The option to allow writing to the kubeconfig file is useful for importing a K3s cluster into Rancher. There are two ways to pass it.

Using the flag --write-kubeconfig-mode 644:

[root@localhost k3s]# curl -sfL http://rancher-mirror.cnrancher.com/k3s/k3s-install.sh | INSTALL_K3S_MIRROR=cn sh -s - --write-kubeconfig-mode 644

Using the environment variable K3S_KUBECONFIG_MODE:

$ curl -sfL https://get.k3s.io | K3S_KUBECONFIG_MODE="644" sh -s -

[root@localhost k3s]# curl -sfL http://rancher-mirror.cnrancher.com/k3s/k3s-install.sh | INSTALL_K3S_MIRROR=cn K3S_KUBECONFIG_MODE="644" sh -

Example B: INSTALL_K3S_EXEC

[root@localhost k3s]# curl -sfL http://rancher-mirror.cnrancher.com/k3s/k3s-install.sh | INSTALL_K3S_MIRROR=cn INSTALL_K3S_EXEC='--service-node-port-range=1024-65535 --bind-address=0.0.0.0 --https-listen-port=6443 --cluster-cidr=10.42.0.0/16 --service-cidr=10.43.0.0/16 --cluster-dns=10.43.0.254 --cluster-domain=jettech.com' K3S_NODE_NAME=172.16.10.5 sh -

Example C: the /etc/rancher/k3s/config.yaml configuration file

In addition to environment variables and CLI arguments, K3s can be configured with a configuration file.

By default, values in the YAML file at /etc/rancher/k3s/config.yaml are used at install time.

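A basic server configuration file looks like this:

write-kubeconfig-mode: "0644"
tls-san:
  - "foo.local"
node-label:
  - "foo=bar"
  - "something=amazing"

In general, CLI arguments map to their respective YAML keys, and repeatable CLI arguments are represented as YAML lists.

The same configuration using only CLI arguments demonstrates this:

k3s server \
  --write-kubeconfig-mode "0644" \
  --tls-san "foo.local" \
  --node-label "foo=bar" \
  --node-label "something=amazing"

A configuration file and CLI arguments can also be used together. In that case values are loaded from both sources, but CLI arguments take precedence. For repeatable arguments such as --node-label, the CLI arguments overwrite all values in the list.

Finally, the location of the configuration file can be changed with the CLI argument --config FILE, -c FILE or the environment variable $K3S_CONFIG_FILE.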
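To make the file-based option concrete, here is a small sketch that writes the example configuration to a scratch directory and prints the two ways of pointing k3s at it. The CONF_DIR variable and the use of a temporary directory are assumptions chosen so the snippet is safe to run anywhere; on a real node the file would live at /etc/rancher/k3s/config.yaml.

```shell
#!/bin/sh
# Sketch: write a server config file to a scratch directory and show how
# it would be passed to k3s. Nothing is installed or started.
set -eu

# Scratch directory instead of /etc/rancher/k3s (assumption, for safety).
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"
CONF_FILE="${CONF_DIR}/config.yaml"

cat > "$CONF_FILE" <<'EOF'
write-kubeconfig-mode: "0644"
tls-san:
  - "foo.local"
node-label:
  - "foo=bar"
  - "something=amazing"
EOF

# --config and $K3S_CONFIG_FILE both point k3s at a non-default file.
echo "k3s server --config ${CONF_FILE}"
echo "K3S_CONFIG_FILE=${CONF_FILE} k3s server"
```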

II. Worker (agent) node

[root@localhost k3s]# curl -sfL http://rancher-mirror.cnrancher.com/k3s/k3s-install.sh | INSTALL_K3S_MIRROR=cn K3S_URL=https://172.16.10.5:6443 K3S_TOKEN=K102958f25bdb3fa9df981deaff123d936eb6fd6d96483ca147bee4fbc719bdafa8::server:acab1c0f7d143fe4ff96b311734aba31 K3S_NODE_NAME="172.16.10.15" sh -

III. Uninstall

/usr/local/bin/k3s-uninstall.sh

[root@localhost bin]# rm -rf /etc/rancher/node/password
[root@localhost bin]# rm -rf /var/lib/rancher/k3s/server/cred/passwd

On agent nodes the uninstall script is /usr/local/bin/k3s-agent-uninstall.sh. If /etc/rancher/node/password is not deleted, the agent and server passwords will not match the next time they start, and the agent will fail to register with the server.

Harbor as the image registry for k3s

The default containerd configuration file for K3s is /var/lib/rancher/k3s/agent/etc/containerd/config.toml, but editing the containerd configuration directly to set registries or mirrors is far more involved than doing the same for Docker. To simplify this, K3s checks at startup whether /etc/rancher/k3s/ contains a registries.yaml file. If it does, K3s converts its contents into containerd configuration and writes the result to /var/lib/rancher/k3s/agent/etc/containerd/config.toml.

[root@localhost k3s]# cat /var/lib/rancher/k3s/agent/etc/containerd/config.toml

[plugins.opt]

path = "/var/lib/rancher/k3s/agent/containerd"

[plugins.cri]

stream_server_address = "127.0.0.1"

stream_server_port = "10010"

enable_selinux = false

sandbox_image = "rancher/mirrored-pause:3.1"

[plugins.cri.containerd]

snapshotter = "overlayfs"

disable_snapshot_annotations = true

[plugins.cri.cni]

bin_dir = "/var/lib/rancher/k3s/data/2e877cf4762c3c7df37cc556de3e08890fbf450914bb3ec042ad4f36b5a2413a/bin"

conf_dir = "/var/lib/rancher/k3s/agent/etc/cni/net.d"

[plugins.cri.containerd.runtimes.runc]

runtime_type = "io.containerd.runc.v2"

[plugins.cri.registry.mirrors]

[plugins.cri.registry.mirrors."192.168.99.41"]

endpoint = ["https://192.168.99.41"]

[plugins.cri.registry.mirrors."harbor.jettech.com"]

endpoint = ["https://harbor.jettech.com"]

[plugins.cri.registry.configs."harbor.jettech.com".tls]

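ca_file = "/var/lib/rancher/k3s/cacerts.pem"

containerd uses a concept similar to svc and endpoint in k8s. The svc can be thought of as the access URL, which resolves to the corresponding endpoints. You can also think of a mirror entry as a reverse proxy that forwards client requests to the backend registries listed under endpoint. The mirror name is arbitrary but must be a valid IP address or domain name. Multiple endpoints can be configured: the first is used by default, and if it returns no data, containerd falls back to the second, and so on.

Configure K3s registry mirrors, for example by creating /etc/rancher/k3s/registries.yaml:

cat >/etc/rancher/k3s/registries.yaml <

The key is the rewrite rule: the Harbor project name is prepended to the image name so that pull requests are routed correctly. This is essential. (Source: "Using Harbor as the image proxy-cache backend for K3S", roy2220, cnblogs)

Note that the rewrite feature is not part of upstream containerd; it is implemented in Rancher's modified build. Rancher submitted a pull request to containerd, which was still under discussion at the time of writing, neither accepted nor rejected.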

mirrors:
  "*":
    endpoint:
      - "http://192.168.50.119"
  "192.168.50.119":
    endpoint:
      - "http://192.168.50.119"
  "reg.test.com":
    endpoint:
      - "http://192.168.50.119"
  "docker.io":
    endpoint:
      - "https://7bezldxe.mirror.aliyuncs.com"
      - "https://registry-1.docker.io"

Images can be pulled with crictl pull 192.168.50.119/library/alpine and crictl pull reg.test.com/library/alpine, but both come from the same backend registry.

Note: the mirror name can also be set to *, which matches any registry name. This leads to many different image names that are hard to manage, so it is not recommended.

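Insecure (HTTP) private registry configuration

For an insecure (HTTP) private registry, just specify the backend registry's http address in endpoint.

- Using the registry http://192.168.50.119 as an example:

cat >> /etc/rancher/k3s/registries.yaml <

Secure (HTTPS) private registry configuration

- Using a trusted SSL certificate

As with the insecure (HTTP) case, just specify the backend registry's https address in endpoint.

cat >> /etc/rancher/k3s/registries.yaml <

- Using a self-signed SSL certificate

If the backend registry uses a self-signed SSL certificate, configure the CA certificate used to verify it.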

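Containerd can be configured to connect to private registries and use them to pull private images on the node.

At startup, K3s checks whether a registries.yaml file exists in /etc/rancher/k3s/ and instructs containerd to use the registries defined in it. To use a private registry, create this file as root on every node that will use the registry.

Note that server nodes are schedulable by default. If you have not tainted the server nodes and workloads will run on them, make sure to create the registries.yaml file on each server node as well.

Containerd configuration can be used to connect to a private registry over TLS and to registries that require authentication. The following section explains the registries.yaml file and gives examples of private registry configuration in K3s.

The file has two main sections:

- mirrors
- configs

Mirrors

Mirrors is a directive that defines the names and endpoints of private registries, for example:

mirrors:
  mycustomreg.com:
    endpoint:
      - "https://mycustomreg.com:5000"

Each mirror must have a name and a set of endpoints. When pulling an image from a registry, containerd tries these endpoint URLs one by one and uses the first one that works.

Configs

The configs section defines the TLS and credential configuration for each mirror. For each mirror you can define auth and/or tls. The TLS part consists of: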

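| Directive | Description |
|---|---|
| cert_file | Path to the client certificate used to authenticate with the registry |
| key_file | Path to the client key used to authenticate with the registry |
| ca_file | Path to the CA certificate used to verify the registry's server certificate |
| insecure_skip_verify | Boolean that defines whether TLS verification should be skipped for the registry |

Credentials consist of either a username/password or an authentication token:

- username: user name for registry authentication
- password: user password for registry authentication
- auth: authentication token for registry auth

Below are basic examples of using private registries in different modes: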

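With TLS

The examples below show how to configure /etc/rancher/k3s/registries.yaml on each node when using TLS.

With authentication

mirrors:
  docker.io:
    endpoint:
      - "https://mycustomreg.com:5000"
configs:
  "mycustomreg.com:5000":
    auth:
      username: xxxxxx # the private registry username
      password: xxxxxx # the private registry password
    tls:
      cert_file: # path to the cert file used in the registry
      key_file: # path to the key file used in the registry
      ca_file: # path to the ca file used in the registry

Without authentication

mirrors:
  docker.io:
    endpoint:
      - "https://mycustomreg.com:5000"
configs:
  "mycustomreg.com:5000":
    tls:
      cert_file: # path to the cert file used in the registry
      key_file: # path to the key file used in the registry
      ca_file: # path to the ca file used in the registry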

Without TLS

The examples below show how to configure /etc/rancher/k3s/registries.yaml on each node when not using TLS.

With authentication

mirrors:
  docker.io:
    endpoint:
      - "http://mycustomreg.com:5000"
configs:
  "mycustomreg.com:5000":
    auth:
      username: xxxxxx # the private registry username
      password: xxxxxx # the private registry password

Without authentication

mirrors:
  docker.io:
    endpoint:
      - "http://mycustomreg.com:5000"

Without TLS, the endpoints must specify http://, otherwise https is assumed.

For registry changes to take effect, restart K3s on each node.
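The registries.yaml layouts above can be generated rather than typed by hand. The following is a sketch only: REG_HOST, REG_USER, REG_PASS, and OUT_DIR are hypothetical variables, and the file is written to a scratch directory by default so the snippet does not touch a live node.

```shell
#!/bin/sh
# Sketch: generate a registries.yaml for one private registry.
# REG_HOST, REG_USER and REG_PASS are placeholders (assumptions).
set -eu

REG_HOST="${REG_HOST:-mycustomreg.com:5000}"
REG_USER="${REG_USER:-xxxxxx}"
REG_PASS="${REG_PASS:-xxxxxx}"
# Scratch directory by default; point OUT_DIR at /etc/rancher/k3s
# on a real node.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"
OUT_FILE="${OUT_DIR}/registries.yaml"

# The configs key repeats the endpoint host so the credentials are
# attached to the registry that is actually contacted.
cat > "$OUT_FILE" <<EOF
mirrors:
  docker.io:
    endpoint:
      - "https://${REG_HOST}"
configs:
  "${REG_HOST}":
    auth:
      username: ${REG_USER}
      password: ${REG_PASS}
EOF

echo "wrote ${OUT_FILE}; restart k3s (or k3s-agent) on this node to apply it"
```

Running it as `OUT_DIR=/etc/rancher/k3s REG_HOST=harbor.jettech.com sh gen-registries.sh` (the script name is arbitrary) would produce a file in the location K3s reads at startup.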

Adding images to the private registry

First, get the k3s-images.txt file for the release you are using from GitHub, and pull the K3s images it lists from docker.io.

Example: docker pull docker.io/rancher/coredns-coredns:1.6.3

Then retag the images for the private registry.

Example: docker tag rancher/coredns-coredns:1.6.3 mycustomreg:5000/coredns-coredns

Finally, push the images to the private registry.

Example: docker push mycustomreg:5000/coredns-coredns
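The pull, tag, and push steps repeat for every image in the list, so they lend themselves to a loop. The sketch below only prints the docker commands. The function name print_mirror_commands and the REGISTRY variable are made up for illustration, and the one-line sample list stands in for the real k3s-images.txt. Unlike the hand-written example, it keeps the full repository path and tag on the target name, which is an assumption about how you want the registry laid out.

```shell
#!/bin/sh
# Sketch: turn an image list into pull/tag/push commands (dry run).
set -eu

REGISTRY="${REGISTRY:-mycustomreg.com:5000}"

# print_mirror_commands FILE -> one pull, tag and push line per image
print_mirror_commands() {
  while IFS= read -r image; do
    # Skip blank lines and comments.
    case "$image" in ''|'#'*) continue ;; esac
    # Drop the docker.io/ prefix to get the repository path.
    repo="${image#docker.io/}"
    echo "docker pull ${image}"
    echo "docker tag ${image} ${REGISTRY}/${repo}"
    echo "docker push ${REGISTRY}/${repo}"
  done < "$1"
}

# Example with a one-line list; replace with the real k3s-images.txt.
LIST="$(mktemp)"
echo "docker.io/rancher/coredns-coredns:1.6.3" > "$LIST"
print_mirror_commands "$LIST"
```

Piping the output to `sh` would execute the commands once the printed lines look right.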

Private registry

[root@localhost ~]# docker run --name registry -d -p 5000:5000 -v /opt/registry:/var/lib/registry docker.io/library/registry:latest

or

[root@localhost bin]# k3s ctr images pull docker.io/library/registry:latest
[root@localhost bin]# k3s ctr images ls -q
docker.io/library/registry:latest
docker.io/rancher/klipper-helm:v0.6.6-build20211022
docker.io/rancher/klipper-lb:v0.3.4
docker.io/rancher/local-path-provisioner:v0.0.20
docker.io/rancher/mirrored-coredns-coredns:1.8.4
docker.io/rancher/mirrored-library-busybox:1.32.1
docker.io/rancher/mirrored-library-traefik:2.5.0
docker.io/rancher/mirrored-metrics-server:v0.5.0
docker.io/rancher/mirrored-pause:3.1

Start the registry container with ctr:

[root@localhost bin]# k3s ctr run --null-io --net-host --mount type=bind,src=/opt/registry,dst=/var/lib/registry,options=rbind:rw -d docker.io/library/registry:latest jettech-registry
[root@localhost bin]# k3s ctr c ls
CONTAINER IMAGE RUNTIME
jettech-registry docker.io/library/registry:latest io.containerd.runc.v2

tag

[root@localhost bin]# ctr i tag docker.io/rancher/klipper-helm:v0.6.6-build20211022 172.16.10.5:5000/klipper-helm:v0.6.6-build20211022

push

[root@localhost bin]# ctr i push --plain-http 172.16.10.5:5000/klipper-helm:v0.6.6-build20211022

ctr run options used above:

--mount: specify additional container mounts (for example: type=bind,src=/tmp,dst=/host,options=rbind:ro)
--net-host: enable host networking for the container
--privileged: run a privileged container

k3s ctr images operations

tag:

[root@localhost bin]# k3s ctr i tag docker.io/rancher/klipper-helm:v0.6.6-build20211022 harbor.jettech.com/test/rancher/klipper-helm:v0.6.6-build20211022

push over HTTPS:

[root@localhost bin]# k3s ctr images push harbor.jettech.com/test/rancher/klipper-helm:v0.6.6-build20211022

push over HTTP:

[root@localhost bin]# k3s ctr images push --plain-http harbor.jettech.com/test/rancher/klipper-helm:v0.6.6-build20211022

pull over HTTP:

[root@localhost bin]# k3s ctr images pull --plain-http 172.16.10.5:5000/klipper-helm:v0.6.6-build20211022

[root@localhost ~]# cat >> /etc/rancher/k3s/registries.yaml <<EOF
mirrors:
  "192.168.99.41":
    endpoint:
      - "https://192.168.99.41"
  "harbor.jettech.com":
    endpoint:
      - "https://harbor.jettech.com"
configs:
  "harbor.jettech.com":
    tls:
      ca_file: /var/lib/rancher/k3s/cacerts.pem
      #insecure_skip_verify: true
EOF

Use either ca_file: /var/lib/rancher/k3s/cacerts.pem or insecure_skip_verify: true, not both.

Note that cacerts.pem is Harbor's root CA certificate.

- SSL mutual authentication

If the registry is configured for mutual authentication, containerd acts as the client, so containerd needs an SSL certificate that the registry can use to authenticate it.

cat >> /etc/rancher/k3s/registries.yaml <

[root@localhost ssl]# cat /var/lib/rancher/k3s/agent/etc/containerd/config.toml

[plugins.opt]

path = "/var/lib/rancher/k3s/agent/containerd"

[plugins.cri]

stream_server_address = "127.0.0.1"

stream_server_port = "10010"

enable_selinux = false

sandbox_image = "rancher/mirrored-pause:3.1"

[plugins.cri.containerd]

snapshotter = "overlayfs"

disable_snapshot_annotations = true

[plugins.cri.cni]

bin_dir = "/var/lib/rancher/k3s/data/2e877cf4762c3c7df37cc556de3e08890fbf450914bb3ec042ad4f36b5a2413a/bin"

conf_dir = "/var/lib/rancher/k3s/agent/etc/cni/net.d"

[plugins.cri.containerd.runtimes.runc]

runtime_type = "io.containerd.runc.v2"

[plugins.cri.registry.mirrors]

[plugins.cri.registry.mirrors."harbor.jettech.com"]

endpoint = ["https://harbor.jettech.com"]

[plugins.cri.registry.configs."harbor.jettech.com".auth]

username = "admin"

password = "Harbor12345"

[plugins.cri.registry.configs."harbor.jettech.com".tls]

ca_file = "/var/lib/rancher/k3s/cakey.pem"

cert_file = "/var/lib/rancher/k3s/harbor.jettech.com.crt"

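key_file = "/var/lib/rancher/k3s/harbor.jettech.com.key"

Registry authentication

Private projects in a registry require a username and password to pull images; add these under configs. When configuring registry authentication, the mirror must match the configs entry. For example, if you configure a mirror named 192.168.50.119, configs also needs an entry for 192.168.50.119.

cat >> /etc/rancher/k3s/registries.yaml <

(Source: "The complete guide to configuring containerd image registries", InfoQ)

Registry mirror (accelerator) configuration

Both containerd and docker have a default registry, and in both cases it is docker.io. If no mirror is specified for docker.io in the configuration, the docker.io configuration is loaded automatically after containerd restarts. Unlike docker, containerd lets you change the endpoint for docker.io (default https://registry-1.docker.io); docker does not.

In docker, registry-mirrors sets the mirror (accelerator) address. If the pulled image has no registry address (project name + image name:tag), it is pulled from the default registry. If a mirror address is configured, the mirror is tried first, and the default registry is used if the mirror returns no data.

containerd currently has no dedicated mirror-acceleration setting. Because the endpoint for docker.io can be changed, acceleration is achieved by editing the endpoints. Endpoints are tried in order, so you can give docker.io several registry addresses: the accelerator first, then the default registry. For example:

cat >> /etc/rancher/k3s/registries.yaml <

Complete configuration example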

mirrors:
  "192.168.50.119":
    endpoint:
      - "http://192.168.50.119"
  "docker.io":
    endpoint:
      - "https://7bezldxe.mirror.aliyuncs.com"
      - "https://registry-1.docker.io"
configs:
  "192.168.50.119":
    auth:
      username: '' # this is the registry username
      password: '' # this is the registry password
    tls:
      cert_file: '' # path to the cert file used in the registry
      key_file: '' # path to the key file used in the registry
      ca_file: '' # path to the ca file used in the registry
  "docker.io":
    auth:
      username: '' # this is the registry username
      password: '' # this is the registry password
    tls:
      cert_file: '' # path to the cert file used in the registry
      key_file: '' # path to the key file used in the registry
      ca_file: '' # path to the ca file used in the registry

If the endpoint for docker.io points to a registry with private projects, add an auth section for docker.io here.

https://rancher.com/docs/k3s/latest/en/installation/private-registry/

https://github.com/containerd/cri/blob/master/docs/registry.md

Example: nginx and busybox

Configure the private registry address and authentication

[root@localhost k3s]# cat /etc/rancher/k3s/registries.yaml
mirrors:
  "192.168.99.41":
    endpoint:
      - "https://192.168.99.41"
  "harbor.jettech.com":
    endpoint:
      - "https://harbor.jettech.com"
configs:
  "harbor.jettech.com":
    auth:
      username: admin
      password: Harbor12345
    tls:
      ca_file: /var/lib/rancher/k3s/cacerts.pem

Restart the service

systemctl restart k3s

cacerts.pem is the root certificate of the private Harbor registry.

Deploy the applications

[root@localhost k3s]# cat busybox.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: busybox
  labels: {name: busybox}
spec:
  replicas: 1
  selector:
    matchLabels: {name: busybox}
  template:
    metadata:
      name: busybox
      labels: {name: busybox}
    spec:
      containers:
      - name: busybox
        image: harbor.jettech.com/library/busybox:1.28.4
        #image: busybox:1.28.4
        args:
        - /bin/sh
        - -c
        - sleep 10; touch /tmp/healthy; sleep 30000
        readinessProbe: # readiness probe
          exec:
            command:
            - cat
            - /tmp/healthy
          initialDelaySeconds: 10 # first probe runs 10s after start
          periodSeconds: 5

[root@localhost k3s]# cat nginx.yaml

apiVersion: v1
kind: Service
metadata:
  labels: {name: nginx}
  name: nginx
spec:
  ports:
  - {name: t9080, nodePort: 30001, port: 80, protocol: TCP, targetPort: 80}
  selector: {name: nginx}
  type: NodePort
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels: {name: nginx}
spec:
  replicas: 1
  selector:
    matchLabels: {name: nginx}
  template:
    metadata:
      name: nginx
      labels: {name: nginx}
    spec:
      containers:
      - name: nginx
        image: harbor.jettech.com/jettechtools/nginx:1.21.4
        #image: nginx:1.21.4

Check that the services started

[root@localhost k3s]# k3s kubectl get all --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system pod/metrics-server-9cf544f65-86k8m 1/1 Running 0 34m

kube-system pod/local-path-provisioner-64ffb68fd-dzqj8 1/1 Running 0 34m

kube-system pod/helm-install-traefik-crd--1-g4wrf 0/1 Completed 0 34m

kube-system pod/helm-install-traefik--1-mbvj6 0/1 Completed 1 34m

kube-system pod/svclb-traefik-zlhkx 2/2 Running 0 33m

kube-system pod/coredns-85cb69466-24fsj 1/1 Running 0 34m

kube-system pod/traefik-786ff64748-pshkv 1/1 Running 0 33m

default pod/busybox-df657bd58-zfkwb 1/1 Running 0 18m

default pod/nginx-56446dcd6d-2qb4k 1/1 Running 0 17m

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.43.0.1 443/TCP 34m

kube-system service/kube-dns ClusterIP 10.43.0.254 53/UDP,53/TCP,9153/TCP 34m

kube-system service/metrics-server ClusterIP 10.43.77.182 443/TCP 34m

kube-system service/traefik LoadBalancer 10.43.150.180 172.16.10.5 80:5668/TCP,443:10383/TCP 33m

default service/nginx NodePort 10.43.229.75 80:30001/TCP 17m

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-system daemonset.apps/svclb-traefik 1 1 1 1 1 33m

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE

kube-system deployment.apps/metrics-server 1/1 1 1 34m

kube-system deployment.apps/local-path-provisioner 1/1 1 1 34m

kube-system deployment.apps/coredns 1/1 1 1 34m

kube-system deployment.apps/traefik 1/1 1 1 33m

default deployment.apps/busybox 1/1 1 1 18m

default deployment.apps/nginx 1/1 1 1 17m

NAMESPACE NAME DESIRED CURRENT READY AGE

kube-system replicaset.apps/metrics-server-9cf544f65 1 1 1 34m

kube-system replicaset.apps/local-path-provisioner-64ffb68fd 1 1 1 34m

kube-system replicaset.apps/coredns-85cb69466 1 1 1 34m

kube-system replicaset.apps/traefik-786ff64748 1 1 1 33m

default replicaset.apps/busybox-df657bd58 1 1 1 18m

default replicaset.apps/nginx-56446dcd6d 1 1 1 17m

NAMESPACE NAME COMPLETIONS DURATION AGE

kube-system job.batch/helm-install-traefik-crd 1/1 23s 34m

kube-system job.batch/helm-install-traefik 1/1 25s 34m

nslookup:

[root@localhost k3s]# kubectl exec -it pod/busybox-df657bd58-zfkwb sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # nslookup nginx

Server: 10.43.0.254

Address 1: 10.43.0.254 kube-dns.kube-system.svc.jettech.com

Name: nginx

Address 1: 10.43.229.75 nginx.default.svc.jettech.com

/ # nslookup nginx.default.svc.jettech.com

Server: 10.43.0.254

Address 1: 10.43.0.254 kube-dns.kube-system.svc.jettech.com

Name: nginx.default.svc.jettech.com

Address 1: 10.43.229.75 nginx.default.svc.jettech.com

Offline installation and deployment

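For K3s in production, offline (air-gapped) installation is a problem you cannot avoid. In your offline environment you need to prepare the following 3 components:

- The K3s install script

- The K3s binary

- The images K3s depends on

All three components can be downloaded from the K3s Release page (https://github.com/k3s-io/k3s/releases). If you are in mainland China, it is recommended to get them from http://mirror.cnrancher.com.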
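As a rough sketch of where the three components live, the helper below prints download URLs for a given release. It assumes an amd64 node and the GitHub release asset layout that the install script itself uses (`${GITHUB_URL}/download/${VERSION}/k3s`); the function name `k3s_airgap_urls` is hypothetical.

```shell
#!/bin/sh
# Sketch: print the download URLs for the three offline components.
# Assumptions: amd64 architecture, and release assets named as on the
# K3s GitHub Release page (k3s, k3s-airgap-images-amd64.tar).
GITHUB_URL=https://github.com/k3s-io/k3s/releases

k3s_airgap_urls() {
    version=$1
    echo "https://get.k3s.io"
    echo "${GITHUB_URL}/download/${version}/k3s"
    echo "${GITHUB_URL}/download/${version}/k3s-airgap-images-amd64.tar"
}

# Example: list the URLs for the version used later in this post.
k3s_airgap_urls "v1.22.5+k3s1"
```

Fetch these on a machine with internet access (for example with curl or wget), then copy the files to the offline node.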

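In my view, the key part of an offline installation is the images K3s depends on. The K3s install script and binary only need to be downloaded to the right directory and given the right permissions, which is simple. How the images are installed, however, depends on whether you deploy images manually or use a private image registry, and on whether the container runtime is containerd or docker.

Depending on the combination, offline installation can be done in one of the following ways:

- Containerd + manually deployed images

- Docker + manually deployed images

- Containerd + private image registry

- Docker + private image registry

Containerd + manually deployed images

Assume you have downloaded the same version of the K3s install script (k3s-install.sh), the K3s binary (k3s), and the K3s images (k3s-airgap-images-amd64.tar) to the /root directory.

If your container runtime is containerd, K3s checks on startup whether /var/lib/rancher/k3s/agent/images/ contains a usable image archive and, if so, imports it into the containerd image list. So we only need to place the K3s images in /var/lib/rancher/k3s/agent/images/ and then start K3s.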
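The assumption above (all three files present in one directory) can be checked before installing. This is a minimal sketch: `check_airgap_files` is a hypothetical helper, the file names are the ones used in this post, and the demo runs against a scratch directory rather than /root.

```shell
#!/bin/sh
# Sketch: verify the three offline components are present before installing.
# check_airgap_files is a hypothetical helper; file names follow this post.
check_airgap_files() {
    dir=$1
    missing=0
    for f in k3s-install.sh k3s k3s-airgap-images-amd64.tar; do
        if [ ! -f "${dir}/${f}" ]; then
            echo "missing: ${dir}/${f}" >&2
            missing=1
        fi
    done
    return $missing
}

# Demo against a scratch directory that lacks the image tarball.
demo_dir=$(mktemp -d)
touch "${demo_dir}/k3s-install.sh" "${demo_dir}/k3s"
if check_airgap_files "${demo_dir}"; then
    echo "all components present"
else
    echo "some components are missing"
fi
```

On a real node the call would be `check_airgap_files /root`. It only checks that the files exist, not that their versions match.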

1. Import the images into the containerd image list

sudo mkdir -p /var/lib/rancher/k3s/agent/images/

sudo cp /root/k3s-airgap-images-amd64.tar /var/lib/rancher/k3s/agent/images/

2. Move the K3s install script and K3s binary to the appropriate directory and make them executable

sudo chmod a+x /root/k3s /root/k3s-install.sh

sudo cp /root/k3s /usr/local/bin/

3. Install K3s

INSTALL_K3S_SKIP_DOWNLOAD=true /root/k3s-install.sh

[root@localhost bin]# crictl images

IMAGE TAG IMAGE ID SIZE

docker.io/rancher/klipper-helm v0.6.6-build20211022 194c895f8d63f 242MB

docker.io/rancher/klipper-lb v0.3.4 746788bcc27e2 8.48MB

docker.io/rancher/local-path-provisioner v0.0.20 933989e1174c2 35.2MB

docker.io/rancher/mirrored-coredns-coredns 1.8.4 8d147537fb7d1 47.7MB

docker.io/rancher/mirrored-library-busybox 1.32.1 388056c9a6838 1.45MB

docker.io/rancher/mirrored-library-traefik 2.5.0 3c1baa65c3430 98.3MB

docker.io/rancher/mirrored-metrics-server v0.5.0 1c655933b9c56 64.8MB

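docker.io/rancher/mirrored-pause 3.1 da86e6ba6ca19 746kB

Docker + manually deployed images

Assume you have downloaded the same version of the K3s install script (k3s-install.sh), the K3s binary (k3s), and the K3s images (k3s-airgap-images-amd64.tar) to the /root directory.

Unlike containerd, when docker is the container runtime, starting K3s does not import the images under /var/lib/rancher/k3s/agent/images/. So before starting K3s we need to load the K3s images into the docker image list by hand.

1. Import the images into the docker image list

sudo docker load -i /root/k3s-airgap-images-amd64.tar

2. Move the K3s install script and K3s binary to the appropriate directory and make them executable

sudo chmod a+x /root/k3s /root/k3s-install.sh

sudo cp /root/k3s /usr/local/bin/

3. Install K3s

INSTALL_K3S_SKIP_DOWNLOAD=true INSTALL_K3S_EXEC='--docker' /root/k3s-install.sh

After a short wait, you can see that K3s has started successfully: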

root@k3s-docker:~# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

rancher/klipper-helm v0.3.2 4be09ab862d4 7 weeks ago 145MB

rancher/coredns-coredns 1.8.0 296a6d5035e2 2 months ago 42.5MB

rancher/library-busybox 1.31.1 1c35c4412082 7 months ago 1.22MB

rancher/local-path-provisioner v0.0.14 e422121c9c5f 7 months ago 41.7MB

rancher/library-traefik 1.7.19 aa764f7db305 14 months ago 85.7MB

rancher/metrics-server v0.3.6 9dd718864ce6 14 months ago 39.9MB

rancher/klipper-lb v0.1.2 897ce3c5fc8f 19 months ago 6.1MB

rancher/pause 3.1 da86e6ba6ca1 3 years ago 742kB

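Containerd + private image registry

Assume you have downloaded the same version of the K3s install script (k3s-install.sh) and the K3s binary (k3s) to the /root directory, and that the images K3s needs have already been pushed to the image registry (in this example the registry address is http://192.168.64.44:5000). The list of images K3s needs can be obtained from k3s-images.txt on the K3s Release page.

Private registry configuration reference | Rancher docs

1. Configure the K3s image registry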

sudo mkdir -p /etc/rancher/k3s
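For reference, a registries.yaml that redirects docker.io to the example registry could look like the sketch below. It assumes the `mirrors` / `endpoint` layout from the K3s private registry configuration docs and is not necessarily the exact file the author used; `write_registries_yaml` and the scratch directory are hypothetical.

```shell
#!/bin/sh
# Sketch: write a registries.yaml that points docker.io at a private registry.
# write_registries_yaml is a hypothetical helper; the mirrors/endpoint layout
# is assumed from the K3s private registry configuration docs.
write_registries_yaml() {
    dest_dir=$1
    endpoint=$2
    mkdir -p "${dest_dir}"
    cat > "${dest_dir}/registries.yaml" <<EOF
mirrors:
  docker.io:
    endpoint:
      - "${endpoint}"
EOF
}

# Demo in a scratch directory; on a real node the target would be
# /etc/rancher/k3s (written as root).
demo_dir=$(mktemp -d)
write_registries_yaml "${demo_dir}" "http://192.168.64.44:5000"
cat "${demo_dir}/registries.yaml"
```

K3s reads this file at startup, so it needs to be in place before the install script starts the service.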

sudo cat >> /etc/rancher/k3s/registries.yaml <

2. Move the K3s install script and K3s binary to the appropriate directory and make them executable

sudo chmod a+x /root/k3s /root/k3s-install.sh

sudo cp /root/k3s /usr/local/bin/

3. Install K3s

INSTALL_K3S_SKIP_DOWNLOAD=true /root/k3s-install.sh

Docker + private image registry

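Assume you have downloaded the same version of the K3s install script (k3s-install.sh) and the K3s binary (k3s) to the /root directory, and that the images K3s needs have already been pushed to the image registry (in this example the registry address is http://192.168.64.44:5000). The list of images K3s needs can be obtained from k3s-images.txt on the K3s Release page.

Contents of k3s-images.txt

K3s version: v1.22.5+k3s1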

docker.io/rancher/klipper-helm:v0.6.6-build20211022

docker.io/rancher/klipper-lb:v0.3.4

docker.io/rancher/local-path-provisioner:v0.0.20

docker.io/rancher/mirrored-coredns-coredns:1.8.4

docker.io/rancher/mirrored-library-busybox:1.32.1

docker.io/rancher/mirrored-library-traefik:2.5.0

docker.io/rancher/mirrored-metrics-server:v0.5.0

docker.io/rancher/mirrored-pause:3.1
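Pushing the images in this list to a private registry can be scripted. The sketch below only prints the docker commands (a dry run) so they can be reviewed first. It assumes the example registry address from this post and that each image keeps its path once the docker.io/ prefix is stripped; `mirror_commands` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: print the docker commands that would copy each image listed in
# k3s-images.txt into a private registry. Nothing is executed here.
# Assumptions: the registry host:port below is the example from this post,
# and images keep their path after the docker.io/ prefix is stripped.
REGISTRY=192.168.64.44:5000

mirror_commands() {
    while IFS= read -r image; do
        [ -n "${image}" ] || continue          # skip blank lines
        target="${REGISTRY}/${image#docker.io/}"
        echo "docker pull ${image}"
        echo "docker tag ${image} ${target}"
        echo "docker push ${target}"
    done
}

# Example with two entries from the list above.
printf '%s\n' \
    "docker.io/rancher/klipper-lb:v0.3.4" \
    "docker.io/rancher/mirrored-pause:3.1" | mirror_commands
```

Against the real file this would be `mirror_commands < k3s-images.txt`, run on a machine that can reach both docker.io and the private registry.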

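1. Configure the K3s image registry

Unlike containerd, Docker does not let you change the default registry address indirectly by modifying the endpoint for docker.io (https://registry-1.docker.io by default). However, Docker can be configured with registry-mirrors to pull the K3s images from another registry. With this in place, images are first pulled from the registry-mirrors address, and only if they are not found there are they pulled from the default docker.io, which meets our needs.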
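A daemon.json with a registry mirror could be written as in the sketch below. It assumes the example registry address from this post and a daemon.json with no other settings; `write_docker_mirror` is a hypothetical helper, and the demo writes to a scratch directory instead of /etc/docker.

```shell
#!/bin/sh
# Sketch: write a Docker daemon.json with a registry mirror.
# write_docker_mirror is a hypothetical helper. The file is overwritten,
# so merge by hand if daemon.json already holds other settings.
write_docker_mirror() {
    dest_dir=$1
    mirror=$2
    mkdir -p "${dest_dir}"
    cat > "${dest_dir}/daemon.json" <<EOF
{
  "registry-mirrors": ["${mirror}"]
}
EOF
}

# Demo in a scratch directory; on a real host the target would be /etc/docker.
demo_dir=$(mktemp -d)
write_docker_mirror "${demo_dir}" "http://192.168.64.44:5000"
cat "${demo_dir}/daemon.json"
```

After changing the real /etc/docker/daemon.json, Docker has to be restarted (for example with `sudo systemctl restart docker`) before the mirror takes effect.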

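cat >> /etc/docker/daemon.json <

2. Move the K3s install script and K3s binary to the appropriate directory and make them executable

sudo chmod a+x /root/k3s /root/k3s-install.sh

sudo cp /root/k3s /usr/local/bin/

3. Install K3s

INSTALL_K3S_SKIP_DOWNLOAD=true INSTALL_K3S_EXEC='--docker' /root/k3s-install.sh

Postscript

Deploying images manually suits small installations with few nodes. A private image registry suits larger clusters with many nodes. The docker registry in this article was set up in the simplest possible way, docker run -d -p 5000:5000 --restart=always --name registry registry:2. If your registry is set up differently, you may need to adjust some registry-related parameters.

References

K3s offline installation docs:

https://docs.rancher.cn/docs/k3s/installation/airgap/_index/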

=========================================================

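k3s is a simplified, lightweight k8s from rancher®. This post records how I installed k3s and some of the pitfalls I ran into.

As the name suggests, k3s has less in it than k8s; see its official site k3s.io for details. For local experiments, refer to the official offline installation tutorial.

First, go to the releases page on GitHub and download the main executable k3s, the offline image package k3s-airgap-images-amd64.tar, and the install script.

I used version v1.18.6+k3s1, which was released on July 16, 2020.

Add execute permission to the executable and the script

wget https://get.k3s.io -O install-k3s.sh

chmod +x install-k3s.sh

[root@localhost bin]# cat install-k3s.sh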

#!/bin/sh

set -e

set -o noglob

# Usage:

# curl ... | ENV_VAR=... sh -

# or

# ENV_VAR=... ./install.sh

#

# Example:

# Installing a server without traefik:

# curl ... | INSTALL_K3S_EXEC="--disable=traefik" sh -

# Installing an agent to point at a server:

# curl ... | K3S_TOKEN=xxx K3S_URL=https://server-url:6443 sh -

#

# Environment variables:

# - K3S_*

# Environment variables which begin with K3S_ will be preserved for the

# systemd service to use. Setting K3S_URL without explicitly setting

# a systemd exec command will default the command to "agent", and we

# enforce that K3S_TOKEN or K3S_CLUSTER_SECRET is also set.

#

# - INSTALL_K3S_SKIP_DOWNLOAD

# If set to true will not download k3s hash or binary.

#

# - INSTALL_K3S_FORCE_RESTART

# If set to true will always restart the K3s service

#

# - INSTALL_K3S_SYMLINK

# If set to 'skip' will not create symlinks, 'force' will overwrite,

# default will symlink if command does not exist in path.

#

# - INSTALL_K3S_SKIP_ENABLE

# If set to true will not enable or start k3s service.

#

# - INSTALL_K3S_SKIP_START

# If set to true will not start k3s service.

#

# - INSTALL_K3S_VERSION

# Version of k3s to download from github. Will attempt to download from the

# stable channel if not specified.

#

# - INSTALL_K3S_COMMIT

# Commit of k3s to download from temporary cloud storage.

# * (for developer & QA use)

#

# - INSTALL_K3S_BIN_DIR

# Directory to install k3s binary, links, and uninstall script to, or use

# /usr/local/bin as the default

#

# - INSTALL_K3S_BIN_DIR_READ_ONLY

# If set to true will not write files to INSTALL_K3S_BIN_DIR, forces

# setting INSTALL_K3S_SKIP_DOWNLOAD=true

#

# - INSTALL_K3S_SYSTEMD_DIR

# Directory to install systemd service and environment files to, or use

# /etc/systemd/system as the default

#

# - INSTALL_K3S_EXEC or script arguments

# Command with flags to use for launching k3s in the systemd service, if

# the command is not specified will default to "agent" if K3S_URL is set

# or "server" if not. The final systemd command resolves to a combination

# of EXEC and script args ($@).

#

# The following commands result in the same behavior:

# curl ... | INSTALL_K3S_EXEC="--disable=traefik" sh -s -

# curl ... | INSTALL_K3S_EXEC="server --disable=traefik" sh -s -

# curl ... | INSTALL_K3S_EXEC="server" sh -s - --disable=traefik

# curl ... | sh -s - server --disable=traefik

# curl ... | sh -s - --disable=traefik

#

# - INSTALL_K3S_NAME

# Name of systemd service to create, will default from the k3s exec command

# if not specified. If specified the name will be prefixed with 'k3s-'.

#

# - INSTALL_K3S_TYPE

# Type of systemd service to create, will default from the k3s exec command

# if not specified.

#

# - INSTALL_K3S_SELINUX_WARN

# If set to true will continue if k3s-selinux policy is not found.

#

# - INSTALL_K3S_SKIP_SELINUX_RPM

# If set to true will skip automatic installation of the k3s RPM.

#

# - INSTALL_K3S_CHANNEL_URL

# Channel URL for fetching k3s download URL.

# Defaults to 'https://update.k3s.io/v1-release/channels'.

#

# - INSTALL_K3S_CHANNEL

# Channel to use for fetching k3s download URL.

# Defaults to 'stable'.

GITHUB_URL=https://github.com/k3s-io/k3s/releases

STORAGE_URL=https://storage.googleapis.com/k3s-ci-builds

DOWNLOADER=

# --- helper functions for logs ---

info()

{

echo '[INFO] ' "$@"

}

warn()

{

echo '[WARN] ' "$@" >&2

}

fatal()

{

echo '[ERROR] ' "$@" >&2

exit 1

}

# --- fatal if no systemd or openrc ---

verify_system() {

if [ -x /sbin/openrc-run ]; then

HAS_OPENRC=true

return

fi

if [ -x /bin/systemctl ] || type systemctl > /dev/null 2>&1; then

HAS_SYSTEMD=true

return

fi

fatal 'Can not find systemd or openrc to use as a process supervisor for k3s'

}

# --- add quotes to command arguments ---

quote() {

for arg in "$@"; do

printf '%s\n' "$arg" | sed "s/'/'\\\\''/g;1s/^/'/;\$s/\$/'/"

done

}

# --- add indentation and trailing slash to quoted args ---

quote_indent() {

printf ' \\\n'

for arg in "$@"; do

printf '\t%s \\\n' "$(quote "$arg")"

done

}

# --- escape most punctuation characters, except quotes, forward slash, and space ---

escape() {

printf '%s' "$@" | sed -e 's/\([][!#$%&()*;<=>?\_`{|}]\)/\\\1/g;'

}

# --- escape double quotes ---

escape_dq() {

printf '%s' "$@" | sed -e 's/"/\\"/g'

}

# --- ensures $K3S_URL is empty or begins with https://, exiting fatally otherwise ---

verify_k3s_url() {

case "${K3S_URL}" in

"")

;;

https://*)

;;

*)

fatal "Only https:// URLs are supported for K3S_URL (have ${K3S_URL})"

;;

esac

}

# --- define needed environment variables ---

setup_env() {

# --- use command args if passed or create default ---

case "$1" in

# --- if we only have flags discover if command should be server or agent ---

(-*|"")

if [ -z "${K3S_URL}" ]; then

CMD_K3S=server

else

if [ -z "${K3S_TOKEN}" ] && [ -z "${K3S_TOKEN_FILE}" ] && [ -z "${K3S_CLUSTER_SECRET}" ]; then

fatal "Defaulted k3s exec command to 'agent' because K3S_URL is defined, but K3S_TOKEN, K3S_TOKEN_FILE or K3S_CLUSTER_SECRET is not defined."

fi

CMD_K3S=agent

fi

;;

# --- command is provided ---

(*)

CMD_K3S=$1

shift

;;

esac

verify_k3s_url

CMD_K3S_EXEC="${CMD_K3S}$(quote_indent "$@")"

# --- use systemd name if defined or create default ---

if [ -n "${INSTALL_K3S_NAME}" ]; then

SYSTEM_NAME=k3s-${INSTALL_K3S_NAME}

else

if [ "${CMD_K3S}" = server ]; then

SYSTEM_NAME=k3s

else

SYSTEM_NAME=k3s-${CMD_K3S}

fi

fi

# --- check for invalid characters in system name ---

valid_chars=$(printf '%s' "${SYSTEM_NAME}" | sed -e 's/[][!#$%&()*;<=>?\_`{|}/[:space:]]/^/g;' )

if [ "${SYSTEM_NAME}" != "${valid_chars}" ]; then

invalid_chars=$(printf '%s' "${valid_chars}" | sed -e 's/[^^]/ /g')

fatal "Invalid characters for system name:

${SYSTEM_NAME}

${invalid_chars}"

fi

# --- use sudo if we are not already root ---

SUDO=sudo

if [ $(id -u) -eq 0 ]; then

SUDO=

fi

# --- use systemd type if defined or create default ---

if [ -n "${INSTALL_K3S_TYPE}" ]; then

SYSTEMD_TYPE=${INSTALL_K3S_TYPE}

else

if [ "${CMD_K3S}" = server ]; then

SYSTEMD_TYPE=notify

else

SYSTEMD_TYPE=exec

fi

fi

# --- use binary install directory if defined or create default ---

if [ -n "${INSTALL_K3S_BIN_DIR}" ]; then

BIN_DIR=${INSTALL_K3S_BIN_DIR}

else

# --- use /usr/local/bin if root can write to it, otherwise use /opt/bin if it exists

BIN_DIR=/usr/local/bin

if ! $SUDO sh -c "touch ${BIN_DIR}/k3s-ro-test && rm -rf ${BIN_DIR}/k3s-ro-test"; then

if [ -d /opt/bin ]; then

BIN_DIR=/opt/bin

fi

fi

fi

# --- use systemd directory if defined or create default ---

if [ -n "${INSTALL_K3S_SYSTEMD_DIR}" ]; then

SYSTEMD_DIR="${INSTALL_K3S_SYSTEMD_DIR}"

else

SYSTEMD_DIR=/etc/systemd/system

fi

# --- set related files from system name ---

SERVICE_K3S=${SYSTEM_NAME}.service

UNINSTALL_K3S_SH=${UNINSTALL_K3S_SH:-${BIN_DIR}/${SYSTEM_NAME}-uninstall.sh}

KILLALL_K3S_SH=${KILLALL_K3S_SH:-${BIN_DIR}/k3s-killall.sh}

# --- use service or environment location depending on systemd/openrc ---

if [ "${HAS_SYSTEMD}" = true ]; then

FILE_K3S_SERVICE=${SYSTEMD_DIR}/${SERVICE_K3S}

FILE_K3S_ENV=${SYSTEMD_DIR}/${SERVICE_K3S}.env

elif [ "${HAS_OPENRC}" = true ]; then

$SUDO mkdir -p /etc/rancher/k3s

FILE_K3S_SERVICE=/etc/init.d/${SYSTEM_NAME}

FILE_K3S_ENV=/etc/rancher/k3s/${SYSTEM_NAME}.env

fi

# --- get hash of config & exec for currently installed k3s ---

PRE_INSTALL_HASHES=$(get_installed_hashes)

# --- if bin directory is read only skip download ---

if [ "${INSTALL_K3S_BIN_DIR_READ_ONLY}" = true ]; then

INSTALL_K3S_SKIP_DOWNLOAD=true

fi

# --- setup channel values

INSTALL_K3S_CHANNEL_URL=${INSTALL_K3S_CHANNEL_URL:-'https://update.k3s.io/v1-release/channels'}

INSTALL_K3S_CHANNEL=${INSTALL_K3S_CHANNEL:-'stable'}

}

# --- check if skip download environment variable set ---

can_skip_download() {

if [ "${INSTALL_K3S_SKIP_DOWNLOAD}" != true ]; then

return 1

fi

}

# --- verify an executable k3s binary is installed ---

verify_k3s_is_executable() {

if [ ! -x ${BIN_DIR}/k3s ]; then

fatal "Executable k3s binary not found at ${BIN_DIR}/k3s"

fi

}

# --- set arch and suffix, fatal if architecture not supported ---

setup_verify_arch() {

if [ -z "$ARCH" ]; then

ARCH=$(uname -m)

fi

case $ARCH in

amd64)

ARCH=amd64

SUFFIX=

;;

x86_64)

ARCH=amd64

SUFFIX=

;;

arm64)

ARCH=arm64

SUFFIX=-${ARCH}

;;

aarch64)

ARCH=arm64

SUFFIX=-${ARCH}

;;

arm*)

ARCH=arm

SUFFIX=-${ARCH}hf

;;

*)

fatal "Unsupported architecture $ARCH"

esac

}

# --- verify existence of network downloader executable ---

verify_downloader() {

# Return failure if it doesn't exist or is no executable

[ -x "$(command -v $1)" ] || return 1

# Set verified executable as our downloader program and return success

DOWNLOADER=$1

return 0

}

# --- create temporary directory and cleanup when done ---

setup_tmp() {

TMP_DIR=$(mktemp -d -t k3s-install.XXXXXXXXXX)

TMP_HASH=${TMP_DIR}/k3s.hash

TMP_BIN=${TMP_DIR}/k3s.bin

cleanup() {

code=$?

set +e

trap - EXIT

rm -rf ${TMP_DIR}

exit $code

}

trap cleanup INT EXIT

}

# --- use desired k3s version if defined or find version from channel ---

get_release_version() {

if [ -n "${INSTALL_K3S_COMMIT}" ]; then

VERSION_K3S="commit ${INSTALL_K3S_COMMIT}"

elif [ -n "${INSTALL_K3S_VERSION}" ]; then

VERSION_K3S=${INSTALL_K3S_VERSION}

else

info "Finding release for channel ${INSTALL_K3S_CHANNEL}"

version_url="${INSTALL_K3S_CHANNEL_URL}/${INSTALL_K3S_CHANNEL}"

case $DOWNLOADER in

curl)

VERSION_K3S=$(curl -w '%{url_effective}' -L -s -S ${version_url} -o /dev/null | sed -e 's|.*/||')

;;

wget)

VERSION_K3S=$(wget -SqO /dev/null ${version_url} 2>&1 | grep -i Location | sed -e 's|.*/||')

;;

*)

fatal "Incorrect downloader executable '$DOWNLOADER'"

;;

esac

fi

info "Using ${VERSION_K3S} as release"

}

# --- download from github url ---

download() {

[ $# -eq 2 ] || fatal 'download needs exactly 2 arguments'

case $DOWNLOADER in

curl)

curl -o $1 -sfL $2

;;

wget)

wget -qO $1 $2

;;

*)

fatal "Incorrect executable '$DOWNLOADER'"

;;

esac

# Abort if download command failed

[ $? -eq 0 ] || fatal 'Download failed'

}

# --- download hash from github url ---

download_hash() {

if [ -n "${INSTALL_K3S_COMMIT}" ]; then

HASH_URL=${STORAGE_URL}/k3s${SUFFIX}-${INSTALL_K3S_COMMIT}.sha256sum

else

HASH_URL=${GITHUB_URL}/download/${VERSION_K3S}/sha256sum-${ARCH}.txt

fi

info "Downloading hash ${HASH_URL}"

download ${TMP_HASH} ${HASH_URL}

HASH_EXPECTED=$(grep " k3s${SUFFIX}$" ${TMP_HASH})

HASH_EXPECTED=${HASH_EXPECTED%%[[:blank:]]*}

}

# --- check hash against installed version ---

installed_hash_matches() {

if [ -x ${BIN_DIR}/k3s ]; then

HASH_INSTALLED=$(sha256sum ${BIN_DIR}/k3s)

HASH_INSTALLED=${HASH_INSTALLED%%[[:blank:]]*}

if [ "${HASH_EXPECTED}" = "${HASH_INSTALLED}" ]; then

return

fi

fi

return 1

}

# --- download binary from github url ---

download_binary() {

if [ -n "${INSTALL_K3S_COMMIT}" ]; then

BIN_URL=${STORAGE_URL}/k3s${SUFFIX}-${INSTALL_K3S_COMMIT}

else

BIN_URL=${GITHUB_URL}/download/${VERSION_K3S}/k3s${SUFFIX}

fi

info "Downloading binary ${BIN_URL}"

download ${TMP_BIN} ${BIN_URL}

}

# --- verify downloaded binary hash ---

verify_binary() {

info "Verifying binary download"

HASH_BIN=$(sha256sum ${TMP_BIN})

HASH_BIN=${HASH_BIN%%[[:blank:]]*}

if [ "${HASH_EXPECTED}" != "${HASH_BIN}" ]; then

fatal "Download sha256 does not match ${HASH_EXPECTED}, got ${HASH_BIN}"

fi

}

# --- setup permissions and move binary to system directory ---

setup_binary() {

chmod 755 ${TMP_BIN}

info "Installing k3s to ${BIN_DIR}/k3s"

$SUDO chown root:root ${TMP_BIN}

$SUDO mv -f ${TMP_BIN} ${BIN_DIR}/k3s

}

# --- setup selinux policy ---

setup_selinux() {

case ${INSTALL_K3S_CHANNEL} in

*testing)

rpm_channel=testing

;;

*latest)

rpm_channel=latest

;;

*)

rpm_channel=stable

;;

esac

rpm_site="rpm.rancher.io"

if [ "${rpm_channel}" = "testing" ]; then

rpm_site="rpm-testing.rancher.io"

fi

[ -r /etc/os-release ] && . /etc/os-release

if [ "${ID_LIKE%%[ ]*}" = "suse" ]; then

rpm_target=sle

rpm_site_infix=microos

package_installer=zypper

elif [ "${VERSION_ID%%.*}" = "7" ]; then

rpm_target=el7

rpm_site_infix=centos/7

package_installer=yum

else

rpm_target=el8

rpm_site_infix=centos/8

package_installer=yum

fi

if [ "${package_installer}" = "yum" ] && [ -x /usr/bin/dnf ]; then

package_installer=dnf

fi

policy_hint="please install:

${package_installer} install -y container-selinux

${package_installer} install -y https://${rpm_site}/k3s/${rpm_channel}/common/${rpm_site_infix}/noarch/k3s-selinux-0.4-1.${rpm_target}.noarch.rpm

"

if [ "$INSTALL_K3S_SKIP_SELINUX_RPM" = true ] || can_skip_download || [ ! -d /usr/share/selinux ]; then

info "Skipping installation of SELinux RPM"

elif [ "${ID_LIKE:-}" != coreos ] && [ "${VARIANT_ID:-}" != coreos ]; then

install_selinux_rpm ${rpm_site} ${rpm_channel} ${rpm_target} ${rpm_site_infix}

fi

policy_error=fatal

if [ "$INSTALL_K3S_SELINUX_WARN" = true ] || [ "${ID_LIKE:-}" = coreos ] || [ "${VARIANT_ID:-}" = coreos ]; then

policy_error=warn

fi

if ! $SUDO chcon -u system_u -r object_r -t container_runtime_exec_t ${BIN_DIR}/k3s >/dev/null 2>&1; then

if $SUDO grep '^\s*SELINUX=enforcing' /etc/selinux/config >/dev/null 2>&1; then

$policy_error "Failed to apply container_runtime_exec_t to ${BIN_DIR}/k3s, ${policy_hint}"

fi

elif [ ! -f /usr/share/selinux/packages/k3s.pp ]; then

if [ -x /usr/sbin/transactional-update ]; then

warn "Please reboot your machine to activate the changes and avoid data loss."

else

$policy_error "Failed to find the k3s-selinux policy, ${policy_hint}"

fi

fi

}

install_selinux_rpm() {

if [ -r /etc/redhat-release ] || [ -r /etc/centos-release ] || [ -r /etc/oracle-release ] || [ "${ID_LIKE%%[ ]*}" = "suse" ]; then

repodir=/etc/yum.repos.d

if [ -d /etc/zypp/repos.d ]; then

repodir=/etc/zypp/repos.d

fi

set +o noglob

$SUDO rm -f ${repodir}/rancher-k3s-common*.repo

set -o noglob

if [ -r /etc/redhat-release ] && [ "${3}" = "el7" ]; then

$SUDO yum install -y yum-utils

$SUDO yum-config-manager --enable rhel-7-server-extras-rpms

fi

$SUDO tee ${repodir}/rancher-k3s-common.repo >/dev/null << EOF

[rancher-k3s-common-${2}]

name=Rancher K3s Common (${2})

baseurl=https://${1}/k3s/${2}/common/${4}/noarch

enabled=1

gpgcheck=1

repo_gpgcheck=0

gpgkey=https://${1}/public.key

EOF

case ${3} in

sle)

rpm_installer="zypper --gpg-auto-import-keys"

if [ "${TRANSACTIONAL_UPDATE=false}" != "true" ] && [ -x /usr/sbin/transactional-update ]; then

rpm_installer="transactional-update --no-selfupdate -d run ${rpm_installer}"

: "${INSTALL_K3S_SKIP_START:=true}"

fi

;;

*)

rpm_installer="yum"

;;

esac

if [ "${rpm_installer}" = "yum" ] && [ -x /usr/bin/dnf ]; then

rpm_installer=dnf

fi

# shellcheck disable=SC2086

$SUDO ${rpm_installer} install -y "k3s-selinux"

fi

return

}

# --- download and verify k3s ---

download_and_verify() {

if can_skip_download; then

info 'Skipping k3s download and verify'

verify_k3s_is_executable

return

fi

setup_verify_arch

verify_downloader curl || verify_downloader wget || fatal 'Can not find curl or wget for downloading files'

setup_tmp

get_release_version

download_hash

if installed_hash_matches; then

info 'Skipping binary downloaded, installed k3s matches hash'

return

fi

download_binary

verify_binary

setup_binary

}

# --- add additional utility links ---

create_symlinks() {

[ "${INSTALL_K3S_BIN_DIR_READ_ONLY}" = true ] && return

[ "${INSTALL_K3S_SYMLINK}" = skip ] && return

for cmd in kubectl crictl ctr; do

if [ ! -e ${BIN_DIR}/${cmd} ] || [ "${INSTALL_K3S_SYMLINK}" = force ]; then

which_cmd=$(command -v ${cmd} 2>/dev/null || true)

if [ -z "${which_cmd}" ] || [ "${INSTALL_K3S_SYMLINK}" = force ]; then

info "Creating ${BIN_DIR}/${cmd} symlink to k3s"

$SUDO ln -sf k3s ${BIN_DIR}/${cmd}

else

info "Skipping ${BIN_DIR}/${cmd} symlink to k3s, command exists in PATH at ${which_cmd}"

fi

else

info "Skipping ${BIN_DIR}/${cmd} symlink to k3s, already exists"

fi

done

}

# --- create killall script ---

create_killall() {

[ "${INSTALL_K3S_BIN_DIR_READ_ONLY}" = true ] && return

info "Creating killall script ${KILLALL_K3S_SH}"

$SUDO tee ${KILLALL_K3S_SH} >/dev/null << \EOF

#!/bin/sh

[ $(id -u) -eq 0 ] || exec sudo $0 $@

for bin in /var/lib/rancher/k3s/data/**/bin/; do

[ -d $bin ] && export PATH=$PATH:$bin:$bin/aux

done

set -x

for service in /etc/systemd/system/k3s*.service; do

[ -s $service ] && systemctl stop $(basename $service)

done

for service in /etc/init.d/k3s*; do

[ -x $service ] && $service stop

done

pschildren() {

ps -e -o ppid= -o pid= | \

sed -e 's/^\s*//g; s/\s\s*/\t/g;' | \

grep -w "^$1" | \

cut -f2

}

pstree() {

for pid in $@; do

echo $pid

for child in $(pschildren $pid); do

pstree $child

done

done

}

killtree() {

kill -9 $(

{ set +x; } 2>/dev/null;

pstree $@;

set -x;

) 2>/dev/null

}

getshims() {

ps -e -o pid= -o args= | sed -e 's/^ *//; s/\s\s*/\t/;' | grep -w 'k3s/data/[^/]*/bin/containerd-shim' | cut -f1

}

killtree $({ set +x; } 2>/dev/null; getshims; set -x)

do_unmount_and_remove() {

set +x

while read -r _ path _; do

case "$path" in $1*) echo "$path" ;; esac

done < /proc/self/mounts | sort -r | xargs -r -t -n 1 sh -c 'umount "$0" && rm -rf "$0"'

set -x

}

do_unmount_and_remove '/run/k3s'

do_unmount_and_remove '/var/lib/rancher/k3s'

do_unmount_and_remove '/var/lib/kubelet/pods'

do_unmount_and_remove '/var/lib/kubelet/plugins'

do_unmount_and_remove '/run/netns/cni-'

# Remove CNI namespaces

ip netns show 2>/dev/null | grep cni- | xargs -r -t -n 1 ip netns delete

# Delete network interface(s) that match 'master cni0'

ip link show 2>/dev/null | grep 'master cni0' | while read ignore iface ignore; do

iface=${iface%%@*}

[ -z "$iface" ] || ip link delete $iface

done

ip link delete cni0

ip link delete flannel.1

ip link delete flannel-v6.1

rm -rf /var/lib/cni/

iptables-save | grep -v KUBE- | grep -v CNI- | iptables-restore

EOF

$SUDO chmod 755 ${KILLALL_K3S_SH}

$SUDO chown root:root ${KILLALL_K3S_SH}

}

# --- create uninstall script ---

create_uninstall() {

[ "${INSTALL_K3S_BIN_DIR_READ_ONLY}" = true ] && return

info "Creating uninstall script ${UNINSTALL_K3S_SH}"

$SUDO tee ${UNINSTALL_K3S_SH} >/dev/null << EOF

#!/bin/sh

set -x

[ \$(id -u) -eq 0 ] || exec sudo \$0 \$@

${KILLALL_K3S_SH}

if command -v systemctl; then

systemctl disable ${SYSTEM_NAME}

systemctl reset-failed ${SYSTEM_NAME}

systemctl daemon-reload

fi

if command -v rc-update; then

rc-update delete ${SYSTEM_NAME} default

fi

rm -f ${FILE_K3S_SERVICE}

rm -f ${FILE_K3S_ENV}

remove_uninstall() {

rm -f ${UNINSTALL_K3S_SH}

}

trap remove_uninstall EXIT

if (ls ${SYSTEMD_DIR}/k3s*.service || ls /etc/init.d/k3s*) >/dev/null 2>&1; then

set +x; echo 'Additional k3s services installed, skipping uninstall of k3s'; set -x

exit

fi

for cmd in kubectl crictl ctr; do

if [ -L ${BIN_DIR}/\$cmd ]; then

rm -f ${BIN_DIR}/\$cmd

fi

done

rm -rf /etc/rancher/k3s

rm -rf /run/k3s

rm -rf /run/flannel

rm -rf /var/lib/rancher/k3s

rm -rf /var/lib/kubelet

rm -f ${BIN_DIR}/k3s

rm -f ${KILLALL_K3S_SH}

if type yum >/dev/null 2>&1; then

yum remove -y k3s-selinux

rm -f /etc/yum.repos.d/rancher-k3s-common*.repo

elif type zypper >/dev/null 2>&1; then

uninstall_cmd="zypper remove -y k3s-selinux"

if [ "\${TRANSACTIONAL_UPDATE=false}" != "true" ] && [ -x /usr/sbin/transactional-update ]; then

uninstall_cmd="transactional-update --no-selfupdate -d run \$uninstall_cmd"

fi

\$uninstall_cmd

rm -f /etc/zypp/repos.d/rancher-k3s-common*.repo

fi

EOF

$SUDO chmod 755 ${UNINSTALL_K3S_SH}

$SUDO chown root:root ${UNINSTALL_K3S_SH}

}

# --- disable current service if loaded --

systemd_disable() {

$SUDO systemctl disable ${SYSTEM_NAME} >/dev/null 2>&1 || true

$SUDO rm -f /etc/systemd/system/${SERVICE_K3S} || true

$SUDO rm -f /etc/systemd/system/${SERVICE_K3S}.env || true

}

# --- capture current env and create file containing k3s_ variables ---

create_env_file() {

info "env: Creating environment file ${FILE_K3S_ENV}"

$SUDO touch ${FILE_K3S_ENV}

$SUDO chmod 0600 ${FILE_K3S_ENV}

sh -c export | while read x v; do echo $v; done | grep -E '^(K3S|CONTAINERD)_' | $SUDO tee ${FILE_K3S_ENV} >/dev/null

sh -c export | while read x v; do echo $v; done | grep -Ei '^(NO|HTTP|HTTPS)_PROXY' | $SUDO tee -a ${FILE_K3S_ENV} >/dev/null

}

# --- write systemd service file ---

create_systemd_service_file() {

info "systemd: Creating service file ${FILE_K3S_SERVICE}"

$SUDO tee ${FILE_K3S_SERVICE} >/dev/null << EOF

[Unit]

Description=Lightweight Kubernetes

Documentation=https://k3s.io

Wants=network-online.target

After=network-online.target

[Install]

WantedBy=multi-user.target

[Service]

Type=${SYSTEMD_TYPE}

EnvironmentFile=-/etc/default/%N

EnvironmentFile=-/etc/sysconfig/%N

EnvironmentFile=-${FILE_K3S_ENV}

KillMode=process

Delegate=yes

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=1048576

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

TimeoutStartSec=0

Restart=always

RestartSec=5s

ExecStartPre=/bin/sh -xc '! /usr/bin/systemctl is-enabled --quiet nm-cloud-setup.service'

ExecStartPre=-/sbin/modprobe br_netfilter

ExecStartPre=-/sbin/modprobe overlay

ExecStart=${BIN_DIR}/k3s \\

${CMD_K3S_EXEC}

EOF

}

# --- write openrc service file ---

create_openrc_service_file() {

LOG_FILE=/var/log/${SYSTEM_NAME}.log

info "openrc: Creating service file ${FILE_K3S_SERVICE}"

$SUDO tee ${FILE_K3S_SERVICE} >/dev/null << EOF

#!/sbin/openrc-run

depend() {

after network-online

want cgroups

}

start_pre() {

rm -f /tmp/k3s.*

}

supervisor=supervise-daemon

name=${SYSTEM_NAME}

command="${BIN_DIR}/k3s"

command_args="$(escape_dq "${CMD_K3S_EXEC}")

>>${LOG_FILE} 2>&1"

output_log=${LOG_FILE}

error_log=${LOG_FILE}

pidfile="/var/run/${SYSTEM_NAME}.pid"

respawn_delay=5

respawn_max=0

set -o allexport

if [ -f /etc/environment ]; then source /etc/environment; fi

if [ -f ${FILE_K3S_ENV} ]; then source ${FILE_K3S_ENV}; fi

set +o allexport

EOF

$SUDO chmod 0755 ${FILE_K3S_SERVICE}

$SUDO tee /etc/logrotate.d/${SYSTEM_NAME} >/dev/null << EOF

${LOG_FILE} {

missingok

notifempty

copytruncate

}

EOF

}

# --- write systemd or openrc service file ---

create_service_file() {

[ "${HAS_SYSTEMD}" = true ] && create_systemd_service_file

[ "${HAS_OPENRC}" = true ] && create_openrc_service_file

return 0

}

# --- get hashes of the current k3s bin and service files

get_installed_hashes() {

$SUDO sha256sum ${BIN_DIR}/k3s ${FILE_K3S_SERVICE} ${FILE_K3S_ENV} 2>&1 || true

}

# --- enable and start systemd service ---

systemd_enable() {

info "systemd: Enabling ${SYSTEM_NAME} unit"

$SUDO systemctl enable ${FILE_K3S_SERVICE} >/dev/null

$SUDO systemctl daemon-reload >/dev/null

}

systemd_start() {

info "systemd: Starting ${SYSTEM_NAME}"

$SUDO systemctl restart ${SYSTEM_NAME}

}

# --- enable and start openrc service ---

openrc_enable() {

info "openrc: Enabling ${SYSTEM_NAME} service for default runlevel"

$SUDO rc-update add ${SYSTEM_NAME} default >/dev/null

}

openrc_start() {

info "openrc: Starting ${SYSTEM_NAME}"

$SUDO ${FILE_K3S_SERVICE} restart

}

# --- startup systemd or openrc service ---

service_enable_and_start() {

if [ -f "/proc/cgroups" ] && [ "$(grep memory /proc/cgroups | while read -r n n n enabled; do echo $enabled; done)" -eq 0 ];

then

info 'Failed to find memory cgroup, you may need to add "cgroup_memory=1 cgroup_enable=memory" to your linux cmdline (/boot/cmdline.txt on a Raspberry Pi)'

fi

[ "${INSTALL_K3S_SKIP_ENABLE}" = true ] && return

[ "${HAS_SYSTEMD}" = true ] && systemd_enable

[ "${HAS_OPENRC}" = true ] && openrc_enable

[ "${INSTALL_K3S_SKIP_START}" = true ] && return

POST_INSTALL_HASHES=$(get_installed_hashes)

if [ "${PRE_INSTALL_HASHES}" = "${POST_INSTALL_HASHES}" ] && [ "${INSTALL_K3S_FORCE_RESTART}" != true ]; then

info 'No change detected so skipping service start'

return

fi

[ "${HAS_SYSTEMD}" = true ] && systemd_start

[ "${HAS_OPENRC}" = true ] && openrc_start

return 0

}

# --- re-evaluate args to include env command ---

eval set -- $(escape "${INSTALL_K3S_EXEC}") $(quote "$@")

# --- run the install process --

{

verify_system

setup_env "$@"

download_and_verify

setup_selinux

create_symlinks

create_killall

create_uninstall

systemd_disable

create_env_file

create_service_file

service_enable_and_start

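}

- Download the new air-gap images (tar file) from the release page of the K3s version you are upgrading to. Place the tar file in the /var/lib/rancher/k3s/agent/images/ directory on each node. Delete the old tar file.

- Copy the new K3s binary to /usr/local/bin on each node, replacing the old one. Copy the install script from https://get.k3s.io (it may have changed since the last release). Run the script again with the same environment variables you used before.

- Restart the K3s service (if the installer did not restart it automatically).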
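The upgrade steps above can be laid out as a command plan. This is only a dry-run sketch that echoes commands: `upgrade_plan` is a hypothetical helper, and it assumes the new files sit in the current directory, are named as in this post, and that the service is the default systemd unit k3s.

```shell
#!/bin/sh
# Sketch: print the commands for an offline upgrade, following the steps above.
# upgrade_plan is a hypothetical helper; it only echoes commands (dry run).
# Assumptions: new files are in the current directory and named as in this
# post; an old tarball with a different file name must be deleted by hand.
upgrade_plan() {
    arch=$1
    images_dir=/var/lib/rancher/k3s/agent/images
    echo "cp ./k3s-airgap-images-${arch}.tar ${images_dir}/"
    echo "cp ./k3s /usr/local/bin/k3s"
    echo "INSTALL_K3S_SKIP_DOWNLOAD=true ./install-k3s.sh"
    echo "systemctl restart k3s"
}

upgrade_plan amd64
```

When running the install script for real, pass the same INSTALL_K3S_EXEC and K3S_* variables that were used for the original installation.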

Copy the packages

mkdir -p /var/lib/rancher/k3s/agent/images/

cp ./k3s-airgap-images-$ARCH.tar /var/lib/rancher/k3s/agent/images/

cp ./k3s-$ARCH /usr/local/bin/k3s

Install

Specify environment variables at install time

# INSTALL_K3S_SKIP_DOWNLOAD=true skips the download during installation

export INSTALL_K3S_SKIP_DOWNLOAD=true

export INSTALL_K3S_EXEC="--write-kubeconfig ~/.kube/config --write-kubeconfig-mode 666 --service-node-port-range=1024-65535 --bind-address=0.0.0.0 --https-listen-port=6443 --cluster-cidr=10.42.0.0/16 --service-cidr=10.43.0.0/16 --cluster-dns=10.43.0.254 --cluster-domain=jettech.com --node-name=172.16.10.5"

Explaining them one by one:

- INSTALL_K3S_SKIP_DOWNLOAD=true: do not download the k3s executable

- INSTALL_K3S_EXEC="(omitted)": extra arguments used when starting the k3s service

- --docker: use docker instead of the default containerd

- --write-kubeconfig-mode 666: make the config file readable and writable by non-owners, so that kubectl works without root or sudo

- --write-kubeconfig ~/.kube/config: write the config file to the location k8s uses by default instead of the k3s default /etc/rancher/k3s/k3s.yaml. The latter causes istio and helm to need extra configuration or fail to run.

[root@localhost bin]# ./install-k3s.sh

[INFO] Skipping k3s download and verify

[INFO] Skipping installation of SELinux RPM

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Skipping /usr/local/bin/ctr symlink to k3s, command exists in PATH at /usr/bin/ctr

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s.service

[INFO] systemd: Enabling k3s unit

Created symlink from /etc/systemd/system/multi-user.target.wants/k3s.service to /etc/systemd/system/k3s.service.

[INFO] systemd: Starting k3s

[root@localhost bin]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

172.16.10.5 Ready control-plane,master 4m40s v1.22.5+k3s1

[root@localhost bin]# k3s kubectl get all --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system pod/local-path-provisioner-64ffb68fd-6zsk6 1/1 Running 0 4m47s

kube-system pod/coredns-85cb69466-jpmm9 1/1 Running 0 4m47s

kube-system pod/metrics-server-9cf544f65-x5tpd 1/1 Running 0 4m47s

kube-system pod/helm-install-traefik-crd--1-7bnhh 0/1 Completed 0 4m47s

kube-system pod/helm-install-traefik--1-lndn4 0/1 Completed 1 4m47s

kube-system pod/svclb-traefik-ntbbp 2/2 Running 0 4m23s

kube-system pod/traefik-786ff64748-5dxqz 1/1 Running 0 4m23s

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 5m2s

kube-system service/kube-dns ClusterIP 10.43.0.254 <none> 53/UDP,53/TCP,9153/TCP 4m59s

kube-system service/metrics-server ClusterIP 10.43.0.223 <none> 443/TCP 4m58s

kube-system service/traefik LoadBalancer 10.43.92.185 172.16.10.5 80:63443/TCP,443:26753/TCP 4m24s

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-system daemonset.apps/svclb-traefik 1 1 1 1 1 <none> 4m24s

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE

kube-system deployment.apps/local-path-provisioner 1/1 1 1 4m59s

kube-system deployment.apps/coredns 1/1 1 1 4m59s

kube-system deployment.apps/metrics-server 1/1 1 1 4m58s

kube-system deployment.apps/traefik 1/1 1 1 4m24s

NAMESPACE NAME DESIRED CURRENT READY AGE

kube-system replicaset.apps/local-path-provisioner-64ffb68fd 1 1 1 4m48s

kube-system replicaset.apps/coredns-85cb69466 1 1 1 4m48s

kube-system replicaset.apps/metrics-server-9cf544f65 1 1 1 4m48s

kube-system replicaset.apps/traefik-786ff64748 1 1 1 4m24s

NAMESPACE NAME COMPLETIONS DURATION AGE

kube-system job.batch/helm-install-traefik-crd 1/1 22s 4m57s

kube-system job.batch/helm-install-traefik 1/1 25s 4m57s

Adding a node


[root@jettoloader k3s]# ls
clean.sh  install-k3s.sh  k3s  k3s-airgap-images-amd64.tar  registries.yaml
[root@jettoloader k3s]# mkdir -p /var/lib/rancher/k3s/agent/images
[root@jettoloader k3s]# cp k3s /usr/local/bin/k3s
[root@jettoloader k3s]# cp k3s-airgap-images-amd64.tar /var/lib/rancher/k3s/agent/images

On the node: K3S_TOKEN is the value stored on the master/server node; read it there with cat /var/lib/rancher/k3s/server/node-token

export INSTALL_K3S_SKIP_DOWNLOAD=true
export K3S_URL=https://172.16.10.5:6443
export K3S_TOKEN=K104e0ef4bfa1da8d10bc558b0480132a2450b47b7dc39fdf9580aa2ac5242c8434::server:b7a800ef5cb1f94223b2ca41f3f93654
export INSTALL_K3S_EXEC="--node-name=172.16.10.15"
./install-k3s.sh

Check

[root@localhost k3s]# kubectl get nodes
NAME           STATUS   ROLES                  AGE     VERSION
172.16.10.5    Ready    control-plane,master   24h     v1.22.5+k3s1
172.16.10.15   Ready    <none>                 6m14s   v1.22.5+k3s1

[root@localhost test]# kubectl create -f busybox.yaml -f nginx.yaml
deployment.apps/busybox created
service/nginx created
deployment.apps/nginx created

[root@localhost test]# k3s kubectl get pods,svc -o wide -A
NAMESPACE     NAME                                         READY   STATUS         RESTARTS   AGE     IP            NODE           NOMINATED NODE   READINESS GATES
kube-system   pod/local-path-provisioner-64ffb68fd-dzqj8   1/1     Running        0          24h     10.42.0.4     172.16.10.5    <none>           <none>
kube-system   pod/helm-install-traefik-crd--1-g4wrf        0/1     Completed      0          24h     10.42.0.3     172.16.10.5    <none>           <none>
kube-system   pod/helm-install-traefik--1-mbvj6            0/1     Completed      1          24h     10.42.0.2     172.16.10.5    <none>           <none>
kube-system   pod/svclb-traefik-zlhkx                      2/2     Running        0          24h     10.42.0.7     172.16.10.5    <none>           <none>
kube-system   pod/coredns-85cb69466-24fsj                  1/1     Running        0          24h     10.42.0.5     172.16.10.5    <none>           <none>
kube-system   pod/traefik-786ff64748-pshkv                 1/1     Running        0          24h     10.42.0.8     172.16.10.5    <none>           <none>
kube-system   pod/kuboard-59c465b769-2lphq                 1/1     Running        0          3h19m   10.42.0.14    172.16.10.5    <none>           <none>
kube-system   pod/metrics-server-6fd7ccb946-z2z5v          1/1     Running        0          3h19m   172.16.10.5   172.16.10.5    <none>           <none>
kube-system   pod/svclb-traefik-sbbrf                      2/2     Running        0          7m18s   10.42.1.2     172.16.10.15   <none>           <none>
default       pod/busybox-df657bd58-bp5pv                  0/1     ErrImagePull   0          3s      10.42.1.3     172.16.10.15   <none>           <none>
default       pod/nginx-56446dcd6d-8llsz                   0/1     ErrImagePull   0          3s      10.42.1.4     172.16.10.15   <none>           <none>

NAMESPACE     NAME                     TYPE           CLUSTER-IP      EXTERNAL-IP                PORT(S)                     AGE     SELECTOR
default       service/kubernetes       ClusterIP      10.43.0.1       <none>                     443/TCP                     24h     <none>
kube-system   service/kube-dns         ClusterIP      10.43.0.254     <none>                     53/UDP,53/TCP,9153/TCP      24h     k8s-app=kube-dns
kube-system   service/kuboard          NodePort       10.43.32.85     <none>                     80:32567/TCP                3h19m   k8s.kuboard.cn/layer=monitor,k8s.kuboard.cn/name=kuboard
kube-system   service/metrics-server   ClusterIP      10.43.93.46     <none>                     443/TCP                     3h19m   k8s-app=metrics-server
kube-system   service/traefik          LoadBalancer   10.43.150.180   172.16.10.15,172.16.10.5   80:5668/TCP,443:10383/TCP   24h     app.kubernetes.io/instance=traefik,app.kubernetes.io/name=traefik
default       service/nginx            NodePort       10.43.197.233   <none>                     80:30001/TCP                3s      name=nginx

The busybox and nginx pods fail with ErrImagePull. To fix this, add the private registry configuration file on the node. As on the server, ca_file is the Harbor root certificate.
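As an illustration of the layout k3s expects in /etc/rancher/k3s/registries.yaml, here is a minimal sketch that renders the file for a single registry from four values. The function name `render_registries` is hypothetical. The sketch assumes one HTTPS endpoint on the registry host and puts `auth` and `tls` under a single `configs` entry for that host.

```shell
#!/bin/sh
# Sketch: render a registries.yaml for one private registry on stdout.
# `render_registries` is a hypothetical helper; host, credentials and the
# CA path are supplied by the caller.
render_registries() {
    host=$1      # registry host name as used in image references
    user=$2      # registry user
    pass=$3      # registry password
    ca_file=$4   # path on this node to the registry's root CA certificate

    # mirrors: where to pull images for this host from.
    # configs: credentials and TLS settings, grouped under one key per host.
    cat <<EOF
mirrors:
  "${host}":
    endpoint:
      - "https://${host}"
configs:
  "${host}":
    auth:
      username: ${user}
      password: ${pass}
    tls:
      ca_file: ${ca_file}
EOF
}
```

A possible invocation is `render_registries harbor.jettech.com admin Harbor12345 /var/lib/rancher/k3s/cacerts.pem > /etc/rancher/k3s/registries.yaml`, run as root on the agent before the service is restarted.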

[root@localhost k3s]# cat /etc/rancher/k3s/registries.yaml
mirrors:
  "192.168.99.41":
    endpoint:
      - "https://192.168.99.41"
  "harbor.jettech.com":
    endpoint:
      - "https://harbor.jettech.com"
configs:
  "harbor.jettech.com":
    auth:
      username: admin
      password: Harbor12345
    tls:
      ca_file: /var/lib/rancher/k3s/cacerts.pem
      #insecure_skip_verify: true

Use either ca_file: /var/lib/rancher/k3s/cacerts.pem or insecure_skip_verify: true, not both.

Restart the service

systemctl restart k3s-agent

Check again

[root@localhost k3s]# k3s kubectl get pods,svc -o wide -A
NAMESPACE     NAME                                         READY   STATUS      RESTARTS   AGE     IP            NODE           NOMINATED NODE   READINESS GATES
kube-system   pod/local-path-provisioner-64ffb68fd-dzqj8   1/1     Running     0          24h     10.42.0.4     172.16.10.5    <none>           <none>
kube-system   pod/helm-install-traefik-crd--1-g4wrf        0/1     Completed   0          24h     10.42.0.3     172.16.10.5    <none>           <none>
kube-system   pod/helm-install-traefik--1-mbvj6            0/1     Completed   1          24h     10.42.0.2     172.16.10.5    <none>           <none>
kube-system   pod/svclb-traefik-zlhkx                      2/2     Running     0          24h     10.42.0.7     172.16.10.5    <none>           <none>
kube-system   pod/coredns-85cb69466-24fsj                  1/1     Running     0          24h     10.42.0.5     172.16.10.5    <none>           <none>
kube-system   pod/traefik-786ff64748-pshkv                 1/1     Running     0          24h     10.42.0.8     172.16.10.5    <none>           <none>
kube-system   pod/kuboard-59c465b769-2lphq                 1/1     Running     0          3h55m   10.42.0.14    172.16.10.5    <none>           <none>
kube-system   pod/metrics-server-6fd7ccb946-z2z5v          1/1     Running     0          3h55m   172.16.10.5   172.16.10.5    <none>           <none>
kube-system   pod/svclb-traefik-sbbrf                      2/2     Running     0          43m     10.42.1.2     172.16.10.15   <none>           <none>
default       pod/nginx-56446dcd6d-lfsq6                   1/1     Running     0          4m30s   10.42.1.9     172.16.10.15   <none>           <none>
default       pod/busybox-df657bd58-nj4f6                  1/1     Running     0          4m30s   10.42.1.10    172.16.10.15   <none>           <none>

NAMESPACE     NAME                     TYPE           CLUSTER-IP      EXTERNAL-IP                PORT(S)                     AGE     SELECTOR
default       service/kubernetes       ClusterIP      10.43.0.1       <none>                     443/TCP                     24h     <none>
kube-system   service/kube-dns         ClusterIP      10.43.0.254     <none>                     53/UDP,53/TCP,9153/TCP      24h     k8s-app=kube-dns
kube-system   service/kuboard          NodePort       10.43.32.85     <none>                     80:32567/TCP                3h55m   k8s.kuboard.cn/layer=monitor,k8s.kuboard.cn/name=kuboard
kube-system   service/metrics-server   ClusterIP      10.43.93.46     <none>                     443/TCP                     3h55m   k8s-app=metrics-server
kube-system   service/traefik          LoadBalancer   10.43.150.180   172.16.10.15,172.16.10.5   80:5668/TCP,443:10383/TCP   24h     app.kubernetes.io/instance=traefik,app.kubernetes.io/name=traefik
default       service/nginx            NodePort       10.43.218.127   <none>                     80:30001/TCP                4m30s   name=nginx
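Comparing two pod listings by eye works on a cluster this small. A scripted check could look like the sketch below, which reads the table printed by plain `kubectl get pods -A` (without `-o wide` or `svc`) and prints every pod whose STATUS is neither Running nor Completed. The function name `unhealthy_pods` is hypothetical, and the column positions are an assumption based on that default output.

```shell
#!/bin/sh
# Sketch: filter `kubectl get pods -A` output down to pods that need attention.
# `unhealthy_pods` is a hypothetical helper; it reads the table on stdin.
unhealthy_pods() {
    # With -A the columns are: NAMESPACE NAME READY STATUS RESTARTS AGE.
    # Skip the header row and any line too short to be a pod row.
    awk 'NF >= 4 && $1 != "NAMESPACE" && $4 != "Running" && $4 != "Completed" {
        print $1 "/" $2 ": " $4
    }'
}
```

It could be used as `k3s kubectl get pods -A | unhealthy_pods`. Empty output means every pod is Running or Completed.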

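Example: dashboard

1 What is Kuboard?

Kuboard is a free graphical management tool for Kubernetes that aims to help users get microservices running on Kubernetes quickly. Kubernetes container orchestration is drawing more and more attention, yet the barrier to using it remains high, mainly in the following respects:

- Cluster installation is complex and error-prone

- Compared with plain containerization, Kubernetes introduces many new concepts and is hard to learn

- YAML files have to be written by hand and are hard to manage across multiple environments

- Good hands-on examples to refer to are scarce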

2 Kuboard features

- No need to write YAML

- Fully graphical environment

- Multi-environment management

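Further reading

Many front-end management tools exist for k8s/k3s clusters, the best known being the K8s dashboard. Kuboard, a newer dashboard tool from China, is powerful and takes a fresh approach to everyday use, so it is well worth trying. The panel hides some of the low-level operational logic, which gives both administrators and ordinary users a good experience.

Installing Kuboard v2 | Kuboard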

[root@localhost ui]# cat kuboard.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kuboard
  namespace: kube-system
  annotations:
    k8s.kuboard.cn/displayName: kuboard
    k8s.kuboard.cn/ingress: "true"
    k8s.kuboard.cn/service: NodePort
    k8s.kuboard.cn/workload: kuboard
  labels:
    k8s.kuboard.cn/layer: monitor