Installing a Kubernetes cluster (v1.23.5) with kubeadm

1. Cluster plan

| No. | IP | Hostname | Role |
|---|---|---|---|
| 1 | 192.168.123.21 | vm21 | Master, Node |
| 2 | 192.168.123.22 | vm22 | Node |
| 3 | 192.168.123.23 | vm23 | Node |

2. Software versions

| No. | Software | Version |
|---|---|---|
| 1 | CentOS | 7.9.2009, kernel upgraded to 5.17.1-1.el7.elrepo.x86_64 |
| 2 | Docker-ce | v20.10.9 |
| 3 | kubeadm | v1.23.5 |
| 4 | kubectl | v1.23.5 |
| 5 | kubelet | v1.23.5 |

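3. Initialize the virtual machines

3.1 Install the virtual machines

Perform the following steps on every virtual machine.

3.2 Upgrade the kernel

# Run on every virtual machine
# Update the installed packages
yum update -y
# Import the ELRepo public key
rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
# Install the ELRepo yum repository
rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
# List the kernel packages that are available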

[root@vm01 ~]# yum --disablerepo="*" --enablerepo="elrepo-kernel" list available

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* elrepo-kernel: ftp.yz.yamagata-u.ac.jp

Available Packages

elrepo-release.noarch 7.0-5.el7.elrepo elrepo-kernel

kernel-lt.x86_64 5.4.188-1.el7.elrepo elrepo-kernel

kernel-lt-devel.x86_64 5.4.188-1.el7.elrepo elrepo-kernel

kernel-lt-doc.noarch 5.4.188-1.el7.elrepo elrepo-kernel

kernel-lt-headers.x86_64 5.4.188-1.el7.elrepo elrepo-kernel

kernel-lt-tools.x86_64 5.4.188-1.el7.elrepo elrepo-kernel

kernel-lt-tools-libs.x86_64 5.4.188-1.el7.elrepo elrepo-kernel

kernel-lt-tools-libs-devel.x86_64 5.4.188-1.el7.elrepo elrepo-kernel

kernel-ml-devel.x86_64 5.17.1-1.el7.elrepo elrepo-kernel

kernel-ml-doc.noarch 5.17.1-1.el7.elrepo elrepo-kernel

kernel-ml-headers.x86_64 5.17.1-1.el7.elrepo elrepo-kernel

kernel-ml-tools.x86_64 5.17.1-1.el7.elrepo elrepo-kernel

kernel-ml-tools-libs.x86_64 5.17.1-1.el7.elrepo elrepo-kernel

kernel-ml-tools-libs-devel.x86_64 5.17.1-1.el7.elrepo elrepo-kernel

perf.x86_64 5.17.1-1.el7.elrepo elrepo-kernel

python-perf.x86_64 5.17.1-1.el7.elrepo elrepo-kernel

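# Install the latest mainline kernel
yum --enablerepo=elrepo-kernel install -y kernel-ml
# List all kernels available on the system
sudo awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg
# Set the default kernel; 0 is the index of the new kernel in the list printed above
grub2-set-default 0
# Regenerate the grub configuration file
grub2-mkconfig -o /boot/grub2/grub.cfg
# Reboot
reboot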


# Remove old kernels (optional)
# List all installed kernel packages
rpm -qa | grep kernel
# Remove the old kernel RPMs; the exact package names depend on the output of the previous command
yum remove kernel-3.10.0-514.el7.x86_64 \
kernel-tools-libs-3.10.0-862.11.6.el7.x86_64 \
kernel-tools-3.10.0-862.11.6.el7.x86_64 \
kernel-3.10.0-862.11.6.el7.x86_64

4. System settings

Run the following on every node.

4.1 Disable the firewall

systemctl stop firewalld && systemctl disable firewalld

4.2 Disable swap

swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
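Because the sed expression rewrites /etc/fstab in place, it can be worth trying it on a scratch copy first. The following is a minimal sketch, assuming GNU sed; the function name `comment_swap_entries` and the two sample fstab lines are illustrative and not part of the original steps.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-style file.
comment_swap_entries() {
  local file="$1"
  # Only touch lines that are not already commented and that contain " swap ".
  sed -i '/^[^#].* swap / s/^\(.*\)$/#\1/' "$file"
}

# Try it on a scratch file instead of the real /etc/fstab.
scratch="$(mktemp)"
printf '%s\n' \
  '/dev/mapper/centos-root / xfs defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' > "$scratch"
comment_swap_entries "$scratch"
cat "$scratch"
```

Skipping lines that already start with `#` keeps the helper safe to run more than once.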

4.3 Disable SELinux

setenforce 0 && sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

4.4 Synchronize the time

yum -y install ntpdate
ntpdate time.windows.com
hwclock --systohc

4.5 Kernel parameters

# Pass bridged IPv4 traffic to the iptables chains
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
# Apply the settings
sysctl --system

4.6 Load the kernel modules

tee /etc/modules-load.d/ipvs.conf <<'EOF'
ip_vs
ip_vs_lc
ip_vs_wlc
ip_vs_rr
ip_vs_wrr
ip_vs_lblc
ip_vs_lblcr
ip_vs_dh
ip_vs_sh
ip_vs_fo
ip_vs_nq
ip_vs_sed
ip_vs_ftp
nf_conntrack
ip_tables
ip_set
ipt_rpfilter
ipt_REJECT
ipip
EOF
systemctl enable --now systemd-modules-load.service
lsmod |grep -e ip_vs -e nf_conntrack

4.7 Configure hosts

cat >> /etc/hosts << EOF
192.168.123.21 vm21
192.168.123.22 vm22
192.168.123.23 vm23
EOF
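Appending with `>>` adds duplicate lines if the step is run twice. A minimal sketch of an idempotent variant follows; the helper name `add_host_entry` is an assumption for illustration, and the demo writes to a temporary file rather than /etc/hosts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "ip hostname" to a hosts file only if the
# hostname is not already present, so the step can be re-run safely.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # -w matches the hostname as a whole word; -q suppresses output.
  if ! grep -qw -- "$name" "$file"; then
    echo "$ip $name" >> "$file"
  fi
}

hosts_file="$(mktemp)"   # stand-in for /etc/hosts
add_host_entry "$hosts_file" 192.168.123.21 vm21
add_host_entry "$hosts_file" 192.168.123.22 vm22
add_host_entry "$hosts_file" 192.168.123.23 vm23
# Repeating an entry adds nothing.
add_host_entry "$hosts_file" 192.168.123.21 vm21
cat "$hosts_file"
```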

4.8 Configure log storage

Since CentOS 7 the init system is systemd, so two logging systems run side by side: rsyslogd (the default) and systemd-journald. systemd-journald is the better choice, so we make it the default and keep a single log store.

mkdir /var/log/journal
mkdir /etc/systemd/journald.conf.d
cat >/etc/systemd/journald.conf.d/99-prophet.conf <

4.9 Set the time zone

timedatectl set-timezone Asia/Shanghai

4.10 Install common packages

yum install -y net-tools.x86_64 vim wget ipvsadm ipset sysstat conntrack libseccomp
yum install -y yum-utils device-mapper-persistent-data lvm2 wget git

5. Install docker-ce

5.1 Add the package repository

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

5.2 List the available Docker versions

yum list docker-ce --showduplicates | sort -r

5.3 Install Docker

yum -y install docker-ce-20.10.10-3.el7

5.4 Configure the registry mirror

# Use the Aliyun Docker registry mirror
mkdir -p /etc/docker/
cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": ["https://ung2thfc.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF

5.5 Start Docker

systemctl enable docker && systemctl start docker && systemctl status docker && docker info
docker --version

6. Install kubelet

6.1 Configure the Kubernetes repository

cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

6.2 Install the Kubernetes components

yum install -y kubelet-1.23.5 kubeadm-1.23.5 kubectl-1.23.5

6.3 Enable kubelet at boot

systemctl enable kubelet

6.4 List the images Kubernetes needs

kubeadm config images list --kubernetes-version=v1.23.5
################################################
# Images required by this version; they can be downloaded in advance
################################################
k8s.gcr.io/kube-apiserver:v1.23.5
k8s.gcr.io/kube-controller-manager:v1.23.5
k8s.gcr.io/kube-scheduler:v1.23.5
k8s.gcr.io/kube-proxy:v1.23.5
k8s.gcr.io/pause:3.6
k8s.gcr.io/etcd:3.5.1-0
k8s.gcr.io/coredns/coredns:v1.8.6
################################################

6.5 Download the images

# The upstream registry cannot be reached from here, so pull from the Aliyun mirror as a workaround
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.23.5
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.23.5
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.23.5
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.23.5
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.1-0
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.6
# Images required by Calico, downloaded at the same time
docker pull calico/kube-controllers:v3.22.1
docker pull calico/cni:v3.22.1
docker pull calico/pod2daemon-flexvol:v3.22.1
docker pull calico/node:v3.22.1
# Retag the Aliyun images as k8s.gcr.io
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.23.5 k8s.gcr.io/kube-apiserver:v1.23.5
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.23.5 k8s.gcr.io/kube-controller-manager:v1.23.5
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.23.5 k8s.gcr.io/kube-scheduler:v1.23.5
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.23.5 k8s.gcr.io/kube-proxy:v1.23.5
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6 k8s.gcr.io/pause:3.6
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.1-0 k8s.gcr.io/etcd:3.5.1-0
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.6 k8s.gcr.io/coredns/coredns:v1.8.6
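The pull and retag steps for the control-plane images follow one pattern, so they can be generated from a list. The sketch below only prints the commands (a dry run); the helper name `mirror_cmds` and the variable names are assumptions for illustration. The one special case is CoreDNS, whose upstream path has an extra `coredns/` level.

```shell
#!/usr/bin/env bash
MIRROR="registry.cn-hangzhou.aliyuncs.com/google_containers"
TARGET="k8s.gcr.io"
IMAGES="kube-apiserver:v1.23.5 kube-controller-manager:v1.23.5 kube-scheduler:v1.23.5 kube-proxy:v1.23.5 pause:3.6 etcd:3.5.1-0 coredns:v1.8.6"

# Hypothetical helper: print the pull and tag commands for one image.
mirror_cmds() {
  local image="$1"
  local dest="$TARGET/$image"
  # CoreDNS sits one path level deeper in the upstream registry.
  case "$image" in
    coredns:*) dest="$TARGET/coredns/$image" ;;
  esac
  echo "docker pull $MIRROR/$image"
  echo "docker tag $MIRROR/$image $dest"
}

# Dry run: print the commands; pipe the output to `sh` to execute them.
for image in $IMAGES; do
  mirror_cmds "$image"
done
```

Printing instead of executing makes it easy to review the generated commands before running them on each node.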

7. Install Kubernetes

7.1 Initialize the master

# --apiserver-advertise-address: address of the master node
# --image-repository: registry the control-plane images are pulled from
# --ignore-preflight-errors: preflight checks to ignore (swap or CPU count sometimes fall short)
# --service-cidr: address range for Services
# --pod-network-cidr: address range for Pods
kubeadm init \
  --apiserver-advertise-address=192.168.123.21 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.23.5 \
  --ignore-preflight-errors=Swap,NumCPU \
  --token-ttl 0 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16
#######################################################################
# The output contains two sets of follow-up commands
#######################################################################
# 1. Run on the master node
#    mkdir -p $HOME/.kube
#    cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
#    chown $(id -u):$(id -g) $HOME/.kube/config
# 2. Run on each worker node (kubeadm init prints the real token and hash)
#    kubeadm join 192.168.123.21:6443 --token <token> --discovery-token-ca-cert-hash sha256:<hash>

7.2 Copy the kubeconfig

# Following the prompt above, run on the master node
mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config

7.3 Join the worker nodes

# Run on vm22 and vm23, using the join command printed by kubeadm init on the master
kubeadm join 192.168.123.21:6443 --token <token> --discovery-token-ca-cert-hash sha256:<hash>
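Worker nodes are attached with `kubeadm join`, using the bootstrap token and CA certificate hash that `kubeadm init` prints on the master; if that output is lost, `kubeadm token create --print-join-command` on the master prints a fresh join command. The sketch below assembles the command from its parts and rejects tokens that do not have the bootstrap-token shape. The helper name `build_join_cmd` and the sample token and hash are placeholders, not values from this cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a kubeadm join command from its three parts.
build_join_cmd() {
  local endpoint="$1" token="$2" ca_hash="$3"
  # Bootstrap tokens look like <6 chars>.<16 chars>, lowercase letters and digits.
  if ! printf '%s' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
    echo "invalid bootstrap token format" >&2
    return 1
  fi
  echo "kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash sha256:$ca_hash"
}

# Placeholder values for illustration only.
build_join_cmd 192.168.123.21:6443 abcdef.0123456789abcdef 0123abcd
```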

7.4 Check the cluster state

[root@vm21 ~]# kubectl get nodes
NAME   STATUS     ROLES                  AGE    VERSION
vm21   NotReady   control-plane,master   113s   v1.23.5
vm22   NotReady   <none>                 10s    v1.23.5
vm23   NotReady   <none>                 10s    v1.23.5

7.5 Switch kube-proxy to ipvs mode

# Run the command below and set mode to "ipvs" in the editor
kubectl edit configmap kube-proxy -n kube-system
# Delete the existing kube-proxy pods; the cluster recreates them automatically
for i in `kubectl get pods -n kube-system | grep kube-proxy | awk '{print $1}'`; do kubectl delete pod $i -n kube-system ;done
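The interactive edit can also be scripted. This is a minimal sketch, assuming the ConfigMap still carries the default empty setting `mode: ""`; the filter name `set_ipvs_mode` is an illustration, and the live-cluster pipeline is shown only as a comment because it needs a running cluster.

```shell
#!/usr/bin/env bash
# Hypothetical filter: rewrite an empty kube-proxy mode to ipvs,
# preserving the line's indentation and leaving other keys alone.
set_ipvs_mode() {
  sed 's/^\([[:space:]]*\)mode: ""$/\1mode: "ipvs"/'
}

# Demonstration on a fragment shaped like the kube-proxy configuration.
printf '%s\n' \
  '    detectLocalMode: ""' \
  '    mode: ""' \
  '    nodePortAddresses: null' | set_ipvs_mode

# Against a live cluster (not run here):
#   kubectl get configmap kube-proxy -n kube-system -o yaml \
#     | set_ipvs_mode | kubectl apply -f -
#   kubectl rollout restart daemonset kube-proxy -n kube-system
```

The pattern is anchored on leading whitespace followed directly by `mode:`, so keys such as `detectLocalMode` are not touched.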

# Verify the ipvs mode
[root@vm21 ~]# kubectl get pods -A |grep kube-proxy
NAMESPACE     NAME               READY   STATUS    RESTARTS   AGE
kube-system   kube-proxy-8bb7r   1/1     Running   0          8s
kube-system   kube-proxy-tfp98   1/1     Running   0          8s
kube-system   kube-proxy-zh22j   1/1     Running   0          7s
# Check a pod's startup log; the line "Using ipvs Proxier" means the change took effect
[root@vm21 ~]# kubectl logs kube-proxy-8bb7r -n kube-system

I0410 11:40:41.201062 1 node.go:163] Successfully retrieved node IP: 192.168.123.23

I0410 11:40:41.201183 1 server_others.go:138] "Detected node IP" address="192.168.123.23"

I0410 11:40:41.218878 1 server_others.go:269] "Using ipvs Proxier"

I0410 11:40:41.218917 1 server_others.go:271] "Creating dualStackProxier for ipvs"

I0410 11:40:41.218929 1 server_others.go:491] "Detect-local-mode set to ClusterCIDR, but no IPv6 cluster CIDR defined, , defaulting to no-op detect-local for IPv6"

I0410 11:40:41.219507 1 proxier.go:438] "IPVS scheduler not specified, use rr by default"

I0410 11:40:41.220166 1 proxier.go:438] "IPVS scheduler not specified, use rr by default"

I0410 11:40:41.220324 1 ipset.go:113] "Ipset name truncated" ipSetName="KUBE-6-LOAD-BALANCER-SOURCE-CIDR" truncatedName="KUBE-6-LOAD-BALANCER-SOURCE-CID"

I0410 11:40:41.220438 1 ipset.go:113] "Ipset name truncated" ipSetName="KUBE-6-NODE-PORT-LOCAL-SCTP-HASH" truncatedName="KUBE-6-NODE-PORT-LOCAL-SCTP-HAS"

I0410 11:40:41.220744 1 server.go:656] "Version info" version="v1.23.5"

I0410 11:40:41.223812 1 conntrack.go:52] "Setting nf_conntrack_max" nf_conntrack_max=131072

I0410 11:40:41.224189 1 config.go:226] "Starting endpoint slice config controller"

I0410 11:40:41.224212 1 shared_informer.go:240] Waiting for caches to sync for endpoint slice config

I0410 11:40:41.224303 1 config.go:317] "Starting service config controller"

I0410 11:40:41.224306 1 shared_informer.go:240] Waiting for caches to sync for service config

I0410 11:40:41.325841 1 shared_informer.go:247] Caches are synced for endpoint slice config

I0410 11:40:41.325926 1 shared_informer.go:247] Caches are synced for service config

8. Install the network plugin

8.1 Download the manifest

mkdir -p /opt/yaml
cd /opt/yaml
curl https://docs.projectcalico.org/manifests/calico.yaml -O

8.2 Apply the manifest

kubectl apply -f calico.yaml
# Watch the Calico pods with: watch kubectl get pods -n kube-system
# After a short wait they are all running.

8.3 Check the cluster state

[root@vm21 ~]# kubectl get nodes
NAME   STATUS   ROLES                  AGE     VERSION
vm21   Ready    control-plane,master   6m19s   v1.23.5
vm22   Ready    <none>                 5m31s   v1.23.5
vm23   Ready    <none>                 5m23s   v1.23.5
# All nodes now report the Ready status
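Instead of watching by eye, the node list can be filtered for anything that is not yet Ready. A minimal sketch, with `not_ready_nodes` as a hypothetical helper name and a hand-written sample standing in for `kubectl get nodes --no-headers`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Sample input for illustration (vm22 is deliberately NotReady here).
sample='vm21 Ready control-plane,master 6m19s v1.23.5
vm22 NotReady <none> 5m31s v1.23.5
vm23 Ready <none> 5m23s v1.23.5'
printf '%s\n' "$sample" | not_ready_nodes

# Against a live cluster (not run here), poll until the list is empty:
#   until [ -z "$(kubectl get nodes --no-headers | not_ready_nodes)" ]; do sleep 5; done
```

`kubectl wait --for=condition=Ready nodes --all --timeout=300s` is a built-in alternative that blocks until every node is Ready.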

9. Install the dashboard

9.1 Create the YAML file

[root@vm21 ~]# vi dashboard.yaml

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard

---

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
      protocol: TCP
      nodePort: 30000
  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque

---

kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard

---

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
    # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
    # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.5.0
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.7
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

9.2 Apply the YAML file

kubectl apply -f dashboard.yaml

9.3 Check the state of the new pods

[root@vm21 ~]# kubectl get pods,svc -A -n kubernetes-dashboard
NAMESPACE              NAME                                             READY   STATUS    RESTARTS   AGE
kubernetes-dashboard   pod/dashboard-metrics-scraper-799d786dbf-w8vjl   1/1     Running   0          150m
kubernetes-dashboard   pod/kubernetes-dashboard-546cbc58cd-tzzx8        1/1     Running   0          150m

NAMESPACE              NAME                                TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)         AGE
kubernetes-dashboard   service/dashboard-metrics-scraper   ClusterIP   10.99.131.108   <none>        8000/TCP        150m
kubernetes-dashboard   service/kubernetes-dashboard        NodePort    10.111.10.26    <none>        443:31849/TCP   150m

9.4 Open the dashboard

Open https://192.168.123.21:31849 in a browser, substituting the NodePort reported by the previous command.

9.5 Create a token

kubectl create serviceaccount dashboard-admin -n kube-system
kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

9.6 Retrieve the token

[root@vm21 ~]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')
Name:         dashboard-admin-token-6ttrh
Namespace:    kube-system
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: dashboard-admin
              kubernetes.io/service-account.uid: 2f4180ec-ca91-4d7c-8fc2-67a7f0d3a449

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1099 bytes
namespace:  11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IndSM0M2cE0tZndOMlF2UERVZTFTSDJZQXAzVVhINWN2ay1DYWUxdHFfblkifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tNnR0cmgiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMmY0MTgwZWMtY2E5MS00ZDdjLThmYzItNjdhN2YwZDNhNDQ5Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.ann2P88mBQoMBFsQ7givODveM1Uv3BDmEu99sk-mDv648uH-PHKUyAI-zZuanzxrcv8vyemmCBCXztncFHqZpmDuP4U1ju65z4goYotI-bq1cbwc3RUmIlV75T6k7OJjH8gzfINJq6T6LkSv9lDumI-MsrWdbs5mqT34lRmsInZyKqqXIjHjv2ZvgLK67UAcGvgM-o0fkhRTFtkjjgy_wgS9xqD-G6mJ1NORmM6cSEHix5TdemoPNrK0K8ejEiPNWlO1K6PYt5R4jB-uoTgQbYQFiXwGguhmE3wu1FVwpxKRytqb5No_Vuf0Sh5SDgZ-FEbrXMxu8aq_b662ZBTKEw
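To copy only the token rather than the whole description, the `token:` field can be filtered out of the output. A minimal sketch; `extract_token` is a hypothetical helper name and the sample input is shortened for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of the "token:" field from
# `kubectl describe secret` output read on stdin.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}

# Shortened sample input; a real token is a long JWT string.
printf '%s\n' \
  'ca.crt:     1099 bytes' \
  'namespace:  11 bytes' \
  'token:      example.jwt.value' | extract_token

# Against a live cluster (not run here):
#   kubectl describe secrets -n kube-system \
#     $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}') | extract_token
```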

9.7登录dashboard

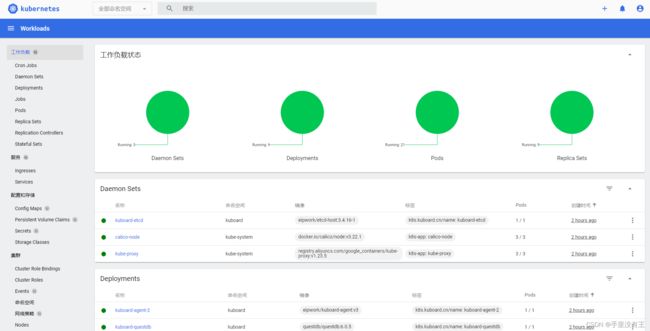

输入上面查找到的token值后,即可看到dashboard的主界面。这是官方的ui界面,个人感觉有些功能还不是很符合国人的习惯。

10.安装metrics-server

10.1查看apiserver启动参数

ps -ef | grep apiserver |grep enable-aggregator-routing #执行上面的命令后,无符合条件的返回内容

10.2修改apiserver启动参数

vi /etc/kubernetes/manifests/kube-apiserver.yaml

apiVersion: v1
kind: Pod
metadata:
  annotations:
    kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 192.168.123.21:6443
  creationTimestamp: null
  labels:
    component: kube-apiserver
    tier: control-plane
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-apiserver
    - --advertise-address=192.168.123.21
    - --allow-privileged=true
    - --authorization-mode=Node,RBAC
    - --client-ca-file=/etc/kubernetes/pki/ca.crt
    - --enable-admission-plugins=NodeRestriction
    - --enable-bootstrap-token-auth=true
    - --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt
    - --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt
    - --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key
    - --enable-aggregator-routing=true # add this line
    - --etcd-servers=https://127.0.0.1:2379
    - --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt
    - --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key
    - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
    - --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt
    - --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key
    - --requestheader-allowed-names=front-proxy-client
    - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
    - --requestheader-extra-headers-prefix=X-Remote-Extra-
    - --requestheader-group-headers=X-Remote-Group
    - --requestheader-username-headers=X-Remote-User
    - --secure-port=6443
    - --service-account-issuer=https://kubernetes.default.svc.cluster.local
    - --service-account-key-file=/etc/kubernetes/pki/sa.pub
    - --service-account-signing-key-file=/etc/kubernetes/pki/sa.key
    - --service-cluster-ip-range=10.96.0.0/12
    - --tls-cert-file=/etc/kubernetes/pki/apiserver.crt
    - --tls-private-key-file=/etc/kubernetes/pki/apiserver.key
    image: registry.aliyuncs.com/google_containers/kube-apiserver:v1.23.5
    imagePullPolicy: IfNotPresent
    livenessProbe:
      failureThreshold: 8
      httpGet:
        host: 192.168.123.21
        path: /livez
        port: 6443
        scheme: HTTPS
      initialDelaySeconds: 10
      periodSeconds: 10
      timeoutSeconds: 15
    name: kube-apiserver
    readinessProbe:
      failureThreshold: 3
      httpGet:
        host: 192.168.123.21
        path: /readyz
        port: 6443
        scheme: HTTPS
      periodSeconds: 1
      timeoutSeconds: 15
    resources:
      requests:
        cpu: 250m
    startupProbe:
      failureThreshold: 24
      httpGet:
        host: 192.168.123.21
        path: /livez
        port: 6443
        scheme: HTTPS
      initialDelaySeconds: 10
      periodSeconds: 10
      timeoutSeconds: 15
    volumeMounts:
    - mountPath: /etc/ssl/certs
      name: ca-certs
      readOnly: true
    - mountPath: /etc/pki
      name: etc-pki
      readOnly: true
    - mountPath: /etc/kubernetes/pki
      name: k8s-certs
      readOnly: true
  hostNetwork: true
  priorityClassName: system-node-critical
  securityContext:
    seccompProfile:
      type: RuntimeDefault
  volumes:
  - hostPath:
      path: /etc/ssl/certs
      type: DirectoryOrCreate
    name: ca-certs
  - hostPath:
      path: /etc/pki
      type: DirectoryOrCreate
    name: etc-pki
  - hostPath:
      path: /etc/kubernetes/pki
      type: DirectoryOrCreate
    name: k8s-certs
status: {}

# Save and exit as usual in vi; after a short wait the new flag takes effect
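The same change can be made without opening an editor. The sketch below, assuming GNU sed, inserts the flag right after the `- kube-apiserver` entry and does nothing if the flag is already present. The helper name `add_aggregator_flag` is an illustration, and the demo runs on a small temporary file rather than the real manifest; on a real node it is safer to edit a copy and then move it back into /etc/kubernetes/manifests.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add --enable-aggregator-routing=true to a
# kube-apiserver manifest unless it is already there.
add_aggregator_flag() {
  local file="$1"
  if grep -q -- '--enable-aggregator-routing=' "$file"; then
    return 0   # flag already present; leave the file untouched
  fi
  # Insert the flag after the "- kube-apiserver" command entry, reusing
  # that line's indentation (\1). The \n in the replacement needs GNU sed.
  sed -i 's/^\([[:space:]]*\)- kube-apiserver$/&\n\1- --enable-aggregator-routing=true/' "$file"
}

# Demonstration on a cut-down manifest fragment.
manifest="$(mktemp)"
printf '%s\n' \
  'spec:' \
  '  containers:' \
  '  - command:' \
  '    - kube-apiserver' \
  '    - --secure-port=6443' > "$manifest"
add_aggregator_flag "$manifest"
add_aggregator_flag "$manifest"   # second call is a no-op
cat "$manifest"
```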

10.3 Verify the apiserver startup flags

[root@vm21 ~]# ps -ef | grep apiserver |grep enable-aggregator-routing
root 49561 49541 12 20:05 ? 00:19:44 kube-apiserver --advertise-address=192.168.123.21 --allow-privileged=true --authorization-mode=Node,RBAC --client-ca-file=/etc/kubernetes/pki/ca.crt --enable-admission-plugins=NodeRestriction --enable-bootstrap-token-auth=true --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key --enable-aggregator-routing=true --etcd-servers=https://127.0.0.1:2379 --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key --requestheader-allowed-names=front-proxy-client --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt --requestheader-extra-headers-prefix=X-Remote-Extra- --requestheader-group-headers=X-Remote-Group --requestheader-username-headers=X-Remote-User --secure-port=6443 --service-account-issuer=https://kubernetes.default.svc.cluster.local --service-account-key-file=/etc/kubernetes/pki/sa.pub --service-account-signing-key-file=/etc/kubernetes/pki/sa.key --service-cluster-ip-range=10.96.0.0/12 --tls-cert-file=/etc/kubernetes/pki/apiserver.crt --tls-private-key-file=/etc/kubernetes/pki/apiserver.key

10.4 Create the YAML file

vi metrics-server.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP # note this line
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls # note this line
        image: bitnami/metrics-server:0.6.1 # note this line
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          allowPrivilegeEscalation: false
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

# Reference manifest: https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.1/components.yaml

10.5 Create metrics-server

kubectl apply -f metrics-server.yaml

10.6 Check the pod state

[root@k8s01 opt]# kubectl get pod -n kube-system | grep metrics-server
metrics-server-5d56fdf754-hpmqn   1/1   Running   0   5m19s

10.7 Check the Endpoints

[root@k8s01 opt]# kubectl describe svc metrics-server -n kube-system
Name:              metrics-server
Namespace:         kube-system
Labels:            k8s-app=metrics-server
Annotations:       <none>
Selector:          k8s-app=metrics-server
Type:              ClusterIP
IP Family Policy:  SingleStack
IP Families:       IPv4
IP:                10.109.155.107
IPs:               10.109.155.107
Port:              https  443/TCP
TargetPort:        https/TCP
Endpoints:         10.244.236.133:4443
Session Affinity:  None
Events:            <none>
# A populated Endpoints field means the container was created successfully

10.8 View resource usage

[root@vm21 ~]# kubectl top nodes
NAME   CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
vm21   739m         18%    1489Mi          80%
vm22   120m         3%     920Mi           49%
vm23   117m         2%     966Mi           52%
[root@vm21 ~]# kubectl top pods -A
NAMESPACE              NAME                                         CPU(cores)   MEMORY(bytes)
kube-system            calico-kube-controllers-56fcbf9d6b-82gdm     7m           26Mi
kube-system            calico-node-4f22w                            44m          161Mi
kube-system            calico-node-8sjkh                            76m          151Mi
kube-system            calico-node-9p62v                            52m          167Mi
kube-system            coredns-6d8c4cb4d-hc58r                      2m           49Mi
kube-system            coredns-6d8c4cb4d-sb8qs                      19m          20Mi
kube-system            etcd-vm21                                    71m          65Mi
kube-system            kube-apiserver-vm21                          199m         332Mi
kube-system            kube-controller-manager-vm21                 48m          48Mi
kube-system            kube-proxy-8bb7r                             10m          29Mi
kube-system            kube-proxy-tfp98                             2m           20Mi
kube-system            kube-proxy-zh22j                             1m           27Mi
kube-system            kube-scheduler-vm21                          31m          18Mi
kube-system            metrics-server-5d56fdf754-27ltc              5m           27Mi
kubernetes-dashboard   dashboard-metrics-scraper-799d786dbf-w8vjl   11m          19Mi
kubernetes-dashboard   kubernetes-dashboard-546cbc58cd-tzzx8        34m          70Mi
kuboard                kuboard-agent-2-79d9f79d6d-dkv8d             12m          24Mi
kuboard                kuboard-agent-57bd54b468-jtsb6               13m          28Mi
kuboard                kuboard-etcd-4tn7p                           14m          19Mi
kuboard                kuboard-questdb-78d4c946f5-dv649             54m          244Mi
kuboard                kuboard-v3-cf8b9b668-r4bc7                   20m          76Mi
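The `kubectl top nodes` output is easy to post-process, for example to flag nodes that are short on memory. A minimal sketch; the helper name `nodes_over_memory` and the 75% threshold are assumptions for illustration, and the sample rows repeat the values shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl top nodes --no-headers` on stdin and
# print nodes whose MEMORY% (5th column) exceeds the given threshold.
nodes_over_memory() {
  local limit="$1"
  awk -v limit="$limit" '{
    pct = $5
    sub(/%/, "", pct)            # strip the percent sign
    if (pct + 0 > limit) print $1, $5
  }'
}

printf '%s\n' \
  'vm21 739m 18% 1489Mi 80%' \
  'vm22 120m 3% 920Mi 49%' \
  'vm23 117m 2% 966Mi 52%' | nodes_over_memory 75

# Against a live cluster (not run here):
#   kubectl top nodes --no-headers | nodes_over_memory 75
```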

11. Install Kuboard

11.1 Create the YAML file

vi kuboard-v3.yaml

---

apiVersion: v1

kind: Namespace

metadata:

name: kuboard

---
apiVersion: v1
kind: ConfigMap
metadata:
  name: kuboard-v3-config
  namespace: kuboard
data:
  # For an explanation of the parameters below, see https://kuboard.cn/install/v3/install-built-in.html
  # [common]
  KUBOARD_SERVER_NODE_PORT: '30080'
  KUBOARD_AGENT_SERVER_UDP_PORT: '30081'
  KUBOARD_AGENT_SERVER_TCP_PORT: '30081'
  KUBOARD_SERVER_LOGRUS_LEVEL: info # error / debug / trace
  # KUBOARD_AGENT_KEY is the key the Agent uses to communicate with Kuboard. Change it to an arbitrary 32-character string of letters and digits. After this key changes, the Kuboard Agent must be deleted and re-imported.
  KUBOARD_AGENT_KEY: 32b7d6572c6255211b4eec9009e4a816
  KUBOARD_AGENT_IMAG: eipwork/kuboard-agent
  KUBOARD_QUESTDB_IMAGE: questdb/questdb:6.0.5
  KUBOARD_DISABLE_AUDIT: 'false' # To disable Kuboard's audit feature, set this value to 'true'; the quotes are required.

  # For an explanation of the parameters below, see https://kuboard.cn/install/v3/install-gitlab.html
  # [gitlab login]
  # KUBOARD_LOGIN_TYPE: "gitlab"
  # KUBOARD_ROOT_USER: "your-user-name-in-gitlab"
  # GITLAB_BASE_URL: "http://gitlab.mycompany.com"
  # GITLAB_APPLICATION_ID: "7c10882aa46810a0402d17c66103894ac5e43d6130b81c17f7f2d8ae182040b5"
  # GITLAB_CLIENT_SECRET: "77c149bd3a4b6870bffa1a1afaf37cba28a1817f4cf518699065f5a8fe958889"

  # For an explanation of the parameters below, see https://kuboard.cn/install/v3/install-github.html
  # [github login]
  # KUBOARD_LOGIN_TYPE: "github"
  # KUBOARD_ROOT_USER: "your-user-name-in-github"
  # GITHUB_CLIENT_ID: "17577d45e4de7dad88e0"
  # GITHUB_CLIENT_SECRET: "ff738553a8c7e9ad39569c8d02c1d85ec19115a7"

  # For an explanation of the parameters below, see https://kuboard.cn/install/v3/install-ldap.html
  # [ldap login]
  # KUBOARD_LOGIN_TYPE: "ldap"
  # KUBOARD_ROOT_USER: "your-user-name-in-ldap"
  # LDAP_HOST: "ldap-ip-address:389"
  # LDAP_BIND_DN: "cn=admin,dc=example,dc=org"
  # LDAP_BIND_PASSWORD: "admin"
  # LDAP_BASE_DN: "dc=example,dc=org"
  # LDAP_FILTER: "(objectClass=posixAccount)"
  # LDAP_ID_ATTRIBUTE: "uid"
  # LDAP_USER_NAME_ATTRIBUTE: "uid"
  # LDAP_EMAIL_ATTRIBUTE: "mail"
  # LDAP_DISPLAY_NAME_ATTRIBUTE: "cn"
  # LDAP_GROUP_SEARCH_BASE_DN: "dc=example,dc=org"
  # LDAP_GROUP_SEARCH_FILTER: "(objectClass=posixGroup)"
  # LDAP_USER_MACHER_USER_ATTRIBUTE: "gidNumber"
  # LDAP_USER_MACHER_GROUP_ATTRIBUTE: "gidNumber"
  # LDAP_GROUP_NAME_ATTRIBUTE: "cn"

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kuboard-boostrap
  namespace: kuboard

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kuboard-boostrap-crb
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: kuboard-boostrap
    namespace: kuboard

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    k8s.kuboard.cn/name: kuboard-etcd
  name: kuboard-etcd
  namespace: kuboard
spec:
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s.kuboard.cn/name: kuboard-etcd
  template:
    metadata:
      labels:
        k8s.kuboard.cn/name: kuboard-etcd
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: node-role.kubernetes.io/master
                    operator: Exists
              - matchExpressions:
                  - key: node-role.kubernetes.io/control-plane
                    operator: Exists
              - matchExpressions:
                  - key: k8s.kuboard.cn/role
                    operator: In
                    values:
                      - etcd
      containers:
        - env:
            - name: HOSTNAME
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: spec.nodeName
            - name: HOSTIP
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: status.hostIP
          image: 'eipwork/etcd-host:3.4.16-1'
          imagePullPolicy: Always
          name: etcd
          ports:
            - containerPort: 2381
              hostPort: 2381
              name: server
              protocol: TCP
            - containerPort: 2382
              hostPort: 2382
              name: peer
              protocol: TCP
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /health
              port: 2381
              scheme: HTTP
            initialDelaySeconds: 30
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          volumeMounts:
            - mountPath: /data
              name: data
      dnsPolicy: ClusterFirst
      hostNetwork: true
      restartPolicy: Always
      serviceAccount: kuboard-boostrap
      serviceAccountName: kuboard-boostrap
      tolerations:
        - key: node-role.kubernetes.io/master
          operator: Exists
        - key: node-role.kubernetes.io/control-plane
          operator: Exists
      volumes:
        - hostPath:
            path: /usr/share/kuboard/etcd
          name: data
  updateStrategy:
    rollingUpdate:
      maxUnavailable: 1
    type: RollingUpdate

---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations: {}
  labels:
    k8s.kuboard.cn/name: kuboard-v3
  name: kuboard-v3
  namespace: kuboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s.kuboard.cn/name: kuboard-v3
  template:
    metadata:
      labels:
        k8s.kuboard.cn/name: kuboard-v3
    spec:
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - preference:
                matchExpressions:
                  - key: node-role.kubernetes.io/master
                    operator: Exists
              weight: 100
            - preference:
                matchExpressions:
                  - key: node-role.kubernetes.io/control-plane
                    operator: Exists
              weight: 100
      containers:
        - env:
            - name: HOSTIP
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: status.hostIP
            - name: HOSTNAME
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: spec.nodeName
          envFrom:
            - configMapRef:
                name: kuboard-v3-config
          image: 'eipwork/kuboard:v3.3.0.8'
          imagePullPolicy: Always
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /kuboard-resources/version.json
              port: 80
              scheme: HTTP
            initialDelaySeconds: 30
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          name: kuboard
          ports:
            - containerPort: 80
              name: web
              protocol: TCP
            - containerPort: 443
              name: https
              protocol: TCP
            - containerPort: 10081
              name: peer
              protocol: TCP
            - containerPort: 10081
              name: peer-u
              protocol: UDP
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /kuboard-resources/version.json
              port: 80
              scheme: HTTP
            initialDelaySeconds: 30
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          resources: {}
          # startupProbe:
          #   failureThreshold: 20
          #   httpGet:
          #     path: /kuboard-resources/version.json
          #     port: 80
          #     scheme: HTTP
          #   initialDelaySeconds: 5
          #   periodSeconds: 10
          #   successThreshold: 1
          #   timeoutSeconds: 1
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      serviceAccount: kuboard-boostrap
      serviceAccountName: kuboard-boostrap
      tolerations:
        - key: node-role.kubernetes.io/master
          operator: Exists

---
apiVersion: v1
kind: Service
metadata:
  annotations: {}
  labels:
    k8s.kuboard.cn/name: kuboard-v3
  name: kuboard-v3
  namespace: kuboard
spec:
  ports:
    - name: web
      nodePort: 30080
      port: 80
      protocol: TCP
      targetPort: 80
    - name: tcp
      nodePort: 30081
      port: 10081
      protocol: TCP
      targetPort: 10081
    - name: udp
      nodePort: 30081
      port: 10081
      protocol: UDP
      targetPort: 10081
  selector:
    k8s.kuboard.cn/name: kuboard-v3
  sessionAffinity: None
  type: NodePort
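
The comment on KUBOARD_AGENT_KEY in the ConfigMap asks for an arbitrary 32-character string of letters and digits in place of the sample value. One possible way to produce such a string is sketched below. The helper name `gen_agent_key` is an assumption made for illustration and is not part of Kuboard; it relies only on `head`, `od` and `tr`.

```shell
# gen_agent_key is a hypothetical helper name, not something Kuboard ships.
# It reads 16 random bytes and prints them as 32 lowercase hex characters.
gen_agent_key() {
  head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Print a line that can be pasted into the ConfigMap's data section.
key="$(gen_agent_key)"
echo "KUBOARD_AGENT_KEY: ${key}"
```

Paste the printed value into kuboard-v3.yaml before applying the file. As the ConfigMap comment notes, changing the key later means the Kuboard Agent has to be deleted and re-imported.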

11.2 Apply the YAML file

kubectl apply -f kuboard-v3.yaml

11.3 Check the container status

[root@vm21 yaml]# kubectl get svc,pods -n kuboard
NAME                 TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)                                        AGE
service/kuboard-v3   NodePort   10.102.141.142   <none>        80:30080/TCP,10081:30081/TCP,10081:30081/UDP   5m8s

NAME                                   READY   STATUS    RESTARTS        AGE
pod/kuboard-agent-2-79d9f79d6d-dkv8d   1/1     Running   1 (3m20s ago)   3m43s
pod/kuboard-agent-57bd54b468-jtsb6     1/1     Running   1 (3m21s ago)   3m43s
pod/kuboard-etcd-4tn7p                 1/1     Running   0               5m8s
pod/kuboard-questdb-78d4c946f5-dv649   1/1     Running   0               3m43s
pod/kuboard-v3-cf8b9b668-r4bc7         1/1     Running   0               5m8s

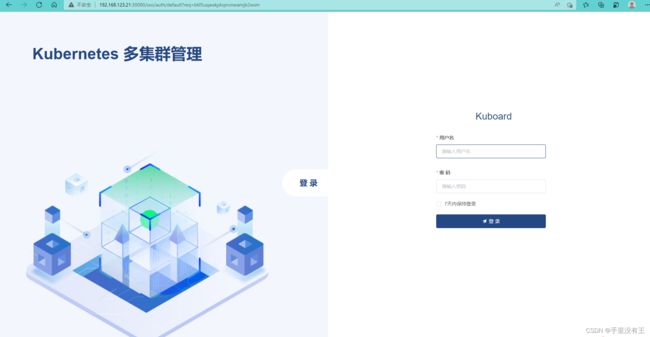
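
Instead of re-running `kubectl get` until every pod shows Running, the wait can be scripted with `kubectl rollout status`. The following is a minimal sketch: the function name `wait_for_kuboard` and the default timeout of 300s are assumptions for illustration, while the resource names (`daemonset/kuboard-etcd`, `deployment/kuboard-v3`) and the `kuboard` namespace come from kuboard-v3.yaml.

```shell
# wait_for_kuboard is a hypothetical helper name.
# It blocks until the DaemonSet and the Deployment defined in kuboard-v3.yaml
# have finished rolling out, or until the timeout (default 300s, an assumed value) expires.
wait_for_kuboard() {
  timeout="${1:-300s}"
  kubectl -n kuboard rollout status daemonset/kuboard-etcd --timeout="$timeout" &&
    kubectl -n kuboard rollout status deployment/kuboard-v3 --timeout="$timeout"
}

# Usage (run on a machine where kubectl is configured, e.g. vm21):
#   wait_for_kuboard 300s
```

The function returns non-zero if either rollout does not complete in time, so it can gate later steps in a script.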

11.4 Access the web UI

Once the containers are ready, open http://192.168.123.21:30080 in a browser.

Enter the username (admin) and password (Kuboard123) to log in.
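
Before opening the browser, it can help to confirm from the command line that the NodePort answers. The sketch below polls a URL with `curl` until it responds successfully. The function name `wait_for_url` and its retry defaults are assumptions for illustration. The path `/kuboard-resources/version.json` is the one used by the probes in kuboard-v3.yaml, and it is assumed here to be reachable through NodePort 30080 as well.

```shell
# wait_for_url is a hypothetical helper name.
# Arguments: URL, number of attempts (default 30), delay in seconds between attempts (default 5).
# Returns 0 as soon as curl gets a successful HTTP response, 1 if all attempts fail.
wait_for_url() {
  url="$1"
  tries="${2:-30}"
  delay="${3:-5}"
  i=0
  while [ "$i" -lt "$tries" ]; do
    # -f: treat HTTP errors as failures; -sS: quiet, but still print errors.
    if curl -fsS -o /dev/null "$url"; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Usage (the IP is the master node from the cluster plan):
#   wait_for_url http://192.168.123.21:30080/kuboard-resources/version.json
```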