OpenCV Miscellany_10

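I. Prerequisites

- Environment setup:

Python version: 3.6.0 (note: with Python >= 3.9, getting the dlib package installed and imported can be very difficult; the author has not yet found a workaround)

Packages: scipy (1.5.4), imutils (0.5.4), argparse (1.4.0), dlib (19.22.0), opencv-python (4.5.2.54)

- If you want to process a pre-recorded video, have the video file ready in advance

- Download shape_predictor_68_face_landmarks.dat. The author has uploaded it to a cloud drive for anyone who needs it: link here, extraction code: demo

- An IDE you are comfortable with (the author recommends PyCharm)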
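Before running the listings, it can help to confirm what is actually installed. The following is a minimal sketch, not part of the original post: the helper names `installed_version` and `environment_report` are hypothetical, and it assumes each package exposes a `__version__` attribute (opencv-python is imported as `cv2`).

```python
import importlib
import sys

# Import names for the packages listed above; opencv-python is imported as cv2.
MODULES = ["scipy", "imutils", "dlib", "cv2"]


def installed_version(module_name):
    """Return the module's __version__, or None if the module cannot be
    imported or exposes no __version__ attribute."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        return None
    return getattr(module, "__version__", None)


def environment_report(module_names=MODULES):
    """Map 'python' and each module name to a version string (or None)."""
    report = {"python": "{}.{}.{}".format(*sys.version_info[:3])}
    for name in module_names:
        report[name] = installed_version(name)
    return report


if __name__ == "__main__":
    for name, version in environment_report().items():
        print(name, version or "not available")
```

If `dlib` shows up as not available, the Python-version note above is the first thing to check.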

II. Background

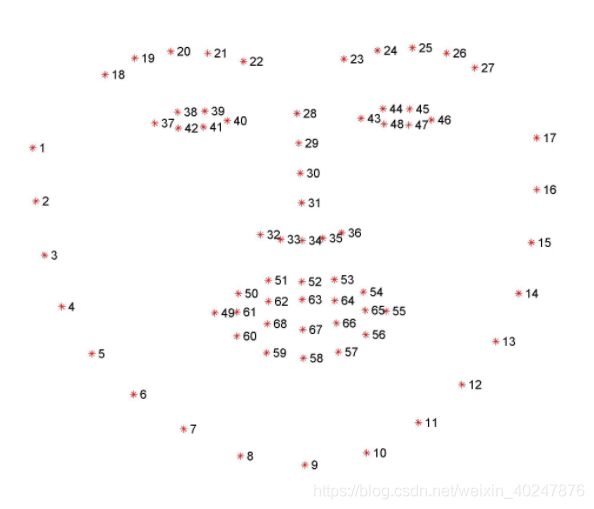

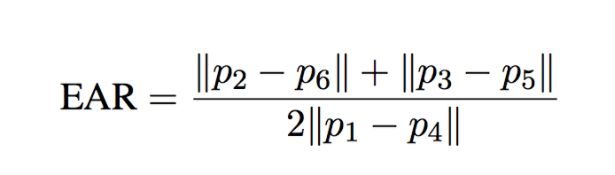

- 68-point facial landmarks:

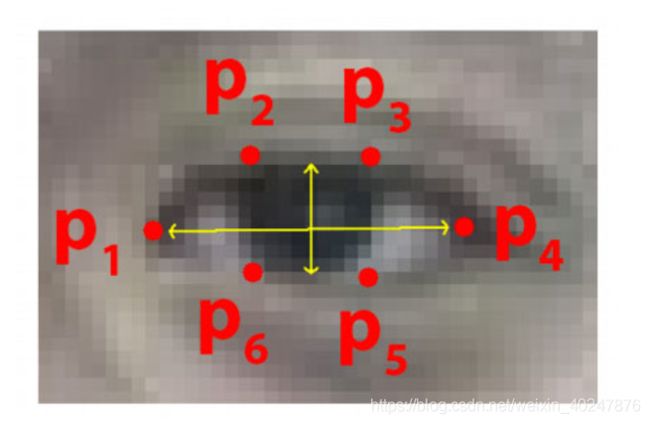

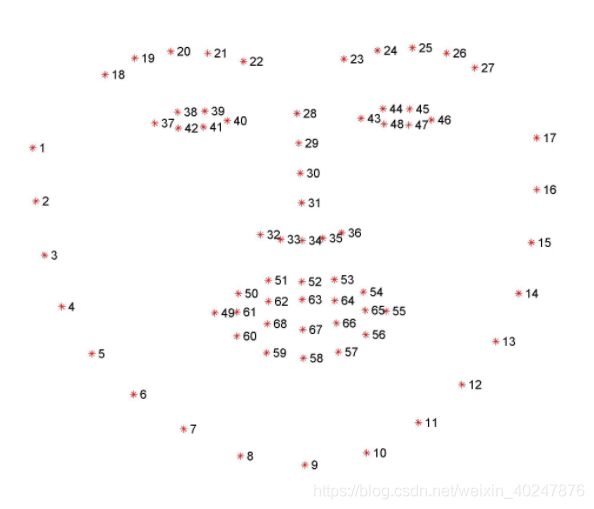

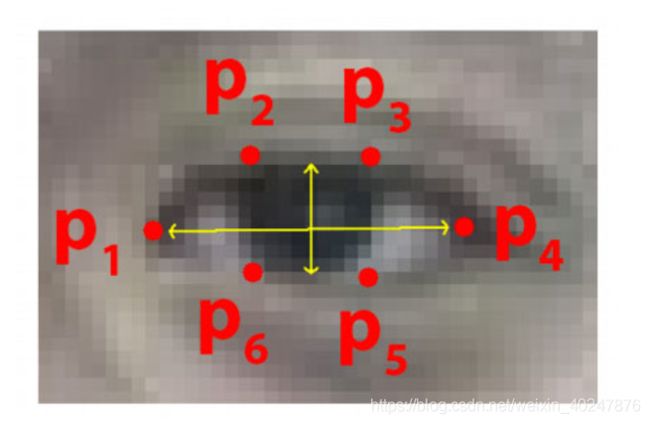

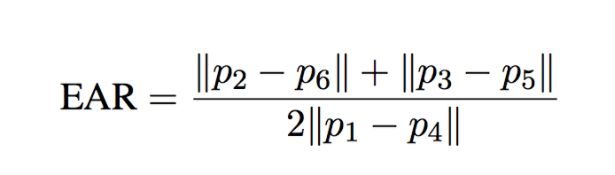

- Eye distance calculation (the eye aspect ratio, EAR)
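Written out as a formula, the ratio computed by `calculate_EAR` and `eye_aspect_ratio` in the listings of this post looks as follows. The notation is an assumption made here for readability: $p_1, \dots, p_6$ stand for the six eye landmarks `eye[0]` through `eye[5]`, and $\lVert \cdot \rVert$ is the Euclidean distance.

```latex
\mathrm{EAR} = \frac{\lVert p_2 - p_6 \rVert + \lVert p_3 - p_5 \rVert}{2\,\lVert p_1 - p_4 \rVert}
```

The numerator sums the two vertical eyelid distances and the denominator is twice the horizontal eye width, so the value drops toward 0 as the eye closes. The listings compare it against thresholds of 0.26 and 0.25.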

III. Source code (closed-eye detection)

import cv2
import dlib
from scipy.spatial import distance


def calculate_EAR(eye):
    """Compute the eye aspect ratio (EAR) from six (x, y) eye landmarks."""
    # Two vertical distances between the upper and lower eyelid
    A = distance.euclidean(eye[1], eye[5])
    B = distance.euclidean(eye[2], eye[4])
    # Horizontal distance between the two eye corners
    C = distance.euclidean(eye[0], eye[3])
    ear_aspect_ratio = (A + B) / (2.0 * C)
    return ear_aspect_ratio


# Open the default webcam
cap = cv2.VideoCapture(0)
# Face detector and 68-point landmark predictor from dlib
hog_face_detector = dlib.get_frontal_face_detector()
dlib_facelandmark = dlib.shape_predictor("shape_predictor_68_face_landmarks.dat")

while True:
    ret, frame = cap.read()
    # Stop if no frame could be read
    if not ret:
        break
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    faces = hog_face_detector(gray)
    for face in faces:
        face_landmarks = dlib_facelandmark(gray, face)
        leftEye = []
        rightEye = []

        # Landmarks 36-41: collect the points and draw the closed eye outline
        for n in range(36, 42):
            x = face_landmarks.part(n).x
            y = face_landmarks.part(n).y
            leftEye.append((x, y))
            next_point = n + 1
            if n == 41:
                next_point = 36
            x2 = face_landmarks.part(next_point).x
            y2 = face_landmarks.part(next_point).y
            cv2.line(frame, (x, y), (x2, y2), (0, 255, 0), 1)

        # Landmarks 42-47: same for the other eye
        for n in range(42, 48):
            x = face_landmarks.part(n).x
            y = face_landmarks.part(n).y
            rightEye.append((x, y))
            next_point = n + 1
            if n == 47:
                next_point = 42
            x2 = face_landmarks.part(next_point).x
            y2 = face_landmarks.part(next_point).y
            cv2.line(frame, (x, y), (x2, y2), (0, 255, 0), 1)

        # Average the EAR of both eyes
        left_ear = calculate_EAR(leftEye)
        right_ear = calculate_EAR(rightEye)
        EAR = (left_ear + right_ear) / 2
        EAR = round(EAR, 2)

        # Below the threshold the eyes are treated as closed
        if EAR < 0.26:
            cv2.putText(frame, "WAKE UP", (20, 100),
                        cv2.FONT_HERSHEY_SIMPLEX, 3, (0, 0, 255), 4)
            cv2.putText(frame, "Are you Sleepy?", (20, 400),
                        cv2.FONT_HERSHEY_SIMPLEX, 2, (0, 0, 255), 4)
            print("!!!")
        print(EAR)

    cv2.imshow("Are you Sleepy", frame)

    # Exit when Esc is pressed
    key = cv2.waitKey(1)
    if key == 27:
        break

cap.release()
cv2.destroyAllWindows()
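The two landmark loops in the listing above differ only in their index range, so they could be factored into helpers. The following is a rough sketch under stated assumptions: `landmark_points` and `closed_outline` are hypothetical names, and `FakeLandmarks` is a stand-in that mimics only the `.part(n)` access used above so the example runs without dlib or a camera.

```python
from collections import namedtuple

Point = namedtuple("Point", ["x", "y"])


class FakeLandmarks:
    """Stand-in for the predictor result; only .part(n) is mimicked."""

    def __init__(self, points):
        self._points = points

    def part(self, n):
        return self._points[n]


def landmark_points(landmarks, start, end):
    """Return [(x, y), ...] for landmark indices start .. end - 1."""
    return [(landmarks.part(n).x, landmarks.part(n).y)
            for n in range(start, end)]


def closed_outline(points):
    """Pair each point with its successor, wrapping the last to the first."""
    return [(points[i], points[(i + 1) % len(points)])
            for i in range(len(points))]


if __name__ == "__main__":
    # Synthetic landmarks: point n sits at (n, 2n)
    fake = FakeLandmarks([Point(n, 2 * n) for n in range(68)])
    eye = landmark_points(fake, 36, 42)
    for start_pt, end_pt in closed_outline(eye):
        print(start_pt, "->", end_pt)
```

In the real loop, each `(start_pt, end_pt)` pair would be passed to `cv2.line` to draw one segment of the eye outline.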

IV. Source code (blink counting; the comments were left in English)

from scipy.spatial import distance as dist
from imutils.video import FileVideoStream
from imutils.video import VideoStream
from imutils import face_utils
import imutils
import time
import dlib
import cv2


def eye_aspect_ratio(eye):
    # Vertical distances between the upper and lower eyelid landmarks
    A = dist.euclidean(eye[1], eye[5])
    B = dist.euclidean(eye[2], eye[4])
    # Horizontal distance between the two eye corners
    C = dist.euclidean(eye[0], eye[3])
    ear = (A + B) / (2.0 * C)
    return ear


# Paths to the landmark model and to an optional input video
p = 'shape_predictor_68_face_landmarks.dat'
v = "video.mp4"

# A blink is counted when the EAR stays below the threshold
# for at least this many consecutive frames
EYE_AR_THRESH = 0.25
EYE_AR_CONSEC_FRAMES = 2

# Consecutive below-threshold frames so far, and total blinks counted
COUNTER = 0
TOTAL = 0

print("[INFO] loading facial landmark predictor...")
detector = dlib.get_frontal_face_detector()
predictor = dlib.shape_predictor(p)

# Slice indices of the left-eye and right-eye landmarks
(lStart, lEnd) = face_utils.FACIAL_LANDMARKS_IDXS["left_eye"]
(rStart, rEnd) = face_utils.FACIAL_LANDMARKS_IDXS["right_eye"]

print("[INFO] starting video stream thread...")
# Webcam input. To process the recorded video instead, use
# vs = FileVideoStream(v).start() and set fileStream = True.
vs = VideoStream(src=0).start()
fileStream = False
time.sleep(1.0)

while True:
    # For a file stream, stop once no frames remain in the buffer
    if fileStream and not vs.more():
        break

    frame = vs.read()
    # Stop if no frame was returned
    if frame is None:
        break
    frame = imutils.resize(frame, width=450)
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    rects = detector(gray, 0)
    for rect in rects:
        # Predict the landmarks and convert them to a NumPy array
        shape = predictor(gray, rect)
        shape = face_utils.shape_to_np(shape)

        leftEye = shape[lStart:lEnd]
        rightEye = shape[rStart:rEnd]
        leftEAR = eye_aspect_ratio(leftEye)
        rightEAR = eye_aspect_ratio(rightEye)
        # Average the EAR of both eyes
        ear = (leftEAR + rightEAR) / 2.0

        # Draw the outline of each eye
        leftEyeHull = cv2.convexHull(leftEye)
        rightEyeHull = cv2.convexHull(rightEye)
        cv2.drawContours(frame, [leftEyeHull], -1, (0, 255, 0), 1)
        cv2.drawContours(frame, [rightEyeHull], -1, (0, 255, 0), 1)

        if ear < EYE_AR_THRESH:
            COUNTER += 1
        else:
            # The eyes reopened: count a blink if they were closed long enough
            if COUNTER >= EYE_AR_CONSEC_FRAMES:
                TOTAL += 1
            COUNTER = 0

        cv2.putText(frame, "Blinks: {}".format(TOTAL), (10, 30),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)
        cv2.putText(frame, "EAR: {:.2f}".format(ear), (300, 30),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)
        cv2.putText(frame, "COUNTER: {}".format(COUNTER), (140, 30),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)

    cv2.imshow("Frame", frame)

    # Exit when "q" is pressed
    key = cv2.waitKey(1) & 0xFF
    if key == ord("q"):
        break

cv2.destroyAllWindows()
vs.stop()
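The counting logic in the loop above can be isolated and tried on a plain list of EAR values, without a camera. This is a small sketch, assuming one EAR value per frame; the function name `count_blinks` and the sample values are made up for illustration, while the defaults mirror `EYE_AR_THRESH` and `EYE_AR_CONSEC_FRAMES` from the listing.

```python
def count_blinks(ear_values, thresh=0.25, consec_frames=2):
    """Count blinks in a sequence of per-frame EAR values.

    A blink is counted when the EAR rises back to or above `thresh`
    after at least `consec_frames` consecutive frames below it.
    """
    counter = 0  # consecutive below-threshold frames so far
    total = 0    # blinks counted
    for ear in ear_values:
        if ear < thresh:
            counter += 1
        else:
            if counter >= consec_frames:
                total += 1
            counter = 0
    return total


if __name__ == "__main__":
    # Made-up EAR trace: a 2-frame closure, a 1-frame dip, a 3-frame closure
    trace = [0.30, 0.20, 0.20, 0.30, 0.20, 0.30, 0.20, 0.20, 0.20, 0.30]
    print(count_blinks(trace))  # 2: the single-frame dip is ignored
```

As in the listing, a closure is only counted once the eye reopens, so a trace that ends while the EAR is still below the threshold does not add a blink.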

V. Takeaways and resources

- Tutorial on closed-eye detection: https://www.youtube.com/watch?v=OCJSJ-anywc&t=305s&ab_channel=MisbahMohammed

- Tutorial on blink counting: https://www.pyimagesearch.com/2017/04/24/eye-blink-detection-opencv-python-dlib/

Both of the above are in English.

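- The principle is not complicated: locate the eyes, then measure the distance between the eyelids relative to the eye width to obtain the value you need.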
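To make the principle concrete, here is a small worked example on synthetic coordinates. It is a sketch only: the point values are invented, and `math.hypot` is swapped in for `scipy.spatial.distance.euclidean` so the snippet runs with the standard library alone.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def eye_aspect_ratio(eye):
    """EAR from six (x, y) points ordered like the eye landmarks above."""
    A = euclidean(eye[1], eye[5])  # first vertical eyelid distance
    B = euclidean(eye[2], eye[4])  # second vertical eyelid distance
    C = euclidean(eye[0], eye[3])  # horizontal eye width
    return (A + B) / (2.0 * C)


# Invented coordinates: same eye width, different eyelid opening
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]

if __name__ == "__main__":
    print(round(eye_aspect_ratio(open_eye), 2))    # 0.67
    print(round(eye_aspect_ratio(closed_eye), 2))  # 0.07
```

With the 0.26 threshold from the closed-eye listing, the first eye counts as open and the second as closed.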

If you spot any problems, corrections are welcome. Feel free to repost, but please credit the source.