OpenCV Corner Detection: Feature Point Matching with FLANN Fast Nearest Neighbors (9)

2.3 Feature Point Matching with FLANN

This section shows how to use the FlannBasedMatcher interface, which is built on FLANN (Fast Library for Approximate Nearest Neighbors), to perform fast and efficient matching.

2.3.1 A Brief Look at the FlannBasedMatcher Class

The FlannBasedMatcher class is declared in the features2d module of the OpenCV source.


FlannBasedMatcher also inherits from DescriptorMatcher, and matching is likewise done mainly through the match() method it inherits from DescriptorMatcher.

2.3.2 Finding the Best Match: the DescriptorMatcher::match Method

The match() method (declared in DescriptorMatcher and inherited by FlannBasedMatcher) finds the best match for each descriptor in the query set.

The method has two overloads:

```cpp
void DescriptorMatcher::match(const Mat& queryDescriptors, const Mat& trainDescriptors, vector<DMatch>& matches, const Mat& mask=Mat())
```

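- Param 1: the query descriptor set;

- Param 2: the train descriptor set;

- Param 3: the resulting matches. If a query descriptor is masked out in the mask, no match is added for it, so there may be fewer matches than query descriptors;

- Param 4: a mask specifying the permissible matches between the input query and train descriptors.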

```cpp
void DescriptorMatcher::match(const Mat& queryDescriptors, vector<DMatch>& matches, const vector<Mat>& masks=vector<Mat>())
```

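In this overload the query descriptors are matched against the train descriptor collection stored in the matcher (added with add()), so no train set is passed.

- Param 3: a set of masks; masks[i] specifies the permissible matches between the input query descriptors and the train descriptors of the i-th image, trainDescCollection[i].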
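The semantics described above (one best match per query descriptor, and no entry for a query that the mask rules out entirely) can be pinned down with an exhaustive reference in plain C++. This is a sketch, not FLANN: FLANN returns approximate nearest neighbors from index structures, whereas this loop checks every train descriptor under the L2 distance. `Match`, `Descriptor`, `l2Distance` and `bestMatches` are hypothetical names; `Match` only mirrors the queryIdx/trainIdx/distance fields of cv::DMatch.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical stand-in for cv::DMatch: only the fields used in this section.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

using Descriptor = std::vector<float>;

// Euclidean (L2) distance between two descriptors of equal length.
float l2Distance(const Descriptor& a, const Descriptor& b) {
    float sum = 0.0f;
    for (std::size_t k = 0; k < a.size(); ++k) {
        float d = a[k] - b[k];
        sum += d * d;
    }
    return std::sqrt(sum);
}

// Exhaustive 1-nearest-neighbor matching with an optional mask:
// mask[q][t] == 0 forbids pairing query q with train t. A query with no
// permitted train descriptor produces no match, so the result can be
// shorter than the query set.
std::vector<Match> bestMatches(const std::vector<Descriptor>& query,
                               const std::vector<Descriptor>& train,
                               const std::vector<std::vector<unsigned char>>& mask = {}) {
    std::vector<Match> matches;
    for (std::size_t q = 0; q < query.size(); ++q) {
        int best = -1;
        float bestDist = std::numeric_limits<float>::max();
        for (std::size_t t = 0; t < train.size(); ++t) {
            if (!mask.empty() && mask[q][t] == 0) continue;  // pair not allowed
            float d = l2Distance(query[q], train[t]);
            if (d < bestDist) {
                bestDist = d;
                best = static_cast<int>(t);
            }
        }
        if (best >= 0) matches.push_back({static_cast<int>(q), best, bestDist});
    }
    return matches;
}
```

With FlannBasedMatcher the result for a given query can occasionally differ from this exhaustive search; that is the trade-off for its speed on large descriptor sets.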

2.3.3 Example: Feature Point Matching with FLANN

Example code:

```cpp
#include "opencv2/core/core.hpp"
#include "opencv2/features2d/features2d.hpp"
#include "opencv2/highgui/highgui.hpp"
// ...
```

CMakeLists.txt:

```cmake
cmake_minimum_required(VERSION 3.6)
project(open CXX)
set(CMAKE_CXX_STANDARD 11)
set(CMAKE_BUILD_TYPE "Debug")
find_package(OpenCV REQUIRED)
include_directories(${OpenCV_INCLUDE_DIRS})
add_executable(open 1.cpp)
target_link_libraries(open ${OpenCV_LIBS})
```

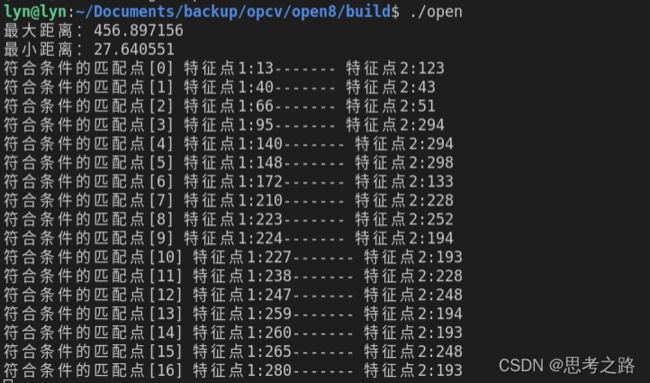

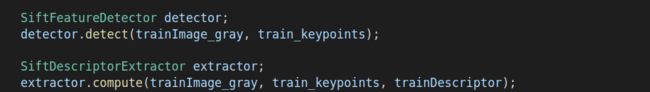

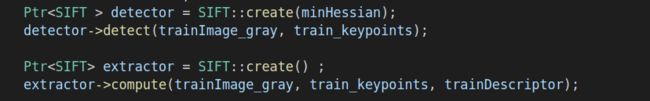

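2.3.4 Comprehensive Example: Describing and Matching Keypoints with SIFT and FLANN

SIFT extracts the keypoints and descriptors, and FLANN matches them.

Implementation code: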

```cpp
#include "opencv2/core/core.hpp"
#include "opencv2/features2d/features2d.hpp"
#include "opencv2/highgui/highgui.hpp"
// ...
```

CMakeLists.txt:

```cmake
cmake_minimum_required(VERSION 3.6)
project(open CXX)
set(CMAKE_CXX_STANDARD 11)
set(CMAKE_BUILD_TYPE "Debug")
find_package(OpenCV REQUIRED)
include_directories(${OpenCV_INCLUDE_DIRS})
add_executable(open 1.cpp)
target_link_libraries(open ${OpenCV_LIBS})
```

Problems encountered when compiling and running the program:


This has to be changed to use a pointer instead;


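Solution: add the header that declares cvtColor() (OpenCV's imgproc module). Without it, only the C function cvCvtColor() is available for the color conversion, but that function takes CvMat arguments, whereas the variables here are C++ Mat objects, so cvtColor() must be used.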


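Replacing CV_BGR2GRAY with its numeric enum value, 6, solves this; the C++ constant cv::COLOR_BGR2GRAY has the same value and is more readable than the bare number.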

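Supplement: camera-related commands

The camorama and cheese commands display the video captured by a camera (cheese -d selects the device). lsusb shows the model of a USB camera, and ls /dev/video* lists the cameras for which a driver is loaded.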

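| Comparison | SIFT | SURF |
|---|---|---|
| Scale space | DoG: Gaussian convolution of images of different sizes | Box filters of different sizes convolved with the original image |
| Keypoint detection | Non-maximum suppression first, then removal of low-contrast points, then removal of edge points using the Hessian matrix | Candidate points selected with the Hessian first, then non-maximum suppression |
| Orientation | Gradient histogram over a square region; the peak gives the orientation, and a point may get several orientations | In a circular region, Haar wavelet responses in x and y are summed within each sector; the sector with the largest magnitude gives the orientation |
| Descriptor | 16×16 sample region divided into 4×4 subregions; the gradient orientation and magnitude of the samples in each subregion form an 8-bin histogram | 20×20 region divided into 4×4 subregions with 5×5 sample points each; the Haar wavelet responses of the samples are recorded as Σdx, Σdy and the sums of the absolute values of dx and dy |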

In theory, SURF is about three times as fast as SIFT.

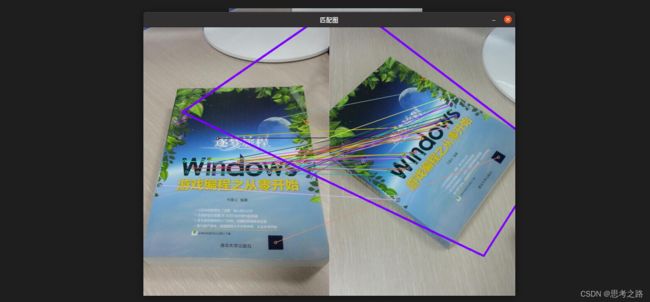

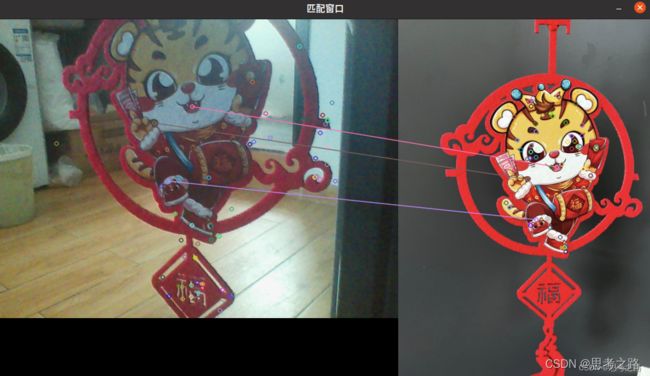

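2.3 Locating a Known Object

Building on FLANN feature matching, a known object can be located with a homography: findHomography() computes the corresponding transform from the matched keypoints, and perspectiveTransform() then maps a set of points through it.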

2.3.1 Finding the Perspective Transform: findHomography()

$$
s_i \begin{bmatrix} x'_i \\ y'_i \\ 1 \end{bmatrix} \sim H \begin{bmatrix} x_i \\ y_i \\ 1 \end{bmatrix}
$$
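With method 0, findHomography() fits H to all point pairs by least squares. The smallest well-posed case, exactly four correspondences with no three points collinear, determines H exactly, which makes the relation above concrete. A minimal sketch, assuming H(2,2) is normalized to 1 and solving the resulting 8×8 linear system directly; `Pt`, `Homography`, `solve8x8` and `homographyFrom4` are hypothetical helpers, not OpenCV's implementation (OpenCV's own function for exactly four points is getPerspectiveTransform()).

```cpp
#include <cassert>
#include <cmath>
#include <stdexcept>
#include <utility>

struct Pt { double x, y; };
struct Homography { double h[3][3]; };  // row-major 3x3 matrix

// Gauss-Jordan elimination with partial pivoting on the 8x9 augmented system [A | b].
static void solve8x8(double m[8][9], double out[8]) {
    for (int col = 0; col < 8; ++col) {
        int pivot = col;
        for (int r = col + 1; r < 8; ++r)
            if (std::fabs(m[r][col]) > std::fabs(m[pivot][col])) pivot = r;
        if (std::fabs(m[pivot][col]) < 1e-12)
            throw std::runtime_error("degenerate configuration (e.g. three collinear points)");
        for (int c = 0; c < 9; ++c) std::swap(m[col][c], m[pivot][c]);
        for (int r = 0; r < 8; ++r) {
            if (r == col) continue;
            double f = m[r][col] / m[col][col];
            for (int c = col; c < 9; ++c) m[r][c] -= f * m[col][c];
        }
    }
    for (int i = 0; i < 8; ++i) out[i] = m[i][8] / m[i][i];
}

// Exact H mapping src[i] -> dst[i] for four correspondences, with H(2,2) = 1.
// Each pair gives two linear equations from u*(h31*x + h32*y + 1) = h11*x + h12*y + h13
// and v*(h31*x + h32*y + 1) = h21*x + h22*y + h23.
Homography homographyFrom4(const Pt src[4], const Pt dst[4]) {
    double m[8][9];
    for (int i = 0; i < 4; ++i) {
        const double x = src[i].x, y = src[i].y, u = dst[i].x, v = dst[i].y;
        const double rowU[9] = {x, y, 1, 0, 0, 0, -x * u, -y * u, u};
        const double rowV[9] = {0, 0, 0, x, y, 1, -x * v, -y * v, v};
        for (int c = 0; c < 9; ++c) {
            m[2 * i][c] = rowU[c];
            m[2 * i + 1][c] = rowV[c];
        }
    }
    double p[8];
    solve8x8(m, p);
    Homography H{};
    for (int k = 0; k < 8; ++k) H.h[k / 3][k % 3] = p[k];
    H.h[2][2] = 1.0;
    return H;
}
```

Fixing H(2,2) = 1 removes the scale ambiguity expressed by s_i; it fails only if the true H has H(2,2) = 0, which is one reason general implementations prefer other normalizations.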

findHomography() finds and returns the perspective transform H between the source and target planes:

```cpp
Mat findHomography(InputArray srcPoints, InputArray dstPoints, int method=0, double ransacReprojThreshold=3, OutputArray mask=noArray())
```

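- Param 1: coordinates of the points in the source plane, as a CV_32FC2 matrix or a vector of Point2f;

- Param 2: coordinates of the corresponding points in the target plane, in the same format;

- Param 3: method used to compute the homography H. Possible values:

| Flag | Meaning |
|---|---|
| 0 | Regular method using all the points (least squares) |
| CV_RANSAC | RANSAC-based robust method (cv::RANSAC in the C++ API) |
| CV_LMEDS | Least-Median robust method (cv::LMEDS in the C++ API) |

- Param 4: maximum allowed reprojection error for a point pair to be treated as an inlier (used by the RANSAC method only). Pair i is considered an outlier when ||dstPoints_i - H*srcPoints_i|| > ransacReprojThreshold, with H*srcPoints_i converted back from homogeneous coordinates. If srcPoints and dstPoints are measured in pixels, a value between 1 and 10 is usually reasonable;

- Param 5: optional output mask set by the robust method; input mask values are ignored.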
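Parameters 4 and 5 describe a per-pair classification: given a candidate H, a pair counts as an inlier when its reprojection error is within ransacReprojThreshold. RANSAC repeats this for many H hypotheses fitted to random 4-point samples and keeps the one with the most inliers; the sketch below covers only the classification step, assuming a row-major 3×3 H. `Pt`, `Homography`, `project` and `inlierMask` are hypothetical names, not OpenCV API.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt { double x, y; };
struct Homography { double h[3][3]; };  // row-major 3x3 matrix

// Maps p through H and divides by the homogeneous coordinate w.
Pt project(const Homography& H, Pt p) {
    double w = H.h[2][0] * p.x + H.h[2][1] * p.y + H.h[2][2];
    return {(H.h[0][0] * p.x + H.h[0][1] * p.y + H.h[0][2]) / w,
            (H.h[1][0] * p.x + H.h[1][1] * p.y + H.h[1][2]) / w};
}

// mask[i] = 1 when ||dst[i] - project(H, src[i])|| <= threshold (inlier),
// 0 otherwise (outlier), mirroring the rule described for Param 4.
std::vector<unsigned char> inlierMask(const Homography& H,
                                      const std::vector<Pt>& src,
                                      const std::vector<Pt>& dst,
                                      double threshold = 3.0) {
    std::vector<unsigned char> mask(src.size(), 0);
    for (std::size_t i = 0; i < src.size(); ++i) {
        Pt q = project(H, src[i]);
        double err = std::hypot(dst[i].x - q.x, dst[i].y - q.y);
        mask[i] = err <= threshold ? 1 : 0;
    }
    return mask;
}
```

A pair with a large error, such as a wrong FLANN match, ends up with a 0 in the mask, which is what makes the robust methods tolerant of bad matches.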

2.3.2 Perspective Matrix Transformation: perspectiveTransform()

This function performs the perspective matrix transformation of vectors:

```cpp
void perspectiveTransform(InputArray src, OutputArray dst, InputArray m)
```

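- Param 1: input two-channel or three-channel floating-point array; each element is a 2D or 3D vector to be transformed;

- Param 2: output array holding the result, with the same size and type as the input;

- Param 3: 3×3 or 4×4 floating-point transformation matrix.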
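For a 3×3 matrix, each 2D element (x, y) of src is treated as the homogeneous vector (x, y, 1), multiplied by the matrix, and divided by the third component (the 4×4 case does the same for 3D vectors). As a worked example, the matrix below is an illustrative choice, not taken from the original, that maps the unit square onto the quadrilateral (0,0), (2,0), (3,3), (0,1); for the corner (1, 1):

$$
\begin{bmatrix} x' \\ y' \\ w' \end{bmatrix}
= \begin{bmatrix} \tfrac{6}{7} & 0 & 0 \\ 0 & \tfrac{6}{7} & 0 \\ -\tfrac{4}{7} & -\tfrac{1}{7} & 1 \end{bmatrix}
\begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}
= \begin{bmatrix} \tfrac{6}{7} \\ \tfrac{6}{7} \\ \tfrac{2}{7} \end{bmatrix},
\qquad
\text{dst} = \left( \frac{x'}{w'}, \frac{y'}{w'} \right) = (3, 3)
$$

The division by w' is what distinguishes a perspective transform from an affine one: with a bottom row of (0, 0, 1), w' is always 1.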

2.3.3 Example: Locating a Known Object

Example code:

```cpp
#include <cfloat>    // DBL_MAX
#include <iostream>  // cout
#include "opencv2/core/core.hpp"
#include "opencv2/features2d/features2d.hpp"
#include "opencv2/highgui/highgui.hpp"
#include "opencv2/calib3d/calib3d.hpp"  // findHomography()
#include "opencv2/opencv.hpp"           // imgproc.hpp, needed for line()
// ...
    // Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create("BruteForce-Hamming");
    Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create("FlannBased");
    std::vector<DMatch> matches;
    // Match the descriptors of the two images
    matcher->match(descriptors1, descriptors2, matches);

    // [6] Compute the maximum and minimum distance between matched keypoints
    double max_dist = 0, min_dist = DBL_MAX;
    for (size_t i = 0; i < matches.size(); i++)
    {
        double dist = matches[i].distance;
        if (dist < min_dist) min_dist = dist;
        if (dist > max_dist) max_dist = dist;
    }
    cout << "max dist: " << max_dist << endl;
    cout << "min dist: " << min_dist << endl;

    // [7] Keep the pairs whose match distance is less than 3*min_dist
    std::vector<DMatch> good_matches;
    for (size_t i = 0; i < matches.size(); i++)
    {
        if (matches[i].distance < 3 * min_dist)
            good_matches.push_back(matches[i]);
    }

    // [8] Draw the matched keypoints of the two images
    Mat imageMatches;
    drawMatches(srcImage1, keypoints1, srcImage2, keypoints2, good_matches, imageMatches,
                Scalar::all(-1), Scalar::all(-1), vector<char>(),
                DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);

    // Collect the keypoint locations of the good matches
    vector<Point2f> obj;
    vector<Point2f> scene;
    for (size_t i = 0; i < good_matches.size(); i++)
    {
        obj.push_back(keypoints1[good_matches[i].queryIdx].pt);
        scene.push_back(keypoints2[good_matches[i].trainIdx].pt);
    }

    // Compute the perspective transform (RANSAC, numeric value 8)
    Mat H = findHomography(obj, scene, RANSAC);

    // Corners of the object image
    vector<Point2f> obj_corners(4);
    obj_corners[0] = Point2f(0, 0);
    obj_corners[1] = Point2f(static_cast<float>(srcImage1.cols), 0);
    obj_corners[2] = Point2f(static_cast<float>(srcImage1.cols), static_cast<float>(srcImage1.rows));
    obj_corners[3] = Point2f(0, static_cast<float>(srcImage1.rows));
    vector<Point2f> scene_corners(4);

    // Map the corners into the scene image
    perspectiveTransform(obj_corners, scene_corners, H);

    // Draw the outline between consecutive corners; the scene image sits to the
    // right of the object image in imageMatches, so shift by srcImage1.cols
    Point2f offset(static_cast<float>(srcImage1.cols), 0);
    for (int i = 0; i < 4; i++)
        line(imageMatches, scene_corners[i] + offset, scene_corners[(i + 1) % 4] + offset,
             Scalar(255, 0, 123), 4);

    // [9] Show the result
    imshow("Matches", imageMatches);
    // waitKey(0);
    while (char(waitKey(1)) != 'q') {}
    return 0;
}
```
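Step [7] of the listing keeps the pairs whose distance is below 3*min_dist. One weakness of that rule is that when min_dist is 0 (an exact duplicate descriptor) the cutoff becomes 0 and every pair is rejected, while findHomography() needs at least four pairs. A plain-C++ sketch of the filter with an optional lower bound on the cutoff; `Match`, `filterGoodMatches` and the `minCutoff` parameter are hypothetical, and the lower bound is an assumption of this sketch, not part of the original example.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical minimal stand-in for cv::DMatch.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

// Keeps matches with distance < max(factor * min_dist, minCutoff), as in step [7]
// when minCutoff is 0. minCutoff is an assumption of this sketch: it keeps a
// zero min_dist from rejecting every match.
std::vector<Match> filterGoodMatches(const std::vector<Match>& matches,
                                     double factor = 3.0, double minCutoff = 0.0) {
    std::vector<Match> good;
    if (matches.empty()) return good;
    double minDist = matches[0].distance;
    for (const Match& m : matches)
        minDist = std::min(minDist, static_cast<double>(m.distance));
    const double cutoff = std::max(factor * minDist, minCutoff);
    for (const Match& m : matches)
        if (m.distance < cutoff) good.push_back(m);
    return good;
}
```

Initializing the minimum from the data rather than from a fixed constant also avoids a silent failure when descriptor distances are large (SIFT L2 distances can easily exceed 100).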