Notes on Chapter 2: Multi-armed bandits

Chapter 2: Multi-armed bandits

- 1 Summary
  - 1.1 The method of updating value table
    - 1.1.1 Sample average method
    - 1.1.2 Exponential recency-weighted average method (constant step size)
    - 1.1.3 General form of incremental update and convergence criterion
  - 1.2 The method of selecting actions
    - 1.2.1 Greedy action selection method
    - 1.2.2 ε-Greedy method
    - 1.2.3 Upper-Confidence-Bound (UCB) action selection method
  - 1.3 Gradient Bandit Algorithms
    - 1.3.1 Selecting actions
    - 1.3.2 Updating preference (like value table) for each action
  - 1.4 Other methods
    - 1.4.1 Optimistic Initial Values
    - 1.4.2 Thompson Sampling
- 2 Exercises
  - Exercise 2.1 ε-greedy
  - Exercise 2.2: Bandit example
  - Exercise 2.3 Greedy and ε-greedy
  - Exercise 2.4 Weighted average of varying step size
  - Exercise 2.5 (programming)
  - Exercise 2.6: Mysterious Spikes
  - Exercise 2.7: Unbiased Constant-Step-Size Trick
  - Exercise 2.8: UCB Spikes
  - Exercise 2.9
  - Exercise 2.10
  - Exercise 2.11 (programming)
- 2 Questions
  - Q1 Bias in Sample-average methods
  - Q2 Exercise 2.6: Mysterious Spikes

1 Summary

In this chapter we focus on the multi-armed bandit problem, in which there is only one state and thus one observation (nonassociative), and different actions have different expected rewards (values).

We need to determine the decision-making policy from our value table and update the value table after receiving rewards. The process still follows the closed loop “observe (not needed in this case)” → “act” → “receive reward” → “update the value-table estimate”.

The trade-off between exploitation and exploration is addressed by the following methods. Note that we assume exploration is costly in this context.

1.1 The method of updating value table

1.1.1 Sample average method

Estimate the value of an action by averaging all the rewards actually received when that action was taken.

$$Q_{n+1}=\frac{R_1+R_2+\cdots+R_n}{n}$$

In incremental form, this is equivalent to updating the estimate with step-size parameter $\alpha=\frac{1}{n}$:

$$Q_{n+1}=Q_n+\frac{1}{n}\left[R_n-Q_n\right] \tag{2.3}$$

When $n=1$, $Q_2=R_1$, so the initial estimate $Q_1$ is discarded after the first update.

In stationary problems, the sample-average estimate converges to the true action value as long as the action keeps being selected (law of large numbers).

Note that $n$ is the number of times this specific action has been selected, so it must be tracked separately for each action.
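A minimal sketch of the incremental update (2.3) for a single action, assuming that action's rewards are given as a plain list; `sample_average_update` and `incremental_estimate` are illustrative names. It checks that the incremental estimate equals the plain average, and that $Q_1$ has no effect because the first step size is $1/1=1$.

```python
from typing import Sequence


def sample_average_update(q: float, n: int, reward: float) -> float:
    """Eq. (2.3): Q_{n+1} = Q_n + (1/n) * (R_n - Q_n), where n counts this reward."""
    return q + (reward - q) / n


def incremental_estimate(rewards: Sequence[float], q1: float = 0.0) -> float:
    """Feed the rewards of ONE action through Eq. (2.3), starting from Q_1 = q1."""
    q = q1
    for n, reward in enumerate(rewards, start=1):
        q = sample_average_update(q, n, reward)
    return q


rewards = [1.0, -2.0, 2.0]
print(incremental_estimate(rewards))            # the batch mean 1/3, up to rounding
print(incremental_estimate(rewards, q1=100.0))  # same value: step size 1/1 = 1 discards Q_1
```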

1.1.2 Exponential recency-weighted average method (constant step size)

To give more weight to recent rewards in nonstationary problems, a constant step size can be used:

$$Q_{n+1}=Q_n+\alpha\left[R_n-Q_n\right] \tag{2.5}$$

where $\alpha\in(0,1]$ is constant.

When $n=1$, $Q_2=(1-\alpha)Q_1+\alpha R_1$, where $Q_1$ has to be chosen by the user from prior knowledge. Therefore $Q$ is biased by the initial estimate.

Unrolling the recursion expresses the estimate as a weighted average of the initial estimate and all past rewards:

$$Q_{n+1}=(1-\alpha)^nQ_1+\sum_{i=1}^{n}\alpha(1-\alpha)^{n-i}R_i \tag{2.6}$$

The estimate never completely converges but keeps adapting to the most recently received rewards, which makes it more appropriate for nonstationary problems.
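A small numerical check, assuming an arbitrary hand-picked reward list: applying (2.5) step by step gives the same value as the closed form (2.6), and the weights on $Q_1$ and on $R_1,\dots,R_n$ sum to 1. The function names are illustrative.

```python
from typing import Sequence


def constant_step_estimate(rewards: Sequence[float], q1: float, alpha: float) -> float:
    """Apply Eq. (2.5) repeatedly: Q_{n+1} = Q_n + alpha * (R_n - Q_n)."""
    q = q1
    for reward in rewards:
        q += alpha * (reward - q)
    return q


def closed_form_estimate(rewards: Sequence[float], q1: float, alpha: float) -> float:
    """Eq. (2.6): (1-alpha)^n Q_1 + sum_i alpha (1-alpha)^(n-i) R_i."""
    n = len(rewards)
    total = (1 - alpha) ** n * q1
    for i, reward in enumerate(rewards, start=1):
        total += alpha * (1 - alpha) ** (n - i) * reward
    return total


rewards = [1.0, 0.0, 2.0, 1.5, -0.5]
alpha, q1 = 0.1, 5.0
print(constant_step_estimate(rewards, q1, alpha))
print(closed_form_estimate(rewards, q1, alpha))  # matches the recursion up to rounding

# The weight on Q_1 plus the weights on R_1..R_n sum to 1: a weighted average.
n = len(rewards)
weights = [(1 - alpha) ** n] + [alpha * (1 - alpha) ** (n - i) for i in range(1, n + 1)]
print(sum(weights))  # 1 up to rounding
```

Note how much weight $Q_1=5$ still carries after five steps: $(0.9)^5\approx 0.59$, which is the "bias by the initial estimate" mentioned above.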

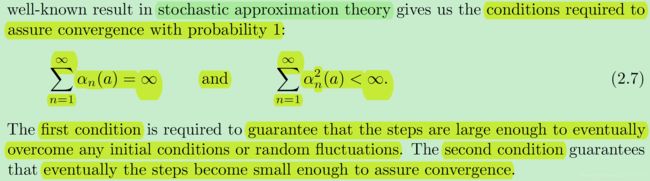

1.1.3 General form of incremental update and convergence criterion

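- General update rule:

$$\text{NewEstimate}\leftarrow\text{OldEstimate}+\text{StepSize}\left[\text{Target}-\text{OldEstimate}\right]$$

  where $[\text{Target}-\text{OldEstimate}]$ is the error in the estimate.

- Convergence criterion: with step sizes $\alpha_n(a)$, the estimates converge with probability 1 if

$$\sum_{n=1}^{\infty}\alpha_n(a)=\infty \quad\text{and}\quad \sum_{n=1}^{\infty}\alpha_n^2(a)<\infty \tag{2.7}$$

  The first condition guarantees that the steps are large enough to overcome initial conditions and random fluctuations; the second guarantees that they eventually become small enough to converge. Both hold for $\alpha_n=\frac{1}{n}$, but the second fails for a constant $\alpha$, which is why the constant-step estimate keeps adapting instead of converging.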

1.2 The method of selecting actions

Exploration is needed because there is always uncertainty about the accuracy of the action-value estimates: the greedy actions are those that look best at present, but some of the other actions may actually be better.

1.2.1 Greedy action selection method

$$A_t=\mathop{\arg\max}\limits_{a} Q_t(a)$$

1.2.2 ε-Greedy method

$$A_t=\begin{cases}\mathop{\arg\max}\limits_{a} Q_t(a), & \text{with probability } 1-\varepsilon\\ \text{an action selected uniformly at random}, & \text{with probability } \varepsilon\end{cases}$$

Without optimistic initialization, the greedy method always exploits current knowledge to maximize immediate reward. The $\varepsilon$-greedy method explores with probability $\varepsilon$, so after many steps the probability of selecting the optimal action converges to $(1-\varepsilon)+\varepsilon/k$ for $k$ actions, which is greater than $1-\varepsilon$.
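A minimal sketch of $\varepsilon$-greedy selection over a value table, assuming `q` is a Python list of current estimates and that ties in the argmax are broken uniformly at random (one common choice); the helper names are illustrative. The random branch draws from all actions, greedy one included, which is why the greedy action ends up chosen with probability $(1-\varepsilon)+\varepsilon/k$.

```python
import random
from typing import List, Optional


def argmax_random_tie(q: List[float], rng: random.Random) -> int:
    """Greedy choice: index of the largest estimate, ties broken uniformly at random."""
    best = max(q)
    return rng.choice([a for a, value in enumerate(q) if value == best])


def epsilon_greedy(q: List[float], epsilon: float, rng: Optional[random.Random] = None) -> int:
    """With probability epsilon pick any action uniformly (greedy one included); else act greedily."""
    rng = rng or random.Random()
    if rng.random() < epsilon:
        return rng.randrange(len(q))
    return argmax_random_tie(q, rng)


rng = random.Random(0)
q = [0.2, 1.5, -0.3, 0.9]
picks = [epsilon_greedy(q, 0.1, rng) for _ in range(10_000)]
print(picks.count(1) / len(picks))  # close to (1 - 0.1) + 0.1 / 4 = 0.925
```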

1.2.3 Upper-Confidence-Bound (UCB) action selection method

$$A_t=\mathop{\arg\max}\limits_{a}\left[Q_t(a)+c\sqrt{\frac{\ln t}{N_t(a)}}\right]$$

where $c>0$ controls the degree of exploration and $N_t(a)$ is the number of times action $a$ has been selected prior to time $t$. If $N_t(a)=0$, $a$ is considered a maximizing action.

UCB selects actions by an upper confidence bound: the current estimate plus a term measuring its uncertainty. As $t$ grows, actions that have been selected less often get a larger bound and become more likely to be selected. Choosing by the upper bound follows the principle of optimism in the face of uncertainty, which drives exploration. UCB achieves logarithmic regret.
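A minimal sketch of the UCB rule above, assuming the caller keeps the estimates `q` and counts `counts` as lists; `ucb_action` is an illustrative name, and taking the first untried action is an arbitrary tie-break among actions with $N_t(a)=0$.

```python
import math
from typing import List


def ucb_action(q: List[float], counts: List[int], t: int, c: float) -> int:
    """Pick argmax_a [Q_t(a) + c * sqrt(ln t / N_t(a))]; untried actions (N_t(a) = 0) come first."""
    untried = [a for a, n in enumerate(counts) if n == 0]
    if untried:
        return untried[0]  # N_t(a) = 0 counts as maximizing; first one is an arbitrary tie-break
    scores = [value + c * math.sqrt(math.log(t) / n) for value, n in zip(q, counts)]
    return max(range(len(q)), key=scores.__getitem__)


# Action 0 looks better (Q = 1.0) but action 1 has been tried far less often.
print(ucb_action([1.0, 0.8], counts=[50, 2], t=52, c=2.0))  # 1: the exploration bonus wins
print(ucb_action([1.0, 0.8], counts=[50, 2], t=52, c=0.0))  # 0: c = 0 reduces UCB to greedy
```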

UCB often performs well, as shown here, but is more difficult than ε \varepsilon ε-greedy to extend beyond bandits to the more general reinforcement learning settings considered in the rest of this book. One difficulty is in dealing with nonstationary problems; methods more complex than those presented in Section 2.5 would be needed. Another difficulty is dealing with large state spaces.

1.3 Gradient Bandit Algorithms

1.3.1 Selecting actions

Gradient bandit algorithms do not use a value table to select actions; instead, they learn a numerical preference $H_t(a)$ for each action. Only the relative preferences matter: adding the same constant to every preference leaves the probabilities unchanged. The probability of selecting each action is given by a soft-max distribution:

$$\Pr\{A_t=a\}=\frac{e^{H_t(a)}}{\sum_{b=1}^{k}e^{H_t(b)}}=\pi_t(a)$$

1.3.2 Updating preference (like value table) for each action

$$H_{t+1}(a)=H_t(a)+\alpha\left(R_t-\bar{R}_t\right)\left(\mathbb{1}_{a=A_t}-\pi_t(a)\right)\quad\text{for all } a$$

where $\bar{R}_t$ is the mean of the previous rewards and serves as a baseline. For the selected action $A_t$ the last factor is $1-\pi_t(A_t)$; for every other action it is $-\pi_t(a)$.

This update can be derived as a stochastic approximation to gradient ascent on the expected reward (refer to the book 60/38):

$$H_{t+1}(a)=H_t(a)+\alpha\frac{\partial\,\mathbb{E}[R_t]}{\partial H_t(a)}$$
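A minimal sketch of 1.3.1 and 1.3.2 together, assuming Gaussian rewards $N(q_*(a),1)$ and a baseline equal to the running average of earlier rewards (0 before the first reward); `softmax`, `gradient_bandit_update` and `run_gradient_bandit` are illustrative names. The update touches every action, not only the selected one, so the sum of the preferences never changes.

```python
import math
import random
from typing import List


def softmax(h: List[float]) -> List[float]:
    """pi_t(a) = exp(H_t(a)) / sum_b exp(H_t(b)); subtracting max(h) avoids overflow."""
    m = max(h)
    exps = [math.exp(x - m) for x in h]
    total = sum(exps)
    return [e / total for e in exps]


def gradient_bandit_update(h: List[float], action: int, reward: float,
                           baseline: float, alpha: float) -> List[float]:
    """H_{t+1}(a) = H_t(a) + alpha (R_t - baseline) (1[a == A_t] - pi_t(a)), for every a."""
    pi = softmax(h)
    return [
        h_a + alpha * (reward - baseline) * ((1.0 if a == action else 0.0) - pi[a])
        for a, h_a in enumerate(h)
    ]


def run_gradient_bandit(true_means: List[float], steps: int, alpha: float,
                        seed: int = 0) -> List[float]:
    """Hypothetical demo: rewards ~ N(q*(a), 1); returns the final policy pi."""
    rng = random.Random(seed)
    h = [0.0] * len(true_means)
    avg_reward, n = 0.0, 0
    for _ in range(steps):
        action = rng.choices(range(len(h)), weights=softmax(h))[0]
        reward = rng.gauss(true_means[action], 1.0)
        h = gradient_bandit_update(h, action, reward, avg_reward, alpha)
        n += 1
        avg_reward += (reward - avg_reward) / n  # baseline updated only after it was used
    return softmax(h)


print(run_gradient_bandit([0.0, 0.5, 1.5], steps=2000, alpha=0.1))  # most mass on the last arm
```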

1.4 Other methods

1.4.1 Optimistic Initial Values

- The exponential recency-weighted average method is biased by the initial value one chooses. We can exploit this by setting the initial estimates artificially high to encourage exploration in the short run; this is called optimistic initial values. It is a useful trick for stationary problems but does not work as well for nonstationary problems, because the extra exploration is only temporary.

- With large initial values, actions that have not been selected yet usually look best and are therefore more likely to be selected, which makes this a simple way to encourage exploration. For the sample-average method, the bias disappears once every action has been selected at least once. For methods with a constant step size, the bias is permanent, though it decreases over time: $Q_2=(1-\alpha)Q_1+\alpha R_1$, and in general $Q_1$ keeps the weight $(1-\alpha)^n$ in (2.6).
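A minimal sketch of a purely greedy agent ($\varepsilon=0$) with constant step size, assuming Gaussian rewards and an illustrative function name `greedy_with_optimism`. With noise-free rewards and all true values far below $Q_1=5$, each pull lowers that action's estimate below the untried ones, so the first $k$ steps sweep through every action; with $Q_1=0$ the agent can lock onto the first action that pays off.

```python
import random
from typing import List


def greedy_with_optimism(true_values: List[float], q1: float, alpha: float, steps: int,
                         noise_sd: float = 1.0, seed: int = 0) -> List[int]:
    """Greedy agent with Q_1(a) = q1 and constant step size; returns the chosen actions.

    Rewards are assumed Gaussian: N(true_values[a], noise_sd^2).
    """
    rng = random.Random(seed)
    q = [q1] * len(true_values)
    chosen = []
    for _ in range(steps):
        best = max(q)
        action = rng.choice([a for a, v in enumerate(q) if v == best])  # random tie-break
        reward = rng.gauss(true_values[action], noise_sd)
        q[action] += alpha * (reward - q[action])
        chosen.append(action)
    return chosen


true_values = [0.2, -0.5, 0.8, 0.1, 1.0]
print(greedy_with_optimism(true_values, q1=5.0, alpha=0.1, steps=5, noise_sd=0.0))   # each action once
print(greedy_with_optimism(true_values, q1=0.0, alpha=0.1, steps=10, noise_sd=0.0))  # sticks to one action
```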

1.4.2 Thompson Sampling

Thompson sampling maintains a posterior distribution over the value of each arm, draws one sample from each arm's distribution, and then picks the arm with the highest sample. It also achieves logarithmic regret. Note that it is hard to generalize to full reinforcement learning problems.
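A minimal sketch of Thompson sampling for the simplest case, assuming 0/1 (Bernoulli) rewards and a uniform Beta(1, 1) prior on each arm's success probability, so that each posterior is again a Beta distribution; `thompson_bernoulli` is an illustrative name, and other reward models need other posteriors.

```python
import random
from typing import List


def thompson_bernoulli(success_probs: List[float], steps: int, seed: int = 0) -> List[int]:
    """Beta-Bernoulli Thompson sampling; returns how often each arm was pulled."""
    rng = random.Random(seed)
    k = len(success_probs)
    alpha = [1] * k  # 1 + number of successes (Beta posterior parameter)
    beta = [1] * k   # 1 + number of failures
    pulls = [0] * k
    for _ in range(steps):
        samples = [rng.betavariate(alpha[a], beta[a]) for a in range(k)]  # one draw per posterior
        arm = max(range(k), key=samples.__getitem__)                       # arm with highest sample
        reward = 1 if rng.random() < success_probs[arm] else 0
        alpha[arm] += reward
        beta[arm] += 1 - reward
        pulls[arm] += 1
    return pulls


print(thompson_bernoulli([0.1, 0.5, 0.9], steps=2000))  # most pulls go to the 0.9 arm
```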

2 Exercises

Exercise 2.1 ε-greedy

- In $\varepsilon$-greedy action selection, for the case of two actions and $\varepsilon=0.5$, what is the probability that the greedy action is selected?

- Answer: $P=(1-\varepsilon)+\varepsilon\times\frac{1}{2}=0.5+0.25=0.75$. The greedy action is taken with probability $1-\varepsilon$, and the random branch also picks it with probability $1/2$.
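A quick Monte Carlo check of this answer, assuming action 0 plays the role of the greedy action; `greedy_selection_rate` is an illustrative name.

```python
import random


def greedy_selection_rate(epsilon: float, k: int, trials: int, seed: int = 0) -> float:
    """Estimate P(greedy action chosen) under epsilon-greedy with k actions.

    Action 0 is the greedy action; the random branch picks any of the k actions.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        action = rng.randrange(k) if rng.random() < epsilon else 0
        hits += action == 0
    return hits / trials


print(greedy_selection_rate(epsilon=0.5, k=2, trials=100_000))  # about 0.75
```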

Exercise 2.2: Bandit example

- Consider a k-armed bandit problem with k = 4 actions, denoted 1, 2, 3, and 4. Consider applying to this problem a bandit algorithm using $\varepsilon$-greedy action selection, sample-average action-value estimates, and initial estimates of $Q_1(a)=0$, for all $a$. Suppose the initial sequence of actions and rewards is $A_1=1, R_1=-1, A_2=2, R_2=1, A_3=2, R_3=-2, A_4=2, R_4=2, A_5=3, R_5=0$. On some of these time steps the $\varepsilon$ case may have occurred, causing an action to be selected at random. On which time steps did this definitely occur? On which time steps could this possibly have occurred?

- Answer: The value table evolves as follows: $\{0,0,0,0\}\rightarrow\{-1,0,0,0\}\rightarrow\{-1,1,0,0\}\rightarrow\{-1,-0.5,0,0\}\rightarrow\{-1,0.33,0,0\}\rightarrow\{-1,0.33,0,0\}$. At steps 1, 2 and 3 the chosen action was a greedy action, so the $\varepsilon$ case possibly occurred (a random choice can also land on the greedy action). At step 4 (action 2 chosen while actions 3 and 4 had the highest estimate, 0) and step 5 (action 3 chosen while action 2 had the highest estimate, 0.33), a non-greedy action was chosen, so the $\varepsilon$ case definitely occurred.
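The same reasoning can be replayed mechanically: at each step, compare the chosen action with the set of greedy actions under the current sample-average table. A non-greedy choice can only come from the $\varepsilon$ branch; a greedy one may or may not.

```python
actions = [1, 2, 2, 2, 3]      # A_1..A_5 (1-based action labels, as in the exercise)
rewards = [-1, 1, -2, 2, 0]    # R_1..R_5
k = 4
q = [0.0] * k                  # Q_1(a) = 0 for all a
n = [0] * k                    # selection count per action
verdicts = []
for t, (a, r) in enumerate(zip(actions, rewards), start=1):
    best = max(q)
    greedy = {i + 1 for i in range(k) if q[i] == best}
    verdicts.append("possibly" if a in greedy else "definitely")
    n[a - 1] += 1
    q[a - 1] += (r - q[a - 1]) / n[a - 1]   # sample-average update, Eq. (2.3)
    print(t, verdicts[-1], [round(v, 2) for v in q])
```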

Exercise 2.3 Greedy and ε-greedy

- In the comparison shown in Figure 2.2, which method will perform best in the long run in terms of cumulative reward and probability of selecting the best action? How much better will it be? Express your answer quantitatively.

- $\varepsilon=0.01$ will perform best in the long run, with % optimal action $=(1-\varepsilon)+\varepsilon\times\frac{1}{10}=99.1\%$;

- $\varepsilon=0.1$ will perform less well, with % optimal action $=(1-\varepsilon)+\varepsilon\times\frac{1}{10}=91\%$;

- The greedy method will probably get stuck on a suboptimal action.
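A rough quantitative sketch of "how much better", assuming the estimates have already identified the optimal action and using the 10-armed testbed assumption $q_*(a)\sim N(0,1)$. In the long run, the per-step expected reward is $(1-\varepsilon)\max_a q_*(a)+\varepsilon\,\text{mean}_a\,q_*(a)$, so the gap between $\varepsilon=0.01$ and $\varepsilon=0.1$ is $0.09\,(\max-\text{mean})$, averaged here over random testbeds. The function names are illustrative.

```python
import random
import statistics


def asymptotic_optimal_rate(epsilon: float, k: int = 10) -> float:
    """Long-run P(optimal action): greedy branch always optimal, random branch hits it 1/k of the time."""
    return (1 - epsilon) + epsilon / k


def asymptotic_reward(epsilon: float, q_star: list) -> float:
    """Long-run expected reward per step, assuming the estimates have converged to q_star."""
    return (1 - epsilon) * max(q_star) + epsilon * statistics.mean(q_star)


rng = random.Random(0)
testbeds = [[rng.gauss(0, 1) for _ in range(10)] for _ in range(2000)]  # q*(a) ~ N(0, 1)
gap = statistics.mean(asymptotic_reward(0.01, q) - asymptotic_reward(0.1, q) for q in testbeds)

print(asymptotic_optimal_rate(0.01), asymptotic_optimal_rate(0.1))  # about 0.991 and 0.91
print(gap)  # roughly 0.09 * E[max of 10 N(0,1)], i.e. about 0.14 extra reward per step
```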

Exercise 2.4 Weighted average of varying step size

- If the step-size parameters, $\alpha_n$, are not constant, then the estimate $Q_n$ is a weighted average of previously received rewards with a weighting divergent from that given by (2.6). What is the weighting on each prior reward for the general case, analogous to (2.6), in terms of the sequence of step-size parameters?

- Answer:

$$Q_{n+1}=\prod_{i=1}^{n}(1-\alpha_i)\,Q_1+\sum_{i=1}^{n}\alpha_i\prod_{j=i+1}^{n}(1-\alpha_j)\,R_i$$

  so reward $R_i$ has weight $\alpha_i\prod_{j=i+1}^{n}(1-\alpha_j)$. With a constant $\alpha$ this reduces to $\alpha(1-\alpha)^{n-i}$, recovering (2.6).
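A numerical check of the general weighting, assuming randomly drawn step sizes and rewards; `recursive_estimate` and `weighted_form` are illustrative names. With $\alpha_n=1/n$ the formula collapses to the plain mean and $Q_1$ drops out, since $1-\alpha_1=0$.

```python
import random
from typing import Sequence


def recursive_estimate(q1: float, alphas: Sequence[float], rewards: Sequence[float]) -> float:
    """Q_{n+1} = Q_n + alpha_n (R_n - Q_n) with a step size that varies with n."""
    q = q1
    for a, r in zip(alphas, rewards):
        q += a * (r - q)
    return q


def weighted_form(q1: float, alphas: Sequence[float], rewards: Sequence[float]) -> float:
    """prod_i (1 - alpha_i) Q_1 + sum_i alpha_i prod_{j=i+1}^{n} (1 - alpha_j) R_i."""
    n = len(alphas)
    result = q1
    for a in alphas:
        result *= 1 - a
    for i in range(n):
        tail = 1.0
        for j in range(i + 1, n):  # one factor for every step after step i
            tail *= 1 - alphas[j]
        result += alphas[i] * tail * rewards[i]
    return result


rng = random.Random(1)
alphas = [rng.uniform(0.05, 0.9) for _ in range(8)]
rewards = [rng.gauss(0, 1) for _ in range(8)]
print(recursive_estimate(2.0, alphas, rewards), weighted_form(2.0, alphas, rewards))  # equal up to rounding
print(weighted_form(7.0, [1, 1 / 2, 1 / 3], [3.0, 6.0, 9.0]))  # about 6, the plain mean; Q_1 = 7 is ignored
```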

Exercise 2.5 (programming)

- Similar to the homework.

Exercise 2.6: Mysterious Spikes

- The results shown in Figure 2.3 should be quite reliable because they are averages over 2000 individual, randomly chosen 10-armed bandit tasks. Why, then, are there oscillations and spikes in the early part of the curve for the optimistic method? In other words, what might make this method perform particularly better or worse, on average, on particular early steps?

- It may be because both the low-value and the high-value actions start with large initial estimates. The method has to try the low-value actions several times to bring their estimates down, so it performs worse on early steps, and the high-value actions are not preferred immediately because other actions still have high initial estimates. Because optimistic initialization drives exploration only in the early steps, the method explores less later and eventually outperforms the $\varepsilon=0.1$-greedy method, whose long-run optimal-action rate is capped at about 91%.

- Due to optimistic initialization, the first 10 actions will be a sweep through the actions in some random order. On the 11th turn, the action that did best in the first 10 turns is selected again. This action has a greater than chance probability of being the optimal one. It still disappoints due to the optimistic initialization, leading to the subsequent dip. (answer from the quiz)
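A small simulation that makes the quiz explanation concrete, assuming the 10-armed testbed setup ($q_*(a)\sim N(0,1)$, rewards $N(q_*(a),1)$) with a greedy agent, $Q_1=5$ and $\alpha=0.1$; `optimistic_greedy_curve` is an illustrative name. Step 10 is just the last action of the random sweep (about 10% optimal); step 11 re-picks the action with the best single reward, which is often the optimal one; at step 12 that action's estimate has been lowered twice, so a different action is chosen and the rate drops.

```python
import random
from typing import List


def optimistic_greedy_curve(runs: int = 2000, steps: int = 15, k: int = 10,
                            q1: float = 5.0, alpha: float = 0.1, seed: int = 0) -> List[float]:
    """Fraction of runs choosing the optimal action at each step (greedy, optimistic Q_1)."""
    rng = random.Random(seed)
    optimal_counts = [0] * steps
    for _ in range(runs):
        q_star = [rng.gauss(0, 1) for _ in range(k)]
        best_arm = max(range(k), key=q_star.__getitem__)
        q = [q1] * k
        for t in range(steps):
            top = max(q)
            a = rng.choice([i for i in range(k) if q[i] == top])  # greedy, random tie-break
            optimal_counts[t] += a == best_arm
            q[a] += alpha * (rng.gauss(q_star[a], 1) - q[a])
    return [c / runs for c in optimal_counts]


curve = optimistic_greedy_curve()
for step in (10, 11, 12):
    print(step, curve[step - 1])  # expect a jump at step 11 and a drop at step 12
```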

Exercise 2.7: Unbiased Constant-Step-Size Trick

Use the step size $\beta_n=\alpha/\bar{o}_n$, where $\bar{o}_n=\bar{o}_{n-1}+\alpha(1-\bar{o}_{n-1})$ with $\bar{o}_0=0$. Then, substituting $\bar{o}_n$ in the second line,

$$\begin{aligned} Q_{n+1}&=Q_n+\beta_n(R_n-Q_n)\\ \bar{o}_n Q_{n+1}&=\bar{o}_n Q_n+\alpha(R_n-Q_n)\\ &=\bar{o}_{n-1}Q_n+\alpha(R_n-\bar{o}_{n-1}Q_n) \end{aligned}$$

This has the same form as Eq. (2.5) for the quantity $\bar{o}_{n-1}Q_n$, so comparing it with Eq. (2.6) in the book gives

$$\bar{o}_n Q_{n+1}=(1-\alpha)^n\bar{o}_0 Q_1+\sum_{i=1}^{n}\alpha(1-\alpha)^{n-i}R_i$$

Because $\bar{o}_0=0$, $Q_1$ disappears. Since $\bar{o}_n=1-(1-\alpha)^n$, which is exactly the sum of the weights on $R_1,\dots,R_n$, $Q_{n+1}$ is an exponential recency-weighted average of the rewards with normalized weights and no initial bias.
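A numerical check of the trick, assuming an arbitrary reward list and $\alpha=0.1$; `unbiased_constant_step` is an illustrative name. Two very different initial values give identical results, and both equal the normalized weighted sum derived above.

```python
from typing import Sequence


def unbiased_constant_step(rewards: Sequence[float], q1: float, alpha: float) -> float:
    """Q_{n+1} = Q_n + beta_n (R_n - Q_n) with beta_n = alpha / o_n and o_0 = 0."""
    q, o = q1, 0.0
    for r in rewards:
        o += alpha * (1 - o)        # o_n = o_{n-1} + alpha (1 - o_{n-1})
        q += (alpha / o) * (r - q)  # beta_1 = alpha / alpha = 1, so Q_1 is discarded at once
    return q


rewards = [1.0, 3.0, -2.0, 0.5]
alpha = 0.1
a = unbiased_constant_step(rewards, q1=0.0, alpha=alpha)
b = unbiased_constant_step(rewards, q1=50.0, alpha=alpha)
n = len(rewards)
weighted = sum(alpha * (1 - alpha) ** (n - i) * r for i, r in enumerate(rewards, start=1))
print(a, b, weighted / (1 - (1 - alpha) ** n))  # all three agree up to rounding
```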

Exercise 2.8: UCB Spikes

- Before the 11th step, every action that has not been selected yet must be selected once, because $N_t(a)=0$ makes it a maximizing action. At the 11th step all uncertainty terms are equal, so the action with the highest $Q_t(a)$ is selected. At the 12th step the uncertainty term of that action has shrunk (its $N_t(a)$ is now 2), so exploration takes over again and performance drops.

- The uncertainty estimate of the action selected at timestep 11 will be less than the others. This action will thus be at a disadvantage at the next step. If $c$ is large, then this effect dominates and the action that performed best in the first 10 steps is ruled out on step 12. (answer from the quiz)
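The size of that disadvantage can be worked out directly, assuming $c=2$ as an example value: at $t=12$ the action re-selected at step 11 has $N_t(a)=2$, while every other action has $N_t(a)=1$.

```python
import math

c = 2.0  # assumed exploration constant
t = 12   # the step at which the dip appears
bonus_once = c * math.sqrt(math.log(t) / 1)   # actions tried once during the first sweep
bonus_twice = c * math.sqrt(math.log(t) / 2)  # the action re-selected at step 11
print(round(bonus_once, 3), round(bonus_twice, 3), round(bonus_once - bonus_twice, 3))
```

With these assumptions the bonuses are about 3.15 and 2.23, so the step-11 winner is picked again at step 12 only if its estimate beats every other estimate by more than about 0.92; a larger $c$ widens this gap, which matches the quiz answer.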

Exercise 2.9

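- Show that in the case of two actions, the soft-max distribution is the same as that given by the logistic, or sigmoid, function often used in statistics and artificial neural networks.

- Answer: For two actions,

$$\pi_t(1)=\frac{e^{H_t(1)}}{e^{H_t(1)}+e^{H_t(2)}}=\frac{1}{1+e^{-(H_t(1)-H_t(2))}}=\sigma\big(H_t(1)-H_t(2)\big)$$

  and $\pi_t(2)=1-\pi_t(1)=\sigma\big(H_t(2)-H_t(1)\big)$, i.e. the sigmoid of the preference difference.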
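A numerical spot check of this identity, assuming a few arbitrary preference pairs; `softmax2` and `sigmoid` are illustrative names.

```python
import math


def softmax2(h1: float, h2: float) -> float:
    """Probability of action 1 under a two-action soft-max."""
    return math.exp(h1) / (math.exp(h1) + math.exp(h2))


def sigmoid(x: float) -> float:
    """Logistic function 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


pairs = [(0.0, 0.0), (1.3, -0.4), (-2.0, 0.5)]
for h1, h2 in pairs:
    print(softmax2(h1, h2), sigmoid(h1 - h2))  # each pair agrees up to rounding
```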

Exercise 2.10

- Suppose you face a 2-armed bandit task whose true action values change randomly from time step to time step. Specifically, suppose that, for any time step, the true values of actions 1 and 2 are respectively 0.1 and 0.2 with probability 0.5 (case A), and 0.9 and 0.8 with probability 0.5 (case B). If you are not able to tell which case you face at any step, what is the best expectation of success you can achieve and how should you behave to achieve it? Now suppose that on each step you are told whether you are facing case A or case B (although you still don’t know the true action values). This is an associative search task. What is the best expectation of success you can achieve in this task, and how should you behave to achieve it?

- Answer (case unknown): the expected value of each action is 0.5 ($0.5\times0.1+0.5\times0.9$ for action 1, $0.5\times0.2+0.5\times0.8$ for action 2), so the best expectation of success is 0.5 and you can behave arbitrarily, e.g. randomly.

- Answer (case known): you can estimate the action values separately for each case. Select action 2 in case A and action 1 in case B; the best expectation is $0.5\times0.2+0.5\times0.9=0.55$.
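The two expectations can be computed directly from the case table in the exercise; the dictionary layout is only an illustrative encoding.

```python
case_prob = {"A": 0.5, "B": 0.5}
values = {"A": {1: 0.1, 2: 0.2}, "B": {1: 0.9, 2: 0.8}}  # true action values per case

# Case unknown: each fixed action averages over the cases, and both come out equal.
unknown = {a: sum(case_prob[c] * values[c][a] for c in values) for a in (1, 2)}

# Case known: pick the better action separately in each case.
known = sum(case_prob[c] * max(values[c].values()) for c in values)

print(unknown, known)  # 0.5 for each action, and 0.55, up to rounding
```

Because both actions have the same expected value when the case is hidden, any mixture of them also gives 0.5, which is why behaving randomly is as good as anything else there.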

Exercise 2.11 (programming)

- Similar to the homework.

2 Questions

Q1 Bias in Sample-average methods


In section 2.6 (page 34), it is said that “For the sample-average methods, the bias disappears once all actions have been selected at least once”, but I am confused about how this works.

In page 2.7, it is said that “the sample-average methods, which also treat the beginning of time as a special event, averaging all subsequent rewards with equal weights”. I am also confused, because there is no initial value in Equation 2.1 on page 27.

To conclude: when some initial value or bias is set, how do sample-average methods handle it? Why does it disappear once all actions have been selected? Does it mean the initial value is overwritten by Equation 2.1 when the action is selected for the first time?

I also posted this on Piazza, but I have not received an endorsed reply so far.

Q2 Exercise 2.6: Mysterious Spikes

I have answered this exercise in the Exercises section above, but I still cannot understand the spike. Is it caused by the random selection pattern?

This article is for self-learners. If you are taking a course, please do not copy this note.