机器学习——线性回归与决策树实验(附效果以及完整代码)(数据集、测试集开源)

机器学习实验

实验名称:实验一、线性回归与决策树

一、实验目的

(1)掌握线性回归算法和决策树算法 ID3 的原理;

(2)学会线性回归算法和决策树算法 ID3 的实现和使用方法。

二、实验内容

本次实验为第一次实验,要求完成本次实验所有内容。具体实验内容如下:

1. 线性回归模型实验

(1)假设 line-ext.csv 是对变量 y 随变量 x 的变化情况的统计数据集。请根据教材的公式(3.7,3.8),使用 Python 语言编程计算线性回归模型的系数,建立一个线性回归模型。要求:

1)计算出回归系数,输出模型表达式,绘制散点图和回归直线,输出均方误差;

2)给出当自变量 x =0.8452 时,因变量 y 的预测值。

(2)对于上面的数据集 line-ext.csv,分别使用第三方模块 sklearn 模块中的类LinearRegression、第三方模块 statsmodels 中的函数ols()来计算线性回归模型的系数,建立线性回归模型,验证上面计算结果及预测结果。

2. 决策树算法实验

(1)隐形眼镜数据集 glass-lenses.txt 是著名的数据集。它包含了很多患者眼部状况的观察条件以及医生推荐的隐形眼镜类型。请使用Python 语言建立决策树模型 ID3,划分 25%的数据集作为测试数据集,并尝试进行后剪枝操作。要求 :

1)使用 Graphviz 工具,将此决策树绘制出来;

2)输出混淆矩阵和准确率。

数据集的属性信息如下:

– 4 Attributes

\1. age of the patient: (1) young, (2) pre-presbyopic (for short, pre ), (3) presbyopic

\2. spectacle prescription: (1) myope, (2) hypermetrope (for short, hyper )

\3. astigmatic: (1) no, (2) yes

\4. tear production rate: (1) reduced, (2) normal

– 3 Classes

1 : the patient should be fitted with hard contact lenses,

2 : the patient should be fitted with soft contact lenses,

3 : the patient should not be fitted with contact lenses.

(2)对于数据集 glass-lenses.txt,使用 sklearn 模块中的类 DecisionTreeClassifier(其中,criterion=’entropy’)来建立决策树模型 ID3,重复 2(1)中的操作,验证上面计算结果。

三、实验代码和过程

①线性回归模型实验

(一)

1)计算出回归系数,输出模型表达式,绘制散点图和回归直线,输出均方误差;

1、读入数据集

# 从CSV文件中读取数据,并返回2个数组。分别是自变量x和因变量y。方便TF计算模型。

def zc_read_csv():

zc_dataframe = pd.read_csv("line-ext.csv", sep=",")

x = []

y = []

for zc_index in zc_dataframe.index:

zc_row = zc_dataframe.loc[zc_index]

x.append(zc_row["YearsExperience"])

y.append(zc_row["Salary"])

return (x,y)

x, y = zc_read_csv()

2、计算回归系数

averageX = 0

averageY = 0

for i in range (len(x)):

averageX += x[i]

for i in range (len(y)):

averageY += y[i]

#先计算平均数

averageX = averageX/(len(x))

averageY = averageY/(len(y))

#计算回归系数W

numerator = 0

denominator = 0

twice = 0

for i in range (len(x)):

numerator+=(y[i]*(x[i]-averageX))

twice += (x[i]*x[i])

denominator +=x[i]

decominator = math.pow(denominator,2)/(len(x))

w = numerator/(twice-decominator)

b=0

for i in range (len(x)):

b +=(y[i]-w*x[i])

b /= (len(x))

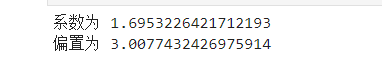

print("系数为",w)

print("偏置为",b)

3、输出模型表达式

s="模型表达式y="+repr(w)+"x+"+repr(b)

print(s)

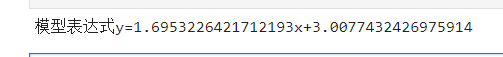

4、绘制散点图和回归直线

# 获得画图对象。

fig = plt.figure()

fig.set_size_inches(20, 8) # 整个绘图区域的宽度10和高度4

ax = fig.add_subplot(1, 2, 1) # 整个绘图区分成一行两列,当前图是第一个。

# 画出原始数据的散点图。

ax.set_title("salary table")

ax.set_xlabel("YearsExperience")

ax.set_ylabel("Salary")

ax.scatter(x, y)

plt.plot([0,1],[3.0077432426975914,4.7030658848688107],c='green',ls='-')

plt.show()

5、输出均方误差

mse = 0

for i in range(len(x)):

mse += ((x[i]*w+b)-y[i])*((x[i]*w+b)-y[i])

mse /= (len(x))

print("线性回归方程的均方误差:",mse)

2)给出当自变量 x =0.8452 时,因变量 y 的预测值。

predictY = 0.8542*w+b

print("预测值",predictY)

(二)

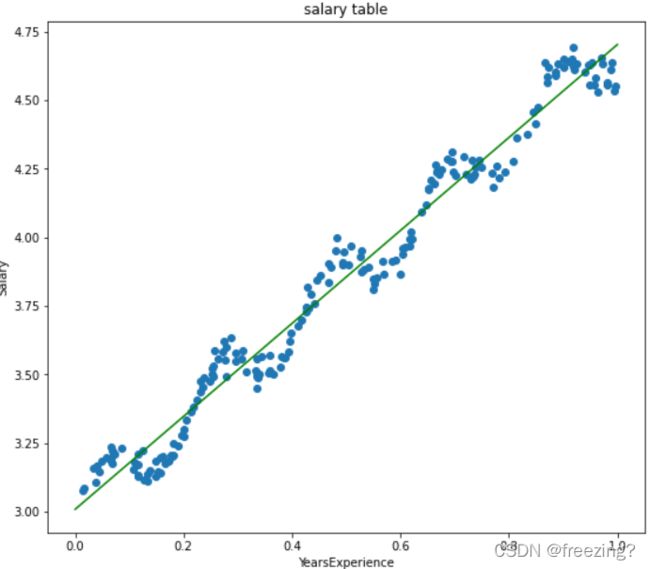

1)LinearRegression预测

from sklearn import linear_model

linearRegression = linear_model.LinearRegression()

arrayX = np.array(x)

arrayY = np.array(y)

xTest = (arrayX.reshape((len(arrayX)),-1))

yTest = (arrayY.reshape((len(arrayY)),-1))

linearRegression.fit(xTest,yTest)

print("系数:",linearRegression.coef_)

print("截距:",linearRegression.intercept_)

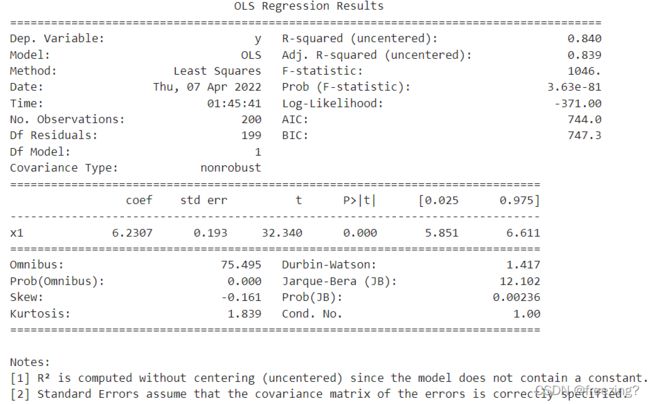

2)OLS拟合

import statsmodels.api as smf

ols = smf.OLS(y,x)

model = ols.fit()

print(model.summary())

②决策树实验

(一)

1)使用Python 语言建立决策树模型

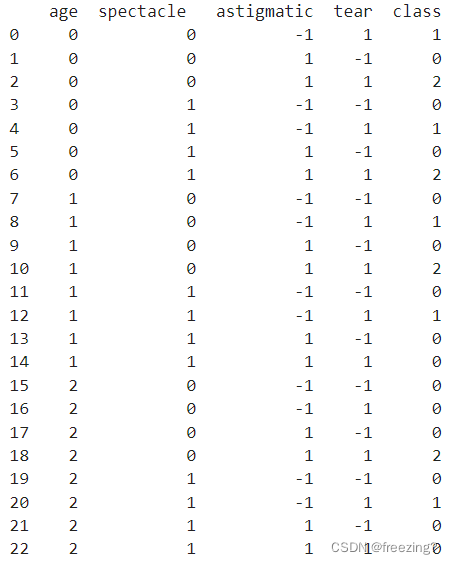

1、载入转化数据函数

#载入转化数据

#young:0,pre:1,presbyopic:2

#myope:0,hyper:1

#yes:1,no:-1

#no lenses:0,soft:1,hard:2

dic={'young':0,'pre':1,'presbyopic':2,'myope':0,'hyper':1,'yes':1,'no':-1,'no lenses':0,'soft':1,'hard':2}

2、读入数据

import pandas as pd

#载入转化数据

#young:0,pre:1,presbyopic:2

#myope:0,hyper:1

#yes:1,no:-1

#reduced:1,nomal:-1

#no lenses:0,soft:1,hard:2

dic={'young':0, 'pre':1, 'presbyopic':2, 'myope':0, 'hyper':1, 'yes':1, 'no':-1, 'reduced':-1, 'normal':1, 'no lenses':0, 'soft':1, 'hard':2}

#转换函数

def convert(v):

return dic[v]

def load_data(file):

data = pd.read_csv(file,sep='\t', header=0, names=['age', 'spectacle', 'astigmatic', 'tear','class'],index_col=False)

data = data.applymap(convert)

return data

data = load_data('glass-lenses.txt')

print(data)

3、计算信息熵、条件信息熵、信息增益

import pandas as pd

import numpy as np

#载入转化数据

#young:0,pre:1,presbyopic:2

#myope:0,hyper:1

#yes:1,no:-1

#reduced:1,nomal:-1

#no lenses:0,soft:1,hard:2

dic={'young':0, 'pre':1, 'presbyopic':2, 'myope':0, 'hyper':1, 'yes':1, 'no':-1, 'reduced':-1, 'normal':1, 'no lenses':0, 'soft':1, 'hard':2}

#转换函数

def convert(v):

return dic[v]

def load_data(file):

data = pd.read_csv(file,sep='\t', header=0, names=['id','age', 'spect', 'astig', 'tear','class'],index_col='id')

data = data.applymap(convert)

return data

data = load_data('glass-lenses.txt')

# print(data)

# 数据集信息熵H(D)

def entropyOfData(D):

nums = len(D)

cate_data = D['class'].value_counts()

cate_data = cate_data / nums # 类别及其概率

# 计算数据集的熵

return sum(- cate_data * np.log2(cate_data))

# 条件信息熵H(D|A)

def conditional_entropy(D, A):

# 特征A的不同取值的概率

nums = len(D) # 数据总数

A_data = D[A].value_counts()# 特征A的取值及个数

A_data = A_data / nums # 特征A的取值及其对应的概率

# print(A_data)

# 特征A=a的情况下,数据集的熵

D_ik = D.groupby([A, 'class']).count().iloc[:, 0].reset_index() # Dik的大小

D_i = D.groupby(A).count().iloc[:, 0] # Di的大小

# print(D_ik)

# print(D_i)

# 给定特征A=a的情况下,数据集类别的概率

D_ik['possibilities'] = D_ik.apply(lambda x: x[2]/D_i[x[0]], axis=1)

# print(D_ik)

# 给定特征A=a的情况下,数据集的熵

entropy = D_ik.groupby(A)['possibilities'].apply(lambda x: sum(- x * np.log2(x)))

# print(entropy)

# 计算条件熵H(D|A)

return sum(A_data * entropy)

# print(conditional_entropy(data, 'age'))

# 信息增益

def info_gain(D, A):

return entropyOfData(D) - conditional_entropy(D, A)

# print(info_gain(data, 'age'))

4、选择最优特征属性

# 获得最优特征

def getOptiFeature(D):

Max = -1

Opti = ''

for A in list(D)[:-1]:

cur = info_gain(D, A)

if Max < cur:

Opti = A

Max = cur

return Opti, Max

# 选择最优特征

print(getOptiFeature(data))

5、划分数据集,构建决策树

def majorityCnt(classList):

classCount = {}

for vote in classList: # 统计当前划分下每中情况的个数

if vote not in classCount.keys():

classCount[vote] = 0

classCount[vote] += 1

sortedClassCount = sorted(classCount.items, key=operator.itemgetter(

1), reversed=True) # reversed=True表示由大到小排序

# 对字典里的元素按照value值由大到小排序

return sortedClassCount[0][0]

def createTree(dataSet, labels):

classList = [example[-1]

for example in dataSet] # 创建数组存放所有标签值,取dataSet里最后一列(结果)

# 类别相同,停止划分

# 判断classList里是否全是一类,count() 方法用于统计某个元素在列表中出现的次数

if classList.count(classList[-1]) == len(classList):

return classList[-1] # 当全是一类时停止分割

# 长度为1,返回出现次数最多的类别

if len(classList[0]) == 1: # 当没有更多特征时停止分割,即分到最后一个特征也没有把数据完全分开,就返回多数的那个结果

return majorityCnt(classList)

# 按照信息增益最高选取分类特征属性

bestFeat = chooseBestFeatureToSplit(dataSet) # 返回分类的特征序号,按照最大熵原则进行分类

bestFeatLable = labels[bestFeat] # 该特征的label, #存储分类特征的标签

myTree = {bestFeatLable: {}} # 构建树的字典

del(labels[bestFeat]) # 从labels的list中删除该label

featValues = [example[bestFeat] for example in dataSet]

uniqueVals = set(featValues)

for value in uniqueVals:

# 子集合 ,将labels赋给sublabels,此时的labels已经删掉了用于分类的特征的标签

subLables = labels[:]

# 构建数据的子集合,并进行递归

myTree[bestFeatLable][value] = createTree(splitDataSet(dataSet, bestFeat, value), subLables)

return myTree

def classify(inputTree, featLabels, testVec):

firstStr = next(iter(inputTree)) # 根节点

secondDict = inputTree[firstStr]

featIndex = featLabels.index(firstStr) # 根节点对应的属性

classLabel = None

for key in secondDict.keys(): # 对每个分支循环

if testVec[featIndex] == key: # 测试样本进入某个分支

if type(secondDict[key]).__name__ == 'dict': # 该分支不是叶子节点,递归

classLabel = classify(secondDict[key], featLabels, testVec)

else: # 如果是叶子, 返回结果

classLabel = secondDict[key]

return classLabel

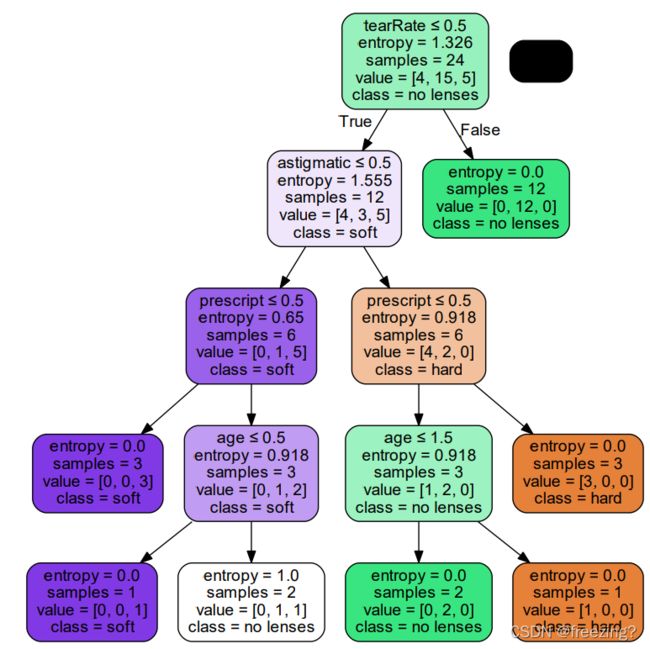

6、绘制决策树

if __name__ == '__main__':

with open('glass-lenses.txt', 'r') as fr_train: # 加载文件

lenses_train = [inst.strip().split('\t') for inst in fr_train.readlines()] # 处理文件

lenses_target_train = [] # 提取每组数据的类别,保存在列表里

for each in lenses_train:

lenses_target_train.append(each[-1])

lensesLabels_train = ['age', 'prescript', 'astigmatic', 'tearRate'] # 特征标签

lenses_list_train = [] # 保存lenses数据的临时列表

lenses_dict_train = {} # 保存lenses数据的字典,用于生成pandas

for each_label in lensesLabels_train: # 提取信息,生成字典

for each in lenses_train:

lenses_list_train.append(each[lensesLabels_train.index(each_label)])

lenses_dict_train[each_label] = lenses_list_train

lenses_list_train = []

#print(lenses_dict) # 打印字典信息

lenses_pd_train = pd.DataFrame(lenses_dict_train) # 生成pandas.DataFrame

#print(lenses_pd_train)

le_train = preprocessing.LabelEncoder() # 创建LabelEncoder()对象,用于序列化

for col in lenses_pd_train.columns: # 为每一列序列化

lenses_pd_train[col] = le_train.fit_transform(lenses_pd_train[col])

#print(lenses_pd_train)

clf = tree.DecisionTreeClassifier(criterion='entropy',max_depth=4) # 创建DecisionTreeClassifier()类

clf = clf.fit(lenses_pd_train.values.tolist(), lenses_target_train) # 使用数据,构建决策树

print(clf)

dot_data = StringIO()

tree.export_graphviz(clf, out_file=dot_data, # 绘制决策树

feature_names=lenses_pd_train.keys(),

class_names=clf.classes_,

filled=True, rounded=True,

special_characters=True)

graph = pydotplus.graph_from_dot_data(dot_data.getvalue())

graph.write_pdf("tree.pdf")

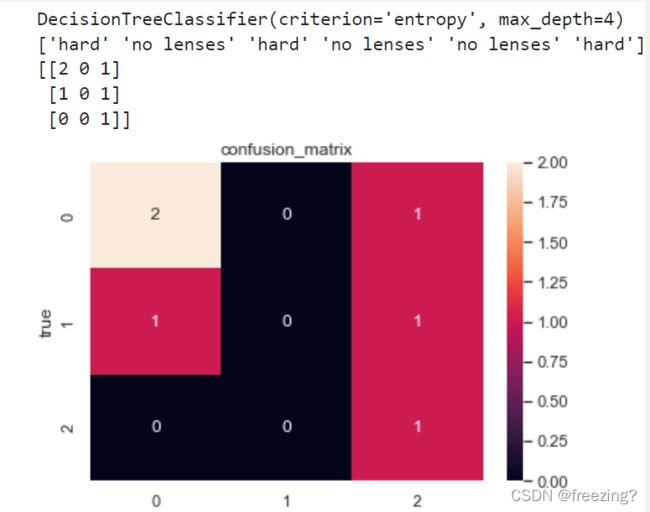

2)输出混淆矩阵和准确率。

决策树完整代码

import pandas as pd

import seaborn as sn

import numpy as np

from sklearn import preprocessing

from sklearn import tree

from io import StringIO

import pydotplus

import math

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.metrics import confusion_matrix

#载入转化数据

#young:0,pre:1,presbyopic:2

#myope:0,hyper:1

#yes:1,no:-1

#reduced:1,nomal:-1

#no lenses:0,soft:1,hard:2

dic={'young':0, 'pre':1, 'presbyopic':2, 'myope':0, 'hyper':1, 'yes':1, 'no':-1, 'reduced':-1, 'normal':1, 'no lenses':0, 'soft':1, 'hard':2}

#转换函数

def convert(v):

return dic[v]

def load_data(file):

data = pd.read_csv(file,sep='\t', header=0, names=['age', 'spect', 'astig', 'tear','class'])

data = data.applymap(convert)

return data

#print(data)

def calculateEnt(dataSet): # 计算香农熵

numEntries = len(dataSet)

labelCounts = {}

for featVec in dataSet:

currentLabel = featVec[-1] # 取得最后一列数据,该属性取值情况有多少个

if currentLabel not in labelCounts.keys():

labelCounts[currentLabel] = 0

labelCounts[currentLabel] += 1

# 计算熵

Ent = 0.0

for key in labelCounts:

prob = float(labelCounts[key])/numEntries

Ent -= prob*math.log(prob, 2)

return Ent

def splitDataSet(dataSet, axis, value):

retDataSet = []

for featVec in dataSet: # 取大列表中的每个小列表

if featVec[axis] == value:

reduceFeatVec = featVec[:axis]

reduceFeatVec.extend(featVec[axis+1:])

retDataSet.append(reduceFeatVec)

return retDataSet # 返回不含划分特征的子集

def chooseBestFeatureToSplit(dataSet):

numFeature = len(dataSet[0]) - 1

baseEntropy = calculateEnt(dataSet)

bestInforGain = 0

bestFeature = -1

for i in range(numFeature):

featList = [number[i] for number in dataSet] # 得到某个特征下所有值(某列)

uniquelVals = set(featList) # set无重复的属性特征值,得到所有无重复的属性取值

# 计算每个属性i的概论熵

newEntropy = 0

for value in uniquelVals:

subDataSet = splitDataSet(

dataSet, i, value) # 得到i属性下取i属性为value时的集合

prob = len(subDataSet)/float(len(dataSet)) # 每个属性取值为value时所占比重

newEntropy += prob*calculateEnt(subDataSet)

inforGain = baseEntropy - newEntropy # 当前属性i的信息增益

if inforGain > bestInforGain:

bestInforGain = inforGain

bestFeature = i

return bestFeature # 返回最大信息增益属性下标

def majorityCnt(classList):

classCount = {}

for vote in classList: # 统计当前划分下每中情况的个数

if vote not in classCount.keys():

classCount[vote] = 0

classCount[vote] += 1

sortedClassCount = sorted(classCount.items, key=operator.itemgetter(

1), reversed=True) # reversed=True表示由大到小排序

# 对字典里的元素按照value值由大到小排序

return sortedClassCount[0][0]

def createTree(dataSet, labels):

classList = [example[-1]

for example in dataSet] # 创建数组存放所有标签值,取dataSet里最后一列(结果)

# 类别相同,停止划分

# 判断classList里是否全是一类,count() 方法用于统计某个元素在列表中出现的次数

if classList.count(classList[-1]) == len(classList):

return classList[-1] # 当全是一类时停止分割

# 长度为1,返回出现次数最多的类别

if len(classList[0]) == 1: # 当没有更多特征时停止分割,即分到最后一个特征也没有把数据完全分开,就返回多数的那个结果

return majorityCnt(classList)

# 按照信息增益最高选取分类特征属性

bestFeat = chooseBestFeatureToSplit(dataSet) # 返回分类的特征序号,按照最大熵原则进行分类

bestFeatLable = labels[bestFeat] # 该特征的label, #存储分类特征的标签

myTree = {bestFeatLable: {}} # 构建树的字典

del(labels[bestFeat]) # 从labels的list中删除该label

featValues = [example[bestFeat] for example in dataSet]

uniqueVals = set(featValues)

for value in uniqueVals:

# 子集合 ,将labels赋给sublabels,此时的labels已经删掉了用于分类的特征的标签

subLables = labels[:]

# 构建数据的子集合,并进行递归

myTree[bestFeatLable][value] = createTree(splitDataSet(dataSet, bestFeat, value), subLables)

return myTree

def classify(inputTree, featLabels, testVec):

firstStr = next(iter(inputTree)) # 根节点

secondDict = inputTree[firstStr]

print(secondDict)

featIndex = featLabels.index(firstStr) # 根节点对应的属性

classLabel = None

for key in secondDict.keys(): # 对每个分支循环

if testVec[featIndex] == key: # 测试样本进入某个分支

if type(secondDict[key]).__name__ == 'dict': # 该分支不是叶子节点,递归

classLabel = classify(secondDict[key], featLabels, testVec)

else: # 如果是叶子, 返回结果

classLabel = secondDict[key]

return classLabel

if __name__ == '__main__':

with open('glass-lenses.txt', 'r') as fr_train: # 加载文件

lenses_train = [inst.strip().split('\t') for inst in fr_train.readlines()] # 处理文件

lenses_target_train = [] # 提取每组数据的类别,保存在列表里

for each in lenses_train:

lenses_target_train.append(each[-1])

lensesLabels_train = ['age','prescript','astigmatic','tearRate','class'] # 特征标签

lenses_list_train = [] # 保存lenses数据的临时列表

lenses_dict_train = {} # 保存lenses数据的字典,用于生成pandas

for each_label in lensesLabels_train: # 提取信息,生成字典

for each in lenses_train:

lenses_list_train.append(each[lensesLabels_train.index(each_label)])

lenses_dict_train[each_label] = lenses_list_train

lenses_list_train = []

#print(lenses_dict) # 打印字典信息

lenses_pd_train = pd.DataFrame(lenses_dict_train) # 生成pandas.DataFrame

#print(lenses_pd_train)

le_train = preprocessing.LabelEncoder() # 创建LabelEncoder()对象,用于序列化

for col in lenses_pd_train.columns: # 为每一列序列化

lenses_pd_train[col] = le_train.fit_transform(lenses_pd_train[col])

#print(lenses_pd_train)

clf = tree.DecisionTreeClassifier(criterion='entropy',max_depth=4) # 创建DecisionTreeClassifier()类

clf = clf.fit(lenses_pd_train.values.tolist(), lenses_target_train) # 使用数据,构建决策树

print(clf)

dot_data = StringIO()

tree.export_graphviz(clf, out_file=dot_data, # 绘制决策树

feature_names=lenses_pd_train.keys(),

class_names=clf.classes_,

filled=True, rounded=True,

special_characters=True)

graph = pydotplus.graph_from_dot_data(dot_data.getvalue())

graph.write_pdf("tree.pdf")

# fr = open('glass-lenses.txt')

# trainData=[inst.strip().split('\t') for inst in fr.readlines()]

# lensesLabels=['age','prescript','astigmatic','tearRate','class']

# lensesTree=createTree(trainData,lensesLabels)

# fr = open('Test.txt')

# testData = [lenses.strip().split('\t') for lenses in fr.readlines()]

# print(testData)

# testResults = classify(lensesTree,lensesLabels,testData)

# print(testResults)

with open('Test.txt', 'r') as fr_test: # 加载文件

lenses_test = [inst.strip().split('\t') for inst in fr_test.readlines()] # 处理文件

lenses_target_test = [] # 提取每组数据的类别,保存在列表里

for each in lenses_test:

lenses_target_test.append(each[-1])

lensesLabels_test = ['age', 'prescript', 'astigmatic', 'tearRate','class'] # 特征标签

lenses_list_test = [] # 保存lenses数据的临时列表

lenses_dict_test = {} # 保存lenses数据的字典,用于生成pandas

for each_label in lensesLabels_test: # 提取信息,生成字典

for each in lenses_test:

lenses_list_test.append(each[lensesLabels_test.index(each_label)])

lenses_dict_test[each_label] = lenses_list_test

lenses_list_test = []

#print(lenses_dict) # 打印字典信息

lenses_pd_test = pd.DataFrame(lenses_dict_test) # 生成pandas.DataFrame

#print(lenses_pd_test)

le_test = preprocessing.LabelEncoder() # 创建LabelEncoder()对象,用于序列化

for col in lenses_pd_test.columns: # 为每一列序列化

lenses_pd_test[col] = le_test.fit_transform(lenses_pd_test[col])

# print(clf.predict(lenses_pd_test))

sn.set()

f,ax=plt.subplots()

y_true = ['no lenses','soft','soft','no lenses','no lenses','hard']

y_pred = clf.predict(lenses_pd_test)

C2=confusion_matrix(y_true,y_pred,labels=['no lenses','soft','hard'])

# print(C2)

sn.heatmap(C2,annot=True,ax=ax)

ax.set_title('confusion_matrix')

ax.set_xlabel('predict')

ax.set_ylabel('true')

plt.show()

数据集

测试集开源

数据集开源

数据集、测试集已经上传