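- Python 3.11.6 Windows 64-bit Installer Download: Get Started Easily with the Latest Python

惠凯忱Montague

Project introduction: In the programming world, Python is one of the most popular and powerful languages. The Python 3.11.6 Windows 64-bit installer gives Windows users a convenient way to install the official release. This version includes many optimizations and new features, and ensures that on 64-bit Windows...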

- 【YOLOv11】ultralytics最新作品yolov11 AND 模型的训练、推理、验证、导出 以及 使用

Jackilina_Stone

#DeepLearning【改进】YOLO系列YOLO人工智能python计算机视觉深度学习

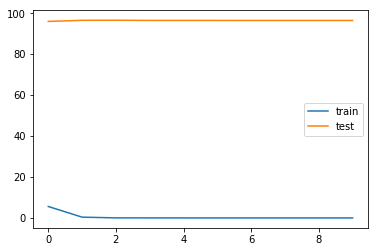

目录一ultralytics公司的最新作品YOLOV111yolov11的创新2安装YOLOv113PYTHONGuide二训练三验证四推理五导出模型六使用文档:https://docs.ultralytics.com/models/yolo11/代码链接:https://github.com/ultralytics/ultralyticsPerformanceMetrics

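- Table of common Python functions: a summary of several common Python list methods

weixin_39934613

table of common Python functions

1. The append() method adds a new object to the end of a list. Syntax: list.append(obj). Parameters: list is the list being modified; obj is the object to add. Example: list = ['Microsoft', 'Amazon', 'Google']; list.append('Apple'); print(list). Output: ['Microsoft', 'Amazon', 'Google', 'Apple']. 2. The extend() function is used on a list to...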
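The difference between the two methods named above can be sketched as follows. This is a minimal illustration, not the original author's code; the variable names and the extra company names are assumptions made for the example.

```python
# Start from the list used in the snippet above.
companies = ["Microsoft", "Amazon", "Google"]
companies.append("Apple")  # append adds exactly one object to the end

# Appending a list adds that list as a single nested element.
nested = companies.copy()
nested.append(["IBM", "Intel"])

# extend adds each element of the iterable individually.
flat = companies.copy()
flat.extend(["IBM", "Intel"])

print(companies)
print(len(nested), len(flat))
```

Naming the variable `companies` rather than `list` avoids shadowing the built-in `list` type.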

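- Python and object-oriented programming (OOP)

lanbing

object-oriented (OOP), python, programming language, object-oriented

Python is a multi-paradigm language that supports object-oriented programming (OOP). Its OOP implementation is concise and flexible, but some of its design choices differ significantly from traditional OOP languages such as Java and C#. The following is an overview of the core features, strengths, and limitations of OOP in Python. I. Core OOP features of Python. 1. Everything is an object: all data types in Python (such as integers and strings) are objects that inherit from the object base class. Functions, modules, exceptions, and so on are also objects and can be assigned, passed around, or modified dynamically. For example, n...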
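A small sketch of the "everything is an object" point may help. The function names `greet` and `apply` and the `calls` attribute are hypothetical, chosen only for illustration.

```python
def greet(name):
    return f"Hello, {name}"


def apply(func, value):
    # Functions can be passed around like any other object.
    return func(value)


# Integers, strings, functions, and exception classes are all objects.
all_objects = all(isinstance(x, object) for x in (42, "text", greet, ValueError))

say = greet        # a function can be bound to another name
greet.calls = 0    # attributes can be attached dynamically
result = apply(say, "Python")

print(all_objects, result)
```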

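- [Python] Python lists (mind map at the end)

Python lists. 1 Definition: a collection for storing any number of items of any type. list is a built-in Python data type. Standard syntax: 1. a = [10, 20, 30, 40] 2. a = [10, 20, 'abc', True]. A list is an ordered collection whose elements can be added or removed at any time. It is written with square brackets []. 2 Creation. 2.1 Basic syntax: a = [10, 20, 'yangyaqi', '石家庄学院', True]; a evaluates to [10, 20, 'yangyaqi',...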

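- Building and installing Python from source, and fixes for common problems

运维天坑笔记

python, programming language, linux

Building and installing Python. 1. Download the source package: wget https://www.python.org/ftp/python/3.9.10/Python-3.9.10.tgz; tar -zxf Python-3.9.10.tgz; cd Python-3.9.10/. 2. Build and install: ./configure --prefix=/usr/local/python39 --enable-shared --enable-optimizations; make...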

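- Python syntax notes

XiTang1

python, notes, programming language

Basic Python syntax. 1. Computer terminology. 1.1 Components of a computer. Father of the computer: John von Neumann. Under the von Neumann architecture, a computer is divided into five parts. 1. Input devices: pass information into the computer, for example the keyboard and mouse. 2. Output devices: pass information out of the computer, for example speakers, monitors, and printers. 3. Storage: computers were invented to store and compute data. A computer has two devices for storing data: memory and the hard disk. Hard disk: the disk partitions on the computer; data stored on the hard disk is persistent [as long as it is not...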

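- Python programming: comparing files

倔强老吕

C++ and Python interop programming, python, hash algorithms

Python provides several libraries for file comparison, suited to different scenarios. The main ones and their characteristics: 1. Comparison tools in the standard library. 1.1 The filecmp module. Purpose: comparing files and directories. Features: compares file contents (shallow and deep comparison); compares directory structures; includes a built-in dircmp class for directory comparison. Typical usage: import filecmp; # file comparison: filecmp.cmp('file1.txt', 'file2.txt', shallow=False); # directory comparison: com...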
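The filecmp usage described above can be sketched end to end. This is a hedged example: the directory and file names are made up, and the files are created in a temporary directory so the snippet runs anywhere.

```python
import filecmp
import tempfile
from pathlib import Path

with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    left, right = root / "left", root / "right"
    left.mkdir()
    right.mkdir()

    # Hypothetical sample files for the comparison.
    (left / "same.txt").write_text("hello\n")
    (right / "same.txt").write_text("hello\n")
    (left / "changed.txt").write_text("v1\n")
    (right / "changed.txt").write_text("v2\n")
    (left / "only_left.txt").write_text("x\n")

    # shallow=False compares file contents, not just os.stat() signatures.
    identical = filecmp.cmp(left / "same.txt", right / "same.txt", shallow=False)

    # dircmp reports which names exist on one side only or on both sides.
    comparison = filecmp.dircmp(left, right)
    left_only = sorted(comparison.left_only)
    common = sorted(comparison.common_files)

    # cmpfiles with shallow=False does a content comparison of the common files.
    match, mismatch, errors = filecmp.cmpfiles(left, right, common, shallow=False)

print(identical, left_only, match, mismatch)
```

The content comparison goes through `cmpfiles(..., shallow=False)` because a shallow comparison can report two files of equal size and modification time as equal even when their contents differ.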

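- Developing the Global Talent (Chan Sect Edition) Rally Call app with Python, C++, and C#

Geeker-2025

python, c++, C language

The following is an in-depth technical plan for developing the **Global Talent (Chan Sect Edition) Rally Call app** with **Python, C++, and C#**, combining the strengths of the three languages to build a cross-platform, highly intelligent system for gathering talent in the mystic arts: --- ### I. System architecture design ```mermaid graph TD A[Multi-platform clients]-->B{C# Chan-Dao engine} B-->C[C++ mystic-method core] C-->D[Python insight layer] D-->E[AI talent matching] C-->F[Heavenly-secrets deduction] B-->G[Three-realms communication] G-->H[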

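- Developing a decentralized allocation app for educational, medical, and cultural resources with Python and Rust

Geeker-2025

python, rust

The following is a complete technical plan for a **decentralized allocation app** for educational, medical, and cultural resources, combining Python's flexibility with Rust's performance and safety to achieve fair and transparent resource allocation: --- ### System architecture design ```mermaid graph TD A[User terminal]-->B[Blockchain network] A-->C[Allocation engine] B-->D[Smart contracts] C-->E[Resource database] D-->F[Allocation records] subgraph Tech stack C-.Rust.->G[Core allocation algorithm] D-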

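- Developing a customer service software app with Python and Go

Geeker-2025

python, golang

The following is a complete technical plan for a **customer service software app** built with Python and Go, using Python's AI capabilities and Go's high-concurrency features to build a high-performance, intelligent customer service system: --- ### System architecture design ```mermaid graph TD A[Client]-->B[Go API gateway] B-->C[Ticket management] B-->D[Live chat] B-->E[Knowledge base] B-->F[AI engine] C-->G[Ticket database] D-->H[Message queue] F-->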

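- A clothing store e-commerce management system based on nodejs + vue.js

If you are a beginner who does not know programming languages such as Java, PHP, or Python, Node.js is a very good choice. The project is developed in VS Code; the companion software and packages have been installed, debugged, and deployed successfully, and a video walkthrough is included. Front end: html + vue + elementui + jQuery, js, css. Database: mysql, Navicat. It uses the Vue framework and a web framework on the Node runtime. With the rapid development of internet technology, the world has gradually become a global village, and physical distance matters less and less.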

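- Effective Python, Chapter 11 (Performance): lazy-load modules with dynamic imports to reduce Python program startup time

不学无术の码农

Effective Python close-reading notes, python, programming language

Introduction: This article is based on Item 98, "Lazy-Load Modules with Dynamic Imports to Reduce Startup Time", in Chapter 11 (Performance) of "Effective Python: 125 Specific Ways to Write Better Python, 3rd Edition". It summarizes the book's key points on lazy-loading modules and, drawing on my own development experience, explores their use cases and optimization value in real projects. Pytho...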
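One common way to defer an import is to move it inside the function that needs it, so the module is loaded on first call rather than at program startup. The sketch below illustrates that general idea only; it is not taken from the book, and the helper name `export_rows` is hypothetical.

```python
import sys


def export_rows(rows):
    """Hypothetical helper: csv and io are imported on first call, not at startup."""
    import csv
    import io

    buffer = io.StringIO()
    csv.writer(buffer).writerows(rows)
    return buffer.getvalue()


text = export_rows([["id", "name"], [1, "Tom"]])

# After the first call the module is cached in sys.modules,
# so later calls pay only a dictionary lookup.
csv_loaded = "csv" in sys.modules
print(repr(text), csv_loaded)
```

The trade-off is that the import cost moves to the first call, so this tends to suit rarely used or heavy dependencies.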

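- [Daily Code] 010 Common Python libraries: statistics

胖达不服输

[Daily Code], python, common python libraries, statistics

Contents. Averages. mean(): computes the arithmetic mean, the sum of all values divided by their count. fmean(): like mean(), but converts the data to floats; it is faster and always returns a float. geometric_mean(): computes the geometric mean, the n-th root of the product of all values (n is the number of values). harmonic_mean(): computes the harmonic mean, the number of values divided by the sum of their reciprocals. median(): computes the median, the middle value after sorting the data. If the number of val...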
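The functions listed above can be tried on a small data set. The sample values below are an assumption picked so the results are easy to check by hand.

```python
import statistics

data = [1, 2, 4, 8]  # hypothetical sample values

mean = statistics.mean(data)                 # (1 + 2 + 4 + 8) / 4
fmean = statistics.fmean(data)               # same value, always a float
geometric = statistics.geometric_mean(data)  # (1 * 2 * 4 * 8) ** (1 / 4)
harmonic = statistics.harmonic_mean(data)    # 4 / (1/1 + 1/2 + 1/4 + 1/8)
median = statistics.median(data)             # average of the two middle values

print(mean, fmean, geometric, harmonic, median)
```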

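- [Daily Code] 013 Common Python libraries: Numpy

胖达不服输

[Daily Code], python, numpy, common libraries

Contents. Array creation. numpy.array: creates an ndarray object. numpy.zeros: creates an all-zeros array with the given shape and data type. numpy.ones: creates an all-ones array with the given shape and data type. numpy.empty: creates an uninitialized array with the given shape and data type; its element values are arbitrary and depend on the initial state of the memory. numpy.arange: similar to Python's built-in range function, but returns an ndarray. numpy.linspa...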

- Python diary, Day 17: Excel handling with Pandas

石石石大帅

Python notes, excel, python, data analysis

Python diary: Excel handling with Pandas. Creating a file: import pandas as pd; df = pd.DataFrame({'ID': [1, 2, 3], 'Name': ['Tom', 'BOb', 'Gigi']}); df.to_excel("C:/Temp/Output.xlsx"); print("done!"). Reading a file: import pandas as pd; people = pd.read_excel("C:/Temp...

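- Fixing the "CoInitialize has not been called" error

Add the following at the start of the thread: import pythoncom; pythoncom.CoInitialize(). When the work is finished, call pythoncom.CoUninitialize() to release the resources.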

- [FAQ] Python office automation: opening the generated Word file raises AttributeError: module 'win32com.gen_py.00020905-0000-0000-

In Python office automation, opening the generated Word file produces an error: File "D:\Develop\Building_save_energy\BuildingDiagnoseRenovationTool.py", line 2930, in open_doc: doc_app = win32.gencache.EnsureDispatch('Word.Application') File "C:\Users\Jay\.c

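- Part 11: Vben Admin latest v5.0 (vben5) + Python Flask quick start: role and menu authorization

锅锅来了

Vben, vben5, VbenAdmin, python3, admin framework

Vben5 series contents. Basics: ✅ Part 1: Vben Admin latest v5.0 (vben5) + Python Flask quick start. ✅ Part 2: Vben Admin latest v5.0 (vben5) + Python Flask quick start: Python Flask back-end development in detail (with source code). ✅ Part 3: Vben Admin latest v5.0 (vben5) + Python Flask quick start: integrating the back-end login API (part 1). ✅ Part 4: Vben Admin latest v5.0 (v...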

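- A complete list of Python built-in functions with usage examples

慧一居士

Python, python

Python's built-in functions are predefined, efficient tools covering math, type conversion, sequence operations, and more. Below is a categorized list of common built-in functions with usage examples. I. Math functions. abs(x): returns the absolute value of a number; supports integers, floats, and complex numbers [1][2][4]. abs(-10) # outputs 10; abs(-3.5) # outputs 3.5; abs(3+4j) # outputs 5.0. divmod(a, b): returns a tuple of the quotient and remainder, equivalent to (a // b, a % b) [2][4]. divmod(9...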
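The two built-ins described above can be checked directly. The operands passed to divmod are illustrative values, not ones taken from the truncated original.

```python
# abs() works on ints, floats, and complex numbers (magnitude for complex).
abs_int = abs(-10)
abs_float = abs(-3.5)
abs_complex = abs(3 + 4j)

# divmod(a, b) returns (a // b, a % b); with a negative dividend
# the quotient is floored, so the remainder takes the sign of b.
quotient, remainder = divmod(9, 4)
neg_quotient, neg_remainder = divmod(-9, 4)

print(abs_int, abs_float, abs_complex)
print(quotient, remainder, neg_quotient, neg_remainder)
```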

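- Python: main pandas methods and common attributes (Part 1)

墨码

notes, key points, python, data analysis, Pandas

Pandas basic types; the Series type; creating a Series; custom Series indexes; reading a Series. Pandas: pandas is a powerful data analysis and statistics package, popular for its practicality and for how convenient it makes working with data. Today let's learn how to use it. Before that, a basic understanding of numpy is recommended. Basic types: when it comes to data types, the ones most people know are probably int, str, bool, and so on, or Python's list, tuple...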

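- Machine learning: Random Forest, an ensemble learning method

慕婉0307

machine learning, ensemble learning, machine learning, random forest

I. Overview of ensemble learning and random forests. 1.1 What is ensemble learning? Ensemble learning is a powerful paradigm in machine learning that accomplishes a learning task by building and combining multiple base learners. Its main idea is captured by the proverb "three cobblers together match Zhuge Liang" (many heads are better than one): combining multiple weak learners yields a strong learner. Ensemble methods fall into two main categories. Bagging (Bootstrap Aggregating): trains multiple base learners in parallel, then by voting...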

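- [Daily Code] 014 Common Python libraries: Pandas

Contents. Data structures. pandas.Series: a one-dimensional array; like an array, but the index can be of any type, not just integers. pandas.DataFrame: a two-dimensional tabular data structure, similar to an Excel sheet; each column can have a different data type. Reading and writing data. Reading: pd.read_csv(): reads a CSV file; pd.read_excel(): reads an Excel file; pd.read_sql(): reads data from a database. Writing: DataFrame.to_csv()...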

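- A complete example Python project structure

慧一居士

Python, python

Below is a typical complete Python project structure, suitable for a medium-sized application or library. The structure follows best practices and is modular, maintainable, and extensible. Example project structure: my_project/ ├── src/ # source code directory │ ├── __init__.py # marks a Python package │ ├── main.py # main program entry point (optional) │ ├── core/ # core functionality modules │ │ ├── __init__.py │ │ ├── app.py │ │ └── utils.py │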

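- How to use Tab auto-completion in Python: setting up Tab completion for the Python command line

weixin_39961636

how to use python tab auto-completion

On Mac/Windows the following modules need to be installed: pip install pyreadline; pip install rlcompleter; pip install readline. Note that pyreadline must be installed first for readline to install successfully. The code on Mac is as follows: >>> import rlcompleter >>> import readline >>> import os >>> import sys >>> >>> if 'libedit' in r...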

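- Python Tab auto-completion: adding Tab completion to the Python command line

weixin_39692253

python tab auto-completion

When using Linux commands we habitually press Tab, and we can get similar behavior in Python. The code is as follows: $ cat startup.py #!/usr/bin/python # python startup file import sys import readline import rlcompleter import atexit import os # tab completion readline.parse_and_bind(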

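- Python Tab auto-completion not responding: adding Tab completion to the Python command line on CentOS

weixin_39741459

python tab auto-completion not responding

Is there really no way to use Tab auto-completion at the python prompt? Of course there is; it just takes a little manual configuration. Operating system: CentOS release 6.4 x86_32. Software version: Python 2.6.6. Here is how to configure it: 1. Write a script that implements Tab auto-completion. Newcomers may ask what to do if they cannot write one: a search engine can help, with the keywords "python tab auto-completion"...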

- Adding Tab auto-completion to the Python command line

weixin_30600503

python

1. Write a Tab auto-completion script named tab.py: #!/usr/bin/python # python tab complete import sys import readline import rlcompleter import atexit import os # tab completion readline.parse_and_bind('tab: complete') # history file histfile = os.pat

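- [Python] edge-tts: convenient speech synthesis

宅男很神经

python, programming language

Chapter 1: Getting to know edge-tts and starting out with speech synthesis. 1.1 Overview of text-to-speech (TTS) technology. Text-to-Speech (TTS), as the name suggests, is a technology that converts input text into audible speech waveforms. It is a key component of human-computer voice interaction, enabling computers to "speak" like people. 1.1.1 A brief history of TTS and why it matters. Research on TTS dates back to the middle of the last century. Early TTS systems were usually based on parametric or concatenative synthesis and sounded mechanical and unnatural. Para...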

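- Only after learning to program did I realize the barista was an "AI robot"

IT-博通哥

Python, python, programming language

Based on the dialogue in the video of Lao Luo ordering coffee ("Give me a medium", "This is a large; medium is our smallest size"), I simulated the scene in Python: class Starbucks: def __init__(self): self.cup_sizes = {"medium": "Tall", "large": "Grande", "extra large": "Venti"} def order_coffee(self, size): if size == "medium": print("Barista: This is...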

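- Binary search (search and sorting algorithms)

周凡杨

java, binary search, sorting algorithms, half-interval

I. Concept. Binary search, also called half-interval search, has the advantages of few comparisons, fast lookup, and good average performance. Its drawbacks are that the table being searched must be sorted and that insertion and deletion are difficult. It is therefore suited to sorted lists that change rarely but are searched frequently. First, assume the elements are in ascending order. Compare the key of the record in the middle of the table with the search key. If the two are equal, the search succeeds. Otherwise, use the middle record to split the table into a front and a back sub-table; if the middle record's key is greater than the search key, then further...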
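The procedure described above can be sketched in a few lines. This is a minimal iterative version under the stated assumption of an ascending list; the function name and sample data are illustrative, and returning -1 for a missing key is a convention chosen for this example.

```python
def binary_search(items, target):
    """Return the index of target in the ascending list items, or -1 if absent."""
    low, high = 0, len(items) - 1
    while low <= high:
        mid = (low + high) // 2
        if items[mid] == target:
            return mid          # keys are equal: search succeeds
        if items[mid] > target:
            high = mid - 1      # continue in the front sub-table
        else:
            low = mid + 1       # continue in the back sub-table
    return -1


sorted_values = [3, 8, 15, 21, 34, 55]
found_at = binary_search(sorted_values, 21)
missing = binary_search(sorted_values, 4)
print(found_at, missing)
```

Each iteration halves the remaining range, which is where the small number of comparisons comes from.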

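- BigDecimal in Java

bijian1013

java, BigDecimal

During project development we ran into a loss-of-precision problem. After some research we solved it with BigDecimal, and found that the approach in the following article on solving problems with BigDecimal is well worth studying, so it is reposted here.

Original article: http://blog.csdn.net/ugg/article/de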

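- The shell echo command in detail

daizj

echo, shell

The shell echo command

The shell's echo command is similar to PHP's echo: both are used to output strings. Command format:

echo string

You can use echo for more complex output formatting. 1. Displaying a plain string:

echo "It is a test"

The double quotes here can be omitted entirely; the following command has the same effect as the example above:

echo It is a test 2. Displaying escaped...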

- Simple Oracle DBA operations

周凡杨

oracle dba sql

-- SQL statements with the most executions

select sql_text,executions from (

select sql_text,executions from v$sqlarea order by executions desc

) where rownum<81;


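- Drawing and repainting

朱辉辉33

games

I first encountered repainting when writing a small Gomoku (five-in-a-row) game. The board in the game was drawn with lines, and those lines were not part of the system's built-in repaint, so the board was not redrawn when the window was moved. We therefore need to override the system's paint method.

When overriding the system's paint method, be sure to call the parent class's paint method, that is, add super.paint(g). If the parent's paint method is not called, the override replaces it, and since the parent's paint method is what draws the canvas, this causes our...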

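- A first experience with threads

西蜀石兰

threads

I have always felt that multithreading is a watershed in learning Java: you have only really got started once you understand it.

I previously read the multithreading chapter of Thinking in Java (《编程思想》) and was completely lost. I knew which methods the thread class had, but still did not know what a thread actually is. The books all say that a thread is a part of a process and shares the process's resources, but what does that have to do with multithreaded programming?

A thread is really just a user-defined task. Don't over-emphasize a thread's other properties and overlook this most basic one.

You can define your own task in the thread class's run() method, just like normal Ja...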

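- Configuring passwordless login between Linux cluster nodes

林鹤霄

linux

Configuring passwordless SSH login

1. Generate the private and public keys: ssh-keygen -t rsa

2. When prompted for input, enter nothing. After pressing Enter three times, two new files appear in the .ssh folder under ~: id_rsa and id_rsa.pub

id_rsa is the private key and id_rsa.pub is the public key. Data encrypted with the public key can only be decrypted with the private key c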

- mysql : Lock wait timeout exceeded; try restarting transaction

aigo

mysql

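Original article: http://www.cnblogs.com/freeliver54/archive/2010/09/30/1839042.html

The cause is that when you use InnoDB tables,

the default parameter innodb_lock_wait_timeout sets the lock wait time to 50 s,

and some lock waits exceeded that time, hence the error.

You can increase this time, or optimize the storage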

- Socket programming: a basic chat implementation

alleni123

socket

public class Server

{

// Stores all connected clients

private List<ServerThread> clients;

public static void main(String[] args)

{

Server s = new Server();

s.startServer(9988);

}

publi

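- The multithreaded listener/event pattern (a simple example)

百合不是茶

threads, listener pattern

The event listener pattern in multithreading

The event listener pattern is often used together with multithreading. In a multithreaded program, how do I know what my thread is currently doing? The event listener pattern can tell me.

Creating a multithreaded event listener; the approach:

1. Create and start the thread, and set a flag at the point where the thread is created

2. Create a queue...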

- The Spring InitializingBean interface

bijian1013

java, spring

The source of Spring's transactional TransactionTemplate is as follows:

public class TransactionTemplate extends DefaultTransactionDefinition implements TransactionOperations, InitializingBean{

...

}

TransactionTemplate extends DefaultT

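- Oracle: finding which users have been granted privileges on a table

bijian1013

oracle, database, privileges

SQL for finding which users a table's privileges have been granted to in Oracle, kept here for future reference.

select t.table_name as "Table name",

t.grantee as "Grantee",

t.owner as "Object owner"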

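- [Struts2, Part 5] Struts2 parameter passing

bit1129

struts2

Three cases of parameter passing in Struts2

1. Binding request parameters to the Action's instance fields

2. Passing values from the Action to a forwarded view

3. Passing values from the Action to a redirected view

I. Binding request parameters to the Action's instance fields, and passing values from the Action to a forwarded view

Struts can automatically bind request parameters from the request URL, or parameters from a submitted form, to the instance fields defined on the Action. The binding rules use the OGNL expression language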

- [Kafka, Part 14] About auto.offset.reset [Q/A]

bit1129

kafka

I got several questions about auto.offset.reset. This configuration parameter governs how a consumer reads messages from Kafka when there is no initial offset in ZooKeeper or

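- nginx gzip compression configuration

ronin47

nginx, gzip, compression example

As nginx has grown, more and more websites use it, so optimizing nginx has become increasingly important. Today let's look at how nginx's gzip compression actually works.

gzip (GNU zip) is a compression technology. After gzip compression, a page can shrink to 30% of its original size or even less, so that us...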

- java-13: given a singly linked list, output the k-th node from the end

bylijinnan

java

two cursors.

Make the first cursor go K steps first.

/*

* Problem 13: given a singly linked list, output the k-th node from the end

*/

public void displayKthItemsBackWard(ListNode head,int k){

ListNode p1=head,p2=head;
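The two-cursor idea noted above (advance the first cursor k steps, then move both until the first runs off the end) can be sketched in Python. This is an illustrative version, not the truncated Java method; the `ListNode` fields and the `None` return for an out-of-range k are assumptions.

```python
class ListNode:
    def __init__(self, value, next=None):
        self.value = value
        self.next = next


def kth_from_end(head, k):
    """Return the k-th node from the end (k = 1 is the last node), or None."""
    if k <= 0:
        return None
    lead = head
    # Move the first cursor k steps ahead.
    for _ in range(k):
        if lead is None:
            return None  # the list has fewer than k nodes
        lead = lead.next
    trail = head
    # Advance both cursors until the first one falls off the end.
    while lead is not None:
        lead = lead.next
        trail = trail.next
    return trail


# Build the list 1 -> 2 -> 3 -> 4 -> 5.
head = None
for value in (5, 4, 3, 2, 1):
    head = ListNode(value, head)

second_last = kth_from_end(head, 2)
print(second_last.value)
```

The list is traversed once and no length count is needed, which is the appeal of the two-cursor approach.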

- Studying the Spring source: JdbcTemplate queryForObject

bylijinnan

java, spring

JdbcTemplate has two queryForObject methods that are easy to confuse:

1.

Object queryForObject(String sql, Object[] args, Class requiredType)

2.

Object queryForObject(String sql, Object[] args, RowMapper rowMapper)

The first method only queries...

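- [Ice Age] What kind of technology do we need in an ice age?

comsci

technology

Looking at the weather over in the United States... I have a feeling... are we about to enter a Little Ice Age?

If so, our outdoor activities will surely run into many problems during a Little Ice Age... we will spend a lot of time indoors... how to stay indoors without getting bored... how to keep the room warm with the least electricity... all of this requires technology...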

- Detecting the browser type with JS

cuityang

js, browser

Get the iPhone or APK download URL depending on the browser

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8" content="text/html"/>

<meta name=

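- C# SOCKS5 explained (repost)

dalan_123

socket, C#

http://www.cnblogs.com/zhujiechang/archive/2008/10/21/1316308.html This article mainly covers using .NET to implement client communication over the SOCKS5 proxy protocol; SOCKS4 is implemented similarly. Note that this is not about implementing a proxy server in C#, because a proxy server has to implement many protocols, which is a headache, and there are already plenty of ready-made proxy servers available that also perform well,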

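- Ops: a roundup of CentOS problems

dcj3sjt126com

cloud hosts

I. Why an sh script fails to run

There are only two reasons an sh script fails to run:

1. Insufficient permissions

2. Paths inside the sh script are not written out in full.

II. Resolving "You have new mail in /var/spool/mail/root"

Edit the configuration file /usr/share/logwatch/default.conf/logwatch.conf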

MailTo =

MailFrom

III. Querying the number of connections

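- Notes on preventing injection attacks in Yii

dcj3sjt126com

sql, web security, yii

If a site's forms have injection vulnerabilities, all user input must be filtered and checked, using regular expressions or direct character checks. Most fields should allow only letters and digits and reject all other characters. For forms with complex content, HTML and script symbols should be escaped, especially <, >, ', ", and &. Here is an escape reference table: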

http://blog.csdn.net/xinzhu1990/articl

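- Introduction to MongoDB [1]

eksliang

mongodb, introduction to MongoDB

Introduction to MongoDB

When reposting, please cite the source: http://eksliang.iteye.com/blog/2173288 1.1 Easy to use

MongoDB is a document-oriented database, not a relational one. Compared with relational databases, a document-oriented database no longer has the concept of rows; instead it uses a more flexible "document" model.

In addition, ...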

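- ZooKeeper on Windows: introductory installation and testing

greemranqq

zookeeper, installation, distributed

I. Preface

Here is some of my understanding of ZooKeeper: ZooKeeper is a management tool for storing service registration information. Admittedly that is quite abstract, so let's take an example.

Example 1:

Suppose I am the owner of a KTV business with five KTV venues; I certainly need to keep a constant watch on...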

- Why use transactions in Spring (2: annotation-based implementation)

ihuning

spring

Annotation-based Spring transactions

1. Dependencies:

1.1 Spring packages:

spring-beans-4.0.0.RELEASE.jar

spring-context-4.0.0.

- iOS App Launch Option

啸笑天

option

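When an iOS app launches, application:didFinishLaunchingWithOptions: is always called. Its second parameter, launchOptions, is an NSDictionary object that stores the reason the app was launched.

For the possible keys in launchOptions, see the Launch Options Keys section of the UIApplication Class Reference.

1. If the user directly...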

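- The difference between the JDK and the JRE

macroli

java, jvm, jdk

Put simply, the JDK is an SDK for developers that provides both the Java development environment and the runtime environment. SDK stands for Software Development Kit, generally meaning a software development package that can include libraries, compilers, and so on.

JDK stands for Java Development Kit. JRE stands for Java Runtime Environment: it is the Java runtime, intended for users of Java programs rather than developers. If you install the JDK, you will find that your...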

- Updates were rejected because the tip of your current branch is behind

qiaolevip

never stop learning, a little progress every day, seeing the world in all its variety, git

$ git push joe prod-2295-1

To

[email protected]:joe.le/dr-frontend.git

! [rejected] prod-2295-1 -> prod-2295-1 (non-fast-forward)

error: failed to push some refs to '

[email protected]

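- [Learning Hive Together] Part 14: Hive metadata table structures in detail

superlxw1234

hive, hive metadata structure

Keywords: Hive metadata, Hive metadata table structure

As introduced earlier in "[Learning Hive Together] Part 1: Hive overview, what is Hive", Hive maintains its own set of metadata. When a user runs an HQL query, Hive first has to use the metadata to translate the HQL into MapReduce for execution.

This article introduces some of the important tables in Hive's metadata and what they are for, using Hive 0.13 as the example.

At the very end of the article, an example is used to give a complete picture of...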

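- Spring 3.2.14, 4.1.7, and 4.2.RC2 released

wiselyman

Spring 3

Spring 3.2.14, 4.1.7, and 4.2.RC2 were released on June 30.

Spring 3.2.14 is a maintenance release (the maintenance period ends on 2016-12-31), and further maintenance releases will follow as requirements and bugs dictate. The Spring team now strongly recommends upgrading the Spring Framework to 4.1.7 or the upcoming 4.2.

Spring 4.1.7 mainly includes the following updates.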