[Project 2: Honeycomb Detection] II. Model Improvement: YOLOv5s-ShuffleNetV2

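Contents

- Preface
- I. Honeycomb Dataset
- II. Implementing YOLOv5s-ShuffleNetV2
  - 2.1 Backbone
    - 2.1.1 Replacing Focus
    - 2.1.2 Replacing all Conv+C3 with Shuffle_Block
    - 2.1.3 Removing SPP
  - 2.2 Head
    - 2.2.1 Equal input and output channels in every layer
    - 2.2.2 Replacing all C3 blocks with DWConv
    - 2.2.3 Changing the two Concats in PAN to ADD
  - 2.3 Summary
- III. Experimental Results
- Reference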

Preface

I'm about to start job hunting, so I want to write up a few small projects I have worked on.

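I have already written up my first project, the xxx pest and disease detection project. GitHub source code: HuKai97/FFSSD-ResNet. CSDN write-ups:

- [Project 1: xxx Pest and Disease Detection] 1. SSD Principles and Source Code Analysis

- [Project 1: xxx Pest and Disease Detection] 2. Network Architecture Improvement Attempts: Resnet50, SE, CBAM, Feature Fusion

- [Project 1: xxx Pest and Disease Detection] 3. Loss Function Attempt: Focal loss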

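This post covers my second project, which is also a lab project. This time the improvements are built on top of YOLOv5. Other write-ups in the same project series:

- [Project 2: Honeycomb Detection] I. A Walkthrough of Classic Convolutional Networks: InceptionV1-V4, ResNetV1-V2, MobileNetV1-V3, ShuffleNetV1-V2, ResNeXt, Xception

If you are not familiar with YOLOv5, you can start with my YOLOv5 source code walkthrough on CSDN: [YOLOV5-5.x Source Code Walkthrough] Overall Project File Navigation. My annotated YOLOv5 source code is also open-sourced on GitHub: HuKai97/yolov5-5.x-annotations. Stars are welcome!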

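My dataset is relatively simple: only a single class, honeycomb, needs to be detected. As a result, the original YOLOv5 baseline already reaches 96% mAP. This work therefore focuses on making YOLOv5 lightweight. The goal is to raise speed as much as possible without letting mAP drop too much, so the model can be deployed on edge devices such as a Raspberry Pi.

Improvement approach: borrow the lightweight design ideas of ShuffleNetV2 to modify the YOLOv5s network structure. This makes the network better suited to datasets with a single class or only a few classes.

This second post explains how YOLOv5 was modified and the reasoning behind each step.

All code has been uploaded to GitHub: HuKai97/YOLOv5-ShuffleNetv2. Stars are welcome!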

I. Honeycomb Dataset

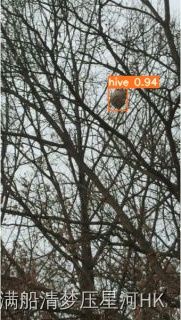

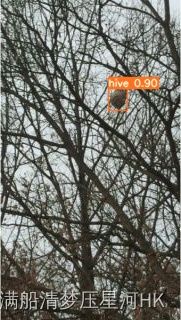

蜂巢数据集是实验室的数据集,总共有1754张原图,划分为训练集:测试集=8:2。蜂巢图片样例:
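The post does not say how the 8:2 split was produced. The following is a minimal sketch that assumes a simple random split over image files. With 1754 images, the arithmetic gives 1403 training and 351 test images. `split_dataset` and the file names are hypothetical placeholders.

```python
import random


def split_dataset(paths, train_ratio=0.8, seed=0):
    """Hypothetical helper: shuffle image paths and split them train:test = 8:2."""
    paths = list(paths)
    random.Random(seed).shuffle(paths)  # fixed seed so the split is reproducible
    n_train = int(len(paths) * train_ratio)  # int() truncates 1403.2 to 1403
    return paths[:n_train], paths[n_train:]


images = [f"images/{i:04d}.jpg" for i in range(1754)]  # placeholder file names
train, test = split_dataset(images)
print(len(train), len(test))  # 1403 351
```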

II. Implementing YOLOv5s-ShuffleNetV2

2.1 Backbone

YAML configuration:

```yaml
backbone:
  # [from, number, module, args]
  [[-1, 1, conv_bn_relu_maxpool, [32]],  # 0-P2/4
   [-1, 1, Shuffle_Block, [116, 2]],     # 1-P3/8
   [-1, 3, Shuffle_Block, [116, 1]],     # 2
   [-1, 1, Shuffle_Block, [232, 2]],     # 3-P4/16
   [-1, 7, Shuffle_Block, [232, 1]],     # 4
   [-1, 1, Shuffle_Block, [464, 2]],     # 5-P5/32
   [-1, 1, Shuffle_Block, [464, 1]],     # 6
  ]
```
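To see what this config implies, the sketch below walks the backbone entries for a 320x320 input. For each layer it tracks the channels, feature-map size and total stride. It assumes the channel numbers are used as written, with no width multiplier. It reads only each module's stride argument. `walk_backbone` is a hypothetical helper, not part of YOLOv5.

```python
# Backbone entries copied from the YAML above; modules are plain strings here.
BACKBONE = [
    # (from, number, module, args)
    (-1, 1, "conv_bn_relu_maxpool", [32]),
    (-1, 1, "Shuffle_Block", [116, 2]),
    (-1, 3, "Shuffle_Block", [116, 1]),
    (-1, 1, "Shuffle_Block", [232, 2]),
    (-1, 7, "Shuffle_Block", [232, 1]),
    (-1, 1, "Shuffle_Block", [464, 2]),
    (-1, 1, "Shuffle_Block", [464, 1]),
]


def walk_backbone(img_size=320):
    """Hypothetical helper: (channels, feature-map side, total stride) per layer."""
    stride = 1
    shapes = []
    for _, _, module, args in BACKBONE:
        if module == "conv_bn_relu_maxpool":
            stride *= 4  # stride-2 conv followed by a stride-2 max-pool
        else:
            stride *= args[1]  # Shuffle_Block stride; stride-1 repeats keep the size
        shapes.append((args[0], img_size // stride, stride))
    return shapes


for i, (c, hw, s) in enumerate(walk_backbone()):
    print(f"layer {i}: {c:>3} channels, {hw}x{hw}, stride {s}")
```

Layers 2, 4 and 6 (strides 8, 16 and 32) are the P3/P4/P5 features that the head picks up later.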

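2.1.1 Replacing Focus

The stem of the original YOLOv5s-5.0 is a Focus slicing operation, while v6.0 uses a 6x6 Conv. Following v6.0, Focus is replaced here as well, but with a single 3x3 convolution. Our task is not complex, and a 3x3 kernel reduces the parameter count. In the code below, the stride-2 3x3 conv is followed by BN, ReLU and a stride-2 3x3 max-pool, so the stem downsamples by 4 in total.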

```python
import torch.nn as nn


class conv_bn_relu_maxpool(nn.Module):
    def __init__(self, c1, c2):  # ch_in, ch_out
        super(conv_bn_relu_maxpool, self).__init__()
        # 3x3 stride-2 conv + BN + ReLU: halves the resolution
        self.conv = nn.Sequential(
            nn.Conv2d(c1, c2, kernel_size=3, stride=2, padding=1, bias=False),
            nn.BatchNorm2d(c2),
            nn.ReLU(inplace=True),
        )
        # 3x3 stride-2 max-pool: halves it again (overall stride 4, i.e. P2/4)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

    def forward(self, x):
        return self.maxpool(self.conv(x))
```

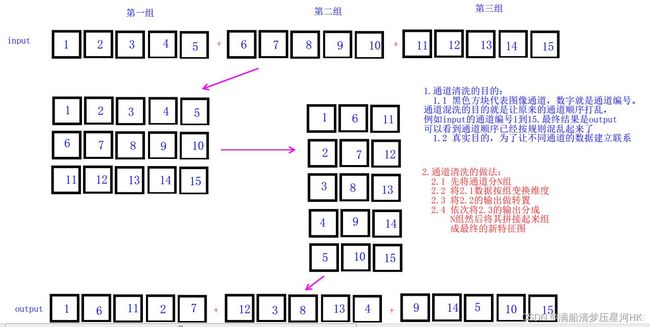

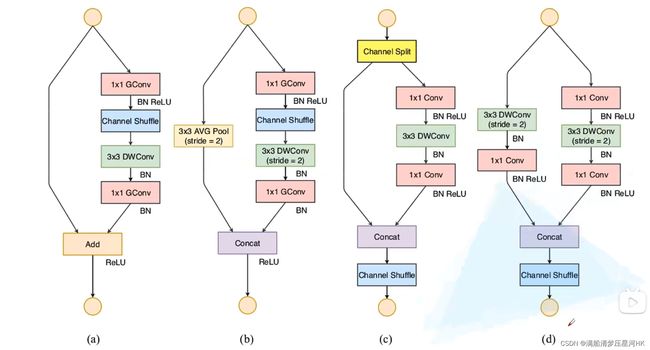
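A quick weight count shows the parameter saving of the new stem. This sketch makes three assumptions: 32 output channels (the YOLOv5s stem width), bias-free convolutions, and no BatchNorm parameters. `conv_weights` is a hypothetical helper. Focus first slices the image into 4 sub-images, giving 12 channels at half resolution. Its 3x3 conv over those 12 channels therefore has exactly as many weights as a 6x6 conv over the raw 3 channels.

```python
def conv_weights(c_in, c_out, k, groups=1):
    """Hypothetical helper: weight count of a bias-free Conv2d (BatchNorm excluded)."""
    return (c_in // groups) * c_out * k * k


stem_3x3 = conv_weights(3, 32, 3)     # conv_bn_relu_maxpool stem used here
stem_6x6 = conv_weights(3, 32, 6)     # v6.0-style 6x6 stem (32 outputs assumed)
focus_conv = conv_weights(12, 32, 3)  # Focus: 4 slices -> 12 channels, then 3x3 conv

print(stem_3x3, stem_6x6, focus_conv)  # 864 3456 3456
```

The 3x3 stem has a quarter of the weights of either alternative. The max-pool after it also hands layer 1 a map that is already at stride 4.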

2.1.2 Replacing all Conv+C3 with Shuffle_Block

```python
import torch


def channel_shuffle(x, groups):
    batchsize, num_channels, height, width = x.size()  # bs c h w
    channels_per_group = num_channels // groups
    # reshape: [bs, c, h, w] -> [bs, groups, channels_per_group, h, w]
    x = x.view(batchsize, groups, channels_per_group, height, width)
    # channel shuffle: swap the group and per-group axes -> [bs, channels_per_group, groups, h, w]
    x = torch.transpose(x, 1, 2).contiguous()
    # flatten back to [bs, c, h, w]
    x = x.view(batchsize, -1, height, width)
    return x
```
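`channel_shuffle` only permutes channels. The pure-Python sketch below mirrors its reshape/transpose/flatten steps on channel indices rather than tensors. `channel_shuffle_order` is a hypothetical helper. With `groups=2`, the output channels alternate between the first and second halves of the input.

```python
def channel_shuffle_order(num_channels, groups):
    """Hypothetical helper: input channel index at each output position of channel_shuffle()."""
    per_group = num_channels // groups
    # view as [groups, per_group] ...
    grid = [[g * per_group + i for i in range(per_group)] for g in range(groups)]
    # ... then transpose to [per_group, groups] and flatten row by row
    return [grid[g][i] for i in range(per_group) for g in range(groups)]


print(channel_shuffle_order(6, 2))  # [0, 3, 1, 4, 2, 5]
```

In `Shuffle_Block`, the first half of the concatenated output comes from one branch and the second half from the other. After this shuffle, the next block's `chunk(2)` therefore receives channels from both branches.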

```python
import torch
import torch.nn as nn


class Shuffle_Block(nn.Module):
    def __init__(self, inp, oup, stride):
        super(Shuffle_Block, self).__init__()

        if not (1 <= stride <= 3):
            raise ValueError('illegal stride value')
        self.stride = stride

        branch_features = oup // 2  # channels per branch (channel split into 2 feature maps)
        # with stride 1 the input is split in half, so inp must equal oup
        assert (self.stride != 1) or (inp == branch_features << 1)

        # stride=2 (Fig. d): left branch = 3x3 DW Conv + 1x1 Conv
        if self.stride > 1:
            self.branch1 = nn.Sequential(
                self.depthwise_conv(inp, inp, kernel_size=3, stride=self.stride, padding=1),
                nn.BatchNorm2d(inp),
                nn.Conv2d(inp, branch_features, kernel_size=1, stride=1, padding=0, bias=False),
                nn.BatchNorm2d(branch_features),
                nn.ReLU(inplace=True),
            )

        # right branch = 1x1 Conv + 3x3 DW Conv + 1x1 Conv
        self.branch2 = nn.Sequential(
            nn.Conv2d(inp if (self.stride > 1) else branch_features,
                      branch_features, kernel_size=1, stride=1, padding=0, bias=False),
            nn.BatchNorm2d(branch_features),
            nn.ReLU(inplace=True),
            self.depthwise_conv(branch_features, branch_features, kernel_size=3, stride=self.stride, padding=1),
            nn.BatchNorm2d(branch_features),
            nn.Conv2d(branch_features, branch_features, kernel_size=1, stride=1, padding=0, bias=False),
            nn.BatchNorm2d(branch_features),
            nn.ReLU(inplace=True),
        )

    @staticmethod
    def depthwise_conv(i, o, kernel_size, stride=1, padding=0, bias=False):
        return nn.Conv2d(i, o, kernel_size, stride, padding, bias=bias, groups=i)

    def forward(self, x):
        # x/out: [bs, c, h, w]
        if self.stride == 1:
            x1, x2 = x.chunk(2, dim=1)  # channel split into 2 feature maps
            out = torch.cat((x1, self.branch2(x2)), dim=1)
        else:
            out = torch.cat((self.branch1(x), self.branch2(x)), dim=1)
        out = channel_shuffle(out, 2)  # channel_shuffle() is defined above
        return out
```
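To show how cheap a Shuffle_Block is, the sketch below counts the conv weights each branch creates, excluding BatchNorm, and compares them with a single dense 3x3 conv of the same width. `shuffle_block_weights` is a hypothetical helper that mirrors the layer list in the code above. It is not the comparison the author ran against Conv+C3.

```python
def shuffle_block_weights(inp, oup, stride):
    """Hypothetical helper: conv weights in Shuffle_Block (BatchNorm excluded)."""
    bf = oup // 2  # branch_features
    total = 0
    if stride > 1:
        total += inp * 9 + inp * bf  # branch1: 3x3 depthwise + 1x1
        branch2_in = inp
    else:
        branch2_in = bf  # stride 1: branch2 only sees half of the channels
    total += branch2_in * bf + bf * 9 + bf * bf  # branch2: 1x1 + 3x3 depthwise + 1x1
    return total


plain_3x3 = 116 * 116 * 9  # one dense 3x3 conv, 116 -> 116 channels
print(shuffle_block_weights(116, 116, 1), shuffle_block_weights(32, 116, 2), plain_3x3)
```

A stride-1 block at 116 channels needs 7250 weights, versus 121104 for one dense 3x3 conv. Most of the saving comes from processing only half the channels and using depthwise 3x3 kernels.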

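2.1.3 Removing SPP

The SPP block and the C3 block after it were removed, because SPP's parallel max-pool branches slow down inference (see G3 below).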

2.2 Head

```yaml
head:
  [[-1, 1, Conv, [96, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 4], 1, Concat, [1]],  # cat backbone P4
   [-1, 1, DWConvblock, [96, 3, 1]],  # 10
   [-1, 1, Conv, [96, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 2], 1, Concat, [1]],  # cat backbone P3
   [-1, 1, DWConvblock, [96, 3, 1]],  # 14 (P3/8-small)
   [-1, 1, DWConvblock, [96, 3, 2]],
   [[-1, 11], 1, ADD, [1]],  # add head P4
   [-1, 1, DWConvblock, [96, 3, 1]],  # 17 (P4/16-medium)
   [-1, 1, DWConvblock, [96, 3, 2]],
   [[-1, 7], 1, ADD, [1]],  # add head P5
   [-1, 1, DWConvblock, [96, 3, 1]],  # 20 (P5/32-large)
   [[14, 17, 20], 1, Detect, [nc, anchors]],  # Detect(P3, P4, P5)
  ]
```
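The head config can be checked the same way. The sketch below replays the head entries on (channels, stride) pairs and takes the backbone outputs for a 320x320 input as assumed inputs. It asserts that both ADD layers receive identical shapes. It also shows that the two Concat layers with the backbone still produce 328 and 212 channels before the next DWConvblock maps them back to 96. `walk_head` and the tuple encoding are hypothetical.

```python
# (channels, stride) of backbone layers 0-6, as implied by the backbone YAML.
BACKBONE_OUT = [(32, 4), (116, 8), (116, 8), (232, 16), (232, 16), (464, 32), (464, 32)]

HEAD = [
    # (from, module, arg)
    (-1, "Conv", 96),               # 7: 1x1 conv
    (-1, "Upsample", None),         # 8
    ([-1, 4], "Concat", None),      # 9: cat backbone P4
    (-1, "DWConvblock", (96, 1)),   # 10
    (-1, "Conv", 96),               # 11
    (-1, "Upsample", None),         # 12
    ([-1, 2], "Concat", None),      # 13: cat backbone P3
    (-1, "DWConvblock", (96, 1)),   # 14 (P3/8)
    (-1, "DWConvblock", (96, 2)),   # 15
    ([-1, 11], "ADD", None),        # 16: add head P4
    (-1, "DWConvblock", (96, 1)),   # 17 (P4/16)
    (-1, "DWConvblock", (96, 2)),   # 18
    ([-1, 7], "ADD", None),         # 19: add head P5
    (-1, "DWConvblock", (96, 1)),   # 20 (P5/32)
]


def walk_head():
    """Hypothetical helper: (channels, stride) for every layer index 0-20."""
    layers = list(BACKBONE_OUT)
    for frm, module, arg in HEAD:
        idx = len(layers)
        froms = frm if isinstance(frm, list) else [frm]
        inputs = [layers[f if f >= 0 else idx + f] for f in froms]
        c, s = inputs[0]
        if module == "Conv":  # 1x1, stride 1: only the width changes
            c = arg
        elif module == "Upsample":  # nearest, scale factor 2
            s //= 2
        elif module == "Concat":  # widths add up, strides must match
            assert len({st for _, st in inputs}) == 1, "Concat needs equal spatial size"
            c = sum(ch for ch, _ in inputs)
        elif module == "ADD":  # element-wise sum: shapes must be identical
            assert len(set(inputs)) == 1, "ADD needs identical shapes"
        elif module == "DWConvblock":
            c, s = arg[0], s * arg[1]
        layers.append((c, s))
    return layers


layers = walk_head()
for i in (9, 13, 14, 17, 20):
    print(i, layers[i])
```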

2.2.1 Equal input and output channels in every layer

2.2.2 Replacing all C3 blocks with DWConv

```python
import torch.nn as nn
import torch.nn.functional as F


class DWConvblock(nn.Module):
    def __init__(self, in_channels, out_channels, k, s):
        super(DWConvblock, self).__init__()
        self.p = k // 2  # "same" padding for odd k
        # depthwise conv: one kxk filter per input channel (groups=in_channels)
        self.conv1 = nn.Conv2d(in_channels, in_channels, kernel_size=k, stride=s,
                               padding=self.p, groups=in_channels, bias=False)
        self.bn1 = nn.BatchNorm2d(in_channels)
        # pointwise conv: 1x1 conv that mixes channels and sets the output width
        self.conv2 = nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=1, padding=0, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channels)

    def forward(self, x):
        x = F.relu(self.bn1(self.conv1(x)))
        x = F.relu(self.bn2(self.conv2(x)))
        return x
```
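DWConvblock saves weights by factorizing a dense 3x3 conv into a per-channel 3x3 conv plus a 1x1 conv. A weight count at the head's width of 96 channels, BatchNorm excluded, makes this concrete. The comparison is against a single plain 3x3 conv, not a full C3 block, and the helper names are hypothetical.

```python
def dwconvblock_weights(c_in, c_out, k=3):
    """Hypothetical helper: depthwise kxk (groups=c_in) + pointwise 1x1, BN excluded."""
    depthwise = c_in * k * k
    pointwise = c_in * c_out
    return depthwise + pointwise


def standard_conv_weights(c_in, c_out, k=3):
    """Hypothetical helper: a plain dense kxk convolution, BN excluded."""
    return c_in * c_out * k * k


dw = dwconvblock_weights(96, 96)      # 864 + 9216
std = standard_conv_weights(96, 96)
print(dw, std, round(std / dw, 1))    # 10080 82944 8.2
```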

2.2.3 Changing the two Concats in PAN to ADD
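The cost argument for ADD can be sketched at the P4 level. This sketch assumes a 320x320 input, which gives a 20x20 P4 map. It counts only the multiply-accumulates of the DWConvblock that follows. A hypothetical Concat of two 96-channel maps would double that block's input width to 192. ADD keeps the width at 96 and itself costs one addition per output element. `dwconvblock_macs` is a hypothetical helper.

```python
def dwconvblock_macs(c_in, c_out, hw, k=3):
    """Hypothetical helper: multiply-accumulates of a stride-1 DWConvblock on an hw x hw map."""
    return (c_in * k * k + c_in * c_out) * hw * hw


hw = 320 // 16                                    # P4 side length for a 320x320 input
after_add = dwconvblock_macs(96, 96, hw)          # ADD keeps 96 channels
after_concat = dwconvblock_macs(96 + 96, 96, hw)  # Concat would give 192 channels
add_cost = 96 * hw * hw                           # one addition per output element
print(after_add, after_concat, add_cost)          # 4032000 8064000 38400
```

The extra element-wise addition (G4) is about 1% of the convolution cost it avoids.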

2.3 Summary

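The four guidelines for designing lightweight networks proposed in ShuffleNetV2:

- G1: keep the input and output channel counts of a convolution as equal as possible;
- G2: avoid group convolution, or keep the number of groups g small;
- G3: keep the number of network branches small and avoid parallel structures;
- G4: minimize element-wise operations such as ReLU and ADD (the ShuffleNetV2 paper also counts depthwise convolutions as element-wise, because of their high MAC/FLOPs ratio);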
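The ShuffleNetV2 paper backs G1 with a short derivation. Take a 1x1 conv on an h x w map with c1 input and c2 output channels. Its memory access cost is MAC = hw(c1 + c2) + c1c2. For a fixed FLOPs budget B = hwc1c2, MAC is smallest when c1 = c2. The sketch below checks this numerically for three channel pairs with the same product. The 20x20 map size is an arbitrary choice.

```python
def conv1x1_mac(h, w, c1, c2):
    """MAC of a 1x1 conv as in ShuffleNetV2's G1: input + output feature maps + weights."""
    return h * w * (c1 + c2) + c1 * c2


h = w = 20
for c1, c2 in [(96, 96), (48, 192), (32, 288)]:
    flops = h * w * c1 * c2  # identical for all three pairs (c1 * c2 = 9216)
    print(c1, c2, flops, conv1x1_mac(h, w, c1, c2))
```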

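Summary of the YOLOv5s-ShuffleNetV2 modifications:

- Replace the backbone's Focus with a single 3x3 Conv (c=32). YOLOv5 v6.0 already replaced Focus with a 6x6 Conv; a 3x3 Conv is used here to cut parameters further;

- Replace all Conv and C3 blocks in the backbone with Shuffle Blocks;

- Remove SPP and the C3 block after it, since SPP has too many parallel operations (G3);

- Set the input and output channels of every head layer to 96 (G1);

- Replace every C3 in the head with DWConv;

- Change the two Concats in PAN to ADD. Concatenation makes the channel count, and therefore the computation, too large. ADD violates G4, but the total computation is lower.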

III. Experimental Results

GFLOPs = raw value / 10^9

Params (M) = raw value × 4 / 1024 / 1024

| Model | YOLOv5s | YOLOv5s-ShuffleNetV2 |
|---|---|---|
| Input shape | 320x320 | 320x320 |
| Params | 6.75M | 0.69M |
| FLOPs | 2.05G | 0.32G |
| Weight file size | 13.6MB | 1.6MB |
| mAP@0.5 | 0.967 | 0.955 |
| mAP@0.5:0.95 | 0.885 | 0.84 |

Parameters shrink to roughly 1/10 of YOLOv5s, FLOPs to roughly 1/6, and weight file size to roughly 1/8.5. mAP@0.5 drops by about 1.2 points (96.7% -> 95.5%), and mAP@0.5:0.95 by about 4.5 points (88.5% -> 84%).
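The ratios above can be recomputed from the table. The sketch only copies the table's numbers; nothing new is measured. For each cost metric it reports how many times smaller the new model is, and for each accuracy metric how many mAP points are lost. `compare` is a hypothetical helper.

```python
# Numbers copied from the table above (320x320 input).
YOLOV5S = {"params_M": 6.75, "flops_G": 2.05, "weights_MB": 13.6, "map50": 0.967, "map50_95": 0.885}
SHUFFLE = {"params_M": 0.69, "flops_G": 0.32, "weights_MB": 1.6, "map50": 0.955, "map50_95": 0.84}


def compare(base, small):
    """Hypothetical helper: compression ratios for cost metrics, mAP drops in points."""
    ratios = {k: round(base[k] / small[k], 1) for k in ("params_M", "flops_G", "weights_MB")}
    drops = {k: round((base[k] - small[k]) * 100, 1) for k in ("map50", "map50_95")}
    return ratios, drops


ratios, drops = compare(YOLOV5S, SHUFFLE)
print(ratios)
print(drops)
```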

Detection results (left: YOLOv5s, right: YOLOv5s-ShuffleNetV2):

Reference

- ppogg/YOLOv5-Lite

- Understanding Model Computation (FLOPs) and Parameter Count (Params) in Deep Learning, with a Summary of Four Calculation Methods