k8s Tutorial 03 (Kubernetes Controllers)

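Pod categories

Autonomous Pods: if a Pod of this type exits, it is not recreated.

Controller-managed Pods: throughout the controller's lifecycle, the desired number of Pod replicas is always maintained.

Declarative (Deployment): apply (preferred), create

Imperative (RS): create (preferred), apply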

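What is a controller

Kubernetes has many built-in controllers. Each works like a state machine: it continuously compares the actual state with the desired state and acts to control the concrete state and behavior of Pods.

Controller types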

●ReplicationController and ReplicaSet

●Deployment

●DaemonSet

●StatefulSet

●Job/CronJob

●Horizontal Pod Autoscaling

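ReplicationController and ReplicaSet (general applications)

A ReplicationController (RC) ensures that the number of replicas of a containerized application always matches the user-defined count: if a container exits abnormally, a new Pod is created automatically to replace it, and any surplus containers are reclaimed automatically.

Newer versions of Kubernetes recommend ReplicaSet instead of ReplicationController. A ReplicaSet is not fundamentally different from a ReplicationController apart from the name, except that ReplicaSet supports set-based selectors.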
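A set-based selector is written with matchExpressions, which a ReplicationController cannot express (its selector is a plain key/value map). The manifest below is a minimal sketch assuming the apps/v1 API; the name frontend-set-based and the environment label are hypothetical. The supported operators are In, NotIn, Exists and DoesNotExist.

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: frontend-set-based        # hypothetical name
spec:
  replicas: 3
  selector:
    matchLabels:                  # equality-based part: tier == frontend
      tier: frontend
    matchExpressions:             # set-based part: environment in (dev, test)
    - key: environment            # hypothetical label key
      operator: In
      values: ["dev", "test"]
  template:
    metadata:
      labels:
        tier: frontend
        environment: dev          # satisfies both parts of the selector
    spec:
      containers:
      - name: myapp
        image: hub.atguigu.com/library/myapp:v1
```

All conditions in matchLabels and matchExpressions are ANDed, and the template labels must satisfy the selector, otherwise the API server rejects the object.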

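Deployment (general applications)

Deployment provides a declarative way to define Pods and ReplicaSets, replacing the older ReplicationController for easier application management. Typical use cases include:

●Defining a Deployment to create Pods and a ReplicaSet

●Rolling updates and rollbacks

●Scaling up and down

●Pausing and resuming a Deployment

Imperative programming: focuses on how to achieve the result. As when we first learned to program, we write down the implementation step by step in logical order.

Declarative programming: focuses on defining what you want, then hands that to the computer/engine and lets it work out how to achieve it.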

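DaemonSet (daemon processes, one Pod per node)

A DaemonSet ensures that all (or some) Nodes run a copy of a Pod. When a Node joins the cluster, a Pod is added on it; when a Node is removed from the cluster, that Pod is garbage-collected. Deleting a DaemonSet deletes all the Pods it created. Some typical uses of a DaemonSet:

●Running a cluster storage daemon on every Node, e.g. glusterd or ceph

●Running a log-collection daemon on every Node, e.g. fluentd or logstash

●Running a monitoring daemon on every Node, e.g. Prometheus Node Exporter, collectd, the Datadog agent, the New Relic agent, or Ganglia gmond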

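Job (batch tasks)

A Job handles batch tasks, that is, tasks that run only once. It ensures that one or more Pods of the batch task terminate successfully.

CronJob: creates Jobs repeatedly at specific times

A CronJob manages time-based Jobs, that is:

●Run once at a given point in time

●Run periodically at a given point in time

Prerequisite: the Kubernetes cluster must be version 1.8 or later (for CronJob). On older clusters (< 1.8), enable the batch/v2alpha1 API by passing --runtime-config=batch/v2alpha1=true when starting the API server.

Typical uses:

●Scheduling a Job to run at a given point in time

●Creating Jobs that run periodically, e.g. database backups or sending email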

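StatefulSet (stateful services)

As a controller, StatefulSet gives each Pod a unique identity and guarantees the order of deployment and scaling.

StatefulSet addresses the problem of stateful services (whereas Deployments and ReplicaSets are designed for stateless services). Its use cases include:

●Stable persistent storage: a Pod can still access the same persistent data after being rescheduled, implemented with PVCs

●Stable network identity: a Pod's PodName and HostName stay the same after rescheduling, implemented with a Headless Service (a Service without a Cluster IP)

●Ordered deployment and scale-up: Pods are ordered, and deployment or scaling proceeds in the defined order (from 0 to N-1; before the next Pod is started, all preceding Pods must be Running and Ready). This is enforced by the StatefulSet controller under its default OrderedReady Pod management policy

●Ordered scale-down and deletion (from N-1 to 0)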
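The properties above map onto specific fields. A minimal sketch, assuming the apps/v1 API and a cluster that can provision PersistentVolumes (for example through a default StorageClass); the names nginx-headless, web and data and the mount path /data are hypothetical:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-headless            # hypothetical name
spec:
  clusterIP: None                 # headless: no Cluster IP, DNS resolves to the Pod IPs
  selector:
    app: web
  ports:
  - port: 80
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web                       # hypothetical name
spec:
  serviceName: nginx-headless     # governing headless Service that provides stable DNS names
  replicas: 3                     # Pods web-0, web-1, web-2 are created in that order
  podManagementPolicy: OrderedReady   # the default, written out for clarity
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: myapp
        image: hub.atguigu.com/library/myapp:v1
        ports:
        - containerPort: 80
        volumeMounts:
        - name: data
          mountPath: /data        # hypothetical mount path
  volumeClaimTemplates:           # one PVC per Pod, kept when the Pod is rescheduled
  - metadata:
      name: data
    spec:
      accessModes: ["ReadWriteOnce"]
      resources:
        requests:
          storage: 1Gi
```

With this manifest the Pods are named web-0, web-1 and web-2, each is reachable as web-0.nginx-headless.<namespace>.svc.cluster.local and so on (assuming the default cluster.local domain), and each gets its own claim named data-web-0, data-web-1, data-web-2.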

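Horizontal Pod Autoscaling (autoscaling)

An application's resource usage usually has peaks and troughs. To shave the peaks, fill the troughs and raise the overall resource utilization of the cluster, the number of Pods behind a Service should adjust itself automatically. That is what Horizontal Pod Autoscaling does: as the name says, it scales Pods horizontally and automatically.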

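Kubernetes Deployment controller

How RS, RC, and Deployment relate

The main job of an RC (ReplicationController) is to ensure that the number of replicas of a containerized application always matches the user-defined count: if a container exits abnormally, a new Pod is created automatically to replace it, and any surplus containers are reclaimed automatically.

Kubernetes officially recommends deploying with RS (ReplicaSet) instead of RC (ReplicationController). RS is not fundamentally different from RC apart from the name, except that RS supports set-based selectors.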

vi rs.yml

apiVersion: extensions/v1beta1   # removed in Kubernetes 1.16; use apps/v1 on newer clusters
kind: ReplicaSet
metadata:
  name: frontend
spec:
  replicas: 3
  selector:
    matchLabels:
      tier: frontend             # must match the Pod template labels below
  template:
    metadata:
      labels:
        tier: frontend
    spec:
      containers:
      - name: myapp
        image: hub.atguigu.com/library/myapp:v1
        env:
        - name: GET_HOSTS_FROM
          value: dns
        ports:
        - containerPort: 80

View the resource's field documentation

[root@k8s-master01 ~]# kubectl explain rs

KIND: ReplicaSet

VERSION: extensions/v1beta1

DESCRIPTION:

DEPRECATED - This group version of ReplicaSet is deprecated by

apps/v1beta2/ReplicaSet. See the release notes for more information.

ReplicaSet ensures that a specified number of pod replicas are running at

any given time.

FIELDS:

apiVersion <string>

APIVersion defines the versioned schema of this representation of an

object. Servers should convert recognized schemas to the latest internal

value, and may reject unrecognized values. More info:

https://git.k8s.io/community/contributors/devel/api-conventions.md#resources

kind <string>

Kind is a string value representing the REST resource this object

represents. Servers may infer this from the endpoint the client submits

requests to. Cannot be updated. In CamelCase. More info:

https://git.k8s.io/community/contributors/devel/api-conventions.md#types-kinds

metadata <Object>

If the Labels of a ReplicaSet are empty, they are defaulted to be the same

as the Pod(s) that the ReplicaSet manages. Standard object's metadata. More

info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata

spec <Object>

Spec defines the specification of the desired behavior of the ReplicaSet.

More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

status <Object>

Status is the most recently observed status of the ReplicaSet. This data

may be out of date by some window of time. Populated by the system.

Read-only. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

Create the ReplicaSet and its Pods

[root@k8s-master01 ~]# kubectl create -f rs.yml

replicaset.extensions/frontend created

[root@k8s-master01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

frontend-2t7r8 1/1 Running 0 20s

frontend-7sm2w 1/1 Running 0 20s

frontend-j6fsq 1/1 Running 0 20s

lifecycle-demo 1/1 Running 0 123m

liveness-httpget-pod 0/1 Running 2 149m

[root@k8s-master01 ~]# kubectl delete pod --all

pod "frontend-2t7r8" deleted

pod "frontend-7sm2w" deleted

pod "frontend-j6fsq" deleted

pod "lifecycle-demo" deleted

pod "liveness-httpget-pod" deleted

[root@k8s-master01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

frontend-gwqnq 1/1 Running 0 22s

frontend-v8rhn 1/1 Running 0 22s

frontend-vdfwm 1/1 Running 0 22s

[root@k8s-master01 ~]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

frontend-gwqnq 1/1 Running 0 2m58s tier=frontend

frontend-v8rhn 1/1 Running 0 2m58s tier=frontend

frontend-vdfwm 1/1 Running 0 2m58s tier=frontend

[root@k8s-master01 ~]# kubectl label pod frontend-gwqnq tier=frontend1

error: 'tier' already has a value (frontend), and --overwrite is false

[root@k8s-master01 ~]# kubectl label pod frontend-gwqnq tier=frontend1 --overwrite=True

pod/frontend-gwqnq labeled

[root@k8s-master01 ~]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

frontend-26zpn 1/1 Running 0 2s tier=frontend

frontend-gwqnq 1/1 Running 0 8m tier=frontend1

frontend-v8rhn 1/1 Running 0 8m tier=frontend

frontend-vdfwm 1/1 Running 0 8m tier=frontend

[root@k8s-master01 ~]# kubectl delete rs --all

replicaset.extensions "frontend" deleted

[root@k8s-master01 ~]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

frontend-gwqnq 1/1 Running 0 18m tier=frontend1

[root@k8s-master01 ~]# kubectl delete pod --all

pod "frontend-gwqnq" deleted

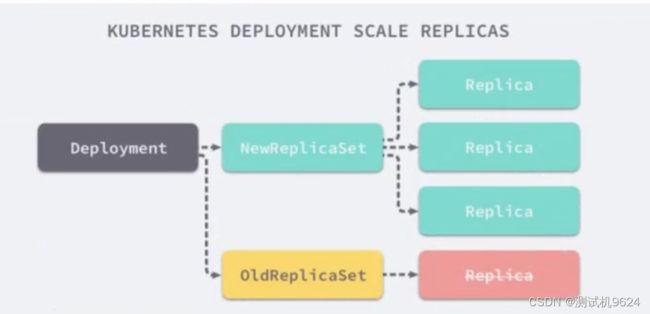

How RS relates to Deployment

Deployment

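Deployment provides a declarative way to define Pods and ReplicaSets, replacing the older ReplicationController for easier application management. Typical use cases include:

●Defining a Deployment to create Pods and a ReplicaSet

●Rolling updates and rollbacks

●Scaling up and down

●Pausing and resuming a Deployment

1. Deploy a simple Nginx application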

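vi deployment.yml

apiVersion: extensions/v1beta1   # removed in Kubernetes 1.16; use apps/v1 on newer clusters
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:                      # optional in extensions/v1beta1, required in apps/v1
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: hub.atguigu.com/library/myapp:v1
        ports:
        - containerPort: 80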

kubectl create -f https://kubernetes.io/docs/user-guide/nginx-deployment.yaml --record

## The --record flag records the command as the CHANGE-CAUSE of each revision, so it is easy to see what changed in every revision
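--record works by storing the command line in the kubernetes.io/change-cause annotation, which kubectl rollout history prints in the CHANGE-CAUSE column. As an alternative to the flag (deprecated in recent kubectl releases), the annotation can be written into deployment.yml directly. A sketch of the metadata section; the message text is only an example:

```yaml
# Fragment: the metadata section of deployment.yml
metadata:
  name: nginx-deployment
  annotations:
    # Copied to the new revision and shown as CHANGE-CAUSE in
    # "kubectl rollout history"; update the text for each rollout.
    kubernetes.io/change-cause: "deploy myapp:v1"
```

On a live object the same annotation can be set with kubectl annotate deployment/nginx-deployment kubernetes.io/change-cause="deploy myapp:v1".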

Create

[root@k8s-master01 ~]# kubectl apply -f deployment.yml --record

deployment.extensions/nginx-deployment created

[root@k8s-master01 ~]# kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-deployment 3/3 3 3 53s

[root@k8s-master01 ~]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-5df65767f 3 3 3 97s

[root@k8s-master01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-5df65767f-4wxdv 1/1 Running 0 2m

nginx-deployment-5df65767f-5hh5v 1/1 Running 0 2m

nginx-deployment-5df65767f-g2w66 1/1 Running 0 2m

2. Scale up

[root@k8s-master01 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-5df65767f-4wxdv 1/1 Running 1 15h 10.244.1.28 k8s-node01 <none> <none>

nginx-deployment-5df65767f-5hh5v 1/1 Running 1 15h 10.244.1.29 k8s-node01 <none> <none>

nginx-deployment-5df65767f-g2w66 1/1 Running 1 15h 10.244.2.8 k8s-node02 <none> <none>

[root@k8s-master01 ~]# curl 10.244.1.28

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

[root@k8s-master01 ~]# curl 10.244.1.29

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

[root@k8s-master01 ~]# curl 10.244.2.8

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

# Scale up to 10 replicas

[root@k8s-master01 ~]# kubectl scale deployment nginx-deployment --replicas 10

deployment.extensions/nginx-deployment scaled

[root@k8s-master01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-5df65767f-49p7g 1/1 Running 0 87s

nginx-deployment-5df65767f-4wxdv 1/1 Running 1 15h

nginx-deployment-5df65767f-57qt4 1/1 Running 0 87s

nginx-deployment-5df65767f-5hh5v 1/1 Running 1 15h

nginx-deployment-5df65767f-8fjv8 1/1 Running 0 87s

nginx-deployment-5df65767f-8hp5z 1/1 Running 0 87s

nginx-deployment-5df65767f-8mcd8 1/1 Running 0 87s

nginx-deployment-5df65767f-g2w66 1/1 Running 1 15h

nginx-deployment-5df65767f-w5w54 1/1 Running 0 87s

nginx-deployment-5df65767f-wl2q8 1/1 Running 0 87s

[root@k8s-master01 ~]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-5df65767f 10 10 10 15h

3. If the cluster supports Horizontal Pod Autoscaling, you can also enable autoscaling for the Deployment

kubectl autoscale deployment nginx-deployment --min=10 --max=15 --cpu-percent=80
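The kubectl autoscale command above creates a HorizontalPodAutoscaler object. A roughly equivalent manifest, sketched with the autoscaling/v1 API; the names and numbers come from the command, the rest is assumed:

```yaml
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-deployment          # kubectl autoscale names the HPA after its target by default
spec:
  scaleTargetRef:                 # the workload whose replica count is adjusted
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-deployment
  minReplicas: 10                 # --min
  maxReplicas: 15                 # --max
  targetCPUUtilizationPercentage: 80   # --cpu-percent, relative to the Pods' CPU requests
```

CPU utilization is measured against the containers' CPU requests, so the Pod template needs resources.requests.cpu (the nginx-deployment template in this tutorial sets none), and the cluster needs a metrics source such as metrics-server.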

4. Updating the image is also simple

[root@k8s-master01 ~]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-5df65767f 10 10 10 15h

# Update the image

[root@k8s-master01 ~]# kubectl set image deployment/nginx-deployment nginx=wangyanglinux/myapp:v2

deployment.extensions/nginx-deployment image updated

[root@k8s-master01 ~]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-5c478875d8 10 10 10 56s

nginx-deployment-5df65767f 0 0 0 15h

[root@k8s-master01 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-5c478875d8-6tkzm 1/1 Running 0 113s 10.244.1.38 k8s-node01 <none> <none>

nginx-deployment-5c478875d8-dhr7f 1/1 Running 0 113s 10.244.2.16 k8s-node02 <none> <none>

nginx-deployment-5c478875d8-gtb9p 1/1 Running 0 111s 10.244.2.17 k8s-node02 <none> <none>

nginx-deployment-5c478875d8-h8bxl 1/1 Running 0 111s 10.244.1.40 k8s-node01 <none> <none>

nginx-deployment-5c478875d8-kfxsw 1/1 Running 0 110s 10.244.1.41 k8s-node01 <none> <none>

nginx-deployment-5c478875d8-kpcwh 1/1 Running 0 2m1s 10.244.2.15 k8s-node02 <none> <none>

nginx-deployment-5c478875d8-lhm9g 1/1 Running 0 112s 10.244.1.39 k8s-node01 <none> <none>

nginx-deployment-5c478875d8-n67m5 1/1 Running 0 2m1s 10.244.1.37 k8s-node01 <none> <none>

nginx-deployment-5c478875d8-pq5r7 1/1 Running 0 108s 10.244.2.18 k8s-node02 <none> <none>

nginx-deployment-5c478875d8-qf2hm 1/1 Running 0 107s 10.244.1.42 k8s-node01 <none> <none>

# The version is now v2

[root@k8s-master01 ~]# curl 10.244.1.38

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

5. Roll back

[root@k8s-master01 ~]# kubectl rollout undo deployment/nginx-deployment

deployment.extensions/nginx-deployment rolled back

[root@k8s-master01 ~]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-5c478875d8 5 5 5 52s

nginx-deployment-5df65767f 6 6 4 15h

[root@k8s-master01 ~]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-5c478875d8 1 1 1 57s

nginx-deployment-5df65767f 10 10 8 15h

[root@k8s-master01 ~]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-5c478875d8 0 0 0 58s

nginx-deployment-5df65767f 10 10 9 15h

[root@k8s-master01 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-5df65767f-9bshv 1/1 Running 0 31s 10.244.2.26 k8s-node02 <none> <none>

nginx-deployment-5df65767f-bvxfv 1/1 Running 0 31s 10.244.1.56 k8s-node01 <none> <none>

nginx-deployment-5df65767f-j56q2 1/1 Running 0 28s 10.244.1.58 k8s-node01 <none> <none>

nginx-deployment-5df65767f-nmxpm 1/1 Running 0 25s 10.244.2.28 k8s-node02 <none> <none>

nginx-deployment-5df65767f-qk282 1/1 Running 0 24s 10.244.1.61 k8s-node01 <none> <none>

nginx-deployment-5df65767f-rhcdf 1/1 Running 0 29s 10.244.2.27 k8s-node02 <none> <none>

nginx-deployment-5df65767f-rq268 1/1 Running 0 29s 10.244.1.57 k8s-node01 <none> <none>

nginx-deployment-5df65767f-tmzjr 1/1 Running 0 25s 10.244.1.60 k8s-node01 <none> <none>

nginx-deployment-5df65767f-w5rqj 1/1 Running 0 28s 10.244.1.59 k8s-node01 <none> <none>

nginx-deployment-5df65767f-wgbhq 1/1 Running 0 22s 10.244.2.29 k8s-node02 <none> <none>

# Rolled back to v1

[root@k8s-master01 ~]# curl 10.244.2.26

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

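Deployment update strategy

During an update, a Deployment ensures that only a limited number of Pods are down. By default it keeps at least the desired number of Pods minus one up (at most 1 unavailable).

A Deployment also ensures that only a limited number of Pods are created above the desired count. By default at most one Pod more than the desired number is up (at most 1 surge).

These 1/1 defaults belong to the older extensions/v1beta1 API used in this tutorial; in apps/v1 the defaults are 25% maxUnavailable and 25% maxSurge.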
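Both limits are set under .spec.strategy. A minimal sketch, assuming the apps/v1 API and reusing the names from this tutorial, that writes out the 1/1 values described above:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 10
  strategy:
    type: RollingUpdate           # the default; the other type is Recreate
    rollingUpdate:
      maxSurge: 1                 # at most 1 Pod above the desired 10 during the update
      maxUnavailable: 1           # at most 1 Pod below the desired 10 during the update
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: hub.atguigu.com/library/myapp:v1
        ports:
        - containerPort: 80
```

Either field also accepts a percentage of the replica count, e.g. "25%". maxSurge rounds up and maxUnavailable rounds down, so with 10 replicas 25% allows 3 extra Pods and 2 unavailable Pods. The two values cannot both be 0.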

$ kubectl describe deployments

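Rollover (multiple rollouts in flight)

Suppose you create a Deployment with 5 replicas of nginx:1.7.9, and start updating it to 5 replicas of nginx:1.9.1 when only 3 of the nginx:1.7.9 replicas have been created. In this case the Deployment immediately kills the 3 nginx:1.7.9 Pods that were created and starts creating nginx:1.9.1 Pods. It does not wait for all 5 nginx:1.7.9 Pods to be created before changing course.

Rolling back a Deployment

A revision is created whenever a Deployment rollout is triggered. In other words, a new revision is created if and only if the Deployment's Pod template (.spec.template) changes, for example when a label or container image in the template is updated. Other updates, such as scaling the Deployment, do not create a revision, so manual or automatic scaling stays simple. This also means that rolling back to an earlier revision only rolls back the Pod template part of the Deployment.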

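kubectl set image deployment/nginx-deployment nginx=nginx:1.9.1 ## set the image to a specific version

kubectl rollout status deployments nginx-deployment ## check the rollout status

kubectl get pods

kubectl rollout history deployment/nginx-deployment ## view the revision history

kubectl rollout undo deployment/nginx-deployment ## roll back to the previous revision

kubectl rollout undo deployment/nginx-deployment --to-revision=2 ## use --to-revision to roll back to a specific revision

kubectl rollout pause deployment/nginx-deployment ## pause updates to the Deployment

kubectl rollout resume deployment/nginx-deployment ## resume a paused Deployment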
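kubectl rollout pause works by setting .spec.paused on the Deployment. A fragment showing the field, as a sketch to merge into the spec of deployment.yml:

```yaml
# Fragment: part of the spec section of deployment.yml
spec:
  paused: true   # template changes are recorded, but no new rollout starts until this is false
```

While paused, several template changes (for example image and resources) can be made and are then rolled out together as one revision after kubectl rollout resume; scaling still takes effect while the Deployment is paused.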

Use kubectl rollout status to check whether a Deployment rollout has completed. If the rollout completed successfully, kubectl rollout status returns exit code 0.

[root@k8s-master01 ~]# kubectl rollout status deployments nginx-deployment

deployment "nginx-deployment" successfully rolled out

[root@k8s-master01 ~]# echo $?

0

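Clean-up policy

Set .spec.revisionHistoryLimit to control how many old revisions (old ReplicaSets) a Deployment keeps. In the extensions/v1beta1 API used here all revisions are kept by default; apps/v1 defaults to 10. If it is set to 0, the Deployment can no longer be rolled back.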
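A fragment setting the limit; the value 3 is an arbitrary example:

```yaml
# Fragment: part of the spec section of deployment.yml
spec:
  revisionHistoryLimit: 3   # keep the 3 most recent old ReplicaSets for rollback
```

Old ReplicaSets beyond the limit are garbage-collected, so kubectl rollout history then lists at most three old revisions plus the current one.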

[root@k8s-master01 ~]# kubectl rollout history deployment/nginx-deployment

deployment.extensions/nginx-deployment

REVISION CHANGE-CAUSE

5 kubectl apply --filename=deployment.yml --record=true

6 kubectl apply --filename=deployment.yml --record=true

[root@k8s-master01 ~]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-5c478875d8 0 0 0 23m

nginx-deployment-5df65767f 10 10 10 16h

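Kubernetes DaemonSet controller

What is a DaemonSet

A DaemonSet ensures that all (or some) Nodes run a copy of a Pod. When a Node joins the cluster, a Pod is added on it; when a Node is removed from the cluster, that Pod is garbage-collected. Deleting a DaemonSet deletes all the Pods it created.

Some typical uses of a DaemonSet:

●Running a cluster storage daemon on every Node, e.g. glusterd or ceph

●Running a log-collection daemon on every Node, e.g. fluentd or logstash

●Running a monitoring daemon on every Node, e.g. Prometheus Node Exporter, collectd, the Datadog agent, the New Relic agent, or Ganglia gmond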

vi daemonset.yml

apiVersion: apps/v1
kind: DaemonSet                  # resource type
metadata:
  name: deamonset-example
  labels:
    app: daemonset
spec:
  selector:
    matchLabels:
      name: deamonset-example    # must match the Pod template label below
  template:
    metadata:
      labels:
        name: deamonset-example  # must match spec.selector.matchLabels
    spec:
      containers:
      - name: daemonset-example
        image: wangyanglinux/myapp:v1

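Create the DaemonSet. One Pod is started on each schedulable node; here only the two worker nodes get one, presumably because the master node carries the default NoSchedule taint.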

[root@k8s-master01 ~]# kubectl create -f daemonset.yml

daemonset.apps/deamonset-example created

[root@k8s-master01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

deamonset-example-5t9dg 1/1 Running 0 25s

deamonset-example-jkdh8 1/1 Running 0 25s

nginx-deployment-5df65767f-9bshv 1/1 Running 0 5h4m

nginx-deployment-5df65767f-bvxfv 1/1 Running 0 5h4m

nginx-deployment-5df65767f-j56q2 1/1 Running 0 5h4m

nginx-deployment-5df65767f-nmxpm 1/1 Running 0 5h4m

nginx-deployment-5df65767f-qk282 1/1 Running 0 5h4m

nginx-deployment-5df65767f-rhcdf 1/1 Running 0 5h4m

nginx-deployment-5df65767f-rq268 1/1 Running 0 5h4m

nginx-deployment-5df65767f-tmzjr 1/1 Running 0 5h4m

nginx-deployment-5df65767f-w5rqj 1/1 Running 0 5h4m

nginx-deployment-5df65767f-wgbhq 1/1 Running 0 5h4m

[root@k8s-master01 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deamonset-example-5t9dg 1/1 Running 0 40s 10.244.2.30 k8s-node02 <none> <none>

deamonset-example-jkdh8 1/1 Running 0 40s 10.244.1.62 k8s-node01 <none> <none>

nginx-deployment-5df65767f-9bshv 1/1 Running 0 5h4m 10.244.2.26 k8s-node02 <none> <none>

nginx-deployment-5df65767f-bvxfv 1/1 Running 0 5h4m 10.244.1.56 k8s-node01 <none> <none>

nginx-deployment-5df65767f-j56q2 1/1 Running 0 5h4m 10.244.1.58 k8s-node01 <none> <none>

nginx-deployment-5df65767f-nmxpm 1/1 Running 0 5h4m 10.244.2.28 k8s-node02 <none> <none>

nginx-deployment-5df65767f-qk282 1/1 Running 0 5h4m 10.244.1.61 k8s-node01 <none> <none>

nginx-deployment-5df65767f-rhcdf 1/1 Running 0 5h4m 10.244.2.27 k8s-node02 <none> <none>

nginx-deployment-5df65767f-rq268 1/1 Running 0 5h4m 10.244.1.57 k8s-node01 <none> <none>

nginx-deployment-5df65767f-tmzjr 1/1 Running 0 5h4m 10.244.1.60 k8s-node01 <none> <none>

nginx-deployment-5df65767f-w5rqj 1/1 Running 0 5h4m 10.244.1.59 k8s-node01 <none> <none>

nginx-deployment-5df65767f-wgbhq 1/1 Running 0 5h4m 10.244.2.29 k8s-node02 <none> <none>

# Delete one Pod: the DaemonSet immediately creates a replacement on the same node

[root@k8s-master01 ~]# kubectl delete pod deamonset-example-5t9dg

pod "deamonset-example-5t9dg" deleted

[root@k8s-master01 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deamonset-example-jkdh8 1/1 Running 0 3m43s 10.244.1.62 k8s-node01 <none> <none>

deamonset-example-xjlmv 1/1 Running 0 9s 10.244.2.31 k8s-node02 <none> <none>

nginx-deployment-5df65767f-9bshv 1/1 Running 0 5h7m 10.244.2.26 k8s-node02 <none> <none>

nginx-deployment-5df65767f-bvxfv 1/1 Running 0 5h7m 10.244.1.56 k8s-node01 <none> <none>

nginx-deployment-5df65767f-j56q2 1/1 Running 0 5h7m 10.244.1.58 k8s-node01 <none> <none>

nginx-deployment-5df65767f-nmxpm 1/1 Running 0 5h7m 10.244.2.28 k8s-node02 <none> <none>

nginx-deployment-5df65767f-qk282 1/1 Running 0 5h7m 10.244.1.61 k8s-node01 <none> <none>

nginx-deployment-5df65767f-rhcdf 1/1 Running 0 5h7m 10.244.2.27 k8s-node02 <none> <none>

nginx-deployment-5df65767f-rq268 1/1 Running 0 5h7m 10.244.1.57 k8s-node01 <none> <none>

nginx-deployment-5df65767f-tmzjr 1/1 Running 0 5h7m 10.244.1.60 k8s-node01 <none> <none>

nginx-deployment-5df65767f-w5rqj 1/1 Running 0 5h7m 10.244.1.59 k8s-node01 <none> <none>

nginx-deployment-5df65767f-wgbhq 1/1 Running 0 5h7m 10.244.2.29 k8s-node02 <none> <none>
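The DaemonSet above runs on every schedulable node. To run it on only some nodes (the "or some" case), a nodeSelector can be added to the Pod template. A sketch; the name daemonset-ssd and the node label disktype=ssd are hypothetical:

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: daemonset-ssd             # hypothetical name
spec:
  selector:
    matchLabels:
      name: daemonset-ssd
  template:
    metadata:
      labels:
        name: daemonset-ssd       # must match spec.selector.matchLabels
    spec:
      nodeSelector:
        disktype: ssd             # hypothetical node label: only labeled nodes get a Pod
      containers:
      - name: daemonset-example
        image: wangyanglinux/myapp:v1
```

After kubectl label node k8s-node01 disktype=ssd, only k8s-node01 runs a Pod; removing the label again (kubectl label node k8s-node01 disktype-) makes the DaemonSet delete that Pod.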

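Kubernetes Job controller

Job

A Job handles batch tasks, that is, tasks that run only once. It ensures that one or more Pods of the batch task terminate successfully.

Notes

●.spec.template has the same format as a Pod

●restartPolicy supports only Never or OnFailure

●With a single Pod, by default the Job finishes as soon as that Pod completes successfully

●.spec.completions: the number of Pods that must complete successfully for the Job to finish; defaults to 1

●.spec.parallelism: the number of Pods run in parallel; defaults to 1

●.spec.activeDeadlineSeconds: the maximum time the Job may stay active, retries included; once it is exceeded, running Pods are terminated and no further retries are made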
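The fields above combine as in the following sketch, which assumes the same perl:5.36.0 image as the example below; the name pi-parallel and all numbers are hypothetical:

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: pi-parallel               # hypothetical name
spec:
  completions: 6                  # the Job is complete once 6 Pods have succeeded
  parallelism: 2                  # at most 2 Pods run at the same time
  backoffLimit: 4                 # give up after 4 retries (the default is 6)
  activeDeadlineSeconds: 300      # fail the Job if it is still active after 300 s, retries included
  template:
    spec:
      containers:
      - name: pi
        image: perl:5.36.0
        command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(100)"]
      restartPolicy: OnFailure    # only Never or OnFailure are allowed in a Job
```

kubectl get job would then show COMPLETIONS counting up to 6/6, with at most two Pods running at once.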

Example

vi job.yml

apiVersion: batch/v1
kind: Job
metadata:
  name: pi
spec:
  template:
    metadata:
      name: pi
    spec:
      containers:
      - name: pi
        image: perl:5.36.0
        command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(2000)"]
      restartPolicy: Never

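The Pod's log shows π printed to 2000 digits.

The perl image was loaded into Docker on all three hosts beforehand. Note: do not use latest; give the image an explicit version tag, e.g. docker tag f9596eddf06f perl:5.36.0. For an untagged or :latest image the default imagePullPolicy is Always, so the kubelet tries to pull from the registry even though the image exists locally; with a specific tag the default is IfNotPresent and the local image is used.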
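To avoid depending on the tag-based default, the pull policy can also be set explicitly in job.yml. A sketch of the container section; IfNotPresent is a standard value, and Never would forbid pulling altogether:

```yaml
# Fragment: the containers section of job.yml
containers:
- name: pi
  image: perl:5.36.0
  imagePullPolicy: IfNotPresent   # use the local image when it exists, pull only if it is missing
  command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(2000)"]
```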

[root@k8s-master01 ~]# kubectl create -f job.yml

job.batch/pi created

[root@k8s-master01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

deamonset-example-jkdh8 1/1 Running 0 12m

deamonset-example-xjlmv 1/1 Running 0 9m2s

nginx-deployment-5df65767f-9bshv 1/1 Running 0 5h16m

nginx-deployment-5df65767f-bvxfv 1/1 Running 0 5h16m

nginx-deployment-5df65767f-j56q2 1/1 Running 0 5h16m

nginx-deployment-5df65767f-nmxpm 1/1 Running 0 5h16m

nginx-deployment-5df65767f-qk282 1/1 Running 0 5h16m

nginx-deployment-5df65767f-rhcdf 1/1 Running 0 5h16m

nginx-deployment-5df65767f-rq268 1/1 Running 0 5h16m

nginx-deployment-5df65767f-tmzjr 1/1 Running 0 5h16m

nginx-deployment-5df65767f-w5rqj 1/1 Running 0 5h16m

nginx-deployment-5df65767f-wgbhq 1/1 Running 0 5h16m

pi-59srm 0/1 ContainerCreating 0 7s

[root@k8s-master01 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deamonset-example-jkdh8 1/1 Running 0 12m 10.244.1.62 k8s-node01 <none> <none>

deamonset-example-xjlmv 1/1 Running 0 9m19s 10.244.2.31 k8s-node02 <none> <none>

nginx-deployment-5df65767f-9bshv 1/1 Running 0 5h16m 10.244.2.26 k8s-node02 <none> <none>

nginx-deployment-5df65767f-bvxfv 1/1 Running 0 5h16m 10.244.1.56 k8s-node01 <none> <none>

nginx-deployment-5df65767f-j56q2 1/1 Running 0 5h16m 10.244.1.58 k8s-node01 <none> <none>

nginx-deployment-5df65767f-nmxpm 1/1 Running 0 5h16m 10.244.2.28 k8s-node02 <none> <none>

nginx-deployment-5df65767f-qk282 1/1 Running 0 5h16m 10.244.1.61 k8s-node01 <none> <none>

nginx-deployment-5df65767f-rhcdf 1/1 Running 0 5h16m 10.244.2.27 k8s-node02 <none> <none>

nginx-deployment-5df65767f-rq268 1/1 Running 0 5h16m 10.244.1.57 k8s-node01 <none> <none>

nginx-deployment-5df65767f-tmzjr 1/1 Running 0 5h16m 10.244.1.60 k8s-node01 <none> <none>

nginx-deployment-5df65767f-w5rqj 1/1 Running 0 5h16m 10.244.1.59 k8s-node01 <none> <none>

nginx-deployment-5df65767f-wgbhq 1/1 Running 0 5h16m 10.244.2.29 k8s-node02 <none> <none>

pi-59srm 0/1 ContainerCreating 0 24s <none> k8s-node01 <none> <none>

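# The first attempt used image: perl (no tag), so the kubelet tried to pull it from Docker Hub and the pull timed out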

[root@k8s-master01 ~]# kubectl describe pod pi-59srm

Name: pi-59srm

Namespace: default

Priority: 0

Node: k8s-node01/192.168.192.130

Start Time: Tue, 31 May 2022 15:21:18 +0800

Labels: controller-uid=631ae502-ca79-4c20-a4f3-f6ff836caf90

job-name=pi

Annotations: <none>

Status: Pending

IP: 10.244.1.63

Controlled By: Job/pi

Containers:

pi:

Container ID:

Image: perl

Image ID:

Port: <none>

Host Port: <none>

Command:

perl

-Mbignum=bpi

-wle

print bpi(2000)

State: Waiting

Reason: ImagePullBackOff

Ready: False

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-2k8kw (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

default-token-2k8kw:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-2k8kw

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 119s default-scheduler Successfully assigned default/pi-59srm to k8s-node01

Warning Failed 75s kubelet, k8s-node01 Failed to pull image "perl": rpc error: code = Unknown desc = error pulling image configuration: Get https://production.cloudflare.docker.com/registry-v2/docker/registry/v2/blobs/sha256/17/1725f0319c998102f9e28ea08cf878479ea097631cbd71d4ae68084cb28ded58/data?verify=1653984692-TOutVPGZjtgcYIlOBQ7odZ3Nai4%3D: dial tcp 104.18.121.25:443: i/o timeout

Normal BackOff 75s kubelet, k8s-node01 Back-off pulling image "perl"

Warning Failed 75s kubelet, k8s-node01 Error: ImagePullBackOff

Normal Pulling 61s (x2 over 118s) kubelet, k8s-node01 Pulling image "perl"

Warning Failed 5s (x2 over 75s) kubelet, k8s-node01 Error: ErrImagePull

Warning Failed 5s kubelet, k8s-node01 Failed to pull image "perl": rpc error: code = Unknown desc = error pulling image configuration: Get https://production.cloudflare.docker.com/registry-v2/docker/registry/v2/blobs/sha256/17/1725f0319c998102f9e28ea08cf878479ea097631cbd71d4ae68084cb28ded58/data?verify=1653984761-LKewdu223Tqu6Uz7rwgnTd%2FpjrY%3D: dial tcp 104.18.121.25:443: i/o timeout

[root@k8s-master01 ~]# kubectl get job

NAME COMPLETIONS DURATION AGE

pi 0/1 97m 97m

[root@k8s-master01 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deamonset-example-jkdh8 1/1 Running 0 110m 10.244.1.62 k8s-node01 <none> <none>

deamonset-example-xjlmv 1/1 Running 1 107m 10.244.2.36 k8s-node02 <none> <none>

nginx-deployment-5df65767f-9bshv 1/1 Running 1 6h54m 10.244.2.33 k8s-node02 <none> <none>

nginx-deployment-5df65767f-bvxfv 1/1 Running 0 6h54m 10.244.1.56 k8s-node01 <none> <none>

nginx-deployment-5df65767f-j56q2 1/1 Running 0 6h54m 10.244.1.58 k8s-node01 <none> <none>

nginx-deployment-5df65767f-nmxpm 1/1 Running 1 6h54m 10.244.2.35 k8s-node02 <none> <none>

nginx-deployment-5df65767f-qk282 1/1 Running 0 6h54m 10.244.1.61 k8s-node01 <none> <none>

nginx-deployment-5df65767f-rhcdf 1/1 Running 1 6h54m 10.244.2.32 k8s-node02 <none> <none>

nginx-deployment-5df65767f-rq268 1/1 Running 0 6h54m 10.244.1.57 k8s-node01 <none> <none>

nginx-deployment-5df65767f-tmzjr 1/1 Running 0 6h54m 10.244.1.60 k8s-node01 <none> <none>

nginx-deployment-5df65767f-w5rqj 1/1 Running 0 6h54m 10.244.1.59 k8s-node01 <none> <none>

nginx-deployment-5df65767f-wgbhq 1/1 Running 1 6h54m 10.244.2.34 k8s-node02 <none> <none>

pi-98qk8 0/1 ErrImagePull 0 6m4s 10.244.2.37 k8s-node02 <none> <none>

[root@k8s-master01 ~]# kubectl get job

NAME COMPLETIONS DURATION AGE

pi 0/1 103m 103m

[root@k8s-master01 ~]# kubectl delete job pi

job.batch "pi" deleted

[root@k8s-master01 ~]# kubectl get job

No resources found.

[root@k8s-master01 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deamonset-example-jkdh8 1/1 Running 0 116m 10.244.1.62 k8s-node01 <none> <none>

deamonset-example-xjlmv 1/1 Running 1 113m 10.244.2.36 k8s-node02 <none> <none>

nginx-deployment-5df65767f-9bshv 1/1 Running 1 7h 10.244.2.33 k8s-node02 <none> <none>

nginx-deployment-5df65767f-bvxfv 1/1 Running 0 7h 10.244.1.56 k8s-node01 <none> <none>

nginx-deployment-5df65767f-j56q2 1/1 Running 0 7h 10.244.1.58 k8s-node01 <none> <none>

nginx-deployment-5df65767f-nmxpm 1/1 Running 1 7h 10.244.2.35 k8s-node02 <none> <none>

nginx-deployment-5df65767f-qk282 1/1 Running 0 7h 10.244.1.61 k8s-node01 <none> <none>

nginx-deployment-5df65767f-rhcdf 1/1 Running 1 7h 10.244.2.32 k8s-node02 <none> <none>

nginx-deployment-5df65767f-rq268 1/1 Running 0 7h 10.244.1.57 k8s-node01 <none> <none>

nginx-deployment-5df65767f-tmzjr 1/1 Running 0 7h 10.244.1.60 k8s-node01 <none> <none>

nginx-deployment-5df65767f-w5rqj 1/1 Running 0 7h 10.244.1.59 k8s-node01 <none> <none>

nginx-deployment-5df65767f-wgbhq 1/1 Running 1 7h 10.244.2.34 k8s-node02 <none> <none>

# After tagging the local image with a version (and using image: perl:5.36.0 in job.yml), create the Job again

[root@k8s-master01 ~]# docker tag f9596eddf06f perl:5.36.0

[root@k8s-master01 ~]# kubectl create -f job.yml

job.batch/pi created

[root@k8s-master01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

deamonset-example-jkdh8 1/1 Running 0 131m

deamonset-example-xjlmv 1/1 Running 1 128m

nginx-deployment-5df65767f-9bshv 1/1 Running 1 7h15m

nginx-deployment-5df65767f-bvxfv 1/1 Running 0 7h15m

nginx-deployment-5df65767f-j56q2 1/1 Running 0 7h15m

nginx-deployment-5df65767f-nmxpm 1/1 Running 1 7h15m

nginx-deployment-5df65767f-qk282 1/1 Running 0 7h15m

nginx-deployment-5df65767f-rhcdf 1/1 Running 1 7h15m

nginx-deployment-5df65767f-rq268 1/1 Running 0 7h15m

nginx-deployment-5df65767f-tmzjr 1/1 Running 0 7h15m

nginx-deployment-5df65767f-w5rqj 1/1 Running 0 7h15m

nginx-deployment-5df65767f-wgbhq 1/1 Running 1 7h15m

pi-4hj2g 0/1 Completed 0 9s

[root@k8s-master01 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deamonset-example-jkdh8 1/1 Running 0 131m 10.244.1.62 k8s-node01 <none> <none>

deamonset-example-xjlmv 1/1 Running 1 128m 10.244.2.36 k8s-node02 <none> <none>

nginx-deployment-5df65767f-9bshv 1/1 Running 1 7h15m 10.244.2.33 k8s-node02 <none> <none>

nginx-deployment-5df65767f-bvxfv 1/1 Running 0 7h15m 10.244.1.56 k8s-node01 <none> <none>

nginx-deployment-5df65767f-j56q2 1/1 Running 0 7h15m 10.244.1.58 k8s-node01 <none> <none>

nginx-deployment-5df65767f-nmxpm 1/1 Running 1 7h15m 10.244.2.35 k8s-node02 <none> <none>

nginx-deployment-5df65767f-qk282 1/1 Running 0 7h15m 10.244.1.61 k8s-node01 <none> <none>

nginx-deployment-5df65767f-rhcdf 1/1 Running 1 7h15m 10.244.2.32 k8s-node02 <none> <none>

nginx-deployment-5df65767f-rq268 1/1 Running 0 7h15m 10.244.1.57 k8s-node01 <none> <none>

nginx-deployment-5df65767f-tmzjr 1/1 Running 0 7h15m 10.244.1.60 k8s-node01 <none> <none>

nginx-deployment-5df65767f-w5rqj 1/1 Running 0 7h15m 10.244.1.59 k8s-node01 <none> <none>

nginx-deployment-5df65767f-wgbhq 1/1 Running 1 7h15m 10.244.2.34 k8s-node02 <none> <none>

pi-4hj2g 0/1 Completed 0 20s 10.244.1.67 k8s-node01 <none> <none>

[root@k8s-master01 ~]# kubectl get job

NAME COMPLETIONS DURATION AGE

pi 1/1 8s 30s

# π to 2000 digits

[root@k8s-master01 ~]# kubectl logs pi-4hj2g

3.141592653589793238462643383279502884197169399375105820974944592307816406286208998628034825342117067982148086513282306647093844609550582231725359408128481117450284102701938521105559644622948954930381964428810975665933446128475648233786783165271201909145648566923460348610454326648213393607260249141273724587006606315588174881520920962829254091715364367892590360011330530548820466521384146951941511609433057270365759591953092186117381932611793105118548074462379962749567351885752724891227938183011949129833673362440656643086021394946395224737190702179860943702770539217176293176752384674818467669405132000568127145263560827785771342757789609173637178721468440901224953430146549585371050792279689258923542019956112129021960864034418159813629774771309960518707211349999998372978049951059731732816096318595024459455346908302642522308253344685035261931188171010003137838752886587533208381420617177669147303598253490428755468731159562863882353787593751957781857780532171226806613001927876611195909216420198938095257201065485863278865936153381827968230301952035301852968995773622599413891249721775283479131515574857242454150695950829533116861727855889075098381754637464939319255060400927701671139009848824012858361603563707660104710181942955596198946767837449448255379774726847104047534646208046684259069491293313677028989152104752162056966024058038150193511253382430035587640247496473263914199272604269922796782354781636009341721641219924586315030286182974555706749838505494588586926995690927210797509302955321165344987202755960236480665499119881834797753566369807426542527862551818417574672890977772793800081647060016145249192173217214772350141441973568548161361157352552133475741849468438523323907394143334547762416862518983569485562099219222184272550254256887671790494601653466804988627232791786085784383827967976681454100953883786360950680064225125205117392984896084128488626945604241965285022210661186306744278622039194945047123713786960956364371917287467764657573962413890865832645995813390478027590
1


CronJob

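A CronJob manages time-based Jobs, that is:

●Run once at a given point in time

●Run periodically at a given point in time

Prerequisite: the Kubernetes cluster must be version 1.8 or later (for CronJob).

Typical uses:

●Scheduling a Job to run at a given point in time

●Creating Jobs that run periodically, e.g. database backups or sending email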

CronJob Spec

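● .spec.schedule: the schedule; required. Specifies how often the task runs, in cron format.

● .spec.jobTemplate: the Job template; required. Specifies the task to run, in the same format as a Job.

● .spec.startingDeadlineSeconds: the deadline (in seconds) for starting a Job; optional. If a run misses its scheduled time for any reason and cannot start within this deadline, that run is counted as failed. If unset, there is no deadline.

● .spec.concurrencyPolicy: the concurrency policy; optional. Specifies how concurrent executions of Jobs created by this CronJob are handled. Exactly one of the following policies may be specified:

Allow (default): Jobs may run concurrently

Forbid: concurrent runs are forbidden; if the previous Job has not finished yet, the next run is skipped

Replace: the currently running Job is canceled and replaced by a new one

Note that the policy only applies to Jobs created by the same CronJob. Jobs created by different CronJobs are always allowed to run concurrently.

● .spec.suspend: suspend; optional. If set to true, all subsequent executions are suspended. It has no effect on Jobs that have already started. Defaults to false.

● .spec.successfulJobsHistoryLimit and .spec.failedJobsHistoryLimit: history limits; optional. They specify how many completed and failed Jobs are kept. By default they are 3 and 1 respectively. Setting a limit to 0 means Jobs of that kind are not kept after they finish.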
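The optional fields above can be combined as in this sketch, which assumes the batch/v1 API; the name nightly-hello and the chosen schedule and deadline are hypothetical:

```yaml
apiVersion: batch/v1              # use batch/v1beta1 on clusters older than 1.21
kind: CronJob
metadata:
  name: nightly-hello             # hypothetical name
spec:
  schedule: "0 2 * * *"           # minute hour day-of-month month day-of-week: daily at 02:00
  startingDeadlineSeconds: 600    # a run that cannot start within 600 s of its slot is treated as missed
  concurrencyPolicy: Forbid       # skip a run while the previous Job is still running
  suspend: false                  # set to true to suspend future runs
  successfulJobsHistoryLimit: 3   # the default
  failedJobsHistoryLimit: 1       # the default
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: hello
            image: busybox
            args: ["/bin/sh", "-c", "date; echo Hello from the Kubernetes cluster"]
          restartPolicy: OnFailure
```

Forbid suits tasks such as backups, where two overlapping runs could interfere with each other.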

Example

vi cronjob.yml

apiVersion: batch/v1beta1        # use batch/v1 on Kubernetes 1.21+ (batch/v1beta1 was removed in 1.25)
kind: CronJob
metadata:
  name: hello
spec:
  schedule: "*/1 * * * *"        # every minute
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: hello
            image: busybox
            args:
            - /bin/sh
            - -c
            - date; echo Hello from the Kubernetes cluster
          restartPolicy: OnFailure

$ kubectl get cronjob

NAME SCHEDULE SUSPEND ACTIVE LAST-SCHEDULE

hello */1 * * * * False 0 <none>

$ kubectl get jobs

NAME DESIRED SUCCESSFUL AGE

hello-1202039034 1 1 49s

$ pods=$(kubectl get pods --selector=job-name=hello-1202039034 --output=jsonpath={.items..metadata.name})

$ kubectl logs $pods

Mon Aug 29 21:34:09 UTC 2016

Hello from the Kubernetes cluster

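# Note: in early versions, deleting a CronJob did not delete the Jobs it had created, and they had to be removed with kubectl delete job. Newer versions delete them through cascading deletion, as the session below shows (kubectl get job returns no resources after the CronJob is deleted)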

$ kubectl delete cronjob hello

cronjob "hello" deleted

Create the CronJob

[root@k8s-master01 ~]# kubectl apply -f cronjob.yml

cronjob.batch/hello created

[root@k8s-master01 ~]# kubectl get cronjob

NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE

hello */1 * * * * False 0 <none> 22s

[root@k8s-master01 ~]# kubectl get job

NAME COMPLETIONS DURATION AGE

hello-1653990600 0/1 17s 17s

pi 1/1 8s 30m

[root@k8s-master01 ~]# kubectl delete job pi

job.batch "pi" deleted

[root@k8s-master01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

deamonset-example-jkdh8 1/1 Running 0 162m

deamonset-example-xjlmv 1/1 Running 1 159m

hello-1653990600-9l64q 0/1 Completed 0 89s

hello-1653990660-n8vp7 0/1 Completed 0 29s

nginx-deployment-5df65767f-9bshv 1/1 Running 1 7h46m

nginx-deployment-5df65767f-bvxfv 1/1 Running 0 7h46m

nginx-deployment-5df65767f-j56q2 1/1 Running 0 7h46m

nginx-deployment-5df65767f-nmxpm 1/1 Running 1 7h46m

nginx-deployment-5df65767f-qk282 1/1 Running 0 7h46m

nginx-deployment-5df65767f-rhcdf 1/1 Running 1 7h46m

nginx-deployment-5df65767f-rq268 1/1 Running 0 7h46m

nginx-deployment-5df65767f-tmzjr 1/1 Running 0 7h46m

nginx-deployment-5df65767f-w5rqj 1/1 Running 0 7h46m

nginx-deployment-5df65767f-wgbhq 1/1 Running 1 7h46m

[root@k8s-master01 ~]# kubectl delete deployment --all

deployment.extensions "nginx-deployment" deleted

[root@k8s-master01 ~]# kubectl delete daemonset --all

daemonset.extensions "deamonset-example" deleted

[root@k8s-master01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

hello-1653990660-n8vp7 0/1 Completed 0 3m16s

hello-1653990720-hhnhg 0/1 Completed 0 2m16s

hello-1653990780-ggkhw 0/1 Completed 0 76s

hello-1653990840-zcnxz 0/1 Completed 0 16s

[root@k8s-master01 ~]# kubectl get job

NAME COMPLETIONS DURATION AGE

hello-1653990720 1/1 9s 2m37s

hello-1653990780 1/1 65s 97s

hello-1653990840 1/1 12s 37s

[root@k8s-master01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

hello-1653990840-zcnxz 0/1 Completed 0 2m27s

hello-1653990900-m4ndn 0/1 Completed 0 86s

hello-1653990960-vkpzl 0/1 Completed 0 26s

[root@k8s-master01 ~]# kubectl logs hello-1653990960-vkpzl

Tue May 31 10:03:24 UTC 2022

Hello from the Kubernetes cluster

[root@k8s-master01 ~]# kubectl delete cronjob --all

cronjob.batch "hello" deleted

[root@k8s-master01 ~]# kubectl get job

No resources found.

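Limitations of CronJob itself

Job creation should be idempotent: a CronJob creates a Job object roughly once per scheduled time, but in certain circumstances two Jobs may be created, or none at all, so the work a Job performs should be safe to run more than once.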