PyTorch Deep Learning in Practice (11): Transfer Learning

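Contents

- 0 Preface

- 1 AlexNet in Detail

  - 1.1 A Quick Look at the Convolutional Layers

  - 1.2 Dropout

- 2 VGGNet

- 3 ResNet

- 4 Hands-On Transfer Learning with AlexNet

  - 4.1 Normalization

  - 4.2 Image Processing

  - 4.3 Loading a Pretrained Model

  - 4.4 Redefining AlexNet's classifier Module

- 5 Complete Code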

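0 Preface

This grad student has finally finished the basics of neural networks! Next up is Chapter 4 of "PyTorch Deep Learning in Practice": transfer learning, or learning by standing on the shoulders of giants!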

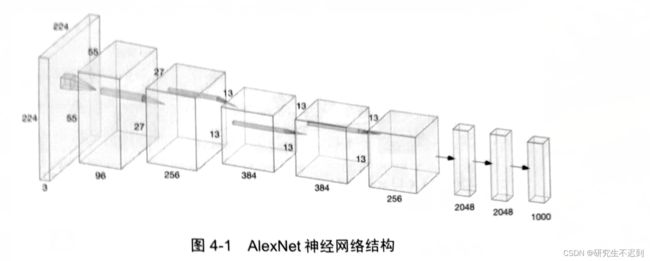

1 AlexNet in Detail

1.1 A Quick Look at the Convolutional Layers

- Layer 1: convolution and pooling


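>Convolution comes first.

>Input: 3×224×224 (3 is the number of channels; think of it as three stacked 224×224 images, one each for R, G, and B)

>Filters: 11×11, stride=4, 3×96 two-dimensional kernels in total (96 filters, each with 3 channel slices)

>Output: 96×55×55 (that is, 96 feature maps of size 55×55). Strictly speaking, a 224×224 input needs padding=2 to produce 55×55, which is what torchvision's AlexNet uses; the original paper is commonly read as using a 227×227 input instead.

>ReLU is then applied, which sets the negative values in the feature maps to zero and passes positive values through unchanged.

>Next comes pooling with a 3×3 kernel:

> Input: 96×55×55

> Kernel: 3×3, stride=2

> Output: 96×27×27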

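- Layer 2: convolution and pooling

>Convolution comes first.

>Input: 96×27×27

>Filters: 5×5, stride=1, padding=2, 96×256 two-dimensional kernels in total (256 filters, each with 96 channel slices)

>Output: 256×27×27 (that is, 256 feature maps of size 27×27)

>ReLU is then applied, zeroing the negative values in the feature maps.

>Next comes pooling with a 3×3 kernel:

> Input: 256×27×27

> Kernel: 3×3, stride=2

> Output: 256×13×13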

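- Layer 3: convolution only, no pooling

>Convolution comes first.

>Input: 256×13×13

>Filters: 3×3, stride=1, padding=1, 256×384 two-dimensional kernels in total (384 filters, each with 256 channel slices)

>Output: 384×13×13

>ReLU is then applied, zeroing the negative values in the feature maps.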

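- Layer 4: convolution only, no pooling

>Convolution comes first.

>Input: 384×13×13

>Filters: 3×3, stride=1, padding=1, 384×384 two-dimensional kernels in total (384 filters, each with 384 channel slices)

>Output: 384×13×13

>ReLU is then applied, zeroing the negative values in the feature maps.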

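- Layer 5: convolution followed by pooling

>Convolution comes first.

>Input: 384×13×13

>Filters: 3×3, stride=1, padding=1, 384×256 two-dimensional kernels in total (256 filters, each with 384 channel slices)

>Output: 256×13×13

>ReLU is then applied, zeroing the negative values in the feature maps.

>Finally, 3×3 max pooling with stride=2 reduces the output to 256×6×6.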

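- Layer 6: fully connected layer

>The fully connected operation comes first.

>Input: 256×6×6 (flattened into 9216 nodes)

>Output: 4096 nodes (the original paper split the network across two GPUs, with 2048 nodes on each)

>ReLU is then applied, zeroing the negative values.

>Dropout follows.

- Layer 7: fully connected layer

>The 4096 nodes are fully connected to another 4096 nodes (again 2048 per GPU in the original paper).

>The result passes through a ReLU activation, followed by Dropout.

- Layer 8: fully connected layer

>The 4096 nodes are fully connected to 1000 nodes

>(because this is a 1000-class classification problem).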
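The feature-map sizes in the walkthrough above all follow from one formula, out = floor((n + 2·padding − kernel) / stride) + 1. Here is a minimal sketch that traces them for a 224×224 input. The padding values are an assumption taken from torchvision's AlexNet configuration (the walkthrough does not state them), and the fifth stage is modeled as a stride-1 convolution followed by pooling, as in torchvision. The names `out_size`, `steps` and `trace` are made up for this illustration.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (n + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding) for every step that touches the spatial size.
# Padding values are assumed from torchvision's AlexNet, not from the text.
steps = [
    ("conv1", 11, 4, 2),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
    ("pool5", 3, 2, 0),
]


def trace(n=224):
    """Return [(layer name, output side length), ...] for an n x n input."""
    sizes = []
    for name, kernel, stride, padding in steps:
        n = out_size(n, kernel, stride, padding)
        sizes.append((name, n))
    return sizes


for name, n in trace():
    print(f"{name}: {n}x{n}")
```

The last size is 6, so the flattened input to the first fully connected layer has 256 × 6 × 6 = 9216 values.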

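1.2 Dropout

- Dropout randomly drops (zeroes the output of) some of a layer's nodes during the forward pass in training. This is an effective way to reduce overfitting.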
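To see this behaviour directly, here is a small sketch using `torch.nn.Dropout`. The input tensor and the seed are arbitrary choices for the demo. In training mode, PyTorch zeroes each element with probability p and scales the survivors by 1/(1 − p); in evaluation mode the layer does nothing.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)          # arbitrary seed, only for repeatability
drop = nn.Dropout(p=0.5)
x = torch.ones(1, 8)

drop.train()                  # training mode: random nodes are dropped
y_train = drop(x)             # each entry is either 0 or 1 / (1 - 0.5) = 2

drop.eval()                   # evaluation mode: dropout is disabled
y_eval = drop(x)              # identical to x

print(y_train)
print(y_eval)
```

This is why a model containing Dropout should be switched with `model.train()` before training and `model.eval()` before testing.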

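2 VGGNet

- The distinguishing feature of this model is that it stacks several 3×3 kernels in place of the 11×11 and 5×5 kernels used in AlexNet.

- Benefits: ① fewer trainable parameters and lower resource consumption (three stacked 3×3 convolutions cover the same receptive field as one 7×7 convolution, and two stacked 3×3 convolutions the same as one 5×5); ② more nonlinear transformations, which improves the network's ability to learn features.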
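The parameter saving can be checked with a little arithmetic. The sketch below assumes every layer has C input channels and C output channels and ignores biases; `C = 64` is just an illustrative value, and both helper functions are invented for this example.

```python
def conv_weights(kernel, channels, layers=1):
    """Weights in `layers` stacked kernel x kernel convs (C in, C out, no bias)."""
    return layers * kernel * kernel * channels * channels


def receptive_field(kernel, layers):
    """Receptive field of `layers` stacked stride-1 convs with the same kernel."""
    return layers * (kernel - 1) + 1


C = 64  # hypothetical channel count

print(receptive_field(3, 3), conv_weights(3, C, layers=3), conv_weights(7, C))
print(receptive_field(3, 2), conv_weights(3, C, layers=2), conv_weights(5, C))
```

With these assumptions, three 3×3 layers use 27·C² weights against 49·C² for one 7×7 layer, and two 3×3 layers use 18·C² against 25·C² for one 5×5 layer.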

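3 ResNet

- ResNet was proposed in 2015 by Microsoft Research. The authors, shown below, are all leading figures in machine learning, and their papers are well worth reading during graduate study!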

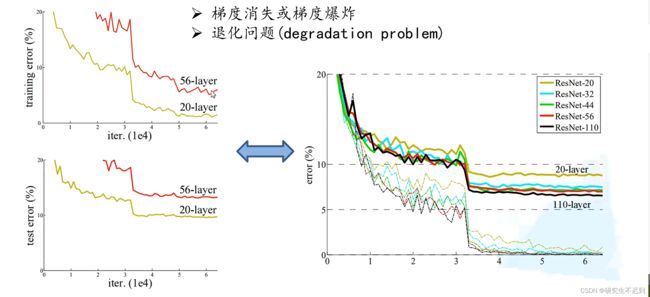

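- Why can a deeper network perform worse?

- Causes: ① vanishing and exploding gradients, which are addressed with batch normalization; ② the degradation problem, which is addressed with residual connections.

- Solution: add a "shortcut" connection that skips over two or more layers, so the block outputs F(x) + x rather than F(x) alone.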
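As a rough illustration of the shortcut idea, here is a minimal residual block in PyTorch. It is a simplified sketch rather than the exact block from the ResNet paper or from torchvision: it assumes the input and output have the same number of channels and the same spatial size, so the input can be added back without any projection. The class name `ResidualBlock` is chosen for this example.

```python
import torch
import torch.nn as nn


class ResidualBlock(nn.Module):
    """Two 3x3 conv layers with a shortcut that adds the input back."""

    def __init__(self, channels):
        super().__init__()
        self.conv1 = nn.Conv2d(channels, channels, kernel_size=3, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(channels)
        self.conv2 = nn.Conv2d(channels, channels, kernel_size=3, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(channels)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        out = self.relu(self.bn1(self.conv1(x)))   # first layer of F(x)
        out = self.bn2(self.conv2(out))            # second layer of F(x)
        return self.relu(out + x)                  # shortcut: F(x) + x


block = ResidualBlock(16)
x = torch.randn(2, 16, 8, 8)
y = block(x)
print(y.shape)
```

Because the block only has to learn the residual F(x), it can fall back to something close to the identity mapping when extra depth does not help.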

4 Hands-On Transfer Learning with AlexNet

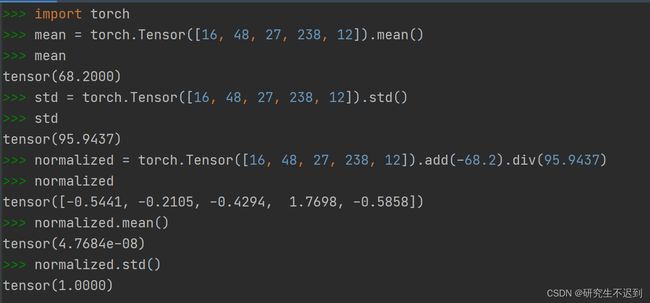

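4.1 Normalization

- "Zero-mean normalization" (standardization): subtract the mean and divide by the standard deviation, so that the data has mean 0 and variance 1.

- Example: [16, 48, 27, 238, 12] is normalized to [-0.5441, -0.2105, -0.4294, 1.7698, -0.5858]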

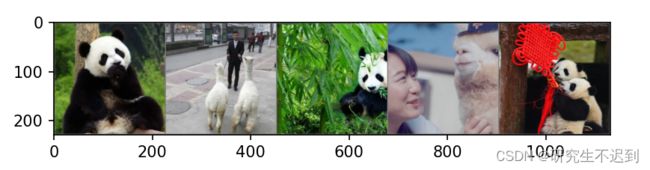
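The example numbers can be reproduced in a few lines of standard-library Python. One detail is an inference on my part: the values only match when the sample standard deviation (dividing by n − 1) is used, which is also the default behaviour of `torch.std`.

```python
import statistics

data = [16, 48, 27, 238, 12]

mean = statistics.mean(data)    # 68.2
std = statistics.stdev(data)    # sample standard deviation (divides by n - 1)

normalized = [round((x - mean) / std, 4) for x in data]
print(normalized)
```

The normalized values have mean 0, and their sample variance is 1.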

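4.2 Image Processing

- This step needs a dataset. I have uploaded it to my CSDN downloads; if you need it, you can get it from the link below.

https://download.csdn.net/download/weixin_42521185/85052167

- After downloading, unzip it and place it in the same directory as the .py file (the code expects the folders data/train and data/test).

- Run the code below.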

import os

import matplotlib.pyplot as plt
import torchvision
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

# Resize, crop to AlexNet's 224x224 input size, and map pixel values to [-1, 1].
# transforms.Scale has been removed from torchvision; transforms.Resize replaces it.
data_transforms = {
    'train': transforms.Compose([
        transforms.Resize(230),
        transforms.CenterCrop(224),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
        transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
    ]),
    'test': transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
    ])
}

data_directory = 'data'

# ImageFolder takes the class labels from the sub-directory names.
trainset = datasets.ImageFolder(os.path.join(data_directory, 'train'),
                                data_transforms['train'])
testset = datasets.ImageFolder(os.path.join(data_directory, 'test'),
                               data_transforms['test'])

trainloader = DataLoader(trainset, batch_size=5, shuffle=True, num_workers=4)
# The test set does not need to be shuffled.
testloader = DataLoader(testset, batch_size=5, shuffle=False, num_workers=4)


def imshow(inputs):
    inputs = inputs / 2 + 0.5                      # undo Normalize(0.5, 0.5)
    inputs = inputs.numpy().transpose((1, 2, 0))   # CHW -> HWC for matplotlib
    plt.imshow(inputs)
    plt.show()


if __name__ == '__main__':
    # Show one batch of training images as a grid.
    inputs, classes = next(iter(trainloader))
    imshow(torchvision.utils.make_grid(inputs))
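One detail in the code above is worth spelling out: why `imshow` computes `inputs / 2 + 0.5`. `transforms.Normalize` computes (x − mean) / std per channel, so with mean 0.5 and std 0.5 it maps pixel values from [0, 1] to [−1, 1], and dividing by 2 and adding 0.5 undoes exactly that. The sketch below checks this on a few scalar values in plain Python; the helper names are made up for the example.

```python
def normalize(x, mean=0.5, std=0.5):
    """What transforms.Normalize computes for each value: (x - mean) / std."""
    return (x - mean) / std


def unnormalize(y):
    """The inverse used in imshow: y / 2 + 0.5."""
    return y / 2 + 0.5


pixels = [0.0, 0.25, 0.5, 1.0]                # values as produced by ToTensor
scaled = [normalize(p) for p in pixels]       # now in [-1, 1]
restored = [unnormalize(s) for s in scaled]   # back in [0, 1]

print(scaled)
print(restored)
```

If the mean or std passed to `Normalize` were changed, the inverse in `imshow` would have to change with it.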

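4.3 Loading a Pretrained Model

- The torchvision package includes models such as AlexNet, VGG, ResNet, and SqueezeNet.

- Parameter: pretrained=True loads the model parameters obtained by training on the ImageNet dataset. (Since torchvision 0.13 this argument is deprecated in favor of weights=, for example weights=models.AlexNet_Weights.DEFAULT.)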

from torchvision import models

alexnet = models.alexnet(pretrained=True)

print(alexnet)

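- The AlexNet model is shown below (this is also the printed output). Note that torchvision's implementation uses different channel counts in its convolutional layers (64, 192, 384, 256, 256) from the original architecture described in Section 1.1 (96, 256, 384, 384, 256).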

AlexNet(
  (features): Sequential(
    (0): Conv2d(3, 64, kernel_size=(11, 11), stride=(4, 4), padding=(2, 2))
    (1): ReLU(inplace=True)
    (2): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
    (3): Conv2d(64, 192, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
    (4): ReLU(inplace=True)
    (5): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
    (6): Conv2d(192, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (7): ReLU(inplace=True)
    (8): Conv2d(384, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (9): ReLU(inplace=True)
    (10): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (11): ReLU(inplace=True)
    (12): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (avgpool): AdaptiveAvgPool2d(output_size=(6, 6))
  (classifier): Sequential(
    (0): Dropout(p=0.5, inplace=False)
    (1): Linear(in_features=9216, out_features=4096, bias=True)
    (2): ReLU(inplace=True)
    (3): Dropout(p=0.5, inplace=False)
    (4): Linear(in_features=4096, out_features=4096, bias=True)
    (5): ReLU(inplace=True)
    (6): Linear(in_features=4096, out_features=1000, bias=True)
  )
)

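4.4 Redefining AlexNet's classifier Module

- To build a binary classifier, we need to redefine AlexNet's classifier module.

- The sizes of the first two fully connected layers stay the same; only the output of the last layer changes to 2. (It was 1000 before, for the 1000-class problem.) Note that the code below creates new layers for the whole classifier, so all three fully connected layers are re-initialized and trained, while the convolutional layers stay frozen.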

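import torch.nn as nn

# Freeze every pretrained parameter so that no gradients are computed for them.
for param in alexnet.parameters():
    param.requires_grad = False

# Replace the classifier. Newly created layers have requires_grad=True by default,
# so only these layers will be trained.
alexnet.classifier = nn.Sequential(
    nn.Dropout(),
    nn.Linear(256 * 6 * 6, 4096),
    nn.ReLU(inplace=True),
    nn.Dropout(),
    nn.Linear(4096, 4096),
    nn.ReLU(inplace=True),
    nn.Linear(4096, 2)
)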

5 Complete Code

import os

import torch
import torch.nn as nn
from torch import optim
from torch.utils.data import DataLoader
from torchvision import datasets, models, transforms

# transforms.Scale has been removed from torchvision; transforms.Resize replaces it.
data_transforms = {
    'train': transforms.Compose([
        transforms.Resize(230),
        transforms.CenterCrop(224),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
        transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
    ]),
    'test': transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
    ]),
}

data_directory = 'data'
model_path = os.path.join(data_directory, 'models', 'tl_model.pth')

trainset = datasets.ImageFolder(os.path.join(data_directory, 'train'), data_transforms['train'])
testset = datasets.ImageFolder(os.path.join(data_directory, 'test'), data_transforms['test'])

trainloader = DataLoader(trainset, batch_size=5, shuffle=True, num_workers=2)
testloader = DataLoader(testset, batch_size=5, shuffle=False, num_workers=2)

# Load the ImageNet-pretrained AlexNet and freeze all of its parameters.
alexnet = models.alexnet(pretrained=True)
for param in alexnet.parameters():
    param.requires_grad = False

# Replace the classifier with a 2-class head; the new layers are trainable by default.
alexnet.classifier = nn.Sequential(
    nn.Dropout(),
    nn.Linear(256 * 6 * 6, 4096),
    nn.ReLU(inplace=True),
    nn.Dropout(),
    nn.Linear(4096, 4096),
    nn.ReLU(inplace=True),
    nn.Linear(4096, 2),
)

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
alexnet = alexnet.to(device)

criterion = nn.CrossEntropyLoss()
# Only the classifier's parameters are handed to the optimizer.
optimizer = optim.SGD(alexnet.classifier.parameters(), lr=0.001, momentum=0.9)


def train(model, criterion, optimizer, epochs=1):
    model.train()  # enable Dropout
    for epoch in range(epochs):
        running_loss = 0.0
        for i, (inputs, labels) in enumerate(trainloader):
            inputs, labels = inputs.to(device), labels.to(device)
            optimizer.zero_grad()
            outputs = model(inputs)
            loss = criterion(outputs, labels)
            loss.backward()
            optimizer.step()

            running_loss += loss.item()
            if i % 10 == 9:
                # Average over the 10 batches since the last report.
                print('[Epoch:%d, Batch:%5d] Loss: %.3f' % (epoch + 1, i + 1, running_loss / 10))
                running_loss = 0.0
    print('Finished Training')


def test(testloader, model):
    model.eval()  # disable Dropout
    correct = 0
    total = 0
    with torch.no_grad():  # no gradients are needed for evaluation
        for images, labels in testloader:
            images, labels = images.to(device), labels.to(device)
            outputs = model(images)
            predicted = outputs.argmax(dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print('Accuracy on the test set: %d %%' % (100 * correct / total))


def load_param(model, path):
    if os.path.exists(path):
        model.load_state_dict(torch.load(path, map_location=device))


def save_param(model, path):
    # Create the target directory first so that torch.save does not fail.
    os.makedirs(os.path.dirname(path), exist_ok=True)
    torch.save(model.state_dict(), path)


if __name__ == '__main__':
    # load_param(alexnet, model_path)
    train(alexnet, criterion, optimizer, epochs=5)
    save_param(alexnet, model_path)
    test(testloader, alexnet)

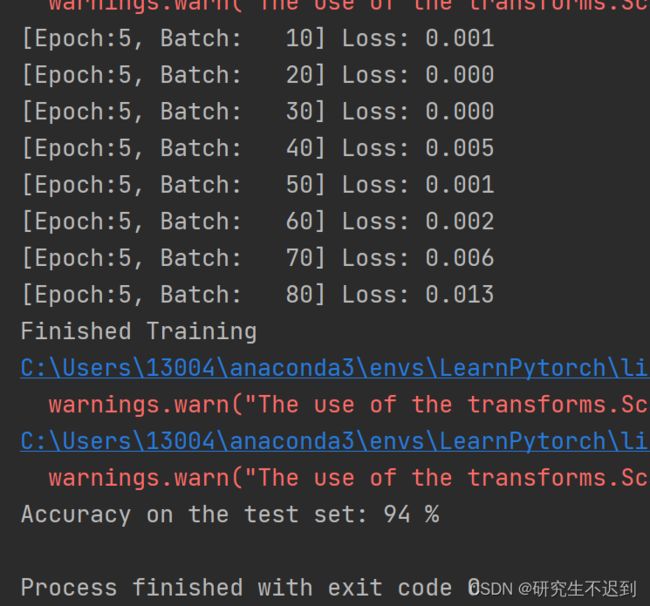

- 输出结果:
