Kaldi: Building a Speech Recognition System from Scratch

Reference blog

Building a DNN-HMM Speech Recognition System

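This post builds a DNN-HMM speech recognition system for Mandarin Chinese on the Kaldi platform and later analyzes its recognition accuracy. Building a complete DNN-HMM system starts with preparing the training data, which comprises audio data, acoustic data and language data, each in the specific format Kaldi expects. Training scripts then build a triphone GMM-HMM model (bootstrapped from a monophone model), which is used to force-align the training data; finally, a DNN training script trains the DNN model on those alignments.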

1. Create a project directory

liuyuanyuan@liuyuanyuan-Lenovo-C4005:~/kaldi/egs$ mkdir -p speech/s5

liuyuanyuan@liuyuanyuan-Lenovo-C4005:~/kaldi/egs$ cd speech/s5

2. Link the utility scripts (utils) and training scripts (steps) from the official wsj example

ln -s ../../wsj/s5/utils/ utils

ln -s ../../wsj/s5/steps/ steps

3. Copy the environment setup script (path.sh)

cp ../../wsj/s5/path.sh ./
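Steps 1 to 3 can be collected into one small shell function. The sketch below assumes the Kaldi egs directory (~/kaldi/egs in the session above) already contains wsj/s5; the function name setup_recipe is hypothetical, not part of Kaldi. Because the new speech/s5 directory sits at the same depth as wsj/s5, the relative link targets resolve to the wsj scripts, and the KALDI_ROOT line in the copied path.sh (defined relative to the current directory in recent Kaldi versions) should still point at the Kaldi root.

```shell
# Sketch of steps 1-3 (hypothetical helper, not part of Kaldi).
# $1: the Kaldi egs/ directory (must already contain wsj/s5)
# $2: name of the new recipe directory (default: speech)
setup_recipe() {
  local egs="$1" name="${2:-speech}"
  mkdir -p "$egs/$name/s5" || return 1
  (
    cd "$egs/$name/s5" || exit 1
    # Relative targets resolve from egs/<name>/s5 back to egs/wsj/s5.
    # -f replaces an existing link; -n treats an existing link to a
    # directory as a plain file, so no new link is created inside it.
    ln -sfn ../../wsj/s5/utils utils &&
      ln -sfn ../../wsj/s5/steps steps &&
      cp ../../wsj/s5/path.sh ./
  )
}
```

For example, setup_recipe ~/kaldi/egs speech reproduces the directory from step 1; running it a second time is harmless.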

4. Data preparation

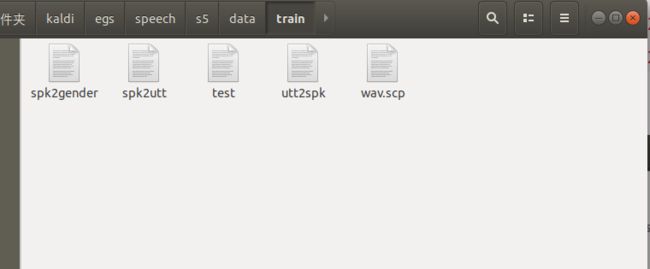

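Create data/train and data/test (data/dev is optional). Each of these directories must contain four files: text (utterance ID and transcription), wav.scp (utterance ID and audio file), utt2spk (utterance ID and speaker ID) and spk2utt (speaker ID and that speaker's utterance IDs).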
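As a rough illustration of these file formats, the sketch below writes wav.scp, utt2spk and text for one data directory. It assumes a hypothetical corpus layout that the post does not describe: one WAV file per utterance named <speaker>_<utterance>.wav, and a transcript file with one "<utterance-id> word1 word2 ..." line per utterance. Kaldi treats whitespace-separated tokens in text as words, so Mandarin transcripts normally need word segmentation (or splitting into characters) first, and every token must appear in the lexicon. The function name make_data_dir is likewise hypothetical. Putting the speaker ID at the start of each utterance ID keeps all the files consistently sorted, which Kaldi's validation scripts check.

```shell
# Sketch: write wav.scp, utt2spk and text for one data directory.
# ASSUMED, hypothetical corpus layout (not from the original post):
#   $1: directory of <speaker>_<utterance>.wav files, one per utterance
#   $2: transcript file, one "<speaker>_<utterance> word1 word2 ..." line each
#   $3: output data directory, e.g. data/train
make_data_dir() {
  local wav_dir="$1" trans="$2" out="$3" wav utt abs_dir
  mkdir -p "$out" || return 1
  abs_dir=$(cd "$wav_dir" && pwd) || return 1
  : > "$out/wav.scp.tmp"
  : > "$out/utt2spk.tmp"
  for wav in "$abs_dir"/*.wav; do
    [ -e "$wav" ] || continue                       # glob matched nothing
    utt=$(basename "$wav" .wav)
    echo "$utt $wav" >> "$out/wav.scp.tmp"          # <utt-id> <audio path>
    echo "$utt ${utt%%_*}" >> "$out/utt2spk.tmp"    # <utt-id> <speaker-id>
  done
  # Kaldi expects each file sorted by utterance ID in C-locale order.
  LC_ALL=C sort "$out/wav.scp.tmp" > "$out/wav.scp"
  LC_ALL=C sort "$out/utt2spk.tmp" > "$out/utt2spk"
  rm -f "$out/wav.scp.tmp" "$out/utt2spk.tmp"
  # text: <utt-id> <transcript>, keeping only utterances that have audio.
  awk 'NR == FNR { has_audio[$1] = 1; next } ($1 in has_audio)' \
    "$out/wav.scp" "$trans" | LC_ALL=C sort > "$out/text"
}
```

For example, make_data_dir corpus/train_wav corpus/train_trans.txt data/train, where both corpus paths are placeholders.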

Then generate spk2utt from utt2spk in each directory:

utils/utt2spk_to_spk2utt.pl data/test/utt2spk >data/test/spk2utt

utils/utt2spk_to_spk2utt.pl data/train/utt2spk >data/train/spk2utt
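spk2utt is just the inverse of the utt2spk mapping. The awk function below is an illustrative equivalent of what utt2spk_to_spk2utt.pl produces, not a replacement for the Kaldi script; the name utt2spk_to_spk2utt for a shell function is a hypothetical choice. It groups utterance IDs by speaker, listing speakers in order of first appearance and each speaker's utterances in input order.

```shell
# Illustrative awk equivalent of utils/utt2spk_to_spk2utt.pl
# (hypothetical helper; use the Kaldi script in a real recipe).
# Reads "<utt-id> <spk-id>" lines from the given files (or stdin) and prints
# "<spk-id> <utt-id> <utt-id> ..." with speakers in order of first appearance.
utt2spk_to_spk2utt() {
  awk '
    !($2 in utts) { order[++n] = $2; utts[$2] = $1; next }  # new speaker
    { utts[$2] = utts[$2] " " $1 }                          # known speaker
    END { for (i = 1; i <= n; i++) print order[i], utts[order[i]] }
  ' "$@"
}
```

For example, utt2spk_to_spk2utt data/train/utt2spk prints the same kind of speaker-to-utterances listing that the Perl script writes to data/train/spk2utt.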

Data preparation is complete. Next, build the n-gram language model:

sh Create_ngram_LM.sh
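Create_ngram_LM.sh itself is not shown; judging by the data/lang_bigram directory used later, it builds a bigram model. As an illustration only of what such a model counts, the sketch below computes unsmoothed maximum-likelihood bigram probabilities, P(w2 | w1) = c(w1 w2) / c(w1), from a Kaldi text file, adding <s> and </s> sentence markers. A real recipe would instead use an LM toolkit with smoothing to produce an ARPA file and then convert it to G.fst in the lang directory (for example with Kaldi's arpa2fst). The function name bigram_mle is hypothetical.

```shell
# Illustration only (hypothetical helper, NOT the author's Create_ngram_LM.sh):
# unsmoothed maximum-likelihood bigram estimates P(w2 | w1) = c(w1 w2) / c(w1)
# from a Kaldi text file ("<utt-id> w1 w2 ..."), with <s> and </s> markers.
# Output: one "w1 w2 probability" line per observed bigram, C-locale sorted.
bigram_mle() {
  awk '
    {
      prev = "<s>"
      for (i = 2; i <= NF + 1; i++) {   # field 1 is the utterance ID
        w = (i <= NF) ? $i : "</s>"
        hist[prev]++                    # c(w1): times w1 is followed by a word
        pair[prev " " w]++              # c(w1 w2)
        prev = w
      }
    }
    END {
      for (b in pair) {
        split(b, p, " ")
        printf "%s %.4f\n", b, pair[b] / hist[p[1]]
      }
    }
  ' "$@" | LC_ALL=C sort
}
```

Applied to data/train/text, this shows which word pairs the training transcripts support; unseen pairs get no probability at all, which is exactly what smoothing in a real LM toolkit fixes.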

Then train and decode the monophone, triphone and DNN-HMM systems:

sh DNN_train.sh
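DNN_train.sh is not listed in the post. The skeleton below is reconstructed from the commands echoed in the output that follows, so the options match that run. The utils/fix_data_dir.sh and utils/mkgraph.sh calls are inferred from their messages (for example, the warning about the deprecated --mono option is printed by mkgraph.sh) rather than copied, and the run and run_pipeline helpers and the DRY_RUN switch are hypothetical additions. It assumes it is run from the recipe directory with path.sh sourced and data/lang_bigram already built.

```shell
# Skeleton of the stages DNN_train.sh appears to run, reconstructed from its
# output (fix_data_dir.sh and mkgraph.sh calls are INFERRED, not copied).
# Hypothetical helpers: with DRY_RUN=1, commands are printed, not executed.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@" || exit 1; fi
}

run_pipeline() {
  local lang=data/lang_bigram exp=exp_FG nj=18 x
  local dnn="$exp/DNN_tri_8_2000_aligned_layer3_nodes256"

  # MFCC features and CMVN statistics for train and test
  for x in train test; do
    run utils/fix_data_dir.sh data/$x
    run steps/make_mfcc.sh --cmd run.pl --nj 10 data/$x $exp/make_mfcc/$x mfcc
    run steps/compute_cmvn_stats.sh data/$x $exp/make_mfcc/$x mfcc
    run utils/validate_data_dir.sh data/$x
  done

  # Monophone GMM-HMM: train, build the decoding graph, decode
  run steps/train_mono.sh --nj $nj --cmd run.pl data/train $lang $exp/mono
  run utils/mkgraph.sh --mono $lang $exp/mono $exp/mono/graph
  run steps/decode.sh --nj $nj --cmd run.pl $exp/mono/graph data/test $exp/mono/decode

  # Triphone GMM-HMM on delta features (2000 tree leaves, 16000 Gaussians)
  run steps/align_si.sh --boost-silence 1.25 --nj $nj --cmd run.pl data/train $lang $exp/mono $exp/mono_ali
  run steps/train_deltas.sh --cmd run.pl 2000 16000 data/train $lang $exp/mono_ali $exp/tri_8_2000
  run utils/mkgraph.sh $lang $exp/tri_8_2000 $exp/tri_8_2000/graph
  run steps/decode.sh --nj $nj --cmd run.pl $exp/tri_8_2000/graph data/test $exp/tri_8_2000/decode

  # Force-align with the triphone model, then train an nnet2 tanh DNN on it
  run steps/align_si.sh --nj $nj --cmd run.pl data/train $lang $exp/tri_8_2000 $exp/tri_8_2000_ali
  run steps/nnet2/train_tanh.sh --mix-up 5000 --initial-learning-rate 0.015 \
    --final-learning-rate 0.002 --num-hidden-layers 3 --minibatch-size 128 \
    --hidden-layer-dim 256 --num-jobs-nnet $nj --cmd run.pl --num-epochs 15 \
    data/train $lang $exp/tri_8_2000_ali $dnn
  # The DNN decodes with the triphone system's graph
  run steps/nnet2/decode.sh --cmd run.pl --nj $nj $exp/tri_8_2000/graph data/test $dnn/decode
}
```

DRY_RUN=1 run_pipeline prints the 18 commands without executing anything; plain run_pipeline executes them in order and stops at the first failure.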

Results

liuyuanyuan@liuyuanyuan-Lenovo-C4005:~/kaldi/egs/asr1$ sh DNN_train.sh

============================================================================

MFCC Feature Extration & CMVN for Training

============================================================================

fix_data_dir.sh: kept all 558 utterances.

fix_data_dir.sh: old files are kept in data/train/.backup

steps/make_mfcc.sh --cmd run.pl --nj 10 data/train exp_FG/make_mfcc/train mfcc

steps/make_mfcc.sh: moving data/train/feats.scp to data/train/.backup

utils/validate_data_dir.sh: Successfully validated data-directory data/train

steps/make_mfcc.sh: [info]: no segments file exists: assuming wav.scp indexed by utterance.

Succeeded creating MFCC features for train

steps/compute_cmvn_stats.sh data/train exp_FG/make_mfcc/train mfcc

Succeeded creating CMVN stats for train

utils/validate_data_dir.sh: Successfully validated data-directory data/train

fix_data_dir.sh: kept all 162 utterances.

fix_data_dir.sh: old files are kept in data/test/.backup

steps/make_mfcc.sh --cmd run.pl --nj 10 data/test exp_FG/make_mfcc/test mfcc

steps/make_mfcc.sh: moving data/test/feats.scp to data/test/.backup

utils/validate_data_dir.sh: Successfully validated data-directory data/test

steps/make_mfcc.sh: [info]: no segments file exists: assuming wav.scp indexed by utterance.

Succeeded creating MFCC features for test

steps/compute_cmvn_stats.sh data/test exp_FG/make_mfcc/test mfcc

Succeeded creating CMVN stats for test

utils/validate_data_dir.sh: Successfully validated data-directory data/test

============================================================================

MonoPhone Training

============================================================================

steps/train_mono.sh --nj 18 --cmd run.pl data/train data/lang_bigram exp_FG/mono

steps/train_mono.sh: Initializing monophone system.

steps/train_mono.sh: Compiling training graphs

steps/train_mono.sh: Aligning data equally (pass 0)

steps/train_mono.sh: Pass 1

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 2

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 3

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 4

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 5

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 6

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 7

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 8

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 9

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 10

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 11

steps/train_mono.sh: Pass 12

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 13

steps/train_mono.sh: Pass 14

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 15

steps/train_mono.sh: Pass 16

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 17

steps/train_mono.sh: Pass 18

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 19

steps/train_mono.sh: Pass 20

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 21

steps/train_mono.sh: Pass 22

steps/train_mono.sh: Pass 23

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 24

steps/train_mono.sh: Pass 25

steps/train_mono.sh: Pass 26

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 27

steps/train_mono.sh: Pass 28

steps/train_mono.sh: Pass 29

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 30

steps/train_mono.sh: Pass 31

steps/train_mono.sh: Pass 32

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 33

steps/train_mono.sh: Pass 34

steps/train_mono.sh: Pass 35

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 36

steps/train_mono.sh: Pass 37

steps/train_mono.sh: Pass 38

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 39

steps/diagnostic/analyze_alignments.sh --cmd run.pl data/lang_bigram exp_FG/mono

analyze_phone_length_stats.py: WARNING: optional-silence sil is seen only 15.0537634409% of the time at utterance begin. This may not be optimal.

analyze_phone_length_stats.py: WARNING: optional-silence sil is seen only 11.8279569892% of the time at utterance end. This may not be optimal.

steps/diagnostic/analyze_alignments.sh: see stats in exp_FG/mono/log/analyze_alignments.log

2 warnings in exp_FG/mono/log/analyze_alignments.log

467 warnings in exp_FG/mono/log/align.*.*.log

exp_FG/mono: nj=18 align prob=-87.17 over 0.43h [retry=0.9%, fail=0.0%] states=159 gauss=1001

steps/train_mono.sh: Done training monophone system in exp_FG/mono

============================================================================

MonoPhone Testing

============================================================================

WARNING: the --mono, --left-biphone and --quinphone options are now deprecated and ignored.

tree-info exp_FG/mono/tree

tree-info exp_FG/mono/tree

make-h-transducer --disambig-syms-out=exp_FG/mono/graph/disambig_tid.int --transition-scale=1.0 data/lang_bigram/tmp/ilabels_1_0 exp_FG/mono/tree exp_FG/mono/final.mdl

fstrmsymbols exp_FG/mono/graph/disambig_tid.int

fstrmepslocal

fstdeterminizestar --use-log=true

fstminimizeencoded

fsttablecompose exp_FG/mono/graph/Ha.fst data/lang_bigram/tmp/CLG_1_0.fst

fstisstochastic exp_FG/mono/graph/HCLGa.fst

0.000440476 -0.000462013

add-self-loops --self-loop-scale=0.1 --reorder=true exp_FG/mono/final.mdl

steps/decode.sh --nj 18 --cmd run.pl exp_FG/mono/graph data/test exp_FG/mono/decode

decode.sh: feature type is delta

steps/diagnostic/analyze_lats.sh --cmd run.pl exp_FG/mono/graph exp_FG/mono/decode

analyze_phone_length_stats.py: WARNING: optional-silence sil is seen only 11.1111111111% of the time at utterance begin. This may not be optimal.

analyze_phone_length_stats.py: WARNING: optional-silence sil is seen only 8.64197530864% of the time at utterance end. This may not be optimal.

steps/diagnostic/analyze_lats.sh: see stats in exp_FG/mono/decode/log/analyze_alignments.log

Overall, lattice depth (10,50,90-percentile)=(1,1,3) and mean=1.6

steps/diagnostic/analyze_lats.sh: see stats in exp_FG/mono/decode/log/analyze_lattice_depth_stats.log

============================================================================

Tri-phone Training

============================================================================

steps/align_si.sh --boost-silence 1.25 --nj 18 --cmd run.pl data/train data/lang_bigram exp_FG/mono exp_FG/mono_ali

steps/align_si.sh: feature type is delta

steps/align_si.sh: aligning data in data/train using model from exp_FG/mono, putting alignments in exp_FG/mono_ali

steps/diagnostic/analyze_alignments.sh --cmd run.pl data/lang_bigram exp_FG/mono_ali

analyze_phone_length_stats.py: WARNING: optional-silence sil is seen only 15.0537634409% of the time at utterance begin. This may not be optimal.

analyze_phone_length_stats.py: WARNING: optional-silence sil is seen only 11.8279569892% of the time at utterance end. This may not be optimal.

steps/diagnostic/analyze_alignments.sh: see stats in exp_FG/mono_ali/log/analyze_alignments.log

steps/align_si.sh: done aligning data.

=========================

Sen = 2000 Gauss = 16000

=========================

steps/train_deltas.sh --cmd run.pl 2000 16000 data/train data/lang_bigram exp_FG/mono_ali exp_FG/tri_8_2000

steps/train_deltas.sh: accumulating tree stats

steps/train_deltas.sh: getting questions for tree-building, via clustering

steps/train_deltas.sh: building the tree

steps/train_deltas.sh: converting alignments from exp_FG/mono_ali to use current tree

steps/train_deltas.sh: compiling graphs of transcripts

steps/train_deltas.sh: training pass 1

steps/train_deltas.sh: training pass 2

steps/train_deltas.sh: training pass 3

steps/train_deltas.sh: training pass 4

steps/train_deltas.sh: training pass 5

steps/train_deltas.sh: training pass 6

steps/train_deltas.sh: training pass 7

steps/train_deltas.sh: training pass 8

steps/train_deltas.sh: training pass 9

steps/train_deltas.sh: training pass 10

steps/train_deltas.sh: aligning data

steps/train_deltas.sh: training pass 11

steps/train_deltas.sh: training pass 12

steps/train_deltas.sh: training pass 13

steps/train_deltas.sh: training pass 14

steps/train_deltas.sh: training pass 15

steps/train_deltas.sh: training pass 16

steps/train_deltas.sh: training pass 17

steps/train_deltas.sh: training pass 18

steps/train_deltas.sh: training pass 19

steps/train_deltas.sh: training pass 20

steps/train_deltas.sh: aligning data

steps/train_deltas.sh: training pass 21

steps/train_deltas.sh: training pass 22

steps/train_deltas.sh: training pass 23

steps/train_deltas.sh: training pass 24

steps/train_deltas.sh: training pass 25

steps/train_deltas.sh: training pass 26

steps/train_deltas.sh: training pass 27

steps/train_deltas.sh: training pass 28

steps/train_deltas.sh: training pass 29

steps/train_deltas.sh: training pass 30

steps/train_deltas.sh: aligning data

steps/train_deltas.sh: training pass 31

steps/train_deltas.sh: training pass 32

steps/train_deltas.sh: training pass 33

steps/train_deltas.sh: training pass 34

steps/diagnostic/analyze_alignments.sh --cmd run.pl data/lang_bigram exp_FG/tri_8_2000

analyze_phone_length_stats.py: WARNING: optional-silence sil is seen only 15.0537634409% of the time at utterance begin. This may not be optimal.

analyze_phone_length_stats.py: WARNING: optional-silence sil is seen only 11.8279569892% of the time at utterance end. This may not be optimal.

steps/diagnostic/analyze_alignments.sh: see stats in exp_FG/tri_8_2000/log/analyze_alignments.log

2 warnings in exp_FG/tri_8_2000/log/analyze_alignments.log

54 warnings in exp_FG/tri_8_2000/log/align.*.*.log

109 warnings in exp_FG/tri_8_2000/log/init_model.log

1110 warnings in exp_FG/tri_8_2000/log/update.*.log

1 warnings in exp_FG/tri_8_2000/log/build_tree.log

exp_FG/tri_8_2000: nj=18 align prob=-64.72 over 0.43h [retry=0.0%, fail=0.0%] states=376 gauss=7619 tree-impr=7.78

steps/train_deltas.sh: Done training system with delta+delta-delta features in exp_FG/tri_8_2000

============================================================================

Tri-phone Decoding

============================================================================

=========================

Sen = 2000

=========================

tree-info exp_FG/tri_8_2000/tree

tree-info exp_FG/tri_8_2000/tree

make-h-transducer --disambig-syms-out=exp_FG/tri_8_2000/graph/disambig_tid.int --transition-scale=1.0 data/lang_bigram/tmp/ilabels_3_1 exp_FG/tri_8_2000/tree exp_FG/tri_8_2000/final.mdl

fsttablecompose exp_FG/tri_8_2000/graph/Ha.fst data/lang_bigram/tmp/CLG_3_1.fst

fstdeterminizestar --use-log=true

fstrmepslocal

fstminimizeencoded

fstrmsymbols exp_FG/tri_8_2000/graph/disambig_tid.int

fstisstochastic exp_FG/tri_8_2000/graph/HCLGa.fst

0.000588827 -0.000462013

add-self-loops --self-loop-scale=0.1 --reorder=true exp_FG/tri_8_2000/final.mdl

steps/decode.sh --nj 18 --cmd run.pl exp_FG/tri_8_2000/graph data/test exp_FG/tri_8_2000/decode

decode.sh: feature type is delta

steps/diagnostic/analyze_lats.sh --cmd run.pl exp_FG/tri_8_2000/graph exp_FG/tri_8_2000/decode

analyze_phone_length_stats.py: WARNING: optional-silence sil is seen only 18.5185185185% of the time at utterance begin. This may not be optimal.

analyze_phone_length_stats.py: WARNING: optional-silence sil is seen only 16.049382716% of the time at utterance end. This may not be optimal.

steps/diagnostic/analyze_lats.sh: see stats in exp_FG/tri_8_2000/decode/log/analyze_alignments.log

Overall, lattice depth (10,50,90-percentile)=(1,1,2) and mean=1.3

steps/diagnostic/analyze_lats.sh: see stats in exp_FG/tri_8_2000/decode/log/analyze_lattice_depth_stats.log

============================================================================

DNN Hybrid Training

============================================================================

steps/align_si.sh --nj 18 --cmd run.pl data/train data/lang_bigram exp_FG/tri_8_2000 exp_FG/tri_8_2000_ali

steps/align_si.sh: feature type is delta

steps/align_si.sh: aligning data in data/train using model from exp_FG/tri_8_2000, putting alignments in exp_FG/tri_8_2000_ali

steps/diagnostic/analyze_alignments.sh --cmd run.pl data/lang_bigram exp_FG/tri_8_2000_ali

analyze_phone_length_stats.py: WARNING: optional-silence sil is seen only 15.0537634409% of the time at utterance begin. This may not be optimal.

analyze_phone_length_stats.py: WARNING: optional-silence sil is seen only 11.8279569892% of the time at utterance end. This may not be optimal.

steps/diagnostic/analyze_alignments.sh: see stats in exp_FG/tri_8_2000_ali/log/analyze_alignments.log

steps/align_si.sh: done aligning data.

steps/nnet2/train_tanh.sh --mix-up 5000 --initial-learning-rate 0.015 --final-learning-rate 0.002 --num-hidden-layers 3 --minibatch-size 128 --hidden-layer-dim 256 --num-jobs-nnet 18 --cmd run.pl --num-epochs 15 data/train data/lang_bigram exp_FG/tri_8_2000_ali exp_FG/DNN_tri_8_2000_aligned_layer3_nodes256

steps/nnet2/train_tanh.sh: calling get_lda.sh

steps/nnet2/get_lda.sh --transform-dir exp_FG/tri_8_2000_ali --splice-width 4 --cmd run.pl data/train data/lang_bigram exp_FG/tri_8_2000_ali exp_FG/DNN_tri_8_2000_aligned_layer3_nodes256

steps/nnet2/get_lda.sh: feature type is raw

feat-to-dim 'ark,s,cs:utils/subset_scp.pl --quiet 555 data/train/split18/1/feats.scp | apply-cmvn --utt2spk=ark:data/train/split18/1/utt2spk scp:data/train/split18/1/cmvn.scp scp:- ark:- |' -

apply-cmvn --utt2spk=ark:data/train/split18/1/utt2spk scp:data/train/split18/1/cmvn.scp scp:- ark:-

WARNING (feat-to-dim[5.5.773~1-99a8]:Close():kaldi-io.cc:515) Pipe utils/subset_scp.pl --quiet 555 data/train/split18/1/feats.scp | apply-cmvn --utt2spk=ark:data/train/split18/1/utt2spk scp:data/train/split18/1/cmvn.scp scp:- ark:- | had nonzero return status 36096

feat-to-dim 'ark,s,cs:utils/subset_scp.pl --quiet 555 data/train/split18/1/feats.scp | apply-cmvn --utt2spk=ark:data/train/split18/1/utt2spk scp:data/train/split18/1/cmvn.scp scp:- ark:- | splice-feats --left-context=4 --right-context=4 ark:- ark:- |' -

apply-cmvn --utt2spk=ark:data/train/split18/1/utt2spk scp:data/train/split18/1/cmvn.scp scp:- ark:-

splice-feats --left-context=4 --right-context=4 ark:- ark:-

WARNING (feat-to-dim[5.5.773~1-99a8]:Close():kaldi-io.cc:515) Pipe utils/subset_scp.pl --quiet 555 data/train/split18/1/feats.scp | apply-cmvn --utt2spk=ark:data/train/split18/1/utt2spk scp:data/train/split18/1/cmvn.scp scp:- ark:- | splice-feats --left-context=4 --right-context=4 ark:- ark:- | had nonzero return status 36096

steps/nnet2/get_lda.sh: Accumulating LDA statistics.

steps/nnet2/get_lda.sh: Finished estimating LDA

steps/nnet2/train_tanh.sh: calling get_egs.sh

steps/nnet2/get_egs.sh --transform-dir exp_FG/tri_8_2000_ali --splice-width 4 --samples-per-iter 200000 --num-jobs-nnet 18 --stage 0 --cmd run.pl --io-opts --max-jobs-run 5 data/train data/lang_bigram exp_FG/tri_8_2000_ali exp_FG/DNN_tri_8_2000_aligned_layer3_nodes256

steps/nnet2/get_egs.sh: feature type is raw

steps/nnet2/get_egs.sh: working out number of frames of training data

feat-to-len 'scp:head -n 10 data/train/feats.scp|' ark,t:-

steps/nnet2/get_egs.sh: Every epoch, splitting the data up into 1 iterations,

steps/nnet2/get_egs.sh: giving samples-per-iteration of 8702 (you requested 200000).

Getting validation and training subset examples.

steps/nnet2/get_egs.sh: extracting validation and training-subset alignments.

copy-int-vector ark:- ark,t:-

LOG (copy-int-vector[5.5.773~1-99a8]:main():copy-int-vector.cc:83) Copied 558 vectors of int32.

Getting subsets of validation examples for diagnostics and combination.

Creating training examples

Generating training examples on disk

steps/nnet2/get_egs.sh: rearranging examples into parts for different parallel jobs

steps/nnet2/get_egs.sh: Since iters-per-epoch == 1, just concatenating the data.

Shuffling the order of training examples

(in order to avoid stressing the disk, these won't all run at once).

steps/nnet2/get_egs.sh: Finished preparing training examples

steps/nnet2/train_tanh.sh: initializing neural net

Training transition probabilities and setting priors

steps/nnet2/train_tanh.sh: Will train for 15 + 5 epochs, equalling

steps/nnet2/train_tanh.sh: 15 + 5 = 20 iterations,

steps/nnet2/train_tanh.sh: (while reducing learning rate) + (with constant learning rate).

Training neural net (pass 0)

Training neural net (pass 1)

Training neural net (pass 2)

Training neural net (pass 3)

Training neural net (pass 4)

Training neural net (pass 5)

Training neural net (pass 6)

Training neural net (pass 7)

Training neural net (pass 8)

Training neural net (pass 9)

Training neural net (pass 10)

Training neural net (pass 11)

Training neural net (pass 12)

Training neural net (pass 13)

Mixing up from 376 to 5000 components

Training neural net (pass 14)

Training neural net (pass 15)

Training neural net (pass 16)

Training neural net (pass 17)

Training neural net (pass 18)

Training neural net (pass 19)

Setting num_iters_final=5

Getting average posterior for purposes of adjusting the priors.

Re-adjusting priors based on computed posteriors

Done

Cleaning up data

steps/nnet2/remove_egs.sh: Finished deleting examples in exp_FG/DNN_tri_8_2000_aligned_layer3_nodes256/egs

Removing most of the models

============================================================================

DNN Hybrid Testing

============================================================================

steps/nnet2/decode.sh --cmd run.pl --nj 18 exp_FG/tri_8_2000/graph data/test exp_FG/DNN_tri_8_2000_aligned_layer3_nodes256/decode

steps/nnet2/decode.sh: feature type is raw

steps/diagnostic/analyze_lats.sh --cmd run.pl --iter final exp_FG/tri_8_2000/graph exp_FG/DNN_tri_8_2000_aligned_layer3_nodes256/decode

analyze_phone_length_stats.py: WARNING: optional-silence sil is seen only 11.7283950617% of the time at utterance begin. This may not be optimal.

analyze_phone_length_stats.py: WARNING: optional-silence sil is seen only 11.1111111111% of the time at utterance end. This may not be optimal.

steps/diagnostic/analyze_lats.sh: see stats in exp_FG/DNN_tri_8_2000_aligned_layer3_nodes256/decode/log/analyze_alignments.log

Overall, lattice depth (10,50,90-percentile)=(1,1,5) and mean=2.2

steps/diagnostic/analyze_lats.sh: see stats in exp_FG/DNN_tri_8_2000_aligned_layer3_nodes256/decode/log/analyze_lattice_depth_stats.log

score best paths

score confidence and timing with sclite

Decoding done.
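The log stops at "Decoding done." without printing error rates, so they have to be read from the scoring output. The last two messages indicate sclite-based scoring; assuming, as is common in Kaldi recipes that score with sclite, that it leaves sclite summary files (*.sys) under each decode/score_*/ directory, Kaldi's utils/best_wer.sh selects the lowest error rate from their Sum/Avg lines. The awk function below (best_sclite_wer, a hypothetical name) sketches the same selection: in a line of the form | Sum/Avg | #Snt #Wrd | Corr Sub Del Ins Err S.Err |, the error rate is the Err column, the fifth number after the sentence and word counts.

```shell
# Sketch: pick the lowest error rate from sclite "Sum/Avg" summary lines,
# mirroring what Kaldi's utils/best_wer.sh does for this format.
# Expected line shape (optionally prefixed by "file:" when produced by grep):
#   | Sum/Avg | #Snt #Wrd | Corr Sub Del Ins Err S.Err | ...
best_sclite_wer() {
  awk -F'|' '
    /Sum\/Avg/ {
      n = split($4, f, " ")             # Corr Sub Del Ins Err S.Err
      if (n < 5) next                   # not a complete summary line
      if (best == "" || f[5] + 0 < best + 0) { best = f[5]; line = $0 }
    }
    END { if (best != "") printf "%%WER %s %s\n", best, line }
  ' "$@"
}
```

Assuming that layout, grep Sum exp_FG/*/decode*/score_*/*.sys | best_sclite_wer (or the same grep piped into utils/best_wer.sh) reports the best error rate across the monophone, triphone and DNN decodes.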