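Installing K8S with yum, and deploying nginx + tomcat + httpd on K8S from Dockerfiles for static/dynamic separation, with the port mapping kept automatically after containers are rebuilt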

K8S,kubeadm

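- 1. Install K8S with kubeadm
  - 1.1 Initialize the yum repositories on all hosts
  - 1.2 Install docker on all hosts
  - 1.3 Add the K8S repository and install K8S on all hosts
  - 1.4 Initialize the master
  - 1.5 Join the node to the cluster
  - 1.6 Install the kube-flannel network on the master
- 2. Deploy nginx + tomcat + httpd on K8S from Dockerfiles for static/dynamic separation, with the port mapping kept after containers are rebuilt
  - 2.1 Build the nginx image from a Dockerfile on the master
  - 2.2 Build the tomcat image from a Dockerfile on the master
  - 2.3 Build the httpd image from a Dockerfile on the master
  - 2.4 Write the K8S manifests on the master
  - 2.5 Run the workloads on k8s-master and test static/dynamic separation
  - 2.6 Check the external port mapping of the nginx Service
  - 2.7 Rebuild a container and test the port mapping afterwards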

1. Install K8S with kubeadm

K8S components (see the K8S components documentation):

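- kube-apiserver: the single entry point for all operations on K8S resources; it provides authentication, authorization, access control, and API registration

- etcd: a distributed, consistent key-value store that holds all cluster state and configuration; it is what must be backed up to be able to restore the cluster

- kube-scheduler: the pod scheduler; it watches for newly created pods that have no node assigned and, based on the resources available on each node, places them on a node with enough free capacity

- kube-controller-manager: runs the controllers that keep the cluster in its desired state, such as detecting failed nodes, maintaining replica counts, autoscaling, and managing namespaces, endpoints, and service accounts

- kube-proxy: the node-side part of K8S Services; it runs on every node and maintains the iptables/IPVS rules that forward traffic sent to a Service's virtual IP to the backing pods

- kubelet: the agent on every node; it manages the lifecycle of the pods and containers on that node and carries out the instructions it receives from the master (API server)

- kube-dns (CoreDNS in v1.17): provides DNS resolution of Service and pod names inside the cluster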

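The cluster is installed mainly with the kubeadm, kubelet, and docker tools.

kubeadm: see the kubeadm overview

Cluster deployment: see the K8S cluster deployment documentation

Environment: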

| Item | Version / address |
|---|---|
| OS | CentOS 7.7 |
| kubeadm | 1.17.2 |
| kubelet | 1.17.2 |
| docker | 19.03.5 |
| master | 192.168.116.130 |
| node | 192.168.116.132 |

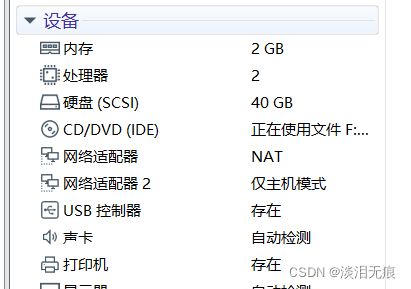

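Requirements for the master and node hosts:

- At least 2 CPU cores (kubeadm's preflight check fails on a master with fewer than 2 CPUs)

- Kernel upgraded to 4.0 or later

Kernel upgrade guide: kernel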
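The two requirements above can be checked with a small shell function before starting. This is a minimal sketch: `check_host` is a hypothetical helper name, and it only compares the major kernel version, so a CentOS 7 stock release string such as `3.10.0-1062.el7.x86_64` fails and a `4.19.x` kernel passes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the host has >= 2 CPUs and a kernel
# whose major version is >= 4. Arguments default to the running host's values
# so the function can also be tried with sample inputs.
check_host() {
    local release="${1:-$(uname -r)}"
    local cpus="${2:-$(nproc)}"
    local major="${release%%.*}"   # "3.10.0-1062.el7.x86_64" -> "3"

    if [ "$cpus" -lt 2 ]; then
        echo "need at least 2 CPUs, found $cpus" >&2
        return 1
    fi
    if [ "$major" -lt 4 ]; then
        echo "kernel $release is older than 4.0" >&2
        return 1
    fi
    return 0
}
```

On a host, `check_host && echo "host OK"` checks the live values.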

1.1 Initialize the yum repositories on all hosts

cd /etc/yum.repos.d/

yum install -y wget

wget http://mirrors.aliyun.com/repo/Centos-7.repo

wget http://mirrors.aliyun.com/repo/epel-7.repo

mv CentOS-Base.repo CentOS-Base.repo.bak

yum clean all

yum makecache

systemctl disable firewalld

systemctl stop firewalld

sed -i 's/SELINUX=enforcing$/SELINUX=disabled/g' /etc/selinux/config

setenforce 0

Set up /etc/hosts (on both hosts):

vi /etc/hosts

192.168.116.130 k8s-master

192.168.116.132 k8s-node1

Load the br_netfilter module and adjust the kernel parameters

Load the module at boot:

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

br_netfilter

EOF

modprobe br_netfilter  # load the module now (not persistent across reboots)

lsmod |grep br_netfilter  # check that the module is loaded

vi /etc/sysctl.d/k8s.conf  # kernel parameters for K8S

Add:

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

sysctl -p /etc/sysctl.d/k8s.conf  # apply the settings

sysctl -a |grep bridge  # confirm the change took effect
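The module and sysctl files above can also be written non-interactively, which helps when preparing several hosts. A minimal sketch: `write_k8s_kernel_conf` is a hypothetical helper, and its optional root-directory argument exists only so the function can be tried in a scratch directory; on a real host it is called with `/`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the br_netfilter module list and the K8S sysctl
# settings under ROOT (defaults to /). Overwriting the files keeps it idempotent.
write_k8s_kernel_conf() {
    local root="${1:-/}"
    mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"

    # Load br_netfilter at boot so bridged pod traffic passes through iptables
    printf 'br_netfilter\n' > "$root/etc/modules-load.d/k8s.conf"

    # The same three parameters that are added to /etc/sysctl.d/k8s.conf above
    cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}
```

On the host, run `write_k8s_kernel_conf /`, then `modprobe br_netfilter` and `sysctl --system` to apply everything without a reboot.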

1.2 Install docker on all hosts

wget -O /etc/yum.repos.d/docker-ce.repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

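yum list docker-ce --showduplicates | sort -r  # list every docker version available

yum install -y docker-ce-19.03.5 docker-ce-cli-19.03.5 containerd.io  # install a specific version

vi /usr/lib/systemd/system/docker.service  # switch docker's cgroup driver to systemd (kubeadm warns when docker uses cgroupfs)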

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd

systemctl daemon-reload

systemctl start docker

systemctl enable docker
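Editing `/usr/lib/systemd/system/docker.service` works, but that file belongs to the docker-ce package and may be replaced on upgrade. An alternative is docker's `daemon.json` with the `exec-opts` setting. Use one approach or the other, because dockerd refuses to start when the same option is set both as a command-line flag and in `daemon.json`. A minimal sketch, with `write_docker_daemon_json` and its directory argument as hypothetical names:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a daemon.json that sets the systemd cgroup driver.
# The directory defaults to /etc/docker; pass another directory to try it out.
write_docker_daemon_json() {
    local docker_etc="${1:-/etc/docker}"
    mkdir -p "$docker_etc"
    # Note: this overwrites any existing daemon.json
    cat > "$docker_etc/daemon.json" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

After `write_docker_daemon_json && systemctl restart docker`, `docker info | grep -i "cgroup driver"` should report `systemd`.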

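1.3 Add the K8S repository and install K8S on all hosts

K8S requires swap to be disabled (by default the kubelet refuses to start while swap is on), so turn it off:

swapoff -a  # turn swap off now

vi /etc/fstab  # comment out the swap entry with # so it is not mounted at boot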

#/dev/mapper/centos-swap swap swap defaults 0 0

mount -a
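Commenting out the swap entry can also be scripted. A minimal sketch: `comment_out_swap` is a hypothetical helper that keeps a backup and prefixes with `#` every uncommented line whose third field (the filesystem type) is `swap`, so running it twice changes nothing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out swap entries in an fstab-format file.
comment_out_swap() {
    local fstab="${1:-/etc/fstab}"
    cp "$fstab" "$fstab.bak" || return 1
    # Field 3 of an fstab line is the filesystem type; comments stay untouched
    awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' \
        "$fstab.bak" > "$fstab"
}
```

Typical use: `swapoff -a && comment_out_swap /etc/fstab`, after which `free -m` should show 0 swap.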

Add the K8S yum repository:

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

EOF

yum list kubeadm --showduplicates | sort -r  # list the available K8S versions

yum install kubeadm-1.17.2-0 kubectl-1.17.2-0 kubelet-1.17.2-0 -y  # install a specific version

systemctl enable kubelet  # required on both master and node, otherwise K8S does not start at boot

1.4 Initialize the master

hostnamectl set-hostname k8s-master

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.17.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.17.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.17.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.17.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.6.5
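The seven pulls above (repeated on the node in 1.5) can be written as a loop. A minimal sketch: `pull_k8s_images` is a hypothetical helper and `DRY_RUN` a hypothetical switch that only prints the commands. kubeadm can also pull the same set itself with `kubeadm config images pull --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers --kubernetes-version v1.17.2`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pull the v1.17.2 control-plane images from the Aliyun
# mirror used in this article. Set DRY_RUN=1 to print instead of pulling.
pull_k8s_images() {
    local repo="registry.cn-hangzhou.aliyuncs.com/google_containers"
    local img
    for img in kube-apiserver:v1.17.2 kube-controller-manager:v1.17.2 \
               kube-scheduler:v1.17.2 kube-proxy:v1.17.2 \
               pause:3.1 etcd:3.4.3-0 coredns:1.6.5; do
        if [ -n "${DRY_RUN:-}" ]; then
            echo "docker pull $repo/$img"
        else
            docker pull "$repo/$img" || return 1
        fi
    done
}
```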

Run the initialization:

kubeadm init --apiserver-advertise-address=192.168.116.130 --kubernetes-version=v1.17.2 --pod-network-cidr=10.100.0.0/16 --service-cidr=10.200.0.0/16 --service-dns-domain=k8s-master --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --ignore-preflight-errors=swap

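--apiserver-advertise-address=192.168.116.130: the IP of this host that the API server advertises

--kubernetes-version=v1.17.2: the K8S version to install

--pod-network-cidr=10.100.0.0/16: the pod IP range; the flannel configuration must use the same range

--service-cidr=10.200.0.0/16: the range from which Services get their virtual (ClusterIP) addresses; it is not the network between master and node, and it must not overlap the pod range or the host network

--service-dns-domain=k8s-master: the cluster's DNS domain (default cluster.local), so Service names resolve as <service>.<namespace>.svc.k8s-master; it does not set the master's host name

--image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers: the registry the control-plane images are pulled from

--ignore-preflight-errors=swap: ignore the swap preflight error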
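The pod range, the Service range, and the host network must not overlap. Two IPv4 CIDR blocks overlap exactly when their addresses agree on the shorter of the two prefix lengths, which can be checked in pure bash. A minimal sketch with hypothetical helper names `ip_to_int` and `cidrs_overlap`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
    local IFS=.
    set -- $1                      # split "10.100.0.0" into 4 octets
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Hypothetical helper: succeed if two CIDR blocks share any address.
cidrs_overlap() {
    local a_ip="${1%/*}" a_len="${1#*/}"
    local b_ip="${2%/*}" b_len="${2#*/}"
    # Compare both networks on the shorter (wider) prefix
    local len=$(( a_len < b_len ? a_len : b_len ))
    local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$a_ip") & mask )) -eq $(( $(ip_to_int "$b_ip") & mask )) ]
}
```

For the values in this article, `cidrs_overlap 10.100.0.0/16 10.200.0.0/16` and `cidrs_overlap 10.100.0.0/16 192.168.116.0/24` both fail, meaning no overlap.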

When kubeadm init reports that the control plane has initialized successfully, configure kubectl as the output instructs:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

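Note: save the kubeadm join command printed at the end of the init output; it is needed to add nodes. The token in it expires after 24 hours by default.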

kubeadm join 192.168.116.130:6443 --token ml18rj.3cvr880320x2m9g3 \
    --discovery-token-ca-cert-hash sha256:811d86ea167e730b16ea43a26ad065f37def1d325c352da7ff73b8b131115c01

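1.5 Join the node to the cluster

First install the repositories, docker, and K8S on the node as described in 1.1 to 1.3.

hostnamectl set-hostname k8s-node1

Pull the images on the node as well: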

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.17.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.17.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.17.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.17.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.6.5

Run the join command printed by kubeadm init on the master to join the cluster:

kubeadm join 192.168.116.130:6443 --token ml18rj.3cvr880320x2m9g3 \
    --discovery-token-ca-cert-hash sha256:811d86ea167e730b16ea43a26ad065f37def1d325c352da7ff73b8b131115c01

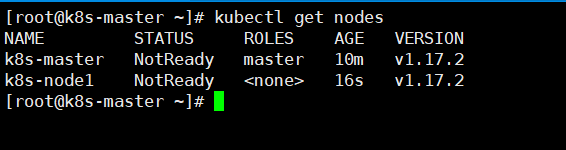

On the master, check whether the node has joined:

kubectl get nodes

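1.6 Install the kube-flannel network on the master

A new K8S cluster has no pod network: the nodes stay NotReady until a network (CNI) plugin is deployed, so run the network pods yourself.

Download the manifest:

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

vi kube-flannel.yml  # change the pod network: replace the default 10.244.0.0/16 with the --pod-network-cidr set above

"Network": "10.100.0.0/16",

kubectl apply -f kube-flannel.yml  # deploy the flannel pods

If the file cannot be downloaded, download the repository archive from the flannel website (flannel) instead: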

yum install -y unzip

Copy the downloaded flannel-master.zip to a directory on k8s-master, then:

unzip flannel-master.zip

cd flannel-master/Documentation/

vi kube-flannel.yml  # change the same line: replace 10.244.0.0/16 with the --pod-network-cidr set above

"Network": "10.100.0.0/16",

kubectl apply -f kube-flannel.yml
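The Network edit, needed with either download method above, can be done with sed instead of vi. A minimal sketch: `set_flannel_network` is a hypothetical helper, it assumes GNU sed (as on CentOS 7), and it rewrites the value of every `"Network"` key in the file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set the "Network" value in kube-flannel.yml
# (the manifest ships with 10.244.0.0/16) to the kubeadm --pod-network-cidr.
set_flannel_network() {
    local file="$1" cidr="$2"
    # '#' is the sed delimiter because the CIDR contains '/'
    sed -i -E "s#(\"Network\": *\")[^\"]*\"#\\1${cidr}\"#" "$file"
}
```

Usage: `set_flannel_network kube-flannel.yml 10.100.0.0/16 && grep '"Network"' kube-flannel.yml` before running `kubectl apply`.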

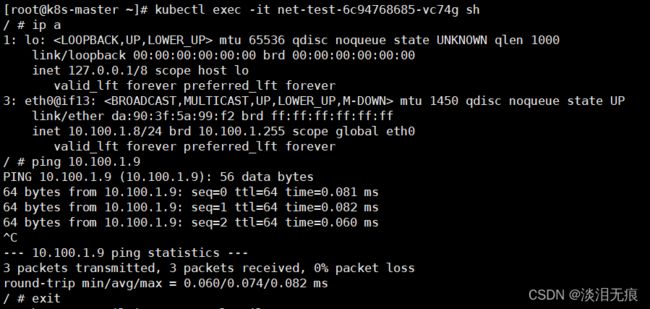

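On k8s-master, create test pods and check network connectivity:

kubectl run net-test --image=alpine --replicas=2 sleep 360000  # create 2 pods (kubectl 1.17 creates a Deployment here; from 1.18 on, kubectl run only creates a single pod)

kubectl get pod -A  # look up the pod names

kubectl exec -it net-test-6c94768685-hqx2k -- sh  # open a shell in a pod by name

ip a  # the pod's IP is in the 10.100.0.0/16 range

ping 114.114.114.114  # test external connectivity

kubectl exec -it net-test-6c94768685-vc74g -- sh  # connect to the other pod

ping 10.100.1.9  # ping the other pod's IP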

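2. Deploy nginx + tomcat + httpd on K8S from Dockerfiles for static/dynamic separation, with the port mapping kept after containers are rebuilt

2.1 Build the nginx image from a Dockerfile on the master

mkdir /dockerfile/nginx -p

cd /dockerfile/nginx/

Download the nginx source tarball:

wget http://nginx.org/download/nginx-1.18.0.tar.gz

Configure the Alpine package mirrors: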

vi repositories

https://mirrors.aliyun.com/alpine/v3.14/main

https://mirrors.aliyun.com/alpine/v3.14/community

vi nginx.conf  # nginx configuration file

user nginx;

daemon off;

worker_processes auto;

error_log /app/nginx/logs/nginx-error.log;

pid /app/nginx/run/nginx.pid;

events {

worker_connections 10240;

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /app/nginx/logs/nginx-access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 4096;

server {

listen 80;

server_name k8s-nginx;

location / {

proxy_pass http://k8s-httpd-1-service:80;

index index.html index.htm;

}

location ~* \.jsp$ {

proxy_pass http://k8s-tomcat-1-service:8080;

}

}

}
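In this config, `location ~* \.jsp$` is a case-insensitive regex location, and nginx uses a matching regex location in preference to the plain `/` prefix match, so requests ending in `.jsp` go to tomcat and everything else goes to httpd. The function below only mimics that decision for illustration; `upstream_for` is a hypothetical name, and it expects the URI path without a query string, which is what nginx matches locations against.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which upstream the nginx.conf above would use
# for a given URI path (query string already stripped).
upstream_for() {
    # grep -i mirrors the case-insensitive "~*" modifier
    if printf '%s\n' "$1" | grep -qiE '\.jsp$'; then
        echo "k8s-tomcat-1-service:8080"
    else
        echo "k8s-httpd-1-service:80"
    fi
}
```

For example, `upstream_for /index.jsp` prints `k8s-tomcat-1-service:8080`, while `/index.html` maps to `k8s-httpd-1-service:80`.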

vi nginx-dockerfile

# Pin the release so it matches the v3.14 mirrors in repositories
FROM alpine:3.14

COPY repositories /etc/apk/

RUN addgroup -S nginx && adduser -s /sbin/nologin -S -D -G nginx nginx

#nginx

RUN mkdir /app

# openssl-dev is required by --with-http_ssl_module and --with-stream_ssl_module
RUN apk add --no-cache psmisc net-tools wget gcc g++ make pcre-dev zlib-dev openssl-dev

ADD nginx-1.18.0.tar.gz /app/

RUN cd /app/nginx-1.18.0/ && ./configure --prefix=/app/nginx \
    --user=nginx \
    --group=nginx \
    --with-http_ssl_module \
    --with-http_v2_module \
    --with-http_realip_module \
    --with-http_stub_status_module \
    --with-http_gzip_static_module \
    --with-pcre \
    --with-stream \
    --with-stream_ssl_module \
    --with-stream_realip_module && make && make install

RUN mkdir /app/nginx/run

ENV PATH=$PATH:/app/nginx/sbin

RUN chown -R nginx:nginx /app/

COPY nginx.conf /app/nginx/conf/

EXPOSE 80

CMD ["nginx"]

Build the nginx image:

docker build -t test/nginx:v1.0 -f nginx-dockerfile .

2.2 Build the tomcat image from a Dockerfile on the master

mkdir /dockerfile/tomcat

cd /dockerfile/tomcat/

Download the JDK:

wget https://repo.huaweicloud.com/java/jdk/8u191-b12/jdk-8u191-linux-x64.tar.gz

Download Tomcat:

wget https://mirrors.bfsu.edu.cn/apache/tomcat/tomcat-8/v8.5.77/bin/apache-tomcat-8.5.77.tar.gz --no-check-certificate

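Set the JDK path. Note that catalina.sh does not read conf/tomcat.conf (it sources bin/setenv.sh if that file exists), so the JAVA_HOME that actually takes effect is the ENV JAVA_HOME line in the Dockerfile: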

vi tomcat.conf

JAVA_HOME=/usr/local/jdk

Create the tomcat home page:

vi index.jsp

<%@ page language="java" contentType="text/html; charset=UTF-8"

pageEncoding="UTF-8"%>

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<title>tomcat-test</title>

</head>

<body>

<h1>tomcat-test</h1>

<%

out.println("test");

%>

<br>

<%=request.getRequestURL()%>

</body>

</html>

Write the image's Dockerfile:

vi tomcat-dockerfile

FROM centos:7.7.1908

RUN yum install -y wget

#jdk

# ADD unpacks a local .tar.gz automatically, so a separate COPY of the archive would only bloat the image
ADD jdk-8u191-linux-x64.tar.gz /usr/local/

RUN ln -s /usr/local/jdk1.8.0_191 /usr/local/jdk

ENV JAVA_HOME=/usr/local/jdk

ENV PATH=$PATH:$JAVA_HOME/bin

ENV JRE_HOME=$JAVA_HOME/jre

ENV CLASSPATH=$JAVA_HOME/lib/:$JRE_HOME/lib

#tomcat

ADD apache-tomcat-8.5.77.tar.gz /usr/local/

RUN ln -s /usr/local/apache-tomcat-8.5.77 /usr/local/tomcat

ENV PATH=$PATH:/usr/local/tomcat/bin

COPY tomcat.conf /usr/local/tomcat/conf/

COPY index.jsp /usr/local/tomcat/webapps/ROOT/

EXPOSE 8080

CMD ["/usr/local/tomcat/bin/catalina.sh","run" ]

Build the tomcat image:

docker build -t test/tomcat:v1.0 -f tomcat-dockerfile .

Run the image in the foreground to test it:

docker run --rm -it -p 8080:8080 test/tomcat:v1.0

curl 192.168.116.130:8080

2.3 Build the httpd image from a Dockerfile on the master

mkdir /dockerfile/httpd

cd /dockerfile/httpd/

Configure the Alpine package mirrors:

vi repositories

https://mirrors.aliyun.com/alpine/v3.14/main

https://mirrors.aliyun.com/alpine/v3.14/community

Download the source tarballs:

wget https://dlcdn.apache.org/apr/apr-1.7.0.tar.gz --no-check-certificate

wget https://dlcdn.apache.org/apr/apr-util-1.6.1.tar.gz --no-check-certificate

wget http://archive.apache.org/dist/httpd/httpd-2.4.46.tar.gz --no-check-certificate

vi httpd-dockerfile

# Pin the release so it matches the v3.14 mirrors in repositories
FROM alpine:3.14

COPY repositories /etc/apk/

RUN apk add --no-cache wget make curl net-tools psmisc gcc g++ expat-dev pcre-dev

RUN mkdir /app

ADD apr-1.7.0.tar.gz /app

ADD apr-util-1.6.1.tar.gz /app

ADD httpd-2.4.46.tar.gz /app

RUN cd /app/apr-1.7.0/ && sed -ri 's@\$RM "\$cfgfile"@\# \$RM "\$cfgfile"@g' configure && ./configure --prefix=/app/apr && make && make install

RUN cd /app/apr-util-1.6.1/ && ./configure --prefix=/app/apr-util --with-apr=/app/apr/ && make && make install

RUN cd /app/httpd-2.4.46/ && ./configure --prefix=/app/httpd --with-apr=/app/apr/ --with-apr-util=/app/apr-util/ && make && make install

RUN echo "ServerName k8s-httpd-1-service" >>/app/httpd/conf/httpd.conf

ENV PATH=$PATH:/app/httpd/bin

EXPOSE 80

ENTRYPOINT [ "httpd" ]

CMD ["-D", "FOREGROUND"]

docker build -t test/httpd:v1.0 -f httpd-dockerfile .  # build the image

Run the image in the foreground to test it:

docker run --rm -it -p 80:80 test/httpd:v1.0

curl 192.168.116.130

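2.4 Write the K8S manifests on the master

First export the images built on k8s-master and copy them to the node. The manifests use imagePullPolicy: Never and there is no image registry, so a node without the images cannot start the pods: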

docker save test/nginx:v1.0 -o /root/k8s-nginx.tar

docker save test/tomcat:v1.0 -o /root/k8s-tomcat.tar

docker save test/httpd:v1.0 -o /root/k8s-httpd.tar

scp /root/k8s-nginx.tar 192.168.116.132:/root/

scp /root/k8s-tomcat.tar 192.168.116.132:/root/

scp /root/k8s-httpd.tar 192.168.116.132:/root/

Load the images into docker on the node:

Note: because these are local images, if there are several nodes, every node must load them manually.

docker load -i k8s-nginx.tar

docker load -i k8s-tomcat.tar

docker load -i k8s-httpd.tar
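With more than one node, the save/scp/load steps above repeat for every node and every image. A minimal sketch: `distribute_images` and `run` are hypothetical helpers, the `ssh ... docker load` step assumes passwordless SSH from the master to each node, and `DRY_RUN=1` only prints the commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the command when DRY_RUN is set, else run it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: save each image once, then copy and load it on every
# node given as an argument (e.g. 192.168.116.132).
distribute_images() {
    local img tar node
    for img in test/nginx:v1.0 test/tomcat:v1.0 test/httpd:v1.0; do
        # test/nginx:v1.0 -> /root/k8s-nginx.tar, the file names used above
        tar="/root/k8s-$(basename "${img%%:*}").tar"
        run docker save "$img" -o "$tar" || return 1
        for node in "$@"; do
            run scp "$tar" "$node:/root/" || return 1
            run ssh "$node" docker load -i "$tar" || return 1
        done
    done
}
```

Usage: `distribute_images 192.168.116.132`, adding more node addresses as arguments.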

On k8s-master, create the tomcat.yml manifest:

vi tomcat.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: k8s-tomcat-1
  name: k8s-tomcat-1-deployment
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: k8s-tomcat-1
  template:
    metadata:
      labels:
        app: k8s-tomcat-1
    spec:
      containers:
      - name: k8s-tomcat-1-container
        image: test/tomcat:v1.0
        imagePullPolicy: Never
        ports:
        - containerPort: 8080
          protocol: TCP
          name: k8s-tomcat-1
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: k8s-tomcat-1
  name: k8s-tomcat-1-service
  namespace: default
spec:
  ports:
  - name: k8s-tomcat-1
    port: 8080
  selector:
    app: k8s-tomcat-1

On k8s-master, create the httpd.yml manifest:

vi httpd.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: k8s-httpd-1
  name: k8s-httpd-1-deployment
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: k8s-httpd-1
  template:
    metadata:
      labels:
        app: k8s-httpd-1
    spec:
      containers:
      - name: k8s-httpd-1-container
        image: test/httpd:v1.0
        imagePullPolicy: Never
        ports:
        - containerPort: 80
          protocol: TCP
          name: k8s-httpd-1
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: k8s-httpd-1
  name: k8s-httpd-1-service
  namespace: default
spec:
  ports:
  - name: k8s-httpd-1
    port: 80
  selector:
    app: k8s-httpd-1

On k8s-master, create the nginx.yml manifest:

vi nginx.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx-deployment
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx-container
        image: test/nginx:v1.0
        imagePullPolicy: Never
        ports:
        - containerPort: 80
          protocol: TCP
          name: httpd
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx
  name: k8s-nginx-service
  namespace: default
spec:
  type: NodePort
  ports:
  - name: httpd
    port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 30080
  selector:
    app: nginx
  sessionAffinity: ClientIP
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800
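`nodePort: 30080` is accepted because it lies in the API server's default NodePort range, 30000-32767 (configurable with the kube-apiserver flag `--service-node-port-range`); a value outside the range is rejected when the Service is applied. A minimal check, with `valid_nodeport` as a hypothetical helper that assumes the default range:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if $1 is an integer inside the default
# NodePort range 30000-32767.
valid_nodeport() {
    case "$1" in
        ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
    esac
    [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}
```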

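2.5 Run the workloads on k8s-master and test static/dynamic separation

kubectl run net-test --image=alpine --replicas=1 sleep 360000  # create a test pod (skip this if net-test from 1.6 still exists)

Apply nginx.yml last: nginx resolves the Service names in its proxy_pass lines at startup and fails to start if they do not exist yet.

kubectl apply -f httpd.yml

kubectl apply -f tomcat.yml

kubectl apply -f nginx.yml

Confirm that the pods and Services are running: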

kubectl get pod -n default

kubectl get service

Open a shell in the test pod, then point its package mirrors at Aliyun and install curl:

kubectl exec -it net-test-6c94768685-6cvv8 -- sh

vi /etc/apk/repositories  # replace the contents with these mirrors

https://mirrors.aliyun.com/alpine/v3.14/main

https://mirrors.aliyun.com/alpine/v3.14/community

apk add curl

Still inside the pod, request httpd and tomcat directly through their Service names:

curl http://k8s-httpd-1-service/index.html

curl http://k8s-tomcat-1-service:8080/index.jsp

A request for a static file through the nginx Service is passed on to the httpd container:

curl http://k8s-nginx-service/index.html

A request for a dynamic file (.jsp) through the nginx Service is passed on to the tomcat container:

curl http://k8s-nginx-service/index.jsp

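2.6 Check the external port mapping of the nginx Service

Check which node each pod was scheduled on:

kubectl get pod -n default -o wide

Check the external (NodePort) port mapped for nginx. The NodePort is opened on every node, so the requests below work with any node's IP, not only the node running the nginx pod:

kubectl get service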

curl http://192.168.116.132:30080/index.html

curl http://192.168.116.132:30080/index.jsp

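2.7 Rebuild a container and test the port mapping afterwards

kubectl get pod -n default  # list the existing pods

kubectl delete pod k8s-httpd-1-deployment-5ccc64548b-4twr9 -n default  # delete a pod by name

kubectl get pod -n default  # the Deployment automatically creates a replacement pod

Because nginx proxies to the Service name rather than to a pod IP, the reverse proxy keeps working even though the rebuilt pod gets a new IP: the Service's ClusterIP does not change, and kube-proxy forwards it to the new pod.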

curl http://192.168.116.132:30080/index.html
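The rebuild test can be scripted so that it waits for the replacement pod before calling curl. A minimal sketch: `recreate_and_check` and `run` are hypothetical helpers, the pod is selected by the `app` label from httpd.yml instead of by name, and `DRY_RUN=1` only prints the commands. `kubectl delete` waits for the old pod to be gone by default, and `kubectl wait` then blocks until the new pod is Ready.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the command when DRY_RUN is set, else run it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: delete the pods matching a label, wait until the
# Deployment's replacement is Ready, then request the page through the NodePort.
recreate_and_check() {
    local label="${1:-app=k8s-httpd-1}"
    local url="${2:-http://192.168.116.132:30080/index.html}"
    run kubectl delete pod -l "$label" -n default || return 1
    run kubectl wait --for=condition=Ready pod -l "$label" -n default --timeout=120s || return 1
    run curl -fsS "$url"
}
```

Passing `app=k8s-tomcat-1` and the `/index.jsp` URL runs the same test against tomcat.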