- [Conquering Spark 3.7: Spark SQL Core Programming (Part 6)]

qq_46394486

spark, sql, ajax

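Data loading and saving, the generic approach: Spark SQL provides a generic way to save and load data. "Generic" here means that the same API reads and saves data in different formats depending on the parameters you pass. By default, Spark SQL reads and saves files in the Parquet format. Loading data: spark.read.load is the generic method for loading data. To read data in a different format, specify the format explicitly: spark.read.format("…")[.option("…")].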

- An In-Depth Look at Spark: Key Questions and Answers

※尘

sql, hive, spark

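In big data processing, Spark's efficient computation and rich feature set have made it the framework of choice for many developers and companies. Using Spark still raises all kinds of problems, from performance tuning to operator usage. This article answers some of Spark's core questions in detail to help you understand and use Spark better. Spark performance optimization strategies: tuning is the key to making jobs run efficiently, and it can be approached from several angles. First, resource configuration is crucial: sensibly setting Exec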

- spark on yarn

不辉放弃

pyspark, big data development

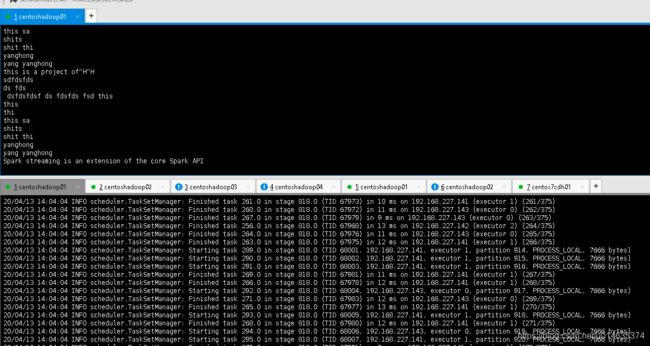

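Spark on YARN means running Spark applications on a Hadoop YARN cluster, so that YARN's resource management and scheduling control Spark's compute resources. This mode makes full use of existing Hadoop cluster resources and simplifies cluster management, which is why enterprises commonly deploy Spark this way. Core roles: • Spark application: consists of the Driver process and the Executor processes. The Driver handles task scheduling and application logic; the Executors run the actual tasks and store data. • YARN components: ◦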

- Spark RDD: Partitions

博弈史密斯

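Spark RDD: How should the coarse-grained mode of RDDs be understood, compared with the fine-grained mode? What determines the number of tasks for a Spark RDD? The raw input data is split into n pieces according to the relevant splitting logic (for example the JDBC or HDFS split logic), and each piece becomes one Partition of the RDD. The number of Partitions determines the number of tasks and therefore the program's parallelism. Checkpoint support: although an RDD can achieve fault recove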
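The rule that the number of partitions determines the number of tasks can be shown with a plain-Java simulation. This is only a sketch under simplifying assumptions. `PartitionDemo`, `split` and `sumWithOneTaskPerPartition` are hypothetical names, not Spark APIs. The code cuts the input into n contiguous slices, submits exactly one task per slice to a thread pool, and then combines the partial results. So n partitions yield n tasks.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical simulation in plain Java; none of these names are Spark APIs.
class PartitionDemo {
    // Cut the input into numPartitions contiguous slices, the way a split step
    // turns one dataset into n partitions (assumes numPartitions >= 1).
    static <T> List<List<T>> split(List<T> data, int numPartitions) {
        List<List<T>> partitions = new ArrayList<>();
        int size = data.size();
        for (int i = 0; i < numPartitions; i++) {
            int from = (int) ((long) i * size / numPartitions);
            int to = (int) ((long) (i + 1) * size / numPartitions);
            partitions.add(new ArrayList<>(data.subList(from, to)));
        }
        return partitions;
    }

    // Launch exactly one task per partition; each task sums only its own slice,
    // and the calling thread combines the partial sums.
    static long sumWithOneTaskPerPartition(List<Integer> data, int numPartitions) {
        List<List<Integer>> partitions = split(data, numPartitions);
        ExecutorService pool = Executors.newFixedThreadPool(numPartitions);
        try {
            List<CompletableFuture<Long>> tasks = new ArrayList<>();
            for (List<Integer> p : partitions) {
                tasks.add(CompletableFuture.supplyAsync(
                        () -> p.stream().mapToLong(Integer::longValue).sum(), pool));
            }
            long total = 0;
            for (CompletableFuture<Long> t : tasks) {
                total += t.join();
            }
            return total;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        List<Integer> data = List.of(1, 2, 3, 4, 5, 6, 7, 8, 9, 10);
        System.out.println(split(data, 3));                      // [[1, 2, 3], [4, 5, 6], [7, 8, 9, 10]]
        System.out.println(sumWithOneTaskPerPartition(data, 3)); // 55
    }
}
```

With 3 partitions the pool runs 3 tasks. Raising the partition count raises the available parallelism, which mirrors why Spark's task count follows its partition count.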

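- Computer Science Big Data Graduation Project: A Spark-Based Music Data Analysis Project (source code + thesis + deployment docs + complete package + remote debugging + code walkthrough, etc.)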

程序猿八哥

data visualization, computer science graduation project, spark, big data, course project, spark

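About the blogger: ✌️ A coder focused on hands-on project development and walkthroughs for university students, and on writing and revising graduation theses. Quality full-stack creator, Blog Star, and quality author on Juejin, Huawei Cloud, Alibaba Cloud, InfoQ and other platforms, specializing in Java, mini-programs and hands-on graduation projects ✌️ Technical scope: design and development with mini-programs, SpringBoot, SSM, JSP, Vue, PHP, Java, Python, web crawlers, data visualization, big data, IoT, machine learning and more. Main content: free feature design, proposal reports, task statements, full b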

- A Perfect Combo: SpringBoot + Lua + Redis = Unbeatable!

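Java Selected Interview Questions (WeChat mini-program): 5000+ interview and multiple-choice questions, real interview experiences and résumé templates. Topics include Java fundamentals, concurrency, the JVM, threads, the MQ series, Redis, the Spring series, Elasticsearch, Docker, K8s, Flink, Spark, architecture design and real questions from big tech companies. Practice online anytime! Preface: There was once a magician who excelled at combining two powerful tools, SpringBoot and Redis, into an amazing combination. His magic weapon was Redis's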

- AI Daily-20250620: Huawei Cloud Launches Pangu Model 5.5! Unitree Robotics' Series C Round Sets the Capital Markets Abuzz! Genspark AI Pod Unveiled!

未来世界2099

AI daily, artificial intelligence, Huawei Cloud, tech industry news

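1. Kunlun Tech open-sources Skywork-SWE-32B: the 32B model sets a new SOTA for code repair, with performance approaching the closed-source giants 2. Tencent AI Lab open-sources SongGeneration, a large music generation model, so anyone can create music! 3. Big news! Manus AI's Windows version opens up with no invite code required, launching a workplace productivity revolution! 4. Bilibili's 618 sponsored-deal efficiency soars 5x! Qwen3 (Tongyi Qianwen 3) powers a boom in AI creator selection 5. Hailuo Video Agent launched: zero-barrier professional-grade video generation turns creative ideas into reality in seconds! 6. 中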

- SPARKLE: An In-Depth Analysis of How Reinforcement Learning Improves Language Model Reasoning

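Abstract: Reinforcement learning (RL) has become the dominant paradigm for giving language models advanced reasoning capabilities. RL-based training methods such as GRPO have shown significant empirical gains, but a fine-grained understanding of where those benefits come from is still lacking. To fill this gap, we introduce a fine-grained analysis framework that dissects the impact of RL on reasoning. Our framework specifically examines the key elements believed to benefit from RL training: (1) plan following and execution, (2) problem decomposition, and (3) improved reasoning and kn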

- 24. The park and unpark Methods

卷土重来…

java, concurrent programming, java

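1. The park method suspends the current thread; the thread's state becomes WAITING. 2. The unpark method resumes a thread; its state becomes RUNNABLE. 3. Both are static methods of LockSupport. 4. park and unpark can be called in either order. If unpark is called first, a later park call returns immediately instead of blocking, so the thread still resumes.

public class ParkDemo { public static void main(String[] args) { Thread t1 = new Thread(() ->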
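The original `ParkDemo` is truncated, so below is a reconstruction of the unpark-before-park case. It is a sketch, not the author's code. `LockSupport` keeps at most one permit per thread. `unpark(t)` makes that permit available. `park()` either consumes an available permit and returns immediately, or blocks until a permit is granted. Permits do not accumulate, so two `unpark` calls before a `park` still let only one `park` through.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.LockSupport;

class ParkDemo {
    // Starts a worker that sleeps before calling park(), so that in practice the
    // main thread's unpark() runs first. Returns true if the worker finished,
    // i.e. the permit granted by the earlier unpark() let park() return at once.
    static boolean unparkBeforePark() {
        Thread t1 = new Thread(() -> {
            try {
                TimeUnit.MILLISECONDS.sleep(200); // give main time to call unpark first
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            System.out.println("t1: calling park()");
            LockSupport.park();                   // consumes the permit, does not block
            System.out.println("t1: resumed");
        }, "t1");
        t1.setDaemon(true);                       // never keep the JVM alive if something goes wrong
        t1.start();                               // unpark is only guaranteed to work after start()
        LockSupport.unpark(t1);                   // grant the permit before t1 parks
        try {
            t1.join(2000);                        // wait at most 2 s for t1 to finish
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !t1.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("t1 finished: " + unparkBeforePark());
    }
}
```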

- "Five Layers of Protection" in Security Operations: Building an All-Round Security System

KKKlucifer

security operations

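In digital operations, challenges such as complex heterogeneous systems and stealthy attack techniques are increasingly prominent. Baowangda (保旺达) builds an end-to-end security operations system on a five-layer protection architecture: full-domain management, identity authentication, behavior monitoring, automated response, and audit and traceability. The system combines AI, zero trust and other technologies. The following analysis covers both the technical logic and its practical implementation. Layer 1: Full-domain asset management, laying the security foundation. Challenge: cloud and network infrastructure includes heterogeneous components such as distributed computing (Hadoop/Spark) and stream processing (Storm/Flink). These components use many different communication protocols, which traditional solutions struggle to bring fully under management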

- Sorting Out Problems with Hive Transactional (ACID) Tables

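Contents: Problem description; Root cause analysis; What is a transactional table; Concept; Differences between transactional tables and ordinary managed tables; Related configuration; Use cases for transactional tables; Caveats; Design principles and implementation; File management format; Reference blogs. Problem description: At work I needed pyspark to read data from Hive. I could reach the metastore and read data from external tables, but some managed (internal) tables failed with the error: AnalysisException: org.apache.hadoop.hive.ql.metadata.HiveExcept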

- Cloud Native: Microservices, CI/CD, SaaS, PaaS, IaaS

青秋.

cloud native, docker, cloud native, microservices, kubernetes, serverless, service_mesh, ci/cd

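Previous posts: A Light Introduction to React and JSX - CSDN Blog; Understanding the Big Data Stream Processing Engine Flink in One Article [10,000-word deep dive, the most complete ever] - CSDN Blog; Getting Started with the Big Data Quasi-Streaming Engine Spark in One Article [10,000-word deep dive, the latest on the web] _big data spark - CSDN Blog. Contents: 1. Cloud native concepts and characteristics 2. Common cloud models 3. Architectural models for cloud services 3.1 IaaS (Infrastructure-as-a-Service) 3.2 PaaS (Platform-as-a-Servi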

- Spark Runtime Architecture

EmoGP

Spark, spark, architecture, big data

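At its core, the Spark framework is a compute engine, and overall it uses a standard master-slave architecture. The figure below shows the basic structure of Spark at execution time. The Driver in the figure is the master and schedules the jobs and tasks of the whole cluster. The Executors are the slaves and actually execute the tasks. As the figure shows, the Spark framework has two core components. Driver: the Spark driver node, which executes the main method of a Spark job and is respons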

- Spark Configuration Options

zhixingheyi_tian

big data, spark, SparkConf, spark, jvm, java

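/bin/spark-shell --master yarn --deploy-mode client

/bin/spark-shell --master yarn --deploy-mode cluster (note: cluster deploy mode is not applicable to Spark shells, so spark-shell only runs in client mode)

There are two deploy modes that can be used to launch Spark applications on YARN. In cluster mode, the Spark driver runs inside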

- Spark RDDs and Performance Tuning

Aurora_NeAr

spark, wpf, c#

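RDD Programming. RDD core architecture and characteristics. Partitions: the data is split into multiple partitions; each partition is processed independently on a cluster node; the partition is the basic unit of parallel computation. Compute function: the same transformation function is applied to every partition; execution is lazy. Dependencies. Narrow dependency: each parent partition is used by at most one child partition (map, filter). Wide dependency: one parent partition is used by multiple child partitions (groupByKey, and join when the inputs are not co-partitioned).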
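The difference between the two dependency types can be modelled with ordinary Java collections. This is a hypothetical sketch, not Spark code. `DependencyDemo.map` shows a narrow dependency: output partition i reads only input partition i. `groupByKey` routes each record to an output partition by `hash(key) mod numOut`. As a result, one input partition can feed several output partitions, which is what forces a shuffle.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;

// Hypothetical plain-Java model of the two dependency types; not Spark code.
class DependencyDemo {
    // Narrow dependency (map): output partition i is computed only from input partition i.
    static <T, R> List<List<R>> map(List<List<T>> parts, Function<T, R> f) {
        List<List<R>> out = new ArrayList<>();
        for (List<T> p : parts) {
            List<R> q = new ArrayList<>();
            for (T x : p) q.add(f.apply(x));
            out.add(q);
        }
        return out;
    }

    // Wide dependency (groupByKey): any input partition may send records to any
    // output partition, chosen by hash(key) mod numOut, i.e. a shuffle.
    static List<Map<String, List<Integer>>> groupByKey(
            List<List<Map.Entry<String, Integer>>> parts, int numOut) {
        List<Map<String, List<Integer>>> out = new ArrayList<>();
        for (int i = 0; i < numOut; i++) out.add(new TreeMap<>());
        for (List<Map.Entry<String, Integer>> p : parts) {
            for (Map.Entry<String, Integer> e : p) {
                int target = Math.floorMod(e.getKey().hashCode(), numOut);
                out.get(target).computeIfAbsent(e.getKey(), k -> new ArrayList<>()).add(e.getValue());
            }
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(map(List.of(List.of(1, 2), List.of(3)), x -> x * 10)); // [[10, 20], [30]]
        List<List<Map.Entry<String, Integer>>> pairs = List.of(
                List.of(Map.entry("a", 1), Map.entry("b", 2)),
                List.of(Map.entry("a", 3)));
        System.out.println(groupByKey(pairs, 2)); // [{b=[2]}, {a=[1, 3]}]
    }
}
```

Both values of key "a" end up in the same output partition even though they started in different input partitions. That cross-partition movement is exactly what a narrow dependency avoids.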

- Apache Iceberg Data Lake Fundamentals

Aurora_NeAr

apache

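Introducing Apache Iceberg. The evolution and challenges of data lakes. Shortcomings of the traditional data lake (Hive table format): Partition lock-in: queries must explicitly specify the partition column (e.g. WHERE dt='2025-07-01'). No atomicity: concurrent writes lead to overwritten or partially visible data. Inefficient metadata: LIST operations scan every partition directory (costly on cloud storage). Iceberg's goals: decouple compute engines from the storage format (supporting Spark/Flink/Trino, etc.); provide ACID transactions, schema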

- Big Data Technologies: Flink

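Chapter 1 Flink Overview: 1.1 What is Flink; 1.2 Flink features; 1.3 Flink vs. Spark Streaming

Table: Comparison of Flink and Spark Streaming

| | Flink | Spark Streaming |
|---|---|---|
| Computation model | Stream processing | Micro-batch processing |
| Time semantics | Event time, processing time | Processing time |
| Windows | Many, flexible | Few, inflexible (window length must be an integer multiple of the batch interval) |
| State | Yes | No |
| Streaming SQL | Yes | No |

1.4 Flink use cases; 1.5 Flink's layered APIs. Chapter 2 Getting Started with Flink: 2.1 Creating a project. When prepar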

- The Most Complete Introduction to Hadoop's Core Components

Cachel wood

big data development, hadoop, big data, distributed, spark, databases, computer networks

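Contents: I. Hadoop core components: 1. HDFS (Hadoop Distributed File System) 2. YARN (Yet Another Resource Negotiator) 3. MapReduce. II. Data storage and management: 1. HBase 2. Hive 3. HCatalog 4. Phoenix. III. Data processing and computation: 1. Spark 2. Flink 3. Tez 4. Storm 5. Presto 6. Impala. IV. Resource scheduling and cluster manage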

- A Learning Path for Big Data Analytics (Not Definitive, for Reference Only)

水云桐程序员

learning, big data, data analysis, study methods

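Stage 1: Laying the foundation (1-3 months). 1. Programming languages. Python: master the basic syntax, data structures, control flow, functions, object-oriented programming and common libraries (NumPy, Pandas). SQL: master SELECT statements (filtering, sorting, grouping, aggregation, joins) and the basics of DDL/DML. Understand relational database concepts (tables, primary keys, foreign keys, indexes); MySQL or PostgreSQL is a good starting point. Java/Scala: knowing these gives you an advantage when you study frameworks such as Hadoop/Spark in depth. Beginners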

- Frequently Asked Big Data Development Interview Questions: Spark vs. MapReduce


- Processing User Behavior Data from Kafka with Spark and Writing It to Hive

月光一族吖

spark, kafka, hive

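Detailed steps for deploying Hadoop (Hadoop 3.4.1) and Hive (Hive 3.1.2) on CentOS. This guide covers a single-machine installation (pseudo-distributed mode). To build a real multi-node cluster, you will also need to adjust network access between nodes, passwordless SSH login and configuration synchronization. Note: this guide assumes you have root or sudo privileges and that the system is connected to the Internet (for downloading installation packages). You can change the version numbers in the steps as needed. I. Environment preparation: update the system softw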

- Spark 4.0's VariantType and Its Internal Storage

鸿乃江边鸟

big data, SQL, spark, sparksql, big data

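Background: Based on Spark 4.0, this article summarizes Spark's VariantType, which stores formatted JSON data in as few bytes as possible. Analysis: this mainly covers how a Variant is stored, starting from the VariantBuilder.buildJson method (which stores the corresponding JSON data as a VariantType): public static Variant parseJson(JsonParser parser, boolean allowDuplic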

- How Should You Study to Better Understand the Connections Between the AI Engineering Major and Other IT Majors?

人工智能教学实践

python, programming practice, artificial intelligence, learning, artificial intelligence

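To understand in depth how the AI engineering major relates to other IT majors, you need to step outside the learning framework of a single major. Build a systematic understanding along the path "theoretical foundation, practical linkage, interdisciplinary integration". Below is a staged, actionable learning method. I. Build a theoretical framework for the connections between majors. Draw a knowledge-connection map. How: use XMind or Notion to draw a mind map with AI at the center, branching out to the core technical nodes of related majors. For example: AI (machine learning) ├─ Data support: big data technologies (Hadoop/Spark) + data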

- Spark from Beginner to Proficient (Part 2)

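This article introduces RDD programming in Spark, with hands-on exercises to deepen understanding and get you up to speed quickly. It covers 8 parts: creating RDDs, common Action operations, common Transformation operations, common operations on Pair RDDs, caching, shared variables, partitioning, and a programming exercise. Creating RDDs: there are two ways. textFile loads data from the local or cluster file system; the parallelize method turns a data structure in the Driver into an RDD. Example """te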

- Kafka Ecosystem Integration Deep Dive: The Core Hub of a Modern Data Architecture

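Introduction: In today's data-driven era, Apache Kafka has become a core component of enterprise data architecture. This article takes an in-depth look at how Kafka integrates with mainstream technology stacks, to help architects and developers build efficient, scalable, modern data processing platforms. Contents: Kafka Ecosystem Integration Deep Dive: The Core Hub of a Modern Data Architecture. I. Deep integration of Kafka with stream processing engines. 1.1 Kafka + Apache Spark: unified batch and stream processing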

- Spark on Docker: A Guide to Setting Up a Containerized Big Data Development Environment

AI天才研究院

ChatGPT in practice, ChatGPT, AI large-model applications: from beginner to advanced, big data, spark, docker, ai

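Keywords: Spark, Docker, containerization, big data development, distributed computing, development environment setup, container orchestration. Abstract: This article explains systematically how to deploy a Spark development environment in Docker containers, covering the whole process from basic concepts to hands-on deployment. It first analyzes the core principles of the Spark distributed computing framework and of Docker container technology, and the benefits of combining them. It then demonstrates in detail how to set up a single-node development environment and a multi-node cluster environment, including Docker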

- SeaTunnel Community Monthly Report (May-June): New Features Released, a Big Bug Sweep, and Who Is the Merge Star?

SeaTunnel

bug, SeaTunnel, open source, data integration, big data

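In May and June, the SeaTunnel community saw a wave of intensive updates. Version 2.3.11 was officially released, adding connector capabilities such as Databend, Elasticsearch vectors, HTTP batch writes and ClickHouse multi-table writes, which greatly improve the flexibility of data synchronization. Nearly 100 fix and optimization PRs were also merged. They cover Spark engine parallelism fixes, better Paimon precision compatibility, improved Mongo-CDC Exactly Once defaults, and completed Oracle DDL type support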

- Spark from Beginner to Proficient (Part 3)

小新学习屋

data analysis, spark, big data, distributed

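This article introduces Spark DataFrames and Spark SQL, with hands-on Spark SQL practice to deepen understanding and get you up to speed quickly. It covers 7 parts: comparing RDDs with DataFrames and Spark SQL; creating DataFrames; saving a DataFrame to files; working with DataFrames through the API; working with DataFrames through SQL; Spark SQL in practice; references. Comparing RDDs with DataFrames and Spark SQL: RDD vs. Data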

- Big Data Cluster Architecture: Hadoop Cluster, HBase Cluster, ZooKeeper, Kafka, Spark, Flink, Doris, DataEase (Part 2)

争取不加班!

hadoop, hbase, zookeeper, big data, operations

ZooKeeper single-node deployment:

wget -c https://dlcdn.apache.org/zookeeper/zookeeper-3.8.4/apache-zookeeper-3.8.4-bin.tar.gz (download URL)

tar xf apache-zookeeper-3.8.4-bin.tar.gz -C /data/ && mv /data/apache-zookeeper-3.8.4-bin/ /data/zoo

- Capabilities and Use Cases of the Three Major Big Data Processing Frameworks: Hadoop, Spark, and Flink

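I. Comparison of technical capabilities and use cases

| Product | Capabilities | Use cases |
|---|---|---|
| Hadoop | MapReduce-based batch processing framework; HDFS distributed storage; strong fault tolerance, suited to offline analysis; job scheduling via YARN | Offline log analysis; data warehouse storage; T+1 report analysis; massive-scale data processing |
| Spark | In-memory computing, fast; supports batch and stream processing (Structured Streaming); supports SQL, ML, graph computing, etc.; supports multiple languages (Scala, Java, Python) | Near-real-time proc |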

- MySQL Master-Slave Data Synchronization

林鹤霄

mysql, master-slave, data synchronization

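Configuring MySQL 5.5 master and slave servers (reposted)

Tutorial begins: I. Install MySQL

Note: perform the following steps on each of the two MySQL servers, 192.168.21.169 and 192.168.21.168, to install MySQL 5.5.22

II. Configure the MySQL master server (192.168.21.169)

mysql -uroot -p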

- Oracle Study Notes

caoyong

oracle

1. Installing ORACLE

a) ORACLE versions

8i, 9i: the "i" stands for Internet

10g, 11g: the "g" stands for grid

12c: the "c" stands for cloud (cloud computing)

b) 10g does not support Windows 7


- Databases and SQL: A Beginner's Introduction from Zero

天子之骄

sql, databases, getting started, basic terminology


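You can't build a website without a database. I hadn't really worked with SQL before, so over the past few days I've been studying from dawn to dusk to catch up. Here I share some specific concepts and terms that should help complete beginners stop feeling lost.

Database: one or more large structured collections of persistent data, usually associated with software that updates and queries the data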

- pom.xml

一炮送你回车库

pom.xml

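1. The top-level dependencies element is inherited by child projects

2. The top-level dependencyManagement element defines the jar version numbers for the whole group of projects and is usually used together with the top-level properties element. Since there is inheritance, there is also a top-level modules element that declares the child modules

3. The top-level <modules> element in the parent project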

<module>lcas-admin-war</module>

<

- Querying Provinces, Cities, and Counties with SQL

3213213333332132

sql, mysql

-- db_yhm_city

SELECT * FROM db_yhm_city WHERE class_parent_id = 1 -- Hainan: class_id = 9; Hong Kong, Macau, Taiwan: class_id = 33, 34, 35

SELECT * FROM db_yhm_city WHERE class_parent_id =169

SELECT d1.cla

- The Headaches of Listeners

宝剑锋梅花香

drawing board, listeners, mouse listeners

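I'm new to Java, and all I can say about GUI development is that it's a bit of a pain. Building interfaces in Java really takes patience (without plugins, and even with plugins it isn't much better). Still, Java supports GUI development and the teacher requires it, so I just have to grit my teeth and do it. Listeners are genuinely hard to understand, though; it took me several classes before I understood them even somewhat.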

- Iterating over a Map in Java

darkranger

map

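Choosing how to iterate over a Java Map

1. Overview

Many articles about iterating over a Map in Java recommend entrySet and claim it is much more efficient than keySet. Their reasoning: entrySet gets every key together with its value in one pass. keySet returns only the set of keys, so for each key an extra lookup in the Map is needed to fetch the value, which lowers overall efficiency. So what actually happens?

To understand the real performance gap in iteration, including when iterating over ke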
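Below is a sketch of the two styles the article compares, plus the Java 8 `Map.forEach` alternative. `MapTraversal` and its methods are illustrative names. The sketch only shows where the extra per-key `get` lookup happens in the keySet version. It makes no claim about how large the performance difference is, since measuring that is the original post's goal.

```java
import java.util.HashMap;
import java.util.Map;

class MapTraversal {
    // entrySet(): each Entry already carries both the key and the value.
    static long sumViaEntrySet(Map<String, Integer> map) {
        long sum = 0;
        for (Map.Entry<String, Integer> e : map.entrySet()) {
            sum += e.getKey().length() + e.getValue();
        }
        return sum;
    }

    // keySet(): iterates over the keys, then does one extra map.get(key) lookup per key.
    static long sumViaKeySet(Map<String, Integer> map) {
        long sum = 0;
        for (String k : map.keySet()) {
            sum += k.length() + map.get(k);
        }
        return sum;
    }

    // Java 8+: forEach passes each key and value together to a BiConsumer.
    static long sumViaForEach(Map<String, Integer> map) {
        long[] sum = {0};
        map.forEach((k, v) -> sum[0] += k.length() + v);
        return sum[0];
    }

    public static void main(String[] args) {
        Map<String, Integer> map = new HashMap<>();
        map.put("a", 1);
        map.put("bb", 2);
        System.out.println(sumViaEntrySet(map) + " " + sumViaKeySet(map) + " " + sumViaForEach(map)); // 6 6 6
    }
}
```

All three visit the same entries. For a HashMap the extra `get` is an expected constant-time hash lookup, so any gap is a constant factor per entry rather than a change in complexity.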

- POJ 2312 Battle City: Priority Queue + BFS

aijuans

search

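Source: http://poj.org/problem?id=2312

Problem: The setting is the Battle City tank game many of us played as kids. Find the minimum number of steps needed to get from the start to the goal. S and R cells cannot be entered; E is empty and can be walked on; B is a brick wall, which can only be passed after it has been shot down.

Approach: It's easy to see that this is a BFS problem. However, moving onto E and moving onto B take different amounts of time, so an ordinary queue cannot be used to store the points. To move onto B you must first shoot the brick down and then pass, so we can treat moving onto B as taking two steps, while moving onto E takes only 1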
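The approach above treats entering B as 2 steps and entering E as 1 step, and uses a priority queue instead of a plain FIFO queue. That amounts to Dijkstra's algorithm on the grid. The excerpt does not name the start and target markers, so this minimal sketch assumes they are `Y` and `T`. It also assumes all rows have the same length, and that entering any cell other than S, R or B (including `T`) costs 1. `BattleCity.minSteps` is an illustrative name.

```java
import java.util.Arrays;
import java.util.PriorityQueue;

class BattleCity {
    // Minimum number of steps from 'Y' to 'T', or -1 if unreachable (assumed markers).
    // Entering 'B' costs 2 (shoot, then move), 'S' and 'R' are impassable, anything else costs 1.
    static int minSteps(String[] grid) {
        int rows = grid.length, cols = grid[0].length();
        int[][] dist = new int[rows][cols];
        for (int[] row : dist) Arrays.fill(row, Integer.MAX_VALUE);
        // Queue entries are {cost, row, col}, ordered by cost.
        PriorityQueue<int[]> pq = new PriorityQueue<>((a, b) -> Integer.compare(a[0], b[0]));
        for (int r = 0; r < rows; r++)
            for (int c = 0; c < cols; c++)
                if (grid[r].charAt(c) == 'Y') { dist[r][c] = 0; pq.add(new int[]{0, r, c}); }
        int[][] dirs = {{1, 0}, {-1, 0}, {0, 1}, {0, -1}};
        while (!pq.isEmpty()) {
            int[] cur = pq.poll();
            int d = cur[0], r = cur[1], c = cur[2];
            if (d > dist[r][c]) continue;           // stale entry, a cheaper path was found
            if (grid[r].charAt(c) == 'T') return d; // first time T is polled = shortest
            for (int[] dir : dirs) {
                int nr = r + dir[0], nc = c + dir[1];
                if (nr < 0 || nr >= rows || nc < 0 || nc >= cols) continue;
                char ch = grid[nr].charAt(nc);
                if (ch == 'S' || ch == 'R') continue;
                int nd = d + (ch == 'B' ? 2 : 1);
                if (nd < dist[nr][nc]) { dist[nr][nc] = nd; pq.add(new int[]{nd, nr, nc}); }
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        String[] grid = {"YBEB", "EERE", "SBBT"};
        System.out.println(minSteps(grid)); // 7
    }
}
```

Polling the cheapest cell first is what makes the first arrival at `T` optimal. With a plain queue, a path through a brick could reach `T` before a cheaper detour does.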

- The Relationship Between Hibernate and JPA, Finally Understood

avords

java, Hibernate, databases, jpa

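I know that JPA is a specification and Hibernate is one implementation of it. Besides Hibernate there are other options, such as EclipseLink (formerly TopLink) and OpenJPA. One benefit of using JPA is therefore that you can switch implementations without changing much code.

When defining a Model in Play, you use JPA annotations such as javax.persistence.Entity, Table, Column, OneToMany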

- The Bittersweet console.log

bee1314

console

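In front-end development, console.log is an essential and very intuitive tool. You write small functions, compose them into larger features, and they are easier to test. But when building a release you have to delete the console.log calls, and once the release is out and you are back in development you have to add them again. That is a real nuisance and far too much repetitive work. A simple wrapper solves this for both development and production.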

/**

* log.js hufeng

* The safe wrapper for `console.xxx` functions

*

- Harvard Professor: The Poor and the Overly Busy Share a Common Thinking Trait

bijian1013

time management, inspirational, life, the poor, overly busy

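This year an interdisciplinary team completed a study of how people think under conditions of resource scarcity. Its conclusion: the poor and the overly busy share a common thinking trait. Their attention is excessively consumed by the scarce resource, which causes an across-the-board decline in cognition and judgment. The study is a model of collaboration among scholars of psychology, behavioral economics and policy studies.

The research grew out of Mullainathan's hatred of his own procrastination. He emigrated from India to the United States at age 7, quickly found his footing, graduated from Harvard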

- other operate

征客丶

OS, osx

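I. In Mac Finder, the sort order and the preview pane are set under View -> Show View Options

II. If the preview sometimes freezes, it may be because some temporary folders were deleted, such as /private/tmp [to be verified]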

--------------------------------------------------------------------

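If you have any other questions or find errors in this post, please point them out promptly so that I can correct them, and so that we can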

- [Scala 5] Scala Syntax Learned from Analyzing the Spark Source Code, Part 3

bit1129

scala

1. if statements as expressions

val properties = if (jobIdToActiveJob.contains(jobId)) {

jobIdToActiveJob(stage.jobId).properties

} else {

// this stage will be assigned to "default" po

- Getting Started with ZooKeeper

BlueSkator

middleware, zk

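ZooKeeper is a highly available framework for distributed data management and system coordination. It is built on a Paxos-style consensus protocol (ZAB, ZooKeeper Atomic Broadcast), which guarantees strong data consistency in a distributed environment. That property is what lets ZooKeeper solve many distributed problems. There are quite a few introductions to ZK use cases online. Drawing on projects around the author, this article groups ZK's use cases into categories and introduces each one systematically.

It is worth noting that ZK was not originally designed for these use cases. Many developers devised them later, based on the framework's characteristics, us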

- Which MySQL Function Returns the Current Time, and Which Formats a Date?

BreakingBad

mysql, Date

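To get the current time, just use now().

To format a time in the database, use DATE_FORMAT(date, format).

It formats the date or datetime value date according to the format string format and returns the resulting string.

DATE_FORMAT() can be used to format DATE or DATETIME values into the desired form. The date value is formatted according to the format string:

%S, %s: seconds as two digits (00, 01,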

- Reading 《研磨设计模式》 (Design Patterns Refined): Code Notes on the Composite Pattern

bylijinnan

java, design patterns

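Disclaimer: this post is only for my own reference and understanding. For the detailed analysis and source code, please visit the original author's blog at http://chjavach.iteye.com/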

import java.util.ArrayList;

import java.util.List;

abstract class Component {

public abstract void printStruct(Str
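The excerpt stops at the first method signature, so here is a minimal sketch of a complete composite structure in the same spirit. It is not the book's code. The `printStruct` signature is changed to append to a `StringBuilder` instead of printing, so that the output can be checked. `Leaf`, `Composite`, `addChild` and `CompositeDemo` are illustrative names. Leaves and composites share the `Component` type, so the client prints the whole tree with a single call on the root.

```java
import java.util.ArrayList;
import java.util.List;

// Common type for leaves and composites (signature simplified for this sketch).
abstract class Component {
    // Appends this node, and for a composite all of its descendants, to out.
    public abstract void printStruct(String preStr, StringBuilder out);
}

// A leaf has no children: it only prints itself.
class Leaf extends Component {
    private final String name;

    Leaf(String name) { this.name = name; }

    @Override
    public void printStruct(String preStr, StringBuilder out) {
        out.append(preStr).append("-").append(name).append("\n");
    }
}

// A composite prints itself, then delegates to each child with a deeper indent.
class Composite extends Component {
    private final String name;
    private final List<Component> children = new ArrayList<>();

    Composite(String name) { this.name = name; }

    public void addChild(Component child) { children.add(child); }

    @Override
    public void printStruct(String preStr, StringBuilder out) {
        out.append(preStr).append("+").append(name).append("\n");
        for (Component child : children) {
            child.printStruct(preStr + " ", out);
        }
    }
}

class CompositeDemo {
    public static void main(String[] args) {
        Composite root = new Composite("Clothing");
        Composite menswear = new Composite("Menswear");
        menswear.addChild(new Leaf("Shirt"));
        root.addChild(menswear);
        root.addChild(new Leaf("Dress"));
        StringBuilder sb = new StringBuilder();
        root.printStruct("", sb);
        System.out.print(sb);
    }
}
```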

- 4_JAVA+Oracle Interview Questions (with Answers)

chenke

oracle

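Basic test questions

Do not write anything on the question paper. All answers must be written on the answer sheet, and the question paper may not be taken away.

Multiple-choice questions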

1、 What will happen when you attempt to compile and run the following code? (3)

public class Static {

static {

int x = 5; // in scope only inside the static block

}

st

- Design Goals for a Next-Generation Workflow System

comsci

work, algorithms, scripts

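The user only needs to give the workflow system a set of requirements. From these requirements, together with the organizational and permission structures entered beforehand, the system calls a number of algorithms and shows an automatically generated flowchart on the process display panel. The user then fine-tunes the flowchart to fit the actual situation until satisfied. While the process is running, both the system and the user can adjust it in real time as circumstances require, including its topology, its permissions and its built-in scripts...

The hardest part of this design is deciding what the system should base the generation of the flo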

- Oracle Row Chaining and Row Migration

daizj

oracle, row migration

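A row in a table can be too large for one data block (the row does not fit in a single data block) in two situations:

Case 1:

At INSERT time, the row is already larger than one block. Oracle stores the data for the row in a chain of data blocks. This is called row chaining (Row Chain) and is generally unavoidable (unless you use a larger data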

- [JShop] System Cache Implementation in the Open-Source E-Commerce System jshop

dinguangx

jshop, e-commerce

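Preface

In jeeshop, SystemManager manages a large amount of cached data to improve system performance. All of this cached data lives in memory, however, which cannot handle data updates in certain scenarios (such as a cluster environment). JShop extends jeeshop's caching mechanism with a CacheProvider that helps SystemManager manage this cached data. Through CacheProvider, the cache can be stored in memory, ehcache, redis, memcache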
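The idea of putting a `CacheProvider` between `SystemManager` and the actual storage can be sketched as a small strategy interface. Everything below is hypothetical and not taken from the JShop or jeeshop source: the interface methods, `MemoryCacheProvider` and `SystemManagerSketch` are illustrative only. The point is that the manager depends only on the interface, so an in-memory provider can later be swapped for a shared (e.g. Redis-backed) one without changing the manager.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical pluggable cache interface; names are illustrative, not from the JShop source.
interface CacheProvider {
    void put(String key, Object value);
    Object get(String key);
    void remove(String key);
}

// In-memory provider. A Redis- or memcached-backed class would implement the same
// interface, which would let all nodes of a cluster see the same cached data.
class MemoryCacheProvider implements CacheProvider {
    private final Map<String, Object> store = new ConcurrentHashMap<>();

    // Note: ConcurrentHashMap rejects null keys and values with a NullPointerException.
    @Override public void put(String key, Object value) { store.put(key, value); }
    @Override public Object get(String key) { return store.get(key); }
    @Override public void remove(String key) { store.remove(key); }
}

// Stand-in for SystemManager: it no longer owns the cached data and delegates
// every cache operation to whichever provider it was configured with.
class SystemManagerSketch {
    private final CacheProvider cache;

    SystemManagerSketch(CacheProvider cache) { this.cache = cache; }

    void putCache(String key, Object value) { cache.put(key, value); }
    Object getCache(String key) { return cache.get(key); }
    void removeCache(String key) { cache.remove(key); }
}
```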

- Hard-to-Remember Words from the Full Ninth-Grade Year

dcj3sjt126com

english, word

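several: a few; a number of

shelf: shelf; rack

knowledge: knowledge; learning

librarian: librarian

abroad: to or in a foreign country

surf: to surf

wave: wave; billow

twice: two times; double

describe: to describe; to narrate

especially: especially; in particular

attract: to attract

prize: prize; award

competition: competition; contest

event: major event; event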

O

- Sphinx in Practice

dcj3sjt126com

sphinx

Installation reference: http://briansnelson.com/How_to_install_Sphinx_on_Centos_Server

yum install sphinx

If that fails, install it as follows:

wget http://sphinxsearch.com/files/sphinx-2.2.9-1.rhel6.x86_64.rpm

yum loca

- JPA: JPQL (Part 3)

frank1234

orm, jpa, JPQL

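1 What is JPQL

JPQL is short for Java Persistence Query Language. It can be thought of as JPA's counterpart to HQL, and it supports all kinds of complex queries.

2 Retrieving a single object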

@Test

public void querySingleObject1() {

Query query = em.createQuery("sele

- Remove Duplicates from Sorted Array II

hcx2013

remove

Follow up for "Remove Duplicates": What if duplicates are allowed at most twice?

For example, given sorted array nums = [1,1,1,2,2,3],

Your function should return length
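A common in-place two-pointer solution is sketched below. It is one of several possible approaches, and `RemoveDuplicatesII` is an illustrative class name. Because the array is sorted, a value should be kept only if it differs from the element two positions back in the prefix already written.

```java
import java.util.Arrays;

class RemoveDuplicatesII {
    // Keeps each value at most twice in the sorted array nums, in place, and
    // returns the new length; the first `len` slots hold the result.
    static int removeDuplicates(int[] nums) {
        int len = 0;
        for (int x : nums) {
            // Write x unless it equals the element two slots back in the output,
            // which (since nums is sorted) means x is already there twice.
            if (len < 2 || x != nums[len - 2]) {
                nums[len++] = x;
            }
        }
        return len;
    }

    public static void main(String[] args) {
        int[] nums = {1, 1, 1, 2, 2, 3};
        int len = removeDuplicates(nums);
        System.out.println(len + " " + Arrays.toString(Arrays.copyOf(nums, len))); // 5 [1, 1, 2, 2, 3]
    }
}
```

For nums = [1,1,1,2,2,3] it returns 5, leaving 1, 1, 2, 2, 3 in the first five slots. The write index never overtakes the read position, so overwriting in place is safe.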

- New in Spring 4: Groovy Bean Definition DSL

jinnianshilongnian

spring 4

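New in Spring 4: Generics as qualifiers for dependency injection

New in Spring 4: Other improvements to the core container

New in Spring 4: Web development enhancements

New in Spring 4: Integrating Bean Validation 1.1 (JSR-349) into Spring MVC

New in Spring 4: Groovy Bean Definition DSL

New in Spring 4: A better API for working with Java generics

New in Spring 4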

- Installing MySQL 5.5 on CentOS

liuxingguome

centos

Installing MySQL 5.5 on CentOS via RPM

First, uninstall the MySQL that ships with the system:

yum remove mysql mysql-server mysql-libs compat-mysql51

rm -rf /var/lib/mysql

rm /etc/my.cnf

Check whether any MySQL packages remain:

rpm -qa|grep mysql

Go to http://dev.mysql.c

- Chapter 14: Utility Functions (Part 2)

onestopweb

functions

index.html

<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">

<html xmlns="http://www.w3.org/

- POJ 1050

SaraWon

2D array, maximum submatrix sum

For the full description of POJ ACM problem 1050, see

http://acm.pku.edu.cn/JudgeOnline/problem?id=1050

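Problem:

Given a two-dimensional array of positive and negative integers, find the maximum sum over all of its submatrices.

For example, in the two-dimensional array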

0 -2 -7 0

9 2 -6 2

-4 1 -4 1

-1 8 0 -2

the submatrix with the largest sum is

9 2

-4 1

-1 8

and the maximum sum is 15.
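One standard way to solve this, not necessarily the original author's, works in two steps. First, fix a top row and a bottom row and collapse the rows between them into column sums. Then run Kadane's maximum-subarray scan over those sums. This takes O(N^3) time for an N x N matrix. A sketch, with `MaxSubmatrix` as an illustrative name:

```java
class MaxSubmatrix {
    // Maximum sum over all non-empty sub-rectangles of m.
    // For every pair (top, bottom), colSum[c] holds the sum of column c over rows
    // top..bottom; Kadane's scan then finds the best contiguous run of columns.
    static int maxSum(int[][] m) {
        int rows = m.length, cols = m[0].length;
        int best = Integer.MIN_VALUE;
        for (int top = 0; top < rows; top++) {
            int[] colSum = new int[cols];
            for (int bottom = top; bottom < rows; bottom++) {
                for (int c = 0; c < cols; c++) colSum[c] += m[bottom][c];
                int cur = 0;
                for (int c = 0; c < cols; c++) {
                    cur = Math.max(colSum[c], cur + colSum[c]); // extend the run or restart at c
                    best = Math.max(best, cur);
                }
            }
        }
        return best;
    }

    public static void main(String[] args) {
        int[][] m = {{0, -2, -7, 0}, {9, 2, -6, 2}, {-4, 1, -4, 1}, {-1, 8, 0, -2}};
        System.out.println(maxSum(m)); // 15
    }
}
```

On the 4 x 4 example above, `maxSum` returns 15. That total comes from rows 2 to 4 and columns 1 to 2 (9 + 2 - 4 + 1 - 1 + 8).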

- [5] Design Patterns: The Singleton Pattern

tsface

java, singleton, design patterns, virtual machine

Singleton pattern: ensure that a class has only one instance, and provide a global point of access to it.

A safe singleton implementation:

/*

* @(#)Singleton.java 2014-8-1

*

* Copyright 2014 XXXX, Inc. All rights reserved.

*/

package com.fiberhome.singleton;
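The post's code stops at the package declaration. The sketch below therefore shows one common thread-safe variant, the initialization-on-demand holder idiom, which is not necessarily what the author implemented. The JVM initializes the nested `Holder` class only on the first call to `getInstance()`. Class initialization is guaranteed to be thread-safe, so no explicit locking is needed.

```java
class Singleton {
    private Singleton() { }  // prevents instantiation from outside the class

    // Holder is not initialized until getInstance() first touches it, so the
    // instance is created lazily; the JVM serializes class initialization.
    private static class Holder {
        static final Singleton INSTANCE = new Singleton();
    }

    public static Singleton getInstance() {
        return Holder.INSTANCE;
    }

    public static void main(String[] args) {
        System.out.println(Singleton.getInstance() == Singleton.getInstance()); // true
    }
}
```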

- Brand-New with Java 8: An English-Learning Supertool

yangshangchuan

java, superword, closures, java8, functional programming

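superword is an English-word analysis tool implemented in Java. It mainly studies how English words with similar sounds and spellings transform into one another, patterns in prefixes and suffixes, similarity between words, and so on. Clean code, fluent style, Java 8 features: lambdas, streams and functional-style programming.

Entrance exams, job hunting, self-improvement: English plays a part in all of them, and it is truly important to us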