YOLOv5 Custom Datasets (Part 6): datasets.py

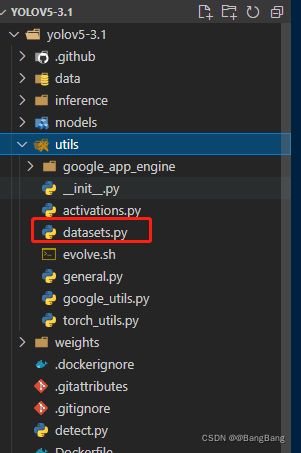

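This part walks through the dataset-related code, located at yolov5-3.1 -> utils -> datasets.py. Helpers that the listings below call but do not define (for example load_image, load_mosaic, letterbox, random_perspective, augment_hsv, get_hash and help_url) are defined elsewhere in the same file.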

The code is taken from the yolov5.3 source download.

Imports

    import glob
    import os
    import random
    import shutil
    import time
    from pathlib import Path
    from threading import Thread

    import cv2
    import math
    import numpy as np
    import torch
    from PIL import Image, ExifTags
    from torch.utils.data import Dataset
    from tqdm import tqdm

    from utils.general import xyxy2xywh, xywh2xyxy, torch_distributed_zero_first

Supported data formats

    # Supported image formats
    img_formats = ['.bmp', '.jpg', '.jpeg', '.png', '.tif', '.tiff', '.dng']
    # Supported video formats
    vid_formats = ['.mov', '.avi', '.mp4', '.mpg', '.mpeg', '.m4v', '.wmv', '.mkv']
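
As a rough illustration of how these two lists are used, the sketch below sorts a few paths into images and videos by lower-cased extension, which is the same test the loaders later in this file apply. The `split_media` helper and the sample file names are made up for the example.

```python
import os

img_formats = ['.bmp', '.jpg', '.jpeg', '.png', '.tif', '.tiff', '.dng']
vid_formats = ['.mov', '.avi', '.mp4', '.mpg', '.mpeg', '.m4v', '.wmv', '.mkv']


def split_media(paths):
    """Split paths into (images, videos) by lower-cased file extension."""
    images = [p for p in paths if os.path.splitext(p)[-1].lower() in img_formats]
    videos = [p for p in paths if os.path.splitext(p)[-1].lower() in vid_formats]
    return images, videos


# Hypothetical file names, only for illustration
images, videos = split_media(['a.JPG', 'clip.mp4', 'notes.txt', 'b.png'])
print(images)  # ['a.JPG', 'b.png']
print(videos)  # ['clip.mp4']
```

Files whose extension is in neither list, such as `notes.txt` here, are silently skipped.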

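Getting the EXIF size

EXIF stands for Exchangeable Image File Format.

It was designed for photos taken with digital cameras and records a photo's attributes and shooting data.

One of its fields is the orientation. A camera can be held in landscape or in portrait, so the code needs to know which way up the photo was taken.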

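    # Look up the numeric EXIF tag for 'Orientation' (exif_size relies on this module-level name)
    for orientation in ExifTags.TAGS.keys():
        if ExifTags.TAGS[orientation] == 'Orientation':
            break


    def exif_size(img):
        # Returns exif-corrected PIL size
        s = img.size  # (width, height)
        try:
            rotation = dict(img._getexif().items())[orientation]
            if rotation == 6:  # rotation 270
                s = (s[1], s[0])
            elif rotation == 8:  # rotation 90
                s = (s[1], s[0])
        except:
            pass

        return s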

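During preprocessing the photo may therefore need to be treated as rotated; if an image is not handled the right way up, the detector has a harder time recognising the objects in it. Note that exif_size only swaps width and height in the reported size for orientation values 6 and 8; it does not rotate the pixels themselves.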
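
The size correction can be tried without an actual photo. The sketch below is a simplified stand-in for exif_size: it takes the orientation value as a plain integer instead of reading it from a file, and the name `corrected_size` is invented for the example.

```python
def corrected_size(size, orientation):
    """Return (width, height) after accounting for an EXIF orientation value.

    Only values 6 and 8 (the two handled by exif_size) swap the sides.
    """
    width, height = size
    if orientation in (6, 8):  # photo stored rotated by a quarter turn
        return (height, width)
    return (width, height)


print(corrected_size((4000, 3000), 1))  # (4000, 3000)
print(corrected_size((4000, 3000), 6))  # (3000, 4000)
```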

The LoadImagesAndLabels class

To build a custom dataset, define the LoadImagesAndLabels class, inherit from Dataset, and override two methods: `__len__()` and `__getitem__()`.
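
Those two methods are all a map-style dataset needs. The following is a minimal sketch, deliberately written without importing torch so that it runs anywhere; in real use the class would subclass `torch.utils.data.Dataset`. `TinyDataset` and its sample data are invented for illustration.

```python
class TinyDataset:
    """Map-style dataset: indexable, with a known length."""

    def __init__(self, samples):
        self.samples = list(samples)

    def __len__(self):
        return len(self.samples)  # number of samples

    def __getitem__(self, index):
        return self.samples[index]  # one sample per index


ds = TinyDataset([('img0.jpg', 0), ('img1.jpg', 1)])
print(len(ds))  # 2
print(ds[1])    # ('img1.jpg', 1)
```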

    class LoadImagesAndLabels(Dataset):  # for training/testing
        def __init__(self, path, img_size=640, batch_size=16, augment=False, hyp=None, rect=False, image_weights=False,
                     cache_images=False, single_cls=False, stride=32, pad=0.0, rank=-1):
            self.img_size = img_size  # input image resolution
            self.augment = augment  # whether to apply data augmentation
            self.hyp = hyp  # hyperparameters
            self.image_weights = image_weights  # whether to sample images by weight
            self.rect = False if image_weights else rect  # rectangular training
            # mosaic augmentation
            self.mosaic = self.augment and not self.rect  # load 4 images at a time into a mosaic (only during training)
            # border offsets used by mosaic augmentation
            self.mosaic_border = [-img_size // 2, -img_size // 2]
            self.stride = stride  # model downsampling factor

            def img2label_paths(img_paths):
                # Define label paths as a function of image paths
                # On Windows: sa = "\\images\\", sb = "\\labels\\"
                sa, sb = os.sep + 'images' + os.sep, os.sep + 'labels' + os.sep  # on Ubuntu: /images/, /labels/ substrings
                # Swap the images directory for the labels directory and the extension for .txt
                return [x.replace(sa, sb, 1).replace(os.path.splitext(x)[-1], '.txt') for x in img_paths]
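
The nested helper img2label_paths can be exercised on its own. The sketch below copies its logic into a standalone function; the dataset layout (`data/images/train/0001.jpg`) is hypothetical and is built with `os.sep` so the example behaves the same on Windows and Linux.

```python
import os


def img2label_paths(img_paths):
    """Map .../images/name.ext to .../labels/name.txt for each path."""
    sa = os.sep + 'images' + os.sep
    sb = os.sep + 'labels' + os.sep
    return [x.replace(sa, sb, 1).replace(os.path.splitext(x)[-1], '.txt') for x in img_paths]


# Hypothetical dataset layout
img = os.sep.join(['data', 'images', 'train', '0001.jpg'])
expected = os.sep.join(['data', 'labels', 'train', '0001.txt'])
print(img2label_paths([img])[0] == expected)  # True
```

The practical consequence is that labels are expected in a `labels` directory that mirrors the `images` directory, with one `.txt` file per image.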

            try:
                f = []  # image files
                for p in path if isinstance(path, list) else [path]:
                    p = str(Path(p))  # os-agnostic
                    # Parent directory of the dataset path; os.sep is the path separator of the current OS
                    parent = str(Path(p).parent) + os.sep
                    # Windows uses "\\" as the separator; Linux (e.g. Ubuntu) and macOS use "/"
                    if os.path.isfile(p):  # file: a text file listing one image path per line
                        with open(p, 'r') as t:
                            t = t.read().splitlines()
                            # Turn paths relative to the list file into full paths
                            f += [x.replace('./', parent) if x.startswith('./') else x for x in t]  # local to global path
                    elif os.path.isdir(p):  # folder
                        f += glob.iglob(p + os.sep + '*.*')
                    else:
                        raise Exception('%s does not exist' % p)
                # Normalise separators to os.sep; os.path.splitext(x) splits a path into a (root, extension) tuple
                self.img_files = sorted(
                    [x.replace('/', os.sep) for x in f if os.path.splitext(x)[-1].lower() in img_formats])
                assert len(self.img_files) > 0, 'No images found'
            except Exception as e:
                raise Exception('Error loading data from %s: %s\nSee %s' % (path, e, help_url))

            # Check cache
            self.label_files = img2label_paths(self.img_files)  # labels files
            cache_path = str(Path(self.label_files[0]).parent) + '.cache'  # cached labels
            if os.path.isfile(cache_path):
                # Labels are stored in a cache file so that later runs start faster
                cache = torch.load(cache_path)  # load
                if cache['hash'] != get_hash(self.label_files + self.img_files):  # dataset changed
                    cache = self.cache_labels(cache_path)  # re-cache
            else:
                cache = self.cache_labels(cache_path)  # cache

            # Read cache
            cache.pop('hash')  # remove hash
            labels, shapes = zip(*cache.values())
            self.labels = list(labels)
            self.shapes = np.array(shapes, dtype=np.float64)
            self.img_files = list(cache.keys())  # update
            self.label_files = img2label_paths(cache.keys())  # update

            n = len(shapes)  # number of images
            bi = np.floor(np.arange(n) / batch_size).astype(int)  # batch index (np.int was removed in NumPy 1.24)
            nb = bi[-1] + 1  # number of batches
            self.batch = bi  # batch index of image
            self.n = n

            # Rectangular Training
            if self.rect:
                # Sort by aspect ratio
                s = self.shapes  # wh
                ar = s[:, 1] / s[:, 0]  # aspect ratio (h / w)
                irect = ar.argsort()  # indices that sort the aspect ratios in ascending order
                self.img_files = [self.img_files[i] for i in irect]
                self.label_files = [self.label_files[i] for i in irect]
                self.labels = [self.labels[i] for i in irect]
                self.shapes = s[irect]  # wh
                ar = ar[irect]

                # Set training image shapes
                shapes = [[1, 1]] * nb
                for i in range(nb):
                    ari = ar[bi == i]
                    mini, maxi = ari.min(), ari.max()
                    if maxi < 1:  # largest h/w in the batch is below 1: batch shape is (img_size * maxi, img_size)
                        shapes[i] = [maxi, 1]
                    elif mini > 1:  # smallest h/w in the batch is above 1: batch shape is (img_size, img_size / mini)
                        shapes[i] = [1, 1 / mini]

                self.batch_shapes = np.ceil(np.array(shapes) * img_size / stride + pad).astype(int) * stride
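
To make the rectangular-training arithmetic concrete, here is a small sketch of the same shape rule in plain Python, with `math.ceil` standing in for the NumPy calls. The function name `batch_shapes` and the list of aspect ratios are assumptions made for the example; the ratios are taken to be already sorted, as they are after the argsort step.

```python
import math


def batch_shapes(hw_ratios, batch_size, img_size=640, stride=32, pad=0.0):
    """Return one [height, width] per batch for aspect ratios (h / w) sorted ascending."""
    shapes = []
    for start in range(0, len(hw_ratios), batch_size):
        ari = hw_ratios[start:start + batch_size]
        mini, maxi = min(ari), max(ari)
        shape = [1.0, 1.0]              # default: square batch
        if maxi < 1:                    # every image is wider than tall
            shape = [maxi, 1.0]
        elif mini > 1:                  # every image is taller than wide
            shape = [1.0, 1.0 / mini]
        # round each side up to a multiple of the stride
        shapes.append([math.ceil(s * img_size / stride + pad) * stride for s in shape])
    return shapes


# Hypothetical h/w ratios, already sorted
print(batch_shapes([0.5, 0.75, 1.0, 1.2, 1.5, 2.0], batch_size=2))
# [[480, 640], [640, 640], [640, 448]]
```

Grouping images of similar aspect ratio lets each batch use the smallest stride-aligned rectangle that fits, instead of padding every image to a full square.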

            # Check labels
            create_datasubset, extract_bounding_boxes, labels_loaded = False, False, False
            nm, nf, ne, ns, nd = 0, 0, 0, 0, 0  # number missing, found, empty, datasubset, duplicate
            pbar = enumerate(self.label_files)
            if rank in [-1, 0]:  # rank is -1 when training without DDP (e.g. on a single GPU)
                pbar = tqdm(pbar)  # wrap pbar in a progress bar
            for i, file in pbar:
                l = self.labels[i]  # labels of image i, e.g. [[8 0.585 0.73067 0.122 0.34133], ...]
                if l is not None and l.shape[0]:
                    assert l.shape[1] == 5, '> 5 label columns: %s' % file
                    assert (l >= 0).all(), 'negative labels: %s' % file
                    assert (l[:, 1:] <= 1).all(), 'non-normalized or out of bounds coordinate labels: %s' % file
                    if np.unique(l, axis=0).shape[0] < l.shape[0]:  # duplicate rows
                        nd += 1  # print('WARNING: duplicate rows in %s' % self.label_files[i])  # duplicate rows
                    if single_cls:
                        l[:, 0] = 0  # force dataset into single-class mode
                    self.labels[i] = l
                    nf += 1  # file found

                    # Create subdataset (a smaller dataset)
                    if create_datasubset and ns < 1E4:
                        if ns == 0:
                            create_folder(path='./datasubset')
                            os.makedirs('./datasubset/images')
                        exclude_classes = 43
                        if exclude_classes not in l[:, 0]:
                            ns += 1
                            # shutil.copy(src=self.img_files[i], dst='./datasubset/images/')  # copy image
                            with open('./datasubset/images.txt', 'a') as f:
                                f.write(self.img_files[i] + '\n')

                    # Extract object detection boxes for a second stage classifier
                    if extract_bounding_boxes:
                        p = Path(self.img_files[i])
                        img = cv2.imread(str(p))
                        h, w = img.shape[:2]
                        for j, x in enumerate(l):
                            f = '%s%sclassifier%s%g_%g_%s' % (p.parent.parent, os.sep, os.sep, x[0], j, p.name)
                            if not os.path.exists(Path(f).parent):
                                os.makedirs(Path(f).parent)  # make new output folder

                            b = x[1:] * [w, h, w, h]  # box
                            b[2:] = b[2:].max()  # rectangle to square
                            b[2:] = b[2:] * 1.3 + 30  # pad
                            b = xywh2xyxy(b.reshape(-1, 4)).ravel().astype(int)

                            b[[0, 2]] = np.clip(b[[0, 2]], 0, w)  # clip boxes outside of image
                            b[[1, 3]] = np.clip(b[[1, 3]], 0, h)
                            assert cv2.imwrite(f, img[b[1]:b[3], b[0]:b[2]]), 'Failure extracting classifier boxes'
                else:
                    ne += 1  # print('empty labels for image %s' % self.img_files[i])  # file empty
                    # os.system("rm '%s' '%s'" % (self.img_files[i], self.label_files[i]))  # remove

                if rank in [-1, 0]:
                    pbar.desc = 'Scanning labels %s (%g found, %g missing, %g empty, %g duplicate, for %g images)' % (
                        cache_path, nf, nm, ne, nd, n)
            if nf == 0:
                s = 'WARNING: No labels found in %s. See %s' % (os.path.dirname(file) + os.sep, help_url)
                print(s)
                assert not augment, '%s. Can not train without labels.' % s

            # Cache images into memory for faster training (WARNING: large datasets may exceed system RAM)
            self.imgs = [None] * n
            if cache_images:
                gb = 0  # Gigabytes of cached images
                pbar = tqdm(range(len(self.img_files)), desc='Caching images')
                self.img_hw0, self.img_hw = [None] * n, [None] * n
                for i in pbar:  # max 10k images
                    self.imgs[i], self.img_hw0[i], self.img_hw[i] = load_image(self, i)  # img, hw_original, hw_resized
                    gb += self.imgs[i].nbytes
                    pbar.desc = 'Caching images (%.1fGB)' % (gb / 1E9)

        def cache_labels(self, path='labels.cache'):
            # Cache dataset labels, check images and read shapes
            x = {}  # dict
            pbar = tqdm(zip(self.img_files, self.label_files), desc='Scanning images', total=len(self.img_files))
            for (img, label) in pbar:
                try:
                    l = []
                    im = Image.open(img)
                    im.verify()  # PIL verify
                    shape = exif_size(im)  # image size
                    assert (shape[0] > 9) & (shape[1] > 9), 'image size <10 pixels'
                    if os.path.isfile(label):
                        with open(label, 'r') as f:
                            l = np.array([x.split() for x in f.read().splitlines()], dtype=np.float32)  # labels
                    if len(l) == 0:
                        l = np.zeros((0, 5), dtype=np.float32)
                    x[img] = [l, shape]
                except Exception as e:
                    print('WARNING: Ignoring corrupted image and/or label %s: %s' % (img, e))

            x['hash'] = get_hash(self.label_files + self.img_files)
            torch.save(x, path)  # save for next time
            return x
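
The label format that cache_labels reads, together with the sanity checks from the "Check labels" step of `__init__`, can be sketched in a few lines of plain Python. `parse_labels` is a made-up helper that uses lists instead of NumPy arrays and, unlike the original, skips blank lines.

```python
def parse_labels(text):
    """Parse YOLO label text into rows of [class, x_center, y_center, width, height]."""
    rows = [[float(v) for v in line.split()] for line in text.splitlines() if line.strip()]
    for row in rows:
        assert len(row) == 5, 'expected 5 label columns'
        assert all(v >= 0 for v in row), 'negative labels'
        assert all(v <= 1 for v in row[1:]), 'non-normalized or out of bounds coordinates'
    return rows


# Hypothetical label file contents: one object per line
sample = "8 0.585 0.73067 0.122 0.34133\n0 0.5 0.5 0.25 0.25\n"
print(parse_labels(sample))
# [[8.0, 0.585, 0.73067, 0.122, 0.34133], [0.0, 0.5, 0.5, 0.25, 0.25]]
```

Every coordinate is a fraction of the image width or height, which is why any value above 1 is rejected.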

        # Method a custom dataset must override
        def __len__(self):
            return len(self.img_files)  # for the training set, the number of training images

        # Method a custom dataset must override
        def __getitem__(self, index):
            if self.image_weights:
                # self.indices is set in train.py and only works together with the code there.
                # image_weights are per-image sampling weights derived from the number of labels in each class.
                # With image_weights=True, the sampling weights decide which index is actually loaded.
                index = self.indices[index]

            hyp = self.hyp
            mosaic = self.mosaic and random.random() < hyp['mosaic']
            # Use mosaic augmentation
            if mosaic:
                # Load mosaic
                img, labels = load_mosaic(self, index)
                shapes = None

                # MixUp https://arxiv.org/pdf/1710.09412.pdf
                # MixUp augmentation
                if random.random() < hyp['mixup']:
                    img2, labels2 = load_mosaic(self, random.randint(0, len(self.labels) - 1))
                    r = np.random.beta(8.0, 8.0)  # mixup ratio, alpha=beta=8.0
                    img = (img * r + img2 * (1 - r)).astype(np.uint8)
                    labels = np.concatenate((labels, labels2), 0)

            else:
                # Load image
                img, (h0, w0), (h, w) = load_image(self, index)

                # Letterbox
                shape = self.batch_shapes[self.batch[index]] if self.rect else self.img_size  # final letterboxed shape
                img, ratio, pad = letterbox(img, shape, auto=False, scaleup=self.augment)
                shapes = (h0, w0), ((h / h0, w / w0), pad)  # for COCO mAP rescaling

                # Load labels
                labels = []
                x = self.labels[index]
                if x.size > 0:
                    # Normalized xywh to pixel xyxy format
                    labels = x.copy()
                    labels[:, 1] = ratio[0] * w * (x[:, 1] - x[:, 3] / 2) + pad[0]  # pad width
                    labels[:, 2] = ratio[1] * h * (x[:, 2] - x[:, 4] / 2) + pad[1]  # pad height
                    labels[:, 3] = ratio[0] * w * (x[:, 1] + x[:, 3] / 2) + pad[0]
                    labels[:, 4] = ratio[1] * h * (x[:, 2] + x[:, 4] / 2) + pad[1]

            if self.augment:
                # Augment imagespace
                if not mosaic:
                    img, labels = random_perspective(img, labels,
                                                     degrees=hyp['degrees'],
                                                     translate=hyp['translate'],
                                                     scale=hyp['scale'],
                                                     shear=hyp['shear'],
                                                     perspective=hyp['perspective'])

                # Augment colorspace: randomly change hue (H), saturation (S) and value (V)
                augment_hsv(img, hgain=hyp['hsv_h'], sgain=hyp['hsv_s'], vgain=hyp['hsv_v'])

                # Apply cutouts
                # if random.random() < 0.9:
                #     labels = cutout(img, labels)

            nL = len(labels)  # number of labels
            if nL:
                labels[:, 1:5] = xyxy2xywh(labels[:, 1:5])  # convert xyxy to xywh
                labels[:, [2, 4]] /= img.shape[0]  # normalized height 0-1
                labels[:, [1, 3]] /= img.shape[1]  # normalized width 0-1

            if self.augment:
                # flip up-down
                if random.random() < hyp['flipud']:
                    img = np.flipud(img)
                    if nL:
                        labels[:, 2] = 1 - labels[:, 2]

                # flip left-right
                if random.random() < hyp['fliplr']:
                    img = np.fliplr(img)
                    if nL:
                        labels[:, 1] = 1 - labels[:, 1]

            # Reserve column 0 for the index of the image within the batch; collate_fn below fills it in
            labels_out = torch.zeros((nL, 6))
            if nL:
                labels_out[:, 1:] = torch.from_numpy(labels)

            # Convert
            # img[:, :, ::-1] converts BGR to RGB
            # transpose moves the channel axis to the front
            # torch.Tensor layout for image batches: (nsample) x C x H x W
            # numpy.ndarray layout for an image: H x W x C
            img = img[:, :, ::-1].transpose(2, 0, 1)  # transpose(2, 0, 1) turns H x W x C into C x H x W
            img = np.ascontiguousarray(img)  # make img contiguous in memory

            return torch.from_numpy(img), labels_out, self.img_files[index], shapes  # the result of __getitem__
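
The two coordinate conversions inside `__getitem__` are easier to follow on a single box. The sketch below mirrors the "normalized xywh to pixel xyxy" formulas and the later conversion back to normalized xywh, using plain tuples rather than arrays. Both function names and the example image size are assumptions for illustration.

```python
def xywhn_to_xyxy(box, w, h, ratio=(1.0, 1.0), pad=(0.0, 0.0)):
    """Normalized (xc, yc, bw, bh) -> pixel (x1, y1, x2, y2) on the letterboxed image."""
    xc, yc, bw, bh = box
    x1 = ratio[0] * w * (xc - bw / 2) + pad[0]
    y1 = ratio[1] * h * (yc - bh / 2) + pad[1]
    x2 = ratio[0] * w * (xc + bw / 2) + pad[0]
    y2 = ratio[1] * h * (yc + bh / 2) + pad[1]
    return x1, y1, x2, y2


def xyxy_to_xywhn(box, img_w, img_h):
    """Pixel (x1, y1, x2, y2) -> normalized (xc, yc, bw, bh)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2 / img_w, (y1 + y2) / 2 / img_h, (x2 - x1) / img_w, (y2 - y1) / img_h)


# Hypothetical 640x480 image letterboxed to 640x640: 80 px of padding above and below
xyxy = xywhn_to_xyxy((0.5, 0.5, 0.25, 0.5), w=640, h=480, pad=(0.0, 80.0))
print(xyxy)                           # (240.0, 200.0, 400.0, 440.0)
print(xyxy_to_xywhn(xyxy, 640, 640))  # (0.5, 0.5, 0.25, 0.375)
```

The box height shrinks from 0.5 to 0.375 in normalized terms because the padded image is taller than the original while the box keeps its pixel size.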

        @staticmethod
        def collate_fn(batch):  # collate function: defines how samples are assembled into a batch; you can supply your own
            img, label, path, shapes = zip(*batch)  # transposed
            for i, l in enumerate(label):
                l[:, 0] = i  # add target image index for build_targets()
            return torch.stack(img, 0), torch.cat(label, 0), path, shapes
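
Images in a batch carry different numbers of boxes, so their labels cannot be stacked into one regular array; collate_fn concatenates them instead and uses column 0 to record which image each row belongs to. The sketch below shows that idea with plain lists in place of tensors. The `collate` function and the sample data are invented for the example.

```python
def collate(batch):
    """Merge (image, labels) samples; write each sample's batch position into column 0."""
    images, labels = zip(*batch)
    merged = []
    for i, rows in enumerate(labels):
        for row in rows:
            merged.append([i] + list(row[1:]))  # column 0 = index of the image in the batch
    return list(images), merged


# Two hypothetical samples: the first has two boxes, the second has one
sample_a = ('img_a', [[0, 1, 0.5, 0.5, 0.2, 0.2], [0, 3, 0.1, 0.1, 0.05, 0.05]])
sample_b = ('img_b', [[0, 2, 0.4, 0.6, 0.3, 0.3]])
images, targets = collate([sample_a, sample_b])
print(targets)
# [[0, 1, 0.5, 0.5, 0.2, 0.2], [0, 3, 0.1, 0.1, 0.05, 0.05], [1, 2, 0.4, 0.6, 0.3, 0.3]]
```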

The LoadImages class

    # Iterator LoadImages: used by detect.py to fetch image data
    class LoadImages:  # for inference
        def __init__(self, path, img_size=640):
            p = str(Path(path))  # os-agnostic, e.g. 'inference\\images'
            p = os.path.abspath(p)  # absolute path, e.g. 'd:\\yolov5\\inference\\images'
            # If the path contains a wildcard, expand it with glob to get the file paths
            if '*' in p:
                files = sorted(glob.glob(p, recursive=True))  # glob
            elif os.path.isdir(p):
                files = sorted(glob.glob(os.path.join(p, '*.*')))  # dir
            elif os.path.isfile(p):
                files = [p]  # files
            else:
                raise Exception('ERROR: %s does not exist' % p)

            # os.path.splitext separates the file name from its extension (the extension includes the dot)
            # Collect image and video paths separately
            images = [x for x in files if os.path.splitext(x)[-1].lower() in img_formats]
            videos = [x for x in files if os.path.splitext(x)[-1].lower() in vid_formats]
            # Number of images and videos
            ni, nv = len(images), len(videos)

            self.img_size = img_size
            self.files = images + videos
            self.nf = ni + nv  # number of files
            self.video_flag = [False] * ni + [True] * nv
            self.mode = 'images'
            if any(videos):  # if there are video files, set up OpenCV video capture (cap = cv2.VideoCapture, etc.)
                self.new_video(videos[0])  # new video
            else:
                self.cap = None
            assert self.nf > 0, 'No images or videos found in %s. Supported formats are:\nimages: %s\nvideos: %s' % \
                                (p, img_formats, vid_formats)

        # Because this class is an iterator, it defines __iter__ and __next__
        def __iter__(self):
            self.count = 0
            return self

        def __next__(self):
            if self.count == self.nf:  # self.count == self.nf means all files have been read
                raise StopIteration
            path = self.files[self.count]

            if self.video_flag[self.count]:
                # Read video
                self.mode = 'video'
                ret_val, img0 = self.cap.read()
                if not ret_val:
                    self.count += 1
                    self.cap.release()
                    if self.count == self.nf:  # last video
                        raise StopIteration
                    else:
                        path = self.files[self.count]
                        self.new_video(path)
                        ret_val, img0 = self.cap.read()

                self.frame += 1
                print('video %g/%g (%g/%g) %s: ' % (self.count + 1, self.nf, self.frame, self.nframes, path), end='')

            else:
                # Read image
                self.count += 1
                img0 = cv2.imread(path)  # BGR
                assert img0 is not None, 'Image Not Found ' + path
                print('image %g/%g %s: ' % (self.count, self.nf, path), end='')

            # Padded resize
            img = letterbox(img0, new_shape=self.img_size)[0]

            # Convert
            img = img[:, :, ::-1].transpose(2, 0, 1)  # BGR to RGB, H x W x C to C x H x W
            img = np.ascontiguousarray(img)  # make the array contiguous in memory, which speeds up later processing

            # cv2.imwrite(path + '.letterbox.jpg', 255 * img.transpose((1, 2, 0))[:, :, ::-1])  # save letterbox image
            return path, img, img0, self.cap

        def new_video(self, path):
            self.frame = 0
            self.cap = cv2.VideoCapture(path)
            self.nframes = int(self.cap.get(cv2.CAP_PROP_FRAME_COUNT))

        def __len__(self):
            return self.nf  # number of files
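
Stripped of the image and video handling, LoadImages is an ordinary Python iterator: `__iter__` resets a counter and `__next__` raises StopIteration once the counter reaches the number of files. A minimal sketch of that protocol follows; `FileIterator` and the file names are hypothetical, and nothing is actually read from disk.

```python
class FileIterator:
    """Yield (position, path) pairs, one per file, then stop."""

    def __init__(self, files):
        self.files = list(files)
        self.nf = len(self.files)  # number of files

    def __iter__(self):
        self.count = 0  # restart from the first file
        return self

    def __next__(self):
        if self.count == self.nf:  # everything has been read
            raise StopIteration
        path = self.files[self.count]
        self.count += 1
        return self.count, path

    def __len__(self):
        return self.nf


# Hypothetical file names
for position, path in FileIterator(['a.jpg', 'b.jpg']):
    print('image %g/%g %s' % (position, 2, path))
```

Because `__iter__` resets the counter, the same object can be looped over more than once.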

The LoadWebcam class

LoadWebcam is not actually used anywhere in yolov5.

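The LoadStreams class

    # Iterator LoadStreams: used by detect.py
    """
    cap.grab() grabs the next frame from the device or video and returns True on success, False otherwise
    cap.retrieve() is called after grab() and decodes the grabbed frame; in Python it returns (success flag, frame)
    cap.read() combines grab() and retrieve(): it grabs the next frame and decodes it, also returning (success flag, frame)
    """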

    class LoadStreams:  # multiple IP or RTSP cameras
        def __init__(self, sources='streams.txt', img_size=640):
            self.mode = 'images'
            self.img_size = img_size

            # If sources is a file listing several video streams,
            # read every stream address into a list
            if os.path.isfile(sources):
                with open(sources, 'r') as f:
                    sources = [x.strip() for x in f.read().splitlines() if len(x.strip())]
            else:
                sources = [sources]

            n = len(sources)
            self.imgs = [None] * n
            self.sources = sources  # list of stream sources
            for i, s in enumerate(sources):
                # Start the thread to read frames from the video stream
                print('%g/%g: %s... ' % (i + 1, n, s), end='')  # print current stream number, total streams, stream address
                cap = cv2.VideoCapture(eval(s) if s.isnumeric() else s)  # a numeric source such as 0 opens a local camera; otherwise open the stream address
                assert cap.isOpened(), 'Failed to open %s' % s
                w = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))  # frame width
                h = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))  # frame height
                fps = cap.get(cv2.CAP_PROP_FPS) % 100  # frame rate
                _, self.imgs[i] = cap.read()  # guarantee first frame
                # Read the stream in its own thread; daemon=True means the thread ends when the main thread exits
                thread = Thread(target=self.update, args=([i, cap]), daemon=True)
                print(' success (%gx%g at %.2f FPS).' % (w, h, fps))
                thread.start()
            print('')  # newline

            # check for common shapes
            s = np.stack([letterbox(x, new_shape=self.img_size)[0].shape for x in self.imgs], 0)  # inference shapes
            self.rect = np.unique(s, axis=0).shape[0] == 1  # rect inference if all shapes equal
            if not self.rect:
                print('WARNING: Different stream shapes detected. For optimal performance supply similarly-shaped streams.')

        def update(self, index, cap):
            # Read next stream frame in a daemon thread
            n = 0
            while cap.isOpened():
                n += 1
                # _, self.imgs[index] = cap.read()
                cap.grab()
                if n == 4:  # read every 4th frame
                    _, self.imgs[index] = cap.retrieve()
                    n = 0
                time.sleep(0.01)  # wait time

        def __iter__(self):
            self.count = -1
            return self

        def __next__(self):
            self.count += 1
            img0 = self.imgs.copy()
            if cv2.waitKey(1) == ord('q'):  # q to quit
                cv2.destroyAllWindows()
                raise StopIteration

            # Letterbox
            img = [letterbox(x, new_shape=self.img_size, auto=self.rect)[0] for x in img0]

            # Stack
            img = np.stack(img, 0)

            # Convert
            img = img[:, :, :, ::-1].transpose(0, 3, 1, 2)  # BGR to RGB, B x H x W x C to B x C x H x W
            img = np.ascontiguousarray(img)

            return self.sources, img, img0, None

        def __len__(self):
            return 0  # 1E12 frames = 32 streams at 30 FPS for 30 years
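
The frame-skipping loop in update() can be tried without a camera. The sketch below replaces cv2.VideoCapture with a small stand-in class that serves numbers instead of frames, and collects what would have been decoded. `FakeCapture` and `decoded_frames` are invented for the example; the real method runs in a daemon thread, sleeps between grabs, and overwrites self.imgs[index] rather than building a list.

```python
class FakeCapture:
    """Stand-in for cv2.VideoCapture that serves frames from a list."""

    def __init__(self, frames):
        self.frames = list(frames)
        self.pos = -1

    def isOpened(self):
        return self.pos + 1 < len(self.frames)  # frames remain to be grabbed

    def grab(self):
        self.pos += 1  # advance without decoding
        return True

    def retrieve(self):
        return True, self.frames[self.pos]  # decode the frame grabbed last


def decoded_frames(cap, every=4):
    """Grab every frame but decode only each `every`-th one, as update() does."""
    kept, n = [], 0
    while cap.isOpened():
        n += 1
        cap.grab()
        if n == every:
            _, frame = cap.retrieve()
            kept.append(frame)
            n = 0
    return kept


print(decoded_frames(FakeCapture(range(1, 13))))  # [4, 8, 12]
```

Grabbing is cheap while decoding is not, so skipping the decode step for three frames out of four keeps the reader thread close to the live stream.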