IMDb sentiment analysis

Data processing

Data preparation

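import urllib.request
import os
import tarfile

# Download the dataset online
url = ""  # URL of aclImdb_v1.tar.gz (not filled in here)
filepath = "data/IMDb数据集/aclImdb_v1.tar.gz"
if not os.path.isfile(filepath):
    os.makedirs(os.path.dirname(filepath), exist_ok=True)  # make sure the target folder exists
    result = urllib.request.urlretrieve(url, filepath)
    print('download:', result)

# Extract the downloaded archive (only once)
if not os.path.exists("data/IMDb数据集/aclImdb"):
    with tarfile.open("data/IMDb数据集/aclImdb_v1.tar.gz", 'r:gz') as tfile:
        tfile.extractall('data/IMDb数据集/')

Reading the data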

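from keras.preprocessing import sequence  # makes all integer lists the same length (truncate long, pad short)
from keras.preprocessing.text import Tokenizer  # builds the word dictionary
import re

# Function: replace HTML tags with spaces
def rm_tags(text):
    re_tags = re.compile(r'<[^>]+>')
    return re_tags.sub(' ', text)

# Function: read the data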

import os

def read_files(filetype):
    path = "data/IMDb数据集/aclImdb/"
    file_list = []

    positive_path = path + filetype + "/pos/"
    for f in os.listdir(positive_path):
        file_list += [positive_path + f]
    n_pos = len(file_list)

    negative_path = path + filetype + "/neg/"
    for f in os.listdir(negative_path):
        file_list += [negative_path + f]
    n_neg = len(file_list) - n_pos

    print("read", filetype, "files:", len(file_list))

    # Positive reviews are labelled 1, negative reviews 0 (12,500 of each in IMDb)
    all_labels = [1] * n_pos + [0] * n_neg

    all_texts = []
    for fi in file_list:
        with open(fi, encoding='utf8') as file_input:
            all_texts += [rm_tags(" ".join(file_input.readlines()))]

    return all_labels, all_texts

y_train, train_text = read_files("train")
read train files: 25000

y_test, test_text = read_files("test")
read test files: 25000
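You can try the reading logic without the real dataset. The sketch below writes a few made-up reviews into a temporary folder with the same pos/neg layout and reads them back. The names `read_reviews` and its `root` parameter are hypothetical. The tag-stripping regex and the labelling (1 = positive, 0 = negative, derived from the folder) follow `rm_tags` and `read_files` above.

```python
import os
import re
import tempfile

TAG_RE = re.compile(r'<[^>]+>')  # same pattern as rm_tags above

def rm_tags(text):
    """Replace every HTML tag (e.g. <br />) with a space."""
    return TAG_RE.sub(' ', text)

def read_reviews(root, filetype):
    """Hypothetical variant of read_files: the dataset root is a parameter and
    each label comes from the folder the file was found in."""
    labels, texts = [], []
    for subdir, label in (("pos", 1), ("neg", 0)):
        folder = os.path.join(root, filetype, subdir)
        for name in sorted(os.listdir(folder)):
            with open(os.path.join(folder, name), encoding="utf8") as f:
                texts.append(rm_tags(" ".join(f.readlines())))
            labels.append(label)
    return labels, texts

# Made-up reviews laid out like <root>/train/pos and <root>/train/neg
toy = {
    "pos": ["A fine film.<br /><br />Loved it.", "Great <i>acting</i>."],
    "neg": ["Dull and far too long."],
}

with tempfile.TemporaryDirectory() as root:
    for subdir, reviews in toy.items():
        os.makedirs(os.path.join(root, "train", subdir))
        for k, review in enumerate(reviews):
            path = os.path.join(root, "train", subdir, f"{k}.txt")
            with open(path, "w", encoding="utf8") as f:
                f.write(review)
    labels, texts = read_reviews(root, "train")

print(labels)           # [1, 1, 0]
print(repr(texts[0]))   # 'A fine film.  Loved it.'
```

Each tag becomes a single space, so `<br /><br />` leaves two spaces behind. The Tokenizer splits on whitespace, so this is harmless later on.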

train_text[0]
'Skippy from Family Ties goes from clean-cut to metal kid in this fairly cheesy movie. The film seems like it was made in response to all those upset parents who claimed metal music was turning their kids evil or making them kill themselves - except in this one a dead satanic metal star is trying to come back from the grave (using Skippy to help out). And while the plot is corny and cliche, the corniness (for example, an evil green fog taking off a girl\'s clothes)and the soundtrack are what make the movie so hilarious (and great). And of course, there\'s nothing like Ozzy Osbourne playing a preacher who\'s asking what happened to the love song :). Definitely a movie for having a few friends over for a good laugh. And while you\'re at it, make it a double feature with Slumber Party Massacre 2 - there\'s an "evil rocker" (as stated on the video box)driller killer in black leather w/fringe. A must see for cheesy movie fans.'

test_text[0]
"This film is one of the classics of cinema history. It was not made to please modern audiences, so some people nowadays may think it is creaky or stilted. I found it to be absorbing throughout. Cherkassov has exactly the right presence to play Alexander Nevskyi, just as he did when he played Ivan Groznyi (Ivan the Terrible) several years later. The music was beautiful. My one complaint was the poor soundtrack that was quite garbled. Although I only know a little Russian, it would have been nice to be able to pick out more words rather than having to rely almost 100% on the subtitles. I was watching this on an old videotape from the library, though. Perhaps by now a DVD version exists on which the sound has been enhanced. I would like to know whether the actors were using archaic Russian or even Old Church Slavonic when they were speaking. The subtitles were strangely worded, and it's hard for me to tell whether this was to reflect an older manner of speaking, or whether the subtitles were just somewhat poorly done."

y_train[0]
1

y_train[12501]
0

Building the dictionary (Tokenizer)

token = Tokenizer(num_words=2000)  # dictionary limited to the most frequent words (num_words=2000)

token.fit_on_texts(train_text)
print(token.document_count)
25000

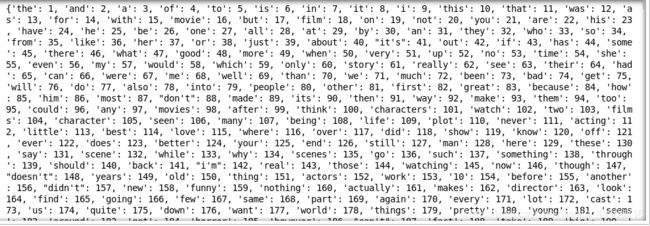

print(token.word_index)

Converting the training and test texts into integer lists

x_train_seq = token.texts_to_sequences(train_text)
x_test_seq = token.texts_to_sequences(test_text)

train_text[0]
'Skippy from Family Ties goes from clean-cut to metal kid in this fairly cheesy movie. The film seems like it was made in response to all those upset parents who claimed metal music was turning their kids evil or making them kill themselves - except in this one a dead satanic metal star is trying to come back from the grave (using Skippy to help out). And while the plot is corny and cliche, the corniness (for example, an evil green fog taking off a girl\'s clothes)and the soundtrack are what make the movie so hilarious (and great). And of course, there\'s nothing like Ozzy Osbourne playing a preacher who\'s asking what happened to the love song :). Definitely a movie for having a few friends over for a good laugh. And while you\'re at it, make it a double feature with Slumber Party Massacre 2 - there\'s an "evil rocker" (as stated on the video box)driller killer in black leather w/fringe. A must see for cheesy movie fans.'

x_train_seq[0]
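Conceptually, `fit_on_texts` counts word frequencies and gives index 1 to the most frequent word, 2 to the next, and so on. `texts_to_sequences` then replaces each word by its index. With `num_words=2000` it also drops every word whose index is 2000 or higher. The pure-Python sketch below reproduces that idea on three made-up sentences. It is a simplification, not Keras's code. The regex tokenizer (lowercase; keep letters, digits and apostrophes) and breaking ties by first appearance are assumptions.

```python
import re
from collections import Counter

def tokenize(text):
    # Simplified word splitter (assumption): lowercase, keep letters, digits, apostrophes
    return re.findall(r"[a-z0-9']+", text.lower())

def fit_word_index(texts):
    """Rank words by frequency; index 0 stays reserved (it is used for padding)."""
    counts = Counter()
    for text in texts:
        counts.update(tokenize(text))
    # Counter keeps first-appearance order and sorted() is stable, so ties keep that order
    ranked = sorted(counts, key=lambda w: counts[w], reverse=True)
    return {word: rank for rank, word in enumerate(ranked, start=1)}

def texts_to_sequences(texts, word_index, num_words=None):
    """Map words to indices, skipping unknown words and indices >= num_words."""
    sequences = []
    for text in texts:
        seq = []
        for word in tokenize(text):
            i = word_index.get(word)
            if i is None or (num_words is not None and i >= num_words):
                continue
            seq.append(i)
        sequences.append(seq)
    return sequences

train = ["the movie was great", "the movie was bad", "great great acting"]
word_index = fit_word_index(train)
print(word_index)
# {'great': 1, 'the': 2, 'movie': 3, 'was': 4, 'bad': 5, 'acting': 6}
print(texts_to_sequences(["the great movie was bad"], word_index, num_words=4))
# [[2, 1, 3]]
```

Because rare words are dropped rather than replaced, each integer list can be shorter than the review it came from.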

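Making all integer lists the same length

# maxlen=100: longer lists are truncated and shorter ones are padded with 0.
# By default both happen at the front (padding='pre', truncating='pre').
x_train = sequence.pad_sequences(x_train_seq, maxlen=100)
x_test = sequence.pad_sequences(x_test_seq, maxlen=100)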
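With `maxlen=100` and its default `'pre'` settings, `pad_sequences` keeps the last 100 indices of a long list and left-pads a short list with zeros. This is why `x_train[5]`, built from 63 indices, starts with 37 zeros. Below is a minimal pure-Python sketch of that default behaviour. The name `pad_pre` is hypothetical, and the real function returns a NumPy array rather than lists.

```python
def pad_pre(sequences, maxlen, value=0):
    """Sketch of pad_sequences' defaults: truncate and pad at the front."""
    padded = []
    for seq in sequences:
        seq = list(seq)
        if len(seq) > maxlen:
            seq = seq[len(seq) - maxlen:]                    # truncating='pre': keep the last maxlen items
        padded.append([value] * (maxlen - len(seq)) + seq)   # padding='pre': fill values go in front
    return padded

print(pad_pre([[5, 6, 7], [1, 2, 3, 4, 5, 6]], maxlen=4))
# [[0, 5, 6, 7], [3, 4, 5, 6]]
```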

len(x_train_seq[0])
142

len(x_train[0])
100

len(x_train_seq[5])
63

len(x_train[5])
100

x_train[5]
array([   0,    0,    0,    0,    0,    0,    0,    0,    0,    0,    0,
          0,    0,    0,    0,    0,    0,    0,    0,    0,    0,    0,
          0,    0,    0,    0,    0,    0,    0,    0,    0,    0,    0,
          0,    0,    0,    0,    9,    7,    3,    2,   10,   18,   12,
        613,   99,   71,   65,  456,  106,    3,   20,   34,   83,   18,
          2,    7,    3, 1670,  782,   56,  147,    8,   12,  961,  518,
          2,   71,   67,    1,  432,  307,   62,  505,    8, 1270,    9,
        193,    1,   18,   12,  638,    7,   28,    1,  204,    2,    9,
        443,    1,  173,    4,  101,   32,   62,   19,   21,    7,    1,
         18], dtype=int32)

for i in x_train_seq:
    print(len(i))
142
124
115
162
295
63
142
303
217
182
103
111
306
215
142
139
288
106
23

Building the models

1. Multilayer perceptron (MLP)

from keras.models import Sequential
from keras.layers import Dense, Dropout, Activation, Flatten, Embedding

Embedding layer: turns each integer list into a list of vectors

model1 = Sequential()
model1.add(Embedding(output_dim=32, input_dim=2000, input_length=100))
model1.add(Dropout(0.2))

Building the multilayer perceptron

model1.add(Flatten())
model1.add(Dense(units=256, activation='relu'))
model1.add(Dropout(0.35))
model1.add(Dense(units=1, activation='sigmoid'))
model1.summary()
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
embedding_1 (Embedding)      (None, 100, 32)           64000
_________________________________________________________________
dropout_1 (Dropout)          (None, 100, 32)           0
_________________________________________________________________
flatten_1 (Flatten)          (None, 3200)              0
_________________________________________________________________
dense_1 (Dense)              (None, 256)               819456
_________________________________________________________________
dropout_2 (Dropout)          (None, 256)               0
_________________________________________________________________
dense_2 (Dense)              (None, 1)                 257
=================================================================
Total params: 883,713
Trainable params: 883,713
Non-trainable params: 0
_________________________________________________________________
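The Param # column follows from the layer shapes:

- An Embedding layer stores one `output_dim`-long vector per dictionary index.
- Flatten turns the 100×32 output into 3,200 values.
- A Dense layer has one weight per input-unit pair plus one bias per unit.

The arithmetic below reproduces the summary's numbers. The helper names are only for illustration.

```python
def embedding_params(input_dim, output_dim):
    return input_dim * output_dim        # one vector per word index

def dense_params(n_inputs, units):
    return n_inputs * units + units      # weight matrix + bias vector

emb = embedding_params(2000, 32)         # embedding_1
flat = 100 * 32                          # Flatten output length
hidden = dense_params(flat, 256)         # dense_1
out = dense_params(256, 1)               # dense_2
total = emb + hidden + out
print(emb, flat, hidden, out, total)     # 64000 3200 819456 257 883713
```

Over 90% of the MLP's parameters sit in the first Dense layer, because it sees the whole flattened 3,200-value input.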

Configuring training

model1.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
train_history = model1.fit(x_train, y_train, batch_size=100, epochs=10, verbose=2, validation_split=0.2)
Train on 20000 samples, validate on 5000 samples
Epoch 1/10 - 2s - loss: 0.4822 - acc: 0.7569 - val_loss: 0.4742 - val_acc: 0.7814
Epoch 2/10 - 1s - loss: 0.2708 - acc: 0.8893 - val_loss: 0.3919 - val_acc: 0.8282
Epoch 3/10 - 1s - loss: 0.1636 - acc: 0.9404 - val_loss: 0.8360 - val_acc: 0.7028
Epoch 4/10 - 1s - loss: 0.0836 - acc: 0.9718 - val_loss: 0.7852 - val_acc: 0.7616
Epoch 5/10 - 1s - loss: 0.0491 - acc: 0.9829 - val_loss: 0.9962 - val_acc: 0.7524
Epoch 6/10 - 1s - loss: 0.0345 - acc: 0.9872 - val_loss: 0.9867 - val_acc: 0.7794
Epoch 7/10 - 1s - loss: 0.0333 - acc: 0.9883 - val_loss: 1.0124 - val_acc: 0.7824
Epoch 8/10 - 1s - loss: 0.0282 - acc: 0.9891 - val_loss: 1.4622 - val_acc: 0.7242
Epoch 9/10 - 1s - loss: 0.0263 - acc: 0.9903 - val_loss: 1.2537 - val_acc: 0.7592
Epoch 10/10 - 1s - loss: 0.0217 - acc: 0.9925 - val_loss: 1.4099 - val_acc: 0.7458

validation_split=0.2 holds out 20% of the training data for validation, which is why the log reports 20,000 training and 5,000 validation samples. Keras takes these validation samples from the end of the data, before any shuffling. Because read_files lists all positive reviews first, the 5,000 validation reviews here are all negative.
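The stdlib-only sketch below rebuilds the label order produced by `read_files` (12,500 positives followed by 12,500 negatives) and shows that the last 20% is entirely negative. It then applies one random permutation to both labels and inputs, so the held-out part contains both classes. Shuffling with a fixed seed before calling `fit` is a suggested change, not something the original code does. `fit`'s own `shuffle=True` only reorders the training portion. The placeholder texts and the helper `split_last` are hypothetical. With NumPy arrays the same idea is usually written with one `np.random.permutation` index applied to both `x_train` and `y_train`.

```python
import random

labels = [1] * 12500 + [0] * 12500                    # order produced by read_files
texts = [f"review {i}" for i in range(len(labels))]   # placeholder inputs

def split_last(items, fraction):
    """Mimic validation_split: the last `fraction` of the data is held out."""
    n_val = int(len(items) * fraction)
    return items[:-n_val], items[-n_val:]

_, val_before = split_last(labels, 0.2)
print(len(val_before), sum(val_before))   # 5000 0  -> only negative reviews

# Shuffle inputs and labels with the same permutation before splitting
order = list(range(len(labels)))
random.Random(42).shuffle(order)
labels_shuffled = [labels[i] for i in order]
texts_shuffled = [texts[i] for i in order]
_, val_labels = split_last(labels_shuffled, 0.2)
print(len(val_labels), sum(val_labels))   # now both classes are present
```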

scores = model1.evaluate(x_test, y_test, verbose=1)
scores[1]  # accuracy on the test set
25000/25000 [==============================] - 1s 32us/step
0.80984
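The training log above shows `val_loss` at its lowest after epoch 2 (0.3919) and mostly rising afterwards, while the training loss keeps falling. The later epochs therefore mainly overfit. Keras provides `keras.callbacks.EarlyStopping(monitor='val_loss', patience=...)` for this situation. The pure-Python sketch below only replays the logged `val_loss` values to show when a patience-based rule would stop. `patience=2` is an assumed setting, and the replay is a simplification of the callback, not its source code.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a patience-based rule on val_loss."""
    best, best_epoch, wait = float("inf"), 0, 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:   # improvement: remember it, reset the counter
            best, best_epoch, wait = loss, epoch, 0
        else:                         # no improvement this epoch
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return len(val_losses), best_epoch

# val_loss values copied from the MLP training log
mlp_val_loss = [0.4742, 0.3919, 0.8360, 0.7852, 0.9962,
                0.9867, 1.0124, 1.4622, 1.2537, 1.4099]
print(early_stopping_epoch(mlp_val_loss))   # (4, 2)
```

In Keras versions that support it, passing `restore_best_weights=True` to the real callback also rolls the model back to the weights of the best epoch.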

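# predict_classes() exists only in older Keras versions. For a single sigmoid
# output, thresholding at 0.5 gives the same 0/1 classes in any version.
predict = (model1.predict(x_test) > 0.5).astype('int32')
predict[:10]
array([[0],
       [1],
       [1],
       [0],
       [1],
       [0],
       [1],
       [1],
       [1],
       [1]], dtype=int32)

predict_classes = predict.reshape(-1)  # flatten to a 1-D array
predict_classes[:10]
array([0, 1, 1, 0, 1, 0, 1, 1, 1, 1], dtype=int32)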
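The sigmoid output is the predicted probability that a review is positive. Thresholding it at 0.5 gives the 0/1 classes. The accuracy that `evaluate` reports is the share of those classes that match the labels. Below is a stdlib sketch on made-up probabilities, not the model's real outputs; `to_classes` and `accuracy` are illustrative helper names.

```python
def to_classes(probabilities, threshold=0.5):
    """Turn sigmoid outputs of shape (n, 1) into a flat list of 0/1 classes."""
    return [1 if row[0] > threshold else 0 for row in probabilities]

def accuracy(y_true, y_pred):
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)

probs = [[0.10], [0.92], [0.55], [0.49], [0.80]]   # hypothetical model outputs
y_true = [0, 1, 0, 0, 1]
classes = to_classes(probs)
print(classes)                     # [0, 1, 1, 0, 1]
print(accuracy(y_true, classes))   # 0.8
```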

# Display a prediction
ResultDict = {1: 'positive', 0: 'negative'}

def display_test_Result(i):
    print(test_text[i])
    print('True label:', ResultDict[y_test[i]], 'Prediction:', ResultDict[predict_classes[i]])

display_test_Result(2)
Alejandro (Alejandro Polanco), called Ale for short, works at an auto-body repair shop in what has come to be known as the Iron Triangle, a deteriorating twenty block stretch of auto junk yards and sleazy car repair dealers close to Shea Stadium in Queens, New York. Here customers do not question whether or not parts come from stolen cars or why they are able to receive such large discounts, they simply put down their cash and hope that everything is on the up and up. Sleazy outskirts like these are not highlighted in the tour guides but Iranian-American director Ramin Bahrani puts them on vivid display in Chop Shop, a powerful Indie film that received much affection last year at Cannes, Berlin, and Toronto. A follow up to his acclaimed "Man Push Cart", Bahrani spent one and a half years in the location that F. Scott Fitzgerald described as in the Great Gatsby as "the valley of the ashes". For all its depiction of bleakness, Chop Shop is not a work of social criticism but, like Hector Babenco's Pixote, a poignant character study in which a young boy's survival is bought at the price of his innocence. Shot on location at Willets Point in Queens, Bahrani makes you feel as if you are there, sweating in a hot and humid New York summer with all of its noise and chaos. The film's focus is on the charming, street-smart 12-year-old Ale who lives on the edge without any adult support or supervision other than his boss (Rob Sowulski), the real-life proprietor of the Iron Triangle garage. Polanco's performance is raw and slightly ragged yet he fully earned the standing ovation he received at the film's premiere at Cannes along with a hug from great Iranian director Abbas Kiarostami. Cramped into a tiny room above the garage together with his 16-year-old sister Isamar (Isamar Gonzales) who works dispensing food from a lunch wagon, Ale is like one of the interchangeable spare parts he deals with. While he has dreams of owning his own food-service van, in the city that never sleeps, he knows that the only thing that may make the "top of the heap" is another dented fender. In this environment, Ale and Isi use any means necessary to keep their heads above water while their love for each other remains constant and they still laugh and act out the childhood that was never theirs. As Barack Obama says in his book "Dreams From My Father", the change may come later when their eyes stop laughing and they have shut off something inside. In the meantime, Ale supplements his earnings by selling candy bars in the crowded New York subways with his friend Carlos (Carlos Zapata) and pushing bootleg DVDs on the street corners, while Isi does tricks for the truck drivers to save enough money to buy the rusted $4500 van in which they hope to start their own business. Though Ale is a "good boy", he is not above stealing purses and hubcaps in the Shea Stadium parking lot, events that Bahrani's camera observes without judgment. In Chop Shop, Bahrani has provided a compelling antidote to the underdog success stories churned out by the Hollywood dream factory, and has given us a film of stunning naturalism and respect for its characters, similar in many ways to the great Italian neo-realist films and the recent Iranian works of Kiarostami, Panahi, and others. While the outcome of the characters is far from certain, Bahrani makes sure that we notice a giant billboard at Shea Stadium that reads, "Make dreams happen", leaving us with the hint that, in Rumi's phrase, "the drum of the realization of that promise is beating,"
True label: positive Prediction: positive
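`display_test_Result` is most informative on reviews the model gets wrong. Here is a small sketch for listing those indices, shown on toy label lists. In the notebook the inputs would be `y_test` and `predict_classes`. `misclassified` is a hypothetical helper name.

```python
def misclassified(y_true, y_pred, limit=None):
    """Indices where the predicted class differs from the true label."""
    wrong = [i for i, (t, p) in enumerate(zip(y_true, y_pred)) if t != p]
    return wrong if limit is None else wrong[:limit]

y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0]
print(misclassified(y_true, y_pred))   # [1, 3]
```

In the notebook it could be combined with the display function as `for i in misclassified(y_test, predict_classes, limit=3): display_test_Result(i)`.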

2. RNN model

from keras.models import Sequential
from keras.layers import Dense, Dropout, Activation, Embedding, SimpleRNN

model2 = Sequential()
model2.add(Embedding(output_dim=32, input_dim=2000, input_length=100))
model2.add(Dropout(0.2))
model2.add(SimpleRNN(units=16))
model2.add(Dense(units=256, activation='relu'))
model2.add(Dropout(0.35))
model2.add(Dense(units=1, activation='sigmoid'))
model2.summary()
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
embedding_2 (Embedding)      (None, 100, 32)           64000
_________________________________________________________________
dropout_3 (Dropout)          (None, 100, 32)           0
_________________________________________________________________
simple_rnn_1 (SimpleRNN)     (None, 16)                784
_________________________________________________________________
dense_3 (Dense)              (None, 256)               4352
_________________________________________________________________
dropout_4 (Dropout)          (None, 256)               0
_________________________________________________________________
dense_4 (Dense)              (None, 1)                 257
=================================================================
Total params: 69,393
Trainable params: 69,393
Non-trainable params: 0
_________________________________________________________________
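SimpleRNN reads the 100 word vectors one at a time and updates a 16-value hidden state, h_t = tanh(x_t·W + h_{t-1}·U + b). Only the final state is passed on to the Dense layer. That gives 32×16 input weights, 16×16 recurrent weights and 16 biases, i.e. the 784 parameters in the summary. The sketch below runs that recurrence in pure Python with tiny made-up sizes and weights. It is meant to show the mechanics, not to reproduce Keras's implementation.

```python
import math

def simple_rnn(sequence, W, U, b):
    """Run h_t = tanh(x_t W + h_{t-1} U + b) over a sequence; return the last h."""
    units = len(b)
    h = [0.0] * units                                  # initial state is all zeros
    for x in sequence:
        # The comprehension reads the previous h throughout, then rebinds h
        h = [math.tanh(sum(x[i] * W[i][j] for i in range(len(x)))
                       + sum(h[k] * U[k][j] for k in range(units))
                       + b[j])
             for j in range(units)]
    return h

def simple_rnn_params(input_dim, units):
    return input_dim * units + units * units + units   # W + U + b

# Toy example (made-up weights): 3 time steps, 2 input features, 2 units
seq = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W = [[0.5, -0.5], [0.25, 0.75]]
U = [[0.1, 0.0], [0.0, 0.1]]
b = [0.0, 0.0]
print(simple_rnn(seq, W, U, b))
print(simple_rnn_params(32, 16))                        # 784, as for simple_rnn_1
```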

model2.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
train_history = model2.fit(x_train, y_train, batch_size=100, epochs=10, verbose=2, validation_split=0.2)
Train on 20000 samples, validate on 5000 samples
Epoch 1/10 - 8s - loss: 0.5687 - acc: 0.6943 - val_loss: 0.4590 - val_acc: 0.7952
Epoch 2/10 - 8s - loss: 0.3595 - acc: 0.8494 - val_loss: 0.6334 - val_acc: 0.7270
Epoch 3/10 - 7s - loss: 0.3031 - acc: 0.8767 - val_loss: 0.5557 - val_acc: 0.7578
Epoch 4/10 - 8s - loss: 0.2679 - acc: 0.8920 - val_loss: 0.5127 - val_acc: 0.7734
Epoch 5/10 - 8s - loss: 0.2313 - acc: 0.9099 - val_loss: 0.6909 - val_acc: 0.7290
Epoch 6/10 - 8s - loss: 0.1955 - acc: 0.9260 - val_loss: 0.5649 - val_acc: 0.8096
Epoch 7/10 - 8s - loss: 0.1662 - acc: 0.9392 - val_loss: 0.7401 - val_acc: 0.7702
Epoch 8/10 - 7s - loss: 0.1487 - acc: 0.9431 - val_loss: 0.8676 - val_acc: 0.7514
Epoch 9/10 - 7s - loss: 0.1274 - acc: 0.9518 - val_loss: 0.8110 - val_acc: 0.7620
Epoch 10/10 - 7s - loss: 0.1063 - acc: 0.9596 - val_loss: 0.9075 - val_acc: 0.7654

scores = model2.evaluate(x_test, y_test, verbose=1)
scores[1]  # accuracy on the test set
25000/25000 [==============================] - 14s 543us/step
0.81376

3. LSTM model

from keras.models import Sequential
from keras.layers import Dense, Dropout, Activation, Flatten, Embedding, LSTM

model3 = Sequential()
model3.add(Embedding(output_dim=32, input_dim=2000, input_length=100))
model3.add(Dropout(0.2))
model3.add(LSTM(32))
model3.add(Dense(units=256, activation='relu'))
model3.add(Dropout(0.35))
model3.add(Dense(units=1, activation='sigmoid'))
model3.summary()
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
embedding_3 (Embedding)      (None, 100, 32)           64000
_________________________________________________________________
dropout_5 (Dropout)          (None, 100, 32)           0
_________________________________________________________________
lstm_1 (LSTM)                (None, 32)                8320
_________________________________________________________________
dense_5 (Dense)              (None, 256)               8448
_________________________________________________________________
dropout_6 (Dropout)          (None, 256)               0
_________________________________________________________________
dense_6 (Dense)              (None, 1)                 257
=================================================================
Total params: 81,025
Trainable params: 81,025
Non-trainable params: 0
_________________________________________________________________
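An LSTM layer has four gates (input, forget, cell candidate, output). Each gate has its own input weights, recurrent weights and biases, laid out as in a plain recurrent layer. The parameter count is therefore 4 × (input_dim×units + units×units + units). With 32 inputs and 32 units that is 4 × (1,024 + 1,024 + 32) = 8,320, matching `lstm_1`. The following Dense layer adds 32×256 + 256 = 8,448. Below is a quick check of that arithmetic; the helper names are illustrative.

```python
def gate_params(input_dim, units):
    """One gate: input weights + recurrent weights + bias."""
    return input_dim * units + units * units + units

def lstm_params(input_dim, units):
    return 4 * gate_params(input_dim, units)   # input, forget, cell and output gates

def dense_params(n_inputs, units):
    return n_inputs * units + units

layers = {
    "embedding_3": 2000 * 32,
    "lstm_1": lstm_params(32, 32),
    "dense_5": dense_params(32, 256),
    "dense_6": dense_params(256, 1),
}
print(layers)
print(sum(layers.values()))   # 81025
```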

model3.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
train_history = model3.fit(x_train, y_train, batch_size=100, epochs=10, verbose=2, validation_split=0.2)
Train on 20000 samples, validate on 5000 samples
Epoch 1/10 - 23s - loss: 0.4918 - acc: 0.7517 - val_loss: 0.4642 - val_acc: 0.7784
Epoch 2/10 - 22s - loss: 0.3222 - acc: 0.8630 - val_loss: 0.5723 - val_acc: 0.7286
Epoch 3/10 - 23s - loss: 0.2948 - acc: 0.8775 - val_loss: 0.4594 - val_acc: 0.7948
Epoch 4/10 - 23s - loss: 0.2806 - acc: 0.8851 - val_loss: 0.4512 - val_acc: 0.8052
Epoch 5/10 - 22s - loss: 0.2688 - acc: 0.8902 - val_loss: 0.5428 - val_acc: 0.7654
Epoch 6/10 - 22s - loss: 0.2501 - acc: 0.8986 - val_loss: 0.5244 - val_acc: 0.7728
Epoch 7/10 - 22s - loss: 0.2378 - acc: 0.9049 - val_loss: 0.4907 - val_acc: 0.7960
Epoch 8/10 - 22s - loss: 0.2226 - acc: 0.9121 - val_loss: 0.4050 - val_acc: 0.8260
Epoch 9/10 - 22s - loss: 0.2110 - acc: 0.9160 - val_loss: 0.6225 - val_acc: 0.7624
Epoch 10/10 - 23s - loss: 0.2005 - acc: 0.9228 - val_loss: 0.5776 - val_acc: 0.7780

scores = model3.evaluate(x_test, y_test, verbose=1)
scores[1]  # accuracy on the test set
25000/25000 [==============================] - 27s 1ms/step
0.8324