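day07 - [Mall Business]

Table of Contents

- 1. Elasticsearch
- 2. Integrating Elasticsearch with Spring Boot
- 2.1 Setting up the elasticsearch module
- 2.2 Writing the configuration file: bootstrap.properties
- 2.3 Writing the configuration class: GuliESConfig
- 2.4 Testing
- 2.4.1 Testing saving data: indexData
- 2.4.2 Testing retrieving data: findData
- 2.4.3 Testing a complex query: findDataPlus
- 2.4.4 Mapping search results to a Java bean
- 3. Mall Business
- 3.1 Product listing (putting products on sale)
- 3.1.1 Creating the data model
- 3.1.2 Product listing endpoint
- 3.1.3 Querying all SKU information
- 3.1.4 Picking out searchable attributes
- 3.1.5 Checking stock availability
- 3.1.6 Sending the data to ES for storage
- 3.1.7 Updating the SPU status after listing
- 4. Mall Business: Storefront
- 4.1 Home page
- 4.1.1 Fetching level-1 categories
- 4.1.2 Fetching level-2 and level-3 categories
- 4.2 Load balancing to the gateway
- 4.3 JMeter stress testing
- 4.4 Performance metrics
- 4.5 jvisualvm
- 4.6 Monitoring metrics
- 4.7
- 5. Caching and Distributed Locks
- 5.1 Integrating Redis as a cache
- 5.2 Cache failure problems
- 5.2.1 Cache penetration
- 5.2.2 Cache avalanche
- 5.2.3 Cache breakdown
- 5.3 Using locks to solve cache breakdown
- 5.4.1 Distributed lock, evolution 1
- 5.4.2 Distributed lock, evolution 2
- 5.4.3 Distributed lock, evolution 3
- 5.4.4 Distributed lock, evolution 4
- 5.4.5 Distributed lock, evolution 5
- 5.4.6 Distributed lock, evolution 6
- 5.4 Distributed locks: Redisson
- 5.4.1 Configuring Redisson
- 5.4.2 Reentrant lock: Redisson Lock
- 5.4.3 Redisson: read-write lock
- 5.4.4 Redisson: CountDownLatch test
- 5.4.5 Redisson: semaphore test
- 5.4.5 Redisson: solving cache consistency
- 6. SpringCache
- 6.1 SpringCache introduction
- 6.2 SpringCache configuration
- 6.3 Saving data as JSON
- 6.4 SpringCache: how it works and its shortcomings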

1. Elasticsearch

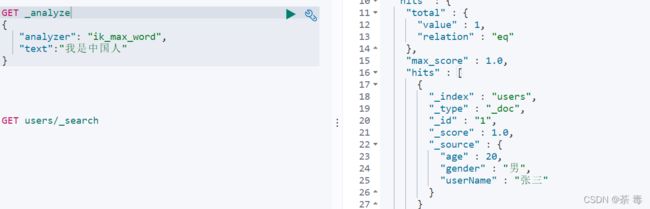

Elasticsearch: full-text search

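2. Integrating Elasticsearch with Spring Boot

2.1 Setting up the elasticsearch module

Add the Java High Level REST Client dependency:

<dependency>
    <groupId>org.elasticsearch.client</groupId>
    <artifactId>elasticsearch-rest-high-level-client</artifactId>
    <version>7.4.2</version>
</dependency>

Spring Boot's dependency management pins its own Elasticsearch version. Override the elasticsearch.version property so the transitive Elasticsearch artifacts use the same 7.4.2 version as the client:

<properties>
    <java.version>1.8</java.version>
    <elasticsearch.version>7.4.2</elasticsearch.version>
</properties>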

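RequestOptions holds per-request settings. For example, if security rules are enabled on Elasticsearch and every request must carry an authentication header, you can set that header through RequestOptions.

The official documentation recommends building RequestOptions once and sharing that single instance across all requests.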

2.2 Writing the configuration file: bootstrap.properties

Create a namespace in Nacos: elasticsearch

nacos.yml

spring:
  cloud:
    nacos:
      discovery:
        server-addr: 124.223.14.248:8848
  application:
    name: firefly-elasticsearch
server:
  port: 13000

firefly-elasticsearch.yml

springBoot:
  elasticsearch:
    hostName: 124.223.14.248
    port: 9200
    scheme: http

2.3 Writing the configuration class: GuliESConfig

GuliESConfig

package com.firefly.fireflymall.elasticsearch.config;

import org.apache.http.HttpHost;

import org.elasticsearch.client.RequestOptions;

import org.elasticsearch.client.RestClient;

import org.elasticsearch.client.RestClientBuilder;

import org.elasticsearch.client.RestHighLevelClient;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

/**

* @author Michale @EMail:[email protected]

* @Date: 2022/1/18 19:00

* @Name GuliESConfig

* @Description:

*/

@Configuration

public class GuliESConfig {

public static final RequestOptions COMMON_OPTIONS;

static {

RequestOptions.Builder builder = RequestOptions.DEFAULT.toBuilder();

COMMON_OPTIONS = builder.build();

}

// Register a RestHighLevelClient bean in the Spring container

@Bean

public RestHighLevelClient esRestClient() {

RestClientBuilder builder = RestClient.builder(new HttpHost("124.223.14.248", 9200, "http"));

RestHighLevelClient client = new RestHighLevelClient(builder);

return client;

}

}

Test: contextLoads

@RunWith(SpringJUnit4ClassRunner.class)

@SpringBootTest

public class FireflyElasticsearchApplicationTests {

@Autowired

private RestHighLevelClient client;

@Test

public void contextLoads() {

System.out.println( client);

}

}

org.elasticsearch.client.RestHighLevelClient@2cd388f5

2.4 Testing

2.4.1 Testing saving data: indexData

@Test

public void indexData() throws IOException {

@Data

class User {

private String userName;

private int age;

private String gender;

}

// Target index: users

IndexRequest indexRequest = new IndexRequest("users");

indexRequest.id("1");

User user = new User();

user.setUserName("张三");

user.setAge(20);

user.setGender("男");

String jsonString = JSON.toJSONString(user);

// Set the document to save and its content type

indexRequest.source(jsonString, XContentType.JSON);

// Execute synchronously: creates the index if it does not exist yet, then saves the document

IndexResponse index = client.index(indexRequest, GuliESConfig.COMMON_OPTIONS);

System.out.println(index);

}

IndexResponse[index=users,type=_doc,id=1,version=1,result=created,seqNo=0,primaryTerm=1,shards={"total":2,"successful":1,"failed":0}]

2.4.2 Testing retrieving data: findData

@Test

public void findData() throws IOException {

// 1. Create the search request

SearchRequest searchRequest = new SearchRequest();

// 2. Specify the index

searchRequest.indices("bank");

// 3. Build the search conditions

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

//sourceBuilder.query();

//sourceBuilder.from();

//sourceBuilder.size();

//sourceBuilder.aggregation();

sourceBuilder.query(QueryBuilders.matchQuery("address", "mill"));

System.out.println(sourceBuilder.toString());

searchRequest.source(sourceBuilder);

// 4. Execute the search

SearchResponse response = client.search(searchRequest, GuliESConfig.COMMON_OPTIONS);

// 5. Analyze the response

System.out.println(response.toString());

}

2.4.3 Testing a complex query: findDataPlus

@Test

public void findDataPlus() throws IOException {

// 1. Create the search request

SearchRequest searchRequest = new SearchRequest();

// 2. Specify the index

searchRequest.indices("bank");

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

// 3. Build the search conditions

// sourceBuilder.query();

// sourceBuilder.from();

// sourceBuilder.size();

// sourceBuilder.aggregation();

sourceBuilder.query(QueryBuilders.matchQuery("address", "mill"));

// Use the AggregationBuilders helper class to build AggregationBuilder instances

// 3.1 First aggregation: distribution of the age values

TermsAggregationBuilder agg1 = AggregationBuilders.terms("agg1").field("age").size(10); // "agg1" is the aggregation name

// 3.2 aggregation() takes an AggregationBuilder

sourceBuilder.aggregation(agg1);

// 3.3 Second aggregation: average balance

AvgAggregationBuilder agg2 = AggregationBuilders.avg("agg2").field("balance");

sourceBuilder.aggregation(agg2);

System.out.println("Search conditions: " + sourceBuilder.toString());

searchRequest.source(sourceBuilder);

// 4. Execute the search

SearchResponse response = client.search(searchRequest, GuliESConfig.COMMON_OPTIONS);

// 5. Analyze the response

System.out.println(response.toString());

}

Search conditions: {"query":{"match":{"address":{"query":"mill","operator":"OR","prefix_length":0,"max_expansions":50,"fuzzy_transpositions":true,"lenient":false,"zero_terms_query":"NONE","auto_generate_synonyms_phrase_query":true,"boost":1.0}}},"aggregations":{"agg1":{"terms":{"field":"age","size":10,"min_doc_count":1,"shard_min_doc_count":0,"show_term_doc_count_error":false,"order":[{"_count":"desc"},{"_key":"asc"}]}},"agg2":{"avg":{"field":"balance"}}}}

{"took":7,"timed_out":false,"_shards":{"total":1,"successful":1,"skipped":0,"failed":0},"hits":{"total":{"value":4,"relation":"eq"},"max_score":5.4032025,"hits":[{"_index":"bank","_type":"account","_id":"970","_score":5.4032025,"_source":{"account_number":970,"balance":19648,"firstname":"Forbes","lastname":"Wallace","age":28,"gender":"M","address":"990 Mill Road","employer":"Pheast","email":"[email protected]","city":"Lopezo","state":"AK"}},{"_index":"bank","_type":"account","_id":"136","_score":5.4032025,"_source":{"account_number":136,"balance":45801,"firstname":"Winnie","lastname":"Holland","age":38,"gender":"M","address":"198 Mill Lane","employer":"Neteria","email":"[email protected]","city":"Urie","state":"IL"}},{"_index":"bank","_type":"account","_id":"345","_score":5.4032025,"_source":{"account_number":345,"balance":9812,"firstname":"Parker","lastname":"Hines","age":38,"gender":"M","address":"715 Mill Avenue","employer":"Baluba","email":"[email protected]","city":"Blackgum","state":"KY"}},{"_index":"bank","_type":"account","_id":"472","_score":5.4032025,"_source":{"account_number":472,"balance":25571,"firstname":"Lee","lastname":"Long","age":32,"gender":"F","address":"288 Mill Street","employer":"Comverges","email":"[email protected]","city":"Movico","state":"MT"}}]},"aggregations":{"avg#agg2":{"value":25208.0},"lterms#agg1":{"doc_count_error_upper_bound":0,"sum_other_doc_count":0,"buckets":[{"key":38,"doc_count":2},{"key":28,"doc_count":1},{"key":32,"doc_count":1}]}}}
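You can reproduce the two aggregation results by hand from the four hits in the response. This is a minimal stdlib sketch, not part of the project. The `Hit` class is a hypothetical holder for the age and balance values copied from the hits above. It recomputes the terms aggregation on `age` and the average of `balance`:

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

public class BankAggSketch {

    // Hypothetical holder for the two fields the aggregations read
    static final class Hit {
        final int age;
        final int balance;

        Hit(int age, int balance) {
            this.age = age;
            this.balance = balance;
        }
    }

    // age and balance of the four "mill" hits in the response above
    static final List<Hit> HITS = Arrays.asList(
            new Hit(28, 19648), new Hit(38, 45801), new Hit(38, 9812), new Hit(32, 25571));

    // Like terms("agg1").field("age"): doc count per age, sorted by count desc, then key asc
    static Map<Integer, Long> termsByAge(List<Hit> hits) {
        Map<Integer, Long> counts = new TreeMap<>(); // key-ascending iteration order
        for (Hit h : hits) {
            counts.merge(h.age, 1L, Long::sum);
        }
        // sorted() is stable, so equal counts keep the key-ascending order of the TreeMap
        return counts.entrySet().stream()
                .sorted(Map.Entry.<Integer, Long>comparingByValue().reversed())
                .collect(Collectors.toMap(Map.Entry::getKey, Map.Entry::getValue,
                        (a, b) -> a, LinkedHashMap::new));
    }

    // Like avg("agg2").field("balance")
    static double avgBalance(List<Hit> hits) {
        return hits.stream().mapToInt(h -> h.balance).average().orElse(Double.NaN);
    }

    public static void main(String[] args) {
        System.out.println(termsByAge(HITS)); // {38=2, 28=1, 32=1}
        System.out.println(avgBalance(HITS)); // 25208.0
    }
}
```

Ages 28 and 32 both have one document. They are ordered by key ascending, which matches the `"order":[{"_count":"desc"},{"_key":"asc"}]` shown in the printed search conditions.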

2.4.4 Mapping search results to a Java bean

Create the Java bean

package com.firefly.fireflymall.elasticsearch.pojo;

import lombok.Data;

/**
 * One document of the bank index. The class skeleton was generated with the json.cn JSON-to-POJO tool.
 * Lombok's @Data already generates the getters, setters, equals/hashCode and toString,
 * so the hand-written accessors produced by the generator are not needed.
 */
@Data
public class Account {
    private int account_number;
    private String firstname;
    private String address;
    private int balance;
    private String gender;
    private String city;
    private String employer;
    private String state;
    private int age;
    private String email;
    private String lastname;
}

Create the test method

@Test

public void findDetile() throws IOException {

// 1. Create the search request

SearchRequest searchRequest = new SearchRequest();

// 2. Specify the index

searchRequest.indices("bank");

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

// 3. Build the search conditions

// sourceBuilder.query();

// sourceBuilder.from();

// sourceBuilder.size();

// sourceBuilder.aggregation();

sourceBuilder.query(QueryBuilders.matchQuery("address", "mill"));

// Use the AggregationBuilders helper class to build AggregationBuilder instances

// 3.1 First aggregation: distribution of the age values

TermsAggregationBuilder aggOne = AggregationBuilders.terms("aggOne").field("age").size(10); // "aggOne" is the aggregation name

// 3.2 aggregation() takes an AggregationBuilder

sourceBuilder.aggregation(aggOne);

// 3.3 Second aggregation: average balance

AvgAggregationBuilder aggTwo= AggregationBuilders.avg("aggTwo").field("balance");

sourceBuilder.aggregation(aggTwo);

searchRequest.source(sourceBuilder);

// 4. Execute the search

SearchResponse response = client.search(searchRequest, GuliESConfig.COMMON_OPTIONS);

// 5. Analyze the response: map each hit's _source to an Account

SearchHits hits = response.getHits();

SearchHit[] hits1 = hits.getHits();

for (SearchHit hit : hits1) {

// Hit metadata such as hit.getId() and hit.getIndex() is also available here

String sourceAsString = hit.getSourceAsString();

Account account = JSON.parseObject(sourceAsString, Account.class);

System.out.println(account);

}

}

Then analyze the aggregation results as well (add this inside findDetile, after the hit loop):

// 5.2 Get the aggregations returned by the search and list their names
Aggregations aggregations = response.getAggregations();
List<Aggregation> aggregationList = aggregations.asList();
for (Aggregation aggregation : aggregationList) {
    String aggName = aggregation.getName();
    System.out.println("Aggregation name: " + aggName);
}

Aggregation name: aggOne

Aggregation name: aggTwo

// 5.3 Read the buckets of the terms aggregation "aggOne"
Terms ageAgg = aggregations.get("aggOne");
for (Terms.Bucket bucket : ageAgg.getBuckets()) {
    String keyAsString = bucket.getKeyAsString();
    System.out.println("Age: " + keyAsString + " ====> count: " + bucket.getDocCount());
}

Age: 38 ====> count: 2

Age: 28 ====> count: 1

Age: 32 ====> count: 1

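3. Mall Business

3.1 Product listing (putting products on sale)

http://localhost:88/api/product/spuinfo/{spuId}/up

Listing a product saves the SPU's SKU data to ES and updates the SPU's publish status. SpuInfoEntity does not match the data model of the index, so we create a dedicated transfer object for the data sent to ES.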

3.1.1 Creating the data model

SkuEsModel

package com.firefly.common.to.es;

import lombok.Data;

import java.math.BigDecimal;

import java.util.List;

@Data

public class SkuEsModel {

private Long skuId;

private Long spuId;

private String skuTitle;

private BigDecimal skuPrice;

private String skuImg;

private Long saleCount;

private boolean hasStock;

private Long hotScore;

private Long brandId;

private Long catalogId;

private String brandName;

private String brandImg;

private String catalogName;

private List<Attr> attrs;

@Data

public static class Attr{

private Long attrId;

private String attrName;

private String attrValue;

}

}

3.1.2 Product listing endpoint

SpuInfoController

/**

* Put the SPU on sale

*

* @param spuId

* @return

*/

@GetMapping("/{spuId}/up")

public R up(@PathVariable("spuId") Long spuId) {

spuInfoService.up(spuId);

return R.ok();

}

SpuInfoServiceImpl

@Override

public void up(Long spuId) {

// 1. Query all SKUs of the current spuId (brand names are looked up per SKU below)

List<SkuInfoEntity> skuInfoEntities = skuInfoService.getSkuBySpuId(spuId);

List<Long> skuIdList = skuInfoEntities.stream().map(SkuInfoEntity::getSkuId).collect(Collectors.toList());

// All specification attributes of this SPU (shared by its SKUs)

List<ProductAttrValueEntity> baseAttrs = attrService.getSpuSpecification(spuId);

List<Long> attrIds = baseAttrs.stream().map(attr -> {

return attr.getAttrId();

}).collect(Collectors.toList());

// IDs of the attributes among them that are searchable (search_type = 1)

List<Long> searchAttrIds = attrService.selectSearchAttrIds(attrIds);

// Put the IDs in a Set for fast lookups while filtering

Set<Long> idSet = new HashSet<>(searchAttrIds);

List<SkuEsModel.Attr> attrsList = baseAttrs.stream().filter(item -> {

return idSet.contains(item.getAttrId());

}).map(item -> {

SkuEsModel.Attr attrs1 = new SkuEsModel.Attr();

BeanUtils.copyProperties(item, attrs1);

return attrs1;

}).collect(Collectors.toList());

// Remote call to the ware service to check whether each SKU has stock

Map<Long, Boolean> sotckMap = null;

try {

R r = wareFeignService.getSkuHasStock(skuIdList);

TypeReference<List<SkuHasStockTo>> typeReference = new TypeReference<List<SkuHasStockTo>>() {

};

sotckMap = r.getData(typeReference).stream().collect(Collectors.toMap(SkuHasStockTo::getSkuId, item -> item.getHasStock()));

} catch (Exception e) {

log.error(FEIGN_WARE_STOCK_FAIL, e);

}

// 2. Assemble one SkuEsModel per SKU

Map<Long, Boolean> finalSotckMap = sotckMap;

List<SkuEsModel> collect = skuInfoEntities.stream().map(sku -> {

SkuEsModel skuEsModel = new SkuEsModel();

BeanUtils.copyProperties(sku, skuEsModel);

skuEsModel.setSkuPrice(sku.getPrice());

skuEsModel.setSkuImg(sku.getSkuDefaultImg());

// A failed remote call (null map) or a SKU missing from the response both count as out of stock

skuEsModel.setHasStock(finalSotckMap != null && finalSotckMap.getOrDefault(sku.getSkuId(), false));

// Hot score (simplified: always 0 for now)

skuEsModel.setHotScore(0L);

BrandEntity brandEntity = brandService.getById(skuEsModel.getBrandId());

skuEsModel.setBrandName(brandEntity.getName());

skuEsModel.setBrandImg(brandEntity.getLogo());

CategoryEntity categoryEntity = categoryService.getById(skuEsModel.getCatalogId());

skuEsModel.setCatalogName(categoryEntity.getName());

// Set the searchable attributes

skuEsModel.setAttrs(attrsList);

return skuEsModel;

}).collect(Collectors.toList());

// Send the models to the search service, which saves them to ES

R r = searchFeignService.productSaveService(collect);

Integer code = r.getCode();

if (code == GOODS_SHELF_SUCCESS.getCode()) {

// Success: update the SPU's publish status (upSpuStatus is the method declared in SpuInfoDao)

baseMapper.upSpuStatus(spuId, ProductContant.GoodsShelf.GOODS_SHELF_SUCCESS.getCode());

} else {

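// Failure

// TODO: make the endpoint idempotent, because of the retry mechanism

/* How a Feign call works:

 * 1. Build the request data, serializing the object to JSON

 * 2. Send the request

 * 3. Failed executions may be retried, so the same listing request can arrive more than once

 */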

}

}
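The stock lookup in up() turns the list returned by the ware service into a skuId -> hasStock map. It has to handle two gaps: the remote call failing, which leaves the map null, and a SKU missing from the response. Below is a stdlib sketch of that defaulting rule. The `SkuStock` class is a hypothetical stand-in for SkuHasStockTo:

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

public class StockMapSketch {

    // Hypothetical stand-in for SkuHasStockTo
    static final class SkuStock {
        final Long skuId;
        final Boolean hasStock;

        SkuStock(Long skuId, Boolean hasStock) {
            this.skuId = skuId;
            this.hasStock = hasStock;
        }
    }

    // Collectors.toMap throws on duplicate keys and on null values, so the remote data must be clean
    static Map<Long, Boolean> toStockMap(List<SkuStock> remote) {
        return remote.stream().collect(Collectors.toMap(s -> s.skuId, s -> s.hasStock));
    }

    // A failed remote call (null map) or a SKU absent from the response both mean "no stock"
    static boolean hasStock(Map<Long, Boolean> stockMap, Long skuId) {
        return stockMap != null && stockMap.getOrDefault(skuId, false);
    }

    public static void main(String[] args) {
        Map<Long, Boolean> stockMap = toStockMap(Arrays.asList(
                new SkuStock(1L, true), new SkuStock(2L, false)));
        System.out.println(hasStock(stockMap, 1L)); // true
        System.out.println(hasStock(stockMap, 3L)); // false: SKU not in the response
        System.out.println(hasStock(null, 1L));     // false: remote call failed
    }
}
```

Defaulting with getOrDefault avoids unboxing a null Boolean into the primitive `boolean hasStock` field of SkuEsModel, which would throw a NullPointerException.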

3.1.3 Querying all SKU information

Query all SKUs that belong to the current spuId

/**

* Query all SKUs that belong to the given spuId

*

* @param spuId

* @return

*/

@Override

public List<SkuInfoEntity> getSkuBySpuId(Long spuId) {

// Select the SKUs whose spu_id matches

QueryWrapper<SkuInfoEntity> queryWrapper = new QueryWrapper<>();

queryWrapper.eq("spu_id", spuId);

List<SkuInfoEntity> list = this.list(queryWrapper);

return list;

}

3.1.4 Picking out searchable attributes

From the given attribute IDs, keep only those marked as searchable

AttrServiceImpl

/**

* From the given attribute IDs, keep only those marked as searchable

*

* @param attrIds

* @return

*/

@Override

public List<Long> selectSearchAttrIds(List<Long> attrIds) {

return baseMapper.selectSearchAttrIds(attrIds);

}

AttrDao

List<Long> selectSearchAttrIds(@Param("attrIds") List<Long> attrIds);

AttrDao.xml

<select id="selectSearchAttrIds" resultType="java.lang.Long">
    <!-- attrIds must not be empty, otherwise the generated "IN ()" is invalid SQL -->
    SELECT attr_id FROM `pms_attr` WHERE attr_id IN
    <foreach collection="attrIds" item="id" separator="," open="(" close=")">
        #{id}
    </foreach>
    AND search_type = 1
</select>
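Back in up(), the IDs returned by this query act as a whitelist: each base attribute of the SPU is kept only if its ID is in that set. Below is a minimal stdlib sketch of the filtering step. The `ProductAttr` type is a hypothetical simplification of ProductAttrValueEntity and SkuEsModel.Attr, and the sample data is made up:

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;

public class SearchAttrFilterSketch {

    // Hypothetical stand-in for ProductAttrValueEntity / SkuEsModel.Attr
    static final class ProductAttr {
        final Long attrId;
        final String attrName;
        final String attrValue;

        ProductAttr(Long attrId, String attrName, String attrValue) {
            this.attrId = attrId;
            this.attrName = attrName;
            this.attrValue = attrValue;
        }
    }

    // Keep only the attributes whose id the query reported as searchable (search_type = 1)
    static List<ProductAttr> keepSearchable(List<ProductAttr> baseAttrs, List<Long> searchAttrIds) {
        Set<Long> idSet = new HashSet<>(searchAttrIds); // constant-time contains() per attribute
        return baseAttrs.stream()
                .filter(attr -> idSet.contains(attr.attrId))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<ProductAttr> baseAttrs = Arrays.asList(
                new ProductAttr(1L, "CPU brand", "Snapdragon"),
                new ProductAttr(2L, "Weight", "180g"),
                new ProductAttr(3L, "Screen size", "6.5 inch"));
        // Pretend selectSearchAttrIds returned ids 1 and 3
        for (ProductAttr attr : keepSearchable(baseAttrs, Arrays.asList(1L, 3L))) {
            System.out.println(attr.attrName + ": " + attr.attrValue);
        }
    }
}
```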

3.1.5 Checking stock availability

Make a remote call to the ware (inventory) service to check whether each SKU has stock

SkuHasStockVo

package com.firefly.fireflymall.ware.vo;

import lombok.Data;

@Data

public class SkuHasStockVo {

/**

* skuId

*/

private Long skuId;

/**

* Whether the SKU has stock

*/

private Boolean hasStock;

}

WareSkuController

@ApiOperation("Check whether SKUs have stock")

@PostMapping("/hasstock")

public R getSkuHasStock(@RequestBody List<Long> skuIds) {

List<SkuHasStockVo> vos = wareSkuService.getSkuHasStock(skuIds);

return R.ok().setData(vos);

}

WareSkuServiceImpl

@Override

public List<SkuHasStockVo> getSkuHasStock(List<Long> skuIds) {

List<SkuHasStockVo> skuHasStockVoList = null;

if (skuIds != null && skuIds.size() > 0) {

skuHasStockVoList = skuIds.stream().map(skuId -> {

SkuHasStockVo skuHasStockVo = new SkuHasStockVo();

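// Available stock = SUM(stock - stock_locked) over all warehouses; SUM returns NULL when the SKU has no rows

String count = baseMapper.getHasStock(skuId);

skuHasStockVo.setSkuId(skuId);

// Only a positive available quantity counts as in stock (a sum of 0 must not count)

skuHasStockVo.setHasStock(count != null && new java.math.BigDecimal(count).signum() > 0);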

return skuHasStockVo;

}).collect(Collectors.toList());

}

return skuHasStockVoList;

}

WareSkuDao.xml

<select id="getHasStock" resultType="java.lang.String">

SELECT SUM(stock-stock_locked) FROM `wms_ware_sku` WHERE sku_id = #{skuId}

</select>
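This query sums `stock - stock_locked` over every warehouse row of the SKU. SUM returns NULL when the SKU has no rows at all, and it can return 0 when all stock is locked. Below is a minimal in-memory sketch of the same rule, showing why a SKU should count as in stock only when the sum exists and is positive. The `WareRow` type is a hypothetical stand-in for a row of wms_ware_sku:

```java
import java.util.Arrays;
import java.util.List;

public class HasStockSketch {

    // Hypothetical stand-in for one row of wms_ware_sku
    static final class WareRow {
        final long skuId;
        final int stock;
        final int stockLocked;

        WareRow(long skuId, int stock, int stockLocked) {
            this.skuId = skuId;
            this.stock = stock;
            this.stockLocked = stockLocked;
        }
    }

    // Mirrors SELECT SUM(stock - stock_locked) ... WHERE sku_id = ?; null when no row matches
    static Long availableStock(List<WareRow> rows, long skuId) {
        Long sum = null;
        for (WareRow r : rows) {
            if (r.skuId == skuId) {
                sum = (sum == null ? 0L : sum) + (r.stock - r.stockLocked);
            }
        }
        return sum;
    }

    // In stock only if at least one row exists and the available quantity is positive
    static boolean hasStock(List<WareRow> rows, long skuId) {
        Long available = availableStock(rows, skuId);
        return available != null && available > 0;
    }

    public static void main(String[] args) {
        List<WareRow> rows = Arrays.asList(
                new WareRow(1L, 10, 3), new WareRow(1L, 5, 5), // sku 1: 7 available
                new WareRow(2L, 4, 4));                        // sku 2: rows exist, 0 available
        System.out.println(hasStock(rows, 1L)); // true
        System.out.println(hasStock(rows, 2L)); // false
        System.out.println(hasStock(rows, 3L)); // false: no rows, SUM would be NULL
    }
}
```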

WareFeignService

package com.firefly.fireflymall.product.feign;

import com.firefly.common.utils.R;

import org.springframework.cloud.openfeign.FeignClient;

import org.springframework.web.bind.annotation.PostMapping;

import org.springframework.web.bind.annotation.RequestBody;

import java.util.List;

/**

* @Author Michale @EMail:[email protected]

* @Date: 2022/1/30 4:26

* @Description: remote calls to the ware (inventory) service

*/

@FeignClient(value = "firefly-ware")

public interface WareFeignService {

/**

* Check whether the given SKUs have stock

*

* @param skuIds

* @return

*/

@PostMapping("/ware/waresku/hasstock")

public R getSkuHasStock(@RequestBody List<Long> skuIds);

}

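Note: the stock query must use POST. The SKU ID list travels in the request body (@RequestBody), and the ware service exposes the endpoint with @PostMapping. A Feign client declared with GET therefore makes the remote call fail.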

3.1.6 Sending the data to ES for storage

SearchFeignService

/**

* @Author Michale @EMail:[email protected]

* @Date: 2022/1/30 10:25

* @Description: product service -> search service: save products to the full-text index

*/

@FeignClient(value = "firefly-elasticsearch")

public interface SearchFeignService {

@PostMapping("/search/save/product")

public R productSaveService(@RequestBody List<SkuEsModel> skuEsModel);

}

EsasticSaveController

package com.firefly.fireflymall.elasticsearch.controller;

import com.firefly.common.to.es.SkuEsModel;

import com.firefly.common.utils.R;

import com.firefly.fireflymall.elasticsearch.service.ProductSaveService;

import io.swagger.annotations.Api;

import io.swagger.annotations.ApiOperation;

import lombok.extern.slf4j.Slf4j;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.web.bind.annotation.PostMapping;

import org.springframework.web.bind.annotation.RequestBody;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

import java.io.IOException;

import java.util.List;

import static com.firefly.common.exception.BizCodeEnum.GOODS_SHELF_FAIL;

import static com.firefly.common.exception.BizCodeEnum.GOODS_SHELF_SUCCESS;

/**

* @Author Michale @EMail:[email protected]

* @Date: 2022/1/30 9:46

* @Description: full-text search

*/

@Slf4j

@Api(description = "Full-text search")

@RestController

@RequestMapping("/search/save")

public class EsasticSaveController {

@Autowired

private ProductSaveService productSaveService;

@ApiOperation(value = "Product listing")

@PostMapping("/product")

public R productSaveService(@RequestBody List<SkuEsModel> skuEsModel) {

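try {

// productStatusUp returns true when at least one bulk item failed

boolean hasFailures = productSaveService.productStatusUp(skuEsModel);

if (hasFailures) {

return R.error(GOODS_SHELF_FAIL.getCode(), GOODS_SHELF_FAIL.getMsg());

}

return R.ok(GOODS_SHELF_SUCCESS.getCode(), GOODS_SHELF_SUCCESS.getMsg());

} catch (IOException e) {

log.error(GOODS_SHELF_FAIL.getMsg(), e);

return R.error(GOODS_SHELF_FAIL.getCode(), GOODS_SHELF_FAIL.getMsg());

}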

}

}

ProductSaveServiceImpl

@Override

public boolean productStatusUp(List<SkuEsModel> skuEsModel) throws IOException {

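// Prerequisite: the product index and its mapping must already exist in ES

BulkRequest bulkRequest = new BulkRequest();

for (SkuEsModel skuEsMode : skuEsModel) {

// Build one index request per SKU in the target index

IndexRequest indexRequest = new IndexRequest(PRODUCT_INDEX);

// Use the skuId as the document id

indexRequest.id(skuEsMode.getSkuId().toString());

String s = JSON.toJSONString(skuEsMode);

// Attach the document body; an index request without a source cannot be executed

indexRequest.source(s, XContentType.JSON);

bulkRequest.add(indexRequest);

}

// Save all documents in one bulk request

BulkResponse bulk = client.bulk(bulkRequest, GuliESConfig.COMMON_OPTIONS);

// TODO: handle the failed items (for example, retry them)

boolean hasFailures = bulk.hasFailures();

if (hasFailures) {

// Collect only the ids of the items that failed

List<String> collect = Arrays.stream(bulk.getItems()).filter(BulkItemResponse::isFailed).map(BulkItemResponse::getId).collect(Collectors.toList());

log.error("{}: {}", GOODS_SHELF_FAIL.getMsg(), collect);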

}

return hasFailures;

}

3.1.7 Updating the SPU status after listing

SpuInfoDao

void upSpuStatus(@Param("spuId") Long spuId, @Param("code") int code);

SpuInfoDao.xml

<update id="upSpuStatus">

UPDATE `pms_spu_info` SET publish_status=#{code} WHERE id =#{spuId}

</update>

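4. Mall Business: Storefront

Add the Thymeleaf dependency, plus the devtools hot-reload dependency so that page changes take effect immediately:

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-thymeleaf</artifactId>
</dependency>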

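4.1 Home page

Copy the index static resources to: fireflymall-product/src/main/resources/static

Copy the index page to: fireflymall-product/src/main/resources/templates

Disable the Thymeleaf cache during development so that changes show up immediately:

thymeleaf.yml

spring:
  thymeleaf:
    cache: false
    suffix: .html # suffix appended to the view name
    prefix: classpath:/templates/ # prefix prepended to the view name

Test: http://localhost:15000/index/css/GL.css

Test: http://localhost:15000

Put the page-rendering controllers in a web package. The original controller package serves the separated front-end/back-end API (used by mobile apps and similar clients), so it can be renamed app, since it serves app clients.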

4.1.1 Fetching level-1 categories

indexController

package com.firefly.fireflymall.product.web;

import org.springframework.ui.Model;

import com.firefly.fireflymall.product.entity.CategoryEntity;

import com.firefly.fireflymall.product.service.CategoryService;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.stereotype.Controller;

import org.springframework.web.bind.annotation.GetMapping;

import java.util.List;

/**

* @Author Michale @EMail:[email protected]

* @Date: 2022/2/4 14:10

* @Description: home page

*/

@Controller

public class indexController {

@Autowired

private CategoryService categoryService;

@GetMapping({"/", "/index.html"})

public String indexPage(Model model) {

List<CategoryEntity> categoryOne = categoryService.getCategoryOne();

model.addAttribute("categorys", categoryOne);

// View resolved as: prefix (classpath:/templates/) + return value + suffix (.html)

return "index";

}

}

CategoryServiceImpl

@Override

public List<CategoryEntity> getCategoryOne() {

QueryWrapper<CategoryEntity> queryWrapper = new QueryWrapper<>();

queryWrapper.eq("parent_cid", 0);

List<CategoryEntity> entityList = baseMapper.selectList(queryWrapper);

return entityList;

}

Declare the Thymeleaf namespace on the html tag:

xmlns:th="http://www.thymeleaf.org"

Render the level-1 categories:

<ul>

<li th:each="category : ${categorys}">

<a href="#" class="header_main_left_a" th:attr="ctg-data=${category.catId}"><b th:text="${category.name}">家用电器11</b></a>

</li>

</ul>

Hot-reload dependency (spring-boot-devtools):

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-devtools</artifactId>
    <optional>true</optional>
</dependency>

4.1.2 Fetching level-2 and level-3 categories

Data wrapper: Catelog2Vo

package com.firefly.fireflymall.product.vo;

import lombok.AllArgsConstructor;

import lombok.Data;

import lombok.NoArgsConstructor;

import java.util.List;

/**

* @Author Michale @EMail:[email protected]

* @Date: 2022/2/4 21:56

* @Description: wraps level-2 and level-3 categories

*/

@NoArgsConstructor

@AllArgsConstructor

@Data

public class Catelog2Vo {

private String catalog1Id; // parent (level-1) category id

private List<Catelog3Vo> catalog3List; // level-3 child categories

private String id;

private String name;

@NoArgsConstructor

@AllArgsConstructor

@Data

public static class Catelog3Vo{

private String catalog2Id; // parent (level-2) category id

private String id;

private String name;

}

}

indexController

@ResponseBody

@GetMapping("/index/catalog.json")

public Map<String, List<Catelog2Vo>> getCatalogJson() {

Map<String, List<Catelog2Vo>> catalogJson = categoryService.getCatalogJson();

return catalogJson;

}


CategoryServiceImpl

@Override

public Map<String, List<Catelog2Vo>> getCatalogJson() {

// 1. Query all level-1 categories

List<CategoryEntity> categoryOne = getCategoryOne();

// 2. Assemble the data: level-1 id -> its level-2 categories

Map<String, List<Catelog2Vo>> collect = categoryOne.stream().collect(Collectors.toMap(k -> k.getCatId().toString(), v -> {

// 2.1 Query the level-2 categories under this level-1 category (one database query per category)

List<CategoryEntity> categoryTwo = baseMapper.selectList(new QueryWrapper<CategoryEntity>().eq("parent_cid", v.getCatId()));

List<Catelog2Vo> catelog2Vos = null;

if (categoryTwo != null) {

// 2.2 Wrap each level-2 category

catelog2Vos = categoryTwo.stream().map(levelTwo -> {

Catelog2Vo catelog2Vo = new Catelog2Vo(v.getCatId().toString(), null, levelTwo.getCatId().toString(), levelTwo.getName().toString());

List<CategoryEntity> categoryThree = baseMapper.selectList(new QueryWrapper<CategoryEntity>().eq("parent_cid", levelTwo.getCatId()));

if (categoryThree != null) {

List<Catelog2Vo.Catelog3Vo> catalog3List = categoryThree.stream().map(levelThree -> {

// 2.3 Wrap each level-3 category

Catelog2Vo.Catelog3Vo catelog3Vo = new Catelog2Vo.Catelog3Vo(levelTwo.getCatId().toString(), levelThree.getCatId().toString(), levelThree.getName().toString());

return catelog3Vo;

}).collect(Collectors.toList());

// Attach the level-3 categories

catelog2Vo.setCatalog3List(catalog3List);

}

return catelog2Vo;

}).collect(Collectors.toList());

}

return catelog2Vos;

}));

return collect;

}

4.2 Load balancing to the gateway

Nginx load-balancing documentation: http://nginx.org/en/docs/http/load_balancing.html

Use SwitchHosts to map the nginx virtual machine's IP to www.fire.flymall.com.

Visiting www.fire.flymall.com then shows the nginx welcome page.

Add the following to the nginx configuration:

upstream fireflymall {
    server 192.168.10.1:88;
}

server {
    listen 80;
    server_name fireflymall.com;

    location / {
        # forward back to the host machine (the gateway on port 88)
        proxy_pass http://fireflymall;
    }
}

Gateway route (Spring Cloud Gateway configuration):

spring:
  cloud:
    gateway:
      routes:
        - id: firefly_host_route
          uri: lb://firefly-product
          predicates:
            - Host=**.fireflymall.com

http://fireflymall.com/api/product/category/list/tree

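Note: this route must be placed after all other routes. Otherwise its Host predicate matches first, and the other routing rules never take effect.

Cause: when Nginx proxies a request to the gateway, it does not forward all of the original request headers unchanged. In particular, by default it replaces the Host header with the upstream name, so the gateway cannot match on the original host.

Fix: modify the Nginx location rule so that it passes the original Host header, by adding "proxy_set_header Host $host;":

proxy_set_header Host $host;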

http://fireflymall/

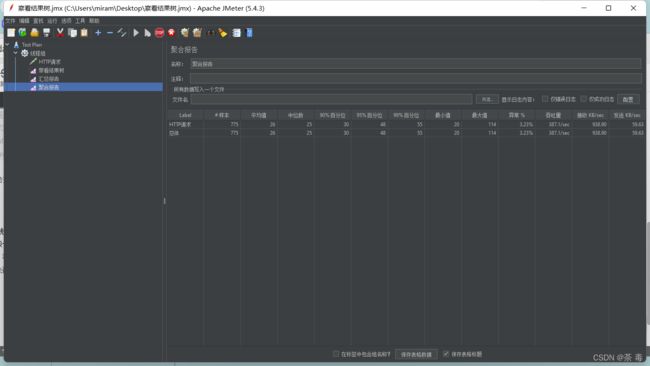

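4.3 JMeter stress testing

Download: https://jmeter.apache.org/download_jmeter.cgi

Stress testing measures the maximum load the system can withstand in the current hardware and software environment, and it helps locate the system's bottlenecks. The goal is to know, with confidence, that the system's processing capacity and stability in production stay within an acceptable range.

Stress testing can also uncover errors that are hard to find with other testing methods. Two such error types are memory leaks, and concurrency and synchronization errors.

An effective stress test applies these key conditions: repetition, concurrency, magnitude, and random variation.

Creating a stress test

1 - Create a test plan and add a thread group

2 - Add > Sampler > HTTP Request

3 - Add > Listener > View Results Tree

4 - Add > Listener > Summary Report

5 - Add > Listener > Aggregate Report

6 - Add > Listener > Aggregate Graph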
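The Summary Report and Aggregate Report listeners show per-request statistics such as average, median, 90% line and throughput. This stdlib sketch illustrates what those columns mean; it is not how JMeter computes them internally. It derives the figures from a hypothetical list of response times and uses a nearest-rank percentile:

```java
import java.util.Arrays;

public class AggregateReportSketch {

    // Nearest-rank percentile on sorted data: smallest sample such that at least p% of samples are <= it
    static long percentile(long[] sortedMillis, int p) {
        int rank = (int) Math.ceil(p * sortedMillis.length / 100.0);
        return sortedMillis[Math.max(rank, 1) - 1];
    }

    static double average(long[] millis) {
        return Arrays.stream(millis).average().orElse(0);
    }

    // Throughput = completed samples / elapsed wall-clock time, expressed per second
    static double throughputPerSecond(int samples, long elapsedMillis) {
        return samples * 1000.0 / elapsedMillis;
    }

    public static void main(String[] args) {
        // Hypothetical response times (ms) of 10 requests that ran over 2 seconds
        long[] latencies = {120, 80, 95, 300, 110, 90, 105, 85, 100, 250};
        long[] sorted = latencies.clone();
        Arrays.sort(sorted);
        System.out.println("average   : " + average(sorted) + " ms");
        System.out.println("median    : " + percentile(sorted, 50) + " ms");
        System.out.println("90% line  : " + percentile(sorted, 90) + " ms");
        System.out.println("throughput: " + throughputPerSecond(latencies.length, 2_000) + " req/s");
    }
}
```

With these sample numbers the median is 100 ms and the 90% line is 250 ms, while the average is 133.5 ms. The percentile columns expose slow outliers that the average smooths over.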

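4.4 Performance metrics

JVM memory model

Heap

All object instances and arrays are allocated on the heap. The heap is the main area managed by the garbage collector (hence also called the "GC heap"), and it is where most tuning effort goes.

Young generation

Eden space

From Survivor space

To Survivor space

Old generation

Permanent generation / Metaspace

Before Java 8, class metadata lived in the permanent generation, which is managed inside the JVM. Since Java 8 it lives in Metaspace, which uses native memory directly. By default, the size of Metaspace is therefore limited only by the available native memory.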

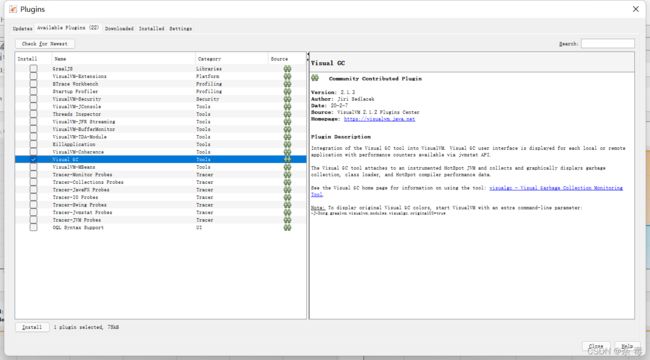
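On a running JVM you can inspect these regions through the standard java.lang.management API; jconsole reads the same MXBeans over JMX. Below is a small stdlib sketch. The pool names it prints depend on the JVM vendor and garbage collector, so the comments only give examples:

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;
import java.util.ArrayList;
import java.util.List;

public class MemoryPoolsSketch {

    // Names of the memory pools of the given type in the running JVM
    static List<String> poolNames(MemoryType type) {
        List<String> names = new ArrayList<>();
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            if (pool.getType() == type) {
                names.add(pool.getName());
            }
        }
        return names;
    }

    public static void main(String[] args) {
        MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
        // getMax() returns -1 when the maximum is undefined
        String max = heap.getMax() < 0 ? "undefined" : heap.getMax() / (1024 * 1024) + " MB";
        System.out.println("heap used: " + heap.getUsed() / (1024 * 1024) + " MB, max: " + max);
        // Heap pools: Eden, Survivor and Old Gen (for example "G1 Eden Space" under G1)
        System.out.println("heap pools:     " + poolNames(MemoryType.HEAP));
        // Non-heap pools: Metaspace (Java 8+), code cache, compressed class space, ...
        System.out.println("non-heap pools: " + poolNames(MemoryType.NON_HEAP));
    }
}
```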

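4.5 jvisualvm

The JDK ships two small tools: jconsole and jvisualvm (an upgraded jconsole). Both are launched from the command line and can monitor local and remote applications; remote applications require extra configuration.

1. What jvisualvm can do

Monitor memory leaks, track garbage collection, and analyze runtime memory, CPU usage and threads.

Thread states shown by jvisualvm:

Running: the thread is running

Sleeping: the thread called sleep

Wait: the thread called wait

Park: parked threads, such as idle threads in a thread pool

Monitor: blocked threads waiting to acquire a lock

2. Install a plugin to view GC activity

Start jvisualvm from cmd

Tools -> Plugins: install Visual GC

If the plugin center returns a 503 error:

Open https://visualvm.github.io/pluginscenters.html

Check your JDK version from cmd (java -version), find the plugin center URL that matches it, and use that URL in the plugin settings.

docker stats shows resource usage statistics for the running containers.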
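The jvisualvm thread labels above correspond roughly to java.lang.Thread.State values. Below is a small stdlib sketch that puts threads into each situation and records the state the JVM reports. The mapping to jvisualvm's labels in the comments is approximate:

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.LockSupport;

public class ThreadStatesSketch {

    private static final Object MONITOR = new Object(); // used for wait()
    private static final Object LOCK = new Object();    // used to create lock contention

    // Start a daemon thread so the JVM can exit although these threads never finish
    static Thread daemon(Runnable body) {
        Thread t = new Thread(body);
        t.setDaemon(true);
        t.start();
        return t;
    }

    // Poll until the thread reaches the expected state, giving up after about 2 seconds
    static Thread.State awaitState(Thread t, Thread.State expected) {
        for (int i = 0; i < 200 && t.getState() != expected; i++) {
            try {
                TimeUnit.MILLISECONDS.sleep(10);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
        }
        return t.getState();
    }

    static Map<String, Thread.State> observe() {
        Map<String, Thread.State> states = new LinkedHashMap<>();
        // "Running": the current thread is executing -> RUNNABLE
        states.put("running", Thread.currentThread().getState());

        // "Sleeping": Thread.sleep -> TIMED_WAITING
        Thread sleeper = daemon(() -> {
            try {
                Thread.sleep(60_000);
            } catch (InterruptedException ignored) {
                // exit
            }
        });
        states.put("sleeping", awaitState(sleeper, Thread.State.TIMED_WAITING));

        // "Wait": Object.wait() without a timeout -> WAITING
        Thread waiter = daemon(() -> {
            synchronized (MONITOR) {
                try {
                    while (true) {
                        MONITOR.wait(); // the loop guards against spurious wake-ups
                    }
                } catch (InterruptedException ignored) {
                    // exit
                }
            }
        });
        states.put("wait", awaitState(waiter, Thread.State.WAITING));

        // "Park": LockSupport.park(), which is how idle pool threads wait -> WAITING
        Thread parker = daemon(() -> {
            while (true) {
                LockSupport.park();
            }
        });
        states.put("park", awaitState(parker, Thread.State.WAITING));

        // "Monitor": blocked entering a synchronized block that another thread holds -> BLOCKED
        synchronized (LOCK) {
            Thread blocked = daemon(() -> {
                synchronized (LOCK) {
                    // runs only after the main thread releases LOCK
                }
            });
            states.put("monitor", awaitState(blocked, Thread.State.BLOCKED));
        }
        return states;
    }

    public static void main(String[] args) {
        observe().forEach((name, state) -> System.out.println(name + " -> " + state));
    }
}
```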

4.6 Monitoring metrics

4.7

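5. Caching and Distributed Locks

To improve system performance, we usually put some data in a cache to speed up access, while the database is responsible for persisting the data.

What data is suitable for caching?

Data with low requirements for immediacy and consistency

Data that is accessed frequently but updated rarely (read-heavy, write-light)

Note: in development, every piece of data put into the cache should have an expiration time. Then, even if the system never actively updates the data, a reload is triggered automatically, which prevents data from staying inconsistent forever after a business failure.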
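An expiry on every cached entry means stale data eventually reloads itself even if no code updates it. Below is a minimal in-process sketch of cache-aside with a TTL. A hypothetical loader function stands in for the database. In the project, Redis plays the cache role and the expiry would be set through something like `stringRedisTemplate.opsForValue().set(key, value, timeout, unit)`:

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;
import java.util.function.LongSupplier;

public class TtlCacheSketch<K, V> {

    // A cached value plus the moment it stops being valid
    private static final class Entry<T> {
        final T value;
        final long expiresAtMillis;

        Entry(T value, long expiresAtMillis) {
            this.value = value;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final Map<K, Entry<V>> store = new ConcurrentHashMap<>();
    private final long ttlMillis;
    private final LongSupplier clock; // injectable clock, so expiry can be shown without sleeping

    public TtlCacheSketch(long ttlMillis, LongSupplier clock) {
        this.ttlMillis = ttlMillis;
        this.clock = clock;
    }

    // Cache-aside read: return a fresh entry, otherwise load from the source and cache it with a TTL
    public V get(K key, Function<K, V> loader) {
        long now = clock.getAsLong();
        Entry<V> cached = store.get(key);
        if (cached != null && now < cached.expiresAtMillis) {
            return cached.value;
        }
        V value = loader.apply(key);
        store.put(key, new Entry<>(value, now + ttlMillis));
        return value;
    }

    public static void main(String[] args) {
        long[] fakeNow = {0};
        int[] dbLoads = {0};
        TtlCacheSketch<String, String> cache = new TtlCacheSketch<>(1_000, () -> fakeNow[0]);
        Function<String, String> database = key -> {
            dbLoads[0]++;
            return "value-of-" + key;
        };
        cache.get("catalog", database); // miss: loads from the "database"
        cache.get("catalog", database); // hit: still within the TTL
        fakeNow[0] = 1_500;             // the TTL has elapsed
        cache.get("catalog", database); // expired: loads again
        System.out.println("database loads: " + dbLoads[0]); // 2
    }
}
```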

5.1 Integrating Redis as a cache

Exclude the Lettuce client and use Jedis instead (see the note on OutOfDirectMemoryError below):

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-redis</artifactId>
    <exclusions>
        <exclusion>
            <groupId>io.lettuce</groupId>
            <artifactId>lettuce-core</artifactId>
        </exclusion>
    </exclusions>
</dependency>
<dependency>
    <groupId>redis.clients</groupId>
    <artifactId>jedis</artifactId>
</dependency>

redis.yml

spring:
  redis:
    host: 124.223.14.248
    port: 6379

package com.firefly.fireflymall.product;

import org.junit.Test;

import org.junit.runner.RunWith;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.test.context.SpringBootTest;

import org.springframework.data.redis.core.StringRedisTemplate;

import org.springframework.test.context.junit4.SpringRunner;

import java.util.UUID;

/**

* @Author Michale @EMail:[email protected]

* @Date: 2022/2/5 21:42

* @Description: Redis cache test

*/

@SuppressWarnings("all")

@RunWith(SpringRunner.class)

@SpringBootTest

public class FireFlyMallProductRedisTest {

@Autowired

StringRedisTemplate stringRedisTemplate;

@Test

public void testStringRedisTemplate() {

stringRedisTemplate.opsForValue().set("hello", "world_" + UUID.randomUUID().toString());

String hello = stringRedisTemplate.opsForValue().get("hello");

System.out.println("Previously saved value: " + hello);

}

}

Previously saved value: world_e90cf5ca-97ee-47c8-8819-a6a1aed46859

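Optimizing the three-level category query

TODO: an off-heap memory overflow (OutOfDirectMemoryError) can occur:

1. Since Spring Boot 2.0, Lettuce is the default client for operating Redis, and it uses Netty for network communication.

2. A Lettuce bug causes Netty's off-heap (direct) memory to overflow. If no direct memory limit is specified, Netty defaults to the -Xmx value (here -Xmx300m).

The limit can be set with -Dio.netty.maxDirectMemory.

However, simply raising the limit with -Dio.netty.maxDirectMemory is not a solution. Instead, either:

1) upgrade the Lettuce client, or 2) switch to Jedis.

RedisTemplate: Lettuce and Jedis are the low-level clients that talk to Redis, and Spring wraps them again in RedisTemplate.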

@Override

public Map<String, List<Catelog2Vo>> getCatalogJson() {

// 1. Try the cache first

String catelogJSON = redisTemplate.opsForValue().get("catelogJSON");

if (StringUtils.isEmpty(catelogJSON)) {

// 2. Cache miss: query the database

Map<String, List<Catelog2Vo>> catelogJsonFromDb = getCatelogJsonFromDb();

// 3. Convert the result to JSON and put it in the cache

String jsonString = JSON.toJSONString(catelogJsonFromDb);

redisTemplate.opsForValue().set("catelogJSON", jsonString);

return catelogJsonFromDb;

}

// Cache hit: convert the JSON back into the target type

Map<String, List<Catelog2Vo>> result = JSON.parseObject(catelogJSON, new TypeReference<Map<String, List<Catelog2Vo>>>() {

});

return result;

}

// Build the three-level category tree from the database

public Map<String, List<Catelog2Vo>> getCatelogJsonFromDb() {

// Replace the many per-category queries with a single query, then filter in memory

List<CategoryEntity> selectList = baseMapper.selectList(null);

// 1. Find all level-1 categories

List<CategoryEntity> categoryOne = getParent_cid(selectList, 0L);

// 2. Assemble the data: level-1 id -> its level-2 categories

Map<String, List<Catelog2Vo>> collect = categoryOne.stream().collect(Collectors.toMap(k -> k.getCatId().toString(), v -> {

// 2.1 Find the level-2 categories under this level-1 category

List<CategoryEntity> categoryTwo = getParent_cid(selectList, v.getCatId());

List<Catelog2Vo> catelog2Vos = null;

if (categoryTwo != null) {

// 2.2 Wrap each level-2 category

catelog2Vos = categoryTwo.stream().map(levelTwo -> {

Catelog2Vo catelog2Vo = new Catelog2Vo(v.getCatId().toString(), null, levelTwo.getCatId().toString(), levelTwo.getName().toString());

List<CategoryEntity> categoryThree = getParent_cid(selectList, levelTwo.getCatId());

if (categoryThree != null) {

List<Catelog2Vo.Catelog3Vo> catalog3List = categoryThree.stream().map(levelThree -> {

// 2.3 Wrap each level-3 category

Catelog2Vo.Catelog3Vo catelog3Vo = new Catelog2Vo.Catelog3Vo(levelTwo.getCatId().toString(), levelThree.getCatId().toString(), levelThree.getName().toString());

return catelog3Vo;

}).collect(Collectors.toList());

// Attach the level-3 categories

catelog2Vo.setCatalog3List(catalog3List);

}

return catelog2Vo;

}).collect(Collectors.toList());

}

return catelog2Vos;

}));

return collect;

}

// Find the child categories of parentCid in the preloaded list

private List<CategoryEntity> getParent_cid(List<CategoryEntity> selectList, Long parentCid) {

//return baseMapper.selectList(new QueryWrapper().eq("parent_cid", parentCid));

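// Compare Long values with equals(): == compares references and can fail for ids outside the Long cache (-128..127)

List<CategoryEntity> collect = selectList.stream().filter(item -> parentCid.equals(item.getParentCid())).collect(Collectors.toList());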

return collect;

}
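The optimized version loads the whole category table once and filters it in memory. getParent_cid still rescans the full list for every parent. Below is a stdlib sketch of a further step that is not in the original code: group the rows by parentCid once, so that each lookup becomes a map access. The `Category` type and the sample rows are hypothetical. Grouping also sidesteps the `==` pitfall, because HashMap compares Long keys with equals():

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

public class CategoryTreeSketch {

    // Hypothetical stand-in for CategoryEntity
    static final class Category {
        final Long catId;
        final Long parentCid;
        final String name;

        Category(Long catId, Long parentCid, String name) {
            this.catId = catId;
            this.parentCid = parentCid;
            this.name = name;
        }
    }

    // One pass over the preloaded rows builds parentCid -> children.
    // Map keys are compared with equals()/hashCode(), so boxed Long ids match by value.
    // groupingBy rejects null keys, so every row needs a parentCid.
    static Map<Long, List<Category>> byParent(List<Category> all) {
        return all.stream().collect(Collectors.groupingBy(c -> c.parentCid));
    }

    public static void main(String[] args) {
        List<Category> all = Arrays.asList(
                new Category(1L, 0L, "Electronics"),
                new Category(1000L, 1L, "Phones"),
                new Category(2000L, 1000L, "Smartphones"),
                new Category(2001L, 1000L, "Feature phones"));
        Map<Long, List<Category>> children = byParent(all);
        // Level 1 -> level 2 -> level 3: each lookup is a map access instead of a list scan
        for (Category level1 : children.getOrDefault(0L, Collections.emptyList())) {
            System.out.println(level1.name);
            for (Category level2 : children.getOrDefault(level1.catId, Collections.emptyList())) {
                System.out.println("  " + level2.name);
                for (Category level3 : children.getOrDefault(level2.catId, Collections.emptyList())) {
                    System.out.println("    " + level3.name);
                }
            }
        }
    }
}
```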

5.2 Cache failure problems

5.2.1 Cache penetration

5.2.2 Cache avalanche

5.2.3 Cache breakdown

5.3 Using locks to solve cache breakdown
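The idea in this section is a double check under a lock. Threads that miss the cache queue on a lock, and whichever thread gets it first checks the cache again before querying the database. The database is therefore queried once per JVM, provided the cache is written before the lock is released. Below is a stdlib sketch. A ConcurrentHashMap stands in for Redis and a counter stands in for the database query; both are assumptions made for illustration. `synchronized` only serializes threads within one JVM, which is why distributed locks are needed once several service instances run:

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class DoubleCheckCacheSketch {

    private final Map<String, String> cache = new ConcurrentHashMap<>(); // stands in for Redis
    private final AtomicInteger dbQueries = new AtomicInteger();        // counts "database" hits

    String getCatalogJson() {
        String cached = cache.get("categoryJSON");
        if (cached != null) {
            return cached;                      // fast path: cache hit, no locking
        }
        synchronized (this) {                   // local lock: covers this JVM only
            cached = cache.get("categoryJSON"); // second check after acquiring the lock
            if (cached != null) {
                return cached;
            }
            String fromDb = queryDatabase();
            cache.put("categoryJSON", fromDb);  // write the cache before releasing the lock
            return fromDb;
        }
    }

    private String queryDatabase() {
        dbQueries.incrementAndGet();
        try {
            Thread.sleep(50);                   // simulate a slow query
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return "{...catalog...}";
    }

    // Fire concurrent requests at an empty cache and report how many reached the database
    static int simulate(int threads) {
        DoubleCheckCacheSketch service = new DoubleCheckCacheSketch();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        CountDownLatch start = new CountDownLatch(1);
        for (int i = 0; i < threads; i++) {
            pool.execute(() -> {
                try {
                    start.await();              // release all requests at the same moment
                    service.getCatalogJson();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        start.countDown();
        pool.shutdown();
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return service.dbQueries.get();
    }

    public static void main(String[] args) {
        System.out.println("database queries: " + simulate(20)); // 1
    }
}
```

If the cache write is moved after the synchronized block, a thread that acquires the lock right after the first one can still find the cache empty and query the database again.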

/**

* 获取二三级分类

*

* @return

*/

@Override

public Map<String, List<Catelog2Vo>> getCatalogJson() {

// 1、从缓存中获取

String catelogJSON = redisTemplate.opsForValue().get("categoryJSON");

System.out.println("从缓存中获取");

if (StringUtils.isEmpty(catelogJSON)) {

System.out.println("查询了数据库");

// 2、缓存没有,从数据库中查询

Map<String, List<Catelog2Vo>> catelogJsonFromDb = getCatelogJsonFromDb();

// 3、查询到数据,将数据转成 JSON 后放入缓存中

String jsonString = JSON.toJSONString(catelogJsonFromDb);

redisTemplate.opsForValue().set("categoryJSON", jsonString);

return catelogJsonFromDb;

}

// 转换为我们指定的对象

Map<String, List<Catelog2Vo>> result = JSON.parseObject(catelogJSON, new TypeReference<Map<String, List<Catelog2Vo>>>() {

});

return result;

}

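// Build the level-1/2/3 category tree from the database

public Map<String, List<Catelog2Vo>> getCatelogJsonFromDb() {

// Local lock: it only locks within the current process (JVM)

synchronized (this) {

// 0. After acquiring the lock, check the cache again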

String catelogJSON = redisTemplate.opsForValue().get("categoryJSON");

if (!StringUtils.isEmpty(catelogJSON)) {

// Convert the JSON back into our target type

Map<String, List<Catelog2Vo>> result = JSON.parseObject(catelogJSON, new TypeReference<Map<String, List<Catelog2Vo>>>() {

});

System.out.println("Got the lock - cache hit");

return result;

}

System.out.println("Got the lock - querying the database");

// Replace many database queries with a single one

List<CategoryEntity> selectList = baseMapper.selectList(null);

// 1. Find all level-1 categories

List<CategoryEntity> categoryOne = getParent_cid(selectList, 0L);

// 2. Assemble the data

Map<String, List<Catelog2Vo>> collect = categoryOne.stream().collect(Collectors.toMap(k -> k.getCatId().toString(), v -> {

// 2.1 Find the level-2 categories of this level-1 category

List<CategoryEntity> categoryTwo = getParent_cid(selectList, v.getCatId());

List<Catelog2Vo> catelog2Vos = null;

if (categoryTwo != null) {

// 2.2 Wrap the level-2 categories

catelog2Vos = categoryTwo.stream().map(levelTwo -> {

Catelog2Vo catelog2Vo = new Catelog2Vo(v.getCatId().toString(), null, levelTwo.getCatId().toString(), levelTwo.getName().toString());

List<CategoryEntity> categoryThree = getParent_cid(selectList, levelTwo.getCatId());

if (categoryThree != null) {

List<Catelog2Vo.Catelog3Vo> catalog3List = categoryThree.stream().map(levelThree -> {

// 2.3 Wrap the level-3 categories

Catelog2Vo.Catelog3Vo catelog3Vo = new Catelog2Vo.Catelog3Vo(levelTwo.getCatId().toString(), levelThree.getCatId().toString(), levelThree.getName().toString());

return catelog3Vo;

}).collect(Collectors.toList());

// Attach the level-3 categories

catelog2Vo.setCatalog3List(catalog3List);

}

return catelog2Vo;

}).collect(Collectors.toList());

}

return catelog2Vos;

}));

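// Write the result to the cache while still holding the lock, so the next thread's second check hits the cache instead of querying the database again

redisTemplate.opsForValue().set("categoryJSON", JSON.toJSONString(collect));

return collect;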

}

}

// Find the child categories of parentCid in the preloaded list

private List<CategoryEntity> getParent_cid(List<CategoryEntity> selectList, Long parentCid) {

//return baseMapper.selectList(new QueryWrapper().eq("parent_cid", parentCid));

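// Compare Long values with equals(): == compares references and silently fails for IDs outside -128..127

List<CategoryEntity> collect = selectList.stream().filter(item -> parentCid.equals(item.getParentCid())).collect(Collectors.toList());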

return collect;

}
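The synchronized version above follows the double-check pattern: check the cache, take the lock, check the cache again, and only then query the database. For the second check to help, the cache write has to happen before the lock is released; otherwise the next thread to get the lock still sees an empty cache and queries the database again. A minimal in-memory sketch, assuming a hypothetical `Supplier` in place of the database query and a `ConcurrentHashMap` in place of Redis. Like `synchronized (this)`, it only serializes threads inside one JVM, which is why the following sections move to a distributed lock:

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

final class DoubleCheckedCache {
    private final Map<String, String> cache = new ConcurrentHashMap<>(); // stand-in for Redis
    private final Object lock = new Object();
    private final Supplier<String> loadFromDb; // hypothetical stand-in for the DB query
    final AtomicInteger dbCalls = new AtomicInteger();

    DoubleCheckedCache(Supplier<String> loadFromDb) {
        this.loadFromDb = loadFromDb;
    }

    String get(String key) {
        String value = cache.get(key);      // 1st check, without the lock
        if (value != null) {
            return value;
        }
        synchronized (lock) {               // local lock: one JVM only
            value = cache.get(key);         // 2nd check, under the lock
            if (value != null) {
                return value;
            }
            dbCalls.incrementAndGet();
            value = loadFromDb.get();
            cache.put(key, value);          // write BEFORE the lock is released
            return value;
        }
    }
}
```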

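5.4.1 Distributed lock, evolution 1

Take the lock with setIfAbsent (Redis SETNX). Problem: if the business code throws, or the service crashes, before the lock is deleted, the lock is never released and every other instance waits forever (deadlock).

// getCatalogJson calls getCatalogJsonFromDbWithRedisLock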

public Map<String, List<Catelog2Vo>> getCatalogJsonFromDbWithRedisLock() {

Boolean lock = redisTemplate.opsForValue().setIfAbsent("lock", "0");

if (lock) {

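// Lock acquired: run the business logic

Map<String, List<Catelog2Vo>> dataFromDb = getCatelogJsonFromDb();

redisTemplate.delete("lock"); // Release the lock

return dataFromDb;

} else {

// Lock failed: retry, much like waiting on synchronized. The intent is to sleep ~100 ms first; this version retries immediately

return getCatalogJsonFromDbWithRedisLock(); // spin by recursion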

}

}

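5.4.2 Distributed lock, evolution 2

Give the lock an expiration time after taking it. Problem: setIfAbsent and expire are two separate commands; if the service crashes between them, the lock still never expires.

// getCatalogJson calls getCatalogJsonFromDbWithRedisLock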

public Map<String, List<Catelog2Vo>> getCatalogJsonFromDbWithRedisLock() {

Boolean lock = redisTemplate.opsForValue().setIfAbsent("lock", "0");

if (lock) {

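// Set an expiration time

redisTemplate.expire("lock", 30, TimeUnit.SECONDS);

// Lock acquired: run the business logic

Map<String, List<Catelog2Vo>> dataFromDb = getCatelogJsonFromDb();

redisTemplate.delete("lock"); // Release the lock

return dataFromDb;

} else {

// Lock failed: retry, much like waiting on synchronized. The intent is to sleep ~100 ms first; this version retries immediately

return getCatalogJsonFromDbWithRedisLock(); // spin by recursion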

}

}

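5.4.3 Distributed lock, evolution 3

Set the value and the expiration time in one atomic command (SET key value NX EX). Problem: the lock is deleted unconditionally; if the business logic runs longer than the TTL, the lock expires, another thread takes it, and this thread then deletes the other thread's lock.

// getCatalogJson calls getCatalogJsonFromDbWithRedisLock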

public Map<String, List<Catelog2Vo>> getCatalogJsonFromDbWithRedisLock() {

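// Set the value and the expiration time in one atomic command

Boolean lock = redisTemplate.opsForValue().setIfAbsent("lock", "111", 300, TimeUnit.SECONDS);

if (lock) {

// Lock acquired: run the business logic. No separate expire() call: the TTL was set atomically together with the lock

Map<String, List<Catelog2Vo>> dataFromDb = getCatelogJsonFromDb();

redisTemplate.delete("lock"); // Release the lock (unconditionally, which is this version's flaw)

return dataFromDb;

} else {

// Lock failed: retry, much like waiting on synchronized. The intent is to sleep ~100 ms first; this version retries immediately

return getCatalogJsonFromDbWithRedisLock(); // spin by recursion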

}

}

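5.4.4 Distributed lock, evolution 4

Store a UUID as the lock value and delete the lock only if the value is still ours. Problem: reading the value and deleting the key are two separate commands; if the lock expires and someone else takes it in between, we still delete their lock.

// getCatalogJson calls getCatalogJsonFromDbWithRedisLock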

public Map<String, List<Catelog2Vo>> getCatalogJsonFromDbWithRedisLock() {

String uuid = UUID.randomUUID().toString();

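// Set the value (this caller's UUID) and the expiration time in one atomic command

Boolean lock = redisTemplate.opsForValue().setIfAbsent("lock", uuid, 300, TimeUnit.SECONDS);

if (lock) {

// Lock acquired: run the business logic (the TTL was already set atomically with the lock)

Map<String, List<Catelog2Vo>> dataFromDb = getCatelogJsonFromDb();

String lockValue = redisTemplate.opsForValue().get("lock");

// uuid.equals(...) also copes with a lock that has already expired (lockValue == null)

if (uuid.equals(lockValue)) {

// Delete only our own lock. Not atomic: the lock can expire and be taken by another thread between get() and delete()

redisTemplate.delete("lock"); // Release the lock

}

return dataFromDb;

} else {

// Lock failed: retry, much like waiting on synchronized. The intent is to sleep ~100 ms first; this version retries immediately

return getCatalogJsonFromDbWithRedisLock(); // spin by recursion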

}

}

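5.4.5 Distributed lock, evolution 5

Compare and delete atomically with a Lua script. Problem: if the business code throws, the unlock script never runs, and the lock is only released when it expires.

// getCatalogJson calls getCatalogJsonFromDbWithRedisLock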

public Map<String, List<Catelog2Vo>> getCatalogJsonFromDbWithRedisLock() {

String uuid = UUID.randomUUID().toString();

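// Set the value (this caller's UUID) and the expiration time in one atomic command

Boolean lock = redisTemplate.opsForValue().setIfAbsent("lock", uuid, 300, TimeUnit.SECONDS);

if (lock) {

// Lock acquired: run the business logic (the TTL was already set atomically with the lock)

Map<String, List<Catelog2Vo>> dataFromDb = getCatelogJsonFromDb();

// Compare and delete atomically with a Lua script

String script = "if redis.call('get',KEYS[1]) == ARGV[1] then return redis.call('del',KEYS[1]) else return 0 end";

// Release the lock only if it still holds our uuid

Long lock1 = redisTemplate.execute(new DefaultRedisScript<Long>(script, Long.class), Arrays.asList("lock"), uuid);

return dataFromDb;

} else {

// Lock failed: retry, much like waiting on synchronized. The intent is to sleep ~100 ms first; this version retries immediately

return getCatalogJsonFromDbWithRedisLock(); // spin by recursion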

}

}
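The Lua script above turns "delete the key only if it still holds my token" into a single atomic step. For intuition, `ConcurrentHashMap.remove(key, value)` offers the same compare-and-delete semantics inside one JVM. A sketch that models the Redis lock key with a map; it is an in-process analogy only (no TTLs, no network), not a replacement for the Redis script:

```java
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

final class TokenLock {
    private final ConcurrentMap<String, String> store = new ConcurrentHashMap<>(); // models Redis keys

    /** Like SET key token NX: succeeds only if nobody holds the key. Returns our token, or null. */
    String tryLock(String key) {
        String token = UUID.randomUUID().toString();
        return store.putIfAbsent(key, token) == null ? token : null;
    }

    /** Like the Lua script: delete only if the stored value still equals our token, atomically. */
    boolean unlock(String key, String token) {
        return store.remove(key, token);
    }
}
```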

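5.4.6 Distributed lock, evolution 6

Release the lock in a finally block, so it is freed even when the business logic throws, and sleep before retrying instead of spinning. What remains is choosing a TTL longer than the business logic (or renewing it automatically), which is what Redisson's watchdog does.

// getCatalogJson calls getCatalogJsonFromDbWithRedisLock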

public Map<String, List<Catelog2Vo>> getCatalogJsonFromDbWithRedisLock() {

String uuid = UUID.randomUUID().toString();

// Set the value (this caller's UUID) and the expiration time in one atomic command

Boolean lock = redisTemplate.opsForValue().setIfAbsent("lock", uuid, 300, TimeUnit.SECONDS);

if (lock) {

// Lock acquired: run the business logic (the TTL was already set atomically with the lock)

Map<String, List<Catelog2Vo>> dataFromDb;

try {

dataFromDb = getCatelogJsonFromDb();

} finally {

String script = "if redis.call('get',KEYS[1]) == ARGV[1] then return redis.call('del',KEYS[1]) else return 0 end";

// Always release the lock, even if the business logic throws: atomic compare-and-delete via Lua

Long lock1 = redisTemplate.execute(new DefaultRedisScript<Long>(script, Long.class), Arrays.asList("lock"), uuid);

}

return dataFromDb;

} else {

// Lock failed: sleep 200 ms, then retry

System.out.println("Failed to acquire the distributed lock, retrying");

try {

TimeUnit.MILLISECONDS.sleep(200);

} catch (InterruptedException e) {

e.printStackTrace();

}

return getCatalogJsonFromDbWithRedisLock();

}

}
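Each retry above is a recursive call, so a long wait for the lock adds one stack frame per attempt and can eventually throw `StackOverflowError`. A sketch of the same retry as a bounded loop; `tryAcquire` is a hypothetical stand-in for the `setIfAbsent(...)` call, and the attempt limit is an illustrative choice:

```java
import java.util.concurrent.TimeUnit;
import java.util.function.BooleanSupplier;

final class LockRetry {
    /** Calls tryAcquire until it succeeds or maxAttempts is reached, sleeping between attempts. */
    static boolean acquireWithRetry(BooleanSupplier tryAcquire, long sleepMillis, int maxAttempts) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            if (tryAcquire.getAsBoolean()) {
                return true;
            }
            if (attempt < maxAttempts) {
                try {
                    TimeUnit.MILLISECONDS.sleep(sleepMillis); // back off instead of spinning
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt(); // keep the interrupt flag and give up
                    return false;
                }
            }
        }
        return false;
    }
}
```

A bounded loop also forces a decision about what to do when the lock never frees up (return an error, or fall back to the database), which the recursive version leaves open.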

5.4 Distributed lock: Redisson

The Redlock algorithm: https://redis.io/topics/distlock

In a Java environment, use Redisson.

GitHub: https://github.com/redisson/redisson

5.4.1 Configuring Redisson

<dependency>

<groupId>org.redisson</groupId>

<artifactId>redisson</artifactId>

<version>3.12.0</version>

</dependency>

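// Default connection address: 127.0.0.1:6379

RedissonClient redisson = Redisson.create();

// Or with an explicit configuration (note the redis:// scheme, as in the configuration class below)

Config config = new Config();

config.useSingleServer().setAddress("redis://myredisserver:6379");

RedissonClient redissonWithConfig = Redisson.create(config);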

package com.firefly.common.config;

import org.redisson.Redisson;

import org.redisson.api.RedissonClient;

import org.redisson.config.Config;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import java.io.IOException;

/**

* @Author Michale

* @Date: 2022/2/6 16:56

* @Description: Redisson distributed lock configuration

*/

@Configuration

public class MyRedissonConfig {

/**

* All use of Redisson goes through the RedissonClient object

*

* @return

* @throws IOException

*/

@Bean(destroyMethod = "shutdown")

// Create the RedissonClient bean from a Config

RedissonClient redisson() throws IOException {

Config config = new Config();

config.useSingleServer().setAddress("redis://124.223.14.248:6379");

// Create the RedissonClient instance from the Config

RedissonClient redissonClient = Redisson.create(config);

return redissonClient;

}

}

Test

@Test

public void testRedissonClient() {

System.out.println("redissonClient-----" + redissonClient);

}

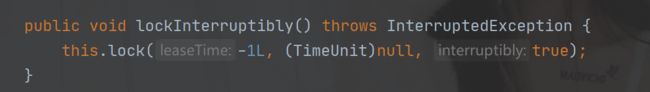

5.4.2 Reentrant lock: Redisson Lock

@GetMapping("/hello")

@ResponseBody

public String hello(){

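// 1. Get a lock. Locks with the same name are the same lock.

RLock lock = redissonClient.getLock("my-lock");

// 2. Lock it. Use ONE of the two options below: Redisson locks are reentrant, so calling both would take the lock twice and the single unlock() in finally would leave it held.

// Option A: lock.lock() blocks until the lock is acquired; the default lease is 30 s.

// 1) Auto-renewal: if the business logic runs long, the lock is renewed to a fresh 30 s while it runs, so it does not expire and get deleted mid-task.

// 2) Once the business logic finishes, renewal stops; even without a manual unlock, the lock is deleted automatically after 30 s.

lock.lock();

// Option B: expires after 10 s and is NOT renewed when the time is up.

// lock.lock(10, TimeUnit.SECONDS);

// How the lease is decided:

// 1) If we pass a lease time, Redisson sends a Lua script to Redis to take the lock, and the timeout is exactly the time we specified.

// 2) If we don't, it uses lockWatchdogTimeout, the watchdog default of 30 * 1000 ms. Once the lock is taken, a scheduled task resets the lock's expiry to the watchdog default every internalLockLeaseTime / 3 = 10 s.

// Best practice: lock.lock(10, TimeUnit.SECONDS) skips the renewal mechanism entirely; choose a lease comfortably longer than the business logic and unlock manually.

try {

System.out.println("Lock acquired, running business..." + Thread.currentThread().getId());

Thread.sleep(3000);

} catch (InterruptedException e) {

Thread.currentThread().interrupt();

} finally {

// Unlock. If this line never ran (for example, the service crashed), would Redisson deadlock? No: the watchdog stops renewing and the lock expires after 30 s.

System.out.println("Releasing lock..." + Thread.currentThread().getId());

lock.unlock();

}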

return "hello";

}


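Stepping through the Redisson lock source code:

1. The no-argument lock() implementation also calls a lock method, passing -1 as the lease time.

2. That lock method calls tryAcquire.

3. tryAcquire calls tryAcquireAsync.

4. tryAcquireAsync checks leaseTime != -1, which depends on the value passed in step 1.

5. Next comes tryLockInnerAsync, which runs the Lua script that takes the lock.

6. The internalLockLeaseTime variable holds the lock's default lease time; it is initialized in the constructor.

7. Finally, look at lockWatchdogTimeout, the value internalLockLeaseTime is initialized from: 30 seconds.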
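To make the renewal arithmetic concrete: with the default lockWatchdogTimeout of 30 s, the watchdog fires every 30 / 3 = 10 s and resets the TTL to 30 s, so the lock cannot lapse while its holder is alive; with an explicit lease such as `lock(10, TimeUnit.SECONDS)` there is no renewal at all. A toy model on a simulated clock (not Redisson's actual implementation; all times in milliseconds):

```java
final class WatchdogModel {
    /**
     * Returns the time at which a lock taken at t = 0 finally expires, if its holder works
     * for businessMs and then stops without unlocking (for example, it crashed).
     * With watchdog = true, a renewal tick every leaseMs / 3 pushes the expiry to now + leaseMs
     * for as long as the holder is still working.
     */
    static long expiresAt(long businessMs, long leaseMs, boolean watchdog) {
        long expiresAt = leaseMs; // initial lease
        if (!watchdog) {
            return expiresAt; // explicit lease time: never renewed
        }
        long interval = Math.max(1, leaseMs / 3); // 30 000 / 3 = 10 000 by default
        for (long now = interval; now <= businessMs; now += interval) {
            expiresAt = now + leaseMs; // renewal tick: push the expiry out to now + leaseMs
        }
        return expiresAt;
    }
}
```

For a 100 s job, the watchdog keeps the lock until the job stops and then 30 s more (expiry at 130 000 ms), while a fixed 10 s lease expires at 10 000 ms, long before the job finishes.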

5.4.3 Redisson: read-write lock

/**

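* Guarantees that reads always see the latest data. During a modification the write lock is exclusive (a mutex), while the read lock is shared.

* Readers must wait while the write lock is held.

* read + read: effectively lock-free. Concurrent reads are just recorded in Redis, and all of them acquire the lock at the same time.

* write + read: the reader waits for the write lock to be released.

* write + write: blocking; the second writer waits.

* read + write: while a read lock is held, the writer also has to wait.

* In short, whenever a write is involved, the other side must wait.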

*

* @return String

*/

@PostMapping("/write")

@ResponseBody

public String writeValue() {

RReadWriteLock lock = redissonClient.getReadWriteLock("rw_lock");

String s = "";

RLock rLock = lock.writeLock();

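// Writes take the write lock, reads take the read lock. Lock before try, so finally never unlocks a lock we did not get.

rLock.lock();

try {

System.out.println("Write lock acquired..." + Thread.currentThread().getId());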

s = UUID.randomUUID().toString();

try {

TimeUnit.SECONDS.sleep(3);

} catch (InterruptedException e) {

e.printStackTrace();

}

redisTemplate.opsForValue().set("writeValue", s);

} catch (Exception e) {

e.printStackTrace();

} finally {

rLock.unlock();

System.out.println("Write lock released..." + Thread.currentThread().getId());

}

return s;

}

@GetMapping("/read")

@ResponseBody

public String readValue() {

RReadWriteLock lock = redissonClient.getReadWriteLock("rw_lock");

RLock rLock = lock.readLock();

String s = "";

rLock.lock();

try {

System.out.println("Read lock acquired..." + Thread.currentThread().getId());

s = (String) redisTemplate.opsForValue().get("writeValue");

try {

TimeUnit.SECONDS.sleep(3);

} catch (InterruptedException e) {

e.printStackTrace();

}

} catch (Exception e) {

e.printStackTrace();

} finally {

rLock.unlock();

System.out.println("Read lock released..." + Thread.currentThread().getId());

}

return s;

}
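The read/write rules listed above are the same ones `java.util.concurrent.locks.ReentrantReadWriteLock` enforces inside a single JVM; Redisson's RReadWriteLock applies them across processes. A small local sketch that checks each combination with non-blocking `tryLock()` calls; the helper `tryFromOtherThread` is hypothetical, added only for the demo:

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

final class ReadWriteRules {
    /** Tries, without waiting, to take the lock from a separate thread; releases it if taken. */
    static boolean tryFromOtherThread(Lock lock) throws InterruptedException {
        AtomicBoolean acquired = new AtomicBoolean();
        Thread t = new Thread(() -> {
            if (lock.tryLock()) {
                acquired.set(true);
                lock.unlock();
            }
        });
        t.start();
        t.join();
        return acquired.get();
    }

    /** Returns {read+read, read+write, write+read, write+write}: true means the second lock was granted. */
    static boolean[] check() throws InterruptedException {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        boolean[] result = new boolean[4];
        rw.readLock().lock(); // this thread holds a read lock
        try {
            result[0] = tryFromOtherThread(rw.readLock());
            result[1] = tryFromOtherThread(rw.writeLock());
        } finally {
            rw.readLock().unlock();
        }
        rw.writeLock().lock(); // this thread holds the write lock
        try {
            result[2] = tryFromOtherThread(rw.readLock());
            result[3] = tryFromOtherThread(rw.writeLock());
        } finally {
            rw.writeLock().unlock();
        }
        return result;
    }
}
```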

5.4.4 Redisson: countdown latch test

/**

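* Locking the school door for the holidays:

* each class reports when it is empty (e.g. "class 1 has left"),

* and once all 5 classes have left, we can lock the door.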

* @return

*/

@GetMapping("/lockDoor")

@ResponseBody

public String lockDoor() throws InterruptedException {

RCountDownLatch door = redissonClient.getCountDownLatch("door");

door.trySetCount(5);

door.await(); // Wait until the latch count reaches zero

return "Holiday has started....";

}

@GetMapping("/gogogo/{id}")

@ResponseBody

public String gogogo(@PathVariable("id") Long id) {

RCountDownLatch door = redissonClient.getCountDownLatch("door");

door.countDown(); // Decrement the counter by one

return "Everyone in class " + id + " has left.....";

}

await() waits for the latch to complete.

countDown() decrements the counter; once it reaches zero, await() returns.
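Within one JVM the same door-locking example maps onto `java.util.concurrent.CountDownLatch`; Redisson's RCountDownLatch keeps the counter in Redis so the waiting and the counting can happen in different services. A local sketch with hypothetical "class" threads:

```java
import java.util.concurrent.CountDownLatch;

final class LockDoorDemo {
    /** Starts `classes` threads that each count down once, waits for all of them, returns the final count. */
    static long runAndAwait(int classes) throws InterruptedException {
        CountDownLatch door = new CountDownLatch(classes); // like door.trySetCount(5)
        for (int i = 1; i <= classes; i++) {
            int id = i;
            new Thread(() -> {
                System.out.println("Everyone in class " + id + " has left");
                door.countDown(); // like gogogo(id): decrement the counter
            }).start();
        }
        door.await(); // like lockDoor(): blocks until the count reaches zero
        return door.getCount();
    }
}
```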

5.4.5 Redisson: semaphore test

/**

* Parking in a garage

*

* @return

*/

@ApiOperation(value = "Park")

@GetMapping("/park")

@ResponseBody

public String park() throws InterruptedException {

RSemaphore park = redissonClient.getSemaphore("park");

boolean b = park.tryAcquire(); // Take one permit, i.e. occupy one parking space (returns false at once if none are left)

int i = park.availablePermits();

if (b) {

System.out.println("Parked a car, spaces left: " + i);

return "Parked";

}

System.out.println("Parking failed, spaces left: " + i);

return "Parking failed";

}

@ApiOperation(value = "Leave")

@GetMapping("/go")

@ResponseBody

public String go() {

RSemaphore park = redissonClient.getSemaphore("park");

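park.release(); // Free one parking space

// Read the count after releasing, so the message shows the updated number

int i = park.availablePermits();

System.out.println("A car left, spaces left: " + i);

return "A car left, spaces left: " + i;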

}
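The parking example maps onto `java.util.concurrent.Semaphore` in a single JVM. One difference worth knowing: an RSemaphore needs permits to exist in Redis first (for example via `trySetPermits`), otherwise `tryAcquire()` simply fails. A local sketch with a hypothetical three-space garage:

```java
import java.util.concurrent.Semaphore;

final class Garage {
    private final Semaphore spaces;

    Garage(int totalSpaces) {
        this.spaces = new Semaphore(totalSpaces);
    }

    /** Like park(): take one space without waiting; false if the garage is full. */
    boolean park() {
        return spaces.tryAcquire();
    }

    /** Like go(): free one space, then report how many spaces are free. */
    int leave() {
        spaces.release();
        return spaces.availablePermits();
    }

    int free() {
        return spaces.availablePermits();
    }
}
```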

5.4.6 Redisson: cache consistency

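When two threads write concurrently, only the later write survives and it overwrites the earlier one, which can leave dirty data in the cache.

Consider three connections:

Connection 1 writes to the database, then deletes the cache.

Connection 2 writes to the database, but its network is slow and the write has not completed yet.

Connection 3 reads the data and gets the value written by connection 1. At this point connection 2's write succeeds and it deletes the cache. Connection 3 then updates the cache with the value it read, so the cache holds connection 1's stale data while the database holds connection 2's.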
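The three-connection interleaving above can be replayed step by step. A deterministic, single-threaded sketch in which two maps stand in for the database and the cache, each statement is one step of one connection, and the key `price` is a hypothetical example:

```java
import java.util.HashMap;
import java.util.Map;

final class StaleCacheReplay {
    /** Replays the three-connection interleaving and returns {dbValue, cacheValue}. */
    static String[] replay() {
        Map<String, String> db = new HashMap<>();
        Map<String, String> cache = new HashMap<>();

        db.put("price", "v1");         // connection 1 writes the database...
        cache.remove("price");         // ...then deletes the cache
        String read = db.get("price"); // connection 3 misses the cache and reads v1 from the database
        db.put("price", "v2");         // connection 2's slow write finally lands...
        cache.remove("price");         // ...and it deletes the cache (nothing there yet)
        cache.put("price", read);      // connection 3 fills the cache with what it read: v1

        return new String[] {db.get("price"), cache.get("price")};
    }
}
```

The cache keeps `v1` while the database holds `v2` until the entry expires, which is why the solutions below lean on expiration times.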

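Cache consistency solutions

Whether we use the double-write mode or the invalidation mode, the cache can become inconsistent when multiple instances update at the same time. What can we do?

1. For user-dimension data (order data, user data), the chance of concurrent updates is very small, so this problem can almost be ignored: give cached data an expiration time and let reads refresh it periodically.

2. For base data such as menus and product descriptions, we can also subscribe to the MySQL binlog with Canal.

3. Cached data plus an expiration time is enough for most businesses' caching requirements.

4. Use locks to coordinate concurrent reads and writes: writes queue up in order, while reads do not interfere with each other, so a read-write lock fits. (If the business does not mind temporarily dirty data, this can be skipped.)

Summary:

Data we put into a cache should not have very strict real-time or consistency requirements in the first place. So give cached data an expiration time; making sure we get the latest value within, say, a day is enough.

We should not over-engineer and add complexity to the system.

Data with high real-time or consistency requirements should be read from the database, even if that is slower.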

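VI. SpringCache

6.1 Introduction to Spring Cache

Since 3.1, Spring has defined the Cache and CacheManager interfaces to unify different caching technologies, and it supports JCache (JSR-107) annotations to simplify development.

Implementations of the Cache interface include RedisCache, EhCacheCache, ConcurrentMapCache, and others.

Each time a method that needs caching is called, Spring checks whether the target method has already been called with the given arguments. If so, it returns the result from the cache directly; if not, it calls the method, caches the result, and returns it to the user. The next call is served straight from the cache.

When using Spring's cache abstraction, we need to take care of two things:

1. Decide which methods need caching and what their caching strategy is.

2. Read the previously stored data from the cache.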

6.2 Spring Cache configuration

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-cache</artifactId>

</dependency>

application.properties

# Use Redis as the cache type

spring.cache.type=redis

# Expiration time of cache entries in Redis: 1 h. A bare number is read as milliseconds, so 1 h is 3600000

spring.cache.redis.time-to-live=3600000

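After setting the cache type, add the @EnableCaching annotation to the main application class.

By default, values are stored with JDK serialization (hard to read) and the TTL is -1 (never expires); a custom serialization format requires a configuration class.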

6.3 Storing data as JSON

Configuration class: MyCacheConfig

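import org.springframework.boot.autoconfigure.cache.CacheProperties;

import org.springframework.boot.context.properties.EnableConfigurationProperties;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.data.redis.cache.RedisCacheConfiguration;

import org.springframework.data.redis.serializer.GenericJackson2JsonRedisSerializer;

import org.springframework.data.redis.serializer.RedisSerializationContext;

@EnableConfigurationProperties(CacheProperties.class)

@Configuration

public class MyCacheConfig {

@Bean

public RedisCacheConfiguration redisCacheConfiguration(CacheProperties cacheProperties) {

CacheProperties.Redis redisProperties = cacheProperties.getRedis();

RedisCacheConfiguration config = RedisCacheConfiguration.defaultCacheConfig();

// Serialize cached values as JSON instead of the default JDK serialization

config = config.serializeValuesWith(

RedisSerializationContext.SerializationPair.fromSerializer(new GenericJackson2JsonRedisSerializer()));

// Apply the spring.cache.redis.* settings from the properties file (TTL, key prefix, null caching).

// Our own RedisCacheConfiguration bean replaces Spring Boot's default one, which would otherwise apply them.

if (redisProperties.getTimeToLive() != null) {

config = config.entryTtl(redisProperties.getTimeToLive());

}

if (redisProperties.getKeyPrefix() != null) {

config = config.prefixKeysWith(redisProperties.getKeyPrefix());

}

if (!redisProperties.isCacheNullValues()) {

config = config.disableCachingNullValues();

}

if (!redisProperties.isUseKeyPrefix()) {

config = config.disableKeyPrefix();

}

return config;

}

}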

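@Cacheable

@Cacheable marks a method as cached: its return value is stored, and before the next call Spring checks whether the cache already holds a value. If it does, that value is returned without calling the method; if not, the method is called and its result cached. It is typically used on query methods.

@CachePut

A method annotated with @CachePut always runs, and its return value is put into the cache for use elsewhere. It is typically used on methods that create (or update) data.

@CacheEvict

A method annotated with @CacheEvict clears the specified cache entries. It is typically used on update or delete methods.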
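Stripped of Spring, the three annotations boil down to simple map operations around the method call. A plain-Java sketch of those semantics, with a `ConcurrentHashMap` standing in for the cache and hypothetical method names; it is not Spring's implementation, which also handles key generation, conditions, and cache managers:

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;

final class CacheSemantics {
    final Map<String, Object> cache = new ConcurrentHashMap<>();

    /** @Cacheable: return the cached value if present; otherwise call the method and cache its result. */
    @SuppressWarnings("unchecked")
    <T> T cacheable(String key, Supplier<T> method) {
        Object hit = cache.get(key);
        if (hit != null) {
            return (T) hit;
        }
        T result = method.get();
        if (result != null) {
            cache.put(key, result); // ConcurrentHashMap cannot hold null values
        }
        return result;
    }

    /** @CachePut: always call the method, then store its result. */
    <T> T cachePut(String key, Supplier<T> method) {
        T result = method.get();
        if (result != null) {
            cache.put(key, result);
        }
        return result;
    }

    /** @CacheEvict: run the method, then drop one key (or every entry, like allEntries = true). */
    void cacheEvict(String key, boolean allEntries, Runnable method) {
        method.run();
        if (allEntries) {
            cache.clear();
        } else {
            cache.remove(key);
        }
    }
}
```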

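@Caching

Because of how Java annotations work, the same annotation (unless it is declared @Repeatable) can appear on a method only once. Yet a method sometimes needs to operate on several caches (common when evicting, less common when adding).

Spring Cache accounts for this: the @Caching annotation groups several cache annotations on one method, as its source makes clear: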

public @interface Caching {

Cacheable[] cacheable() default {};

CachePut[] put() default {};

CacheEvict[] evict() default {};

}

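@CacheConfig

The four annotations above are the ones most commonly used in Spring Cache, and each has many configurable attributes. They usually go on methods, but some settings are shared by a whole class. For that, use @CacheConfig: a class-level annotation that configures cacheNames, keyGenerator, cacheManager, cacheResolver, and so on.

#root.method.name resolves to the method name, so it can serve as the cache key:

@Cacheable(value = {"category"}, key = "#root.method.name")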

@Override

public List<CategoryEntity> getCategoryOne() {

System.out.println("category");

QueryWrapper<CategoryEntity> queryWrapper = new QueryWrapper<>();

queryWrapper.eq("parent_cid", 0);

List<CategoryEntity> entityList = baseMapper.selectList(queryWrapper);

return entityList;

}

//@Caching(evict = {

// @CacheEvict(value = "category", key = "'getCategoryOne'"),

// @CacheEvict(value = "category", key = "'getCatalogJson'")

//})

@CacheEvict(value = "category", allEntries = true)

@Transactional

@Override

public void updateCategory(CategoryEntity category)

@Cacheable(value = {"category"}, key = "#root.method.name")

@Override

public List<CategoryEntity> getCategoryOne()

@Cacheable(value = "category", key = "#root.method.name")

@Override

public Map<String, List<Catelog2Vo>> getCatalogJson()

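# If a key prefix is specified, use it; otherwise use the cache name as the prefix

spring.cache.redis.use-key-prefix=true

# Cache null values to guard against cache penetration

spring.cache.redis.cache-null-values=true

Use sync = true to guard against cache breakdown, e.g. @Cacheable(value = "category", key = "#root.method.name", sync = true).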

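6.4 How Spring Cache works, and its limitations

1) Read mode

Cache penetration: querying data that is null. Solution: cache null values, via spring.cache.redis.cache-null-values=true.

Cache breakdown: many concurrent requests query the same entry just as it expires. Solution: lock. Spring Cache does not lock by default; use sync = true (with Redis this is a local lock within each application instance).

Cache avalanche: many keys expire at the same time. Solution: add expiration times (ideally with random jitter), e.g. spring.cache.redis.time-to-live=360000000 (milliseconds).

2) Write mode (keeping the cache consistent with the database)

Lock reads and writes.

Introduce Canal to pick up MySQL updates and refresh Redis.

For data that is both read-heavy and write-heavy, just query the database directly.

3) Summary:

For ordinary data (read-heavy, write-light, without strict freshness or consistency requirements), Spring Cache is entirely adequate.

For the write mode, giving cached data an expiration time is enough.

Special data needs a special design.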

Previous chapter: day06 - Warehouse system

Next chapter: day08 - Search service