- Data labeling

This project used labelImg to annotate 2,000 face images, covering people with and without masks. Training took 12 hours.

labelImg usage guide
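labelImg can save each image's boxes either as a Pascal VOC XML file or directly in YOLO text format. If VOC XML was used, the annotations have to be converted to YOLO's `class x_center y_center width height` lines, normalized to the range 0 to 1, before YOLOv5 can train on them. The sketch below shows that conversion. The class list `["mask", "nomask"]` is an assumption: the post does not name its classes, although `nomask` appears in the UI code. The order of the list determines the class ids.

```python
import xml.etree.ElementTree as ET

# Hypothetical class list; the index of each name is its YOLO class id.
CLASSES = ["mask", "nomask"]


def voc_to_yolo(xml_text, classes=CLASSES):
    """Convert one Pascal VOC annotation (as saved by labelImg) to YOLO label lines.

    Each line is "class_id x_center y_center width height", with the
    four box values divided by the image width/height.
    """
    root = ET.fromstring(xml_text)
    size = root.find("size")
    img_w = float(size.find("width").text)
    img_h = float(size.find("height").text)

    lines = []
    for obj in root.iter("object"):
        name = obj.find("name").text
        if name not in classes:
            continue  # ignore labels that are not in the class list
        box = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (float(box.find(tag).text)
                                  for tag in ("xmin", "ymin", "xmax", "ymax"))
        x_c = (xmin + xmax) / 2 / img_w
        y_c = (ymin + ymax) / 2 / img_h
        w = (xmax - xmin) / img_w
        h = (ymax - ymin) / img_h
        lines.append(f"{classes.index(name)} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}")
    return lines


if __name__ == "__main__":
    sample = """<annotation>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object><name>mask</name>
    <bndbox><xmin>100</xmin><ymin>120</ymin><xmax>200</xmax><ymax>240</ymax></bndbox>
  </object>
</annotation>"""
    print("\n".join(voc_to_yolo(sample)))  # 0 0.234375 0.375000 0.156250 0.250000
```

The returned lines go into a `.txt` file with the same base name as the image, in a `labels` folder that sits next to the `images` folder.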

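- Training

Split the data into a test set and a training set, set up the YOLOv5 environment, and train the model.

Splitting the test and training sets, and training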
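One simple way to do the split is a seeded random shuffle of the image files, writing the two parts to `train.txt` and `val.txt` and pointing a YOLOv5 dataset YAML at them. In this sketch the 20% validation ratio, the seed, the folder layout, and the function names are assumptions. YOLOv5 finds each image's label file by replacing the `images` directory in the image path with `labels`, so the label files should be arranged that way.

```python
import random
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def split_dataset(image_dir, val_ratio=0.2, seed=0):
    """Shuffle the images in image_dir with a fixed seed and split them into (train, val)."""
    images = sorted(p for p in Path(image_dir).iterdir() if p.suffix.lower() in IMAGE_SUFFIXES)
    random.Random(seed).shuffle(images)  # fixed seed -> the same split on every run
    n_val = int(len(images) * val_ratio)
    return images[n_val:], images[:n_val]


def write_yolo_lists(train, val, out_dir, class_names):
    """Write train.txt / val.txt (one absolute image path per line) and a data.yaml for YOLOv5."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for name, files in (("train.txt", train), ("val.txt", val)):
        (out / name).write_text("".join(f"{p.resolve()}\n" for p in files))
    yaml_path = out / "data.yaml"
    yaml_path.write_text(
        f"train: {(out / 'train.txt').resolve()}\n"
        f"val: {(out / 'val.txt').resolve()}\n"
        f"nc: {len(class_names)}\n"
        f"names: {list(class_names)}\n"
    )
    return yaml_path
```

With a hypothetical `dataset/images` folder, `train, val = split_dataset("dataset/images")` followed by `write_yolo_lists(train, val, "dataset", ["mask", "nomask"])` produces `dataset/data.yaml`. Training is then typically started from the YOLOv5 repository with something like `python train.py --data dataset/data.yaml --weights yolov5s.pt --img 640`; the weights and image size here are placeholders.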

- Overview

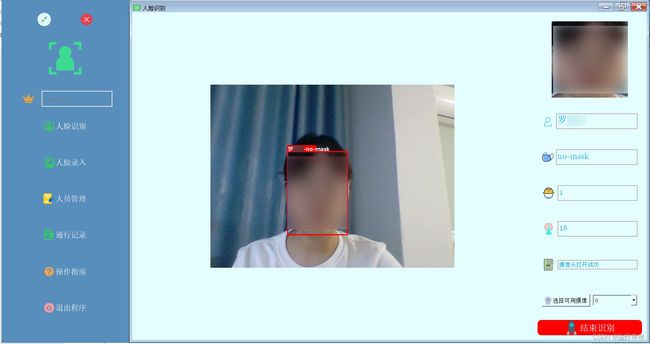

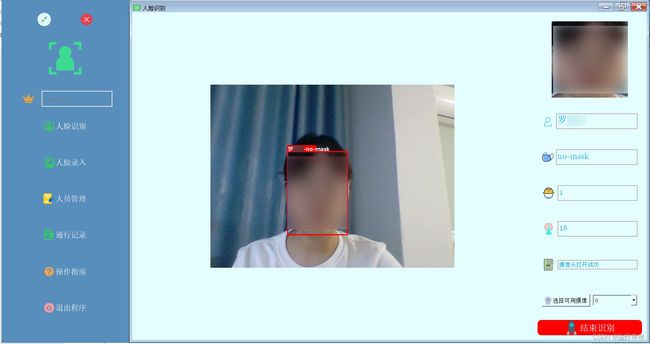

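The project went through several iterations: dlib face recognition → dlib face recognition sped up with a centroid-tracking algorithm → dlib face recognition with a PySide2 GUI (still unable to tell whether a mask was worn) → face recognition with a trained YOLOv5 model (could report whether a mask was worn, but could not recognize a masked face) → final version (detects whether a mask is worn and also recognizes faces wearing masks).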
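The speed-up from centroid tracking comes from skipping the expensive 128-D descriptor step while the number of faces stays the same: each face in the new frame simply inherits the name of the closest face centroid from the previous frame. Below is a minimal, dependency-free sketch of that matching step. The names `centroid` and `track_names` are hypothetical; in the project the same idea lives in `Client.centroid_tracker`.

```python
import math


def centroid(box):
    """Center (x, y) of a box given as (left, top, right, bottom)."""
    left, top, right, bottom = box
    return ((left + right) / 2, (top + bottom) / 2)


def track_names(last_centroids, last_names, current_boxes):
    """Give each current face the name of the nearest centroid from the previous frame.

    This is a greedy nearest-neighbour match, like centroid_tracker: two current
    faces can pick the same previous face if it is closest to both.
    """
    names = []
    for box in current_boxes:
        cx, cy = centroid(box)
        dists = [math.hypot(cx - lx, cy - ly) for lx, ly in last_centroids]
        if not dists:
            names.append("unknow")  # nothing to track from; full recognition is needed
            continue
        names.append(last_names[dists.index(min(dists))])
    return names


if __name__ == "__main__":
    last_c = [(100, 100), (400, 120)]
    last_n = ["alice", "bob"]
    # Both faces moved slightly and the detector returned them in the other order
    boxes = [(380, 90, 440, 160), (80, 70, 130, 140)]
    print(track_names(last_c, last_n, boxes))  # ['bob', 'alice']
```

Because this only compares a few 2-D points per frame, it is far cheaper than computing and comparing face descriptors; the trade-off is that a wrong name persists until the face count changes or a re-check is forced.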

- Code

```python
import datetime
import os
import time
import traceback

import cv2
import dlib
import numpy as np
from PIL import Image, ImageDraw, ImageFont
from PySide2 import QtCore, QtGui, QtWidgets
from PySide2.QtUiTools import QUiLoader
from PySide2.QtWidgets import QApplication, QMessageBox
from PyCameraList.camera_device import list_video_devices

import face as fa            # project module: YOLOv5 mask detector
import lib.dataDB as db      # project module: access-record database
import lib.share as sa       # project module: face features / distance helpers
from lib.share import SI     # project module: shared state

# dlib models: face detector, 68-point landmark predictor, 128-D face descriptor network
detector = dlib.get_frontal_face_detector()
predictor = dlib.shape_predictor('data/dlib/shape_predictor_68_face_landmarks.dat')
face_reco_model = dlib.face_recognition_model_v1("data/dlib/dlib_face_recognition_resnet_model_v1.dat")

BUTTON_STYLE = ('background-color: %s;\n'
                'color: rgb(255, 255, 255);\n'
                'font: 87 14pt "Arial Black";\n'
                'border-radius:10px;')


class Client:
    def __init__(self):
        self.ui = QUiLoader().load('ui/face_recognition.ui')

        # Face-recognition state
        self.features = SI.features          # known face descriptors
        self.features_names = SI.f_uid       # names matching self.features
        self.frame_cnt = 0
        self.last_centriod = []              # face centroids in the previous frame
        self.current_centroid = []           # face centroids in the current frame
        self.current_face_name = []
        self.last_face_name = []
        self.current_face_cnt = 0
        self.last_face_cnt = 0
        self.current_face_position = []
        self.current_face_feature = []
        self.last_current_distance = 0
        self.current_face_distance = []
        self.reclassify_cnt = 0              # frames spent with an "unknow" face on screen
        self.reclassify_interval = 20        # force full recognition after this many frames
        self.current_face = None

        # Video
        self.timer_camera = QtCore.QTimer()
        self.cap = cv2.VideoCapture()
        self.CAM_NUM = 0

        # Signals
        self.timer_camera.timeout.connect(self.show_camera)
        self.ui.select_camera.clicked.connect(self.select_camera_num)
        self.ui.begin_btn.setEnabled(False)  # enabled once a camera has been selected
        self.ui.begin_btn.clicked.connect(self.run_rec)
        self.get_camera()

    def get_camera(self):
        """List the available cameras in the combo box."""
        camera_dict = dict(list_video_devices())  # {index: device name}
        print(camera_dict)
        # Fall back to the built-in camera (index 0) if nothing is detected
        self.ui.cameraList.addItems([str(idx) for idx in camera_dict] or ["0"])

    def reset_panel(self):
        """Reset the info panel and the placeholder images."""
        self.ui.name.setText("unknow")
        self.ui.maskMark.setText("nomask")
        self.ui.personNumber.setText("0")
        self.ui.fps.setText("0")
        self.ui.cameraLabel.setPixmap(QtGui.QPixmap("img/camera_label.jpg"))
        self.ui.personX.setPixmap(QtGui.QPixmap("img/qql.jpg"))

    @staticmethod
    def crop_face(img, d):
        """Crop a dlib rectangle, clamped to the frame (boxes can extend past the edges)."""
        top, left = max(d.top(), 0), max(d.left(), 0)
        bottom, right = min(d.bottom(), img.shape[0]), min(d.right(), img.shape[1])
        return img[top:bottom, left:right]

    def show_camera(self):
        """Timer callback: grab a frame, detect faces and masks, recognize, and display."""
        try:
            start_time = time.time()
            flag, img_rd = self.cap.read()
            if flag:
                img_rd = cv2.flip(img_rd, 1)               # mirror the preview
                img_rd = cv2.resize(img_rd, (640, 480))
                image = img_rd.copy()                      # drawing copy, same size as the boxes

                # fa.detect returns [{'position': [x, y, w, h], 'class': ...}, ...]
                detections = fa.detect(img_rd)
                print("Faces:", len(detections))
                faces, mask_or_not = [], []
                for det in detections:
                    x, y, w, h = det['position']
                    faces.append(dlib.rectangle(int(x), int(y), int(x + w), int(y + h)))
                    mask_or_not.append(det['class'])
                print(">>> detection results >>>:", mask_or_not)
                print(">>> face boxes >>>:", faces)
                self.ui.personNumber.setText(str(len(detections)))

                self.last_face_cnt = self.current_face_cnt
                self.current_face_cnt = len(detections)
                self.last_face_name = self.current_face_name[:]
                self.last_centriod = self.current_centroid
                self.current_centroid = []

                if (self.current_face_cnt == self.last_face_cnt
                        and self.reclassify_cnt != self.reclassify_interval):
                    # Case 1: face count unchanged -> carry names over by centroid tracking
                    self.current_face_position = []
                    if "unknow" in self.current_face_name:
                        self.reclassify_cnt += 1
                    for d in faces:
                        self.current_face_position.append(
                            (d.left(), int(d.bottom() + (d.bottom() - d.top()) / 4)))
                        self.current_centroid.append(
                            [(d.left() + d.right()) / 2, (d.top() + d.bottom()) / 2])
                    # Several faces: re-order the names before drawing them
                    if self.current_face_cnt > 1:
                        self.centroid_tracker()
                    for k, d in enumerate(faces):
                        crop = self.crop_face(img_rd, d)
                        self.current_face = crop
                        self.display_face(crop)
                        name = self.current_face_name[k] if k < len(self.current_face_name) else ""
                        name_lab = str(name) + "-" + str(mask_or_not[k])
                        print("current_face_name:", name_lab)
                        image = self.drawRectBox(image, (d.left(), d.top(), d.right(), d.bottom()), name_lab)
                        self.ui.name.setText(str(name))
                        self.ui.maskMark.setText(str(mask_or_not[k]))
                else:
                    # Case 2: face count changed or the re-check interval was reached -> full recognition
                    self.current_face_position = []
                    self.current_face_distance = []
                    self.current_face_feature = []
                    self.current_face_name = []
                    self.reclassify_cnt = 0
                    for d in faces:
                        self.current_face_feature.append(sa.return_face_recognition_result(img_rd, d))
                        self.current_face_name.append("unknow")
                    print("self.current_face_name:", self.current_face_name)
                    for k, d in enumerate(faces):
                        self.current_face = self.crop_face(img_rd, d)
                        self.current_centroid.append(
                            [(d.left() + d.right()) / 2, (d.top() + d.bottom()) / 2])
                        self.current_face_position.append(
                            (d.left(), int(d.bottom() + (d.bottom() - d.top()) / 4)))
                        # Distance to every known face; empty database slots get a huge distance
                        self.current_face_distance = [
                            sa.return_euclidean_distance(self.current_face_feature[k], feat)
                            if str(feat[0]) != '0.0' else 99999999999
                            for feat in self.features]
                        print("self.current_face_distance:", self.current_face_distance)
                        if not self.current_face_distance:
                            continue                          # empty face database
                        min_dis = min(self.current_face_distance)
                        if min_dis < 0.4:                     # same person if the distance is below 0.4
                            self.current_face_name[k] = \
                                self.features_names[self.current_face_distance.index(min_dis)]
                            self.save_record(k)
                self.display_img(image)

            # Frame rate of this callback; the 1 ms floor avoids division by zero
            use_time = max(time.time() - start_time, 1e-3)
            self.ui.fps.setText(str(int(1.0 / use_time)))
        except Exception as e:
            traceback.print_exc()
            QMessageBox.critical(self.ui, "Face recognition error", str(e))

    def save_record(self, k):
        """Save a snapshot of face k and insert an access record into the database."""
        curr_time = datetime.datetime.now()
        curTime = curr_time.strftime('%Y-%m-%d %H:%M:%S')
        print("current_Time:", curTime)
        os.makedirs("record_img", exist_ok=True)
        # The face index keeps several faces recognized in the same second from overwriting each other
        filePath = "record_img/%s_%d.jpg" % (curr_time.strftime('%Y_%m_%d_%H_%M_%S'), k)
        print("filePath:", filePath)
        if self.current_face is not None and self.current_face.size:
            # imencode + tofile also works for non-ASCII paths, where cv2.imwrite can fail on Windows
            cv2.imencode('.jpg', self.current_face)[1].tofile(filePath)
        # Values are formatted straight into the SQL string; a parameterized query
        # would be safer if lib.dataDB supports one
        sql = "insert into record(username,time,pic) values ('%s','%s','%s')" % (
            self.current_face_name[k], curTime, filePath)
        print("Access record:", sql)
        db.insertDB(sql)

    def select_camera_num(self):
        """Camera selection handler: remember the index and reset the panel."""
        try:
            if not self.timer_camera.isActive():
                self.CAM_NUM = int(self.ui.cameraList.currentText())
                print("self.CAM_NUM:", self.CAM_NUM)
                if self.CAM_NUM == 0:
                    self.ui.log.setText("Built-in camera ready")
                else:
                    self.ui.log.setText("External camera ready")
                self.reset_panel()
                self.ui.begin_btn.setEnabled(True)
        except Exception as e:
            traceback.print_exc()
            QMessageBox.critical(self.ui, "Camera selection error", str(e))

    def drawRectBox(self, image, rect, addText):
        """Draw a face box with a filled label bar; PIL is used so that CJK text renders."""
        try:
            left, top, right, bottom = (int(round(v)) for v in rect)
            cv2.rectangle(image, (left, top), (right, bottom), (0, 0, 255), 2)
            cv2.rectangle(image, (left - 1, top - 16), (left + 75, top), (0, 0, 255), -1, cv2.LINE_AA)
            img = Image.fromarray(image)
            draw = ImageDraw.Draw(img)
            font = ImageFont.truetype("Font/platech.ttf", 14, 0)
            draw.text((left + 1, top - 16), addText, (255, 255, 255), font=font)
            return np.array(img)
        except Exception as e:
            traceback.print_exc()
            QMessageBox.critical(self.ui, "Error drawing face box", str(e))
            return image  # keep showing the undecorated frame

    def display_img(self, image):
        """Show a BGR frame in the main video area."""
        try:
            image = cv2.resize(image, (640, 480))
            show = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
            showImage = QtGui.QImage(show.data, show.shape[1], show.shape[0], show.shape[1] * 3,
                                     QtGui.QImage.Format_RGB888)
            self.ui.cameraLabel.setPixmap(QtGui.QPixmap.fromImage(showImage))
            self.ui.cameraLabel.setScaledContents(True)
            QtWidgets.QApplication.processEvents()
        except Exception as e:
            traceback.print_exc()
            QMessageBox.critical(self.ui, "Error showing video", str(e))

    def display_face(self, image):
        """Show the cropped face in the small box on the right."""
        try:
            self.ui.personX.clear()
            if image is not None and image.size:
                show = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
                showImage = QtGui.QImage(show.data, show.shape[1], show.shape[0], show.shape[1] * 3,
                                         QtGui.QImage.Format_RGB888)
                self.ui.personX.setFixedSize(200, 200)
                self.ui.personX.setPixmap(QtGui.QPixmap.fromImage(showImage))
                self.ui.personX.setScaledContents(True)
                QtWidgets.QApplication.processEvents()
        except Exception as e:
            traceback.print_exc()
            QMessageBox.critical(self.ui, "Error showing face", str(e))

    def centroid_tracker(self):
        """Give each current face the name of the nearest face centroid in the previous frame."""
        for i in range(len(self.current_centroid)):
            distance_current_person = []
            for j in range(len(self.last_centriod)):
                self.last_current_distance = sa.return_euclidean_distance(
                    self.current_centroid[i], self.last_centriod[j])
                distance_current_person.append(self.last_current_distance)
            if not distance_current_person:
                continue
            last_frame_num = distance_current_person.index(min(distance_current_person))
            self.current_face_name[i] = self.last_face_name[last_frame_num]

    def run_rec(self):
        """Start/stop button: open the camera and start the timer, or stop and reset the UI."""
        try:
            if not self.timer_camera.isActive():
                self.features, self.features_names = sa.get_all_features()
                if not self.cap.open(self.CAM_NUM):
                    QMessageBox.critical(self.ui, "Error",
                                         "Failed to open the camera. Check the connection and try again.")
                else:
                    self.ui.log.setText("Opening camera, please wait")
                    self.timer_camera.start(30)              # grab a frame about every 30 ms
                    self.ui.begin_btn.setText("Stop recognition")
                    self.ui.log.setText("Camera opened")
                    self.ui.begin_btn.setStyleSheet(BUTTON_STYLE % "red")
                    self.features = SI.features
                    self.features_names = SI.f_uid
            else:
                self.timer_camera.stop()
                self.cap.release()
                self.ui.log.setText("Camera closed")
                self.ui.cameraLabel.clear()
                self.ui.personX.clear()
                self.reset_panel()
                self.ui.begin_btn.setText("Start recognition")
                self.ui.begin_btn.setStyleSheet(BUTTON_STYLE % "rgb(104, 164, 253)")
                QtWidgets.QApplication.processEvents()
        except Exception as e:
            traceback.print_exc()
            QMessageBox.critical(self.ui, "Error opening camera", str(e))


if __name__ == "__main__":
    app = QApplication([])
    SI.loginWin = Client()
    SI.loginWin.ui.show()
    app.exec_()
```
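When the face count changes, `show_camera` identifies each face by comparing its 128-D dlib descriptor with every stored descriptor, skipping entries whose first value is 0.0, and accepts the closest one only if the Euclidean distance is below 0.4. `lib.share` is not shown, so the following is a stand-alone sketch of that rule. The function `match_face` is hypothetical, and plain Euclidean distance is assumed to be what `return_euclidean_distance` computes.

```python
import math

UNKNOWN = "unknow"   # label the project uses for unmatched faces
THRESHOLD = 0.4      # distance threshold used in show_camera


def match_face(feature, known_features, known_names, threshold=THRESHOLD):
    """Return (name, distance) for the nearest known descriptor.

    Entries whose first value is 0.0 are treated as empty slots and skipped.
    If no entry is closer than `threshold`, the name is UNKNOWN.
    """
    best_name, best_dist = UNKNOWN, math.inf
    for known, name in zip(known_features, known_names):
        if known[0] == 0.0:
            continue
        dist = math.dist(feature, known)  # Euclidean distance (Python 3.8+)
        if dist < best_dist:
            best_name, best_dist = name, dist
    if best_dist < threshold:
        return best_name, best_dist
    return UNKNOWN, best_dist


if __name__ == "__main__":
    # Toy 3-D vectors; real dlib descriptors have 128 values
    db_features = [[0.1, 0.2, 0.3], [0.0, 0.0, 0.0], [0.9, 0.8, 0.7]]
    db_names = ["alice", "empty", "bob"]
    print(match_face([0.12, 0.21, 0.29], db_features, db_names)[0])  # alice
    print(match_face([5.0, 5.0, 5.0], db_features, db_names)[0])     # unknow
```

A lower threshold reduces false matches at the cost of more "unknow" results; 0.4 is the value this project uses.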

- Code notes

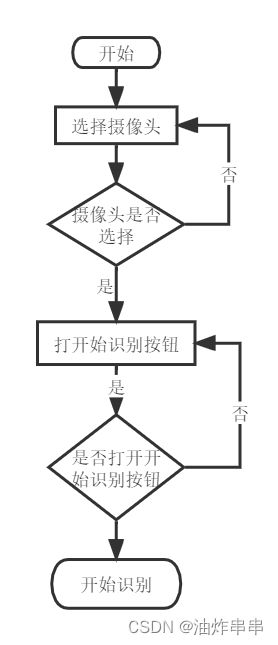

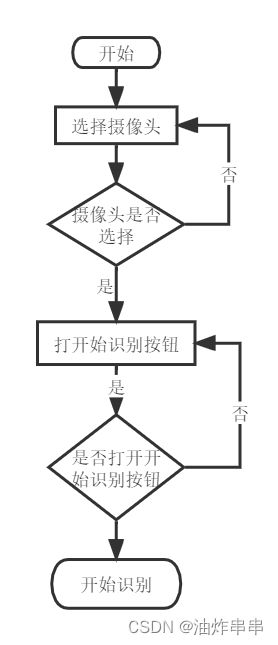

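The code detects the available cameras, so you can choose between the built-in camera and an external one. If you don't need this, remove `get_camera`, `select_camera_num`, and the lines in `__init__` that call or connect them, and set `self.CAM_NUM` to 0. Because `begin_btn` starts out disabled and is only enabled by `select_camera_num`, also enable it directly with `self.ui.begin_btn.setEnabled(True)`.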
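If PyCameraList is not available, a rough alternative is to probe camera indices with OpenCV and keep the ones that open. This is a sketch: `probe_cameras` and the default `max_index` of 5 are arbitrary choices, and the `opener` parameter exists only so the function can be exercised without real hardware (it defaults to `cv2.VideoCapture`).

```python
def probe_cameras(max_index=5, opener=None):
    """Return the camera indices in [0, max_index) that can be opened."""
    if opener is None:
        import cv2  # imported lazily so a fake opener can be used without OpenCV
        opener = cv2.VideoCapture
    found = []
    for idx in range(max_index):
        cap = opener(idx)
        try:
            if cap.isOpened():
                found.append(idx)
        finally:
            cap.release()  # always free the device handle
    return found
```

In `get_camera`, the combo box could then be filled with `[str(i) for i in probe_cameras()] or ["0"]`. Probing is slower than querying device names, and OpenCV may print warnings for indices that do not exist.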

- Full project source

Full project source, link 1

Full project source, link 2