1.30. Flink SQL example: writing Kafka data to Hive

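1.30.1.1. Scenario, environment, and configuration preparation

1.30.1.2. Example code

1.30.1.2.1. Writing the pom.xml file

1.30.1.2.2. Writing the log4j2.properties configuration file under the Maven project's resources directory

1.30.1.2.3. Writing the logback.xml configuration file under the Maven project's resources directory

1.30.1.2.4. Writing the project-config-test.properties configuration file under the Maven project's resources directory

1.30.1.2.5. Writing com.xxxxx.log.utils.PropertiesUtils

1.30.1.2.6. Writing com.xxxxx.log.flink.handler.ExceptionLogHandlerBySql

1.30.1.2.7. Run commands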

1.30.1.1. Scenario, environment, and configuration preparation

Scenario: use Flink SQL to write Kafka data into Hive in real time.

(1) Environment

hadoop 3.1.1.3.1.4-315

hive 3.1.0.3.1.4-315

flink 1.12.1

前置准备:

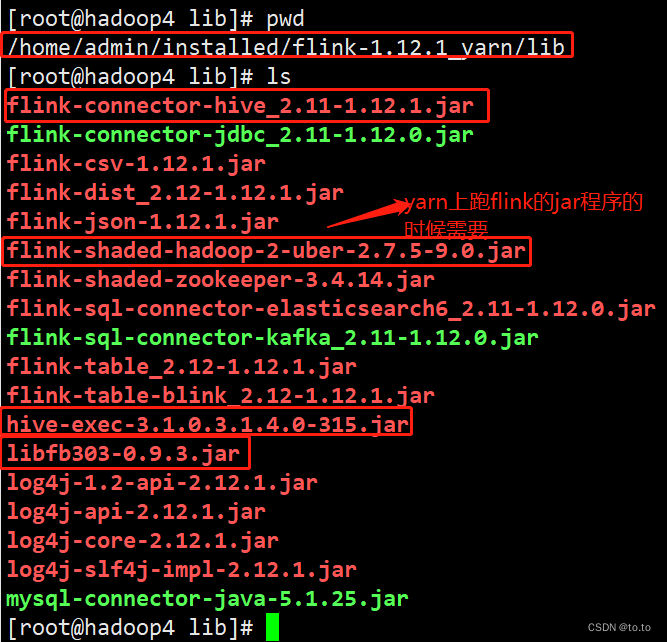

将以下几个包添加到$FLINK_HOME/lib,其中hive-exec-3.1.0.3.1.4.0-315.jar和libfb303-0.9.3.jar从/usr/hdp/current/hive-client/lib中拷贝

1.30.1.2. Example code

1.30.1.2.1. Writing the pom.xml file

<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <groupId>com.xxxxx.zczl</groupId>
    <artifactId>flink-log-handler</artifactId>
    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <maven.test.skip>true</maven.test.skip>
        <maven.javadoc.skip>true</maven.javadoc.skip>
        <maven.compiler.source>1.8</maven.compiler.source>
        <maven.compiler.target>1.8</maven.compiler.target>
        <slf4j.version>1.7.25</slf4j.version>
        <fastjson.version>1.2.73</fastjson.version>
        <joda-time.version>2.9.4</joda-time.version>
        <flink.version>1.12.1</flink.version>
        <scala.binary.version>2.11</scala.binary.version>
        <hive.version>3.1.2</hive.version>
        <hadoop.version>3.1.4</hadoop.version>
        <mysql.connector.java>8.0.22</mysql.connector.java>
        <fileName>flink-log-handler</fileName>
    </properties>
    <version>1.0-SNAPSHOT</version>
    <repositories>
        <repository>
            <id>cloudera</id>
            <layout>default</layout>
            <url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
        </repository>
    </repositories>
    <dependencies>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-java</artifactId>
            <version>${flink.version}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-streaming-java_${scala.binary.version}</artifactId>
            <version>${flink.version}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-connector-kafka_${scala.binary.version}</artifactId>
            <version>${flink.version}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-shaded-hadoop-2-uber</artifactId>
            <version>2.7.5-9.0</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-sequence-file</artifactId>
            <version>${flink.version}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-table-api-java-bridge_${scala.binary.version}</artifactId>
            <version>${flink.version}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-table-planner-blink_${scala.binary.version}</artifactId>
            <version>${flink.version}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-clients_${scala.binary.version}</artifactId>
            <version>${flink.version}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-json</artifactId>
            <version>${flink.version}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <version>${mysql.connector.java}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-connector-hive_${scala.binary.version}</artifactId>
            <version>${flink.version}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-streaming-scala_${scala.binary.version}</artifactId>
            <version>${flink.version}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-table-common</artifactId>
            <version>${flink.version}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>${hadoop.version}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>${hadoop.version}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.hive</groupId>
            <artifactId>hive-jdbc</artifactId>
            <version>${hive.version}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.hive</groupId>
            <artifactId>hive-metastore</artifactId>
            <version>${hive.version}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.hive</groupId>
            <artifactId>hive-exec</artifactId>
            <version>${hive.version}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>joda-time</groupId>
            <artifactId>joda-time</artifactId>
            <version>${joda-time.version}</version>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>fastjson</artifactId>
            <version>${fastjson.version}</version>
        </dependency>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-api</artifactId>
            <version>${slf4j.version}</version>
        </dependency>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-log4j12</artifactId>
            <version>${slf4j.version}</version>
            <scope>test</scope>
        </dependency>
    </dependencies>
    <build>
        <finalName>${fileName}</finalName>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <version>3.6.0</version>
                <configuration>
                    <source>${maven.compiler.source}</source>
                    <target>${maven.compiler.target}</target>
                    <encoding>${project.build.sourceEncoding}</encoding>
                    <compilerVersion>${maven.compiler.source}</compilerVersion>
                    <showDeprecation>true</showDeprecation>
                    <showWarnings>true</showWarnings>
                </configuration>
            </plugin>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-surefire-plugin</artifactId>
                <version>2.12.4</version>
                <configuration>
                    <skipTests>${maven.test.skip}</skipTests>
                </configuration>
            </plugin>
            <plugin>
                <groupId>org.apache.rat</groupId>
                <artifactId>apache-rat-plugin</artifactId>
                <version>0.12</version>
                <configuration>
                    <excludes>
                        <exclude>README.md</exclude>
                    </excludes>
                </configuration>
            </plugin>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-javadoc-plugin</artifactId>
                <version>2.10.4</version>
                <configuration>
                    <aggregate>true</aggregate>
                    <reportOutputDirectory>javadocs</reportOutputDirectory>
                    <locale>en</locale>
                </configuration>
            </plugin>
            <plugin>
                <groupId>net.alchim31.maven</groupId>
                <artifactId>scala-maven-plugin</artifactId>
                <version>3.1.6</version>
                <configuration>
                    <scalaCompatVersion>2.11</scalaCompatVersion>
                    <scalaVersion>2.11.12</scalaVersion>
                    <encoding>UTF-8</encoding>
                </configuration>
                <executions>
                    <execution>
                        <id>compile-scala</id>
                        <phase>compile</phase>
                        <goals>
                            <goal>add-source</goal>
                            <goal>compile</goal>
                        </goals>
                    </execution>
                    <execution>
                        <id>test-compile-scala</id>
                        <phase>test-compile</phase>
                        <goals>
                            <goal>add-source</goal>
                            <goal>testCompile</goal>
                        </goals>
                    </execution>
                </executions>
            </plugin>
            <plugin>
                <artifactId>maven-assembly-plugin</artifactId>
                <configuration>
                    <descriptorRefs>
                        <descriptorRef>jar-with-dependencies</descriptorRef>
                    </descriptorRefs>
                </configuration>
                <executions>
                    <execution>
                        <id>make-assembly</id>
                        <phase>package</phase>
                        <goals>
                            <goal>single</goal>
                        </goals>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>
</project>

1.30.1.2.2. Writing the log4j2.properties configuration file under the Maven project's resources directory

The contents are as follows:

rootLogger.level = ERROR
rootLogger.appenderRef.console.ref = ConsoleAppender

appender.console.name = ConsoleAppender
appender.console.type = CONSOLE
appender.console.layout.type = PatternLayout
appender.console.layout.pattern = %d{yyyy-MM-dd HH:mm:ss,SSS} %-5p %-60c %x - %m%n

1.30.1.2.3. Writing the logback.xml configuration file under the Maven project's resources directory

<configuration>
    <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
            <pattern>%d{HH:mm:ss.SSS} [%thread] %-5level %logger{60} %X{sourceThread} - %msg%n</pattern>
        </encoder>
    </appender>
    <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>log/generator.%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>7</maxHistory>
        </rollingPolicy>
        <encoder>
            <pattern>%d{HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n</pattern>
        </encoder>
    </appender>
    <root level="ERROR">
        <appender-ref ref="FILE" />
        <appender-ref ref="STDOUT" />
    </root>
</configuration>

1.30.1.2.4. Writing the project-config-test.properties configuration file under the Maven project's resources directory

# Test environment

#################################### Business-side Kafka configuration: start ###########################################
kafka.bootstrap.servers=xxx.xxx.xxx.xxx:9094

# Consumer configuration
kafka.consumer.group.id=logkit
kafka.consumer.enableAutoCommit=true
kafka.consumer.autoCommitInterval=1000
kafka.consumer.key.deserializer=org.apache.kafka.common.serialization.StringDeserializer
kafka.consumer.value.deserializer=org.apache.kafka.common.serialization.StringDeserializer

# Topics
kafka.exception.topic=lk_exception_log_statistics
kafka.log.topic=lk_log_info_statistics

#################################### Flink configuration: start ###########################################
# Trigger a checkpoint every 5 s (value in milliseconds)
flink.checkpoint.interval=5000
# Keep at least 1000 ms between checkpoints (TODO: this can be commented out to speed up checkpointing)
flink.checkpoint.minPauseBetweenCheckpoints=1000
# A checkpoint must complete within 1 min or it is discarded (checkpoint timeout, in milliseconds)
flink.checkpoint.checkpointTimeout=60000
# Maximum number of checkpoints allowed to run at the same time
flink.checkpoint.maxConcurrentCheckpoints=3
# Number of restart attempts
flink.fixedDelayRestart.times=3
# Delay between restart attempts (the job code interprets this value as minutes)
flink.fixedDelayRestart.interval=5

#################################### Source and sink
# Kafka source parallelism
flink.kafka.source.parallelism=1
# Hive sink parallelism
flink.hive.sink.parallelism=1

#hive.conf=/usr/hdp/current/hive-client/conf
hive.conf=/usr/hdp/3.1.4.0-315/hive/conf/
hive.zhoushan.database=xxxx_158
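
The values in this file carry no units: the checkpoint settings are passed to Flink as milliseconds, while the job in 1.30.1.2.6 wraps flink.fixedDelayRestart.interval in TimeUnit.MINUTES. The following is a minimal sketch, not part of the original project, of how the units could be made explicit with java.time.Duration and how a missing key could be reported by name. The class ConfigUnitsSketch and its helper methods are hypothetical names introduced only for illustration.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.time.Duration;
import java.util.Properties;

// Hypothetical helper, for illustration only; not part of the original project.
final class ConfigUnitsSketch {

    /** Parses properties text; wraps the checked IOException so callers stay simple. */
    static Properties parse(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    /** Returns the trimmed value, or fails with a message that names the missing key. */
    static String required(Properties props, String key) {
        String value = props.getProperty(key);
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalStateException("Missing required property: " + key);
        }
        return value.trim();
    }

    /** Reads a key whose value is a number of milliseconds. */
    static Duration millis(Properties props, String key) {
        return Duration.ofMillis(Long.parseLong(required(props, key)));
    }

    /** Reads a key whose value is a number of minutes. */
    static Duration minutes(Properties props, String key) {
        return Duration.ofMinutes(Long.parseLong(required(props, key)));
    }

    public static void main(String[] args) {
        Properties props = parse(
                "flink.checkpoint.interval=5000\n"
              + "flink.checkpoint.checkpointTimeout=60000\n"
              + "flink.fixedDelayRestart.interval=5\n");
        System.out.println("checkpoint interval: " + millis(props, "flink.checkpoint.interval"));
        System.out.println("checkpoint timeout:  " + millis(props, "flink.checkpoint.checkpointTimeout"));
        System.out.println("restart delay:       " + minutes(props, "flink.fixedDelayRestart.interval"));
    }
}
```

Carrying a Duration instead of a bare Integer keeps the unit attached to the value until it is handed to Flink, which makes a mismatch such as seconds versus minutes easier to spot.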

1.30.1.2.5. Writing com.xxxxx.log.utils.PropertiesUtils

package com.xxxxx.log.utils;

import java.io.IOException;
import java.io.InputStream;
import java.util.Properties;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public final class PropertiesUtils {

    private static final Logger logger = LoggerFactory.getLogger(PropertiesUtils.class);

    private static PropertiesUtils instance = null;

    /** Checkpoint interval, in milliseconds. */
    private Integer flinkCheckpointsInterval = null;
    /** Minimum pause between checkpoints, in milliseconds. */
    private Integer flinkMinPauseBetweenCheckpoints = null;
    /** Checkpoint timeout, in milliseconds; a checkpoint that does not finish in time is discarded. */
    private Integer flinkCheckpointTimeout = null;
    /** Maximum number of concurrent checkpoints. */
    private Integer flinkMaxConcurrentCheckpoints = null;
    /** Number of restart attempts. */
    private Integer flinkFixedDelayRestartTimes = null;
    /** Delay between restart attempts (the job interprets it as minutes). */
    private Integer flinkFixedDelayRestartInterval = null;
    /** Kafka source parallelism. */
    private Integer kafkaSourceParallelism = null;
    /** Hive sink parallelism. */
    private Integer hiveSinkParallelism = null;
    /** Kafka bootstrap servers. */
    private String kafkServer = null;
    /** Consumer group id. */
    private String groupId = null;
    private Boolean enableAutoCommit = null;
    private Long autoCommitInterval = null;
    private String keyDeserializer = null;
    private String valueDeserializer = null;
    private String exceptionTopic = null;
    private String logTopic = null;
    private String hiveConf = null;
    private String database = null;

    /**
     * Private constructor: loads project-config-test.properties from the classpath.
     */
    private PropertiesUtils() {
        InputStream in = null;
        try {
            // Read the properties file through the class loader
            in = PropertiesUtils.class.getClassLoader().getResourceAsStream("project-config-test.properties");
            // in = PropertiesUtils.class.getClassLoader().getResourceAsStream("test-win10.properties");
            // in = PropertiesUtils.class.getClassLoader().getResourceAsStream("test-linux.properties");
            Properties prop = new Properties();
            prop.load(in);

            // Flink configuration
            flinkCheckpointsInterval = Integer.parseInt(prop.getProperty("flink.checkpoint.interval").trim());
            flinkMinPauseBetweenCheckpoints =
                    Integer.parseInt(prop.getProperty("flink.checkpoint.minPauseBetweenCheckpoints").trim());
            flinkCheckpointTimeout = Integer.parseInt(prop.getProperty("flink.checkpoint.checkpointTimeout").trim());
            flinkMaxConcurrentCheckpoints =
                    Integer.parseInt(prop.getProperty("flink.checkpoint.maxConcurrentCheckpoints").trim());
            flinkFixedDelayRestartTimes = Integer.parseInt(prop.getProperty("flink.fixedDelayRestart.times").trim());
            flinkFixedDelayRestartInterval =
                    Integer.parseInt(prop.getProperty("flink.fixedDelayRestart.interval").trim());
            kafkaSourceParallelism = Integer.parseInt(prop.getProperty("flink.kafka.source.parallelism").trim());
            hiveSinkParallelism = Integer.parseInt(prop.getProperty("flink.hive.sink.parallelism").trim());

            // Kafka configuration
            kafkServer = prop.getProperty("kafka.bootstrap.servers").trim();
            groupId = prop.getProperty("kafka.consumer.group.id").trim();
            enableAutoCommit = Boolean.valueOf(prop.getProperty("kafka.consumer.enableAutoCommit").trim());
            autoCommitInterval = Long.valueOf(prop.getProperty("kafka.consumer.autoCommitInterval").trim());
            keyDeserializer = prop.getProperty("kafka.consumer.key.deserializer").trim();
            valueDeserializer = prop.getProperty("kafka.consumer.value.deserializer").trim();
            exceptionTopic = prop.getProperty("kafka.exception.topic").trim();
            logTopic = prop.getProperty("kafka.log.topic").trim();

            // Hive configuration
            hiveConf = prop.getProperty("hive.conf").trim();
            database = prop.getProperty("hive.zhoushan.database").trim();
        } catch (Exception e) {
            // A missing file or key surfaces here (for example as a NullPointerException)
            throw new ExceptionInInitializerError(e);
        } finally {
            try {
                if (in != null) {
                    in.close();
                }
            } catch (IOException e) {
                logger.error("Failed to close the properties stream", e);
            }
        }
    }

    /** Returns the lazily created singleton; synchronized so concurrent callers share one instance. */
    public static synchronized PropertiesUtils getInstance() {
        if (instance == null) {
            instance = new PropertiesUtils();
        }
        return instance;
    }

    public Integer getFlinkCheckpointsInterval() {
        return flinkCheckpointsInterval;
    }

    public Integer getFlinkMinPauseBetweenCheckpoints() {
        return flinkMinPauseBetweenCheckpoints;
    }

    public Integer getFlinkCheckpointTimeout() {
        return flinkCheckpointTimeout;
    }

    public Integer getFlinkMaxConcurrentCheckpoints() {
        return flinkMaxConcurrentCheckpoints;
    }

    public Integer getFlinkFixedDelayRestartTimes() {
        return flinkFixedDelayRestartTimes;
    }

    public Integer getFlinkFixedDelayRestartInterval() {
        return flinkFixedDelayRestartInterval;
    }

    public Integer getKafkaSourceParallelism() {
        return kafkaSourceParallelism;
    }

    public Integer getHiveSinkParallelism() {
        return hiveSinkParallelism;
    }

    public String getKafkServer() {
        return kafkServer;
    }

    public String getGroupId() {
        return groupId;
    }

    public Boolean getEnableAutoCommit() {
        return enableAutoCommit;
    }

    public Long getAutoCommitInterval() {
        return autoCommitInterval;
    }

    public String getKeyDeserializer() {
        return keyDeserializer;
    }

    public String getValueDeserializer() {
        return valueDeserializer;
    }

    public String getExceptionTopic() {
        return exceptionTopic;
    }

    public String getLogTopic() {
        return logTopic;
    }

    public String getHiveConf() {
        return hiveConf;
    }

    public String getDatabase() {
        return database;
    }
}
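
A lazily created singleton such as PropertiesUtils needs some guard if getInstance() can be reached from more than one thread. One common alternative to locking is the initialization-on-demand holder idiom, where the JVM's class-initialization rules guarantee that the instance is built exactly once. The sketch below shows that idiom in isolation; LazyConfigSketch, its counter, and its single field are hypothetical stand-ins and are not the author's class.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in for a configuration singleton; for illustration only.
final class LazyConfigSketch {

    /** Counts constructor calls so the single-initialization property can be observed. */
    static final AtomicInteger CONSTRUCTIONS = new AtomicInteger();

    private final String source;

    private LazyConfigSketch() {
        CONSTRUCTIONS.incrementAndGet();
        // A real implementation would load and parse the properties file here.
        this.source = "project-config-test.properties";
    }

    /** The nested class is initialized only on first access, and only once. */
    private static final class Holder {
        static final LazyConfigSketch INSTANCE = new LazyConfigSketch();
    }

    static LazyConfigSketch getInstance() {
        return Holder.INSTANCE;
    }

    String source() {
        return source;
    }

    public static void main(String[] args) {
        LazyConfigSketch first = LazyConfigSketch.getInstance();
        LazyConfigSketch second = LazyConfigSketch.getInstance();
        System.out.println("same instance: " + (first == second));
        System.out.println("constructor calls: " + CONSTRUCTIONS.get());
    }
}
```

The design trade-off is that the holder idiom needs no lock on the read path, whereas a synchronized getInstance() is simpler to read but takes the lock on every call.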

1.30.1.2.6. Writing com.xxxxx.log.flink.handler.ExceptionLogHandlerBySql

The contents are as follows:

package com.xxxxx.log.flink.handler;

import java.util.concurrent.TimeUnit;

import org.apache.flink.api.common.restartstrategy.RestartStrategies;
import org.apache.flink.api.common.time.Time;
import org.apache.flink.streaming.api.CheckpointingMode;
import org.apache.flink.streaming.api.TimeCharacteristic;
import org.apache.flink.streaming.api.environment.CheckpointConfig;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.table.api.EnvironmentSettings;
import org.apache.flink.table.api.SqlDialect;
import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;
import org.apache.flink.table.catalog.hive.HiveCatalog;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import com.xxxxx.log.utils.PropertiesUtils;

public class ExceptionLogHandlerBySql {

    private static final Logger logger = LoggerFactory.getLogger(ExceptionLogHandlerBySql.class);

    public static void main(String[] args) {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        // Event time (already the default since Flink 1.12, where this setter is deprecated)
        env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);
        PropertiesUtils instance = PropertiesUtils.getInstance();

        // Restart strategy: fixed delay (the configured interval is interpreted as minutes)
        env.setRestartStrategy(RestartStrategies.fixedDelayRestart(instance.getFlinkFixedDelayRestartTimes(),
                Time.of(instance.getFlinkFixedDelayRestartInterval(), TimeUnit.MINUTES)));
        // Interval at which checkpoints are triggered
        env.enableCheckpointing(instance.getFlinkCheckpointsInterval());
        // Use exactly-once mode (this is the default)
        env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);
        // Minimum pause between checkpoints (currently disabled)
        // env.getCheckpointConfig().setMinPauseBetweenCheckpoints(instance.getFlinkMinPauseBetweenCheckpoints());
        // A checkpoint must complete within the timeout or it is discarded
        env.getCheckpointConfig().setCheckpointTimeout(instance.getFlinkCheckpointTimeout());
        // Maximum number of checkpoints that may run at the same time
        env.getCheckpointConfig().setMaxConcurrentCheckpoints(instance.getFlinkMaxConcurrentCheckpoints());
        // Retain checkpoint data after the job is cancelled so it can be restored from a chosen checkpoint
        env.getCheckpointConfig()
                .enableExternalizedCheckpoints(CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);
        env.setParallelism(1);

        // Flink Table API
        EnvironmentSettings settings = EnvironmentSettings.newInstance().inStreamingMode().useBlinkPlanner().build();
        StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env, settings);

        // Build the Kafka source with the DEFAULT dialect
        tableEnv.getConfig().setSqlDialect(SqlDialect.DEFAULT);
        String sourceDrop = "drop table if exists kafka_exception";
        String sourceTable = "CREATE TABLE kafka_exception ("
                + " serviceId STRING,"
                + " serverName STRING,"
                + " serverIp STRING,"
                + " title STRING,"
                + " operationPath STRING,"
                + " url STRING,"
                + " stack STRING,"
                + " exceptionName STRING,"
                + " exceptionInfo STRING,"
                + " operationUser STRING,"
                + " operationIp STRING,"
                + " orgId BIGINT,"
                + " methodClass STRING,"
                + " fileName STRING,"
                + " methodName STRING,"
                + " operationData STRING,"
                + " occurrenceTime BIGINT"
                + ") WITH ("
                + " 'connector' = 'kafka',"
                + " 'topic' = '" + instance.getExceptionTopic() + "',"
                + " 'properties.bootstrap.servers' = '" + instance.getKafkServer() + "',"
                + " 'properties.group.id' = '" + instance.getGroupId() + "',"
                + " 'scan.startup.mode' = 'earliest-offset',"
                + " 'format' = 'json',"
                + " 'json.fail-on-missing-field' = 'false',"
                + " 'json.ignore-parse-errors' = 'true'"
                + " )";
        System.out.println("================= source SQL: start ========================");
        tableEnv.executeSql(sourceDrop);
        tableEnv.executeSql(sourceTable);
        System.out.println(sourceTable);
        System.out.println("================= source SQL: end ========================");

        // Build the Hive catalog (the catalog name is arbitrary)
        String name = "mycatalog";
        String defaultDatabase = instance.getDatabase();
        String hiveConfDir = instance.getHiveConf();
        HiveCatalog hive = new HiveCatalog(name, defaultDatabase, hiveConfDir);
        tableEnv.registerCatalog(name, hive);
        tableEnv.useCatalog(name);

        // Hive sink, created with the HIVE dialect
        tableEnv.getConfig().setSqlDialect(SqlDialect.HIVE);
        tableEnv.useDatabase(defaultDatabase);
        String sinkDrop = "drop table if exists hive_exception";
        String sinkTable = "CREATE TABLE hive_exception ("
                + " service_id STRING,"
                + " server_name STRING,"
                + " server_ip STRING,"
                + " title STRING,"
                + " operation_path STRING,"
                + " url STRING,"
                + " stack STRING,"
                + " exception_name STRING,"
                + " exception_info STRING,"
                + " operation_user STRING,"
                + " operation_ip STRING,"
                + " org_id BIGINT,"
                + " method_class STRING,"
                + " file_name STRING,"
                + " method_name STRING,"
                + " operation_data STRING,"
                + " occurrence_time STRING"
                + " ) PARTITIONED BY (dt STRING) STORED AS parquet TBLPROPERTIES ("
                + " 'partition.time-extractor.timestamp-pattern'='$dt 00:00:00',"
                + " 'sink.partition-commit.trigger'='process-time',"
                + " 'sink.partition-commit.delay'='0 s',"
                + " 'sink.partition-commit.policy.kind'='metastore,success-file'"
                + ")";
        System.out.println("================= sink SQL: start ========================");
        tableEnv.executeSql(sinkDrop);
        tableEnv.executeSql(sinkTable);
        System.out.println(sinkTable);
        System.out.println("================= sink SQL: end ========================");

        // kafka_exception was created before the Hive catalog became current, so it lives in
        // Flink's built-in default_catalog.default_database and is referenced by its full name.
        // The last two expressions convert epoch milliseconds to the timestamp string and the dt partition.
        String sql = "INSERT INTO TABLE hive_exception"
                + " SELECT serviceId, serverName, serverIp, title, operationPath, url, stack, exceptionName, exceptionInfo, operationUser, operationIp,"
                + " orgId, methodClass, fileName, methodName, operationData, from_unixtime(cast(occurrenceTime/1000 as bigint),'yyyy-MM-dd HH:mm:ss'), from_unixtime(cast(occurrenceTime/1000 as bigint),'yyyy-MM-dd')"
                + " FROM default_catalog.default_database.kafka_exception";
        tableEnv.executeSql(sql);
    }
}
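
The INSERT statement derives two strings from occurrenceTime: the formatted timestamp stored in occurrence_time and the day used as the dt partition. The plain-Java sketch below roughly mirrors that arithmetic with java.time so the expected values can be checked outside Flink. It is only an illustration: OccurrenceTimeSketch is a hypothetical class, and the time zone is passed explicitly as an assumption, since the result of the SQL function depends on the time zone the job runs with.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;

// Hypothetical helper that mimics the two from_unixtime(...) expressions; for illustration only.
final class OccurrenceTimeSketch {

    private static final DateTimeFormatter TIMESTAMP = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");
    private static final DateTimeFormatter DAY = DateTimeFormatter.ofPattern("yyyy-MM-dd");

    /** Formats epoch milliseconds as 'yyyy-MM-dd HH:mm:ss' after truncating to whole seconds. */
    static String occurrenceTime(long epochMillis, ZoneId zone) {
        long epochSeconds = epochMillis / 1000; // same truncation as cast(occurrenceTime/1000 as bigint)
        return TIMESTAMP.format(Instant.ofEpochSecond(epochSeconds).atZone(zone));
    }

    /** Formats epoch milliseconds as the 'yyyy-MM-dd' partition value. */
    static String dt(long epochMillis, ZoneId zone) {
        long epochSeconds = epochMillis / 1000;
        return DAY.format(Instant.ofEpochSecond(epochSeconds).atZone(zone));
    }

    public static void main(String[] args) {
        long sample = 1620782600000L;          // an arbitrary epoch-millisecond value
        ZoneId zone = ZoneId.of("Asia/Shanghai"); // assumed zone, adjust to the cluster's setting
        System.out.println(occurrenceTime(sample, zone));
        System.out.println(dt(sample, zone));
    }
}
```

Because dt is computed from the event's own timestamp, a late-arriving record is written to the partition of the day it occurred on rather than the day it was processed.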

1.30.1.2.7. Run commands

Option 1: standalone mode

$FLINK_HOME/bin/flink run \
    -c com.xxxxx.log.flink.handler.ExceptionLogHandlerBySql \
    /root/cf_temp/flink-log-handler.jar

Option 2: yarn-cluster mode

$FLINK_HOME/bin/flink run -d -m yarn-cluster \
    -yqu real_time_processing_queue \
    -p 1 -yjm 1024m -ytm 1024m -ynm ExceptionLogHandler \
    -c com.xxxxx.log.flink.handler.ExceptionLogHandlerBySql \
    /root/cf_temp/flink-log-handler.jar

Option 3: yarn-session mode (a session must first be started on YARN to obtain its application_id, so this option has not been tested)

$FLINK_HOME/bin/yarn-session.sh -d -nm yarnsession01 -n 2 -s 3 -jm 1024m -tm 2048m

$FLINK_HOME/bin/flink run -d -yid application_1603447441975_0034 \
    -c com.xxxxx.log.flink.handler.ExceptionLogHandlerBySql \
    /root/cf_temp/flink-log-handler.jar ExceptionLogSession

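JSON message format:

{"serviceId":"test000","serverName":"xxx","serverIp":"xxx.xxx.xxx.xxx","title":"xxxx","operationPath":"/usr/currunt","url":"http://baidu.com","stack":"xxx","exceptionName":"xxxx","exceptionInfo":"xxxx","operationUser":"chenfeng","operationIp":"xxx.xxx.xxx.xxx","orgId":777777,"methodClass":"com.xxxxx.Test","fileName":"test.txt","methodName":"findname","operationData":"name=kk","occurrenceTime":"2021-05-12 09:23:20"}

Note that the source table declares occurrenceTime as BIGINT and the INSERT statement divides it by 1000, so the job expects epoch milliseconds in that field; the formatted string in this sample does not match that type.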
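
Since the Kafka source table declares occurrenceTime as BIGINT, a test message for this job would carry a numeric epoch-millisecond value in that field. The sketch below builds such a message with only the JDK. SampleMessageSketch and its methods are hypothetical, the field values are placeholders, and only a few of the fields are filled in, which the source table tolerates because 'json.fail-on-missing-field' is 'false'. The escaping handles quotes and backslashes only, so it is not a general JSON writer.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical test-data builder; for illustration only.
final class SampleMessageSketch {

    /** Wraps a string in double quotes, escaping backslashes and quotes (control characters are not handled). */
    static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    /** Serializes a flat map: numbers are written bare, everything else as a quoted string. */
    static String toJson(Map<String, Object> fields) {
        StringBuilder sb = new StringBuilder("{");
        boolean first = true;
        for (Map.Entry<String, Object> e : fields.entrySet()) {
            if (!first) {
                sb.append(',');
            }
            first = false;
            sb.append(quote(e.getKey())).append(':');
            Object value = e.getValue();
            sb.append(value instanceof Number ? value.toString() : quote(String.valueOf(value)));
        }
        return sb.append('}').toString();
    }

    /** Builds a minimal message whose occurrenceTime is a numeric epoch-millisecond value. */
    static String sample(long occurrenceTimeMillis) {
        Map<String, Object> fields = new LinkedHashMap<>();
        fields.put("serviceId", "test000");
        fields.put("orgId", 777777L);
        fields.put("occurrenceTime", occurrenceTimeMillis);
        return toJson(fields);
    }

    public static void main(String[] args) {
        // Uses the current time so the record lands in today's dt partition
        System.out.println(sample(System.currentTimeMillis()));
    }
}
```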