Deploying your own YOLOv5-Lite-s model on a Raspberry Pi with ncnn

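Contents

- Deploying your own YOLOv5-Lite-s model on a Raspberry Pi with ncnn
  - Preface
  - I. Training your own YOLOv5-Lite-s model on Windows 10
    - 1 Download the YOLOv5-Lite source code
    - 2 Set up the YOLOv5-Lite environment
    - 3 Adjust the parameters and train YOLOv5-Lite-s
      - 3.1 Edit the .yaml file
      - 3.2 Change the input size to [320,320]
      - 3.3 Run the training
      - 3.4 Generate the anchor boxes
      - 3.5 Possible bugs, such as running out of GPU memory: search CSDN for them (stick with it and be patient)
      - 3.6 A script that converts XML annotations to the txt files YOLOv5-Lite needs for training
  - II. Exporting and converting the model
    - 1. Export and simplify last.onnx
  - III. Raspberry Pi dependencies and ncnn compilation
    - 1. Install the Raspberry Pi dependencies
    - 2. Set up and compile ncnn
  - IV. Details of deploying the Lite model with ncnn on the Raspberry Pi
    - 1. Convert lastsim.onnx to fp16 last.param and last.bin files
    - 2. Add yolov5lite.cpp to the ncnn/examples folder
    - 3. Modify yolov5lite.cpp
      - 3.1 Modify class_names
      - 3.2 Modify the anchor values
      - 3.3 Modify lastsim.param
      - 3.4 Modify ex.extract in yolov5lite.cpp
      - 3.5 Change the paths in yolov5lite.cpp
      - 3.6 Modify CMakeLists.txt
  - V. Test results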
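Preface

These are a beginner's notes on deploying to a Raspberry Pi. I ran into quite a few pitfalls along the way.

I. Training your own YOLOv5-Lite-s model on Windows 10

1 Download the YOLOv5-Lite source code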

git clone https://github.com/ppogg/YOLOv5-Lite.git

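2 Set up the YOLOv5-Lite environment

This assumes you already know how to set up an environment on Windows 10. I already had torch 1.7 and torchvision 0.8 installed for CUDA 11.0, together with the matching cuDNN and GPU driver, so I trained in that environment. I then installed the dependencies listed in requirements.txt (there is no need to reinstall CUDA or cuDNN); they are also needed for the .onnx export.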

pip install -r requirements.txt

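3 Adjust the parameters and train YOLOv5-Lite-s

This part covers the preparation needed before training: converting the XML annotations to txt files and configuring YOLOv5-Lite to train on your own data.

3.1 Edit the .yaml file

Set the class names and the number of classes to match your own training data.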
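As a rough illustration of what the edited dataset file needs to contain, here is a minimal sketch that generates its text. It assumes YOLOv5-Lite reads the same dataset layout as upstream YOLOv5 (the keys train, val, nc and names). The function name make_data_yaml and the two image paths are made up for the example; the class list is the one used later in this post.

```python
def make_data_yaml(train_dir, val_dir, names):
    """Return the text of a YOLOv5-style dataset .yaml file.

    Assumption: the keys train/val/nc/names follow the upstream YOLOv5 layout.
    """
    quoted = ", ".join(f"'{n}'" for n in names)
    return (
        f"train: {train_dir}\n"
        f"val: {val_dir}\n"
        f"nc: {len(names)}\n"  # number of classes, derived from the list
        f"names: [{quoted}]\n"
    )


if __name__ == "__main__":
    classes = ["desk", "projector", "cup", "laptop", "trash", "box", "mecanum"]
    # The two paths are hypothetical placeholders; use your own folders.
    print(make_data_yaml("./images/train", "./images/val", classes))
```

Deriving nc from the list keeps the class count and the names from drifting apart, which is an easy mistake when the file is edited by hand.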

3.2 修改输入为[320,320]

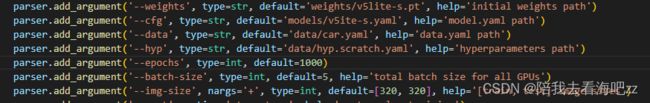

将下载的v5lite-s.pt文件放在创建的weights/文件夹下。

3.3 进行训练

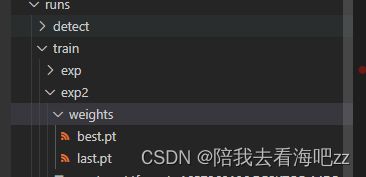

点击train.py,训练保存的权重在runs文件夹下

3.4 生成先验框

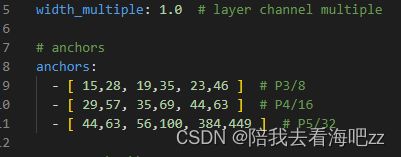

使用autoanchor.py,将生成的数据保存到v5lites.yaml

自己手动添加到对应的是C:\Users\jxbj2\Desktop\yolov5lite\YOLOv5-Lite-master\models\v5lite-s.yaml

3.5 Possible bugs, such as running out of GPU memory: search CSDN for them (stick with it and be patient)

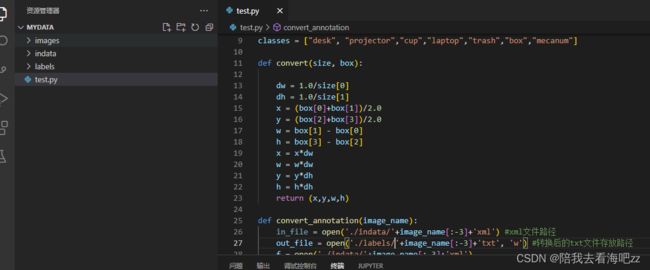

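3.6 A script that converts XML annotations to the txt files YOLOv5-Lite needs for training

Prepare the folders as follows: put the images in images and the corresponding XML files in indata. The generated txt files will be written to labels.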
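The folder layout can be prepared and checked with a short script. This is only a sketch: the helper names make_dataset_dirs and missing_annotations are invented for the example, and it assumes .jpg images whose XML files share the same base name.

```python
import glob
import os


def make_dataset_dirs(root="."):
    """Create the images, indata and labels folders if they do not exist."""
    for name in ("images", "indata", "labels"):
        os.makedirs(os.path.join(root, name), exist_ok=True)


def missing_annotations(root="."):
    """Return the image file names that have no matching XML file in indata."""
    missing = []
    for path in sorted(glob.glob(os.path.join(root, "images", "*.jpg"))):
        stem = os.path.splitext(os.path.basename(path))[0]
        if not os.path.exists(os.path.join(root, "indata", stem + ".xml")):
            missing.append(os.path.basename(path))
    return missing


if __name__ == "__main__":
    make_dataset_dirs(".")
    for name in missing_annotations("."):
        print("no XML for", name)
```

Running the check first avoids a conversion run that stops halfway on an image without an annotation.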

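The conversion code is below. If you need to split off test and validation sets, adjust the YOLOv5-Lite code by hand, and replace the class list with your own classes.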

    import glob
    import os
    import xml.etree.ElementTree as ET

    # Class names; the index in this list becomes the class id in the label files.
    classes = ["desk", "projector", "cup", "laptop", "trash", "box", "mecanum"]


    def convert(size, box):
        """Convert (xmin, xmax, ymin, ymax) in pixels to normalized (x_center, y_center, w, h)."""
        dw = 1.0 / size[0]
        dh = 1.0 / size[1]
        x = (box[0] + box[1]) / 2.0
        y = (box[2] + box[3]) / 2.0
        w = box[1] - box[0]
        h = box[3] - box[2]
        return (x * dw, y * dh, w * dw, h * dh)


    def convert_annotation(image_name):
        stem = os.path.splitext(image_name)[0]
        # Path of the XML annotation file
        root = ET.parse('./indata/' + stem + '.xml').getroot()
        size = root.find('size')
        w = int(size.find('width').text)
        h = int(size.find('height').text)
        # Path of the converted txt label file
        with open('./labels/' + stem + '.txt', 'w') as out_file:
            for obj in root.iter('object'):
                cls = obj.find('name').text
                if cls not in classes:
                    print(cls)  # report classes that are skipped
                    continue
                cls_id = classes.index(cls)
                xmlbox = obj.find('bndbox')
                b = (float(xmlbox.find('xmin').text), float(xmlbox.find('xmax').text),
                     float(xmlbox.find('ymin').text), float(xmlbox.find('ymax').text))
                bb = convert((w, h), b)
                out_file.write(str(cls_id) + " " + " ".join([str(a) for a in bb]) + '\n')


    if __name__ == '__main__':
        # Every image has a matching XML file; point the glob at the images.
        # for image_path in glob.glob("./images/train/*.jpg"):
        for image_path in glob.glob("./images/*.jpg"):
            image_name = os.path.basename(image_path)  # works on Windows and Linux
            convert_annotation(image_name)
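To make the coordinate conversion concrete, here is a small worked example. It repeats the same arithmetic as convert so that it runs on its own; the image size, the box and the helper name yolo_line are made up for illustration.

```python
def convert(size, box):
    """(xmin, xmax, ymin, ymax) in pixels -> normalized (x_center, y_center, w, h)."""
    dw = 1.0 / size[0]
    dh = 1.0 / size[1]
    x = (box[0] + box[1]) / 2.0
    y = (box[2] + box[3]) / 2.0
    w = box[1] - box[0]
    h = box[3] - box[2]
    return (x * dw, y * dh, w * dw, h * dh)


def yolo_line(cls_id, size, box):
    """Format one label line, rounded to 4 decimals for readability."""
    return str(cls_id) + " " + " ".join(f"{v:.4f}" for v in convert(size, box))


if __name__ == "__main__":
    # A 640x480 image with a box from x=100..300 and y=120..360, class id 2 ("cup").
    print(yolo_line(2, (640, 480), (100.0, 300.0, 120.0, 360.0)))
    # prints: 2 0.3125 0.5000 0.3125 0.5000
```

The box centre is at (200, 240) and the box is 200 by 240 pixels, so dividing by the image width 640 and height 480 gives 0.3125 and 0.5 for both the centre and the size.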

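II. Exporting and converting the model

I did the export on Windows. I also tried exporting on the Raspberry Pi, but it kept failing with export errors.

1. Export and simplify last.onnx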

python export.py --weights weights/last.pt

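This generates the corresponding last.onnx file in the weights/ folder.

Use onnx-simplifier to simplify the exported ONNX model: put last.onnx in the YOLOv5-Lite-master folder and run the following command in a terminal.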

python -m onnxsim last.onnx lastsim.onnx

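Copy the simplified lastsim.onnx to a USB drive and transfer it to the Raspberry Pi.

III. Raspberry Pi dependencies and ncnn compilation

1. Install the Raspberry Pi dependencies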

sudo apt-get install git cmake

sudo apt-get install -y gfortran

sudo apt-get install -y libprotobuf-dev libleveldb-dev libsnappy-dev libopencv-dev libhdf5-serial-dev protobuf-compiler

sudo apt-get install --no-install-recommends libboost-all-dev

sudo apt-get install -y libgflags-dev libgoogle-glog-dev liblmdb-dev libatlas-base-dev

2. Set up and compile ncnn

git clone https://gitee.com/Tencent/ncnn.git

cd ncnn

mkdir build

cd build

cmake ..

make -j4

make install

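IV. Details of deploying the Lite model with ncnn on the Raspberry Pi

1. Convert lastsim.onnx to fp16 last.param and last.bin files

Adjust the paths to match your own setup (basic command-line skills are assumed, haha).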

cd ncnn/build

./tools/onnx/onnx2ncnn lastsim.onnx lastsim.param lastsim.bin

./tools/ncnnoptimize lastsim.param lastsim.bin last.param last.bin 65536
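If the conversion has to be repeated often, the two commands above can be wrapped in a small script. The sketch below only assembles and runs the same command lines; the function names build_commands and run_conversion are invented here, and the tools_dir default assumes the script is started from ncnn/build. Treating a flag of 0 as "keep fp32" is my understanding of ncnnoptimize and should be checked against your ncnn version.

```python
import os
import subprocess


def build_commands(onnx_path, out_stem="last", tools_dir="./tools", fp16=True):
    """Return the onnx2ncnn and ncnnoptimize command lines as argument lists."""
    raw_stem = os.path.splitext(os.path.basename(onnx_path))[0]
    raw_param, raw_bin = raw_stem + ".param", raw_stem + ".bin"
    # 65536 requests fp16 storage, as in the command above.
    # Assumption: 0 keeps fp32 weights.
    flag = "65536" if fp16 else "0"
    return [
        [tools_dir + "/onnx/onnx2ncnn", onnx_path, raw_param, raw_bin],
        [tools_dir + "/ncnnoptimize", raw_param, raw_bin,
         out_stem + ".param", out_stem + ".bin", flag],
    ]


def run_conversion(onnx_path, **kwargs):
    """Run both tools in order and stop at the first failure."""
    for cmd in build_commands(onnx_path, **kwargs):
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    for cmd in build_commands("lastsim.onnx"):
        print(" ".join(cmd))
```

Calling run_conversion("lastsim.onnx") from ncnn/build would then produce lastsim.param, lastsim.bin, last.param and last.bin in one step.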

2. Add yolov5lite.cpp to the ncnn/examples folder

The code is as follows.

// Tencent is pleased to support the open source community by making ncnn available.

//

// Copyright (C) 2020 THL A29 Limited, a Tencent company. All rights reserved.

//

// Licensed under the BSD 3-Clause License (the "License"); you may not use this file except

// in compliance with the License. You may obtain a copy of the License at

//

// https://opensource.org/licenses/BSD-3-Clause

//

// Unless required by applicable law or agreed to in writing, software distributed

// under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR

// CONDITIONS OF ANY KIND, either express or implied. See the License for the

// specific language governing permissions and limitations under the License.

#include "layer.h"

#include "net.h"

#if defined(USE_NCNN_SIMPLEOCV)

#include "simpleocv.h"

#else

#include …

3. Modify yolov5lite.cpp

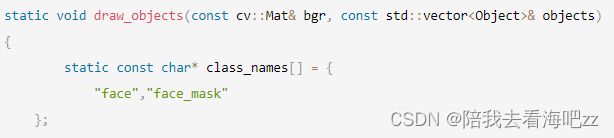

3.1 Modify class_names

Change it to the classes you want to detect: "desk", "bicycle", "cup", "laptop", "trash", "box", "mecanum". The order must match the class order used during training.

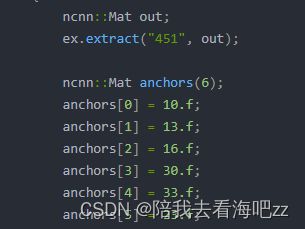

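3.2 Modify the anchor values

The values come from C:\Users\jxbj2\Desktop\yolov5lite\YOLOv5-Lite-master\models\v5lite-s.yaml

Put the anchor values from that file (15,28,19,35,23,46) into the anchor code in yolov5lite.cpp, where the six values of the first row replace the defaults (10,13,16,30,33,23).

Make the same change in the other two corresponding places.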
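Typing eighteen numbers by hand is error-prone, so the C++ lines can be generated instead. This sketch assumes yolov5lite.cpp follows ncnn's stock examples/yolov5.cpp, where each of the three stride blocks fills a 6-element ncnn::Mat named anchors, with the first yaml row going to the stride-8 block. The helper names are made up, and the second and third rows below are the upstream YOLOv5 defaults used purely as placeholders; take your own values from v5lite-s.yaml.

```python
def fmt(value):
    """Format a number as a C++ float literal, e.g. 15 -> '15.f', 15.5 -> '15.5f'."""
    value = float(value)
    return f"{int(value)}.f" if value.is_integer() else f"{value}f"


def anchor_assignments(row):
    """Return the six 'anchors[i] = ...;' lines for one row of the yaml file."""
    if len(row) != 6:
        raise ValueError("each anchor row needs exactly 6 values")
    return [f"anchors[{i}] = {fmt(v)};" for i, v in enumerate(row)]


if __name__ == "__main__":
    rows = [
        [15, 28, 19, 35, 23, 46],        # first row, values from this post
        [30, 61, 62, 45, 59, 119],       # placeholder: upstream YOLOv5 default
        [116, 90, 156, 198, 373, 326],   # placeholder: upstream YOLOv5 default
    ]
    for stride, row in zip((8, 16, 32), rows):
        print(f"// stride {stride}")
        print("\n".join(anchor_assignments(row)))
```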

3.3 Modify lastsim.param

For every Reshape layer, change 0=x to 0=-1, as circled in the figure.
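The same edit can be scripted rather than done in a text editor. The sketch below rewrites only the parameter whose key is 0 on lines whose layer type is Reshape and leaves everything else untouched. The function name patch_reshape and the sample layer lines are made up for illustration; the file name lastsim.param is the one used in this post.

```python
import re

# Matches a whole "0=<integer>" token, so keys such as 10=... are not touched.
ZERO_KEY = re.compile(r"(?<=\s)0=-?\d+(?=\s|$)")


def patch_reshape(param_text):
    """Set 0=-1 on every Reshape layer line of an ncnn .param file."""
    out = []
    for line in param_text.splitlines(keepends=True):
        if line.split()[:1] == ["Reshape"]:
            line = ZERO_KEY.sub("0=-1", line)
        out.append(line)
    return "".join(out)


if __name__ == "__main__":
    # Hypothetical excerpt; real files contain many more layers.
    sample = (
        "Convolution Conv_0 1 1 images 645 0=36 1=1\n"
        "Reshape Reshape_1 1 1 645 657 0=1600 1=12 2=3\n"
    )
    print(patch_reshape(sample), end="")
    # To patch a real file in place:
    # text = open("lastsim.param").read()
    # open("lastsim.param", "w").write(patch_reshape(text))
```

Keep a copy of the original file before overwriting it, since the original values are not recoverable from the patched one.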

3.4 Modify ex.extract in yolov5lite.cpp

Open lastsim.param and copy the blob names in the three boxes in the figure above (for example onnx::Sigmoid_647) into the ex.extract calls.
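Finding those names by eye in a long file is tedious, so here is a sketch that lists candidate blob names. It relies on the ncnn .param text layout: a magic-number line, a line with the layer and blob counts, then one line per layer in the form type, name, input count, output count, input blobs, output blobs, parameters. The helper names, the sample text and the name onnx::Sigmoid_667 are made up; which blobs are the right ones for ex.extract still has to be confirmed against your own model.

```python
def list_layers(param_text):
    """Parse an ncnn .param file into (type, name, inputs, outputs) tuples."""
    layers = []
    for line in param_text.splitlines()[2:]:  # skip magic number and counts
        tokens = line.split()
        if len(tokens) < 4:
            continue
        n_in, n_out = int(tokens[2]), int(tokens[3])
        inputs = tokens[4:4 + n_in]
        outputs = tokens[4 + n_in:4 + n_in + n_out]
        layers.append((tokens[0], tokens[1], inputs, outputs))
    return layers


def blobs_with_prefix(param_text, prefix="onnx::Sigmoid_"):
    """Return the blob names starting with prefix, in order of first appearance."""
    seen = []
    for _, _, inputs, outputs in list_layers(param_text):
        for blob in inputs + outputs:
            if blob.startswith(prefix) and blob not in seen:
                seen.append(blob)
    return seen


if __name__ == "__main__":
    # Hypothetical miniature file, only to show the layout.
    sample = """7767517
5 5
Input images 0 1 images
Convolution Conv_0 1 1 images onnx::Sigmoid_647 0=36 1=1
Sigmoid Sigmoid_1 1 1 onnx::Sigmoid_647 out_a
Convolution Conv_2 1 1 out_a onnx::Sigmoid_667 0=36 1=1
Sigmoid Sigmoid_3 1 1 onnx::Sigmoid_667 out_b
"""
    print(blobs_with_prefix(sample))
```

On a real model, passing the contents of lastsim.param should give a short list to compare against the boxed names before editing the ex.extract calls.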

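3.5 Change the paths in yolov5lite.cpp

Set the correct paths to lastsim.param and lastsim.bin in yolov5lite.cpp, and put both files in the test folder (the folder those paths point to).

3.6 Modify CMakeLists.txt

Open ncnn/examples/CMakeLists.txt and edit it as shown in the figure below.

Then run the following commands: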

cd ncnn/build

cmake ..

make

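This completes the build.

V. Test results

Open the test folder and copy the executable compiled from yolov5lite.cpp into it. (Choose between camera and image input inside yolov5lite.cpp; if you use an image, remember to put it in the test folder as well.)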

cd pi/ceshi

./yolov5lite

To be honest, on my Raspberry Pi 4B the YOLOv5-Lite-s results did not feel very good. Next I am going to try int8 quantization.