Practical Data Lake Iceberg, Lesson 7: Real-Time Writes to Iceberg

Series Table of Contents

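Practical Data Lake Iceberg, Lesson 1.

Practical Data Lake Iceberg, Lesson 2: Iceberg's underlying data format on Hadoop.

Practical Data Lake Iceberg, Lesson 3: Reading data from Kafka into Iceberg with SQL in the SQL client.

Practical Data Lake Iceberg, Lesson 4: Reading data from Kafka into Iceberg with SQL in the SQL client (upgraded to Flink 1.12.7).

Practical Data Lake Iceberg, Lesson 5: Characteristics of the Hive catalog.

Practical Data Lake Iceberg, Lesson 6: Fixing failures when writing from Kafka to Iceberg.

Practical Data Lake Iceberg, Lesson 7: Real-time writes to Iceberg.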


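Contents

- Series Table of Contents
- Preface
- 1. Generating data
- 2. Writing the generated data to Kafka with Flume
  - 2.1 Flume configuration that writes the log directly to Kafka
  - 2.2 Script to start the Flume agent
  - 2.3 Checking that the data reaches Kafka
- 3. Loading the Kafka behavior data into the lake
  - 3.1 Defining the Kafka table
    - 3.1.1 Trying the csv format
    - 3.1.2 Trying the raw format
  - 3.2 Defining the Iceberg table
  - 3.3 Writing the Kafka table into Iceberg
- 4. Inspecting the generated files
- 5. Counting rows
- 6. How should large numbers of small files be handled?
- Summary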

Preface

Generate mock data at one record per second with monotonically increasing IDs. Write the records to Kafka in real time, and then write them from Kafka into Iceberg.

1. Generating data

Prepare the data-generation script:

[root@hadoop102 bin]# cat generateLog.sh

#!/bin/bash

for i in {1..10000000};do echo $i,$RANDOM >> /opt/module/logs/mockLog.log; sleep 1s ;done;

Generate the data:

[root@hadoop102 bin]# nohup ./generateLog.sh > generateLog.log 2>&1 &

[1] 20688

Inspect the generated data:

[root@hadoop102 logs]# tail -f mockLog.log

2,98562

3,4401

4,69527

5,27391

6,64876

7,32809

8,47124

9,65215

10,55296

11,91302

12,37345

13,56344

14,5092

Generation failed partway through. The log:

[root@hadoop102 bin]# cat generateLog.log

nohup: ignoring input

./generateLog.sh: fork: Cannot allocate memory

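At first I assumed the cause was the server's default limit on the maximum number of processes, which is easy to raise. Searching for "fork: Cannot allocate memory" turns up many fixes.

It later turned out that the process limit was not the problem.

The likely cause is the huge loop range. Bash expands `{1..10000000}` into a list of ten million words in memory before the first iteration runs. Every `sleep` then has to fork that bloated shell.

So I switched to a small Java program packaged as a jar: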

```java
package org.example;

import java.util.Random;

/**
 * Writes one line "i,randomValue" to stdout per interval.
 * Arguments (both optional): <idRange> <sleepMillisPerRecord>
 */
public class GenerateLog {

    public static void main(String[] args) throws InterruptedException {
        int len = 100000;       // exclusive upper bound of the random value
        int sleepMillis = 1000; // pause after each record, in milliseconds

        if (args.length >= 1) {
            len = Integer.parseInt(args[0]);
        }
        if (args.length >= 2) {
            sleepMillis = Integer.parseInt(args[1]);
        }

        Random random = new Random();
        for (int i = 0; i < 10000000; i++) {
            System.out.println(i + "," + random.nextInt(len));
            Thread.sleep(sleepMillis);
        }
    }
}
```

Data-generation script for the Java version:

[root@hadoop102 bin]# cat generateLog.sh

#!/bin/bash

#for i in {1..100000};do echo $i,$RANDOM >> /opt/module/logs/mockLog.log; sleep 1s ;done;

nohup java -jar /opt/software/iceberg-learning-1.0-SNAPSHOT.jar > /opt/module/logs/mockLog.log 2>&1 &

2. Writing the generated data to Kafka with Flume

At first I wanted to write to Kafka through a Linux pipe, but found that the pipe…

2.1 Flume configuration that writes the log directly to Kafka

[root@hadoop102 conf]# cat file-to-kafka.conf

```properties
a1.sources = r1
a1.channels = c1

a1.sources.r1.type = TAILDIR
a1.sources.r1.positionFile = /var/log/flume/taildir_position.json
a1.sources.r1.filegroups = f1
a1.sources.r1.filegroups.f1 = /opt/module/logs/mockLog.log

a1.channels.c1.type = org.apache.flume.channel.kafka.KafkaChannel
a1.channels.c1.kafka.bootstrap.servers = hadoop101:9092,hadoop102:9092,hadoop103:9092
a1.channels.c1.kafka.topic = behavior_log
a1.channels.c1.kafka.consumer.group.id = flume-consumer

# Bind the source to the channel. There is no sink: the Kafka channel itself writes the events to the behavior_log topic.
a1.sources.r1.channels = c1
```
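One thing about this configuration is worth checking. Because there is no sink, the Kafka channel itself publishes to `behavior_log`. Flume's Kafka channel defaults to `parseAsFlumeEvent = true`, and in that mode each event is stored as an Avro-serialized FlumeEvent (a header map followed by the body) rather than as the bare text line. Setting `a1.channels.c1.parseAsFlumeEvent = false` makes the channel write only the body.

The Java sketch below hand-encodes a header-less event to show what that envelope would look like. It uses the two Avro binary rules involved: zig-zag varints and length-prefixed bytes. The class and method names are hypothetical, and this is an illustration, not Flume's own serializer.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical illustration of an Avro-encoded FlumeEvent {headers: map<string,string>, body: bytes}.
// Hand-rolled for clarity; this is not Flume's serializer.
final class FlumeEnvelopeSketch {

    // Avro encodes longs, counts and lengths as zig-zag variable-length integers.
    static void writeLong(ByteArrayOutputStream out, long n) {
        long z = (n << 1) ^ (n >> 63);            // zig-zag: small magnitudes become small values
        while ((z & ~0x7FL) != 0) {
            out.write((int) ((z & 0x7F) | 0x80)); // low 7 bits plus a continuation bit
            z >>>= 7;
        }
        out.write((int) z);
    }

    /** Lays out a body with no headers the way an Avro FlumeEvent would be encoded. */
    static byte[] wrap(String body) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeLong(out, 0);                        // headers: an empty map is a single block count of 0
        byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
        writeLong(out, bytes.length);             // body: length prefix
        out.write(bytes, 0, bytes.length);        // body: raw bytes
        return out.toByteArray();
    }

    public static void main(String[] args) {
        byte[] msg = wrap("413,97153");
        System.out.printf("%d bytes, starting with %02x %02x%n", msg.length, msg[0], msg[1]);
        // prints: 11 bytes, starting with 00 12
    }
}
```

Under that assumption, `413,97153` would reach Kafka as eleven bytes starting with `00 12`. Both are control characters that a terminal usually does not display, so the console consumer output can still look clean.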

2.2 Script to start the Flume agent

[root@hadoop102 bin]# cat flume.sh

```shell
#! /bin/bash
case $1 in
"start"){
    for i in hadoop102
    do
        echo " -------- starting flume on $i -------"
        ssh $i "source /etc/profile;cd /opt/module/flume; nohup flume-ng agent --conf conf --conf-file conf/file-to-kafka.conf --name a1 -Dflume.root.logger=INFO,console >/opt/module/flume/logs/file-to-kafka.log 2>&1 &"
    done
};;
"stop"){
    for i in hadoop102
    do
        echo " -------- stopping flume file-to-kafka.conf on $i -------"
        ssh $i "ps -ef | grep file-to-kafka.conf | grep -v grep |awk '{print \$2}' | xargs -n1 kill"
    done
};;
esac
```

2.3 Checking that the data reaches Kafka

[root@hadoop103 ~]# kafka-console-consumer.sh --topic behavior_log --bootstrap-server hadoop101:9092,hadoop102:9092,hadoop103:9092

413,97153

416,77212

414,27686

412,33012

415,84019

419,28548

418,39725

421,38533

417,39665

420,9306
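The IDs arrive out of order (413, 416, 414, 412, …). Kafka only preserves ordering within a partition. This suggests that `behavior_log` has several partitions and the console consumer interleaves them, though that is an assumption, since the topic layout is not shown.

Out-of-order arrival is harmless as long as nothing is lost. Here is a small hypothetical Java check (the class and method names are made up for illustration). It collects the first field of each received line and lists any IDs missing between the smallest and largest one seen.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeSet;

// Hypothetical helper: checks a batch of "id,value" lines for gaps in the id sequence.
final class IdGapCheck {

    /** Returns the ids missing between the smallest and largest id seen. */
    static List<Long> missingIds(List<String> lines) {
        TreeSet<Long> seen = new TreeSet<>();
        for (String line : lines) {
            seen.add(Long.parseLong(line.split(",", 2)[0].trim())); // first field is the id
        }
        List<Long> missing = new ArrayList<>();
        if (seen.isEmpty()) {
            return missing;
        }
        for (long id = seen.first(); id <= seen.last(); id++) {
            if (!seen.contains(id)) {
                missing.add(id);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // The console-consumer sample above, in arrival order
        List<String> sample = List.of("413,97153", "416,77212", "414,27686", "412,33012", "415,84019",
                "419,28548", "418,39725", "421,38533", "417,39665", "420,9306");
        System.out.println("missing ids: " + missingIds(sample)); // prints: missing ids: []
    }
}
```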

3. Loading the Kafka behavior data into the lake

3.1 Defining the Kafka table

Start the Flink SQL client:

sql-client.sh embedded -j /opt/software/iceberg-flink-runtime-0.12.1.jar -j /opt/software/flink-sql-connector-hive-2.3.6_2.12-1.12.7.jar -j /opt/software/flink-sql-connector-kafka_2.12-1.12.7.jar shell

3.1.1 Trying the csv format

create table kafka_behavior_log

(

i BIGINT,

id BIGINT

) WITH (

'connector' = 'kafka',

'topic' = 'behavior_log',

'properties.bootstrap.servers' = 'hadoop101:9092,hadoop102:9092,hadoop103:9092',

'properties.group.id' = 'rickGroup7',

'scan.startup.mode' = 'earliest-offset',

'format' = 'csv'

);

select * from kafka_behavior_log;

With the csv format, no real-time data could be read.
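One plausible cause, not verified here, is the Flume Kafka channel in section 2.1. With its default `parseAsFlumeEvent = true`, the channel wraps each line in an Avro FlumeEvent. Every message then starts with two extra bytes before `413,97153`, so the first field no longer parses as a BIGINT.

The hypothetical Java sketch below assumes events without headers. It shows a naive parse of such a message failing, and the same row parsing cleanly once the envelope is stripped. Setting `parseAsFlumeEvent = false` on the channel would avoid the envelope altogether.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Hypothetical helper: assumes each Kafka message is a header-less Avro FlumeEvent.
final class EnvelopeAwareCsv {

    // Reads one Avro zig-zag varint starting at pos[0] and advances pos[0].
    static long readLong(byte[] b, int[] pos) {
        long z = 0;
        int shift = 0;
        while (true) {
            int x = b[pos[0]++] & 0xFF;
            z |= (long) (x & 0x7F) << shift;
            if ((x & 0x80) == 0) break;
            shift += 7;
        }
        return (z >>> 1) ^ -(z & 1); // undo zig-zag
    }

    /** Strips the assumed envelope (empty header map + length prefix) and returns the body text. */
    static String unwrap(byte[] msg) {
        int[] pos = {0};
        if (readLong(msg, pos) != 0) {
            throw new IllegalArgumentException("this sketch only handles events without headers");
        }
        int len = (int) readLong(msg, pos);
        return new String(msg, pos[0], len, StandardCharsets.UTF_8);
    }

    /** Parses "i,id" into two longs, like the (i BIGINT, id BIGINT) columns of kafka_behavior_log. */
    static long[] parseRow(String line) {
        String[] f = line.split(",");
        if (f.length != 2) throw new IllegalArgumentException("expected 2 fields: " + line);
        return new long[] {Long.parseLong(f[0]), Long.parseLong(f[1])};
    }

    public static void main(String[] args) {
        byte[] body = "413,97153".getBytes(StandardCharsets.UTF_8);
        byte[] wrapped = new byte[body.length + 2];
        wrapped[0] = 0x00; // empty header map
        wrapped[1] = 0x12; // zig-zag varint of the body length 9
        System.arraycopy(body, 0, wrapped, 2, body.length);

        try {
            parseRow(new String(wrapped, StandardCharsets.UTF_8)); // the two extra bytes break field 1
        } catch (NumberFormatException e) {
            System.out.println("naive parse failed: " + e.getMessage());
        }
        System.out.println(Arrays.toString(parseRow(unwrap(wrapped)))); // prints [413, 97153]
    }
}
```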

3.1.2 Trying the raw format

create table kafka_behavior_log_raw

(

log STRING

) WITH (

'connector' = 'kafka',

'topic' = 'behavior_log',

'properties.bootstrap.servers' = 'hadoop101:9092,hadoop102:9092,hadoop103:9092',

'properties.group.id' = 'rickGroup7',

'scan.startup.mode' = 'earliest-offset',

'format' = 'raw'

);

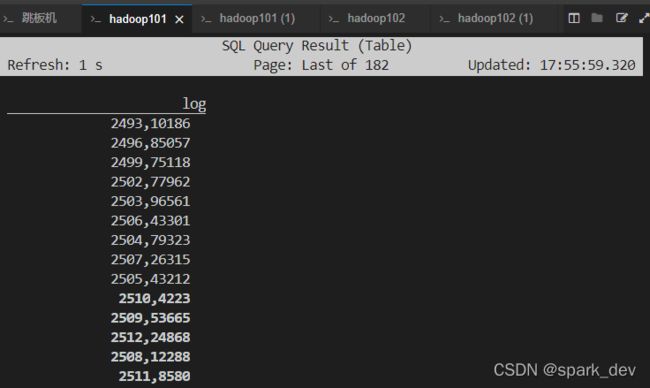

Flink SQL> select * from kafka_behavior_log_raw;

3.2 Defining the Iceberg table

Create the catalog and the table:

CREATE CATALOG hive_catalog6 WITH (

'type'='iceberg',

'catalog-type'='hive',

'uri'='thrift://hadoop101:9083',

'clients'='5',

'property-version'='1',

'warehouse'='hdfs:user/hive/warehouse/hive_catalog6'

);

Note: databases are shared across catalogs. Catalogs backed by the same Hive Metastore see the same databases.

use catalog hive_catalog6;

create database iceberg_db6;

create table `hive_catalog6`.`iceberg_db6`.`behavior_log_ib`(

log STRING

);

3.3 Writing the Kafka table into Iceberg

Switch back to default_catalog, because the Kafka table was created in that catalog:

use catalog default_catalog;

insert into `hive_catalog6`.`iceberg_db6`.`behavior_log_ib` select log from kafka_behavior_log_raw;

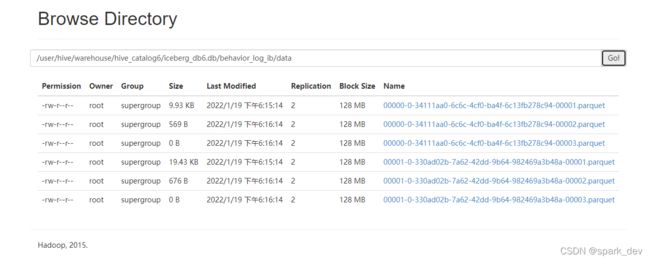

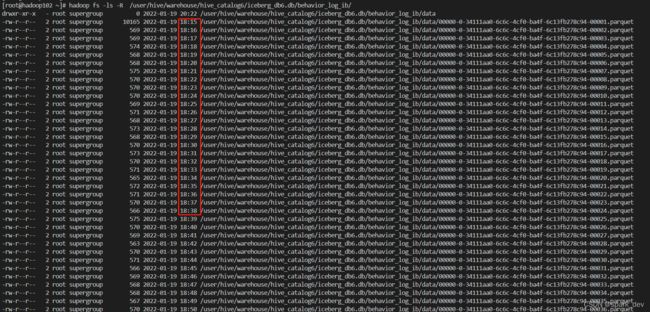

4. Inspecting the generated files

Conclusion: data files are produced at the checkpoint interval.

[root@hadoop102 ~]# hadoop fs -ls -R /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/

drwxr-xr-x - root supergroup 0 2022-01-19 18:17 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data

-rw-r--r-- 2 root supergroup 10165 2022-01-19 18:15 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data/00000-0-34111aa0-6c6c-4cf0-ba4f-6c13fb278c94-00001.parquet

-rw-r--r-- 2 root supergroup 569 2022-01-19 18:16 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data/00000-0-34111aa0-6c6c-4cf0-ba4f-6c13fb278c94-00002.parquet

-rw-r--r-- 2 root supergroup 569 2022-01-19 18:17 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data/00000-0-34111aa0-6c6c-4cf0-ba4f-6c13fb278c94-00003.parquet

-rw-r--r-- 2 root supergroup 0 2022-01-19 18:17 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data/00000-0-34111aa0-6c6c-4cf0-ba4f-6c13fb278c94-00004.parquet

-rw-r--r-- 2 root supergroup 19899 2022-01-19 18:15 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data/00001-0-330ad02b-7a62-42dd-9b64-982469a3b48a-00001.parquet

-rw-r--r-- 2 root supergroup 676 2022-01-19 18:16 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data/00001-0-330ad02b-7a62-42dd-9b64-982469a3b48a-00002.parquet

-rw-r--r-- 2 root supergroup 670 2022-01-19 18:17 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data/00001-0-330ad02b-7a62-42dd-9b64-982469a3b48a-00003.parquet

-rw-r--r-- 2 root supergroup 0 2022-01-19 18:17 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data/00001-0-330ad02b-7a62-42dd-9b64-982469a3b48a-00004.parquet

drwxr-xr-x - root supergroup 0 2022-01-19 18:17 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata

-rw-r--r-- 2 root supergroup 998 2022-01-19 18:12 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/00000-61699181-b792-411f-a920-5cd2b931e598.metadata.json

-rw-r--r-- 2 root supergroup 2096 2022-01-19 18:15 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/00001-6ace3ab3-88fd-4c07-9d6a-c68d966dfd65.metadata.json

-rw-r--r-- 2 root supergroup 3225 2022-01-19 18:16 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/00002-b48d08cc-4eeb-442c-b590-db6420ffed35.metadata.json

-rw-r--r-- 2 root supergroup 4354 2022-01-19 18:17 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/00003-1e64aa46-1346-40c2-bb8f-50c5d4b8beda.metadata.json

-rw-r--r-- 2 root supergroup 5831 2022-01-19 18:16 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/46a441c4-fa76-4102-93bc-a8f844342d9c-m0.avro

-rw-r--r-- 2 root supergroup 5831 2022-01-19 18:17 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/a9c597aa-5b1e-418c-a4c3-77266c6fef98-m0.avro

-rw-r--r-- 2 root supergroup 5838 2022-01-19 18:15 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/f7587144-2769-4128-9251-20634cfc3e10-m0.avro

-rw-r--r-- 2 root supergroup 3909 2022-01-19 18:17 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/snap-1810035882406080361-1-a9c597aa-5b1e-418c-a4c3-77266c6fef98.avro

-rw-r--r-- 2 root supergroup 3792 2022-01-19 18:15 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/snap-6209374798665286118-1-f7587144-2769-4128-9251-20634cfc3e10.avro

-rw-r--r-- 2 root supergroup 3867 2022-01-19 18:16 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/snap-7052328324703984043-1-46a441c4-fa76-4102-93bc-a8f844342d9c.avro

A minute later, each writer has five data files, plus the corresponding metadata:

[root@hadoop102 ~]# hadoop fs -ls -R /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/

drwxr-xr-x - root supergroup 0 2022-01-19 18:18 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data

-rw-r--r-- 2 root supergroup 10165 2022-01-19 18:15 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data/00000-0-34111aa0-6c6c-4cf0-ba4f-6c13fb278c94-00001.parquet

-rw-r--r-- 2 root supergroup 569 2022-01-19 18:16 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data/00000-0-34111aa0-6c6c-4cf0-ba4f-6c13fb278c94-00002.parquet

-rw-r--r-- 2 root supergroup 569 2022-01-19 18:17 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data/00000-0-34111aa0-6c6c-4cf0-ba4f-6c13fb278c94-00003.parquet

-rw-r--r-- 2 root supergroup 574 2022-01-19 18:18 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data/00000-0-34111aa0-6c6c-4cf0-ba4f-6c13fb278c94-00004.parquet

-rw-r--r-- 2 root supergroup 0 2022-01-19 18:18 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data/00000-0-34111aa0-6c6c-4cf0-ba4f-6c13fb278c94-00005.parquet

-rw-r--r-- 2 root supergroup 19899 2022-01-19 18:15 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data/00001-0-330ad02b-7a62-42dd-9b64-982469a3b48a-00001.parquet

-rw-r--r-- 2 root supergroup 676 2022-01-19 18:16 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data/00001-0-330ad02b-7a62-42dd-9b64-982469a3b48a-00002.parquet

-rw-r--r-- 2 root supergroup 670 2022-01-19 18:17 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data/00001-0-330ad02b-7a62-42dd-9b64-982469a3b48a-00003.parquet

-rw-r--r-- 2 root supergroup 671 2022-01-19 18:18 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data/00001-0-330ad02b-7a62-42dd-9b64-982469a3b48a-00004.parquet

-rw-r--r-- 2 root supergroup 0 2022-01-19 18:18 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/data/00001-0-330ad02b-7a62-42dd-9b64-982469a3b48a-00005.parquet

drwxr-xr-x - root supergroup 0 2022-01-19 18:18 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata

-rw-r--r-- 2 root supergroup 998 2022-01-19 18:12 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/00000-61699181-b792-411f-a920-5cd2b931e598.metadata.json

-rw-r--r-- 2 root supergroup 2096 2022-01-19 18:15 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/00001-6ace3ab3-88fd-4c07-9d6a-c68d966dfd65.metadata.json

-rw-r--r-- 2 root supergroup 3225 2022-01-19 18:16 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/00002-b48d08cc-4eeb-442c-b590-db6420ffed35.metadata.json

-rw-r--r-- 2 root supergroup 4354 2022-01-19 18:17 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/00003-1e64aa46-1346-40c2-bb8f-50c5d4b8beda.metadata.json

-rw-r--r-- 2 root supergroup 5483 2022-01-19 18:18 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/00004-5cd0ffd8-7336-46ac-a280-c29dbc0faabb.metadata.json

-rw-r--r-- 2 root supergroup 5831 2022-01-19 18:16 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/46a441c4-fa76-4102-93bc-a8f844342d9c-m0.avro

-rw-r--r-- 2 root supergroup 5836 2022-01-19 18:18 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/a995dc51-f98f-4030-9daf-973ea2cb8106-m0.avro

-rw-r--r-- 2 root supergroup 5831 2022-01-19 18:17 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/a9c597aa-5b1e-418c-a4c3-77266c6fef98-m0.avro

-rw-r--r-- 2 root supergroup 5838 2022-01-19 18:15 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/f7587144-2769-4128-9251-20634cfc3e10-m0.avro

-rw-r--r-- 2 root supergroup 3909 2022-01-19 18:17 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/snap-1810035882406080361-1-a9c597aa-5b1e-418c-a4c3-77266c6fef98.avro

-rw-r--r-- 2 root supergroup 3947 2022-01-19 18:18 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/snap-3397835470520958512-1-a995dc51-f98f-4030-9daf-973ea2cb8106.avro

-rw-r--r-- 2 root supergroup 3792 2022-01-19 18:15 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/snap-6209374798665286118-1-f7587144-2769-4128-9251-20634cfc3e10.avro

-rw-r--r-- 2 root supergroup 3867 2022-01-19 18:16 /user/hive/warehouse/hive_catalog6/iceberg_db6.db/behavior_log_ib/metadata/snap-7052328324703984043-1-46a441c4-fa76-4102-93bc-a8f844342d9c.avro

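File-generation pattern: each writer subtask (the `00000-` and `00001-` prefixes) produces one new data file per minute, matching the checkpoint interval.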

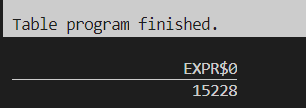

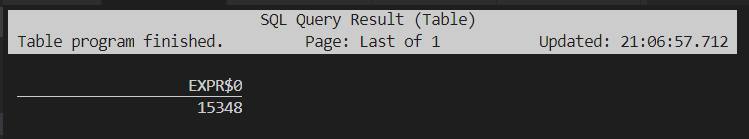

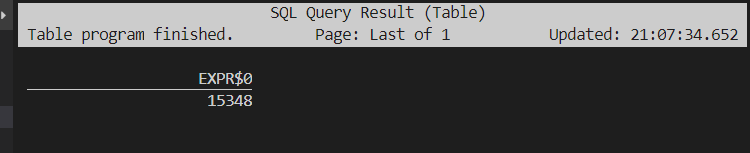
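To make the listings easier to read, here is a hypothetical Java helper that classifies the file names. The naming rules it encodes are assumptions inferred from the listings above. Data files are treated as `<subtask>-<attempt>-<uuid>-<counter>.parquet`, manifests as `<uuid>-m<n>.avro`, and manifest lists as `snap-*.avro`.

Applied to the five files committed at 18:18, the helper finds two data files (one per writer subtask), one `metadata.json`, one manifest and one manifest list. The 0-byte `00005` files are still being written and are not yet part of a snapshot.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper; the naming rules are inferred from the HDFS listings, not taken from Iceberg's code.
final class IcebergFileKinds {
    // Assumed data-file pattern: <subtask>-<attempt>-<uuid>-<counter>.parquet
    private static final Pattern DATA =
            Pattern.compile("(\\d{5})-(\\d+)-([0-9a-f-]{36})-(\\d{5})\\.parquet");
    // Assumed manifest pattern: <uuid>-m<n>.avro
    private static final Pattern MANIFEST = Pattern.compile("[0-9a-f-]{36}-m\\d+\\.avro");

    static String kind(String name) {
        if (DATA.matcher(name).matches()) return "data file";
        if (name.endsWith(".metadata.json")) return "table metadata";
        if (name.startsWith("snap-") && name.endsWith(".avro")) return "manifest list";
        if (MANIFEST.matcher(name).matches()) return "manifest";
        return "other";
    }

    /** Writer subtask index encoded in a data-file name, or -1 if it is not a data file. */
    static int subtask(String name) {
        Matcher m = DATA.matcher(name);
        return m.matches() ? Integer.parseInt(m.group(1)) : -1;
    }

    static Map<String, Integer> countKinds(List<String> names) {
        Map<String, Integer> counts = new TreeMap<>(); // sorted by kind for stable output
        for (String n : names) {
            counts.merge(kind(n), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Files committed at 18:18 in the second listing
        List<String> committed = List.of(
                "00000-0-34111aa0-6c6c-4cf0-ba4f-6c13fb278c94-00004.parquet",
                "00001-0-330ad02b-7a62-42dd-9b64-982469a3b48a-00004.parquet",
                "00004-5cd0ffd8-7336-46ac-a280-c29dbc0faabb.metadata.json",
                "a995dc51-f98f-4030-9daf-973ea2cb8106-m0.avro",
                "snap-3397835470520958512-1-a995dc51-f98f-4030-9daf-973ea2cb8106.avro");
        System.out.println(countKinds(committed));
        // prints: {data file=2, manifest=1, manifest list=1, table metadata=1}
    }
}
```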

5. Counting rows

Flink SQL> select count(*) from `hive_catalog6`.`iceberg_db6`.`behavior_log_ib` ;

[INFO] Result retrieval cancelled.

1510

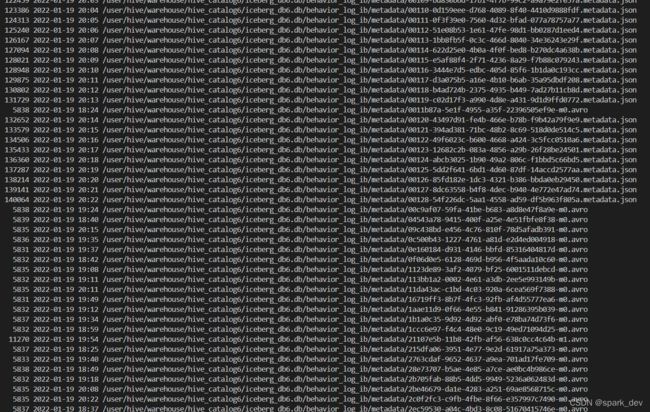

6. How should large numbers of small files be handled?

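Every minute produces another set of small files: data files, a metadata file and a snapshot file. Counting three files per minute, a day has 24 h × 60 min/h = 1440 minutes, so 1440 × 3 = 4320 files. With two writer subtasks, as in the listing above, the real count is higher.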
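The estimate above is simply commits per day times files per commit. A tiny sketch with hypothetical names follows. The first call reproduces the three-files-per-minute count. The second uses the commit at 18:18 in the listing, which added five files: two data files from two writer subtasks, one metadata.json, one manifest and one manifest list.

```java
// Hypothetical back-of-the-envelope helper for the small-file estimate.
final class SmallFileEstimate {
    static final long SECONDS_PER_DAY = 24L * 60 * 60; // 86,400

    /** New files per day when every checkpoint commit adds filesPerCommit files. */
    static long filesPerDay(long checkpointIntervalSeconds, int filesPerCommit) {
        long commitsPerDay = SECONDS_PER_DAY / checkpointIntervalSeconds; // 1,440 for a 60 s interval
        return commitsPerDay * filesPerCommit;
    }

    public static void main(String[] args) {
        System.out.println(filesPerDay(60, 3)); // prints 4320
        System.out.println(filesPerDay(60, 5)); // prints 7200
    }
}
```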

That is more than 4,000 small files a day. How should they be compacted?
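For reference, Iceberg's Flink module (0.12) provides a rewrite action, `Actions.forTable(table).rewriteDataFiles().execute()` in `org.apache.iceberg.flink.actions`, that combines small data files into larger ones. Old snapshots are cleaned up separately with `table.expireSnapshots()`.

The core of data-file compaction is grouping small files toward a target size. The stand-alone sketch below illustrates that idea with greedy first-fit-decreasing bin packing. It is not Iceberg's planner, and its names are hypothetical. The 16 KB target is a toy value chosen for the tiny files in the listing; Iceberg's default `write.target-file-size-bytes` is 512 MB.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Illustration only: greedy first-fit-decreasing grouping of small files; not Iceberg's planner.
final class CompactionPlanSketch {

    /** Groups file sizes so each group's total stays within targetBytes (an oversized file gets its own group). */
    static List<List<Long>> plan(List<Long> fileSizes, long targetBytes) {
        List<Long> sorted = new ArrayList<>(fileSizes);
        sorted.sort(Comparator.reverseOrder()); // place the biggest files first
        List<List<Long>> groups = new ArrayList<>();
        List<Long> totals = new ArrayList<>();
        for (long size : sorted) {
            int chosen = -1;
            for (int g = 0; g < groups.size(); g++) {
                if (totals.get(g) + size <= targetBytes) { // first group with enough room
                    chosen = g;
                    break;
                }
            }
            if (chosen < 0) { // no room anywhere: open a new group (one future output file)
                groups.add(new ArrayList<>());
                totals.add(0L);
                chosen = groups.size() - 1;
            }
            groups.get(chosen).add(size);
            totals.set(chosen, totals.get(chosen) + size);
        }
        return groups;
    }

    public static void main(String[] args) {
        // Sizes in bytes of the eight closed data files in the second listing
        List<Long> sizes = List.of(10165L, 569L, 569L, 574L, 19899L, 676L, 670L, 671L);
        List<List<Long>> groups = plan(sizes, 16 * 1024);
        System.out.println(groups.size() + " output files: " + groups);
        // prints: 2 output files: [[19899], [10165, 676, 671, 670, 574, 569, 569]]
    }
}
```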