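Setting up libtorch on Windows 10

Version notes

The PyTorch version and the libtorch version must match.

My original environment had PyTorch 1.6.0; here I use PyTorch 1.7.0.

CUDA version on Windows 10: 11.0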
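Since the whole setup depends on the two versions matching, it can help to compare the version strings in a small script. The following is only a sketch: the function names are made up for illustration, and it assumes the libtorch version string may carry a local build suffix such as `+cu110`, as in the download file name.

```python
def base_version(version: str) -> str:
    """Drop a local build suffix such as '+cu110' and surrounding whitespace."""
    return version.strip().split("+", 1)[0]


def versions_match(pytorch_version: str, libtorch_version: str) -> bool:
    """Return True when both strings name the same release, e.g. 1.7.0."""
    return base_version(pytorch_version) == base_version(libtorch_version)


if __name__ == "__main__":
    # PyTorch 1.7.0 against a libtorch built for CUDA 11.0.
    print(versions_match("1.7.0", "1.7.0+cu110"))
    # The old 1.6.0 environment against the same libtorch.
    print(versions_match("1.6.0", "1.7.0+cu110"))
```

On the Python side the string would come from `torch.__version__`; on the libtorch side it can be read off the name of the downloaded zip file.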

- Check your CUDA version

nvcc -V

- Clone the current environment

conda create -n sotcuda11 --clone sot

conda activate sotcuda11

- Install PyTorch 1.7.0 (the local CUDA is 11.0.3)

- https://pytorch.org/get-started/previous-versions/

conda install pytorch==1.7.0 torchvision==0.8.0 torchaudio==0.7.0 cudatoolkit=11.0 -c pytorch

- To download an older libtorch release, just change the version numbers in the URL

https://download.pytorch.org/libtorch/cu110/libtorch-win-shared-with-deps-debug-1.7.0%2Bcu110.zip
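The "change the version number" step can be written down as a small helper. This is a sketch under assumptions: the URL pattern is inferred from the single link above, the function names are hypothetical, and the non-debug file name (the same name without `debug-`) is an assumption that should be checked against the download page.

```python
from urllib.parse import quote

BASE_URL = "https://download.pytorch.org/libtorch"


def cuda_tag(cuda_version: str) -> str:
    """Turn '11.0' (or '11.0.3') into the tag 'cu110' used in the URL."""
    major, minor = cuda_version.split(".")[:2]
    return f"cu{major}{minor}"


def libtorch_url(torch_version: str, cuda_version: str, debug: bool = True) -> str:
    """Build a Windows libtorch download URL following the pattern of the link above."""
    tag = cuda_tag(cuda_version)
    build = "debug-" if debug else ""  # assumption: release builds drop 'debug-'
    filename = f"libtorch-win-shared-with-deps-{build}{torch_version}+{tag}.zip"
    # quote() percent-encodes '+' as '%2B', matching the link in this post.
    return f"{BASE_URL}/{tag}/{quote(filename)}"


if __name__ == "__main__":
    print(libtorch_url("1.7.0", "11.0.3"))
```

Calling `libtorch_url("1.7.0", "11.0.3")` reproduces the debug link used in this post; other version combinations only work if that build was actually published.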

Exporting the model

For a detailed walkthrough, see: https://blog.csdn.net/weixin_41449637/article/details/120036685

import torch

import torchvision

print(torch.__version__)

# An instance of your model.

model = torchvision.models.resnet18()

# An example input you would normally provide to your model's forward() method.

example = torch.rand(1, 3, 224, 224)

# Use torch.jit.trace to generate a torch.jit.ScriptModule via tracing.

traced_script_module = torch.jit.trace(model, example)

traced_script_module.save("model.pt")

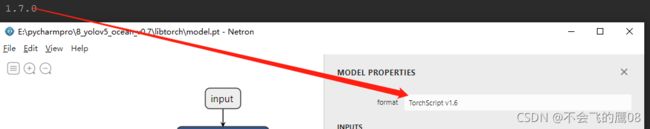

Open the exported model in netron to check which version produced it: here it reports PyTorch 1.7.0 and TorchScript 1.6.
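If netron is not at hand, the exported file can also be inspected with the standard library. This is a rough sketch that assumes the file written by `traced_script_module.save` is a zip archive containing an entry named `version`; that layout is an assumption about the file format, and the function names are made up for illustration. The number stored there is a serialization format version, not the PyTorch release number.

```python
import zipfile


def torchscript_entries(path):
    """Return the names of all entries stored in the .pt archive."""
    with zipfile.ZipFile(path) as archive:
        return archive.namelist()


def read_version_entry(path):
    """Return the text of the first entry named 'version', or None if absent."""
    with zipfile.ZipFile(path) as archive:
        for name in archive.namelist():
            # Entries are stored under a top-level folder, so compare the last part.
            if name.split("/")[-1] == "version":
                return archive.read(name).decode("utf-8").strip()
    return None
```

For example, `read_version_entry("model.pt")` returns the stored format version as a string, and `torchscript_entries("model.pt")` lists what else is inside the archive.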

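Create an empty project in VS2019 and configure the environment

- Create an empty project, add main.cpp, and change the model path to the model you just generated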

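#include <torch/script.h> // One-stop header.

#include <iostream>

#include <vector>

int main() {

// Deserialize the ScriptModule from a file using torch::jit::load().

// In libtorch 1.7 it returns a Module by value and throws c10::Error on failure.

torch::jit::script::Module module;

try {

module = torch::jit::load("E:\\vspro\\mysiam\\myLibtorch\\models\\model.pt");

} catch (const c10::Error& e) {

std::cerr << "error loading the model\n" << e.what() << '\n';

return -1;

}

std::cout << "ok\n";

// Create a vector of inputs.

std::vector<torch::jit::IValue> inputs;

inputs.push_back(torch::ones({ 1, 3, 224, 224 }));

// Execute the model and turn its output into a tensor.

at::Tensor output = module.forward(inputs).toTensor();

std::cout << output.slice(/*dim=*/1, /*start=*/0, /*end=*/5) << '\n';

// Wait for Enter so the console window stays open (replaces the busy loop while (1);).

std::cin.get();

return 0;

}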

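- I did not build with cmake here. When I tried cmake, the lib paths it added were a mess and produced many errors, so I added the include directories, library directories, and lib files in VS instead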

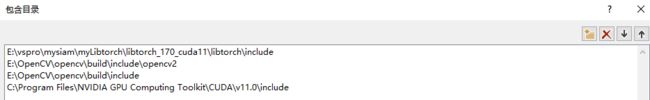

Include directories: here they are set under VC++ Directories; they can also be set under C/C++ > Additional Include Directories.

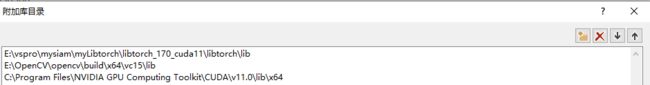

Additional library directories:

lib files:

The DLL files needed in the output directory can simply be copied over from libtorch_170_cuda11\libtorch\lib.
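The copy step can be scripted so it is easy to repeat after a clean build. The sketch below is an assumption-laden convenience: the function name is hypothetical, and it copies every `.dll` in the lib folder rather than only the few that are strictly needed.

```python
import shutil
from pathlib import Path


def copy_dlls(lib_dir, output_dir):
    """Copy every .dll from lib_dir into output_dir; return the copied file names."""
    lib_dir, output_dir = Path(lib_dir), Path(output_dir)
    output_dir.mkdir(parents=True, exist_ok=True)
    copied = []
    for dll in sorted(lib_dir.glob("*.dll")):
        shutil.copy2(dll, output_dir / dll.name)
        copied.append(dll.name)
    return copied
```

It would be called with the libtorch lib folder as the first argument and the project's output directory (for example the x64\Debug folder of the solution) as the second.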

Notes:

- Project > Properties > C/C++ > General > SDL checks: set to No

- C/C++ > Language > Conformance mode: set to No

- Also switch the build configuration to Debug, x64

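Results

- The CPU result is shown in the figure below

Links that may be useful

- https://blog.csdn.net/weixin_44816589/article/details/117068262

- Summary of problems and errors when deploying PyTorch in C++ (libtorch)

- Calling PyTorch from C++ on Windows (libtorch), building with CMake, and verifying consistent results in Python and C++

- Win10 + VS2017 + PyTorch (libtorch): basic C++ usage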

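Open questions

- Why use cmake?

When a cmake project adds libtorch, cmake looks for two files with the .cmake suffix; OpenCV, for example, ships OpenCVConfig.cmake and OpenCVConfig-version.cmake. At first I could not find these two files in the libtorch downloaded from the official site, and adding the library and header directories directly works, so I did not use cmake here. I do not understand why cmake works for other people; if you know, please leave a comment.

note:

E:\vspro\mysiam\myLibtorch\libtorch_170_cuda11\libtorch\share\cmake\Torch

does contain the two cmake files.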
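A quick way to confirm that a given libtorch download has the cmake config files is to check the folder from the note above. This is a minimal sketch: the function name is made up, and the two file names `TorchConfig.cmake` and `TorchConfigVersion.cmake` are my assumption about what the folder contains, since the post does not name them.

```python
from pathlib import Path

# Assumed names of the two config files under share/cmake/Torch.
CONFIG_FILES = ("TorchConfig.cmake", "TorchConfigVersion.cmake")


def torch_cmake_dir(libtorch_root):
    """Return share/cmake/Torch under libtorch_root if both config files exist, else None."""
    candidate = Path(libtorch_root) / "share" / "cmake" / "Torch"
    if all((candidate / name).is_file() for name in CONFIG_FILES):
        return candidate
    return None
```

If the function returns a folder, `find_package(Torch REQUIRED)` should be able to locate libtorch once cmake is told where to look, for example by setting `CMAKE_PREFIX_PATH` to the libtorch root when configuring.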

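----------------------------------------------------------divider-----------------------------------------------------------

For the GPU version, build with cmake.

The contents of CMakeLists.txt are as follows. The OpenCV path is set in the file; the libtorch path is not, and is specified in cmake-gui when configuring. Once configuration and generation succeed, open the .sln file in VS2019 and rebuild; for the settings to watch out for, see the notes above.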

```cmake
cmake_minimum_required(VERSION 3.12 FATAL_ERROR)
project(myresnet)

# The libtorch location is not set here; it is supplied in cmake-gui when configuring.
find_package(Torch REQUIRED)

# OpenCV location is hard-coded for this machine.
set(OpenCV_DIR E:/OpenCV/opencv/build)
find_package(OpenCV REQUIRED)

if(NOT Torch_FOUND)
  message(FATAL_ERROR "Pytorch Not Found!")
endif()

message(STATUS "Pytorch status:")
message(STATUS "    libraries: ${TORCH_LIBRARIES}")

message(STATUS "OpenCV library status:")
message(STATUS "    version: ${OpenCV_VERSION}")
message(STATUS "    libraries: ${OpenCV_LIBS}")
message(STATUS "    include path: ${OpenCV_INCLUDE_DIRS}")

add_executable(myresnet main.cpp)
target_link_libraries(myresnet ${TORCH_LIBRARIES} ${OpenCV_LIBS})

# PyTorch 1.5 and later require C++14 (the original had 11).
set_property(TARGET myresnet PROPERTY CXX_STANDARD 14)
```

Code to verify the GPU version, main.cpp

#include <torch/torch.h>

#include <torch/script.h>

note: torch/torch.h is located in

myLibtorch\libtorch_170_cuda11\libtorch\include\torch\csrc\api\include\torch

whereas torch/script.h is located in

myLibtorch\libtorch_170_cuda11\libtorch\include\torch