Kafka Message Consumers: The Basics

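Table of contents

- Consumers
  - 1 Basics
    - 1.1 Client development
    - 1.2 Important parameters
    - 1.3 Subscribing to messages
    - 1.4 Pulling messages
  - 2 How it works
    - 2.1 Deserialization
    - 2.2 Consumer offsets
      - 2.2.1 Automatic commit
      - 2.2.2 Manual commit
      - 2.2.3 Controlling or stopping consumption
      - 2.2.4 Consuming from a specified offset
    - 2.3 Rebalancing
    - 2.4 Consumer interceptors
    - 2.5 Multithreading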

消费者

一 基本知识

-

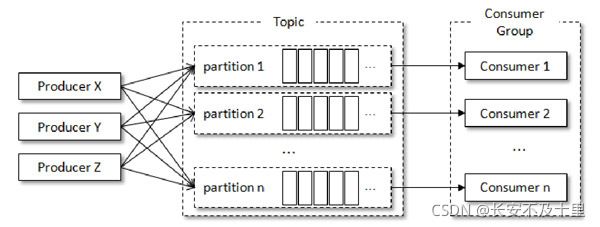

在Kafka的消费理念中还有一层消费组(Consumer Group)的概念,每个消费者都有一个对应的消费组。当消息发布到主题后,只会被投递给订阅它的每个消费组中的一个消费者。

-

每个消费者只能消费所分配到的分区中的消息。换言之,每一个分区只能被一个消费组中的一个消费者所消费。

-

发布订阅模式定义了如何向一个内容节点发布和订阅消息,这个内容节点称为主题(Topic),主题可以认为是消息传递的中介,消息发布者将消息发布到某个主题,而消息订阅者从主题中订阅消息。

-

主题使得消息的订阅者和发布者互相保持独立,不需要进行接触即可保证消息的传递,发布/订阅模式在消息的一对多广播时采用。

-

如果所有的消费者都隶属于同一个消费组,那么所有的消息都会被均衡地投递给每一个消费者,即每条消息只会被一个消费者处理,这就相当于点对点模式的应用。
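The group semantics described above can be illustrated with a toy model. This is only a sketch: it does not use Kafka's classes, the class name `GroupDeliverySketch` and the consumer names are made up for illustration, and the round-robin rule stands in for whatever assignment strategy a real cluster is configured with.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Toy model only: not Kafka code, and not Kafka's real assignment strategies.
class GroupDeliverySketch {

    // Assign partitions 0..partitionCount-1 to the consumers of ONE group, round-robin.
    // Every partition ends up with exactly one owner inside the group.
    static Map<String, List<Integer>> assign(List<String> consumers, int partitionCount) {
        Map<String, List<Integer>> assignment = new LinkedHashMap<>();
        for (String consumer : consumers) {
            assignment.put(consumer, new ArrayList<>());
        }
        for (int partition = 0; partition < partitionCount; partition++) {
            String owner = consumers.get(partition % consumers.size());
            assignment.get(owner).add(partition);
        }
        return assignment;
    }

    public static void main(String[] args) {
        // Two groups subscribe to the same 4-partition topic.
        // Each group sees every partition, but inside a group a partition has one owner.
        System.out.println("groupA: " + assign(Arrays.asList("a1", "a2"), 4));
        System.out.println("groupB: " + assign(Arrays.asList("b1"), 4));
    }
}
```

With a single group the partitions are split among its members (point-to-point behaviour); with several groups each group receives the full stream (broadcast behaviour).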

1.1 Client development

    import org.apache.kafka.clients.consumer.*;
    import org.apache.kafka.common.TopicPartition;
    import org.apache.kafka.common.serialization.StringDeserializer;

    import java.time.Duration;
    import java.util.Arrays;
    import java.util.Properties;

    /**
     * @Author shu
     * @Date: 2021/10/25/ 15:09
     * @Description Consumer
     **/
    public class MySimpleConsumer {

        // Topic name
        private final static String TOPIC_NAME = "my-replicated-topic";

        // Consumer group name
        private final static String CONSUMER_GROUP_NAME = "testGroup";

        public static void main(String[] args) {
            Properties props = new Properties();
            // Broker address (use ConsumerConfig here, not ProducerConfig)
            props.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "ip:9093");
            // Consumer group
            props.put(ConsumerConfig.GROUP_ID_CONFIG, CONSUMER_GROUP_NAME);
            // Deserializers for the key and the value
            props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
            props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());

            // Automatic commit: offsets of fetched messages are committed to __consumer_offsets
            // in the background without waiting for processing to finish, so a crash during
            // processing can lose messages.
            // // Whether to commit offsets automatically; the default is true
            // props.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, "true");
            // // Interval between automatic offset commits
            // props.put(ConsumerConfig.AUTO_COMMIT_INTERVAL_MS_CONFIG, "1000");

            // Manual commit: the offset is committed only after the messages have been processed
            props.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, "false");

            // Maximum number of records returned by one poll; tune it to the processing speed
            // props.put(ConsumerConfig.MAX_POLL_RECORDS_CONFIG, 500);
            // props.put(ConsumerConfig.MAX_POLL_INTERVAL_MS_CONFIG, 30 * 1000);

            // 1. Create the consumer client
            KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);

            // 2. Subscribe to a list of topics, or assign a specific partition as done here
            // consumer.subscribe(Arrays.asList(TOPIC_NAME));
            TopicPartition topicPartition = new TopicPartition(TOPIC_NAME, 0);
            consumer.assign(Arrays.asList(topicPartition));

            while (true) {
                // 3. poll() long-polls the broker for messages
                ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(1000));
                for (ConsumerRecord<String, String> record : records) {
                    // 4. Print the message
                    System.out.printf("Received message: partition = %d, offset = %d, key = %s, value = %s%n",
                            record.partition(), record.offset(), record.key(), record.value());
                }
                // All fetched messages have been processed
                if (records.count() > 0) { // there were messages
                    // Commit the offset synchronously; the current thread blocks until the commit succeeds.
                    // Synchronous commit is the usual choice, because there is normally no further logic after it.
                    consumer.commitSync();
                }
                long position = consumer.position(topicPartition);
                System.out.println("Next offset to consume: " + position);
            }
        }
    }

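1.2 Important parameters

- bootstrap.servers: the list of broker addresses the consumer client uses to connect to the Kafka cluster, in the form host1:port1,host2:port2. One or more addresses can be given, separated by commas.
- key.deserializer and value.deserializer: messages are held on the broker as byte arrays (byte[]), so the consumer has to deserialize them. These two parameters specify the deserializers for the key and the value. They have no default value and must be set to fully qualified class names.
- enable.auto.commit: whether offsets are committed automatically.
- group.id: the name of the consumer group the consumer belongs to; the default is an empty string.
- auto.offset.reset:
  - earliest: if a partition has a committed offset, consume from that offset; if not, consume from the beginning of the partition.
  - latest: if a partition has a committed offset, consume from that offset; if not, consume only the data newly produced to that partition.
- ack-mode (a Spring Kafka listener setting rather than a Kafka client parameter): with manual_immediate, the offset is committed as soon as Acknowledgment.acknowledge() is called manually.

The same settings in a Spring Boot configuration file:

    ######### consumer #########
    # Disable automatic commit
    spring.kafka.consumer.enable-auto-commit=false
    # Consumer group
    spring.kafka.consumer.group-id=MyGroup1
    # Consume from the beginning the first time, then continue from the committed offset
    spring.kafka.consumer.auto-offset-reset=earliest
    # Deserializers
    spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.StringDeserializer
    spring.kafka.consumer.value-deserializer=org.apache.kafka.common.serialization.StringDeserializer
    # Maximum number of records per poll
    spring.kafka.consumer.max-poll-records=500
    # Commit as soon as Acknowledgment.acknowledge() is called manually
    spring.kafka.listener.ack-mode=manual_immediate
    # Whether to fail when a topic the listener listens to does not exist
    spring.kafka.listener.missing-topics-fatal=false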

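1.3 Subscribing to messages

- Once the consumer has been created, it needs to subscribe to the relevant topics.

    // 1. Create the consumer client
    KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);
    // 2. Subscribe to a list of topics
    consumer.subscribe(Arrays.asList(TOPIC_NAME));

- Here subscribe() is used to subscribe to one topic. The method can subscribe to several topics passed as a collection, or to all topics matching a regular expression.

- If subscribe() is called twice with different topics, the last call wins.

- If the consumer subscribes with a regular expression (subscribe(Pattern)) and someone later creates a new topic whose name matches the expression, the consumer will also consume messages from the newly added topic.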
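To make the pattern-based subscription concrete, the following sketch uses only `java.util.regex` to show which topic names a pattern would pick up, including a topic created later. It is not Kafka code: the class name, the topic names, and the `order-.*` pattern are illustrative assumptions, and the sketch assumes the pattern must match the whole topic name.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;

// Illustration only: models which topic names a subscribe(Pattern) call would cover.
class PatternSubscriptionSketch {

    // Return the topics whose full name matches the pattern (assumed full-name match).
    static List<String> matchingTopics(Pattern pattern, List<String> topics) {
        List<String> matched = new ArrayList<>();
        for (String topic : topics) {
            if (pattern.matcher(topic).matches()) {
                matched.add(topic);
            }
        }
        return matched;
    }

    public static void main(String[] args) {
        Pattern pattern = Pattern.compile("order-.*"); // hypothetical pattern
        List<String> topics = new ArrayList<>(Arrays.asList("order-created", "user-login"));
        System.out.println(matchingTopics(pattern, topics));

        // A topic created later is covered as soon as its name matches the pattern.
        topics.add("order-refunded");
        System.out.println(matchingTopics(pattern, topics));
    }
}
```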

The overloads of subscribe():

    public void subscribe(Collection<String> topics, ConsumerRebalanceListener listener) {}
    public void subscribe(Collection<String> topics) {}
    public void subscribe(Pattern pattern, ConsumerRebalanceListener listener) {}
    public void subscribe(Pattern pattern) {}

Inside the client, subscribe() delegates to the subscription state:

    // Excerpt from the body of KafkaConsumer.subscribe(Collection<String> topics, ConsumerRebalanceListener listener)
    if (this.subscriptions.subscribe(new HashSet<>(topics), listener))
        metadata.requestUpdateForNewTopics();

    // SubscriptionState.subscribe()
    public synchronized boolean subscribe(Set<String> topics, ConsumerRebalanceListener listener) {
        registerRebalanceListener(listener);
        setSubscriptionType(SubscriptionType.AUTO_TOPICS);
        return changeSubscription(topics);
    }

    /* The list of topics requested by the user */
    private Set<String> subscription;

    // Returns true only if the set of subscribed topics actually changed
    private boolean changeSubscription(Set<String> topicsToSubscribe) {
        if (subscription.equals(topicsToSubscribe))
            return false;
        subscription = topicsToSubscribe;
        return true;
    }

The partitionsFor() method of KafkaConsumer queries the metadata of a given topic and returns the topic's partition information.

    public class PartitionInfo {
        private final String topic;           // topic name
        private final int partition;          // partition number
        private final Node leader;            // node hosting the leader replica
        private final Node[] replicas;        // AR set: all assigned replicas
        private final Node[] inSyncReplicas;  // ISR set: in-sync replicas
        private final Node[] offlineReplicas; // replicas that are currently offline
    }

    // Get metadata about the partitions of a given topic
    public List<PartitionInfo> partitionsFor(String topic, Duration timeout) {
        acquireAndEnsureOpen();
        try {
            // Use the locally cached metadata if it already knows the topic
            Cluster cluster = this.metadata.fetch();
            List<PartitionInfo> parts = cluster.partitionsForTopic(topic);
            if (!parts.isEmpty())
                return parts;

            // Otherwise request the metadata from the cluster, bounded by the timeout
            Timer timer = time.timer(timeout);
            Map<String, List<PartitionInfo>> topicMetadata = fetcher.getTopicMetadata(
                    new MetadataRequest.Builder(Collections.singletonList(topic), metadata.allowAutoTopicCreation()), timer);
            return topicMetadata.get(topic);
        } finally {
            release();
        }
    }

- A consumer can also subscribe directly to specific partitions of a topic; KafkaConsumer provides the assign() method for this.

assign: pull messages from explicitly specified partitions

    consumer.assign(Arrays.asList(new TopicPartition(TOPIC_NAME, 0)));

    public void assign(Collection<TopicPartition> partitions) {
        if (this.subscriptions.assignFromUser(new HashSet<>(partitions)))
            metadata.requestUpdateForNewTopics();
    }

    // Change the assignment to the partitions specified by the user. Note that this differs from
    // assignFromSubscribed(Collection), whose input partitions come from the subscribed topics.
    public synchronized boolean assignFromUser(Set<TopicPartition> partitions) {
        setSubscriptionType(SubscriptionType.USER_ASSIGNED);
        if (this.assignment.partitionSet().equals(partitions))
            return false;

        assignmentId++;
        Set<String> manualSubscribedTopics = new HashSet<>();
        Map<TopicPartition, TopicPartitionState> partitionToState = new HashMap<>();
        for (TopicPartition partition : partitions) {
            // Reuse the existing state of partitions that were already assigned
            TopicPartitionState state = assignment.stateValue(partition);
            if (state == null)
                state = new TopicPartitionState();
            partitionToState.put(partition, state);
            manualSubscribedTopics.add(partition.topic());
        }
        this.assignment.set(partitionToState);
        // changeSubscription() is the same method used by subscribe()
        return changeSubscription(manualSubscribedTopics);
    }

- Subscribing with a collection (subscribe(Collection)), subscribing with a regular expression (subscribe(Pattern)), and subscribing to specified partitions (assign(Collection)) correspond to three different subscription states: AUTO_TOPICS, AUTO_PATTERN, and USER_ASSIGNED. If nothing has been subscribed, the state is NONE.

- These three states are mutually exclusive. A consumer can use only one of them; otherwise an IllegalStateException is thrown:

    private enum SubscriptionType { NONE, AUTO_TOPICS, AUTO_PATTERN, USER_ASSIGNED }

- Subscribing to topics with subscribe() gives the consumer automatic rebalancing: with several consumers, the partition assignment strategy decides which consumer owns which partitions. When consumers join or leave the group, the assignment is adjusted automatically, which provides load balancing and automatic failover.

- Subscribing to partitions with assign() does not provide automatic rebalancing. The method signatures already hint at this: both kinds of subscribe() have an overload that takes a ConsumerRebalanceListener, whereas assign() has none.
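The mutual exclusion of the three subscription modes can be pictured as a small state check. The following is a simplified sketch written for this article, not the client's actual source: the class name `SubscriptionTypeSketch` and the exception message are assumptions, and only the enum values are taken from the text above.

```java
// Simplified model of the "one subscription mode per consumer" rule.
class SubscriptionTypeSketch {

    enum SubscriptionType { NONE, AUTO_TOPICS, AUTO_PATTERN, USER_ASSIGNED }

    private SubscriptionType current = SubscriptionType.NONE;

    // The first mode used is remembered; switching to a different mode is rejected.
    void setSubscriptionType(SubscriptionType requested) {
        if (current == SubscriptionType.NONE) {
            current = requested;
        } else if (current != requested) {
            throw new IllegalStateException(
                    "subscription modes are mutually exclusive: " + current + " vs " + requested);
        }
    }

    SubscriptionType current() {
        return current;
    }

    public static void main(String[] args) {
        SubscriptionTypeSketch state = new SubscriptionTypeSketch();
        state.setSubscriptionType(SubscriptionType.AUTO_TOPICS);   // like subscribe(Collection)
        state.setSubscriptionType(SubscriptionType.AUTO_TOPICS);   // same mode again is fine
        try {
            state.setSubscriptionType(SubscriptionType.USER_ASSIGNED); // like assign(Collection)
        } catch (IllegalStateException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```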

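1.4 Pulling messages

- Consumption in Kafka is pull-based. Message consumption generally follows one of two models: push or pull. In the push model the server actively pushes messages to consumers; in the pull model the consumer actively sends requests to the server to fetch messages.

    while (true) {
        // 3. poll() long-polls the broker for messages
        ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(1000));
        for (ConsumerRecord<String, String> record : records) {
            // 4. Print the message
            System.out.printf("Received message: partition = %d, offset = %d, key = %s, value = %s%n",
                    record.partition(), record.offset(), record.key(), record.value());
        }
    }

- The timeout of poll(long) is always in milliseconds, whereas poll(Duration) accepts any unit through Duration factory methods such as ofMillis(), ofSeconds(), ofMinutes(), and ofHours(), which makes it more flexible. poll(long) has been deprecated since Kafka 2.0.

    // A fetched message
    public class ConsumerRecord<K, V> {
        public static final long NO_TIMESTAMP = RecordBatch.NO_TIMESTAMP;
        public static final int NULL_SIZE = -1;
        public static final int NULL_CHECKSUM = -1;

        private final String topic;             // topic name
        private final int partition;            // partition number
        private final long offset;              // offset within the partition
        private final long timestamp;           // timestamp
        private final TimestampType timestampType;
        private final int serializedKeySize;    // size of the serialized key
        private final int serializedValueSize;  // size of the serialized value
        private final Headers headers;          // message headers
        private final K key;                    // key
        private final V value;                  // value
        private final Optional<Integer> leaderEpoch;
        private volatile Long checksum;
    }

- It is fine to think of poll() as simply fetching some messages, but its internal logic is far from simple: it involves consumer offsets, the consumer coordinator, the group coordinator, consumer leader election, distribution of the partition assignment, rebalancing, heartbeats, and more.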

2 How it works

2.1 Deserialization

- The deserializers provided by Kafka are ByteBufferDeserializer, ByteArrayDeserializer, BytesDeserializer, DoubleDeserializer, FloatDeserializer, IntegerDeserializer, LongDeserializer, ShortDeserializer, and StringDeserializer.

- They deserialize the ByteBuffer, ByteArray, Bytes, Double, Float, Integer, Long, Short, and String types respectively, and all of them implement the Deserializer interface.

    public interface Deserializer<T> extends Closeable {

        // Initial configuration
        default void configure(Map<String, ?> configs, boolean isKey) {
            // intentionally left blank
        }

        // Performs the deserialization
        T deserialize(String topic, byte[] data);

        // Variant that also receives the record headers; delegates to the method above by default
        default T deserialize(String topic, Headers headers, byte[] data) {
            return deserialize(topic, data);
        }

        @Override
        default void close() {
            // intentionally left blank
        }
    }

- A custom deserializer

    public class PersonJsonDeserializer implements Deserializer<Person> {

        @Override
        public void configure(Map<String, ?> configs, boolean isKey) {
        }

        @Override
        public Person deserialize(String topic, byte[] data) {
            // A null payload has nothing to parse
            if (data == null) {
                return null;
            }
            // JSON is presumably fastjson's com.alibaba.fastjson.JSON; the original does not show the import
            return JSON.parseObject(data, Person.class);
        }

        @Override
        public void close() {
        }
    }

2.2消费位移

- 对于Kafka中的分区而言,它的每条消息都有唯一的offset,用来表示消息在分区中对应的位置。

- 对于消息在分区中的位置,我们将offset称为“偏移量”;对于消费者消费到的位置,将 offset 称为“位移”,有时候也会更明确地称之为“消费位移”。

- 新消费者客户端中,消费位移存储在Kafka内部的主题__consumer_offsets(50)中

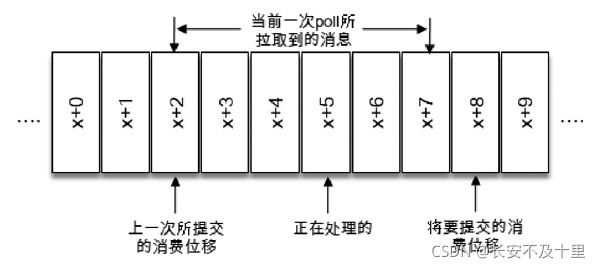

- 当前消费者需要提交的消费位移并不是 x,而是 x+1,它表示下一条需要拉取的消息的位置。

-

消费者消费到此分区消息的最大偏移量为268,对应的消费位移

lastConsumedOffset也就是268。在消费完之后就执行同步提交,但是最终结果显示所提交的位移committed offset为 269。 -

对于位移提交的具体时机的把握也很有讲究,有可能会造成重复消费和消息丢失的现象。
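The "commit x+1" rule can be written down in a few lines. This is a minimal sketch, not client code: partitions are represented by plain integers and the class name `CommitOffsetSketch` is made up for illustration.

```java
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;

// Sketch of the rule "committed offset = last consumed offset + 1".
class CommitOffsetSketch {

    // For each partition, the offset to commit is the next offset to fetch.
    static Map<Integer, Long> offsetsToCommit(Map<Integer, Long> lastConsumedOffsets) {
        Map<Integer, Long> toCommit = new LinkedHashMap<>();
        for (Map.Entry<Integer, Long> entry : lastConsumedOffsets.entrySet()) {
            toCommit.put(entry.getKey(), entry.getValue() + 1);
        }
        return toCommit;
    }

    public static void main(String[] args) {
        // Partition 0 was consumed up to and including offset 268.
        System.out.println(offsetsToCommit(Collections.singletonMap(0, 268L)));
    }
}
```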

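2.2.1 Automatic commit

- Suppose the current poll() fetched the messages [x+2, x+7]. Here x+2 is the previously committed offset, which means every message up to and including x+1 has been consumed, and x+5 is the message currently being processed. If the offset is committed right after the fetch, that is, x+8 is committed, and an exception occurs while processing x+5, then after recovery the consumer resumes fetching from x+8. The messages x+5 through x+7 are never consumed, so messages are lost. This is the risk with automatic commit: the offset can be committed before processing has finished, and a failure in between loses the unprocessed messages.

- If instead the offset is committed only after all fetched messages have been processed, and an exception occurs while processing x+5, then after recovery the consumer resumes fetching from x+2. The messages x+2 through x+4 are consumed a second time, so duplicate consumption occurs.

- The default way of committing offsets in Kafka is automatic commit, controlled by the consumer parameter enable.auto.commit, whose default is true. Automatic commit does not commit after every message; it commits periodically, with the period set by auto.commit.interval.ms (default 5 seconds).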
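The two failure scenarios above reduce to simple arithmetic on offsets. The sketch below is a toy calculation, not Kafka code; the class and method names are made up, and x is set to 100 purely for illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Toy calculation of which offsets are lost or repeated after a crash mid-batch.
class CommitTimingSketch {

    // Commit happened BEFORE processing: after restart the consumer resumes past the batch,
    // so everything from the crash point to the end of the batch is never processed.
    static List<Long> lostIfCommittedBeforeProcessing(long lastOffsetInBatch, long crashOffset) {
        List<Long> lost = new ArrayList<>();
        for (long offset = crashOffset; offset <= lastOffsetInBatch; offset++) {
            lost.add(offset);
        }
        return lost;
    }

    // Commit happens only AFTER the whole batch: after restart the consumer resumes at the
    // start of the batch, so everything processed before the crash is processed again.
    static List<Long> duplicatedIfCommittedAfterProcessing(long firstOffsetInBatch, long crashOffset) {
        List<Long> duplicated = new ArrayList<>();
        for (long offset = firstOffsetInBatch; offset < crashOffset; offset++) {
            duplicated.add(offset);
        }
        return duplicated;
    }

    public static void main(String[] args) {
        long x = 100; // illustrative value
        // Batch is [x+2, x+7], crash while processing x+5.
        System.out.println("lost: " + lostIfCommittedBeforeProcessing(x + 7, x + 5));
        System.out.println("duplicated: " + duplicatedIfCommittedAfterProcessing(x + 2, x + 5));
    }
}
```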

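2.2.2 Manual commit

- Manual commit comes in two forms, synchronous and asynchronous, corresponding to the commitSync() and commitAsync() families of methods in KafkaConsumer.

Synchronous commit

    // All fetched messages have been processed
    if (records.count() > 0) { // there were messages
        // Commit the offset synchronously; the current thread blocks until the commit succeeds.
        // Synchronous commit is the usual choice, because there is normally no further logic after it.
        consumer.commitSync();
    }
    long position = consumer.position(topicPartition);
    System.out.println("Next offset to consume: " + position);

- Duplicate consumption is still possible. If the program crashes after the business logic has finished but before the synchronous commit, then after recovery it can only resume from the previously committed offset, so the messages in the window between the two commits are consumed again.

- commitSync() commits the latest position reached by poll() (note that the committed value corresponds to position in Figure 3-6). Unless an unrecoverable error occurs, it blocks the consumer thread until the commit completes.

Asynchronous commit

    // All fetched messages have been processed
    if (records.count() > 0) { // there were messages
        consumer.commitAsync(new OffsetCommitCallback() {
            @Override
            public void onComplete(Map<TopicPartition, OffsetAndMetadata> offsets, Exception exception) {
                // On failure, check the last consumed offset recorded in Redis;
                // if it is greater than or equal to this one, carry on consuming normally
            }
        });
    }
    long position = consumer.position(topicPartition);
    System.out.println("Next offset to consume: " + position);

- In contrast to commitSync(), the asynchronous commitAsync() does not block the consumer thread; a new fetch may start before the result of the commit has come back.

- Retrying a failed asynchronous commit can overwrite a newer commit. To avoid this, maintain an increasing sequence number and increment it on every commit. When a commit fails and a retry is being considered, compare the sequence number recorded for the failed commit with the current one: if the former is smaller, a larger offset has already been committed and the retry should be skipped; if the two are equal, the retry can go ahead.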
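The sequence-number check for asynchronous commit retries might look like the following. This is a minimal sketch under stated assumptions: it contains no Kafka calls, the class name `AsyncCommitRetrySketch` and its two methods are hypothetical helpers, and a real consumer would call them around commitAsync() and inside its callback.

```java
import java.util.concurrent.atomic.AtomicLong;

// Sketch of ordering asynchronous commit retries with an increasing sequence number.
class AsyncCommitRetrySketch {

    private final AtomicLong latestSequence = new AtomicLong();

    // Call when an asynchronous commit is issued; keep the returned value for the callback.
    long nextSequence() {
        return latestSequence.incrementAndGet();
    }

    // Call from the failure callback: retry only if no newer commit has been issued since.
    boolean shouldRetry(long sequenceOfFailedCommit) {
        return sequenceOfFailedCommit == latestSequence.get();
    }

    public static void main(String[] args) {
        AsyncCommitRetrySketch tracker = new AsyncCommitRetrySketch();
        long first = tracker.nextSequence();   // commit of an older offset
        long second = tracker.nextSequence();  // commit of a newer offset

        // The older commit failed, but a newer one was already issued: do not retry.
        System.out.println("retry first?  " + tracker.shouldRetry(first));
        // The newest commit failed and nothing newer exists: retrying is safe.
        System.out.println("retry second? " + tracker.shouldRetry(second));
    }
}
```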

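2.2.3 Controlling or stopping consumption

- In some scenarios we may want to pause consumption of certain partitions, consume other partitions first, and resume the paused partitions once some condition is met.

- KafkaConsumer provides pause() and resume() for this: pause() stops the given partitions from returning data to the client during fetches, and resume() lets them return data again. Both methods take the collection of partitions they apply to.

    consumer.pause(Arrays.asList(topicPartition));
    consumer.resume(Arrays.asList(topicPartition));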

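2.2.4 Consuming from a specified offset

- In seek(), the partition parameter identifies the partition and the offset parameter specifies the position in that partition from which to start consuming. seek() can only reset the position of partitions that are assigned to the consumer, and with subscribe() the assignment takes place inside poll().

- In other words, when using subscribe(), poll() must be called once before seek(), so that the partitions have been assigned before the position is reset. With assign(), as in the code below, the partitions are assigned immediately and seek() can be called right away.

    // consumer.subscribe(Arrays.asList(TOPIC_NAME));
    TopicPartition topicPartition = new TopicPartition(TOPIC_NAME, 0);
    consumer.assign(Arrays.asList(topicPartition));

    // Start consuming from offset 10. Call seek() once, before the poll loop:
    // calling it for every record would jump back to offset 10 over and over.
    consumer.seek(topicPartition, 10);

    while (true) {
        // 3. poll() long-polls the broker for messages
        ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(1000));
        for (ConsumerRecord<String, String> record : records) {
            // 4. Print the message
            System.out.printf("Received message: partition = %d, offset = %d, key = %s, value = %s%n",
                    record.partition(), record.offset(), record.key(), record.value());
        }
        // All fetched messages have been processed
        if (records.count() > 0) { // there were messages
            // Commit the offset synchronously; the current thread blocks until the commit succeeds.
            // Synchronous commit is the usual choice, because there is normally no further logic after it.
            consumer.commitSync();
        }
        long position = consumer.position(topicPartition);
        System.out.println("Next offset to consume: " + position);
    }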

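2.3 Rebalancing

- Rebalancing is the transfer of ownership of a partition from one consumer to another. It is what gives a consumer group high availability and scalability, and it lets us add consumers to a group or remove them from it easily and safely.

    public void subscribe(Pattern pattern, ConsumerRebalanceListener listener) {
    }

    // Rebalance listener
    public interface ConsumerRebalanceListener {

        // Called before the rebalance starts and after the consumer has stopped reading messages.
        // Offsets can be committed in this callback to avoid unnecessary duplicate consumption.
        void onPartitionsRevoked(Collection<TopicPartition> partitions);

        // Called after partitions have been reassigned and before the consumer starts reading.
        // The partitions parameter holds the partitions assigned after the rebalance.
        void onPartitionsAssigned(Collection<TopicPartition> partitions);

        default void onPartitionsLost(Collection<TopicPartition> partitions) {
            onPartitionsRevoked(partitions);
        }
    }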

- A custom rebalance listener

    public class HandlerRebalance implements ConsumerRebalanceListener {

        /* Simulates a database table that stores partition offsets */
        public final static ConcurrentHashMap<TopicPartition, Long> partitionOffsetMap = new ConcurrentHashMap<>();

        private final Map<TopicPartition, OffsetAndMetadata> currOffsets;
        private final KafkaConsumer<String, String> consumer;
        // private final Transaction tr; // instance of a transaction class

        public HandlerRebalance(Map<TopicPartition, OffsetAndMetadata> currOffsets,
                                KafkaConsumer<String, String> consumer) {
            this.currOffsets = currOffsets;
            this.consumer = consumer;
        }

        // Before the partitions are rebalanced
        @Override
        public void onPartitionsRevoked(Collection<TopicPartition> partitions) {
            final String id = Thread.currentThread().getId() + "";
            System.out.println(id + "-onPartitionsRevoked argument: " + partitions);
            System.out.println(id + "-Partitions are about to be rebalanced, committing offsets. Current offsets: "
                    + currOffsets);
            // Begin the transaction
            // Write the offsets to the database
            System.out.println("Partition offset table: " + partitionOffsetMap);
            for (TopicPartition topicPartition : partitions) {
                OffsetAndMetadata current = currOffsets.get(topicPartition);
                // Skip partitions for which no offset has been recorded yet
                if (current != null) {
                    partitionOffsetMap.put(topicPartition, current.offset());
                }
            }
            consumer.commitSync(currOffsets);
            // Commit the business data and the offsets to the database together: tr.commit
        }

        // After the partitions have been rebalanced
        @Override
        public void onPartitionsAssigned(Collection<TopicPartition> partitions) {
            final String id = "" + Thread.currentThread().getId();
            System.out.println(id + "-Rebalance finished, onPartitionsAssigned argument: " + partitions);
            System.out.println("Partition offset table: " + partitionOffsetMap);
            for (TopicPartition topicPartition : partitions) {
                System.out.println(id + "-topicPartition" + topicPartition);
                // Simulate reading the last stored offset from the database
                Long offset = partitionOffsetMap.get(topicPartition);
                if (offset == null) continue;
                // Resume this partition from the stored offset,
                // so that data stays consistent across the rebalance
                consumer.seek(topicPartition, offset);
            }
        }
    }

2.4 Consumer interceptors

- KafkaConsumer calls the interceptor's onConsume() method before poll() returns, so that messages can be customized, for example by modifying the returned message content or filtering messages by some rule (which may reduce the number of messages poll() returns). If onConsume() throws an exception, the exception is caught and logged but not propagated further.

    private final ConsumerInterceptors<K, V> interceptors;

    // The poll method (excerpt)
    private ConsumerRecords<K, V> poll(final Timer timer, final boolean includeMetadataInTimeout) {
        final Map<TopicPartition, List<ConsumerRecord<K, V>>> records = pollForFetches(timer);
        if (!records.isEmpty()) {
            // before returning the fetched records, we can send off the next round of fetches
            // and avoid block waiting for their responses to enable pipelining while the user
            // is handling the fetched records.
            //
            // NOTE: since the consumed position has already been updated, we must not allow
            // wakeups or any other errors to be triggered prior to returning the fetched records.
            if (fetcher.sendFetches() > 0 || client.hasPendingRequests()) {
                client.transmitSends();
            }
            // Hand the records to the consumer interceptors
            return this.interceptors.onConsume(new ConsumerRecords<>(records));
        }
    }

- Basic structure

    public class ConsumerInterceptors<K, V> implements Closeable {
        private static final Logger log = LoggerFactory.getLogger(ConsumerInterceptors.class);
        private final List<ConsumerInterceptor<K, V>> interceptors;

        public ConsumerInterceptors(List<ConsumerInterceptor<K, V>> interceptors) {
            this.interceptors = interceptors;
        }

        public ConsumerRecords<K, V> onConsume(ConsumerRecords<K, V> records) {
            ConsumerRecords<K, V> interceptRecords = records;
            for (ConsumerInterceptor<K, V> interceptor : this.interceptors) {
                try {
                    interceptRecords = interceptor.onConsume(interceptRecords);
                } catch (Exception e) {
                    // do not propagate interceptor exception, log and continue calling other interceptors
                    log.warn("Error executing interceptor onConsume callback", e);
                }
            }
            return interceptRecords;
        }

        /**
         * KafkaConsumer calls the interceptor's onCommit() method after committing offsets.
         * This method can be used to record and trace the committed offsets. For example, when the
         * consumer uses the no-argument commitSync(), we do not know the details of the committed
         * offsets, but the interceptor's onCommit() method makes them visible.
         **/
        public void onCommit(Map<TopicPartition, OffsetAndMetadata> offsets) {
            for (ConsumerInterceptor<K, V> interceptor : this.interceptors) {
                try {
                    interceptor.onCommit(offsets);
                } catch (Exception e) {
                    // do not propagate interceptor exception, just log
                    log.warn("Error executing interceptor onCommit callback", e);
                }
            }
        }

        @Override
        public void close() {
            for (ConsumerInterceptor<K, V> interceptor : this.interceptors) {
                try {
                    interceptor.close();
                } catch (Exception e) {
                    log.error("Failed to close consumer interceptor ", e);
                }
            }
        }
    }

- A custom consumer interceptor

    @Component
    public class MyConsumerInterceptor implements ConsumerInterceptor<String, String> {

        // Prefix every record value with "interceptor-" before it is returned by poll()
        @Override
        public ConsumerRecords<String, String> onConsume(ConsumerRecords<String, String> records) {
            Map<TopicPartition, List<ConsumerRecord<String, String>>> newRecords = new HashMap<>();
            for (TopicPartition partition : records.partitions()) {
                List<ConsumerRecord<String, String>> recs = records.records(partition);
                List<ConsumerRecord<String, String>> newRecs = new ArrayList<>();
                for (ConsumerRecord<String, String> rec : recs) {
                    String newValue = "interceptor-" + rec.value();
                    ConsumerRecord<String, String> newRec = new ConsumerRecord<>(rec.topic(),
                            rec.partition(), rec.offset(), rec.key(), newValue);
                    newRecs.add(newRec);
                }
                newRecords.put(partition, newRecs);
            }
            return new ConsumerRecords<>(newRecords);
        }

        // Print every committed offset
        @Override
        public void onCommit(Map<TopicPartition, OffsetAndMetadata> offsets) {
            offsets.forEach((tp, offsetAndMetadata) -> {
                System.out.println(tp + " : " + offsetAndMetadata.offset());
            });
        }

        @Override
        public void close() {
        }

        @Override
        public void configure(Map<String, ?> configs) {
        }
    }

- Consumers also have the concept of an interceptor chain. As with the producer's interceptor chain, the interceptors run one by one in the order given in the interceptor.classes parameter (multiple interceptors are separated by commas). Side effects need the same caution here: if one interceptor in the chain fails, the next interceptor continues from the output of the last interceptor that succeeded.
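The "continue from the last successful interceptor" behaviour of the chain can be reproduced without Kafka. In this sketch plain `UnaryOperator<String>` functions stand in for interceptors and a string stands in for the record batch; the class name `InterceptorChainSketch` and the three demo interceptors are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.UnaryOperator;

// Sketch of an interceptor chain in which a failing step is logged and skipped.
class InterceptorChainSketch {

    // Run the interceptors in order. If one throws, its result is discarded and the next
    // interceptor receives the output of the last interceptor that succeeded.
    static String runChain(List<UnaryOperator<String>> interceptors, String value) {
        String current = value;
        for (UnaryOperator<String> interceptor : interceptors) {
            try {
                current = interceptor.apply(current);
            } catch (RuntimeException e) {
                System.err.println("interceptor failed, continuing: " + e.getMessage());
            }
        }
        return current;
    }

    // Three illustrative interceptors; the second one always fails.
    static List<UnaryOperator<String>> demoChain() {
        List<UnaryOperator<String>> chain = new ArrayList<>();
        chain.add(v -> "a-" + v);
        chain.add(v -> { throw new IllegalStateException("boom"); });
        chain.add(v -> "c-" + v);
        return chain;
    }

    public static void main(String[] args) {
        // The third interceptor sees "a-msg", the output of the first one.
        System.out.println(runChain(demoChain(), "msg"));
    }
}
```

The side effect to watch for is visible here: the third interceptor cannot tell that the second one was skipped, so it must not assume that every earlier step has been applied.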

2.5 Multithreading

- KafkaProducer is thread-safe, whereas KafkaConsumer is not. KafkaConsumer defines an acquire() method that checks whether only one thread is currently operating on it.

    private void acquire() {
        long threadId = Thread.currentThread().getId();
        // Fail if another thread currently holds the consumer
        if (threadId != currentThread.get() && !currentThread.compareAndSet(NO_CURRENT_THREAD, threadId))
            throw new ConcurrentModificationException("KafkaConsumer is not safe for multi-threaded access");
        refcount.incrementAndGet();
    }

- Every public method of KafkaConsumer calls acquire() before doing its work; the only exception is wakeup().

- The fact that KafkaConsumer is not thread-safe does not mean that messages can only be consumed in a single thread.
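One common arrangement, offered here as an assumption because the text does not spell out a specific model, is to keep all consumer calls on a single polling thread and hand the fetched records to a pool of worker threads. The sketch below contains no Kafka calls: a list of strings stands in for one fetched batch, and `WorkerPoolSketch`, `handle`, and `processBatch` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Sketch: one thread owns the consumer and polls; record handling is fanned out to workers.
class WorkerPoolSketch {

    // Stand-in for the business logic applied to one record.
    static String handle(String record) {
        return record.toUpperCase();
    }

    // The calling thread plays the role of the single polling thread. It submits every record
    // of the batch to the pool and waits for all of them before returning, which is the point
    // at which a real consumer could safely commit the offsets of the batch.
    static List<String> processBatch(List<String> batch, int workerCount) {
        ExecutorService pool = Executors.newFixedThreadPool(workerCount);
        try {
            List<Future<String>> pending = new ArrayList<>();
            for (String record : batch) {
                Callable<String> task = () -> handle(record);
                pending.add(pool.submit(task));
            }
            List<String> results = new ArrayList<>();
            for (Future<String> future : pending) {
                results.add(future.get()); // results are collected in submission order
            }
            return results;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for workers", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("a worker failed", e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(processBatch(Arrays.asList("a", "b", "c"), 2));
    }
}
```

Because only the polling thread would ever touch the consumer, the acquire() check is never violated, while the processing itself still runs in parallel.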