Notes on Face Liveness Detection

Liveness detection

PAD (presentation attack detection)

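- Action-based (cooperative) liveness detection: the user is asked to perform specified actions, and the system decides liveness by tracking the state of the eyes, mouth, and head pose in real time.

- H5 (HTML5) video liveness detection: the user uploads a video recorded on the spot, reading aloud a randomly assigned voice verification code while recording. Liveness is decided by analyzing the face information in the video and checking whether the spoken code matches.

- Silent liveness detection: unlike the dynamic (action-based) methods, silent liveness detection requires no action; the user simply faces the camera naturally for 3 to 4 seconds. A real face is never perfectly still: it shows micro-expressions such as eyelid and eyeball movement, blinking, and stretching of the lips and surrounding cheeks, and these cues can be used against spoofing.

- Image-based liveness detection: decides liveness from flaws in the portrait (moiré patterns, imaging distortion, etc.). It is effective against re-capture attacks such as photographing a screen, and the decision can use a single image or several images.

- Near-infrared (NIR) liveness detection: uses NIR imaging to decide liveness at night or without natural light. Its imaging properties (e.g., screens do not show up in NIR images, and different materials have different reflectance) make the decision highly robust.

- 3D structured-light liveness detection: uses structured-light imaging to build a depth image from the light reflected by the face surface and decides liveness from it; it strongly resists photo, video, screen, and mask attacks.

- Optical flow: uses the temporal changes and correlations of pixel intensities in an image sequence to determine the "motion" at each pixel position, i.e., per-pixel motion information, and then applies difference-of-Gaussian filters, LBP features, and an SVM for statistical analysis. Because the optical flow field is sensitive to object motion, it can detect eye movement and blinking in a unified way. This approach allows blind testing without user cooperation.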
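The action-based check above depends on tracking eye state. One common way to do this, swapped in here and not described in these notes, is the eye aspect ratio (EAR) of Soukupová and Čech, computed from six eye landmarks p1..p6 (p1/p4 the eye corners, p2/p3 on the upper lid, p6/p5 on the lower lid). This is a minimal sketch assuming the landmarks are already available; the 0.2 threshold and the two-frame minimum are hypothetical values.

```python
import math

def eye_aspect_ratio(pts):
    """EAR = (|p2-p6| + |p3-p5|) / (2 * |p1-p4|) for six (x, y) eye landmarks p1..p6."""
    p1, p2, p3, p4, p5, p6 = pts
    return (math.dist(p2, p6) + math.dist(p3, p5)) / (2.0 * math.dist(p1, p4))

def count_blinks(ear_series, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`.

    threshold and min_frames are hypothetical defaults, not tuned values.
    """
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a closed-eye run still open at the end of the clip
        blinks += 1
    return blinks
```

An action-based system would prompt "please blink" and accept the subject only if `count_blinks` reports at least one blink within the response window.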

Traditional methods:

1) Specular reflection + image quality distortion + color

specular reflection, blurriness features, chromatic moment and color diversity

Di Wen, Hu Han, Anil K. Jain. Face Spoof Detection with Image Distortion Analysis. IEEE Transactions on Information Forensics and Security, 2015

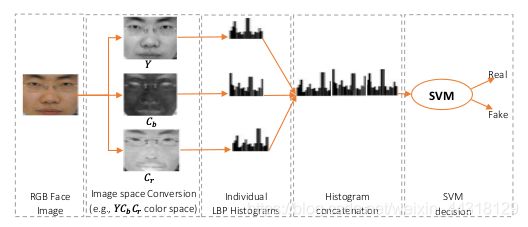
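The chromatic-moment part of these features can be sketched as per-channel color moments (mean, standard deviation, skewness) over the face pixels. This is a minimal sketch: which color space the pixels are in, and any further statistics the paper adds on top of these moments, are not covered here and should be treated as assumptions.

```python
import math

def color_moments(pixels):
    """Return [mean, std, skewness] for each of the 3 channels of a list of (c0, c1, c2) pixels."""
    feats = []
    n = len(pixels)
    for ch in range(3):
        vals = [p[ch] for p in pixels]
        mean = sum(vals) / n
        std = math.sqrt(sum((v - mean) ** 2 for v in vals) / n)
        # Skewness is the third standardized moment; defined as 0 for a constant channel.
        skew = (sum((v - mean) ** 3 for v in vals) / n) / std ** 3 if std > 0 else 0.0
        feats.extend([mean, std, skew])
    return feats
```

The resulting 9-dimensional vector would be concatenated with the specular-reflection, blurriness, and color-diversity features before classification.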

2) Multi-level LBP features of the face in HSV space + LPQ features of the face in YCbCr space

Zinelabidine Boulkenafet, Jukka Komulainen, Abdenour Hadid. Face Spoofing Detection Using Colour Texture Analysis. IEEE Transactions on Information Forensics and Security, 2016
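Both descriptors in 2) operate on color channels rather than on grayscale. A minimal sketch of the color-space step, assuming 8-bit RGB input and the full-range BT.601 (JPEG) YCbCr formulas; each resulting plane would then go to a texture descriptor such as LBP or LPQ, with the per-channel histograms concatenated. `split_channels` is a hypothetical helper.

```python
import colorsys

def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 (JPEG/JFIF) conversion for 8-bit RGB values."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr

def rgb_to_hsv255(r, g, b):
    """HSV with all components in [0, 1], via the standard-library colorsys module."""
    return colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)

def split_channels(image, convert):
    """Convert a 2-D list of RGB pixels and return the three converted channel planes."""
    converted = [[convert(*px) for px in row] for row in image]
    return [[[px[c] for px in row] for row in converted] for c in range(3)]
```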

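3) Features designed around the differences in micro-motions between live and non-live faces. One approach first enhances facial micro-motions by motion magnification and then extracts histogram of oriented optical flow (HOOF) and dynamic-texture LBP-TOP features; the other uses dynamic mode decomposition (DMD) to obtain the subspace image with the largest motion energy and then analyzes its texture.

Santosh Tirunagari, Norman Poh. Detection of Face Spoofing Using Visual Dynamics. IEEE Transactions on Information Forensics and Security, 2015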
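A simplified sketch of HOOF, assuming per-pixel optical-flow vectors (dx, dy) have already been computed: each vector votes into an orientation bin weighted by its magnitude, and the histogram is normalized to sum to 1. The original HOOF also mirrors orientations about the vertical axis so that left and right motion share bins; this sketch omits that step.

```python
import math

def hoof(flow_vectors, num_bins=8):
    """Simplified histogram of oriented optical flow (no left/right mirroring)."""
    hist = [0.0] * num_bins
    for dx, dy in flow_vectors:
        mag = math.hypot(dx, dy)
        if mag == 0:
            continue  # zero vectors carry no orientation
        angle = math.atan2(dy, dx)  # in [-pi, pi]
        # Map [-pi, pi] onto bin indices; the modulo folds angle == pi into bin 0.
        idx = int((angle + math.pi) / (2 * math.pi) * num_bins) % num_bins
        hist[idx] += mag
    total = sum(hist)
    return [h / total for h in hist] if total > 0 else hist
```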

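4) First, the different frequency-domain distributions of the pulse signal separate live faces from photo attacks (the heart-rate signal extracted from a face in a photo is distributed differently). If this first decision is "live", an LBP texture classifier is cascaded to separate live faces from screen attacks, because the heart-rate distribution of a face in a screen video is close to that of a live face.

Xiaobai Li, ..., Guoying Zhao. Generalized face anti-spoofing by detecting pulse from face videos. 23rd International Conference on Pattern Recognition (ICPR), 2016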
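The frequency-domain pulse cue can be illustrated by finding the dominant frequency of a per-frame color trace within the human heart-rate band. This sketch assumes the input is something like the mean green value of a face ROI per frame; the 0.7 to 4 Hz band (42 to 240 bpm) and the naive DFT are illustrative choices, not the paper's exact pipeline.

```python
import math

def dominant_pulse_bpm(signal, fps, low_hz=0.7, high_hz=4.0):
    """Return the strongest frequency (in beats per minute) of a 1-D trace within [low_hz, high_hz]."""
    n = len(signal)
    mean = sum(signal) / n
    x = [v - mean for v in signal]  # remove the DC component
    best_freq, best_power = 0.0, -1.0
    for k in range(1, n // 2 + 1):
        freq = k * fps / n
        if not (low_hz <= freq <= high_hz):
            continue
        # Naive DFT coefficient for bin k.
        re = sum(x[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
        im = -sum(x[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    return best_freq * 60.0
```

In the cascade described above, spectrum features like this would drive the first (live vs. photo) stage, and only samples judged live would reach the LBP texture stage.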

5) Multi-scale local binary patterns (LBP) followed by a non-linear SVM (texture)

J. Määttä, A. Hadid, and M. Pietikäinen, “Face spoofing detection from single images using micro-texture analysis,” in Biometrics (IJCB), 2011 international joint conference on. IEEE, 2011, pp. 1–7.
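A minimal sketch of the basic single-scale LBP histogram (8 neighbors, radius 1) on a grayscale image given as nested lists. The paper uses multi-scale and uniform-pattern LBP variants; a multi-scale version would repeat this at several radii and concatenate the histograms before passing them to a non-linear SVM (for example an RBF-kernel SVM).

```python
def lbp_histogram(img):
    """Basic 3x3 LBP: normalized 256-bin histogram over the interior pixels of a 2-D grayscale list."""
    # Neighbor offsets clockwise from top-left; bit i is set when neighbor i >= center.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    hist = [0] * 256
    h, w = len(img), len(img[0])
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            c = img[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(offsets):
                if img[y + dy][x + dx] >= c:
                    code |= 1 << bit
            hist[code] += 1
    total = sum(hist)
    return [v / total for v in hist] if total else hist
```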

6) LBP-TOP features, which contain both spatial and temporal descriptors, encode the motion information together with the face texture.

T. de Freitas Pereira, A. Anjos, J. M. De Martino, and S. Marcel, “LBP-TOP based countermeasure against face spoofing attacks,” in Asian Conference on Computer Vision. Springer, 2012, pp. 121–132.

7) Multiple difference-of-Gaussian (DoG) filters remove noise and low-frequency information, and the remaining high-frequency information forms the feature vector for an SVM classifier.

Z. Zhang, J. Yan, S. Liu, Z. Lei, D. Yi, and S. Z. Li, “A face anti-spoofing database with diverse attacks,” in Biometrics (ICB), 2012 5th IAPR international conference on. IEEE, 2012, pp. 26–31.

8) The motion relation between foreground and background

A. Anjos and S. Marcel, “Counter-measures to photo attacks in face recognition: a public database and a baseline,” in Biometrics (IJCB), 2011 international joint conference on. IEEE, 2011, pp. 1–7.

9) (2015) Face Anti-Spoofing Based on Color Texture Analysis

Deep learning methods: paper summaries

1 (2018) Discriminative Representation Combinations for Accurate Face Spoofing Detection

SPMT: spatial pyramid coding micro-texture; local

SSD: Single Shot MultiBox Detector; context

TFBD: template face matched binocular depth; stereo

1) SSD + SPMT: one image

2) TFBD + SPMT: binocular image pair

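Liveness detection is built directly into the face detection module (SSD, MTCNN, etc.) as an extra class: each detected bbox gets confidences for three classes (background, real face, fake face), so some non-live faces can be filtered out at an early stage.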

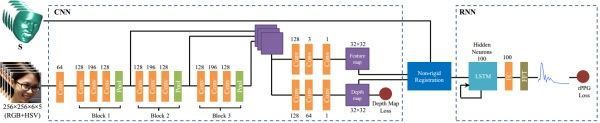
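The three-class detection head can be sketched as a per-box softmax over {background, real face, fake face}, keeping only boxes whose most likely class is a real face. The class order, the `filter_live_boxes` helper, and the 0.5 threshold are hypothetical; the logits would come from the detector.

```python
import math

CLASSES = ("background", "real_face", "fake_face")  # hypothetical class order

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    s = sum(exps)
    return [e / s for e in exps]

def filter_live_boxes(detections, min_real=0.5):
    """Keep boxes whose top class is 'real_face' with probability >= min_real.

    `detections` is a list of (bbox, logits) pairs with one logit per entry in CLASSES.
    """
    kept = []
    for bbox, logits in detections:
        probs = softmax(logits)
        best = max(range(len(CLASSES)), key=lambda i: probs[i])
        if CLASSES[best] == "real_face" and probs[best] >= min_real:
            kept.append((bbox, probs[best]))
    return kept
```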

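2 (2018) Learning Deep Models for Face Anti-Spoofing: Binary or Auxiliary Supervision

Binary classification with softmax only learns whichever cue happens to separate the training set; the result is a black box and generalizes poorly.

Proposes auxiliary supervision to extract spatial and temporal information: face depth (pixel-wise, CNN) and rPPG signals (sequence-wise, RNN).

Designs a deep framework that predicts pulse statistics and a depth map in a quasi end-to-end way ("quasi" because no classifier is attached at the end: the decision is made by thresholding a distance computed from the sample's features).

1) Earlier methods treat liveness detection as a binary classification problem and let the DNN learn it directly; the cues learned this way are neither general nor discriminative enough.

2) The binary classification problem is replaced with targeted feature supervision, i.e., regressing pulse statistics and regressing a depth map, which makes the network learn exactly these two kinds of features (though it may well pick up color texture along the way; black-box networks are clever).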

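Depth map regression: landmarks are used for 3DMM fitting to obtain the 3D face shape, which is then thresholded to remove the background, giving the ground-truth depth map; an L2 loss is applied between this ground truth and the depth map estimated by the network.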
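A minimal sketch of the depth supervision target and loss, assuming the 3DMM-fitted depth map is already rendered as a 2-D array of normalized values. The background rule (values at or below a threshold set to zero) is a hypothetical stand-in for the thresholding step, and the L2 loss is computed as a mean squared error. Spoof faces are commonly given a flat, all-zero depth target, which `spoof_depth_target` reflects.

```python
def depth_ground_truth(depth, mask_threshold=0.0):
    """Zero out the background: pixels with fitted depth <= threshold become 0 (hypothetical rule)."""
    return [[d if d > mask_threshold else 0.0 for d in row] for row in depth]

def depth_l2_loss(pred, target):
    """Mean squared error between the estimated and ground-truth depth maps."""
    n = sum(len(r) for r in target)
    return sum((p - t) ** 2 for pr, tr in zip(pred, target) for p, t in zip(pr, tr)) / n

def spoof_depth_target(shape):
    """Flat (all-zero) depth target for print/replay attacks."""
    h, w = shape
    return [[0.0] * w for _ in range(h)]
```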

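The highlight of the paper is the Non-rigid Registration Layer, which aligns the non-rigid motion of the face across frames (pose, expression, etc.) so that the RNN can better learn temporal pulse information.

Why is this alignment needed? In action recognition, one can simply stack sampled or consecutive frames and feed them to the network, assuming the camera is static and the subject moves. Here, however, pulse features are extracted from consecutive face frames, and the signal of interest is the temporal intensity change of corresponding ROIs on the face, so the face itself must be treated as fixed, the way the camera is in action recognition: the frames must be aligned so that each ROI stays in place.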

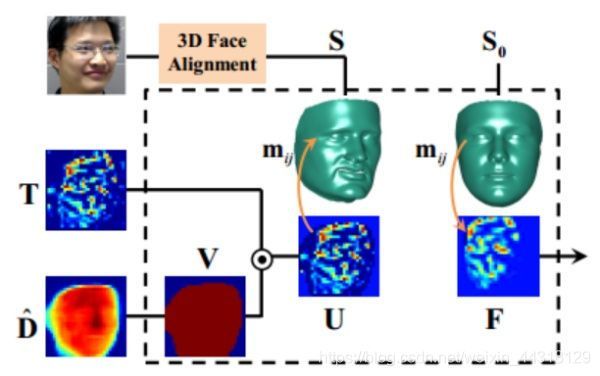

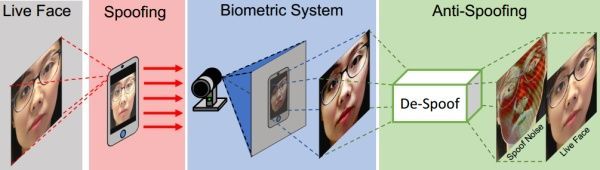

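3 (2018) Face De-Spoofing: Anti-Spoofing via Noise Modeling

Assumes the spoof noise is ubiquitous and repetitive.

A single-frame method inspired by image denoising and deblurring. A noisy or blurred image can be viewed as the original image with a noise or blur operation applied, and denoising or deblurring means estimating the noise distribution or the blur kernel in order to reconstruct the original. The paper treats the live face image as the original image and the spoof face image as a noise-degraded version x of it, so the task becomes estimating the spoof noise and then using this noise pattern as the feature for classification.

This raises a question: the datasets have no pixel-level ground truth, and there is no prior knowledge of the spoof noise model (if the noise model were known, spoof faces could be generated from live faces). So what can serve as ground truth, and how should a network be designed to estimate the spoof noise?

As in typical low-level image tasks, the paper uses an encoder-decoder to obtain the spoof noise N and then reconstructs the live face through the residual; this is the DS Net in the figure below. To make sure the learned noise is meaningful for different inputs, three losses derived from prior knowledge constrain it: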

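1) Magnitude loss (when the input is a live face, N should be close to 0);

2) Repetitive loss (the noise map of a spoof face should have a large peak in the high-frequency band);

3) Map loss (pushes the deep feature map of a real face toward all zeros and that of a spoof face toward all ones).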
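Two of the three constraints, plus the residual reconstruction, are simple enough to sketch on nested lists: the live estimate is the input minus the estimated noise, the magnitude loss penalizes noise on live inputs, and the map loss pushes a feature map toward all 0s (live) or all 1s (spoof). Squared-error forms are assumed here and the paper's exact norms may differ; the repetitive loss (a Fourier-domain peak on spoof noise) is omitted.

```python
def reconstruct_live(spoof_img, noise):
    """Residual reconstruction: live estimate = input - estimated spoof noise (element-wise)."""
    return [[x - n for x, n in zip(xr, nr)] for xr, nr in zip(spoof_img, noise)]

def magnitude_loss(noise, is_live):
    """For live inputs, push the estimated noise toward 0 (mean squared magnitude); no penalty otherwise."""
    if not is_live:
        return 0.0
    vals = [v for row in noise for v in row]
    return sum(v * v for v in vals) / len(vals)

def map_loss(feature_map, is_live):
    """Push the map toward all 0s for live faces and all 1s for spoof faces (mean squared error)."""
    target = 0.0 if is_live else 1.0
    vals = [v for row in feature_map for v in row]
    return sum((v - target) ** 2 for v in vals) / len(vals)
```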

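What are VQ-Net and DQ-Net on the right side of the network for? Since there is no ground truth for the live face, the reconstruction has to be kept close to the live-face distribution in other ways: the authors use a GAN (VQ-Net) to make reconstructed live faces match the distribution of real live faces, and a pre-trained depth model (DQ-Net) to make the depth map of the reconstructed live face match that of live faces.

Pros: the visualizations finally show everyone what spoof noise looks like.

Cons: hard to deploy in real scenarios. The model assumes the spoof noise is strongly present; when real-world live face images are of low quality while the attacks are of relatively high quality, the spoof-noise approach breaks down.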

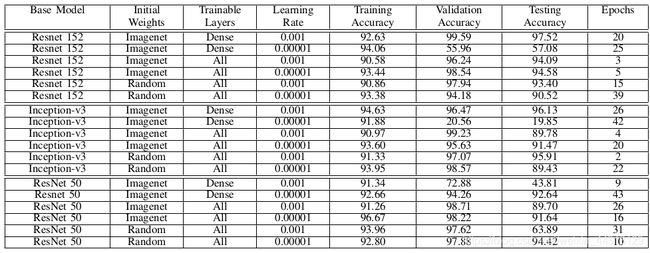

**4 (2019) A Performance Evaluation of Convolutional Neural**

Tests several backbone CNNs, with and without transfer learning, with and without random initialization, and with different learning rates.

The paper treats face anti-spoofing as a two-class classification problem, with a real-face class and a spoofed-face class. The CNN model predicts class scores for the training images and computes the categorical cross-entropy loss.

TABLE : The training, validation and testing performance comparison among Inception-v3, ResNet50 and ResNet152 models in terms of the accuracy, convergence rate, and varying parameters like initial weights, number of trainable layers and learning rate. In this table, the ‘Epochs’ is the number of epochs for highest validation accuracy.

5 (2019) Deep Transfer Across Domains for Face Anti-spoofing

Argues that existing methods generalize poorly, for two reasons:

1)the variety of spoofing materials can make the spoofing attacks quite different.

2)limited labeled data is available for training in face anti-spoofing.

We propose to learn a shared feature subspace where the distributions of the real access samples (genuine) from different domains, and the distributions of different types of spoofing attacks (fake) from different domains are drawn close, respectively. In the proposed framework, the sufficient labeled source data are used to learn discriminative representations that distinguish the genuine samples and the fake samples, meanwhile the sparsely labeled target samples are fed to the network to calculate the feature distribution distance between the genuine samples from the source and the target domain, and between the fake samples from the source and the target domains, corresponding to their materials. The kernel approach is adopted to map the features output from the CNN into a common kernel space, and the Maximum Mean Discrepancy (MMD) is adopted to measure the distribution distance between the samples from the source and target domains. This feature distribution distance is treated as a domain loss term added to the objective function and minimized along with training of the network.

Figure : The flowchart of the proposed framework, where every input batch contains half the source images and half the target images. Features of the two domains output from the last pooling layer are used to calculate the distribution distance with kernel based MMD. The network is trained using the classification loss along with the distribution distance which is taken as domain loss.
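The domain loss can be sketched as a kernel MMD between source and target features, computed separately for genuine and fake samples. This is a minimal sketch assuming a single Gaussian kernel with a hypothetical bandwidth `sigma` and the biased MMD² estimate; the paper's kernel choice and its per-material matching of fake samples are not reproduced.

```python
import math

def gaussian_kernel(a, b, sigma=1.0):
    d2 = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-d2 / (2 * sigma ** 2))

def mmd2(source, target, sigma=1.0):
    """Biased estimate of squared MMD between two sets of feature vectors (Gaussian kernel)."""
    def mean_k(xs, ys):
        return sum(gaussian_kernel(x, y, sigma) for x in xs for y in ys) / (len(xs) * len(ys))
    return mean_k(source, source) + mean_k(target, target) - 2 * mean_k(source, target)

def domain_loss(src_live, tgt_live, src_fake, tgt_fake, sigma=1.0):
    """Class-wise alignment: genuine-vs-genuine MMD plus fake-vs-fake MMD."""
    return mmd2(src_live, tgt_live, sigma) + mmd2(src_fake, tgt_fake, sigma)
```

During training, this term would be added to the classification loss on each mixed source/target batch.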

6 (2018) Deep Tree Learning for Zero-shot Face Anti-Spoofing

Defines the detection of unknown spoof attacks as Zero-Shot Face Anti-spoofing (ZSFA). A novel Deep Tree Network (DTN) is proposed to partition the spoof samples into semantic sub-groups in an unsupervised fashion. Assuming there are both homogeneous features among different spoof types and distinct features within each spoof type, a tree-like model is well suited to this case: it learns the homogeneous features in the early tree nodes and the distinct features in later tree nodes.

Figure : The proposed Deep Tree Network (DTN) architecture. (a) the overall structure of DTN. A tree node consists of a Convolutional Residual Unit (CRU) and a Tree Routing Unit (TRU), and a leaf node consists of a CRU and a Supervised Feature Learning (SFL) module. (b) the concept of Tree Routing Unit (TRU): finding the base with largest variations; (c) the structure of each Convolutional Residual Unit (CRU); (d) the structure of the Supervised Feature Learning (SFL) in the leaf nodes.
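The TRU idea of "finding the base with largest variations" can be sketched as routing each sample by the sign of its projection onto the top principal direction of the node's features. This is an illustrative sketch, not DTN's trained routing: the direction is found with plain power iteration on the covariance, and the generic all-ones start vector would fail in the degenerate case where it is orthogonal to the top eigenvector.

```python
import math

def top_principal_direction(samples, iters=100):
    """Power iteration on the covariance of centered samples; returns (mean, unit eigenvector)."""
    n, d = len(samples), len(samples[0])
    mean = [sum(s[j] for s in samples) / n for j in range(d)]
    centered = [[s[j] - mean[j] for j in range(d)] for s in samples]
    cov = [[sum(c[i] * c[j] for c in centered) / n for j in range(d)] for i in range(d)]
    v = [1.0] * d  # generic start vector (see the caveat above)
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w)) or 1.0
        v = [x / norm for x in w]
    return mean, v

def route(sample, mean, v):
    """Send a sample to the left child if its centered projection on v is negative, else right."""
    proj = sum((s - m) * vi for s, m, vi in zip(sample, mean, v))
    return "left" if proj < 0 else "right"
```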

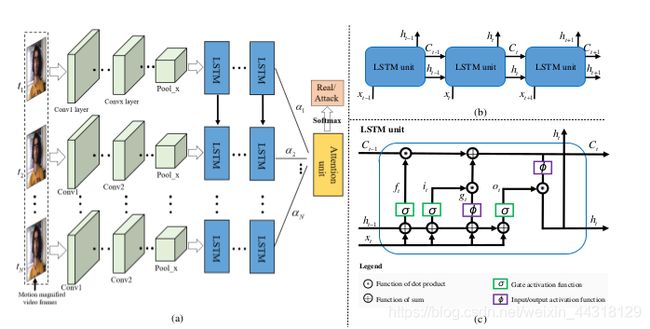

7 (2019) Enhance the Motion Cues for Face Anti-Spoofing using

Fine-grained motions: e.g., blinking, hand tremor.

Extracts highly discriminative features of video frames with a conventional Convolutional Neural Network (CNN), then feeds the extracted features into a Long Short-Term Memory (LSTM) network to capture the temporal dynamics in videos. To make the fine-grained motions easier to perceive during training, Eulerian motion magnification is used as preprocessing to enhance the facial expressions exhibited by individuals, and an attention mechanism is embedded in the LSTM so that the model learns to focus selectively on the dynamic frames across the video clips.

Fig: (a) The flowchart of the proposed CNN-LSTM framework. (b) The cascaded LSTM architecture. (c) Illustration of a single LSTM unit; the current state t depends on the past state t-1 of the same neuron.
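A generic sketch of temporal attention pooling over LSTM outputs: each time step gets a score from a learned vector, the scores are softmax-normalized, and the clip representation is the attention-weighted sum. The dot-product scoring with vector `w` is an assumption; the paper's attention formulation may differ.

```python
import math

def attention_pool(hidden_states, w):
    """score_t = w . h_t, alpha = softmax(scores), context = sum_t alpha_t * h_t."""
    scores = [sum(wi * hi for wi, hi in zip(w, h)) for h in hidden_states]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    dim = len(hidden_states[0])
    context = [sum(a * h[j] for a, h in zip(alphas, hidden_states)) for j in range(dim)]
    return context, alphas
```

Frames with large `alphas` are the "dynamic frames" the model attends to; the `context` vector would feed the final live/spoof classifier.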

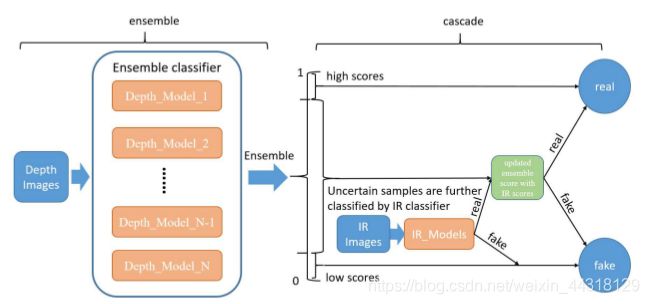

8 (2019) FeatherNets: Convolutional Neural Networks as Light as Feather

Proposes a very small network structure; fixes the weakness of Global Average Pooling with a Streaming Module; uses depth images only (the depth information is estimated from RGB images); adopts an "ensemble + cascade" structure.

Figure. Streaming Module. The last block's output is down-sampled by a depthwise convolution [28, 29] with stride larger than 1 and flattened directly into a one-dimensional vector.

Figure. Multi-Modal Fusion Strategy: Two stages cascaded, stage 1 is an ensemble classifier consisting of several depth models. Stage 2 employs IR models to classify the uncertain samples from stage 1.
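The two-stage decision can be sketched as follows: average the depth models' live probabilities, decide immediately when the average is confident, and fall back to the IR models only for the uncertain band. The thresholds and the callable interface are hypothetical.

```python
def cascade_predict(depth_scores, ir_scores_fn, low=0.3, high=0.7):
    """Two-stage "ensemble + cascade" decision (hypothetical thresholds).

    depth_scores: live probabilities from several depth models (stage 1 ensemble).
    ir_scores_fn: callable returning IR-model live probabilities; only called
    for samples that stage 1 finds uncertain.
    Returns (label, stage) with label 1 = live, 0 = attack.
    """
    p = sum(depth_scores) / len(depth_scores)
    if p >= high:
        return 1, 1
    if p <= low:
        return 0, 1
    ir = ir_scores_fn()
    return (1 if sum(ir) / len(ir) >= 0.5 else 0), 2
```

Passing a callable keeps the IR models from running at all on samples that stage 1 already settles.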

9 (2018) LiveNet: Improving Features Generalization for Face Liveness Detection

Continuous data randomization (similar to bootstrapping)

Fig. The sampling is done in the form of mini-batches. (a) Conventional method for training CNN Networks. (b) Proposed method for training CNN networks.
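The contrast in the figure can be sketched as two batch samplers: the conventional one shuffles once per epoch and walks through disjoint mini-batches, while the randomized one draws every mini-batch independently. Drawing indices with replacement (bootstrap-style) is an assumption made for this sketch.

```python
import random

def conventional_batches(n, batch_size, rng):
    """One epoch: shuffle once, then walk through disjoint mini-batches."""
    idx = list(range(n))
    rng.shuffle(idx)
    return [idx[i:i + batch_size] for i in range(0, n, batch_size)]

def randomized_batches(n, batch_size, num_batches, rng):
    """Continuous randomization: each mini-batch is drawn independently, with replacement."""
    return [rng.choices(range(n), k=batch_size) for _ in range(num_batches)]
```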

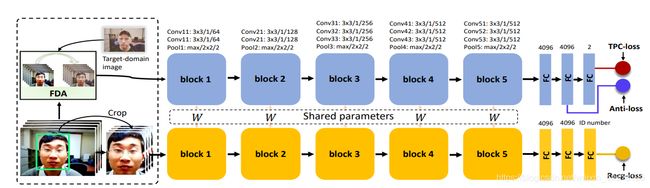

10 (2019) Learning Generalizable and Identity-Discriminative Representations for Face Anti-Spoofing

1) Total Pairwise Confusion (TPC) loss

2) A Fast Domain Adaptation (FDA) component in the CNN model to alleviate the negative effects of domain changes

3) The Generalizable Face Authentication CNN (GFA-CNN) model, which works in a multi-task manner, performing face anti-spoofing and face recognition simultaneously

Figure : Architecture of proposed GFA-CNN. The whole network contains two branches. The face anti-spoofing branch (upper) takes as input the domain-adaptive images transferred by FDA and is optimized by TPC-loss and Anti-loss, while the face recognition branch (bottom) takes the cropped face images as input and is trained by minimizing Recog-loss. The structure settings are shown on top of each block, where "ID number" indicates the number of subjects involved in training. The two branches share parameters during training.

11 (2019) Improving Face Anti-Spoofing by 3D Virtual Synthesis

Synthesizes additional spoof data.

12 (2019) Generalized Presentation Attack Detection: a face anti-spoofing evaluation proposal

1) Proposes GRAD-GPAD, a framework for systematically evaluating the generalization properties of face-PAD methods.

2) Proposes two new evaluation protocols: Cross-FaceResolution and Cross-Conditions.

Existing protocols: Grandtest, Cross-Dataset, One-PAI, Unseen Attacks (Cross-PAI), and Unseen Capture Devices.
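Whichever protocol is used, PAD results are commonly reported with the ISO/IEC 30107-3 error rates APCER, BPCER, and their average ACER; these come from the standard rather than from this paper. A simplified sketch with label 1 = bona fide and 0 = attack: the standard computes APCER per attack type and reports the worst one, which this version does not.

```python
def pad_metrics(labels, predictions):
    """labels/predictions: 1 = bona fide (live), 0 = attack.

    APCER: share of attack presentations accepted as bona fide.
    BPCER: share of bona fide presentations rejected as attacks.
    ACER: their average.
    """
    attacks = [p for l, p in zip(labels, predictions) if l == 0]
    bona = [p for l, p in zip(labels, predictions) if l == 1]
    apcer = sum(1 for p in attacks if p == 1) / len(attacks)
    bpcer = sum(1 for p in bona if p == 0) / len(bona)
    return apcer, bpcer, (apcer + bpcer) / 2
```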

13( 2019)Exploiting temporal and depth information for multi-frame face anti-spoofing

estimate depth information from multiple RGB frames

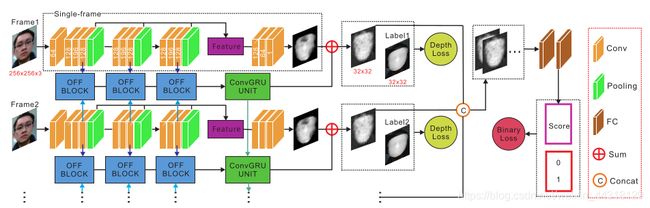

Figure . The pipeline of proposed architecture. The inputs are consecutive frames in a fixed interval. Our single-frame part aims to

extract features at various levels and to output the single-frame estimated facial depth. OFF blocks take single-frame features from two

consecutive frames as inputs and calculate short-term motion features. Then the final OFF features are fed into the ConvGRUs to obtain

long-term motion information, and output the residual of single-frame facial depth. Finally, the combined estimated multi-frame depth

maps are supervised by the depth loss and binary loss in respective manners.

14( 2019)Meta Anti-spoofing: Learning to Learn in Face Anti-spoofing

a few-shot learning problem with evolving new attacks

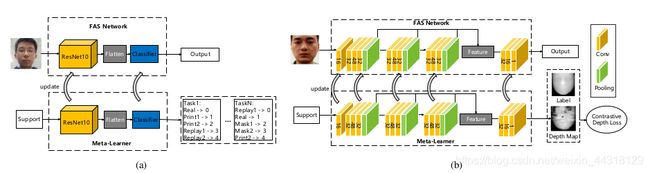

Figure. (a) Network structure of Meta-FAS-CS which aims to train a meta-learner through classification label. (b) Network structure of

Meta-FAS-DR which aims to train a meta-learner through depth label.

15 (2019) Deep Anomaly Detection for Generalized Face Anti-Spoofing

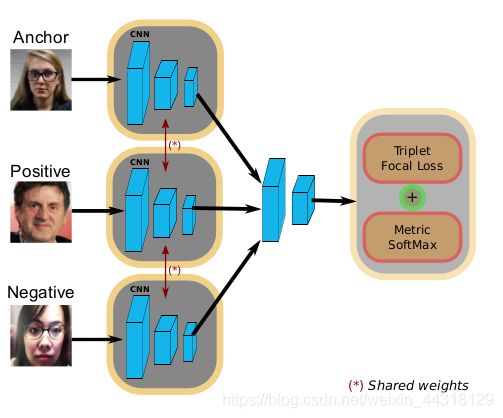

Figure : We propose a deep metric learning approach, using a set of Siamese CNNs, in conjunction with the combination of a triplet focal loss and a novel “metric softmax” loss. The latter accumulates the probability distribution of each pair within the triplet. Our aim is to learn a feature representation that allows us to detect impostor samples as anomalies.
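The anomaly-detection idea can be sketched with a standard triplet margin loss for training and a distance-to-genuine-centroid score at test time. This swaps in the plain triplet loss for the paper's triplet focal loss and metric-softmax loss, and the centroid scoring rule is an assumption.

```python
import math

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Standard triplet margin loss: max(0, d(a, p) - d(a, n) + margin)."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)

def anomaly_score(embedding, genuine_embeddings):
    """Distance to the centroid of genuine embeddings; larger means more likely an impostor."""
    d = len(embedding)
    k = len(genuine_embeddings)
    centroid = [sum(e[j] for e in genuine_embeddings) / k for j in range(d)]
    return euclidean(embedding, centroid)
```

A sample would be flagged as an attack when its `anomaly_score` exceeds a threshold chosen on genuine validation data.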

16 (2019) Aurora Guard: Real-Time Face Anti-Spoofing via Light Reflection

Extracts normal cues via light reflection analysis, then uses an end-to-end trainable multi-task Convolutional Neural Network (CNN) that not only recovers subjects' depth maps to assist liveness classification but also provides a light CAPTCHA checking mechanism in the regression branch to further improve system reliability.

Figure : Overview of Aurora Guard. From facial reflection frames encoded by casted light CAPTCHA, we estimate the normal cues. In the classification branch, we recover the depth maps from the normal cues, and then perform depth-based liveness classification. In the regression branch, we obtain the estimated light CAPTCHA.

17 (2019) Towards Real-time Eyeblink Detection in The Wild: Dataset, Theory and Practices

After locating and tracking the human eye with the SeetaFace engine and a KCF tracker respectively, a modified LSTM model able to capture multi-scale temporal information is proposed to perform eyeblink verification. A feature extraction approach that reveals appearance and motion characteristics simultaneously is also proposed.

18( 2018)Exploring Hypergraph Representation on Face Anti-spoofing Beyond 2D Attacks

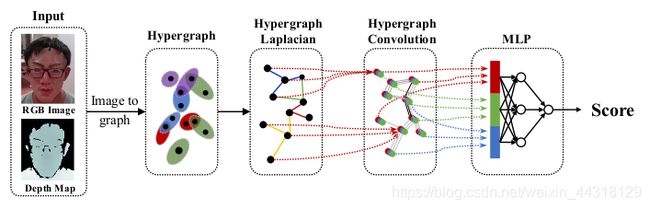

construct a computation-efficient and posture-invariant face representation with only a few key points on hypergraphs. The hypergraph representation is then fed into the designed HGCNN with hypergraph convolution for feature extraction, while the depth auxiliary is also exploited for 3D mask anti-spoofing

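Summary

Deep-learning liveness detection methods proposed in recent years follow four main lines:

1) Using images alone as input to a CNN

The difficulties are poor generalization and black-box features. Proposed remedies include:

- Learning the domain difference between datasets: domain loss [paper 5], FDA + TPC loss [paper 10]

- Using auxiliary supervision to learn specific features: face depth (pixel-wise, CNN) [paper 2]

- A new sampling method [paper 9]

- New network architectures, an "ensemble + cascade" structure, and depth images as input [paper 8]

- Treating spoofing information as a kind of noise [paper 3]

- Combining with hand-crafted features [paper 1]

2) Using temporal information to capture fine-grained motions (LSTM) [paper 7] and rPPG signals (RNN) [paper 2]

3) Using unsupervised learning [paper 6]

4) Using binocular image pairs [paper 1]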

Datasets

Publicly downloadable:

NUAA

REPLAY-ATTACK https://www.idiap.ch/dataset/replayattack

CASIA-FASD http://www.cbsr.ia.ac.cn/english/FASDB_Agreement/Agreement.pdf

SIW http://cvlab.cse.msu.edu/spoof-in-the-wild-siw-face-anti-spoofing-database.html

OULU-NPU https://sites.google.com/site/oulunpudatabase/

MSU-MFSD http://biometrics.cse.msu.edu/Publications/Databases/MSUMobileFaceSpoofing/index.htm

MSU_USSA http://biometrics.cse.msu.edu/Publications/Databases/MSU_USSA/

HKBU-MARs http://rds.comp.hkbu.edu.hk/mars/

3DMAD https://www.idiap.ch/dataset/3dmad

UVAD https://recodbr.wordpress.com/code-n-data/#UVAD

REPLAY-MOBILE https://www.idiap.ch/dataset/replay-mobile

ROSE-YOUTU http://rose1.ntu.edu.sg/Datasets/faceLivenessDetection.asp

CS-MAD https://www.idiap.ch/dataset/csmad

SMAD

Not publicly available:

SiW-M, MMFD
