Container Services (3): Automated Monitoring with Prometheus and Grafana

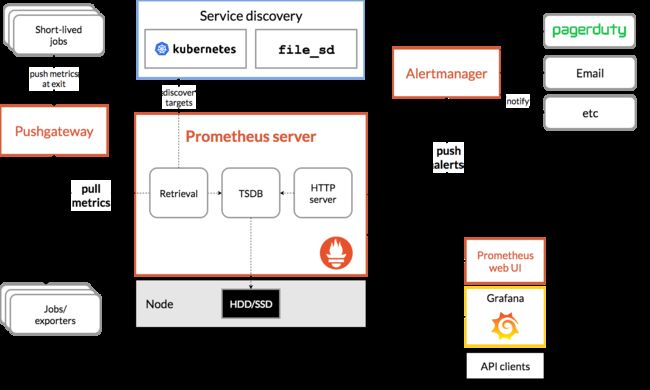

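Prometheus is an all-rounder: it supports container monitoring natively and handles traditional, non-containerized applications just as well. Like every monitoring system, it follows the same pipeline: data collection → data processing → data storage → data visualization → alerting. This article focuses on Prometheus, so we start with an overview.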

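Prometheus overview

Prometheus (known in Chinese as 普罗米修斯) is a monitoring system originally built at SoundCloud. It has been a community open-source project since 2012, with a very active developer and user community. In 2016 it joined the CNCF as the second hosted project after Kubernetes.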

Official site: https://prometheus.io/docs/introduction/overview/

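Prometheus features

- Multi-dimensional data model: time series identified by a metric name and key/value pairs (labels)

- PromQL: a flexible query language that uses this dimensionality to express complex queries

- No reliance on distributed storage; a single server node works on its own

- Time series are collected with a pull model over HTTP

- Pushing time series is supported through the PushGateway component

- Targets are discovered via service discovery or static configuration

- Multiple graphing modes and dashboard support (Grafana)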
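To make the data model and PromQL a little more concrete, here is a minimal sketch of a Prometheus rules file. It relies on the built-in `up` series, which Prometheus records for every scrape target with `job` and `instance` labels. The file name, the rule names, and the `redis` job label are assumptions for illustration, and the file would only be loaded if it were listed under `rule_files` in prometheus.yml, which the configuration in this article does not do.

```yaml
# rules.yml (hypothetical file name)
groups:
  - name: example-rules
    rules:
      # Recording rule: number of reachable targets, aggregated per job label
      - record: job:up:sum
        expr: sum by (job) (up)
      # Alerting rule: a target of the assumed 'redis' job has failed scrapes for 1 minute
      - alert: RedisExporterDown
        expr: up{job="redis"} == 0
        for: 1m
        labels:
          severity: warning
        annotations:
          summary: 'Target {{ $labels.instance }} is not responding to scrapes'
```

The selector `up{job="redis"}` picks time series by label value, and `sum by (job)` aggregates across the remaining labels; both are ordinary PromQL expressions that can also be typed into the Prometheus web UI.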

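Prometheus components and architecture

Deployment

We install everything with Docker. Create a directory named docker-monitor and, inside it, a file docker-compose.yml with the following content: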

    version: '3.8'

    services:
      prometheus:
        image: prom/prometheus:v2.4.3
        container_name: 'prometheus'
        volumes:
          - /data/prometheus/:/etc/prometheus/   # mount the Prometheus configuration files
          - /etc/localtime:/etc/localtime:ro      # sync the container's time with the host; this matters, because mismatched time can prevent Prometheus from scraping data
        ports:
          - '9090:9090'
      grafana:
        image: grafana/grafana:5.2.4
        container_name: 'grafana'
        ports:
          - '3000:3000'
        volumes:
          - /data/grafana/config/grafana.ini:/etc/grafana/grafana.ini   # Grafana alert e-mail settings
          - /data/grafana/provisioning/:/etc/grafana/provisioning/      # provisions the Prometheus data source for Grafana
          - /etc/localtime:/etc/localtime:ro
        env_file:
          - /data/grafana/config.monitoring   # Grafana login settings
        depends_on:
          - prometheus   # Grafana must start after Prometheus

prometheus/prometheus.yml

global: #全局配置

scrape_interval: 15s #全局定时任务抓取性能数据间隔

scrape_configs: #抓取性能数据任务配置

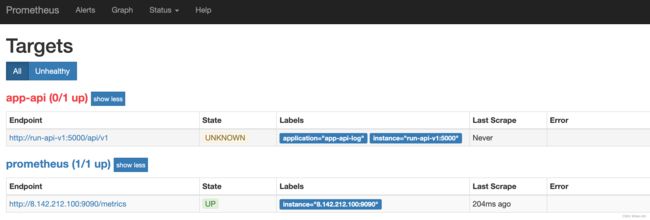

- job_name: 'app-api' #抓取订单服务性能指标数据任务,一个job下可以配置多个抓紧的targets,比如订单服务多个实例机器

scrape_interval: 10s #每10s抓取一次

metrics_path: '/api/v1' #抓取的数据url

static_configs:

- targets: ['run-api-v1:5000'] #抓取的服务器地址

labels:

application: 'app-api-log' #抓取任务标签

- job_name: 'prometheus' #抓取prometheus自身性能指标数据任务

scrape_interval: 5s

static_configs:

- targets: ['x.x.x.x:9090']

grafana/config.monitoring

    # password for the Grafana admin UI; the user name is admin
    GF_SECURITY_ADMIN_PASSWORD=admin
    # whether users may register themselves in the Grafana UI (not allowed)
    GF_USERS_ALLOW_SIGN_UP=false

grafana/config/grafana.ini

    #################################### SMTP / Emailing ##########################
    # mail server settings
    [smtp]
    enabled = true
    # outgoing mail server
    host = smtp.qq.com:465
    # SMTP account
    user = [email protected]
    # SMTP authorization code; see the video demo from the course for how to obtain it
    password = xxxxxx
    # sender address
    from_address = [email protected]
    # sender name
    from_name = MX

grafana/provisioning/datasources/datasource.yml

    # config file version
    apiVersion: 1

    deleteDatasources:   # delete any existing data source named Prometheus with orgId 1 first
      - name: Prometheus
        orgId: 1

    datasources:         # define the Prometheus data source
      - name: Prometheus
        type: prometheus
        access: proxy
        orgId: 1
        url: http://prometheus:9090   # within the same docker compose project, the prometheus service name resolves directly
        basicAuth: false
        isDefault: true
        version: 1
        editable: true

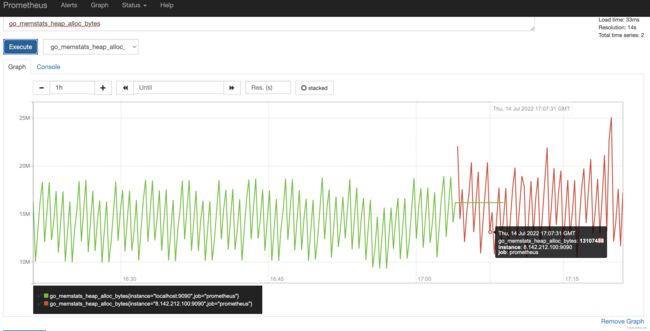

Prometheus page

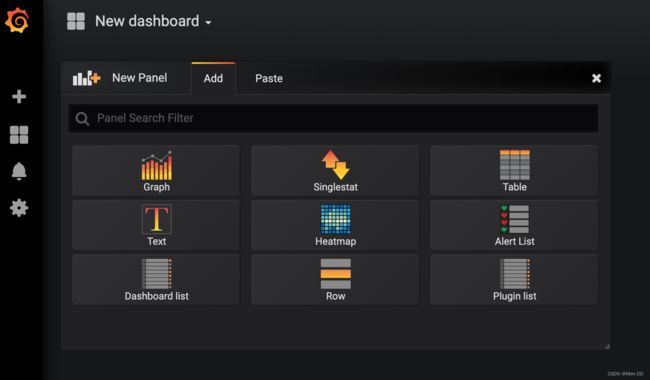

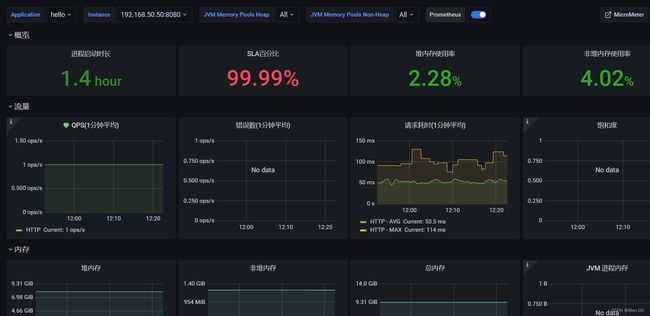

Grafana page

Importing dashboard templates:

https://grafana.com/grafana/dashboards

https://github.com/percona/grafana-dashboards.git

https://grafana.com/grafana/dashboards/16144/revisions
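As an alternative to importing templates by hand, Grafana can also load dashboard JSON files from disk through a provider file placed under provisioning/dashboards/. The following is only a sketch: the provider name and the dashboard path are assumptions, and the path would need its own volume mount, which the docker-compose.yml in this article does not define.

```yaml
# grafana/provisioning/dashboards/dashboards.yml (hypothetical file name)
apiVersion: 1

providers:
  - name: 'default'          # assumed provider name
    orgId: 1
    folder: ''               # empty string places dashboards in the General folder
    type: file
    disableDeletion: false
    options:
      path: /var/lib/grafana/dashboards   # assumed directory holding downloaded dashboard JSON files
```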

Monitoring

Monitoring Spring Boot metrics

pom.xml

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-actuator</artifactId>
    </dependency>
    <dependency>
        <groupId>io.micrometer</groupId>
        <artifactId>micrometer-registry-prometheus</artifactId>
    </dependency>
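The two dependencies alone do not make the metrics reachable over HTTP. Assuming Spring Boot 2.x or later, the Prometheus endpoint also has to be exposed, which might look like this in application.yml:

```yaml
# src/main/resources/application.yml (assumes Spring Boot 2.x or later)
management:
  endpoints:
    web:
      exposure:
        include: health,prometheus   # serves metrics at /actuator/prometheus
```

A matching scrape job could then be appended under `scrape_configs` in prometheus/prometheus.yml. The job name, host placeholder, and port 8080 are assumptions for illustration:

```yaml
  - job_name: 'spring-boot'               # hypothetical job name
    scrape_interval: 10s
    metrics_path: '/actuator/prometheus'  # default Actuator path for the Prometheus endpoint
    static_configs:
      - targets: ['<app-host>:8080']      # placeholder; replace with the application's address
```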

Monitoring Redis metrics

    docker pull oliver006/redis_exporter
    docker run -d -p 9121:9121 oliver006/redis_exporter --redis.addr redis://<redis-host-ip>:6379

Append a redis job to prometheus/prometheus.yml:

      - job_name: 'redis'
        scrape_interval: 5s
        static_configs:
          - targets: ['192.168.50.60:9121']
            labels:
              instance: redis

Monitoring MySQL metrics

    docker pull prom/mysqld-exporter
    docker run -d -p 9104:9104 -e DATA_SOURCE_NAME="root:password@(<mysql-server-ip>:3306)/databaseName" prom/mysqld-exporter

Append a mysql job to prometheus/prometheus.yml:

      - job_name: 'mysql'
        scrape_interval: 5s
        static_configs:
          - targets: ['<ip-address-1>:9104']
            labels:
              instance: mysql

Note that mysqld-exporter releases from 0.15.0 onward no longer read DATA_SOURCE_NAME; they take the connection settings from a my.cnf-style file or command-line flags instead, so the command above applies to earlier image versions.

Monitoring Linux metrics

    docker pull prom/node-exporter
    docker run -d -p 9100:9100 prom/node-exporter

Append a linux job to prometheus/prometheus.yml:

      - job_name: linux
        scrape_interval: 10s
        static_configs:
          - targets: ['<ip-address-1>:9100']
            labels:
              instance: linux-1
          - targets: ['<ip-address-2>:9100']
            labels:
              instance: linux-2
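
Instead of starting each exporter with its own docker run command, the exporters could also be declared next to Prometheus in docker-compose.yml. This is a sketch rather than the setup used above: the service names are assumptions, the Redis address is a placeholder, and only the Redis and node exporters are shown.

```yaml
# additions under the existing `services:` key in docker-compose.yml
services:
  redis-exporter:                       # hypothetical service name
    image: oliver006/redis_exporter
    command: ['--redis.addr', 'redis://<redis-host-ip>:6379']   # placeholder Redis address
    ports:
      - '9121:9121'
  node-exporter:                        # hypothetical service name
    image: prom/node-exporter
    ports:
      - '9100:9100'
```

With this layout, the scrape targets can use the service names, for example `redis-exporter:9121` and `node-exporter:9100`, in the same way the Grafana data source reaches Prometheus at `http://prometheus:9090`.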