深度学习学习笔记——RNN(LSTM、GRU、双向RNN)

目录

- 前置知识

- 循环神经网络(RNN)

-

- 文本向量化

- RNN 建模

- RNN 模型改进

-

- LSTM(Long Short Term Memory)

- LSTM变形与数学表达式

- 门控循环单元GRU(Grated Recurrent Unit)

- 双向RNN模型

前置知识

- 深度学习是什么

- 深度学习是机器学习的一个分支

- 由全连接网络、卷积神经网络和循环神经网络构成的结构

- 多层全连接网络:多层感知器

- 多层卷积神经网络

- 卷积神经网络基本结构

- 数据:

- 2D输入数据形式:[批尺寸(batchsize),高度(H),宽度(W),通道数(特征数)(channel)],[B, H, W, C]

- 2D卷积核心格式:[(卷积核心大小1,卷积核心大小2),输入通道数(特征),输出通道数(特征)]

- 1D输入数据形式:[B, T, C]

- 1D卷积核心格式:[K, C, C2]

- 2D数据:图像、雷达等

- 1D数据:有顺序的文本、信号

- 有些人感觉鸢尾花数据是1D数据,其仅是一个向量点

- 卷积:提取特征,也就是滤波

- 池化:降采样(stride ≠ \neq = 1)

- LeNet(手写数字识别):卷积+全连接

- 卷积神经网络中最重要的概念是什么

- 感受野(由卷积的kernel size决定)

- 如何增加感受野

- 增加层数

- 降采样

- 数据:

- 深度学习中重要的观念:

- 向量:深度学习中所有的属性、物体、特征均是向量(大部分网络均可由Numpy实现)

循环神经网络(RNN)

RNN核心思想:可以考虑输入数据前后文相关特征

文本向量化

- 常规文本向量化(不考虑顺序)

- 将整片文章转换为一个向量

- 基于词频统计的

- 词袋子(Bag of words)模型

- 此时丢失了顺序的信息

- eg:今 天 天 气 不 好

- 看到“好”字我们应该认为是一个正面的情绪

- 但是“好”前有个“不”

- 有些人提取前后文特征组成“词”->N-gtam-range

- 单个字或词带有信息少,需要结合前后文

- 对顺序文本进行向量化

- 句子向量化:今 天 天 气 不 好 。

- 需要将字转换为ID:建立字典,为每个字符赋予一个整形数字

- 句子转换为:[96, 22, 22, 163, 3, 244, 1] -> [T], type:int

- 对每个整形数字进行OneHot编码:[T=字符数量,字符数量]

- 可能有多个(BatchSize,B)句子,因此

- 输入:[B, T, 字符数量]->[B, T, 字符数量]->一维连续数据

- 每个句子长度不同->补0->计算效率

- 深度神经网络仅能处理浮点型的向量,所以将每个字符均转换为向量

- 但是字符数量长度太长,需要降维,乘以降维矩阵W[词数量,降维长度]

- 最终:[B, T , 降维长度]

- 注意:

- W初始取随机值,随后随网络一同训练

- X:[B, T, C] W[C, C2],如何相乘,张量点乘

- 整个过程叫做Embedding

- 将文字转换为向量的过程

- 中文以“字”作为基本单位,英文可以以“字母”或“词”作为基本单位

RNN 建模

- 假设X是Embedding后的序列:[B, T, C]

- 选取某个时间X[: , 0, :], 相当于取第1个词[B, C]

- 记录 x t x_t xt-> X [ : , t , : ] X[:, t, :] X[:,t,:], 所以X[:, 0, :]是 x 0 x_0 x0

- 如果构建线性模型: y t = x t w + b y_t = x_tw+b yt=xtw+b,实际上金相当于处理单个字符

- 如果需要考虑前文内容,需要进行如下改动:

- 输入: ( x 0 , x 1 , … , x T ) ∈ X (x_0,x_1,\dotsc,x_T)\in X (x0,x1,…,xT)∈X

- 状态state: ( h 0 , h 1 , … , h T ) ∈ H (h_0,h_1,\dotsc,h_T)\in H (h0,h1,…,hT)∈H 其中 h 0 h_0 h0默认为0

- 输出: ( y 0 , y 1 , … , y T ) ∈ Y (y_0,y_1,\dotsc,y_T)\in Y (y0,y1,…,yT)∈Y

- 因此有:

y t = h t = tanh ( c o n c a t [ x t , h t − 1 ] ⋅ W + b ) y_t = h_t = \tanh(concat[x_t, h_{t-1}]\cdot W + b) yt=ht=tanh(concat[xt,ht−1]⋅W+b) - 其中 x t x_t xt的形式为[batchsize, features1], h t h_t ht的形式为[batchsize, features2]。多层rnn网络可以在输出的基础上继续加入RNN函数:

h t l = f ( x t , h t − 1 l ) h t l + 1 = f ( x t , h t − 1 l + 1 ) h^l_t = f(x_t,h^l_{t-1})\\ h^{l+1}_t = f(x_t,h^{l+1}_{t-1}) htl=f(xt,ht−1l)htl+1=f(xt,ht−1l+1)

其中 l l l表示隐藏层

Numpy实现(仅包含计算过程):

import numpy as np

# 读取字典

word_map_file = open(r'model\wordmap','r',encoding='utf-8')

word2id_dict = eval(word_map_file.read())

word_map_file.close()

# print(word2id_dict)

# 文本向量化

B = 2 #文本数量(批次大小)

n_words = 5388

strs1 = '今天天气不好。'

strs2 = '所以我不出门。'

strs_id1 = [word2id_dict.get(itr) for itr in strs1]

strs_id2 = [word2id_dict.get(itr) for itr in strs2]

print(f'文本1:“{strs1}”, 对应字典中的整数:{strs_id1}')

print(f'文本2:“{strs2}”, 对应字典中的整数:{strs_id2}')

# One hot编码

T = max(len(strs1), len(strs2)) #字符串长度

C = len(word2id_dict) #字典中的字符数量,总数

strs_vect = np.zeros([B, T, C])

for idx, ids in enumerate(strs_id1):

strs_vect[0, idx, ids] = 1

for idx, ids in enumerate(strs_id2):

strs_vect[1, idx, ids] = 1

# print(strs_vect)

print(f'降维前 Size:{strs_vect.shape}')

# 降维: 向量文本乘上一个矩阵[字符数量:5388,降维的维度:128(可训练)]

enbedding_size = 128

W = np.random.normal(0, 0.1, [n_words, enbedding_size])

vect2d = np.reshape(strs_vect, [B*T, C])

out = vect2d @ W

vect = np.reshape(out, [B, T, enbedding_size]) #Embedding 过程(压缩矩阵)

print(f'降维后 Size:{vect.shape}')

###############

##### RNN #####

###############

#初始化

hidden_size = 64

rnn_w = np.random.random([enbedding_size+hidden_size, hidden_size])

rnn_b = np.zeros([hidden_size])

state = np.zeros([B, hidden_size])

#正向计算传播

outputs = []

for step in range(T):

x_t = np.concatenate([vect[:, step, :], state], axis=1)

state = np.tanh(x_t @ rnn_w + rnn_b)

outputs.append(state)

last_output = outputs[-1] #包含前面全部信息

Tensorflow实现(仅框架,没有传入数据):

import tensorflow as tf

# 超参数

batch_size = 32 # B = 32

seq_len = 100 # 文本长度, T=100

embedding_size = 128 # 降维后向量长度 C = 128

hidden_size = 128 # 隐藏层向量长度

epochs = 100 # 训练次数

# 统计量

n_words = 5388 # 字符数量

n_class = 10 # 类别数量

# 原始数据

input_ID = tf.placeholder(tf.int32, [batch_size, seq_len])

label_ID = tf.placeholder(tf.int32, [batch_size])

# 降维数据

embedding_w = tf.get_variable('embedding_w', [n_words, embedding_size])

# 传入模型数据

inputs = tf.nn.embedding_lookup(embedding_w, input_ID)

# 构建多层神经网络单元

rnn_fn = tf.nn.rnn_cell.BasicRNNCell

rnn_cell = tf.nn.rnn_cell.MultiRNNCell([

rnn_fn(hidden_size),

rnn_fn(hidden_size)

])

# 将数据输入循环神经网络

outputs, last_state = tf.nn.dynamic_rnn(rnn_cell, inputs, dtype = tf.float32)

last_out = outputs[:, 0, :]

logits = tf.layers.dense(last_out, n_class, activation= None)

label_onehot = tf.one_hot(label_ID, n_class)

loss = tf.losses.softmax_cross_entropy(label_onehot, logits)

train_step = tf.train.AdamOptimizer().minimize(loss)

sess = tf.Session()

sess.run(tf.global_variables_initializer())

for step in range(epochs):

sess.run(train_step, feed_dict={label_ID:..., input_ID:...})

RNN 模型改进

- RNN时间步较多时,梯度容易过大

- RNN使用的激活函数是tanh

- BasicRNN,容易出现“遗忘”

- 改进的LSTM结构,长短时间记忆单元

- 两个向量用于保留记忆,h, c

- 门控结构是一个加权的机制,在很多网络中都有体现

LSTM(Long Short Term Memory)

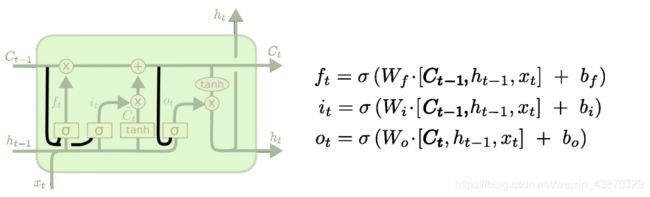

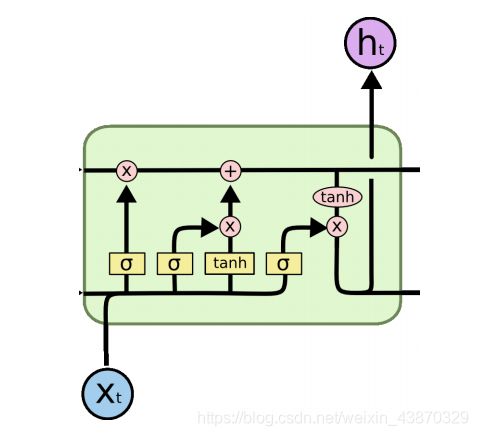

其中: σ \sigma σ为sigmod函数, tanh \tanh tanh为双曲正切函数, 图中左上角省略了输入记忆单元 C t − 1 C_{t-1} Ct−1,左上角省略了输出记忆单元 C t C_t Ct,左下角省略了输入状态单元 h t − 1 h_{t-1} ht−1

公式如下:

h t = tanh { σ ( c o n c a t [ x t , h t − 1 ] ) × C t − 1 + σ ( c o n c a t [ x t , h t − 1 ] ) × tanh ( c o n c a t [ x t , h t − 1 ] ) } × σ ( c o n c a t [ x t , h t − 1 ] ) c t = σ ( c o n c a t [ x t , h t − 1 ] ) × C t − 1 + σ ( c o n c a t [ x t , h t − 1 ] ) × tanh ( c o n c a t [ x t , h t − 1 ] ) h_t = \tanh \left \{ \sigma(concat[x_t, h_{t-1}]) \times C_{t-1} + \sigma(concat[x_t, h_{t-1}]) \times \tanh(concat[x_t, h_{t-1}]) \right \} \times \sigma(concat[x_t, h_{t-1}])\\ c_t = \sigma(concat[x_t, h_{t-1}]) \times C_{t-1} + \sigma(concat[x_t, h_{t-1}]) \times \tanh(concat[x_t, h_{t-1}]) ht=tanh{σ(concat[xt,ht−1])×Ct−1+σ(concat[xt,ht−1])×tanh(concat[xt,ht−1])}×σ(concat[xt,ht−1])ct=σ(concat[xt,ht−1])×Ct−1+σ(concat[xt,ht−1])×tanh(concat[xt,ht−1])

其中:

- 因为 σ 函 数 ∈ [ 0 , 1 ] \sigma函数\in[0, 1] σ函数∈[0,1],所以该部分的输出皆作为控制门信号

- σ ( c o n c a t [ x t , h t − 1 ] ) \sigma(concat[x_t, h_{t-1}]) σ(concat[xt,ht−1])为遗忘门信号(图中最左侧的 σ \sigma σ),判断当前信息以及前文信息是否重要

- 图中中间的 σ \sigma σ为输入门( σ ( c o n c a t [ x t , h t − 1 ] ) \sigma(concat[x_t, h_{t-1}]) σ(concat[xt,ht−1])),判断短时记忆是否重要,将短时记忆加入长时记忆中

- 图中中间的 tanh \tanh tanh为Cell输入信号( tanh ( c o n c a t [ x t , h t − 1 ] ) \tanh(concat[x_t, h_{t-1}]) tanh(concat[xt,ht−1])),其中包含短时记忆以及当前信息

- 图中右侧的 σ \sigma σ输出门( σ ( c o n c a t [ x t , h t − 1 ] ) \sigma(concat[x_t, h_{t-1}]) σ(concat[xt,ht−1])),判断当前长时记忆与短时记忆是否重要,是否输出

- c t − 1 c_{t-1} ct−1为长时间记忆

- h t − 1 h_{t-1} ht−1为短时间记忆

LSTM变形与数学表达式

- f t f_t ft为遗忘门,判断长时记忆与短时记忆是否重要,是否需要遗忘

- i t i_t it为输入门,判断短时记忆是否重要,将短时记忆加入长时记忆中

- o t o_t ot为输出门,判断当前长时记忆与短时记忆是否重要,是否输出

}$为长时间记忆 - h t − 1 h_{t-1} ht−1为短时间记忆

门控循环单元GRU(Grated Recurrent Unit)

GRU为简化版本的LSTM,理论上速度会有显著提升,具体算法:GRU

双向RNN模型

- 同时考虑“过去”和“未来”的信息

- 构建两个RNN/LSTM传播的单元(一个正向,一个反向)

模型构建代码:

import tensorflow as tf

# 超参数

batch_size = 16

seq_len = 100 # 100个字

emb_size = 128 # 字符向量长度

n_layer = 2

n_hidden = 128

# 统计量

n_words = 5388 # 多少字符

n_class = 10 # 多少类

# 定义输入:长度为100的字符ID序列,类型INT

# 定义标签:每个时间步均需要做分类

inputs = tf.placeholder(tf.int32, [batch_size, seq_len])

labels = tf.placeholder(tf.int32, [batch_size, seq_len])

mask = tf.placeholder(tf.float32, [batch_size, seq_len])

# Embedding

emb_w = tf.get_variable("emb_w", [n_words, emb_size]) #是可训练的

inputs_emb = tf.nn.embedding_lookup(emb_w, inputs)

# inputs_emb是神经网络输入[batch_size(B), seq_len(T), emb_size(C)]

# 定义多层神经网络

# cell_fn = tf.nn.rnn_cell.BasicRNNCell # 基本RNN

cell_fn = tf.nn.rnn_cell.LSTMCell # LSTM

# 向前传播的单元

cell_fw = tf.nn.rnn_cell.MultiRNNCell(

[cell_fn(n_hidden) for itr in range(n_layer)])

# 反向传播的单元

cell_bw = tf.nn.rnn_cell.MultiRNNCell(

[cell_fn(n_hidden) for itr in range(n_layer)])

# 将Embedding后的向量输入循环神经网络中

outputs, last_state = tf.nn.dynamic_rnn(cell_fw, inputs_emb, dtype=tf.float32)

intputs_bw = tf.reverse(inputs_emb, 1)

outputs_bw, last_state = tf.nn.dynamic_rnn(cell_bw, intputs_bw, dtype=tf.float32)

outputs_bw = tf.reverse(outputs_bw, 1)

# # 或者使用TF中tf.nn.bidirectional_dynamic_rnn()进行搭建,不许手动将输入/输出反向

# # 双向RNN,seqlen用于不同长度序列解码

# (fw_output, bw_output), state = tf.nn.bidirectional_dynamic_rnn(

# cell_fw_cell,

# cell_bw_cell,

# emb_input,

# seqlen,

# dtype=tf.float32

# )

# outputs = tf.concat([outputs, outputs_bw], 2)

# outputs相当于Y[batch_size, seq_len, n_hidden]

# logits,每个时间步需要预测类别

logits = tf.layers.dense(outputs, 4)

# 优化过程

loss = tf.contrib.seq2seq.sequence_loss(

logits, # 网络的输出

labels, # 标签

mask # 掩码 用于选取非补0区域数据,具体操作为对补0区域乘上0权重

)

step = tf.train.AdamOptimizer().minimize(loss)