The complete PyTorch model training process

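#model.py

import torch
from torch import nn

# Build the neural network
class Model_test(nn.Module):
    def __init__(self):
        super(Model_test, self).__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, 5, 1, 2),   # 3x32x32 -> 32x32x32
            nn.MaxPool2d(2),             # -> 32x16x16
            nn.Conv2d(32, 32, 5, 1, 2),  # -> 32x16x16
            nn.MaxPool2d(2),             # -> 32x8x8
            nn.Conv2d(32, 64, 5, 1, 2),  # -> 64x8x8
            nn.MaxPool2d(2),             # -> 64x4x4
            nn.Flatten(),                # -> 1024
            nn.Linear(64 * 4 * 4, 64),
            nn.Linear(64, 10),           # 10 CIFAR-10 classes
        )

    def forward(self, x):
        x = self.model(x)
        return x

# Check that the network is correct
if __name__ == "__main__":
    model_test = Model_test()
    input = torch.ones((64, 3, 32, 32))  # a dummy batch of 64 RGB 32x32 images
    output = model_test(input)
    print(output.shape)  # torch.Size([64, 10])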
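The `64*4*4` input size of the first `nn.Linear` comes from tracking the spatial size of the image through the layers. The sketch below reproduces that arithmetic, assuming the standard output-size formula for `Conv2d`/`MaxPool2d` with dilation 1, `floor((size + 2*padding - kernel) / stride) + 1`; `out_size` is a hypothetical helper written for illustration, not a PyTorch function.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output height/width of a Conv2d or MaxPool2d layer (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


size = 32  # CIFAR-10 images are 32x32
for _ in range(3):
    # Conv2d(in, out, 5, 1, 2): kernel 5, stride 1, padding 2 keeps the size
    size = out_size(size, kernel=5, stride=1, padding=2)
    # MaxPool2d(2): stride defaults to the kernel size, so the size is halved
    size = out_size(size, kernel=2, stride=2)

flat_features = 64 * size * size  # 64 channels after the last Conv2d
print(size, flat_features)  # 4 1024
```

If you change the input resolution or the padding, rerunning this arithmetic tells you the new `in_features` for the first `nn.Linear`.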

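#train.py

import time  # timing

import torch
import torchvision
from torch import nn
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

from model import *  # model.py and train.py must be in the same folder

# Define the device used for training (keep only one of these)
# device = torch.device("cpu")     # CPU
# device = torch.device("cuda")    # the current default GPU
# device = torch.device("cuda:0")  # GPU 0, chosen explicitly
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")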

# Prepare the datasets
train_data = torchvision.datasets.CIFAR10("./data", train=True, transform=torchvision.transforms.ToTensor(), download=True)
test_data = torchvision.datasets.CIFAR10("./data", train=False, transform=torchvision.transforms.ToTensor(), download=True)

# Dataset lengths
train_data_size = len(train_data)
test_data_size = len(test_data)
# If train_data_size == 10, this prints "Training set size: 10"
print("Training set size: {}".format(train_data_size))
print("Test set size: {}".format(test_data_size))

# Load the datasets with DataLoader
train_dataloader = DataLoader(train_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)

# Create the network model
model_test = Model_test()
# if torch.cuda.is_available():
#     model_test = model_test.cuda()  # GPU method 1, step 1: the network model
model_test.to(device)                 # GPU method 2

# Loss function
loss_fn = nn.CrossEntropyLoss()
# if torch.cuda.is_available():
#     loss_fn = loss_fn.cuda()        # GPU method 1, step 2: the loss function
loss_fn = loss_fn.to(device)          # GPU method 2

# Optimizer
# 1e-2 = 1 x 10^(-2) = 0.01
learning_rate = 1e-2
optimizer = torch.optim.SGD(model_test.parameters(), lr=learning_rate)

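# Some bookkeeping variables for training
# Number of training steps so far
total_train_step = 0
# Number of test rounds so far
total_test_step = 0
# Number of training epochs
epoch = 10

# Add TensorBoard logging
writer = SummaryWriter("logs_train")

start_time = time.time()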

for i in range(epoch):
    print("------- Epoch {} training starts -------".format(i + 1))

    # Training
    model_test.train()  # only affects certain layers (e.g. Dropout, BatchNorm)
    for data in train_dataloader:
        imgs, targets = data
        # if torch.cuda.is_available():
        #     imgs = imgs.cuda()        # GPU method 1, step 3.1: training data
        #     targets = targets.cuda()  # GPU method 1, step 3.1: training data
        imgs = imgs.to(device)          # GPU method 2
        targets = targets.to(device)    # GPU method 2
        outputs = model_test(imgs)
        loss = loss_fn(outputs, targets)

        # Let the optimizer update the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step += 1
        if total_train_step % 100 == 0:
            end_time = time.time()
            print("Elapsed time: {:.2f}s".format(end_time - start_time))
            print("Training step: {}, loss: {}".format(total_train_step, loss.item()))
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Testing
    model_test.eval()  # only affects certain layers (e.g. Dropout, BatchNorm)
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            # if torch.cuda.is_available():
            #     imgs = imgs.cuda()        # GPU method 1, step 3.2: test data
            #     targets = targets.cuda()  # GPU method 1, step 3.2: test data
            imgs = imgs.to(device)          # GPU method 2
            targets = targets.to(device)    # GPU method 2
            outputs = model_test(imgs)
            loss = loss_fn(outputs, targets)
            total_test_loss += loss.item()
            # Number of correct predictions in this batch
            accuracy = (outputs.argmax(1) == targets).sum().item()
            total_accuracy += accuracy

    print("Total loss on the test set: {}".format(total_test_loss))
    print("Accuracy on the test set: {}".format(total_accuracy / test_data_size))
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    total_test_step += 1

    torch.save(model_test, "model_test_{}.pth".format(i))
    # torch.save(model_test.state_dict(), "model_test_{}.pth".format(i))  # alternative: save only the parameters
    print("Model saved")

writer.close()
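`torch.save(model_test, ...)` pickles the whole module, so loading the file later requires the `Model_test` class to be importable; recent PyTorch versions, where `torch.load` defaults to `weights_only=True`, may also refuse such files unless `weights_only=False` is passed. The commented-out alternative saves only the `state_dict`. Below is a minimal round-trip sketch of that approach, assuming a small stand-in model and an in-memory `io.BytesIO` buffer in place of a real `.pth` file.

```python
import io

import torch
from torch import nn

# Stand-in for Model_test (assumption, to keep the example small)
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10))

buffer = io.BytesIO()  # stands in for a file such as "model_test_0.pth"
torch.save(model.state_dict(), buffer)  # save only the parameters
buffer.seek(0)

# Rebuild the same architecture, then load the saved parameters into it
restored = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10))
restored.load_state_dict(torch.load(buffer))
restored.eval()

x = torch.ones(2, 3, 32, 32)
with torch.no_grad():
    same = torch.equal(model(x), restored(x))
print(same)  # True: both models hold identical weights
```

With a real file, the same pattern is `torch.save(model_test.state_dict(), "model_test_0.pth")` during training and `model.load_state_dict(torch.load("model_test_0.pth"))` on a freshly constructed `Model_test()` when loading.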

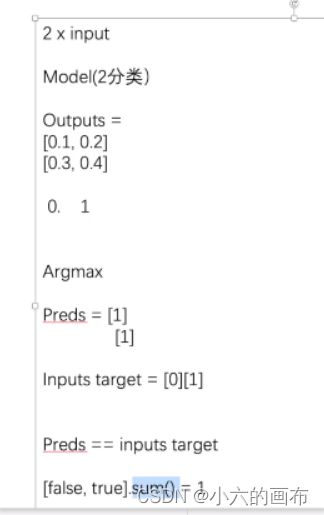
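The comments on `model_test.train()` and `model_test.eval()` note that these calls only affect certain layers. Dropout is one of them (BatchNorm is the other common case); `Model_test` contains neither, so in this script the calls change nothing, but they are a good habit. A short sketch, using a standalone `nn.Dropout` with `p=0.5` chosen only for illustration:

```python
import torch
from torch import nn

torch.manual_seed(0)  # make the random dropout mask reproducible
layer = nn.Dropout(p=0.5)
x = torch.ones(1, 8)

layer.train()  # training mode: zeroes elements at random, scales survivors by 1/(1-p)
train_out = layer(x)

layer.eval()   # evaluation mode: Dropout passes the input through unchanged
eval_out = layer(x)

print(train_out)  # each element is either 0.0 or 2.0
print(eval_out)   # all ones
```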

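#test2.py

import torch

outputs = torch.tensor([[0.3, 0.4], [0.1, 0.2]])
# dim 0 compares vertically (down each column), dim 1 compares horizontally (across each row)
print(outputs.argmax(1))  # tensor([1, 1])
preds = outputs.argmax(1)
targets = torch.tensor([0, 1])
print((preds == targets).sum())  # tensor(1): one of the two predictions is correct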
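To make the dimension comment concrete, the sketch below compares `argmax(0)` with `argmax(1)` on the same tensor and turns the count of correct predictions into an accuracy ratio, which is what `total_accuracy / test_data_size` does in train.py. Treating each row as one sample and each column as one class is the assumption, matching the `(batch, classes)` shape of the model's output.

```python
import torch

outputs = torch.tensor([[0.3, 0.4], [0.1, 0.2]])  # 2 samples x 2 classes
targets = torch.tensor([0, 1])

col_max = outputs.argmax(0)  # for each column, the row index holding the max
row_max = outputs.argmax(1)  # for each row (sample), the predicted class

correct = (row_max == targets).sum().item()  # number of correct predictions
accuracy = correct / len(targets)
print(col_max, row_max, accuracy)  # tensor([0, 0]) tensor([1, 1]) 0.5
```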

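To train a model on the GPU, three things must be moved there: the network model, the data (inputs and labels), and the loss function.

Call .cuda() on each of them.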

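#train_gpu.py

Method 1: .cuda()

Method 2: .to(device)

device = torch.device(...), where the argument selects the device, for example:

torch.device("cuda:0")  (the first GPU)

torch.device("cuda:1")  (the second GPU)

Method 3 (falls back to the CPU when no GPU is available):

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")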
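Putting the three pieces together, here is a minimal sketch of method three, assuming a tiny `nn.Linear(4, 2)` model and random tensors as stand-ins for `Model_test` and a CIFAR-10 batch; it runs on either a CPU or a GPU. Note that `nn.Module.to()` moves the module in place (which is why `model_test.to(device)` works without reassignment), whereas `Tensor.to()` returns a new tensor, so `imgs = imgs.to(device)` must reassign.

```python
import torch
from torch import nn

# Method 3: use the GPU if one is available, otherwise the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = nn.Linear(4, 2).to(device)          # 1. the network model
loss_fn = nn.CrossEntropyLoss().to(device)  # 2. the loss function

# 3. the data: inputs and labels (random stand-ins for one batch)
inputs = torch.randn(8, 4).to(device)
labels = torch.randint(0, 2, (8,)).to(device)

loss = loss_fn(model(inputs), labels)
print(device, loss.device)
```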