The Docker "Three Musketeers": Docker Machine, Docker Compose, and Docker Swarm

Docker's Three Musketeers

- I. Docker Machine
  - 1.Docker Machine overview
  - 2.Docker Machine in practice
- II. docker-compose
  - 1.docker-compose overview
  - 2.docker-compose in practice
- III. Docker clusters
  - 1.Swarm overview
  - 2.Docker Swarm in practice

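Docker's three major orchestration tools:

Docker Compose: a tool for assembling multi-container applications. It can also deploy distributed applications to a Swarm cluster.

Docker Machine: a tool for installing Docker on many platforms. With Docker Machine, you can easily install Docker on laptops, cloud platforms, and data-center machines.

Docker Swarm: Docker's native container cluster management tool.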

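I. Docker Machine

1.Docker Machine overview

Docker Machine is an official Docker tool. It can install Docker on remote machines, or create virtual machines on a virtualization host and install Docker inside them. The docker-machine command can then manage these machines and their Docker engines. The following diagram from the official Docker Machine documentation illustrates this well.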

2.Docker Machine in practice

Note in particular: if you want to avoid trouble, turn off the firewall on the virtual machines.

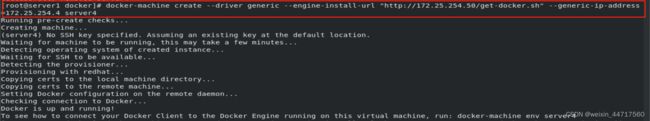

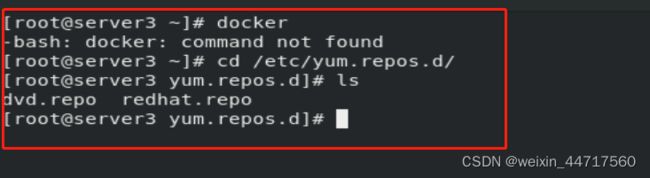

(1) Create a clean virtual machine, server3. docker-machine will deploy Docker on it.

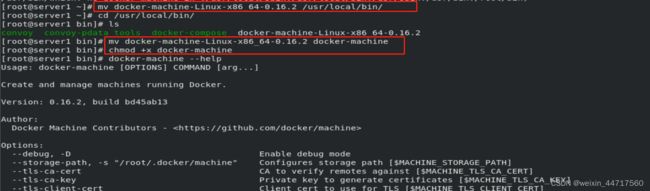

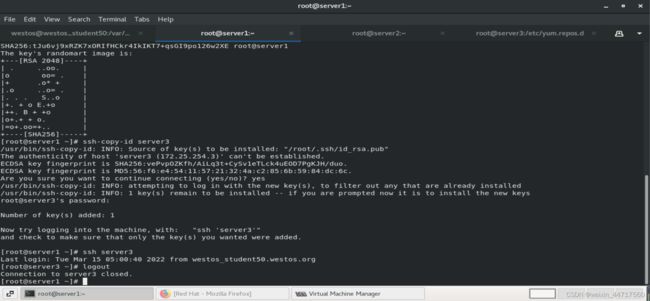

(2) Install docker-machine on server1 and set up passwordless SSH authentication from server1 to server3.

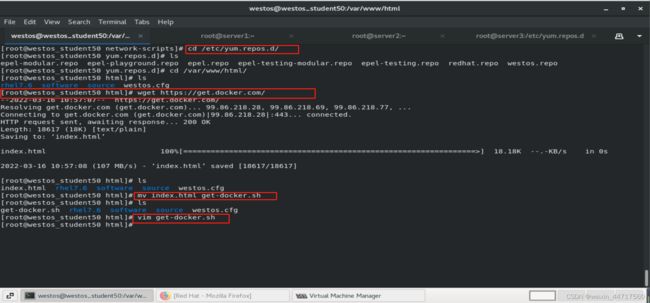

(3) Write the automatic install script in the Apache document root on the host machine.

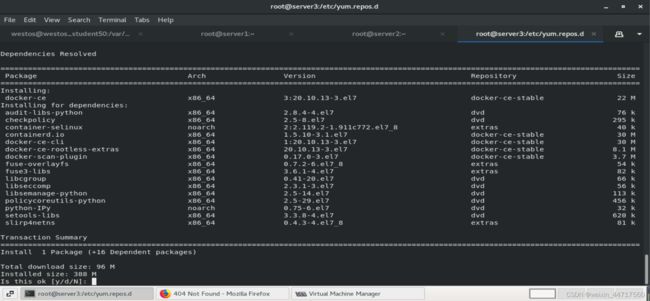

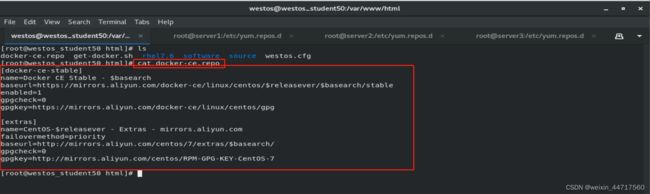

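(4) Configure a Docker package repository for server3.

It is best to set up your own Docker repository on the host, because downloading from the official address is very slow. Aliyun also hosts a mirror, and installing from Aliyun works too. In a production environment, however, it is best not to rely on the public Internet.

To set up an internal Docker repository, download the required packages (shown in the figure below) in advance and put them in the Apache document root, where they can be used during configuration.

The host's Apache then publishes this directory as the Docker package repository used to deploy server3.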

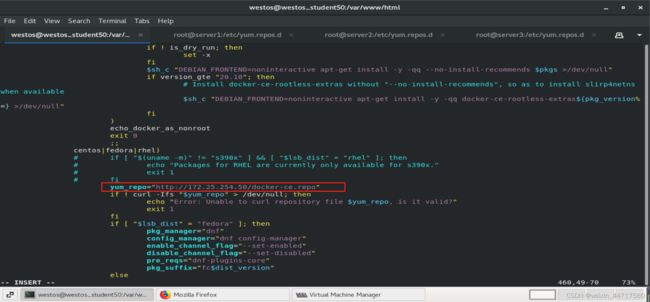

(5) Configure the yum repository in the automatic install script.
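Steps (3) to (5) are shown only as screenshots. The shell function below is a rough sketch of such a script, not the author's actual one. It writes a get-docker.sh into the Apache document root. That script points yum at the repository served by the host's Apache and installs docker-ce from it. Several details are assumptions:

- The function name `write_get_docker_sh` is hypothetical.
- The default document root `/var/www/html` is assumed.
- The repository URL `http://172.25.254.50/docker-ce` is assumed.
- The directory is assumed to already contain docker-ce, its dependencies, and yum metadata (for example, generated with createrepo).

```shell
#!/usr/bin/env bash
# Hypothetical helper: generate the install script that docker-machine fetches
# through --engine-install-url and runs on the target host.
# Usage: write_get_docker_sh [webroot] [repo_url]
write_get_docker_sh() {
  local webroot=${1:-/var/www/html}                   # assumed Apache document root
  local repo_url=${2:-http://172.25.254.50/docker-ce} # assumed repository path on the host
  mkdir -p "$webroot"
  # Unquoted EOF: ${repo_url} is expanded now. The inner REPO here-document is
  # copied verbatim and only executes later, on the target host.
  cat > "$webroot/get-docker.sh" <<EOF
#!/bin/sh
set -e
# Use the internal repository instead of the public Internet.
cat > /etc/yum.repos.d/docker.repo <<REPO
[docker]
name=docker-ce (internal mirror)
baseurl=${repo_url}
gpgcheck=0
REPO
yum install -y docker-ce
systemctl enable --now docker
EOF
  chmod 644 "$webroot/get-docker.sh"
}
```

For example, `write_get_docker_sh /var/www/html http://172.25.254.50/docker-ce` produces the file behind the `http://172.25.254.50/get-docker.sh` URL used in step (6). `gpgcheck=0` is a lab shortcut. With a trusted key available, enabling GPG checks is safer.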

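(6) Use docker-machine on server1 to deploy Docker on server3.

    [root@server1 ~]# docker-machine create --driver generic --engine-install-url "http://172.25.254.50/get-docker.sh" --generic-ip-address=172.25.254.3 server4

The options in this command are:

- `--engine-install-url` gives the URL of the install script.
- `--generic-ip-address` gives the IP address of the target host.
- The last argument, server4, is the name docker-machine gives the new machine.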

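II. docker-compose

1.docker-compose overview

GitHub: https://github.com/docker/compose

Put simply, Compose is a tool for defining and running applications made of one or more containers. It simplifies building container images and running containers.

Compose v1 (the 1.x releases installed below) is written in Python; Compose v2 was rewritten in Go. Compose is well suited to deploying one or more containers on a single host and automatically linking them together.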

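Compose has two important concepts:

Service: the containers of one application. A service can consist of several container instances running the same image.

Project: a complete business unit made up of a group of related application containers, defined in the docker-compose.yml file.

You can easily define a multi-container application in a single configuration file. A single command then installs all of the application's dependencies and completes the build.

This solves the problem of managing and orchestrating containers that depend on one another.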

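2.docker-compose in practice

(1) Downloading and installing docker-compose

Method 1 (officially recommended, but slow):

    # curl -L https://github.com/docker/compose/releases/download/1.21.2/docker-compose-`uname -s`-`uname -m` > /usr/local/bin/docker-compose
    # chmod +x /usr/local/bin/docker-compose

Method 2 (recommended, faster): download the binary from the Aliyun mirror:

https://mirrors.aliyun.com/docker-toolbox/linux/compose/1.21.2/

Save it as /usr/local/bin/docker-compose and make it executable:

    # chmod +x /usr/local/bin/docker-compose

Note that some of the output later in this section comes from Compose v2, for example container names such as compose-proxy-1 and the "[+] Running 3/3" progress display. Compose 1.21.2 supports compose file formats only up to version 3.6. With that release, the `version: "3.9"` line in the file below would need to be lowered, or a newer Compose release used.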

(2) Review the official examples.

(3) Use nginx load balancing as an example:

    [root@server1 ~]# mkdir compose
    [root@server1 ~]# cd compose/
    [root@server1 compose]#
    [root@server1 compose]# ls
    [root@server1 compose]# cd
    [root@server1 ~]# ls
    auth certs convoy docker harbor-offline-installer-v2.3.4.tgz openssl11-libs-1.1.1k-2.el7.x86_64.rpm
    base-debian10.tar compose convoy-v0.5.2.tar.gz harbor openssl11-1.1.1k-2.el7.x86_64.rpm
    [root@server1 ~]# cd harbor/
    [root@server1 harbor]# ls
    common common.sh docker-compose.yml harbor.v2.3.4.tar.gz harbor.yml harbor.yml.tmpl install.sh LICENSE prepare
    [root@server1 harbor]# vim docker-compose.yml
    [root@server1 harbor]# cd
    [root@server1 ~]# cd compose/
    [root@server1 compose]# ls
    [root@server1 compose]# vim docker-compose.yml
    [root@server1 compose]# vim docker-compose.yml
    [root@server1 compose]# mkdir proxy
    [root@server1 compose]# cd proxy/
    [root@server1 proxy]# vim nginx.conf
    [root@server1 proxy]# \vi nginx.conf
    [root@server1 proxy]# vim nginx.conf
    [root@server1 proxy]# cd ..
    [root@server1 compose]# vim
    docker-compose.yml proxy/
    [root@server1 compose]# vim docker-compose.yml
    [root@server1 compose]# mkdir web1
    [root@server1 compose]# mkdir web2
    [root@server1 compose]# cd web1
    [root@server1 web1]# ls
    [root@server1 web1]# echo web1 > index.html
    [root@server1 web1]# cd .
    [root@server1 web1]# cd ..
    [root@server1 compose]# cd web2
    [root@server1 web2]# echo web2 > index.html
    [root@server1 web2]# cd ..
    [root@server1 compose]# ls
    docker-compose.yml proxy web1 web2
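The directory layout built by hand above can be recreated in one step with a helper like the following. The function name `make_compose_layout` is hypothetical. The directory name matters: Compose uses the project directory's name as the default project name. That is why the containers later appear as compose-proxy-1, compose-web1-1, and so on.

```shell
#!/usr/bin/env bash
# Hypothetical helper: recreate the project layout used in this example.
#   proxy/          -> will hold nginx.conf for the load balancer
#   web1/, web2/    -> each holds an index.html that identifies the backend
# Usage: make_compose_layout [project-dir]
make_compose_layout() {
  local dir=${1:-compose}
  mkdir -p "$dir/proxy" "$dir/web1" "$dir/web2"
  echo web1 > "$dir/web1/index.html"
  echo web2 > "$dir/web2/index.html"
  # docker-compose.yml and proxy/nginx.conf still have to be written by hand.
  ls "$dir"
}
```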

    [root@server1 compose]# vim docker-compose.yml

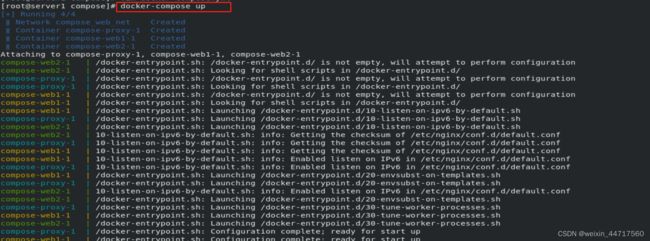

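    [root@server1 compose]# docker-compose up
    services.proxy Additional property volume is not allowed
    [root@server1 compose]# vim docker-compose.yml
    [root@server1 compose]# ls
    docker-compose.yml proxy web1 web2
    [root@server1 compose]# vim docker-compose.yml
    [root@server1 compose]# docker-compose up
    services.web2 Additional property volume is not allowed
    [root@server1 compose]# vim docker-compose.yml
    [root@server1 compose]# docker-compose up
    networks must be a mapping
    [root@server1 compose]# vim docker-compose.yml
    [root@server1 compose]# docker-compose up

The first two errors come from writing the key as `volume`; the correct key is `volumes`. The third error most likely refers to the top-level `networks` key. That key must be a mapping (`web_net:`), not a list item (`- web_net`). The list form is only allowed for the `networks` entry inside a service.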
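Errors like these can be caught before anything starts by validating the file first. `docker-compose config -q` parses and validates the compose file, printing nothing on success, and exits non-zero on problems such as the ones above. The wrapper below is a sketch; the function name `compose_up_checked` and the `COMPOSE` override variable are hypothetical. The override lets the same function call either `docker-compose` or the v2 plugin form `docker compose`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: validate docker-compose.yml, then start the project detached.
# Usage: compose_up_checked [project-dir]    (set COMPOSE="docker compose" for the v2 plugin)
compose_up_checked() {
  local dir=${1:-.}
  local compose=${COMPOSE:-docker-compose}
  (
    cd "$dir" || exit 1
    # $compose is deliberately unquoted so that "docker compose" splits into two words.
    if ! $compose config -q; then
      echo "docker-compose.yml in $dir is invalid; not starting" >&2
      exit 1
    fi
    $compose up -d
  )
}
```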

The final docker-compose.yml is:

    version: "3.9"
    services:
      proxy:
        image: nginx
        ports:
          - "80:80"
        networks:
          - web_net
        volumes:
          - "./proxy/nginx.conf:/etc/nginx/nginx.conf"
      web1:
        image: nginx
        networks:
          - web_net
        volumes:
          - "./web1:/usr/share/nginx/html"
      web2:
        image: nginx
        networks:
          - web_net
        volumes:
          - "./web2:/usr/share/nginx/html"
    networks:
      web_net:

The file proxy/nginx.conf is:

    user nginx;
    worker_processes auto;

    error_log /var/log/nginx/error.log notice;
    pid /var/run/nginx.pid;

    events {
        worker_connections 1024;
    }

    http {
        upstream westos {
            server web1:80;
            server web2:80;
        }

        include /etc/nginx/mime.types;
        default_type application/octet-stream;

        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for"';

        access_log /var/log/nginx/access.log main;

        sendfile on;
        #tcp_nopush on;

        keepalive_timeout 65;

        #gzip on;

        #include /etc/nginx/conf.d/*.conf;

        server {
            listen 80;
            server_name www.westos.org;

            location / {
                proxy_pass http://westos;
            }
        }
    }

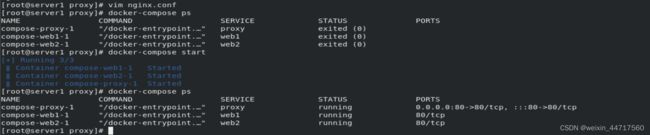

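`docker-compose up` now starts normally. After stopping it with Ctrl+C, start the containers again in the background (`docker-compose up -d` would also work):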

    [root@server1 proxy]# docker-compose ps
    NAME COMMAND SERVICE STATUS PORTS
    compose-proxy-1 "/docker-entrypoint.…" proxy exited (0)
    compose-web1-1 "/docker-entrypoint.…" web1 exited (0)
    compose-web2-1 "/docker-entrypoint.…" web2 exited (0)
    [root@server1 proxy]# docker-compose start
    [+] Running 3/3
     ⠿ Container compose-web1-1 Started 3.4s
     ⠿ Container compose-web2-1 Started 3.4s
     ⠿ Container compose-proxy-1 Started 3.4s
    [root@server1 proxy]# docker-compose ps
    NAME COMMAND SERVICE STATUS PORTS
    compose-proxy-1 "/docker-entrypoint.…" proxy running 0.0.0.0:80->80/tcp, :::80->80/tcp
    compose-web1-1 "/docker-entrypoint.…" web1 running 80/tcp
    compose-web2-1 "/docker-entrypoint.…" web2 running 80/tcp
    [root@server1 proxy]#
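To check the load balancing, send several requests to the proxy and count which backend answered. Round robin is nginx's default policy for an `upstream` block, so web1 and web2 should alternate. The function name `probe_lb` and the default URL `http://localhost/` are assumptions. The default URL works when run on server1, which publishes port 80. The `server` block above is the only one (conf.d is not included), so it answers requests for any host name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: send n requests and count the responses per backend.
# Usage: probe_lb [url] [requests]
probe_lb() {
  local url=${1:-http://localhost/} n=${2:-6} i=0
  while [ "$i" -lt "$n" ]; do
    curl -s "$url"          # each backend's index.html contains just "web1" or "web2"
    i=$((i + 1))
  done | sort | uniq -c
}
```

With this setup, `probe_lb http://localhost/ 6` should report three responses from web1 and three from web2.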

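III. Docker clusters

1.Swarm overview

Docker Swarm is one of Docker's official "Three Musketeers" projects. It provides clustering for Docker containers and is Docker's core official solution for the container cloud ecosystem. With it, users can combine multiple Docker hosts into a single large virtual Docker host and quickly build a container cloud platform.

Swarm mode has a built-in key-value store and offers many features:

- a fault-tolerant decentralized design
- built-in service discovery
- load balancing
- a routing mesh
- dynamic scaling
- rolling updates
- secure transport

These features make Docker's native Swarm clustering competitive with Mesos and Kubernetes.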

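Basic concepts

Swarm is the cluster management and orchestration tool built natively into the Docker Engine, implemented with SwarmKit.

Nodes:

A host running Docker can initialize a new Swarm cluster or join an existing one. The host then becomes a node of that Swarm cluster.

Nodes are either manager nodes or worker nodes.

Manager nodes manage the Swarm cluster. Most docker swarm commands can only be run on a manager node. The exception is docker swarm leave, which a worker node runs to leave the cluster. A Swarm cluster can have several manager nodes, but only one of them is the leader. Leader election is implemented with the Raft protocol.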
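Raft needs a majority (quorum) of the managers to elect a leader and to commit changes. This is why the number of managers determines how many failures a swarm survives. The arithmetic is small enough to sketch in shell; the function names are illustrative:

- quorum = floor(N/2) + 1
- tolerated manager failures = floor((N-1)/2)

```shell
#!/usr/bin/env bash
# Quorum and fault tolerance for a Raft group of N managers (integer division).
raft_quorum()    { echo $(( $1 / 2 + 1 )); }
raft_tolerance() { echo $(( ($1 - 1) / 2 )); }

for n in 1 2 3 4 5; do
  printf '%d managers: quorum %d, can lose %d\n' \
    "$n" "$(raft_quorum "$n")" "$(raft_tolerance "$n")"
done
```

This prints a quorum of 1, 2, 2, 3, 3 and tolerated failures of 0, 0, 1, 1, 2 for 1 to 5 managers. Going from three to four managers raises the quorum without adding fault tolerance. This is why an odd number of managers is the usual recommendation.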

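Worker nodes execute tasks: manager nodes dispatch services to the worker nodes to run. By default, manager nodes also act as worker nodes. A service can also be configured to run only on manager nodes.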
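Both behaviours in the last paragraph are controlled with standard docker flags:

- A placement constraint (`--constraint node.role==manager`) keeps a service on manager nodes.
- Setting a node's availability to `drain` stops a manager from also acting as a worker.

The wrapper names and the `DRY_RUN` print-only switch below are hypothetical.

```shell
#!/usr/bin/env bash
# Print the command instead of running it when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Run a service only on manager nodes via a placement constraint.
create_on_managers() {
  local name=$1 image=$2
  run docker service create --name "$name" --constraint node.role==manager "$image"
}

# Stop scheduling tasks on a node (for example, a manager). Its existing tasks
# are rescheduled on other nodes.
drain_node() {
  run docker node update --availability drain "$1"
}
```

To return a drained node to normal scheduling, run `docker node update --availability active <node>`.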

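The relationship between manager and worker nodes in the cluster is shown below:

2.Docker Swarm in practice

(1) Initialize the swarm and join the nodes.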

Run `docker swarm init` on server2, which becomes the manager (leader):

    [root@server2 ~]# docker swarm init
    Swarm initialized: current node (u8bk3i2bdf3zi5jrefn77bai2) is now a manager.
    To add a worker to this swarm, run the following command:
        docker swarm join --token SWMTKN-1-2w47end27xyidrqkh11yfcqx3r991ik1wlc9gi0q3bbh8k44b7-29erjzgsgmfhushlesr3ycoyk 172.25.254.2:2377
    To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.
    [root@server2 ~]# docker node ls
    ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION
    u8bk3i2bdf3zi5jrefn77bai2 * server2 Ready Active Leader 20.10.12

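After server4 has joined (the join command run on server4 is shown next), the node list contains both nodes:

    [root@server2 ~]# docker node ls
    ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION
    u8bk3i2bdf3zi5jrefn77bai2 * server2 Ready Active Leader 20.10.12
    rcag3tjcmyxsfluwfusvp8949 server4 Ready Active 20.10.13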

On server4:

    [root@server4 docker]# docker swarm join --token SWMTKN-1-2w47end27xyidrqkh11yfcqx3r991ik1wlc9gi0q3bbh8k44b7-29erjzgsgmfhushlesr3ycoyk 172.25.254.2:2377
    This node joined a swarm as a worker.
    [root@server4 docker]#
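With more workers, copying the token by hand gets tedious. `docker swarm join-token -q worker` prints only the worker token, which a script can reuse. The sketch below runs on the manager and joins each listed host over SSH. The function name `join_workers` is hypothetical. It assumes passwordless root SSH from the manager to each worker.

```shell
#!/usr/bin/env bash
# Hypothetical helper, run on the manager: join each host to the swarm as a worker.
# Usage: join_workers <manager-addr:port> <host>...
join_workers() {
  local manager=$1 token host
  shift
  # -q prints only the token instead of the full join command.
  token=$(docker swarm join-token -q worker) || return 1
  for host in "$@"; do
    ssh "root@$host" docker swarm join --token "$token" "$manager"
  done
}
```

For the cluster above, the call would be `join_workers 172.25.254.2:2377 server4`.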

(2) Because the earlier Harbor configuration was all persisted under /data, a thorough cleanup is needed first:

    [root@server1 harbor]# docker-compose down
    [root@server1 harbor]# ls
    common common.sh docker-compose.yml harbor.v2.3.4.tar.gz harbor.yml harbor.yml.tmpl install.sh LICENSE prepare
    [root@server1 harbor]# rm -f docker-compose.yml
    [root@server1 harbor]# ./prepare
    [root@server1 harbor]# docker volume prune
    [root@server1 harbor]# cd
    [root@server1 ~]# ls
    auth certs convoy docker harbor-offline-installer-v2.3.4.tgz openssl11-libs-1.1.1k-2.el7.x86_64.rpm
    base-debian10.tar compose convoy-v0.5.2.tar.gz harbor openssl11-1.1.1k-2.el7.x86_64.rpm
    [root@server1 ~]# cd /data
    [root@server1 data]# ls
    ca_download certs chart_storage database job_logs redis registry secret trivy-adapter
    [root@server1 data]# cp -r certs/ ~
    [root@server1 data]# rm -fr *
    [root@server1 data]# ls
    [root@server1 data]# cp -r ~/certs/ .
    [root@server1 data]# ls
    certs
    [root@server1 ~]# cd harbor/
    [root@server1 harbor]# ls
    common common.sh docker-compose.yml harbor.v2.3.4.tar.gz harbor.yml harbor.yml.tmpl install.sh LICENSE prepare
    [root@server1 harbor]# ./install.sh --help
    [root@server1 harbor]# ./install.sh --with-chartmuseum

(3) Next, push two images to the registry for the experiment:

    [root@server1 harbor]# docker push reg.westos.org/library/busybox:latest
    The push refers to repository [reg.westos.org/library/busybox]
    d31505fd5050: Pushed
    latest: digest: sha256:b69959407d21e8a062e0416bf13405bb2b71ed7a84dde4158ebafacfa06f5578 size: 527
    [root@server1 harbor]# docker push reg.westos.org/library/nginx:1.18.0
    The push refers to repository [reg.westos.org/library/nginx]
    4fa6704c8474: Pushed
    4fe7d87c8e14: Pushed
    6fcbf7acaafd: Pushed
    f3fdf88f1cb7: Pushed
    7e718b9c0c8c: Pushed
    1.18.0: digest: sha256:9b0fc8e09ae1abb0144ce57018fc1e13d23abd108540f135dc83c0ed661081cf size: 1362

(4) Check that both nodes, server2 and server4, can reach the registry; both work. To make the results easier to observe, pull an image on each node:

    [root@server2 docker]# docker pull busybox
    Using default tag: latest
    latest: Pulling from library/busybox
    009932687766: Pull complete
    Digest: sha256:b69959407d21e8a062e0416bf13405bb2b71ed7a84dde4158ebafacfa06f5578
    Status: Downloaded newer image for busybox:latest
    docker.io/library/busybox:latest

    [root@server4 docker]# docker pull busybox
    Using default tag: latest
    latest: Pulling from library/busybox
    009932687766: Pull complete
    Digest: sha256:b69959407d21e8a062e0416bf13405bb2b71ed7a84dde4158ebafacfa06f5578
    Status: Downloaded newer image for busybox:latest
    docker.io/library/busybox:latest

Then, on server1, pull the myapp images, retag them for the registry, and push them:

    [root@server1 harbor]# docker pull ikubernetes/myapp:v1
    v1: Pulling from ikubernetes/myapp
    550fe1bea624: Pull complete
    af3988949040: Pull complete
    d6642feac728: Pull complete
    c20f0a205eaa: Pull complete
    fe78b5db7c4e: Pull complete
    6565e38e67fe: Pull complete
    Digest: sha256:9c3dc30b5219788b2b8a4b065f548b922a34479577befb54b03330999d30d513
    Status: Downloaded newer image for ikubernetes/myapp:v1
    docker.io/ikubernetes/myapp:v1
    [root@server1 harbor]# docker pull ikubernetes/myapp:v2
    v2: Pulling from ikubernetes/myapp
    550fe1bea624: Already exists
    af3988949040: Already exists
    d6642feac728: Already exists
    c20f0a205eaa: Already exists
    fe78b5db7c4e: Already exists
    Digest: sha256:85a2b81a62f09a414ea33b74fb8aa686ed9b168294b26b4c819df0be0712d358
    Status: Downloaded newer image for ikubernetes/myapp:v2
    docker.io/ikubernetes/myapp:v2
    [root@server1 harbor]# docker tag ikubernetes/myapp:v1 reg.westos.org/library/myapp:v1
    [root@server1 harbor]# docker tag ikubernetes/myapp:v2 reg.westos.org/library/myapp:v2
    [root@server1 harbor]# docker push reg.westos.org/library/myapp1:v1
    The push refers to repository [reg.westos.org/library/myapp1]
    An image does not exist locally with the tag: reg.westos.org/library/myapp1
    [root@server1 harbor]# docker push reg.westos.org/library/myapp:v1
    The push refers to repository [reg.westos.org/library/myapp]
    a0d2c4392b06: Pushed
    05a9e65e2d53: Pushed
    68695a6cfd7d: Pushed
    c1dc81a64903: Pushed
    8460a579ab63: Pushed
    d39d92664027: Pushed
    v1: digest: sha256:9eeca44ba2d410e54fccc54cbe9c021802aa8b9836a0bcf3d3229354e4c8870e size: 1569
    [root@server1 harbor]# docker push reg.westos.org/library/myapp:v2
    The push refers to repository [reg.westos.org/library/myapp]
    05a9e65e2d53: Layer already exists
    68695a6cfd7d: Layer already exists
    c1dc81a64903: Layer already exists
    8460a579ab63: Layer already exists
    d39d92664027: Layer already exists
    v2: digest: sha256:5f4afc8302ade316fc47c99ee1d41f8ba94dbe7e3e7747dd87215a15429b9102 size: 1362
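The first push above fails because of a typo in the repository name (myapp1). Building the target name from one variable avoids that class of mistake. The helper below is a sketch; the function name `mirror_to_harbor` is hypothetical. It keeps only the last path component of each source image and prefixes it with the registry project, for example `reg.westos.org/library`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: retag images for a registry project and push them.
# Usage: mirror_to_harbor <registry/project> <image:tag>...
mirror_to_harbor() {
  local dest=$1 src target
  shift
  for src in "$@"; do
    # Keep only the last path component: ikubernetes/myapp:v1 -> myapp:v1
    target="$dest/${src##*/}"
    docker tag "$src" "$target" && docker push "$target" || return 1
  done
}
```

For the images above: `mirror_to_harbor reg.westos.org/library ikubernetes/myapp:v1 ikubernetes/myapp:v2`.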

(5) Deploy the service from the manager node:

    [root@server2 docker]# docker node ls
    ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION
    u8bk3i2bdf3zi5jrefn77bai2 * server2 Ready Active Leader 20.10.12
    rcag3tjcmyxsfluwfusvp8949 server4 Ready Active 20.10.13
    [root@server2 docker]# docker service ls
    ID NAME MODE REPLICAS IMAGE PORTS
    [root@server2 docker]# docker service create --name webservice -p 80:80 --replicas 2 myapp:v1
    kj6ht0zllpq3t4vcqvgotxwuw
    overall progress: 2 out of 2 tasks
    1/2: running [==================================================>]
    2/2: running [==================================================>]
    verify: Service converged

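On server4 (the worker node), the image has now been pulled automatically. Its tag shows as `<none>` because Swarm pins the service image to a digest, so the worker pulls it by digest rather than by tag:

    [root@server4 docker]# docker images
    REPOSITORY TAG IMAGE ID CREATED SIZE
    busybox latest ec3f0931a6e6 5 weeks ago 1.24MB
    myapp <none> d4a5e0eaa84f 4 years ago 15.5MB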

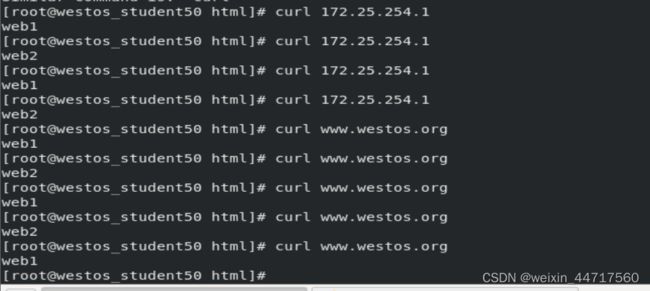

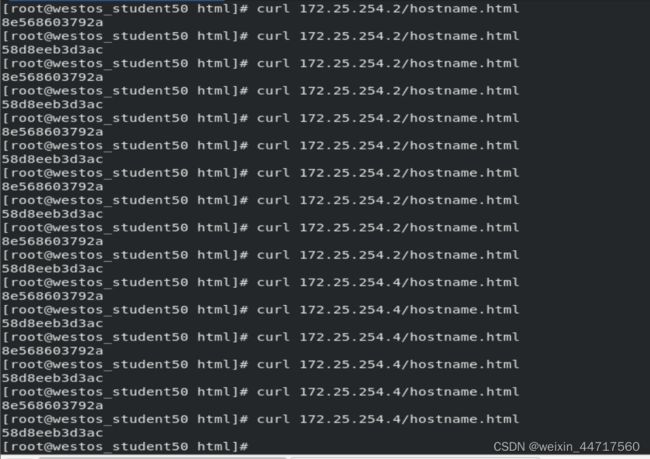

Testing from a client shows that requests are load-balanced across the replicas.
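A scripted version of this client test is sketched below. The swarm routing mesh publishes port 80 on every node, so each node address should answer even if no task runs on that node. Assumptions:

- The function name `probe_mesh` is hypothetical.
- The myapp image is assumed to serve `/hostname.html` containing the container's hostname. Different hostnames in the output then indicate different replicas.

```shell
#!/usr/bin/env bash
# Hypothetical helper: query /hostname.html on each node several times.
# Usage: probe_mesh <requests-per-node> <node-addr>...
probe_mesh() {
  local n=$1 node i
  shift
  for node in "$@"; do
    i=0
    while [ "$i" -lt "$n" ]; do
      printf '%s -> %s\n' "$node" "$(curl -s "http://$node/hostname.html")"
      i=$((i + 1))
    done
  done
}
```

For example, `probe_mesh 4 172.25.254.2` sends four requests to the manager. Listing the other node's address as well shows that it answers too.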

Of course, the number of replicas can also be scaled up or down:

    [root@server2 docker]# docker service scale webservice=4    # scale out to 4 replicas
    webservice scaled to 4
    overall progress: 4 out of 4 tasks
    1/4: running [==================================================>]
    2/4: running [==================================================>]
    3/4: running [==================================================>]
    4/4: running [==================================================>]
    verify: Service converged
    [root@server2 docker]# docker ps
    CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
    01bf0d130b4d myapp:v1 "nginx -g 'daemon of…" About a minute ago Up About a minute 80/tcp webservice.3.iptf69g2u09ujyd1gqheuawgg
    8e568603792a myapp:v1 "nginx -g 'daemon of…" 5 minutes ago Up 5 minutes 80/tcp webservice.2.jeah5ej0m5k43ko0p0z0flhym
    [root@server2 docker]# docker service ps webservice
    ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS
    8dkhqosx1gw9 webservice.1 myapp:v1 server4 Running Running less than a second ago
    jeah5ej0m5k4 webservice.2 myapp:v1 server2 Running Running 10 minutes ago
    iptf69g2u09u webservice.3 myapp:v1 server2 Running Running 6 minutes ago
    aa9u1myo4bmx webservice.4 myapp:v1 server4 Running Running less than a second ago
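To see at a glance how the scheduler spread the replicas, count the running tasks per node. `docker service ps` supports `--filter desired-state=running` and a Go-template `--format` with the `.Node` field. The wrapper name `tasks_per_node` below is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count the running tasks of a service on each node.
# Usage: tasks_per_node <service>
tasks_per_node() {
  docker service ps "$1" \
    --filter desired-state=running \
    --format '{{.Node}}' | sort | uniq -c
}
```

For webservice scaled to 4 above, this would report two tasks on server2 and two on server4.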