Deploying k8s with kubeadm

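I. Deployment plan

kubeadm imposes no hard requirement on how many master or slave nodes a cluster has; a single master node is the minimum. To save resources, this article deploys only the master node.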

| Hostname | OS | Role | Components |
|---|---|---|---|
| k8s-master | CentOS 7 | master | etcd, kube-apiserver, kube-controller-manager, kube-scheduler, kubectl, kubeadm, kubelet, kube-proxy, flannel |
| k8s-slave1 | CentOS 7 | slave | kubectl, kubeadm, kubelet, kube-proxy, flannel |
| k8s-slave2 | CentOS 7 | slave | kubectl, kubeadm, kubelet, kube-proxy, flannel |

Open ports

| Node | Ports |
|---|---|
| master node | TCP: 6443, 2379, 2380, 60080, 60081; UDP: all ports open |
| slave node | UDP: all ports open |

II. Base environment setup (master + slave)

1. Set the hostname

[root@localhost ~]# hostnamectl set-hostname k8s-master

[root@localhost ~]# bash

[root@k8s-master ~]#

2. Edit /etc/hosts

[root@k8s-master ~]# echo "192.168.18.7 k8s-master" >>/etc/hosts
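The echo above appends a new line every time it is run. A minimal sketch of a repeatable variant follows; the function name `add_host_entry` and its optional file argument are assumptions made for illustration, not part of the original procedure.

```shell
# add_host_entry IP NAME [FILE]
# Appends "IP NAME" to FILE (default /etc/hosts) unless NAME already
# appears as a host name on a non-comment line.
add_host_entry() {
    ip=$1
    name=$2
    file=${3:-/etc/hosts}
    if awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file" 2>/dev/null; then
        echo "unchanged: $name already in $file"
    else
        echo "$ip $name" >> "$file"
        echo "added: $ip $name"
    fi
}

# Example (as root): add_host_entry 192.168.18.7 k8s-master
```

Running it a second time leaves the file untouched, which helps when the setup steps are repeated on the same machine.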

3. Configure iptables

iptables -P FORWARD ACCEPT

4. Disable swap

sed -ri 's/.*swap.*/#&/' /etc/fstab
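The sed expression above prefixes `#` to every line that mentions swap, including lines that are already commented, so repeated runs stack markers. A sketch of a variant that skips existing comments is shown here; the function name `comment_out_swap` is hypothetical, and it is best tried on a copy of the file first.

```shell
# comment_out_swap [FILE]
# Prefixes '#' to every line in FILE (default /etc/fstab) that mentions
# swap, skipping lines that are already comments (GNU sed assumed).
comment_out_swap() {
    file=${1:-/etc/fstab}
    sed -i '/^[[:space:]]*#/!s/.*swap.*/#&/' "$file"
}

# Example: cp /etc/fstab /tmp/fstab.test && comment_out_swap /tmp/fstab.test
```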

swapoff -a && sysctl -w vm.swappiness=0

5. Disable the firewall and SELinux

systemctl stop firewalld.service;systemctl disable firewalld.service;sed -i -r 's#(^SELIN.*=)enforcing#\1disabled#g' /etc/selinux/config;setenforce 0

6. Adjust kernel parameters

[root@k8s-master ~]# cat /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.max_map_count=262144
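The listing shows the contents of the file but not how it was created. One way to produce it is a here-document, sketched below; the function name `write_k8s_sysctl` and the optional path argument are assumptions added for illustration.

```shell
# write_k8s_sysctl [FILE]
# Writes the four kernel parameters listed above to FILE
# (default /etc/sysctl.d/k8s.conf), replacing any previous content.
write_k8s_sysctl() {
    file=${1:-/etc/sysctl.d/k8s.conf}
    cat > "$file" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.max_map_count=262144
EOF
}

# Example (as root): write_k8s_sysctl
```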

[root@k8s-master ~]# modprobe br_netfilter

[root@k8s-master ~]# sysctl -p /etc/sysctl.d/k8s.conf

7. Configure the yum repositories

[root@k8s-master ~]# wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@k8s-master ~]# mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo_bak

[root@k8s-master ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

[root@k8s-master ~]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

[root@k8s-master ~]# cat /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

[root@k8s-master ~]# yum clean all && yum makecache

III. Deploy Docker (master + slave)

[root@k8s-master ~]# yum install -y docker-ce

[root@k8s-master ~]# mkdir -p /etc/docker/

[root@k8s-master ~]# cat /etc/docker/daemon.json

{

"graph": "/data/docker",

"storage-driver": "overlay2",

"insecure-registries": ["registry.access.redhat.com","quay.io","harbor.od.com","harbor.od.com:180"],

"registry-mirrors": ["https://6kx4zyno.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"live-restore": true

}

[root@k8s-master ~]# mkdir -p /data/docker

[root@k8s-master ~]# systemctl start docker ; systemctl enable docker

IV. Deploy k8s v1.16.2

1. Install kubeadm (master + slave)

[root@k8s-master ~]# yum install -y kubelet-1.16.2 kubeadm-1.16.2 kubectl-1.16.2 --disableexcludes=kubernetes

[root@k8s-master ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"16", GitVersion:"v1.16.2", GitCommit:"c97fe5036ef3df2967d086711e6c0c405941e14b", GitTreeState:"clean", BuildDate:"2019-10-15T19:15:39Z", GoVersion:"go1.12.10", Compiler:"gc", Platform:"linux/amd64"}

[root@k8s-master ~]# systemctl enable kubelet
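kubelet and Docker are expected to use the same cgroup driver, and daemon.json above sets it to systemd. Before initializing the cluster it may be worth confirming that Docker picked the setting up. The sketch below parses `docker info` output; the function name `cgroup_driver` is hypothetical.

```shell
# cgroup_driver
# Reads `docker info` output on stdin and prints the cgroup driver name.
cgroup_driver() {
    sed -n 's/^[[:space:]]*Cgroup Driver:[[:space:]]*//p'
}

# Example: docker info 2>/dev/null | cgroup_driver
```

If the result is not systemd, daemon.json was probably not read, for instance because Docker was not restarted after the file was edited.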

2. Deploy k8s from a kubeadm configuration template (master)

[root@k8s-master ~]# mkdir /opt/k8s-install/;cd /opt/k8s-install/

[root@k8s-master k8s-install]# kubeadm config print init-defaults > kubeadm.yaml

[root@k8s-master k8s-install]# cat kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.18.7 # IP address of the apiserver
  bindPort: 6443 # port of the apiserver
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers # changed to the Aliyun mirror
kind: ClusterConfiguration
kubernetesVersion: v1.16.2
networking:
  dnsDomain: cluster.local
  podSubnet: 172.7.0.0/16 # pod subnet; the flannel plugin needs this subnet
  serviceSubnet: 10.96.0.0/12
scheduler: {}

[root@k8s-master k8s-install]#
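The file above is the generated default with three values changed by hand: advertiseAddress, imageRepository and the added podSubnet. The same edits could be scripted roughly as follows. This is a sketch that assumes GNU sed and a freshly generated file; the function name `patch_kubeadm_yaml` is hypothetical, and running it twice would insert podSubnet twice.

```shell
# patch_kubeadm_yaml FILE IP REPO POD_SUBNET
# Sets advertiseAddress and imageRepository, and inserts a podSubnet line
# right after dnsDomain, reusing that line's indentation.
patch_kubeadm_yaml() {
    file=$1 ip=$2 repo=$3 subnet=$4
    sed -i -r \
        -e "s#^( *advertiseAddress:).*#\1 $ip#" \
        -e "s#^(imageRepository:).*#\1 $repo#" \
        -e "s#^( *)dnsDomain:.*#&\n\1podSubnet: $subnet#" \
        "$file"
}

# Example:
#   patch_kubeadm_yaml kubeadm.yaml 192.168.18.7 \
#       registry.aliyuncs.com/google_containers 172.7.0.0/16
```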

Pull the images to the local host ahead of time so that image download problems do not cause the deployment to fail (master + slave)

[root@k8s-master k8s-install]# kubeadm config images pull --config kubeadm.yaml

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.16.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.16.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.16.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.16.2

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.1

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.3.15-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:1.6.2
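The same images can also be fetched one at a time with docker. A loop avoids typing each reference by hand; the sketch below reads image references from stdin, and the function name `pull_images` and its dry-run style are assumptions made for illustration.

```shell
# pull_images [PULL_COMMAND...]
# Reads one image reference per line on stdin and runs PULL_COMMAND
# (default: docker pull) for each, stopping at the first failure.
pull_images() {
    [ "$#" -gt 0 ] || set -- docker pull
    while IFS= read -r image; do
        [ -n "$image" ] || continue
        "$@" "$image" </dev/null || return 1
    done
}

# Example: kubeadm config images list --config kubeadm.yaml | pull_images
# Dry run: kubeadm config images list --config kubeadm.yaml | pull_images echo docker pull
```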

[root@k8s-master k8s-install]# docker pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.16.2

[root@k8s-master k8s-install]# docker pull registry.aliyuncs.com/google_containers/kube-controller-manager:v1.16.2

[root@k8s-master k8s-install]# docker pull registry.aliyuncs.com/google_containers/kube-scheduler:v1.16.2

[root@k8s-master k8s-install]# docker pull registry.aliyuncs.com/google_containers/kube-proxy:v1.16.2

[root@k8s-master k8s-install]# docker pull registry.aliyuncs.com/google_containers/pause:3.1

[root@k8s-master k8s-install]# docker pull registry.aliyuncs.com/google_containers/etcd:3.3.15-0

[root@k8s-master k8s-install]# docker pull registry.aliyuncs.com/google_containers/coredns:1.6.2

3. Initialize the master node (master)

[root@k8s-master k8s-install]# kubeadm init --config kubeadm.yaml # a message like "Your kubernetes master has initialized successfully!" indicates success

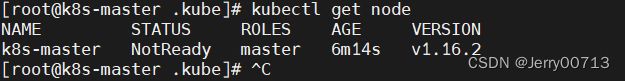

[root@k8s-master .kube]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master NotReady master 6m14s v1.16.2

The output prompts you to run:

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

4. Join the slaves to k8s (all slaves)

Run the following command on every slave node. kubeadm init prints it in its output after a successful run.

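kubeadm join 192.168.18.7:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:7d9e753992ae96e0d5bd34e20129a5b9e5f65b355f69e8d59f89f578c1558a0d

V. Deploy the flannel plugin (one master node)

The node STATUS shows NotReady because no network plugin has been deployed yet. flannel is the component that lets pods on different nodes reach each other and reach the node hosts, so it is indispensable. Even with a single master node you still need to deploy it, because k8s checks whether a CNI network plugin is present.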

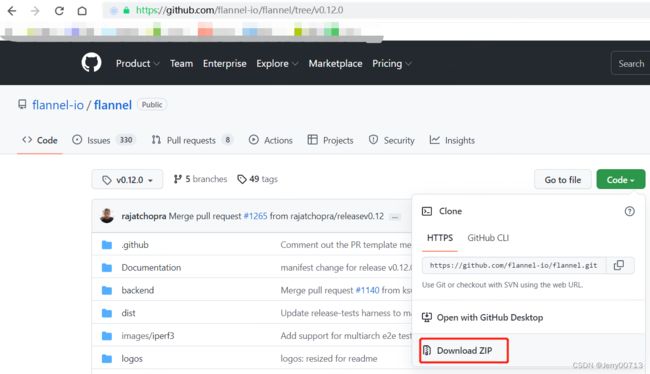

1. Download flannel (one master node)

https://github.com/flannel-io/flannel/tree/v0.12.0

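2. Deploy flannel (one master node)

Understanding flannel: how does flannel let pods talk to each other? The pod is the smallest unit in k8s and the container is the smallest unit in Docker; a pod may hold one or more containers, so what k8s ultimately manages is containers. Containers on the same machine communicate through docker0, which acts as both a switch and a gateway: when one container accesses another, the traffic goes through that gateway.

How does a container reach a container on another physical machine? docker0 cannot find the remote container by itself, so it hands the request up to the host's physical NIC. How does the physical NIC know where the remote container lives? This is where flannel comes in.

Suppose there are two physical machines, A and B. A communicates with B through ens33 (10.4.7.21/24), so flannel is bound to ens33. A's daemon.json is configured so that pods started on A use the subnet 172.7.21.0/24, that is, every pod on A gets an IP from 172.7.21.0/24, while pods on B use 172.7.22.0/24. flannel then creates a routing rule on A: 172.7.22.0/24 10.4.7.22 255.255.255.0 ens33, meaning traffic for 172.7.22.0/24 is sent through ens33 to 10.4.7.22.

In detail: a pod on A accesses an address in 172.7.22.0/24. docker0 has no matching address and passes the packet up to ens33. The route says traffic for 172.7.22.0/24 goes out through ens33 to 10.4.7.22. When B receives the packet and sees that the destination is in 172.7.22.0/24, it forwards it to docker0, which delivers it to the pod.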
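The routing rule in the A/B example can be written out as an `ip route` command. The sketch below only prints the commands for a list of host and pod-subnet pairs; the function name `print_routes` is hypothetical. Direct routes of this kind match flannel's host-gw backend; the stock kube-flannel.yml is configured for vxlan, where traffic goes through the flannel.1 device instead.

```shell
# print_routes SELF_IP DEV
# Reads "HOST_IP POD_SUBNET" pairs on stdin and prints one `ip route add`
# command for every host other than SELF_IP.
print_routes() {
    awk -v self="$1" -v dev="$2" \
        '$1 != self && NF >= 2 { printf "ip route add %s via %s dev %s\n", $2, $1, dev }'
}

# Example, seen from machine A:
#   printf '10.4.7.21 172.7.21.0/24\n10.4.7.22 172.7.22.0/24\n' | print_routes 10.4.7.21 ens33
```

For machine A this yields a single route that sends 172.7.22.0/24 to 10.4.7.22 over ens33, which is the rule described in the example.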

Given the above, first find out the name of the physical NIC.
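One way to look up the NIC name is to match the node IP against the output of `ip -o -4 addr show`. The sketch below does this with awk; the function name `nic_for_ip` is hypothetical.

```shell
# nic_for_ip IP
# Reads `ip -o -4 addr show` output on stdin and prints the name of the
# interface whose IPv4 address equals IP.
nic_for_ip() {
    awk -v ip="$1" '{ split($4, a, "/"); if (a[1] == ip) print $2 }'
}

# Example: ip -o -4 addr show | nic_for_ip 192.168.18.7
```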

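Find \flannel-0.12.0\Documentation\kube-flannel.yml and edit it to add --iface=<NIC name>. Without this option flannel picks an interface on its own (by default the one that carries the default route), which may not be the intended NIC.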
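The edit can be scripted. The sketch below assumes GNU sed and the v0.12.0 layout of kube-flannel.yml, in which the container args end with `- --kube-subnet-mgr` and net-conf.json carries a `"Network"` entry; the function name `patch_flannel_yml` is hypothetical. It also rewrites `"Network"`, on the assumption that it should match the podSubnet set in kubeadm.yaml (172.7.0.0/16 here) rather than flannel's default.

```shell
# patch_flannel_yml FILE NIC POD_SUBNET
# Adds "- --iface=NIC" after every "- --kube-subnet-mgr" argument and sets
# the "Network" value in net-conf.json to POD_SUBNET. Not idempotent:
# a second run adds the --iface line again.
patch_flannel_yml() {
    file=$1 nic=$2 subnet=$3
    sed -i -r \
        -e "s#^( *)- --kube-subnet-mgr\$#&\n\1- --iface=$nic#" \
        -e "s#(\"Network\": *\")[^\"]*#\1$subnet#" \
        "$file"
}

# Example: patch_flannel_yml kube-flannel.yml ens33 172.7.0.0/16
```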

Pull the image ahead of time. If you change the image, change every reference to it in the file.

docker pull quay.io/coreos/flannel:v0.12.0-amd64

Apply flannel

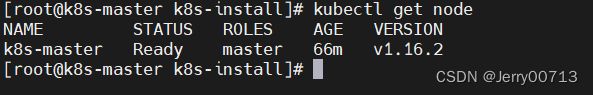

[root@k8s-master k8s-install]# kubectl create -f kube-flannel.yml

Check the status of the nodes
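No command is shown for this step; `kubectl get node`, used earlier, is the natural choice. To list only the nodes that still need attention, its output can be filtered as sketched below; the function name `not_ready_nodes` is hypothetical.

```shell
# not_ready_nodes
# Reads `kubectl get node --no-headers` output on stdin and prints the name
# of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Example: kubectl get node --no-headers | not_ready_nodes
```

An empty result means every node reports Ready.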

Test and verify

[root@k8s-master k8s-install]# kubectl run test-nginx --image=nginx:alpine

[root@k8s-master k8s-install]# kubectl get pod

NAME READY STATUS RESTARTS AGE

test-nginx-5bd8859b98-9bs2p 0/1 Pending 0 59s

VI. Clean up and reset the environment

If you run into other problems during deployment, you can reset with the following commands:

kubeadm reset

ifconfig cni0 down && ip link delete cni0

ifconfig flannel.1 down && ip link delete flannel.1

rm -rf /var/lib/cni/
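The two interface commands report errors when cni0 or flannel.1 does not exist, or when ifconfig is not installed. A sketch that checks for each interface first and relies only on `ip` follows; the function name `delete_links` is hypothetical.

```shell
# delete_links NAME...
# Deletes each named network interface if it exists and reports the outcome.
delete_links() {
    for name in "$@"; do
        if [ -e "/sys/class/net/$name" ]; then
            ip link delete "$name" && echo "deleted: $name"
        else
            echo "skipped: $name not present"
        fi
    done
}

# Example (as root, after kubeadm reset): delete_links cni0 flannel.1
```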

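VII. Control whether the master node can be scheduled

By default, the master node of a k8s cluster deployed with kubeadm cannot be scheduled, that is, business pods cannot start on the master. The reason is a taint that is applied to the master by default, and it can be removed: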

[root@k8s-master nginx]# kubectl taint node k8s-master node-role.kubernetes.io/master:NoSchedule-
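The command above removes the taint. To cover both directions, the sketch below prints the kubectl command for either case; the function name `master_taint_cmd` is hypothetical, and the `on` form re-adds the taint with an empty value.

```shell
# master_taint_cmd NODE on|off
# Prints the kubectl command that adds (on) or removes (off) the
# NoSchedule taint on the given master node.
master_taint_cmd() {
    case $2 in
        on)  echo "kubectl taint node $1 node-role.kubernetes.io/master=:NoSchedule" ;;
        off) echo "kubectl taint node $1 node-role.kubernetes.io/master:NoSchedule-" ;;
        *)   echo "usage: master_taint_cmd NODE on|off" >&2; return 1 ;;
    esac
}

# Example: master_taint_cmd k8s-master on
```

Printing instead of executing keeps the sketch harmless; the printed command can be reviewed and then run as is.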