MOOC Artificial Intelligence Practice: TensorFlow Notes, 1.4 and 1.5

- 1.4 Common TF2 functions, part 1

- tf.cast

- tf.reduce_min tf.reduce_max

- tf.reduce_mean tf.reduce_sum

- tf.Variable

- Math operations in TensorFlow

- 1.5 Common TF2 functions, part 2

- tf.data.Dataset.from_tensor_slices

- tf.GradientTape

- enumerate

- tf.one_hot

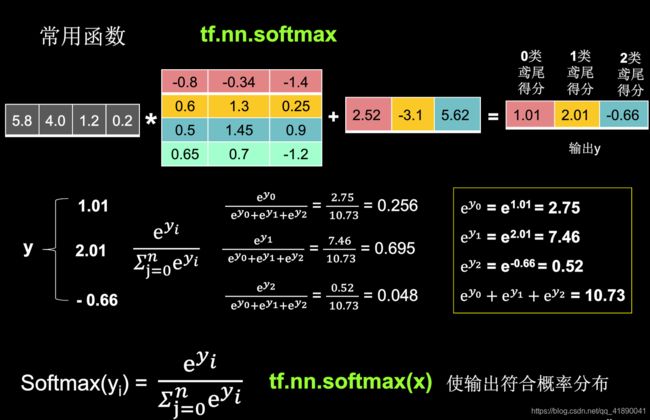

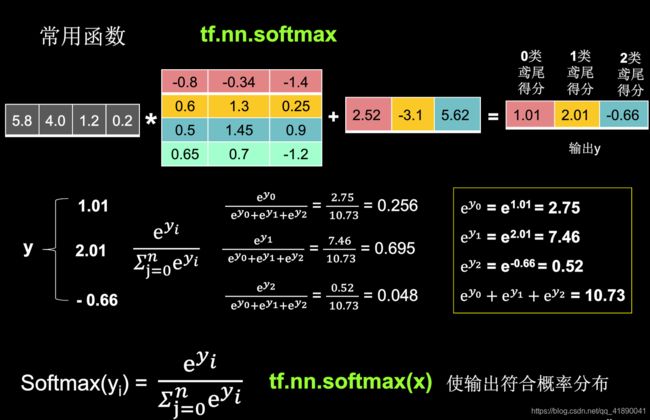

- tf.nn.softmax

- tf.assign_sub

- tf.argmax

1.4 Common TF2 functions, part 1

tf.cast

tf.reduce_min tf.reduce_max

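· Casts a tensor to the specified data type

tf.cast(tensor, dtype=data_type)

· Computes the minimum of a tensor's elements (over all elements when no axis is given)

tf.reduce_min(tensor)

· Computes the maximum of a tensor's elements (over all elements when no axis is given)

tf.reduce_max(tensor)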

import tensorflow as tf

x1 = tf.constant([1., 2., 3.], dtype=tf.float64)

print("x1:", x1)

x2 = tf.cast(x1, tf.int32)

print("x2", x2)

print("minimum of x2:", tf.reduce_min(x2))

print("maximum of x2:", tf.reduce_max(x2))

Output:

x1: tf.Tensor([1. 2. 3.], shape=(3,), dtype=float64)

x2 tf.Tensor([1 2 3], shape=(3,), dtype=int32)

minimum of x2: tf.Tensor(1, shape=(), dtype=int32)

maximum of x2: tf.Tensor(3, shape=(), dtype=int32)
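The example above casts whole numbers only, so it does not show what tf.cast does with fractional values. A minimal sketch, assuming the usual behavior that a float-to-int cast drops the fractional part (the variable names are illustrative and not from the course):

```python
import tensorflow as tf

# Floats with a fractional part: casting to an integer type drops the fraction.
x = tf.constant([1.8, 2.2], dtype=tf.float32)
x_int = tf.cast(x, tf.int32)

# Booleans cast to 1 and 0, which is handy for counting matches.
flags = tf.constant([True, False, True])
flags_int = tf.cast(flags, tf.int32)

print("x_int:", x_int.numpy())          # [1 2]
print("flags_int:", flags_int.numpy())  # [1 0 1]
print("min:", tf.reduce_min(x).numpy(), "max:", tf.reduce_max(x).numpy())
```

If rounding is wanted instead of truncation, apply tf.round before the cast.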

tf.reduce_mean tf.reduce_sum

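· Computes the mean of a tensor along the specified axis

tf.reduce_mean(tensor, axis=axis)

· Computes the sum of a tensor along the specified axis

tf.reduce_sum(tensor, axis=axis)

Note: for a 2-D tensor, axis=0 operates down the columns (across rows) and axis=1 operates along each row (across columns). If axis is omitted, all elements are reduced.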

import tensorflow as tf

x = tf.constant([[1, 2, 3], [2, 2, 3]])

print("x:", x)

print("mean of x:", tf.reduce_mean(x))

print("sum of x:", tf.reduce_sum(x, axis=1))

Output:

x: tf.Tensor(

[[1 2 3]

[2 2 3]], shape=(2, 3), dtype=int32)

mean of x: tf.Tensor(2, shape=(), dtype=int32)

sum of x: tf.Tensor([6 7], shape=(2,), dtype=int32)
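In the output above, the mean of x is 2 even though 13 / 6 is about 2.17. That is because x is an int32 tensor, so the mean is an integer too. The sketch below compares both axes and shows one way to keep the fraction, by casting to float first (variable names are illustrative):

```python
import tensorflow as tf

x = tf.constant([[1, 2, 3], [2, 2, 3]])

col_sum = tf.reduce_sum(x, axis=0)   # collapse rows: one sum per column
row_sum = tf.reduce_sum(x, axis=1)   # collapse columns: one sum per row

# On an integer tensor the mean is also an integer (13 / 6 is truncated to 2).
mean_int = tf.reduce_mean(x)
# Cast to float first to keep the fractional part.
mean_float = tf.reduce_mean(tf.cast(x, tf.float32))

print("col_sum:", col_sum.numpy())      # [3 4 6]
print("row_sum:", row_sum.numpy())      # [6 7]
print("mean_int:", mean_int.numpy())    # 2
print("mean_float:", mean_float.numpy())
```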

tf.Variable

· tf.Variable() marks a variable as "trainable". In neural network training, it is commonly used to mark the parameters to be trained

tf.Variable(initial_value)

w = tf.Variable(tf.random.normal([2,2], mean=0, stddev=1))
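A short sketch of what the "trainable" mark looks like in practice. It assumes the default trainable=True and uses an illustrative constant c to overwrite the random initial value:

```python
import tensorflow as tf

# A 2x2 weight matrix initialized from a standard normal distribution.
w = tf.Variable(tf.random.normal([2, 2], mean=0, stddev=1))
c = tf.constant([[1.0, 2.0], [3.0, 4.0]])

print("trainable:", w.trainable)            # True by default
print("shape:", w.shape, "dtype:", w.dtype)

# Unlike a constant tensor, a Variable can be updated in place.
w.assign(c)
print("after assign:\n", w.numpy())
```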

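Math operations in TensorFlow

· Element-wise arithmetic (add, subtract, multiply, divide)

tf.add, tf.subtract, tf.multiply, tf.divide

· Square, power, and square root

tf.square, tf.pow, tf.sqrt

· Matrix multiplication

tf.matmul

Note: element-wise arithmetic requires tensors of the same shape (TensorFlow also accepts shapes that are compatible under broadcasting)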

import tensorflow as tf

a = tf.ones([1, 3])

b = tf.fill([1, 3], 3.)

print("a:", a)

print("b:", b)

print("a+b:", tf.add(a, b))

print("a-b:", tf.subtract(a, b))

print("a*b:", tf.multiply(a, b))

print("b/a:", tf.divide(b, a))

Output:

a: tf.Tensor([[1. 1. 1.]], shape=(1, 3), dtype=float32)

b: tf.Tensor([[3. 3. 3.]], shape=(1, 3), dtype=float32)

a+b: tf.Tensor([[4. 4. 4.]], shape=(1, 3), dtype=float32)

a-b: tf.Tensor([[-2. -2. -2.]], shape=(1, 3), dtype=float32)

a*b: tf.Tensor([[3. 3. 3.]], shape=(1, 3), dtype=float32)

b/a: tf.Tensor([[3. 3. 3.]], shape=(1, 3), dtype=float32)

----------------------------------------------------

a = tf.fill([1, 2], 3.)

print("a:", a)

print("a cubed:", tf.pow(a, 3))

print("a squared:", tf.square(a))

print("square root of a:", tf.sqrt(a))

Output:

a: tf.Tensor([[3. 3.]], shape=(1, 2), dtype=float32)

a cubed: tf.Tensor([[27. 27.]], shape=(1, 2), dtype=float32)

a squared: tf.Tensor([[9. 9.]], shape=(1, 2), dtype=float32)

square root of a: tf.Tensor([[1.7320508 1.7320508]], shape=(1, 2), dtype=float32)

----------------------------------------------------

a = tf.ones([3, 2])

b = tf.fill([2, 3], 3.)

print("a:", a)

print("b:", b)

print("a*b:", tf.matmul(a, b))

Output:

a: tf.Tensor(

[[1. 1.]

[1. 1.]

[1. 1.]], shape=(3, 2), dtype=float32)

b: tf.Tensor(

[[3. 3. 3.]

[3. 3. 3.]], shape=(2, 3), dtype=float32)

a*b: tf.Tensor(

[[6. 6. 6.]

[6. 6. 6.]

[6. 6. 6.]], shape=(3, 3), dtype=float32)
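The shape rule for element-wise arithmetic can be checked directly. The sketch below is an illustration, not course code: it adds a (3,) tensor to a (2, 3) tensor, which works through broadcasting, then tries an incompatible pair. The exception types caught are an assumption about how eager TensorFlow reports the mismatch.

```python
import tensorflow as tf

a = tf.ones([2, 3])                  # shape (2, 3)
row = tf.constant([1., 2., 3.])      # shape (3,)

# Same trailing dimension: `row` is broadcast across both rows of `a`.
broadcast_sum = tf.add(a, row)
print(broadcast_sum.numpy())         # each row is [2. 3. 4.]

# Incompatible shapes (2, 3) and (2, 2) raise an error instead.
try:
    tf.add(a, tf.ones([2, 2]))
    shapes_ok = True
except (tf.errors.InvalidArgumentError, ValueError) as err:
    shapes_ok = False
    print("shape mismatch:", type(err).__name__)

# matmul needs the inner dimensions to agree: (3, 2) x (2, 3) -> (3, 3).
product = tf.matmul(tf.ones([3, 2]), tf.fill([2, 3], 3.))
print(product.shape)
```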

1.5 Common TF2 functions, part 2

tf.data.Dataset.from_tensor_slices

· Pairs input features with labels to build a dataset

data = tf.data.Dataset.from_tensor_slices((features, labels))

import tensorflow as tf

features = tf.constant([12, 23, 10, 17])

labels = tf.constant([0, 1, 1, 0])

dataset = tf.data.Dataset.from_tensor_slices((features, labels))

for element in dataset:

    print(element)

Output:

(<tf.Tensor: id=9, shape=(), dtype=int32, numpy=12>, <tf.Tensor: id=10, shape=(), dtype=int32, numpy=0>)

(<tf.Tensor: id=11, shape=(), dtype=int32, numpy=23>, <tf.Tensor: id=12, shape=(), dtype=int32, numpy=1>)

(<tf.Tensor: id=13, shape=(), dtype=int32, numpy=10>, <tf.Tensor: id=14, shape=(), dtype=int32, numpy=1>)

(<tf.Tensor: id=15, shape=(), dtype=int32, numpy=17>, <tf.Tensor: id=16, shape=(), dtype=int32, numpy=0>)
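During training, the pairs are usually consumed in batches rather than one at a time. A minimal sketch using the same data, with a batch size of 2 chosen only for illustration:

```python
import tensorflow as tf

features = tf.constant([12, 23, 10, 17])
labels = tf.constant([0, 1, 1, 0])

# batch(2) groups consecutive (feature, label) pairs two at a time.
dataset = tf.data.Dataset.from_tensor_slices((features, labels)).batch(2)

batches = []
for x_batch, y_batch in dataset:
    batches.append((x_batch.numpy().tolist(), y_batch.numpy().tolist()))
    print(x_batch.numpy(), y_batch.numpy())
```

Each iteration now yields a feature tensor and a label tensor of shape (2,).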

tf.GradientTape

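· The with block records the computation; gradient() then computes the gradient

with tf.GradientTape() as tape:

    (one or more computation steps)

grad = tape.gradient(function, variable_to_differentiate)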

import tensorflow as tf

with tf.GradientTape() as tape:

    x = tf.Variable(tf.constant(3.0))

    y = tf.pow(x, 2)

grad = tape.gradient(y, x)

print(grad)

Output:

tf.Tensor(6.0, shape=(), dtype=float32)
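The same pattern extends to several parameters at once. The sketch below is an illustrative example, not from the course: it differentiates a small loss with respect to two variables, w and b, by passing a list to tape.gradient.

```python
import tensorflow as tf

w = tf.Variable(2.0)
b = tf.Variable(1.0)
x = tf.constant(3.0)   # input, treated as fixed data

with tf.GradientTape() as tape:
    y = w * x + b              # y = 2*3 + 1 = 7
    loss = tf.square(y)        # loss = 49

# d(loss)/dw = 2*y*x = 42, d(loss)/db = 2*y = 14
grad_w, grad_b = tape.gradient(loss, [w, b])
print(grad_w.numpy(), grad_b.numpy())
```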

enumerate

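· enumerate is a Python built-in. It iterates over the elements of a sequence (such as a list, tuple, or string) and yields (index, element) pairs; it is commonly used in for loops

enumerate(sequence)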

seq = ['one', 'two', 'three']

for i, element in enumerate(seq):

    print(i, element)

Output:

0 one

1 two

2 three
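Two small additions that may help when numbering training steps: the pairs can be inspected with list(), and the optional start argument changes the first index. A quick sketch:

```python
seq = ['one', 'two', 'three']

# enumerate yields (index, element) tuples; list() makes them visible.
pairs = list(enumerate(seq))
print(pairs)    # [(0, 'one'), (1, 'two'), (2, 'three')]

# The optional start argument changes the first index.
for step, element in enumerate(seq, start=1):
    print(step, element)
```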

tf.one_hot

· tf.one_hot() converts the input data to one-hot form. In classification problems, one-hot codes are commonly used as labels

tf.one_hot(data, depth=number_of_classes)

import tensorflow as tf

classes = 3

labels = tf.constant([1, 0, 2])

output = tf.one_hot(labels, depth=classes)

print("result of labels1:", output)

print("\n")

Output:

result of labels1: tf.Tensor(

[[0. 1. 0.]

[1. 0. 0.]

[0. 0. 1.]], shape=(3, 3), dtype=float32)
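To see what the encoding does, here is a plain-Python sketch that reproduces the result above. The helper one_hot is hypothetical and written only for illustration; it is not how tf.one_hot is implemented.

```python
def one_hot(labels, depth):
    """Return one row per label with a 1.0 at the label's index."""
    rows = []
    for label in labels:
        row = [0.0] * depth
        if 0 <= label < depth:   # in this sketch, out-of-range labels stay all zeros
            row[label] = 1.0
        rows.append(row)
    return rows

encoded = one_hot([1, 0, 2], depth=3)
for row in encoded:
    print(row)
```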

tf.nn.softmax

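· After the n outputs (y0, y1, ..., yn-1) of an n-class classifier pass through softmax(), they form a probability distribution: each value lies between 0 and 1, and the values sum to 1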

import tensorflow as tf

x1 = tf.constant([[5.8, 4.0, 1.2, 0.2]])

w1 = tf.constant([[-0.8, -0.34, -1.4],

                  [0.6, 1.3, 0.25],

                  [0.5, 1.45, 0.9],

                  [0.65, 0.7, -1.2]])

b1 = tf.constant([2.52, -3.1, 5.62])

y = tf.matmul(x1, w1) + b1

print("x1.shape:", x1.shape)

print("w1.shape:", w1.shape)

print("b1.shape:", b1.shape)

print("y.shape:", y.shape)

print("y:", y)

y_dim = tf.squeeze(y)

y_pro = tf.nn.softmax(y_dim)

print("y_dim:", y_dim)

print("y_pro:", y_pro)

Output:

x1.shape: (1, 4)

w1.shape: (4, 3)

b1.shape: (3,)

y.shape: (1, 3)

y: tf.Tensor([[ 1.0099998 2.008 -0.65999985]], shape=(1, 3), dtype=float32)

y_dim: tf.Tensor([ 1.0099998 2.008 -0.65999985], shape=(3,), dtype=float32)

y_pro: tf.Tensor([0.2563381 0.69540703 0.04825491], shape=(3,), dtype=float32)
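The probabilities above can be reproduced by hand from the standard definition softmax(y_i) = exp(y_i) / sum_j exp(y_j). The sketch below is plain Python with a hypothetical helper named softmax. It uses the logits from the output above, rounded, so the results agree only to about three decimal places.

```python
import math

def softmax(logits):
    """softmax(y_i) = exp(y_i) / sum_j exp(y_j)."""
    # Subtracting the max does not change the result but avoids overflow.
    shift = max(logits)
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

y_dim = [1.01, 2.008, -0.66]   # logits from the example above, rounded
y_pro = softmax(y_dim)
print(y_pro)
print("sum:", sum(y_pro))
```

The largest logit (2.008) gets the largest probability, and the three values sum to 1.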

tf.assign_sub

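· Updates a parameter such as w or b in place by subtracting a value from it (before calling it, define w and b as trainable variables with tf.Variable). In TF2 it is called as a method on the variable

w.assign_sub(amount_to_subtract_from_w)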
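A minimal sketch of assign_sub, first on its own and then as one gradient-descent step. The loss function, starting value, and learning rate are arbitrary choices for illustration:

```python
import tensorflow as tf

w = tf.Variable(4)
w.assign_sub(1)          # w = w - 1
print(w.numpy())         # 3

# One gradient-descent step on loss = (v + 1)^2, starting from v = 5.
v = tf.Variable(5.0)
lr = 0.2                 # learning rate, chosen arbitrarily for this sketch
with tf.GradientTape() as tape:
    loss = tf.square(v + 1)
grad = tape.gradient(loss, v)    # 2 * (5 + 1) = 12
v.assign_sub(lr * grad)          # 5 - 0.2 * 12 = 2.6
print(v.numpy())
```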

tf.argmax

· Returns the index of the maximum value along the specified axis

tf.argmax(tensor, axis=axis)

import numpy as np

import tensorflow as tf

test = np.array([[1, 2, 3], [2, 3, 4], [5, 4, 3], [8, 7, 2]])

print("test:\n", test)

print("index of the max in each column:", tf.argmax(test, axis=0))

print("index of the max in each row:", tf.argmax(test, axis=1))

Output:

index of the max in each column: tf.Tensor([3 3 1], shape=(3,), dtype=int64)

index of the max in each row: tf.Tensor([2 2 0 0], shape=(4,), dtype=int64)
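A common use of tf.argmax is turning softmax outputs into predicted classes and then measuring accuracy. The sketch below combines it with tf.cast and tf.reduce_mean from section 1.4. The probabilities and labels are made-up values for illustration.

```python
import tensorflow as tf

# Hypothetical softmax outputs for 4 samples and 3 classes.
probs = tf.constant([[0.1, 0.7, 0.2],
                     [0.8, 0.1, 0.1],
                     [0.2, 0.3, 0.5],
                     [0.3, 0.4, 0.3]])
# argmax returns int64, so the labels use the same dtype for tf.equal.
labels = tf.constant([1, 0, 2, 0], dtype=tf.int64)

pred = tf.argmax(probs, axis=1)                        # predicted class per row
correct = tf.cast(tf.equal(pred, labels), tf.float32)  # 1.0 where the prediction matches
accuracy = tf.reduce_mean(correct)                     # fraction of correct predictions

print("pred:", pred.numpy())          # [1 0 2 1]
print("accuracy:", accuracy.numpy())  # 0.75
```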