OpenCvForUnity Face Recognition Plugin: Dynamically Creating Facial Landmarks for Face Swapping in Unity

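A while ago I spent some time tinkering with the OpenCV plugin, and quite a few of you found those posts useful. Work has kept me from writing for a while, but chatting with readers brought up a few problems. So this article builds on the approach from the previous article and walks through a face-swap demo. Thanks again to everyone for the support. If this article helps you solve a problem in your project, please give it a like, a favorite and a follow!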

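Now to the main topic. First, download the required plugins: OpenCV for Unity, DlibFaceLandmarkDetector and FaceMaskExample. The import order really does not matter. If you get errors right after importing one of them, they go away once all three plugins have been imported. I mention this because of a mix-up one reader ran into.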

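Next, download the required dependency files. If you cannot download them, you can find them in the downloads section of my blog. After the plugins and dependency files are imported, the directory looks like this. Remember to move the plugins' StreamingAssets folder to the root, so that it sits at Assets/StreamingAssets: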

Next, create a new scene configured as follows.

Only the Quad has scripts attached. None of the other objects has any script component:

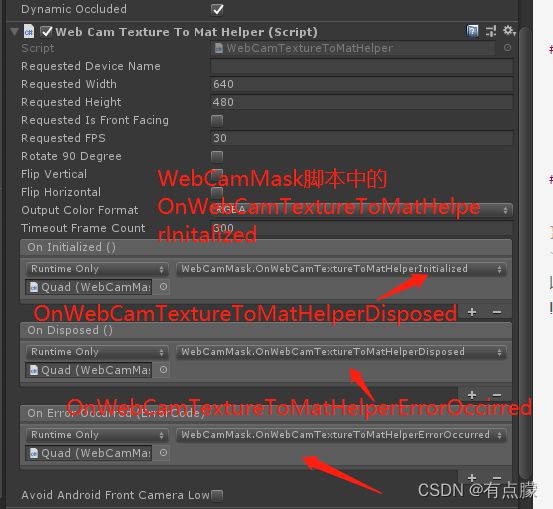

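Attach the following components to the Quad: WebCamMask, TextureExample and FpsMonitor. FpsMonitor is optional; if you leave it out, remove the related logic from the code. The two scripts depend on further components, TrackedMeshOverlay and WebCamTextureToMatHelper, which you can also attach manually.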

```csharp
using System;
using System.Collections;
using System.Collections.Generic;
using System.IO;
using UnityEngine;
using UnityEngine.SceneManagement;
using DlibFaceLandmarkDetector;
using OpenCVForUnity.RectangleTrack;
using OpenCVForUnity.UnityUtils.Helper;
using OpenCVForUnity.CoreModule;
using OpenCVForUnity.ObjdetectModule;
using OpenCVForUnity.ImgprocModule;
using Rect = OpenCVForUnity.CoreModule.Rect;
using FaceMaskExample;
#if UNITY_EDITOR
// Editor-only namespace (used by the commented-out prefab code below); guarded so player builds compile.
using UnityEditor;
#endif

// … (listing truncated)
        // …().material.mainTexture as Texture2D, Application.dataPath + "/TestForOpenCV/Resources", "wakaka");

        if (item == null)
        {
            // Load the prefab and reassign its FaceMaskData.
            GameObject obj = Instantiate(Resources.Load<GameObject>("TestMask"), GameObject.Find("FaceMaskData").transform);
            FaceMaskData maskData = obj.GetComponent<FaceMaskData>();
            maskData.image = gameObject.GetComponent<Renderer>().material.mainTexture as Texture2D;
            maskData.faceRect = facemaskrect;        // rect of the first tracked face (set in Update)
            maskData.landmarkPoints = landmasks;     // landmarks of the first tracked face (set in Update)
            faceMaskDatas.Add(maskData);

            textureExample.faceMaskDatas = faceMaskDatas;
            // Resource name "头-黑底" means "head on black background".
            textureExample.Run(Resources.Load("头-黑底") as Texture2D);

            //var prefabInstance = PrefabUtility.GetCorrespondingObjectFromSource(obj);
            // // Outside Prefab Mode
            //if (prefabInstance)
            //{
            //    var prefabPath = AssetDatabase.GetAssetPath(prefabInstance);
            //    // To modify the prefab, it has to be unpacked first and then saved
            //    PrefabUtility.UnpackPrefabInstance(obj, PrefabUnpackMode.Completely, InteractionMode.UserAction);
            //    PrefabUtility.SaveAsPrefabAssetAndConnect(obj, prefabPath, InteractionMode.AutomatedAction);
            //    // If you only add things without modifying, it can be saved directly
            //    PrefabUtility.SaveAsPrefabAsset(obj, prefabPath);
            //}
            //else
            //{
            //    // In Prefab Mode: get the prefab asset path and root object from the prefab stage, then save
            //    // PrefabStage prefabStage = PrefabStageUtility.GetCurrentPrefabStage();
            //    // Original prefab location
            //    string path = Application.dataPath + "/TestForOpenCV/Resources/TestMask.prefab";//prefabStage.prefabAssetPath;
            //    GameObject root = obj; //prefabStage.prefabContentsRoot;
            //    PrefabUtility.SaveAsPrefabAsset(root, path);
            //}
        }
    }
```

```csharp
    if (webCamTextureToMatHelper.IsPlaying() && webCamTextureToMatHelper.DidUpdateThisFrame())
    {
        Mat rgbaMat = webCamTextureToMatHelper.GetMat();

        // detect faces.
        List<Rect> detectResult = new List<Rect>();
        if (useDlibFaceDetecter)
        {
            OpenCVForUnityUtils.SetImage(faceLandmarkDetector, rgbaMat);
            List<UnityEngine.Rect> result = faceLandmarkDetector.Detect();
            foreach (var unityRect in result)
            {
                detectResult.Add(new Rect((int)unityRect.x, (int)unityRect.y, (int)unityRect.width, (int)unityRect.height));
            }
        }
        else
        {
            // convert image to greyscale.
            Imgproc.cvtColor(rgbaMat, grayMat, Imgproc.COLOR_RGBA2GRAY);
            using (Mat equalizeHistMat = new Mat())
            using (MatOfRect faces = new MatOfRect())
            {
                Imgproc.equalizeHist(grayMat, equalizeHistMat);
                cascade.detectMultiScale(equalizeHistMat, faces, 1.1f, 2, 0 | Objdetect.CASCADE_SCALE_IMAGE, new Size(equalizeHistMat.cols() * 0.15, equalizeHistMat.cols() * 0.15), new Size());
                detectResult = faces.toList();
            }

            // corrects the deviation of a detection result between OpenCV and Dlib.
            foreach (Rect r in detectResult)
            {
                r.y += (int)(r.height * 0.1f);
            }
        }

        // face tracking.
        rectangleTracker.UpdateTrackedObjects(detectResult);
        List<TrackedRect> trackedRects = new List<TrackedRect>();
        rectangleTracker.GetObjects(trackedRects, true);

        // create noise filter.
        foreach (var openCVRect in trackedRects)
        {
            if (openCVRect.state == TrackedState.NEW)
            {
                if (!lowPassFilterDict.ContainsKey(openCVRect.id))
                    lowPassFilterDict.Add(openCVRect.id, new LowPassPointsFilter((int)faceLandmarkDetector.GetShapePredictorNumParts()));
                if (!opticalFlowFilterDict.ContainsKey(openCVRect.id))
                    opticalFlowFilterDict.Add(openCVRect.id, new OFPointsFilter((int)faceLandmarkDetector.GetShapePredictorNumParts()));
            }
            else if (openCVRect.state == TrackedState.DELETED)
            {
                if (lowPassFilterDict.ContainsKey(openCVRect.id))
                {
                    lowPassFilterDict[openCVRect.id].Dispose();
                    lowPassFilterDict.Remove(openCVRect.id);
                }
                if (opticalFlowFilterDict.ContainsKey(openCVRect.id))
                {
                    opticalFlowFilterDict[openCVRect.id].Dispose();
                    opticalFlowFilterDict.Remove(openCVRect.id);
                }
            }
        }

        // create LUT texture.
        foreach (var openCVRect in trackedRects)
        {
            if (openCVRect.state == TrackedState.NEW)
            {
                faceMaskColorCorrector.CreateLUTTex(openCVRect.id);
            }
            else if (openCVRect.state == TrackedState.DELETED)
            {
                faceMaskColorCorrector.DeleteLUTTex(openCVRect.id);
            }
        }

        // detect face landmark points.
        OpenCVForUnityUtils.SetImage(faceLandmarkDetector, rgbaMat);
        List<List<Vector2>> landmarkPoints = new List<List<Vector2>>();
        for (int i = 0; i < trackedRects.Count; i++)
        {
            TrackedRect tr = trackedRects[i];
            UnityEngine.Rect rect = new UnityEngine.Rect(tr.x, tr.y, tr.width, tr.height);
            List<Vector2> points = faceLandmarkDetector.DetectLandmark(rect);

            // apply noise filter.
            if (enableNoiseFilter)
            {
                if (tr.state > TrackedState.NEW && tr.state < TrackedState.DELETED)
                {
                    opticalFlowFilterDict[tr.id].Process(rgbaMat, points, points);
                    lowPassFilterDict[tr.id].Process(rgbaMat, points, points);
                }
            }
            landmarkPoints.Add(points);
        }

        // Keep the first face's landmarks and rect; the mask-saving code uses them.
        if (landmarkPoints.Count > 0)
        {
            landmasks = landmarkPoints[0];
            facemaskrect = new UnityEngine.Rect(trackedRects[0].x, trackedRects[0].y, trackedRects[0].width, trackedRects[0].height);
            print(string.Format("Faces detected: {0}, landmark points: {1}, tracked faces: {2}", landmarkPoints.Count, landmarkPoints[0].Count, trackedRects.Count));
        }

        #region Create mask (face masking)
        if (faceMaskTexture != null && landmarkPoints.Count >= 1)
        {
            // Apply face masking between detected faces and a face mask image.
            float maskImageWidth = faceMaskTexture.width;
            float maskImageHeight = faceMaskTexture.height;

            TrackedRect tr;
            for (int i = 0; i < trackedRects.Count; i++)
            {
                tr = trackedRects[i];
                if (tr.state == TrackedState.NEW)
                {
                    meshOverlay.CreateObject(tr.id, faceMaskTexture);
                }
                if (tr.state < TrackedState.DELETED)
                {
                    MaskFace(meshOverlay, tr, landmarkPoints[i], faceLandmarkPointsInMask, maskImageWidth, maskImageHeight);
                    if (enableColorCorrection)
                    {
                        CorrectFaceMaskColor(tr.id, faceMaskMat, rgbaMat, faceLandmarkPointsInMask, landmarkPoints[i]);
                    }
                }
                else if (tr.state == TrackedState.DELETED)
                {
                    meshOverlay.DeleteObject(tr.id);
                }
            }
        }
        else if (landmarkPoints.Count >= 1)
        {
            // Apply face masking between detected faces.
            float maskImageWidth = texture.width;
            float maskImageHeight = texture.height;

            TrackedRect tr;
            for (int i = 0; i < trackedRects.Count; i++)
            {
                tr = trackedRects[i];
                if (tr.state == TrackedState.NEW)
                {
                    meshOverlay.CreateObject(tr.id, texture);
                }
                if (tr.state < TrackedState.DELETED)
                {
                    MaskFace(meshOverlay, tr, landmarkPoints[i], landmarkPoints[0], maskImageWidth, maskImageHeight);
                    if (enableColorCorrection)
                    {
                        CorrectFaceMaskColor(tr.id, rgbaMat, rgbaMat, landmarkPoints[0], landmarkPoints[i]);
                    }
                }
                else if (tr.state == TrackedState.DELETED)
                {
                    meshOverlay.DeleteObject(tr.id);
                }
            }
        }
        #endregion

        // draw face rects.
        if (displayFaceRects)
        {
            for (int i = 0; i < detectResult.Count; i++)
            {
                UnityEngine.Rect rect = new UnityEngine.Rect(detectResult[i].x, detectResult[i].y, detectResult[i].width, detectResult[i].height);
                OpenCVForUnityUtils.DrawFaceRect(rgbaMat, rect, new Scalar(255, 0, 0, 255), 2);
            }
            for (int i = 0; i < trackedRects.Count; i++)
            {
                UnityEngine.Rect rect = new UnityEngine.Rect(trackedRects[i].x, trackedRects[i].y, trackedRects[i].width, trackedRects[i].height);
                OpenCVForUnityUtils.DrawFaceRect(rgbaMat, rect, new Scalar(255, 255, 0, 255), 2);
                // Imgproc.putText (rgbaMat, " " + frontalFaceChecker.GetFrontalFaceAngles (landmarkPoints [i]), new Point (rect.xMin, rect.yMin - 10), Imgproc.FONT_HERSHEY_SIMPLEX, 0.5, new Scalar (255, 255, 255, 255), 2, Imgproc.LINE_AA, false);
                // Imgproc.putText (rgbaMat, " " + frontalFaceChecker.GetFrontalFaceRate (landmarkPoints [i]), new Point (rect.xMin, rect.yMin - 10), Imgproc.FONT_HERSHEY_SIMPLEX, 0.5, new Scalar (255, 255, 255, 255), 2, Imgproc.LINE_AA, false);
            }
        }

        // draw face points.
        if (displayDebugFacePoints)
        {
            for (int i = 0; i < landmarkPoints.Count; i++)
            {
                OpenCVForUnityUtils.DrawFaceLandmark(rgbaMat, landmarkPoints[i], new Scalar(0, 255, 0, 255), 2);
            }
        }
        print(landmarkPoints.Count);

        // display face mask image.
        #region Whether to display the mask image
        //if (faceMaskTexture != null && faceMaskMat != null)
        //{
        //    if (displayFaceRects)
        //    {
        //        OpenCVForUnityUtils.DrawFaceRect(faceMaskMat, faceRectInMask, new Scalar(255, 0, 0, 255), 2);
        //    }
        //    if (displayDebugFacePoints)
        //    {
        //        OpenCVForUnityUtils.DrawFaceLandmark(faceMaskMat, faceLandmarkPointsInMask, new Scalar(0, 255, 0, 255), 2);
        //    }
        //    float scale = (rgbaMat.width() / 4f) / faceMaskMat.width();
        //    float tx = rgbaMat.width() - faceMaskMat.width() * scale;
        //    float ty = 0.0f;
        //    Mat trans = new Mat(2, 3, CvType.CV_32F);//1.0, 0.0, tx, 0.0, 1.0, ty);
        //    trans.put(0, 0, scale);
        //    trans.put(0, 1, 0.0f);
        //    trans.put(0, 2, tx);
        //    trans.put(1, 0, 0.0f);
        //    trans.put(1, 1, scale);
        //    trans.put(1, 2, ty);
        //    Imgproc.warpAffine(faceMaskMat, rgbaMat, trans, rgbaMat.size(), Imgproc.INTER_LINEAR, Core.BORDER_TRANSPARENT, new Scalar(0));
        //    if (displayFaceRects)
        //        OpenCVForUnity.UnityUtils.Utils.texture2DToMat(faceMaskTexture, faceMaskMat);
        //}
        #endregion

        // Imgproc.putText (rgbaMat, "W:" + rgbaMat.width () + " H:" + rgbaMat.height () + " SO:" + Screen.orientation, new Point (5, rgbaMat.rows () - 10), Imgproc.FONT_HERSHEY_SIMPLEX, 0.5, new Scalar (255, 255, 255, 255), 1, Imgproc.LINE_AA, false);
        OpenCVForUnity.UnityUtils.Utils.fastMatToTexture2D(rgbaMat, texture);
    }
}
```
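The per-face bookkeeping in the Update() code above follows one pattern. When a tracked rectangle first appears (`TrackedState.NEW`), its noise filters, LUT texture and overlay mesh are created under the track's `id`. When it reaches `TrackedState.DELETED`, they are disposed and removed. The sketch below isolates that pattern in plain .NET so it runs outside Unity. `TrackState`, `FaceResource` and `FaceResourceRegistry` are hypothetical stand-ins, not OpenCVForUnity types. The only thing carried over is the assumption that the states are ordered `New < ... < Deleted`, which the `tr.state > TrackedState.NEW && tr.state < TrackedState.DELETED` check relies on.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the tracker states (NOT OpenCVForUnity's TrackedState);
// only the ordering New < Tracked < Deleted matters for the comparisons below.
public enum TrackState { New, Tracked, Deleted }

// Hypothetical per-face resource standing in for the filters, LUT texture and mesh.
public sealed class FaceResource : IDisposable
{
    public int Id { get; }
    public bool Disposed { get; private set; }
    public FaceResource(int id) { Id = id; }
    public void Dispose() { Disposed = true; }
}

public sealed class FaceResourceRegistry
{
    private readonly Dictionary<int, FaceResource> byId = new Dictionary<int, FaceResource>();

    public int Count => byId.Count;
    public bool Has(int id) => byId.ContainsKey(id);

    // Create once when a track appears; dispose and forget it when the track is deleted.
    public void Apply(int id, TrackState state)
    {
        if (state == TrackState.New)
        {
            if (!byId.ContainsKey(id))
                byId.Add(id, new FaceResource(id));
        }
        else if (state == TrackState.Deleted)
        {
            if (byId.TryGetValue(id, out FaceResource resource))
            {
                resource.Dispose();
                byId.Remove(id);
            }
        }
    }

    // Only tracks that are neither brand new nor deleted get their landmarks filtered.
    public static bool ShouldFilter(TrackState state) =>
        state > TrackState.New && state < TrackState.Deleted;
}

public static class LifecycleDemo
{
    public static void Main()
    {
        var registry = new FaceResourceRegistry();
        registry.Apply(1, TrackState.New);
        registry.Apply(2, TrackState.New);
        registry.Apply(1, TrackState.Tracked);   // no change: resources already exist
        registry.Apply(1, TrackState.Deleted);   // face 1 left the frame
        Console.WriteLine($"count={registry.Count}, has1={registry.Has(1)}, has2={registry.Has(2)}");
        // prints "count=1, has1=False, has2=True"
    }
}
```

Keying every resource by the tracker's id lets several faces be masked at once without their filters or color-correction textures interfering with each other.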

```csharp
private void MaskFace(TrackedMeshOverlay meshOverlay, TrackedRect tr, List<Vector2> landmarkPoints, List<Vector2> landmarkPointsInMaskImage, float maskImageWidth = 0, float maskImageHeight = 0)
{
    float imageWidth = meshOverlay.width;
    float imageHeight = meshOverlay.height;

    if (maskImageWidth == 0)
        maskImageWidth = imageWidth;
    if (maskImageHeight == 0)
        maskImageHeight = imageHeight;

    TrackedMesh tm = meshOverlay.GetObjectById(tr.id);

    // Landmark pixels -> mesh vertices in a quad centred on the origin (y axis flipped).
    Vector3[] vertices = tm.meshFilter.mesh.vertices;
    if (vertices.Length == landmarkPoints.Count)
    {
        for (int j = 0; j < vertices.Length; j++)
        {
            vertices[j].x = landmarkPoints[j].x / imageWidth - 0.5f;
            vertices[j].y = 0.5f - landmarkPoints[j].y / imageHeight;
        }
    }

    // Landmark pixels in the mask image -> UVs in [0, 1] (V flipped).
    Vector2[] uv = tm.meshFilter.mesh.uv;
    if (uv.Length == landmarkPointsInMaskImage.Count)
    {
        for (int jj = 0; jj < uv.Length; jj++)
        {
            uv[jj].x = landmarkPointsInMaskImage[jj].x / maskImageWidth;
            uv[jj].y = (maskImageHeight - landmarkPointsInMaskImage[jj].y) / maskImageHeight;
        }
    }
    meshOverlay.UpdateObject(tr.id, vertices, null, uv);

    // The fade value rises the longer the face goes undetected.
    if (tr.numFramesNotDetected > 3)
    {
        tm.sharedMaterial.SetFloat(shader_FadeID, 1f);
    }
    else if (tr.numFramesNotDetected > 0 && tr.numFramesNotDetected <= 3)
    {
        tm.sharedMaterial.SetFloat(shader_FadeID, 0.3f + (0.7f / 4f) * tr.numFramesNotDetected);
    }
    else
    {
        tm.sharedMaterial.SetFloat(shader_FadeID, 0.3f);
    }

    if (enableColorCorrection)
    {
        tm.sharedMaterial.SetFloat(shader_ColorCorrectionID, 1f);
    }
    else
    {
        tm.sharedMaterial.SetFloat(shader_ColorCorrectionID, 0f);
    }

    // filter non frontal faces.
    if (filterNonFrontalFaces && frontalFaceChecker.GetFrontalFaceRate(landmarkPoints) < frontalFaceRateLowerLimit)
    {
        tm.sharedMaterial.SetFloat(shader_FadeID, 1f);
    }
}

private void CorrectFaceMaskColor(int id, Mat src, Mat dst, List<Vector2> src_landmarkPoints, List<Vector2> dst_landmarkPoints)
{
    // Build/update the color-correction LUT for this face and hand it to the mask material.
    Texture2D LUTTex = faceMaskColorCorrector.UpdateLUTTex(id, src, dst, src_landmarkPoints, dst_landmarkPoints);
    TrackedMesh tm = meshOverlay.GetObjectById(id);
    tm.sharedMaterial.SetTexture(shader_LUTTexID, LUTTex);
}
```
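MaskFace performs three small calculations. It maps each webcam landmark from pixels to a vertex of a unit quad centred on the origin. It maps the matching landmark in the mask image to a UV coordinate, flipping the vertical axis because image rows grow downward while texture V grows upward. Finally, it picks a fade value from `numFramesNotDetected`. The sketch below reproduces only that arithmetic in plain C#, so the numbers can be checked without Unity. `MaskFaceMath` and its methods are hypothetical names, and value tuples stand in for `Vector2`/`Vector3`.

```csharp
using System;

// Hypothetical helper reproducing the arithmetic in MaskFace; no Unity types involved.
public static class MaskFaceMath
{
    // Pixel landmark -> vertex of a quad centred on the origin; x and y end up in [-0.5, 0.5],
    // with y flipped so the top of the image maps to the top of the quad.
    public static (float X, float Y) ToVertex((float X, float Y) p, float imageWidth, float imageHeight)
        => (p.X / imageWidth - 0.5f, 0.5f - p.Y / imageHeight);

    // Pixel landmark in the mask image -> UV in [0, 1], V flipped (image rows grow downward).
    public static (float U, float V) ToUv((float X, float Y) p, float maskWidth, float maskHeight)
        => (p.X / maskWidth, (maskHeight - p.Y) / maskHeight);

    // Same branches as MaskFace: 0.3 while detected, rising per missed frame, 1 after 3 misses.
    public static float FadeFor(int numFramesNotDetected)
    {
        if (numFramesNotDetected > 3) return 1f;
        if (numFramesNotDetected > 0) return 0.3f + (0.7f / 4f) * numFramesNotDetected;
        return 0.3f;
    }

    public static void Main()
    {
        var v = ToVertex((320f, 120f), 640f, 480f);
        var uv = ToUv((50f, 25f), 100f, 100f);
        Console.WriteLine($"vertex=({v.X}, {v.Y}) uv=({uv.U}, {uv.V})");
        for (int n = 0; n <= 5; n++)
            Console.WriteLine($"framesNotDetected={n} fade={FadeFor(n)}");
    }
}
```

For a 640×480 frame, a landmark at pixel (320, 120) becomes vertex (0, 0.25). Up to float rounding, the fade values are 0.3, 0.475, 0.65 and 0.825 for 0 to 3 missed frames, and 1 beyond that, the same value the code uses to hide non-frontal faces.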

```csharp
// … (listing truncated)
using System.Collections.Generic;
using System.IO;
using System.Linq;
using UnityEngine;
using UnityEngine.SceneManagement;
using DlibFaceLandmarkDetector;
using OpenCVForUnity.ObjdetectModule;
using OpenCVForUnity.CoreModule;
using OpenCVForUnity.ImgprocModule;
using Rect = OpenCVForUnity.CoreModule.Rect;
using FaceMaskExample;
using OpenCVForUnity.RectangleTrack;

[RequireComponent(typeof(TrackedMeshOverlay))]
public class TextureExample : MonoBehaviour
{
    [HeaderAttribute("FaceMaskData")]
    // … (listing truncated)
```

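That is the code for the two scripts. Next come the event methods that have to be bound to the components manually:

![image 2](https://img-blog.csdnimg.cn/2226064ba29b449caa36b7612ab86d0d.png)

You can find this in the prefab inside the FaceMaskPrefab folder of FaceMaskExample.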

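The Config file contains just a few static methods. Some readers found it tricky before, but plenty of examples turn up with a quick search: they are all ordinary methods for saving images or for turning a local image into a Sprite.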

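```csharp
using System.IO;
using UnityEngine;

public class Config
{
    // Template image location: <StreamingAssets>/Facefolder/mPhoto.png
    public static string _Path = string.Format("{0}/Facefolder/{1}.png", Application.streamingAssetsPath, "mPhoto");

    /// <summary>
    /// Returns the contents of the image file at the given path as a byte[].
    /// </summary>
    /// <param name="imagePath">Path of the image file.</param>
    /// <returns>The bytes of the image file.</returns>
    public static byte[] GetImageByte(string imagePath)
    {
        // Reads the whole file and closes it even if an exception is thrown.
        return File.ReadAllBytes(imagePath);
    }
}
```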
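The post describes the other Config helpers as ordinary save-image/load-image utilities. The plain .NET sketch below shows, under stated assumptions, what such helpers might look like: it builds the same Facefolder/<name>.png layout as `_Path`, writes encoded image bytes, and reads them back. The class and method names are hypothetical, and the base directory here is a temp folder. Inside Unity you would pass `Application.streamingAssetsPath` instead, typically get the bytes from `Texture2D.EncodeToPNG()`, and load them back with `Texture2D.LoadImage(bytes)` before calling `Sprite.Create` to obtain a sprite.

```csharp
using System;
using System.IO;

// Hypothetical helpers in the spirit of Config; plain .NET, no Unity dependency.
public static class ImageFileHelpers
{
    // "<baseDir>/Facefolder/<name>.png", the same layout as Config._Path.
    public static string FacePath(string baseDir, string name)
        => Path.Combine(baseDir, "Facefolder", name + ".png");

    // Saves already-encoded image bytes, creating the folder if it does not exist yet.
    public static void SaveImageBytes(string path, byte[] bytes)
    {
        Directory.CreateDirectory(Path.GetDirectoryName(path));
        File.WriteAllBytes(path, bytes);
    }

    // Same behavior as the cleaned-up Config.GetImageByte.
    public static byte[] GetImageByte(string path) => File.ReadAllBytes(path);

    public static void Main()
    {
        string baseDir = Path.Combine(Path.GetTempPath(), "facemask-demo");
        string path = FacePath(baseDir, "mPhoto");
        // Only the 4-byte PNG signature, not a real image; enough to exercise the round trip.
        SaveImageBytes(path, new byte[] { 0x89, 0x50, 0x4E, 0x47 });
        Console.WriteLine($"{path}: {GetImageByte(path).Length} bytes");
    }
}
```

Writing with File APIs works in the editor and on desktop builds. StreamingAssets is read-only on some platforms, such as Android, so a writable location would be needed there.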

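That's it. Prepare a template image of your own. You can give it any name; to match my setup, name it mPhoto.png. Put it somewhere it can be loaded from (Config._Path points to StreamingAssets/Facefolder/mPhoto.png). Now let's look at the result.

The effect is less striking on faces with less distinctive features, while the built-in sample face of a Western man works very well. Either way, this does not affect the logic or the functionality we implemented. That's the end of this article. I hope it helps more people who, like me, are exploring their way along the technical path. If you liked it, please like, follow and bookmark. Thanks!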