Building Classic Convolutional Neural Networks with TensorFlow 2 (LeNet, AlexNet, VGG16, ResNet50)

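I recently read several classic convolutional neural network papers and reproduced four of the architectures in TensorFlow 2: LeNet, AlexNet, VGG16 and ResNet50. I tested them on real datasets. They train normally, which suggests the networks are built correctly.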

Let's get started:

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers
import numpy as np
import matplotlib.pyplot as plt

# Jupyter magic: render plots inline in the notebook
%matplotlib inline
```

Load the MNIST dataset to test LeNet

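```python
# Local copy of mnist.npz; adjust the path to your own environment
mnist_data = np.load("E:/deep_learning/jupyternotebook工作空间/0531/mnist/mnist.npz")

# Scale pixel values to [0, 1] in both splits.
# The labels are integer class indices (0-9) and must NOT be divided by 255.
x_train = mnist_data['x_train'] / 255.0
y_train = mnist_data['y_train']
x_test = mnist_data['x_test'] / 255.0
y_test = mnist_data['y_test']

print(x_train.shape)
print(x_test.shape)
print(y_train.shape)
print(y_test.shape)
```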

```
(60000, 28, 28)
(10000, 28, 28)
(60000,)
(10000,)
```

```python
# Add a channel dimension: Conv2D expects (batch, height, width, channels)
x_train0 = x_train.reshape((-1, 28, 28, 1))
x_test0 = x_test.reshape((-1, 28, 28, 1))
```

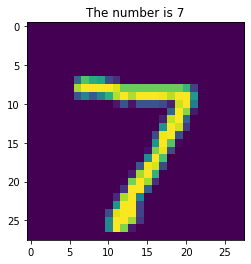

```python
plt.imshow(x_test[0])
plt.title('The number is ' + str(y_test[0]))
```


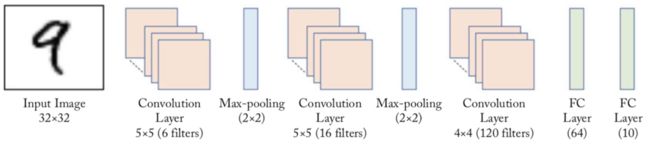

LeNet

Diagram of the LeNet network architecture

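```python
def get_LeNet():
    model = keras.Sequential(name='LeNet')
    # C1: 6 filters of 5x5, 28x28x1 -> 24x24x6
    model.add(layers.Conv2D(filters=6, kernel_size=(5, 5), strides=1, activation='sigmoid',
                            input_shape=(28, 28, 1), name='Conv1'))
    # S2: 2x2 max pooling -> 12x12x6
    model.add(layers.MaxPool2D(pool_size=(2, 2), strides=2, name='Pool1'))
    # C3: 16 filters of 5x5 -> 8x8x16
    model.add(layers.Conv2D(16, (5, 5), strides=1, activation='sigmoid', name='Conv2'))
    # S4: 2x2 max pooling -> 4x4x16
    model.add(layers.MaxPool2D(pool_size=(2, 2), strides=2, name='Pool2'))
    # C5: LeNet-5 uses 5x5 kernels on 32x32 inputs; with 28x28 MNIST inputs
    # a 4x4 kernel is used here so that the output is 1x1x120
    model.add(layers.Conv2D(120, (4, 4), strides=1, activation='sigmoid', name='Conv3'))
    model.add(layers.Flatten(name='Flatten'))
    # F6: fully connected layer (84 units in the paper, 64 here)
    model.add(layers.Dense(64, activation='sigmoid', name='FC1'))
    # Output layer: 10 classes with softmax
    model.add(layers.Dense(10, activation='softmax', name='FC2'))
    return model
```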
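The shapes in the comments follow the usual output-size formula for a "valid" convolution or pooling window: out = floor((in - kernel + 2*padding) / stride) + 1. The sketch below traces a 28x28 input through the LeNet layers defined above. The helper name `conv_out` is hypothetical and is not part of Keras.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a conv/pool layer: floor((size - kernel + 2*padding) / stride) + 1."""
    return (size - kernel + 2 * padding) // stride + 1

# (layer name, kernel size, stride) for the LeNet above; all layers use 'valid' padding
lenet_layers = [("Conv1", 5, 1), ("Pool1", 2, 2), ("Conv2", 5, 1),
                ("Pool2", 2, 2), ("Conv3", 4, 1)]

size = 28
trace = []
for name, kernel, stride in lenet_layers:
    size = conv_out(size, kernel, stride)
    trace.append((name, size))
print(trace)
```

The same function shows why the original LeNet-5 could use a 5x5 kernel in C5. Its 32x32 input shrinks to 5x5 before that layer, while a 28x28 input shrinks to only 4x4.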

```python
LeNet = get_LeNet()
LeNet.summary()
```

```
Model: "LeNet"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
Conv1 (Conv2D)               (None, 24, 24, 6)         156
_________________________________________________________________
Pool1 (MaxPooling2D)         (None, 12, 12, 6)         0
_________________________________________________________________
Conv2 (Conv2D)               (None, 8, 8, 16)          2416
_________________________________________________________________
Pool2 (MaxPooling2D)         (None, 4, 4, 16)          0
_________________________________________________________________
Conv3 (Conv2D)               (None, 1, 1, 120)         30840
_________________________________________________________________
Flatten (Flatten)            (None, 120)               0
_________________________________________________________________
FC1 (Dense)                  (None, 64)                7744
_________________________________________________________________
FC2 (Dense)                  (None, 10)                650
=================================================================
Total params: 41,806
Trainable params: 41,806
Non-trainable params: 0
_________________________________________________________________
```

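The summary above shows that LeNet has 5 layers with weights (3 convolutional and 2 fully connected) and 41,806 parameters in total.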
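Each count in the summary can be checked by hand. A Conv2D layer has kernel_h * kernel_w * in_channels * filters weights plus one bias per filter. A Dense layer has in_units * out_units weights plus one bias per output unit. The helper names below are hypothetical and used only for illustration.

```python
def conv_params(k, c_in, c_out):
    """k x k convolution: k*k*c_in*c_out weights plus one bias per output channel."""
    return k * k * c_in * c_out + c_out

def dense_params(n_in, n_out):
    """Fully connected layer: n_in*n_out weights plus one bias per output unit."""
    return n_in * n_out + n_out

lenet_params = {
    "Conv1": conv_params(5, 1, 6),      # 156
    "Conv2": conv_params(5, 6, 16),     # 2416
    "Conv3": conv_params(4, 16, 120),   # 30840
    "FC1": dense_params(120, 64),       # 7744
    "FC2": dense_params(64, 10),        # 650
}
total = sum(lenet_params.values())
print(total)  # 41806
```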

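```python
# Training
LeNet.compile(optimizer=keras.optimizers.Adam(learning_rate=0.001),
              loss='sparse_categorical_crossentropy',  # sparse categorical cross-entropy: labels are integers, not one-hot
              metrics=['accuracy'])

# Hold out 15% of the training data for validation
LeNet.fit(x_train0, y_train, batch_size=64, epochs=1, validation_split=0.15)

LeNet.evaluate(x_test0, y_test, batch_size=64)
```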
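`sparse_categorical_crossentropy` is used because `y_train` holds integer labels (0-9) rather than one-hot vectors. For a single sample, the loss is the negative log of the probability the model assigns to the true class. That is the same value as categorical cross-entropy computed against the one-hot encoding of the label. The sketch below shows this equivalence in plain Python. It is an illustration, not the Keras implementation.

```python
import math

def sparse_ce(probs, label):
    """Cross-entropy with an integer label: -log(p[label])."""
    return -math.log(probs[label])

def categorical_ce(probs, one_hot):
    """Cross-entropy with a one-hot target: -sum(t * log(p))."""
    return -sum(t * math.log(p) for t, p in zip(one_hot, probs))

probs = [0.1, 0.7, 0.2]   # softmax output for a toy 3-class example
label = 1                 # integer label, as stored in y_train
one_hot = [0, 1, 0]       # the same label, one-hot encoded

print(sparse_ce(probs, label), categorical_ce(probs, one_hot))
```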

AlexNet

```python
def alexNet_model(numclasses):
    alexNet = keras.Sequential(name='AlexNet')
    # Layer 1: 96 filters of 11x11 with stride 4 -> 55x55x96
    alexNet.add(layers.Conv2D(filters=96, kernel_size=(11, 11), strides=(4, 4),
                              activation='relu', input_shape=(227, 227, 3), name='Conv1'))
    # BatchNormalization stands in for the paper's local response normalization (LRN)
    alexNet.add(layers.BatchNormalization(name='BN1'))
    alexNet.add(layers.MaxPool2D(pool_size=(3, 3), strides=(2, 2), name='Pool1'))
    # Layer 2
    alexNet.add(layers.Conv2D(filters=256, kernel_size=(5, 5), strides=(1, 1), padding='same',
                              activation='relu', name='Conv2'))
    alexNet.add(layers.BatchNormalization(name='BN2'))
    alexNet.add(layers.MaxPool2D(pool_size=(3, 3), strides=(2, 2), name='Pool2'))
    # Layer 3
    alexNet.add(layers.Conv2D(filters=384, kernel_size=(3, 3), strides=(1, 1), padding='same',
                              activation='relu', name='Conv3'))
    # Layer 4 (the paper uses 384 kernels here, split across two GPUs; 192 is used in this version)
    alexNet.add(layers.Conv2D(filters=192, kernel_size=(3, 3), strides=(1, 1), padding='same',
                              activation='relu', name='Conv4'))
    # Layer 5
    alexNet.add(layers.Conv2D(filters=256, kernel_size=(3, 3), strides=(1, 1), padding='same',
                              activation='relu', name='Conv5'))
    alexNet.add(layers.BatchNormalization(name='BN3'))
    alexNet.add(layers.MaxPool2D(pool_size=(3, 3), strides=(2, 2), name='Pool3'))
    # Fully connected layers (the paper also applies dropout 0.5 to the first two)
    alexNet.add(layers.Flatten())
    alexNet.add(layers.Dense(4096, activation='relu', name='fc1'))
    alexNet.add(layers.Dense(4096, activation='relu', name='fc2'))
    alexNet.add(layers.Dense(numclasses, activation='softmax', name='output'))
    return alexNet
```
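With a 227x227 input, the output-size formula explains the AlexNet shapes. The "valid" layers shrink the feature map, while the stride-1 convolutions with `padding='same'` keep it unchanged. The last pooling layer therefore leaves a 6x6x256 map, which `Flatten` turns into 9216 features. The helper `out_size` below is hypothetical and mimics how Keras sizes "valid" and "same" outputs.

```python
def out_size(size, kernel, stride, padding="valid"):
    """Spatial output size for Keras-style 'valid' or 'same' padding."""
    if padding == "same":
        return -(-size // stride)  # ceil(size / stride)
    return (size - kernel) // stride + 1

# (name, kernel, stride, padding) for the spatial layers of alexNet_model
alexnet_layers = [
    ("Conv1", 11, 4, "valid"), ("Pool1", 3, 2, "valid"),
    ("Conv2", 5, 1, "same"),   ("Pool2", 3, 2, "valid"),
    ("Conv3", 3, 1, "same"),   ("Conv4", 3, 1, "same"),
    ("Conv5", 3, 1, "same"),   ("Pool3", 3, 2, "valid"),
]

size = 227
for name, kernel, stride, padding in alexnet_layers:
    size = out_size(size, kernel, stride, padding)
    print(name, size)

flatten_units = size * size * 256  # Conv5 has 256 output channels
print(flatten_units)
```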

```python
AlexNet = alexNet_model(1000)
AlexNet.summary()
```

```
Model: "AlexNet"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
Conv1 (Conv2D)               (None, 55, 55, 96)        34944
_________________________________________________________________
BN1 (BatchNormalization)     (None, 55, 55, 96)        384
_________________________________________________________________
Pool1 (MaxPooling2D)         (None, 27, 27, 96)        0
_________________________________________________________________
Conv2 (Conv2D)               (None, 27, 27, 256)       614656
_________________________________________________________________
BN2 (BatchNormalization)     (None, 27, 27, 256)       1024
_________________________________________________________________
Pool2 (MaxPooling2D)         (None, 13, 13, 256)       0
_________________________________________________________________
Conv3 (Conv2D)               (None, 13, 13, 384)       885120
_________________________________________________________________
Conv4 (Conv2D)               (None, 13, 13, 192)       663744
_________________________________________________________________
Conv5 (Conv2D)               (None, 13, 13, 256)       442624
_________________________________________________________________
BN3 (BatchNormalization)     (None, 13, 13, 256)       1024
_________________________________________________________________
Pool3 (MaxPooling2D)         (None, 6, 6, 256)         0
_________________________________________________________________
flatten (Flatten)            (None, 9216)              0
_________________________________________________________________
fc1 (Dense)                  (None, 4096)              37752832
_________________________________________________________________
fc2 (Dense)                  (None, 4096)              16781312
_________________________________________________________________
output (Dense)               (None, 1000)              4097000
=================================================================
Total params: 61,274,664
Trainable params: 61,273,448
Non-trainable params: 1,216
_________________________________________________________________
```

AlexNet has 61,274,664 parameters in total, of which 61,273,448 are trainable.
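The gap between total and trainable parameters comes from the three BatchNormalization layers. Each keeps 4 values per channel: gamma, beta, moving mean and moving variance. Only gamma and beta are trained. The sketch below reproduces all three totals from the layer definitions. The helper names are illustrative only.

```python
def conv_params(k, c_in, c_out):
    return k * k * c_in * c_out + c_out

def dense_params(n_in, n_out):
    return n_in * n_out + n_out

bn_channels = [96, 256, 256]  # channels normalized by BN1, BN2, BN3

conv = (conv_params(11, 3, 96) + conv_params(5, 96, 256) + conv_params(3, 256, 384)
        + conv_params(3, 384, 192) + conv_params(3, 192, 256))
dense = dense_params(9216, 4096) + dense_params(4096, 4096) + dense_params(4096, 1000)
bn_trainable = sum(2 * c for c in bn_channels)       # gamma and beta
bn_non_trainable = sum(2 * c for c in bn_channels)   # moving mean and moving variance

total = conv + dense + bn_trainable + bn_non_trainable
trainable = total - bn_non_trainable
print(total, trainable, bn_non_trainable)
```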

Build a hand-gesture digit dataset to test the network

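```python
import os
import random
from PIL import Image

# Root of the dataset: each subfolder '0'-'9' holds the images of one digit.
# A raw string stops the Windows backslashes from being read as escape sequences.
data_path = r'E:\deep_learning\jupyternotebook工作空间\数据集\手势数字\Dataset'
charater_folders = os.listdir(data_path)
charater_folders
```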

```
['0', '1', '2', '3', '4', '5', '6', '7', '8', '9']
```

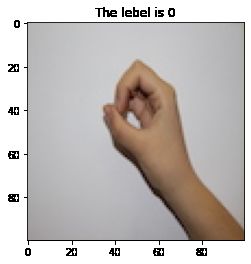

```python
img = Image.open(r'E:\deep_learning\jupyternotebook工作空间\数据集\手势数字\Dataset\0\IMG_1118.JPG')
plt.imshow(img)
plt.title("The label is 0")
```


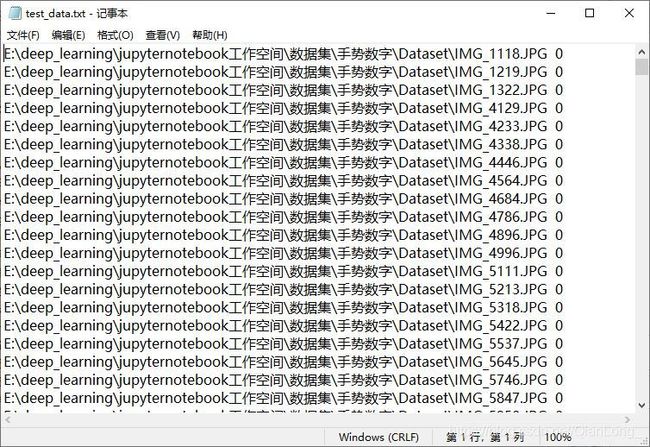

```python
# Generate the train/test list files, one "image_path<TAB>label" entry per line.
# Delete existing list files first, because they are opened in append mode below
# (re-running the cell would otherwise duplicate every entry).
if os.path.exists('./train_data.txt'):
    os.remove('./train_data.txt')
if os.path.exists('./test_data.txt'):
    os.remove('./test_data.txt')

for charater_folder in charater_folders:
    with open('./train_data.txt', 'a') as f_train:
        with open('./test_data.txt', 'a') as f_test:
            imgname_list = os.listdir(os.path.join(data_path, charater_folder))
            count = 0
            for imgname in imgname_list:
                if imgname == '.DS_Store':  # skip macOS metadata files
                    continue
                if count % 10 == 0:  # every 10th image goes to the test set (10% split)
                    f_test.write(os.path.join(data_path, charater_folder, imgname) + '\t' + charater_folder + '\n')
                else:
                    f_train.write(os.path.join(data_path, charater_folder, imgname) + '\t' + charater_folder + '\n')
                count += 1

print("Data lists generated")
```
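The split rule sends every 10th file in each class folder (indices 0, 10, 20, ...) to the test list, so a folder with n images contributes ceil(n/10) test images. `os.listdir` returns names in arbitrary order, so which specific files land in the test set depends on the file system. The sketch below isolates the rule as a pure function so it can be checked without the dataset. `split_every_nth` and the file names are hypothetical.

```python
def split_every_nth(filenames, n=10, skip=(".DS_Store",)):
    """Mimic the list-generation loop: every n-th valid file goes to test, the rest to train."""
    train, test = [], []
    count = 0
    for name in filenames:
        if name in skip:  # skipped files do not advance the counter
            continue
        if count % n == 0:
            test.append(name)
        else:
            train.append(name)
        count += 1
    return train, test

files = [f"IMG_{i}.JPG" for i in range(25)] + [".DS_Store"]
train, test = split_every_nth(files)
print(len(train), len(test))  # 22 3
```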

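```python
# Load the dataset
def img_preprocess(img_path):
    '''Preprocess one image: resize to 227x227 and scale pixel values to [0, 1].'''
    # convert('RGB') guarantees 3 channels even for grayscale or RGBA files
    img = Image.open(img_path).convert('RGB')
    # Image.ANTIALIAS was removed in Pillow 10; Image.LANCZOS is the same filter
    img = img.resize((227, 227), Image.LANCZOS)
    img = np.array(img).astype('float32')
    img = img / 255.0
    return img

def build_dataSet(datalist_path):
    img_list = []
    label_list = []
    with open(datalist_path, 'r') as f:
        lines = f.readlines()
    random.shuffle(lines)  # shuffle the samples
    for line in lines:
        # each line is "image_path<TAB>label\n"; strip the trailing newline first
        imgpath, label = line.strip().split('\t')
        label_list.append(int(label))
        img = img_preprocess(imgpath)
        img_list.append(img)
    return np.array(img_list), np.array(label_list)
```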

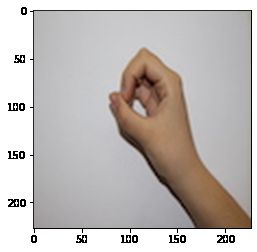

```python
img0 = img_preprocess(r'数据集\手势数字\Dataset\0\IMG_1118.JPG')
img0.shape
```

```
(227, 227, 3)
```

```python
plt.imshow(img0)
```

```python
train_x, train_y = build_dataSet('train_data.txt')
test_x, test_y = build_dataSet('test_data.txt')
print(train_x.shape)
print(train_y.shape)
print(test_x.shape)
print(test_y.shape)
```

```
(1852, 227, 227, 3)
(1852,)
(210, 227, 227, 3)
(210,)
```
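`build_dataSet` keeps every image in memory as float32. A quick size estimate shows how heavy this is: the training array alone is about 1.07 GiB. Peak usage while it is built is higher still, because `np.array(img_list)` copies the list of per-image arrays into a new array. The helper `array_bytes` below is illustrative only.

```python
def array_bytes(shape, bytes_per_item=4):
    """Size in bytes of a dense array with the given shape (float32 = 4 bytes per item)."""
    total = bytes_per_item
    for dim in shape:
        total *= dim
    return total

train_bytes = array_bytes((1852, 227, 227, 3))
test_bytes = array_bytes((210, 227, 227, 3))
print(train_bytes, round(train_bytes / 2**30, 2))  # bytes, GiB
print(test_bytes)
```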

```python
my_alexNet = alexNet_model(10)
my_alexNet.compile(optimizer=keras.optimizers.Adam(learning_rate=0.001),
                   loss='sparse_categorical_crossentropy',  # sparse categorical cross-entropy for integer labels
                   metrics=['accuracy'])
my_alexNet.fit(train_x, train_y, batch_size=4, epochs=1)
my_alexNet.evaluate(test_x, test_y, batch_size=4)
# My computer is too slow: one epoch takes about half an hour, so I stopped here.
```

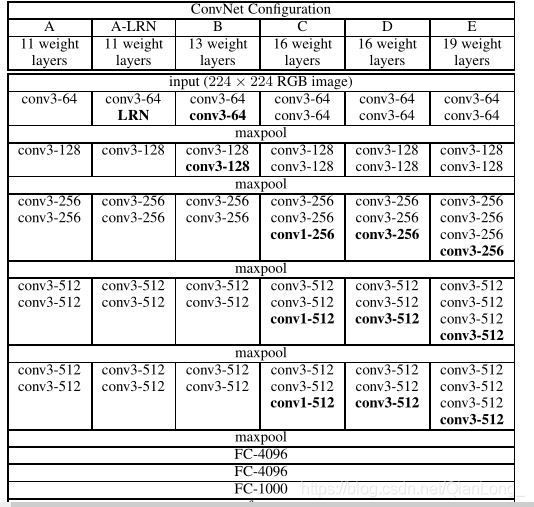

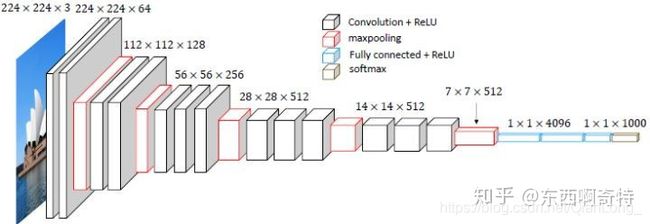

VGGNet

```python
def basic_net(model, filters, is_three):
    '''Append one VGG block: two (or three) 3x3 convolutions followed by 2x2 max pooling.'''
    model.add(layers.Conv2D(filters=filters, kernel_size=3, strides=1, activation='relu', padding='same'))
    model.add(layers.Conv2D(filters=filters, kernel_size=3, strides=1, activation='relu', padding='same'))
    if is_three:
        model.add(layers.Conv2D(filters=filters, kernel_size=3, strides=1, activation='relu', padding='same'))
    model.add(layers.MaxPool2D(pool_size=(2, 2), strides=(2, 2)))
    return model

def get_vgg16(numclasses, input_shape):
    model = keras.Sequential(name='VGG16')
    # Block 1 is written out explicitly because its first layer needs input_shape
    model.add(layers.Conv2D(filters=64, kernel_size=3, strides=1, activation='relu', padding='same',
                            input_shape=input_shape))
    model.add(layers.Conv2D(filters=64, kernel_size=3, strides=1, activation='relu', padding='same'))
    model.add(layers.MaxPool2D(pool_size=(2, 2), strides=(2, 2)))
    # Blocks 2-5
    model = basic_net(model, 128, is_three=False)
    model = basic_net(model, 256, is_three=True)
    model = basic_net(model, 512, is_three=True)
    model = basic_net(model, 512, is_three=True)
    # Classifier: two 4096-unit fully connected layers and the softmax output
    model.add(layers.Flatten())
    model.add(layers.Dense(4096, activation='relu'))
    model.add(layers.Dense(4096, activation='relu'))
    model.add(layers.Dense(numclasses, activation='softmax'))
    return model
```

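```python
VGG16 = get_vgg16(numclasses=1000, input_shape=(224, 224, 3))
# plot_model needs the pydot and graphviz packages to draw the architecture diagram
keras.utils.plot_model(VGG16, to_file='VGG16.png')
VGG16.summary()
```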

```
Failed to import pydot. You must install pydot and graphviz for `pydotprint` to work.
Model: "VGG16"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_52 (Conv2D)           (None, 224, 224, 64)      1792
_________________________________________________________________
conv2d_53 (Conv2D)           (None, 224, 224, 64)      36928
_________________________________________________________________
max_pooling2d_20 (MaxPooling (None, 112, 112, 64)      0
_________________________________________________________________
conv2d_54 (Conv2D)           (None, 112, 112, 128)     73856
_________________________________________________________________
conv2d_55 (Conv2D)           (None, 112, 112, 128)     147584
_________________________________________________________________
max_pooling2d_21 (MaxPooling (None, 56, 56, 128)       0
_________________________________________________________________
conv2d_56 (Conv2D)           (None, 56, 56, 256)       295168
_________________________________________________________________
conv2d_57 (Conv2D)           (None, 56, 56, 256)       590080
_________________________________________________________________
conv2d_58 (Conv2D)           (None, 56, 56, 256)       590080
_________________________________________________________________
max_pooling2d_22 (MaxPooling (None, 28, 28, 256)       0
_________________________________________________________________
conv2d_59 (Conv2D)           (None, 28, 28, 512)       1180160
_________________________________________________________________
conv2d_60 (Conv2D)           (None, 28, 28, 512)       2359808
_________________________________________________________________
conv2d_61 (Conv2D)           (None, 28, 28, 512)       2359808
_________________________________________________________________
max_pooling2d_23 (MaxPooling (None, 14, 14, 512)       0
_________________________________________________________________
conv2d_62 (Conv2D)           (None, 14, 14, 512)       2359808
_________________________________________________________________
conv2d_63 (Conv2D)           (None, 14, 14, 512)       2359808
_________________________________________________________________
conv2d_64 (Conv2D)           (None, 14, 14, 512)       2359808
_________________________________________________________________
max_pooling2d_24 (MaxPooling (None, 7, 7, 512)         0
_________________________________________________________________
flatten_4 (Flatten)          (None, 25088)             0
_________________________________________________________________
dense_12 (Dense)             (None, 4096)              102764544
_________________________________________________________________
dense_13 (Dense)             (None, 4096)              16781312
_________________________________________________________________
dense_14 (Dense)             (None, 1000)              4097000
=================================================================
Total params: 138,357,544
Trainable params: 138,357,544
Non-trainable params: 0
_________________________________________________________________
```

VGG16 has 138,357,544 parameters, all of them trainable.
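The VGG16 total can be reproduced the same way. Five 2x2 poolings reduce 224 to 224 / 2^5 = 7, so `Flatten` yields 7 * 7 * 512 = 25,088 features. As a result, the first fully connected layer alone holds about 103 million of the 138 million parameters. The helper names below are illustrative only.

```python
def conv_params(k, c_in, c_out):
    return k * k * c_in * c_out + c_out

def dense_params(n_in, n_out):
    return n_in * n_out + n_out

# (in_channels, out_channels) of the 13 3x3 convolutions, block by block
convs = [(3, 64), (64, 64),
         (64, 128), (128, 128),
         (128, 256), (256, 256), (256, 256),
         (256, 512), (512, 512), (512, 512),
         (512, 512), (512, 512), (512, 512)]

spatial = 224 // 2**5                     # five 2x2 max-pooling layers
flatten_units = spatial * spatial * 512   # input size of the first Dense layer

conv_total = sum(conv_params(3, c_in, c_out) for c_in, c_out in convs)
dense_total = (dense_params(flatten_units, 4096) + dense_params(4096, 4096)
               + dense_params(4096, 1000))
print(conv_total, dense_total, conv_total + dense_total)
```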

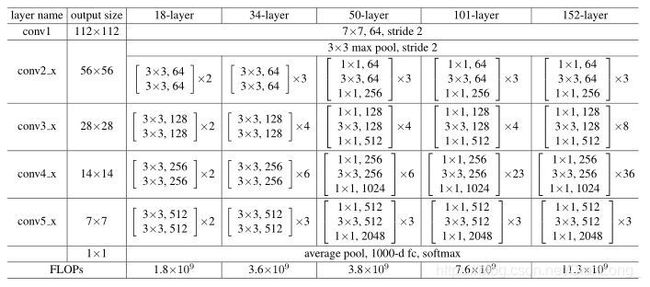

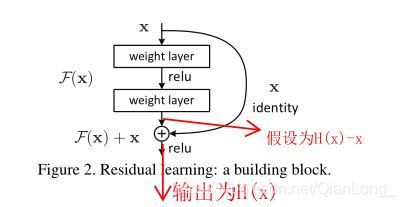

ResNet

Building ResNet50

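```python
def res_block(x, filters, is_same, s=1):
    '''Build a bottleneck residual block (1x1 -> 3x3 -> 1x1 convolutions).

    is_same=True keeps the spatial size; is_same=False downsamples by stride s
    on both the main path and the shortcut.
    '''
    x_shortcut = x
    f1, f2, f3 = filters
    # Main path
    x = layers.Conv2D(filters=f1, kernel_size=(1, 1), strides=(s, s), padding='same')(x)
    x = layers.BatchNormalization()(x)
    x = layers.Activation('relu')(x)
    x = layers.Conv2D(filters=f2, kernel_size=(3, 3), strides=(1, 1), padding='same')(x)
    x = layers.BatchNormalization()(x)
    x = layers.Activation('relu')(x)
    x = layers.Conv2D(filters=f3, kernel_size=(1, 1), strides=(1, 1), padding='same')(x)
    x = layers.BatchNormalization()(x)
    if is_same:
        # This 1x1 projection is applied in every same-size block. The ResNet paper
        # projects only where the channel count changes and otherwise uses an identity
        # shortcut, so this version has extra parameters.
        x_shortcut = layers.Conv2D(filters=f3, kernel_size=(1, 1), strides=(1, 1), padding='valid')(x_shortcut)
        x = layers.Add()([x, x_shortcut])
        x = layers.Activation('relu')(x)
    else:
        # Downsampling block: strided 1x1 projection + BN so the shapes match the main path
        x_shortcut = layers.Conv2D(filters=f3, kernel_size=(1, 1), strides=(s, s), padding='valid')(x_shortcut)
        x_shortcut = layers.BatchNormalization()(x_shortcut)
        x = layers.Add()([x, x_shortcut])
        x = layers.Activation('relu')(x)
    return x
```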
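In the `is_same` branch, every block projects its shortcut with a 1x1 convolution. This makes the model larger than the reference ResNet50, which uses identity shortcuts in those blocks. The first block of the first stage needs a projection anyway (64 to 256 channels), so the extra cost comes from the remaining same-size blocks. The rough count below includes biases; the `is_same` branch has no BatchNormalization, so none is counted. Helper names are illustrative.

```python
def conv1x1_params(c_in, c_out):
    """1x1 convolution with bias: c_in*c_out weights plus c_out biases."""
    return c_in * c_out + c_out

# (output channels f3, number of same-size blocks that would use an identity
#  shortcut in the reference ResNet50) for residual stages 1-4
stages = [(256, 2), (512, 3), (1024, 5), (2048, 2)]

extra = sum(n * conv1x1_params(c, c) for c, n in stages)
print(extra)  # 14560256
```

That is roughly 14.6 million parameters the reference architecture does not have.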

```python
def get_ResNet50(numclasses, input_shape):
    # Stem (shallow layers)
    inputs = layers.Input(shape=input_shape)
    x = layers.ZeroPadding2D(padding=(3, 3))(inputs)
    x = layers.Conv2D(filters=64, kernel_size=(7, 7), strides=(2, 2), activation='relu')(x)
    # Pad the conv output x, not inputs: padding inputs here silently discards the 7x7 conv.
    # The summary printed below was produced with inputs on this line, which is why its
    # first max-pooling layer outputs only 3 channels.
    x = layers.ZeroPadding2D(padding=(1, 1))(x)
    x = layers.MaxPool2D(pool_size=(3, 3), strides=(2, 2))(x)
    # Residual stage 1: 3 blocks
    x = res_block(x, [64, 64, 256], is_same=True)
    x = res_block(x, [64, 64, 256], is_same=True)
    x = res_block(x, [64, 64, 256], is_same=True)
    # Residual stage 2: 4 blocks
    x = res_block(x, [128, 128, 512], is_same=False, s=2)
    x = res_block(x, [128, 128, 512], is_same=True)
    x = res_block(x, [128, 128, 512], is_same=True)
    x = res_block(x, [128, 128, 512], is_same=True)
    # Residual stage 3: 6 blocks
    x = res_block(x, [256, 256, 1024], is_same=False, s=2)
    x = res_block(x, [256, 256, 1024], is_same=True)
    x = res_block(x, [256, 256, 1024], is_same=True)
    x = res_block(x, [256, 256, 1024], is_same=True)
    x = res_block(x, [256, 256, 1024], is_same=True)
    x = res_block(x, [256, 256, 1024], is_same=True)
    # Residual stage 4: 3 blocks
    x = res_block(x, [512, 512, 2048], is_same=False, s=2)
    x = res_block(x, [512, 512, 2048], is_same=True)
    x = res_block(x, [512, 512, 2048], is_same=True)
    # Global average pooling
    x = layers.GlobalAveragePooling2D()(x)
    x = layers.Flatten()(x)  # no-op: the pooled output is already (batch, channels)
    outputs = layers.Dense(numclasses, activation='softmax')(x)
    model = keras.Model(inputs=inputs, outputs=outputs, name='ResNet50')
    return model
```
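The stem arithmetic shows why the second padding line matters. With a 224x224 input, the stem runs four steps: 3-pixel padding, the 7x7 stride-2 convolution, 1-pixel padding and the 3x3 stride-2 max pooling. Together they produce the 56x56 map that residual stage 1 should see, and the strided first blocks of stages 2-4 then halve it to 28, 14 and 7. If the 1-pixel padding is applied to `inputs` instead, pooling still yields 112x112, but with the 3 raw image channels. That matches the ResNet50 summary in this post. The helpers below are hypothetical.

```python
def valid_out(size, kernel, stride):
    """Output size of a 'valid' conv/pool window."""
    return (size - kernel) // stride + 1

def resnet_stem(size, pad_conv_output=True):
    """Trace the spatial size through the ResNet50 stem defined above."""
    after_conv = valid_out(size + 2 * 3, 7, 2)             # ZeroPadding2D(3) + 7x7 conv, stride 2
    pool_input = after_conv if pad_conv_output else size   # tensor that receives ZeroPadding2D(1)
    return valid_out(pool_input + 2 * 1, 3, 2)             # ZeroPadding2D(1) + 3x3 max pool, stride 2

stem = resnet_stem(224)
stage_sizes = [stem]
for _ in range(3):  # stages 2-4 each start with a stride-2 block ('same' padding)
    stage_sizes.append(-(-stage_sizes[-1] // 2))
print(stage_sizes)                               # [56, 28, 14, 7]
print(resnet_stem(224, pad_conv_output=False))   # 112
```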

```python
ResNet50 = get_ResNet50(1000, (224, 224, 3))
ResNet50.summary()
```

Model: "ResNet50"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_5 (InputLayer) [(None, 224, 224, 3) 0

__________________________________________________________________________________________________

zero_padding2d_9 (ZeroPadding2D (None, 226, 226, 3) 0 input_5[0][0]

__________________________________________________________________________________________________

max_pooling2d_29 (MaxPooling2D) (None, 112, 112, 3) 0 zero_padding2d_9[0][0]

__________________________________________________________________________________________________

conv2d_182 (Conv2D) (None, 112, 112, 64) 256 max_pooling2d_29[0][0]

__________________________________________________________________________________________________

batch_normalization_90 (BatchNo (None, 112, 112, 64) 256 conv2d_182[0][0]

__________________________________________________________________________________________________

activation_81 (Activation) (None, 112, 112, 64) 0 batch_normalization_90[0][0]

__________________________________________________________________________________________________

conv2d_183 (Conv2D) (None, 112, 112, 64) 36928 activation_81[0][0]

__________________________________________________________________________________________________

batch_normalization_91 (BatchNo (None, 112, 112, 64) 256 conv2d_183[0][0]

__________________________________________________________________________________________________

activation_82 (Activation) (None, 112, 112, 64) 0 batch_normalization_91[0][0]

__________________________________________________________________________________________________

conv2d_184 (Conv2D) (None, 112, 112, 256 16640 activation_82[0][0]

__________________________________________________________________________________________________

batch_normalization_92 (BatchNo (None, 112, 112, 256 1024 conv2d_184[0][0]

__________________________________________________________________________________________________

conv2d_185 (Conv2D) (None, 112, 112, 256 1024 max_pooling2d_29[0][0]

__________________________________________________________________________________________________

add_25 (Add) (None, 112, 112, 256 0 batch_normalization_92[0][0]

conv2d_185[0][0]

__________________________________________________________________________________________________

activation_83 (Activation) (None, 112, 112, 256 0 add_25[0][0]

__________________________________________________________________________________________________

conv2d_186 (Conv2D) (None, 112, 112, 64) 16448 activation_83[0][0]

__________________________________________________________________________________________________

batch_normalization_93 (BatchNo (None, 112, 112, 64) 256 conv2d_186[0][0]

__________________________________________________________________________________________________

activation_84 (Activation) (None, 112, 112, 64) 0 batch_normalization_93[0][0]

__________________________________________________________________________________________________

conv2d_187 (Conv2D) (None, 112, 112, 64) 36928 activation_84[0][0]

__________________________________________________________________________________________________

batch_normalization_94 (BatchNo (None, 112, 112, 64) 256 conv2d_187[0][0]

__________________________________________________________________________________________________

activation_85 (Activation) (None, 112, 112, 64) 0 batch_normalization_94[0][0]

__________________________________________________________________________________________________

conv2d_188 (Conv2D) (None, 112, 112, 256 16640 activation_85[0][0]

__________________________________________________________________________________________________

batch_normalization_95 (BatchNo (None, 112, 112, 256 1024 conv2d_188[0][0]

__________________________________________________________________________________________________

conv2d_189 (Conv2D) (None, 112, 112, 256 65792 activation_83[0][0]

__________________________________________________________________________________________________

add_26 (Add) (None, 112, 112, 256 0 batch_normalization_95[0][0]

conv2d_189[0][0]

__________________________________________________________________________________________________

activation_86 (Activation) (None, 112, 112, 256 0 add_26[0][0]

__________________________________________________________________________________________________

conv2d_190 (Conv2D) (None, 112, 112, 64) 16448 activation_86[0][0]

__________________________________________________________________________________________________

batch_normalization_96 (BatchNo (None, 112, 112, 64) 256 conv2d_190[0][0]

__________________________________________________________________________________________________

activation_87 (Activation) (None, 112, 112, 64) 0 batch_normalization_96[0][0]

__________________________________________________________________________________________________

conv2d_191 (Conv2D) (None, 112, 112, 64) 36928 activation_87[0][0]

__________________________________________________________________________________________________

batch_normalization_97 (BatchNo (None, 112, 112, 64) 256 conv2d_191[0][0]

__________________________________________________________________________________________________

activation_88 (Activation) (None, 112, 112, 64) 0 batch_normalization_97[0][0]

__________________________________________________________________________________________________

conv2d_192 (Conv2D) (None, 112, 112, 256 16640 activation_88[0][0]

__________________________________________________________________________________________________

batch_normalization_98 (BatchNo (None, 112, 112, 256 1024 conv2d_192[0][0]

__________________________________________________________________________________________________

conv2d_193 (Conv2D) (None, 112, 112, 256 65792 activation_86[0][0]

__________________________________________________________________________________________________

add_27 (Add) (None, 112, 112, 256 0 batch_normalization_98[0][0]

conv2d_193[0][0]

__________________________________________________________________________________________________

activation_89 (Activation) (None, 112, 112, 256 0 add_27[0][0]

__________________________________________________________________________________________________

conv2d_194 (Conv2D) (None, 56, 56, 128) 32896 activation_89[0][0]

__________________________________________________________________________________________________

batch_normalization_99 (BatchNo (None, 56, 56, 128) 512 conv2d_194[0][0]

__________________________________________________________________________________________________

activation_90 (Activation) (None, 56, 56, 128) 0 batch_normalization_99[0][0]

__________________________________________________________________________________________________

conv2d_195 (Conv2D) (None, 56, 56, 128) 147584 activation_90[0][0]

__________________________________________________________________________________________________

batch_normalization_100 (BatchN (None, 56, 56, 128) 512 conv2d_195[0][0]

__________________________________________________________________________________________________

activation_91 (Activation) (None, 56, 56, 128) 0 batch_normalization_100[0][0]

__________________________________________________________________________________________________

conv2d_196 (Conv2D) (None, 56, 56, 512) 66048 activation_91[0][0]

__________________________________________________________________________________________________

conv2d_197 (Conv2D) (None, 56, 56, 512) 131584 activation_89[0][0]

__________________________________________________________________________________________________

batch_normalization_101 (BatchN (None, 56, 56, 512) 2048 conv2d_196[0][0]

__________________________________________________________________________________________________

batch_normalization_102 (BatchN (None, 56, 56, 512) 2048 conv2d_197[0][0]

__________________________________________________________________________________________________

add_28 (Add) (None, 56, 56, 512) 0 batch_normalization_101[0][0]

batch_normalization_102[0][0]

__________________________________________________________________________________________________

activation_92 (Activation) (None, 56, 56, 512) 0 add_28[0][0]

__________________________________________________________________________________________________

conv2d_198 (Conv2D) (None, 56, 56, 128) 65664 activation_92[0][0]

__________________________________________________________________________________________________

batch_normalization_103 (BatchN (None, 56, 56, 128) 512 conv2d_198[0][0]

__________________________________________________________________________________________________

activation_93 (Activation) (None, 56, 56, 128) 0 batch_normalization_103[0][0]

__________________________________________________________________________________________________

conv2d_199 (Conv2D) (None, 56, 56, 128) 147584 activation_93[0][0]

__________________________________________________________________________________________________

batch_normalization_104 (BatchN (None, 56, 56, 128) 512 conv2d_199[0][0]

__________________________________________________________________________________________________

activation_94 (Activation) (None, 56, 56, 128) 0 batch_normalization_104[0][0]

__________________________________________________________________________________________________

conv2d_200 (Conv2D) (None, 56, 56, 512) 66048 activation_94[0][0]

__________________________________________________________________________________________________

batch_normalization_105 (BatchN (None, 56, 56, 512) 2048 conv2d_200[0][0]

__________________________________________________________________________________________________

conv2d_201 (Conv2D) (None, 56, 56, 512) 262656 activation_92[0][0]

__________________________________________________________________________________________________

add_29 (Add) (None, 56, 56, 512) 0 batch_normalization_105[0][0]

conv2d_201[0][0]

__________________________________________________________________________________________________

activation_95 (Activation) (None, 56, 56, 512) 0 add_29[0][0]

__________________________________________________________________________________________________

conv2d_202 (Conv2D) (None, 56, 56, 128) 65664 activation_95[0][0]

__________________________________________________________________________________________________

batch_normalization_106 (BatchN (None, 56, 56, 128) 512 conv2d_202[0][0]

__________________________________________________________________________________________________

activation_96 (Activation) (None, 56, 56, 128) 0 batch_normalization_106[0][0]

__________________________________________________________________________________________________

conv2d_203 (Conv2D) (None, 56, 56, 128) 147584 activation_96[0][0]

__________________________________________________________________________________________________

batch_normalization_107 (BatchN (None, 56, 56, 128) 512 conv2d_203[0][0]

__________________________________________________________________________________________________

activation_97 (Activation) (None, 56, 56, 128) 0 batch_normalization_107[0][0]

__________________________________________________________________________________________________

conv2d_204 (Conv2D) (None, 56, 56, 512) 66048 activation_97[0][0]

__________________________________________________________________________________________________

batch_normalization_108 (BatchN (None, 56, 56, 512) 2048 conv2d_204[0][0]

__________________________________________________________________________________________________

conv2d_205 (Conv2D) (None, 56, 56, 512) 262656 activation_95[0][0]

__________________________________________________________________________________________________

add_30 (Add) (None, 56, 56, 512) 0 batch_normalization_108[0][0]

conv2d_205[0][0]

__________________________________________________________________________________________________

activation_98 (Activation) (None, 56, 56, 512) 0 add_30[0][0]

__________________________________________________________________________________________________

conv2d_206 (Conv2D) (None, 56, 56, 128) 65664 activation_98[0][0]

__________________________________________________________________________________________________

batch_normalization_109 (BatchN (None, 56, 56, 128) 512 conv2d_206[0][0]

__________________________________________________________________________________________________

activation_99 (Activation) (None, 56, 56, 128) 0 batch_normalization_109[0][0]

__________________________________________________________________________________________________

conv2d_207 (Conv2D) (None, 56, 56, 128) 147584 activation_99[0][0]

__________________________________________________________________________________________________

batch_normalization_110 (BatchN (None, 56, 56, 128) 512 conv2d_207[0][0]

__________________________________________________________________________________________________

activation_100 (Activation) (None, 56, 56, 128) 0 batch_normalization_110[0][0]

__________________________________________________________________________________________________

conv2d_208 (Conv2D) (None, 56, 56, 512) 66048 activation_100[0][0]

__________________________________________________________________________________________________

batch_normalization_111 (BatchN (None, 56, 56, 512) 2048 conv2d_208[0][0]

__________________________________________________________________________________________________

conv2d_209 (Conv2D) (None, 56, 56, 512) 262656 activation_98[0][0]

__________________________________________________________________________________________________

add_31 (Add) (None, 56, 56, 512) 0 batch_normalization_111[0][0]

conv2d_209[0][0]

__________________________________________________________________________________________________

activation_101 (Activation) (None, 56, 56, 512) 0 add_31[0][0]

__________________________________________________________________________________________________

conv2d_210 (Conv2D) (None, 28, 28, 256) 131328 activation_101[0][0]

__________________________________________________________________________________________________

batch_normalization_112 (BatchN (None, 28, 28, 256) 1024 conv2d_210[0][0]

__________________________________________________________________________________________________

activation_102 (Activation) (None, 28, 28, 256) 0 batch_normalization_112[0][0]

__________________________________________________________________________________________________

conv2d_211 (Conv2D) (None, 28, 28, 256) 590080 activation_102[0][0]

__________________________________________________________________________________________________

batch_normalization_113 (BatchN (None, 28, 28, 256) 1024 conv2d_211[0][0]

__________________________________________________________________________________________________

activation_103 (Activation) (None, 28, 28, 256) 0 batch_normalization_113[0][0]

__________________________________________________________________________________________________

conv2d_212 (Conv2D) (None, 28, 28, 1024) 263168 activation_103[0][0]

__________________________________________________________________________________________________

conv2d_213 (Conv2D) (None, 28, 28, 1024) 525312 activation_101[0][0]

__________________________________________________________________________________________________

batch_normalization_114 (BatchN (None, 28, 28, 1024) 4096 conv2d_212[0][0]

__________________________________________________________________________________________________

batch_normalization_115 (BatchN (None, 28, 28, 1024) 4096 conv2d_213[0][0]

__________________________________________________________________________________________________

add_32 (Add) (None, 28, 28, 1024) 0 batch_normalization_114[0][0]

batch_normalization_115[0][0]

__________________________________________________________________________________________________

activation_104 (Activation) (None, 28, 28, 1024) 0 add_32[0][0]

__________________________________________________________________________________________________

conv2d_214 (Conv2D) (None, 28, 28, 256) 262400 activation_104[0][0]

__________________________________________________________________________________________________

batch_normalization_116 (BatchN (None, 28, 28, 256) 1024 conv2d_214[0][0]

__________________________________________________________________________________________________

activation_105 (Activation) (None, 28, 28, 256) 0 batch_normalization_116[0][0]

__________________________________________________________________________________________________

conv2d_215 (Conv2D) (None, 28, 28, 256) 590080 activation_105[0][0]

__________________________________________________________________________________________________

batch_normalization_117 (BatchN (None, 28, 28, 256) 1024 conv2d_215[0][0]

__________________________________________________________________________________________________

activation_106 (Activation) (None, 28, 28, 256) 0 batch_normalization_117[0][0]

__________________________________________________________________________________________________

conv2d_216 (Conv2D) (None, 28, 28, 1024) 263168 activation_106[0][0]

__________________________________________________________________________________________________

batch_normalization_118 (BatchN (None, 28, 28, 1024) 4096 conv2d_216[0][0]

__________________________________________________________________________________________________

conv2d_217 (Conv2D) (None, 28, 28, 1024) 1049600 activation_104[0][0]

__________________________________________________________________________________________________

add_33 (Add) (None, 28, 28, 1024) 0 batch_normalization_118[0][0]

conv2d_217[0][0]

__________________________________________________________________________________________________

activation_107 (Activation) (None, 28, 28, 1024) 0 add_33[0][0]

__________________________________________________________________________________________________

conv2d_218 (Conv2D) (None, 28, 28, 256) 262400 activation_107[0][0]

__________________________________________________________________________________________________

batch_normalization_119 (BatchN (None, 28, 28, 256) 1024 conv2d_218[0][0]

__________________________________________________________________________________________________

activation_108 (Activation) (None, 28, 28, 256) 0 batch_normalization_119[0][0]

__________________________________________________________________________________________________

conv2d_219 (Conv2D) (None, 28, 28, 256) 590080 activation_108[0][0]

__________________________________________________________________________________________________

batch_normalization_120 (BatchN (None, 28, 28, 256) 1024 conv2d_219[0][0]

__________________________________________________________________________________________________

activation_109 (Activation) (None, 28, 28, 256) 0 batch_normalization_120[0][0]

__________________________________________________________________________________________________

conv2d_220 (Conv2D) (None, 28, 28, 1024) 263168 activation_109[0][0]

__________________________________________________________________________________________________

batch_normalization_121 (BatchN (None, 28, 28, 1024) 4096 conv2d_220[0][0]

__________________________________________________________________________________________________

conv2d_221 (Conv2D) (None, 28, 28, 1024) 1049600 activation_107[0][0]

__________________________________________________________________________________________________

add_34 (Add) (None, 28, 28, 1024) 0 batch_normalization_121[0][0]

conv2d_221[0][0]

__________________________________________________________________________________________________

activation_110 (Activation) (None, 28, 28, 1024) 0 add_34[0][0]

__________________________________________________________________________________________________

conv2d_222 (Conv2D) (None, 28, 28, 256) 262400 activation_110[0][0]

__________________________________________________________________________________________________

batch_normalization_122 (BatchN (None, 28, 28, 256) 1024 conv2d_222[0][0]

__________________________________________________________________________________________________

activation_111 (Activation) (None, 28, 28, 256) 0 batch_normalization_122[0][0]

__________________________________________________________________________________________________

conv2d_223 (Conv2D) (None, 28, 28, 256) 590080 activation_111[0][0]

__________________________________________________________________________________________________

batch_normalization_123 (BatchN (None, 28, 28, 256) 1024 conv2d_223[0][0]

__________________________________________________________________________________________________

activation_112 (Activation) (None, 28, 28, 256) 0 batch_normalization_123[0][0]

__________________________________________________________________________________________________

conv2d_224 (Conv2D) (None, 28, 28, 1024) 263168 activation_112[0][0]

__________________________________________________________________________________________________

batch_normalization_124 (BatchN (None, 28, 28, 1024) 4096 conv2d_224[0][0]

__________________________________________________________________________________________________

conv2d_225 (Conv2D) (None, 28, 28, 1024) 1049600 activation_110[0][0]

__________________________________________________________________________________________________

add_35 (Add) (None, 28, 28, 1024) 0 batch_normalization_124[0][0]

conv2d_225[0][0]

__________________________________________________________________________________________________

activation_113 (Activation) (None, 28, 28, 1024) 0 add_35[0][0]

__________________________________________________________________________________________________

conv2d_226 (Conv2D) (None, 28, 28, 256) 262400 activation_113[0][0]

__________________________________________________________________________________________________

batch_normalization_125 (BatchN (None, 28, 28, 256) 1024 conv2d_226[0][0]

__________________________________________________________________________________________________

activation_114 (Activation) (None, 28, 28, 256) 0 batch_normalization_125[0][0]

__________________________________________________________________________________________________

conv2d_227 (Conv2D) (None, 28, 28, 256) 590080 activation_114[0][0]

__________________________________________________________________________________________________

batch_normalization_126 (BatchN (None, 28, 28, 256) 1024 conv2d_227[0][0]

__________________________________________________________________________________________________

activation_115 (Activation) (None, 28, 28, 256) 0 batch_normalization_126[0][0]

__________________________________________________________________________________________________

conv2d_228 (Conv2D) (None, 28, 28, 1024) 263168 activation_115[0][0]

__________________________________________________________________________________________________

batch_normalization_127 (BatchN (None, 28, 28, 1024) 4096 conv2d_228[0][0]

__________________________________________________________________________________________________

conv2d_229 (Conv2D) (None, 28, 28, 1024) 1049600 activation_113[0][0]

__________________________________________________________________________________________________

add_36 (Add) (None, 28, 28, 1024) 0 batch_normalization_127[0][0]

conv2d_229[0][0]

__________________________________________________________________________________________________

activation_116 (Activation) (None, 28, 28, 1024) 0 add_36[0][0]

__________________________________________________________________________________________________

conv2d_230 (Conv2D) (None, 28, 28, 256) 262400 activation_116[0][0]

__________________________________________________________________________________________________

batch_normalization_128 (BatchN (None, 28, 28, 256) 1024 conv2d_230[0][0]

__________________________________________________________________________________________________

activation_117 (Activation) (None, 28, 28, 256) 0 batch_normalization_128[0][0]

__________________________________________________________________________________________________

conv2d_231 (Conv2D) (None, 28, 28, 256) 590080 activation_117[0][0]

__________________________________________________________________________________________________

batch_normalization_129 (BatchN (None, 28, 28, 256) 1024 conv2d_231[0][0]

__________________________________________________________________________________________________

activation_118 (Activation) (None, 28, 28, 256) 0 batch_normalization_129[0][0]

__________________________________________________________________________________________________

conv2d_232 (Conv2D) (None, 28, 28, 1024) 263168 activation_118[0][0]

__________________________________________________________________________________________________

batch_normalization_130 (BatchN (None, 28, 28, 1024) 4096 conv2d_232[0][0]

__________________________________________________________________________________________________

conv2d_233 (Conv2D) (None, 28, 28, 1024) 1049600 activation_116[0][0]

__________________________________________________________________________________________________

add_37 (Add) (None, 28, 28, 1024) 0 batch_normalization_130[0][0]

conv2d_233[0][0]

__________________________________________________________________________________________________

activation_119 (Activation) (None, 28, 28, 1024) 0 add_37[0][0]

__________________________________________________________________________________________________

conv2d_234 (Conv2D) (None, 14, 14, 512) 524800 activation_119[0][0]

__________________________________________________________________________________________________

batch_normalization_131 (BatchN (None, 14, 14, 512) 2048 conv2d_234[0][0]

__________________________________________________________________________________________________

activation_120 (Activation) (None, 14, 14, 512) 0 batch_normalization_131[0][0]

__________________________________________________________________________________________________

conv2d_235 (Conv2D) (None, 14, 14, 512) 2359808 activation_120[0][0]

__________________________________________________________________________________________________

batch_normalization_132 (BatchN (None, 14, 14, 512) 2048 conv2d_235[0][0]

__________________________________________________________________________________________________

activation_121 (Activation) (None, 14, 14, 512) 0 batch_normalization_132[0][0]

__________________________________________________________________________________________________

conv2d_236 (Conv2D) (None, 14, 14, 2048) 1050624 activation_121[0][0]

__________________________________________________________________________________________________

conv2d_237 (Conv2D) (None, 14, 14, 2048) 2099200 activation_119[0][0]

__________________________________________________________________________________________________

batch_normalization_133 (BatchN (None, 14, 14, 2048) 8192 conv2d_236[0][0]

__________________________________________________________________________________________________

batch_normalization_134 (BatchN (None, 14, 14, 2048) 8192 conv2d_237[0][0]

__________________________________________________________________________________________________

add_38 (Add) (None, 14, 14, 2048) 0 batch_normalization_133[0][0]

batch_normalization_134[0][0]

__________________________________________________________________________________________________

activation_122 (Activation) (None, 14, 14, 2048) 0 add_38[0][0]

__________________________________________________________________________________________________

conv2d_238 (Conv2D) (None, 14, 14, 512) 1049088 activation_122[0][0]

__________________________________________________________________________________________________

batch_normalization_135 (BatchN (None, 14, 14, 512) 2048 conv2d_238[0][0]

__________________________________________________________________________________________________

activation_123 (Activation) (None, 14, 14, 512) 0 batch_normalization_135[0][0]

__________________________________________________________________________________________________

conv2d_239 (Conv2D) (None, 14, 14, 512) 2359808 activation_123[0][0]

__________________________________________________________________________________________________

batch_normalization_136 (BatchN (None, 14, 14, 512) 2048 conv2d_239[0][0]

__________________________________________________________________________________________________

activation_124 (Activation) (None, 14, 14, 512) 0 batch_normalization_136[0][0]

__________________________________________________________________________________________________

conv2d_240 (Conv2D) (None, 14, 14, 2048) 1050624 activation_124[0][0]

__________________________________________________________________________________________________

batch_normalization_137 (BatchN (None, 14, 14, 2048) 8192 conv2d_240[0][0]

__________________________________________________________________________________________________

conv2d_241 (Conv2D) (None, 14, 14, 2048) 4196352 activation_122[0][0]

__________________________________________________________________________________________________

add_39 (Add) (None, 14, 14, 2048) 0 batch_normalization_137[0][0]

conv2d_241[0][0]

__________________________________________________________________________________________________

activation_125 (Activation) (None, 14, 14, 2048) 0 add_39[0][0]

__________________________________________________________________________________________________

conv2d_242 (Conv2D) (None, 14, 14, 512) 1049088 activation_125[0][0]

__________________________________________________________________________________________________

batch_normalization_138 (BatchN (None, 14, 14, 512) 2048 conv2d_242[0][0]

__________________________________________________________________________________________________

activation_126 (Activation) (None, 14, 14, 512) 0 batch_normalization_138[0][0]

__________________________________________________________________________________________________

conv2d_243 (Conv2D) (None, 14, 14, 512) 2359808 activation_126[0][0]

__________________________________________________________________________________________________

batch_normalization_139 (BatchN (None, 14, 14, 512) 2048 conv2d_243[0][0]

__________________________________________________________________________________________________

activation_127 (Activation) (None, 14, 14, 512) 0 batch_normalization_139[0][0]

__________________________________________________________________________________________________

conv2d_244 (Conv2D) (None, 14, 14, 2048) 1050624 activation_127[0][0]

__________________________________________________________________________________________________

batch_normalization_140 (BatchN (None, 14, 14, 2048) 8192 conv2d_244[0][0]

__________________________________________________________________________________________________

conv2d_245 (Conv2D) (None, 14, 14, 2048) 4196352 activation_125[0][0]

__________________________________________________________________________________________________

add_40 (Add) (None, 14, 14, 2048) 0 batch_normalization_140[0][0]

conv2d_245[0][0]

__________________________________________________________________________________________________

activation_128 (Activation) (None, 14, 14, 2048) 0 add_40[0][0]

__________________________________________________________________________________________________

global_average_pooling2d_1 (Glo (None, 2048) 0 activation_128[0][0]

__________________________________________________________________________________________________

flatten_6 (Flatten) (None, 2048) 0 global_average_pooling2d_1[0][0]

__________________________________________________________________________________________________

dense_16 (Dense) (None, 1000) 2049000 flatten_6[0][0]

==================================================================================================

Total params: 40,166,696

Trainable params: 40,114,216

Non-trainable params: 52,480

__________________________________________________________________________________________________
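You can check the Param # column by hand. The sketch below applies the standard per-layer formulas to a few rows copied from the summary: k_h * k_w * C_in * C_out + C_out for a Conv2D with bias, 4 * C for BatchNormalization, and n_in * n_out + n_out for Dense. It also applies the output-size formula floor((n + 2p - k) / s) + 1 to the 28x28 -> 14x14 step. The helper names (`conv2d_params`, `batchnorm_params`, `dense_params`, `conv_output_size`) are hypothetical and exist only for this illustration. The sketch assumes every convolution uses a bias (the Keras default) and that the downsampling shortcut uses stride 2, which is what the shapes imply.

```python
# Hand-check of the "Param #" column for a few rows of the summary above.
# Assumptions: Conv2D/Dense use a bias (Keras default), shortcut downsampling uses stride 2.

def conv2d_params(k_h, k_w, c_in, c_out, use_bias=True):
    """k_h * k_w * c_in weights per filter, c_out filters, plus one bias per filter."""
    return k_h * k_w * c_in * c_out + (c_out if use_bias else 0)

def batchnorm_params(channels):
    """gamma, beta, moving_mean and moving_variance: four values per channel."""
    return 4 * channels

def dense_params(n_in, n_out):
    """Weight matrix plus one bias per output unit."""
    return n_in * n_out + n_out

def conv_output_size(n, kernel, stride, padding=0):
    """Spatial size after a convolution: floor((n + 2*padding - kernel) / stride) + 1."""
    return (n + 2 * padding - kernel) // stride + 1

# (row from the summary, computed value, value reported by model.summary())
checks = [
    ("conv2d_211 3x3 256->256", conv2d_params(3, 3, 256, 256), 590080),
    ("conv2d_212 1x1 256->1024", conv2d_params(1, 1, 256, 1024), 263168),
    ("conv2d_217 1x1 1024->1024", conv2d_params(1, 1, 1024, 1024), 1049600),
    ("conv2d_235 3x3 512->512", conv2d_params(3, 3, 512, 512), 2359808),
    ("conv2d_237 1x1 1024->2048", conv2d_params(1, 1, 1024, 2048), 2099200),
    ("conv2d_241 1x1 2048->2048", conv2d_params(1, 1, 2048, 2048), 4196352),
    ("batch_normalization_114 (1024 ch)", batchnorm_params(1024), 4096),
    ("dense_16 2048->1000", dense_params(2048, 1000), 2049000),
]
for name, computed, reported in checks:
    status = "ok" if computed == reported else "MISMATCH"
    print(f"{name:36s} computed={computed:>10,d} reported={reported:>10,d} {status}")

# A 3x3 convolution with padding 1 keeps 28x28; the 1x1 stride-2 shortcut (conv2d_237) gives 14x14
print(conv_output_size(28, kernel=3, stride=1, padding=1))  # 28
print(conv_output_size(28, kernel=1, stride=2))             # 14
```

Every row should print `ok`. A mismatch would point to a layer configured differently from what its output shape suggests.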

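This ResNet50 has 40,114,216 trainable parameters in total. That is well above the roughly 25.6 million of the reference ResNet50. The main reason is that here the non-first blocks of a stage also pass their shortcut through a 1x1 convolution: conv2d_217, conv2d_221, conv2d_225, conv2d_229 and conv2d_233 in the 28x28 stage, and conv2d_241 and conv2d_245 in the 14x14 stage. The original ResNet uses an identity shortcut whenever the shapes already match. In these two stages alone, the extra convolutions add 13,640,704 parameters.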
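The total and trainable counts differ because of how Keras BatchNormalization stores its weights. gamma and beta are learned by backpropagation. moving_mean and moving_variance are only updated as running averages, so Keras reports them as non-trainable. The minimal sketch below assumes BatchNormalization is the only layer with non-trainable weights in this model. It checks the 52,480 difference and splits one bottleneck block of the 28x28 stage (the block ending in add_33) using the Param # values from the summary. `batchnorm_split` is a hypothetical helper, not a Keras function.

```python
# Trainable vs. non-trainable parameters as reported by model.summary().
total_params = 40_166_696
trainable_params = 40_114_216
non_trainable = total_params - trainable_params
print(non_trainable)  # 52480

def batchnorm_split(channels):
    """Keras BatchNormalization: gamma/beta are trained, moving mean/variance are not."""
    trainable = 2 * channels      # gamma, beta
    non_trainable = 2 * channels  # moving_mean, moving_variance
    return trainable, non_trainable

# Block ending in add_33: conv2d_214 -> BN(256) -> conv2d_215 -> BN(256)
# -> conv2d_216 -> BN(1024), shortcut conv2d_217 (no BN on the shortcut).
conv_params = 262_400 + 590_080 + 263_168 + 1_049_600
bn = [batchnorm_split(c) for c in (256, 256, 1024)]
block_trainable = conv_params + sum(t for t, _ in bn)
block_non_trainable = sum(n for _, n in bn)
print(block_trainable, block_non_trainable)  # 2168320 3072
```

The non-trainable statistics are still part of the saved model and are used at inference time. They are just not touched by the optimizer.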

# Training

my_resnet50 = get_ResNet50(10,(227,227,3))

my_resnet50.compile(optimizer=keras.optimizers.Adam(learning_rate=0.001),

loss='sparse_categorical_crossentropy', # sparse categorical cross-entropy: labels are integer class indices, not one-hot vectors

metrics=['accuracy'])

my_resnet50.fit(test_x,test_y,batch_size=1,epochs=1)
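The loss passed to `compile` is the sparse variant of categorical cross-entropy. The labels are integer class indices (0 to 9 for this 10-class model) rather than one-hot vectors. The per-sample loss is -log of the probability the softmax assigns to the true class. The small NumPy sketch below shows that computation and checks that it matches ordinary categorical cross-entropy on one-hot labels. The function names are illustrative and are not the Keras implementation, and the probabilities are made-up example values.

```python
import numpy as np

def sparse_categorical_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean of -log p[true class] for integer labels y_true and softmax outputs y_prob."""
    y_prob = np.clip(y_prob, eps, 1.0 - eps)             # avoid log(0)
    picked = y_prob[np.arange(len(y_true)), y_true]      # probability of each true class
    return float(np.mean(-np.log(picked)))

def categorical_crossentropy(y_onehot, y_prob, eps=1e-7):
    """Same loss, but with one-hot encoded labels."""
    y_prob = np.clip(y_prob, eps, 1.0 - eps)
    return float(np.mean(-np.sum(y_onehot * np.log(y_prob), axis=1)))

probs = np.array([[0.7, 0.2, 0.1],    # example softmax outputs for 2 samples, 3 classes
                  [0.1, 0.8, 0.1]])
labels = np.array([0, 1])             # integer labels, as sparse_categorical_crossentropy expects

print(sparse_categorical_crossentropy(labels, probs))
print(categorical_crossentropy(np.eye(3)[labels], probs))  # np.eye(3)[labels] one-hot encodes
```

Both calls print the same value, so the sparse form simply saves you from one-hot encoding the labels.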

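Comparing AlexNet, VGG16 and ResNet50, ResNet50 has by far the most layers, yet the fewest trainable parameters. This is mainly because it shrinks the final feature map with global average pooling and has only one fully connected layer. The classic AlexNet and VGG16 architectures instead feed a flattened feature map into several large fully connected layers.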
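A quick calculation with the shapes from the ResNet50 summary shows how much the global-average-pooling head saves. The Flatten + Dense variant below is hypothetical and is not part of the model above. It only shows what a VGG-style head would cost on the same 14x14x2048 feature map.

```python
# Classifier-head size for the final 14x14x2048 feature map and 1000 classes
# (shapes from the summary above).
h, w, c, classes = 14, 14, 2048, 1000

# Head used by the model: GlobalAveragePooling2D -> Dense(1000)
# (the Flatten after the pooling has no weights; its input is already a 2048-vector)
gap_head = c * classes + classes

# Hypothetical alternative, for comparison only: Flatten(14x14x2048) -> Dense(1000)
flatten_head = h * w * c * classes + classes

print(f"GAP + Dense:     {gap_head:,}")      # 2,049,000, same as dense_16
print(f"Flatten + Dense: {flatten_head:,}")  # 401,409,000
print(f"ratio: about {flatten_head / gap_head:.0f}x")
```

Averaging each channel over the 14x14 positions makes the Dense layer's size independent of the spatial resolution. A flattened head grows with h * w.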