chainer - Image Classification - VGG

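Contents

- Preface
- I. VGG Network Structure
- II. Code Implementation
  - 1. Import the required libraries
  - 2. Build the model
    - 1. Define some standard modules
    - 2. Build the VGG network
    - 3. Call it from the previously built classification framework
- III. Training Results
  - 1. vgg11 training results
- Summary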

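Preface

This article is meant to be used together with the image classification framework built in an earlier article, so it only covers building the model with chainer. It shows how to build the VGG network and its variants vgg11, vgg13, vgg16 and vgg19. Because some machines have little GPU memory, an alpha parameter is used to reduce the number of channels; in testing, the results were still fairly good.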

一、VGG网络结构

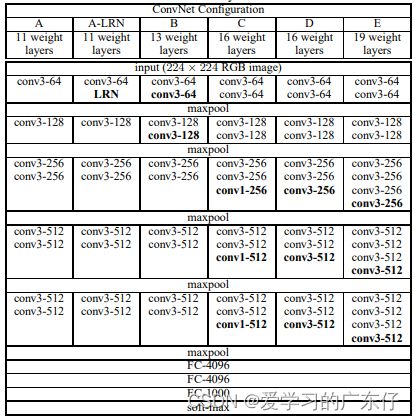

这里直接上VGG论文中的一张图:

本次实现的包括A、B、D、E分别对应vgg11,vgg13,vgg16,vgg19,我们对比下面这张图进行一块理解:

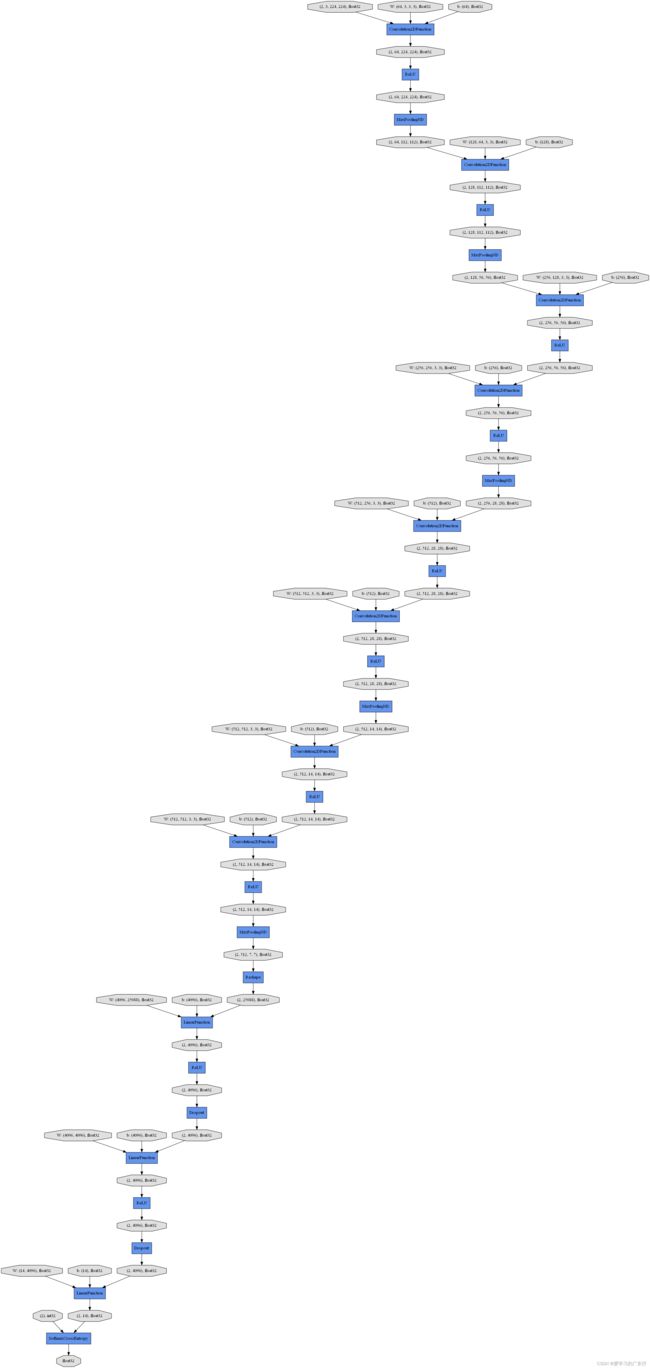

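This figure corresponds to configuration D. The white blocks are convolution + activation, the red blocks are pooling layers, and the blue blocks that follow are the flattening step, which can be understood as flattening the feature-map dimensions into a vector. The final output then goes through softmax, which is used on the output of almost every classification network. The code is therefore implemented block by block.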
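To make the softmax step concrete, here is a minimal sketch in plain Python, independent of chainer. The scores are made-up values for a hypothetical 3-class problem and do not come from the model in this article.

```python
import math

def softmax(logits):
    """Turn a list of raw class scores into probabilities that sum to 1."""
    # Subtract the maximum first so exp() cannot overflow; the result is unchanged.
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores for three classes (illustrative values only).
scores = [2.0, 1.0, 0.1]
probs = softmax(scores)

# The predicted class is the index with the highest probability.
predicted_class = probs.index(max(probs))
```

Softmax keeps the ordering of the scores, so the predicted class is the same whether it is read from the raw scores or from the probabilities.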

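As for the theory, including how a convolution is computed, there is already plenty of material online, so it is not repeated here. It is enough to understand how the height, width and number of channels change and to follow them through the network.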
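Following the height, width and channel count through the network can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: a 224x224 input, the configuration D block layout, 3x3 convolutions with stride 1 and padding 1, and 2x2 pooling with stride 2 that rounds partial windows up (which, to my understanding, is what chainer's max_pooling_2d does by default through cover_all=True). The helper names are hypothetical.

```python
def conv_out(size, ksize=3, stride=1, pad=1):
    """Output size of a convolution along one spatial axis."""
    return (size + 2 * pad - ksize) // stride + 1

def pool_out(size, ksize=2, stride=2):
    """Output size of pooling along one axis, rounding partial windows up."""
    return -(-(size - ksize) // stride) + 1

# Convolutions per block and output channels per block for configuration D (vgg16).
VGG16_CONVS = [2, 2, 3, 3, 3]
WIDTHS = [64, 128, 256, 512, 512]

def trace_shapes(h, w, convs_per_block=VGG16_CONVS, widths=WIDTHS):
    """Return (channels, height, width) after each pooling layer."""
    shapes = []
    for n_conv, ch in zip(convs_per_block, widths):
        for _ in range(n_conv):
            h, w = conv_out(h), conv_out(w)   # 3x3, pad 1: size is unchanged
        h, w = pool_out(h), pool_out(w)       # 2x2, stride 2: size is halved
        shapes.append((ch, h, w))
    return shapes

shapes = trace_shapes(224, 224)
channels, height, width = shapes[-1]
flattened = channels * height * width  # length of the vector fed to the first FC layer
```

The convolutions only change the channel count, while each pooling layer halves the spatial size, so five poolings reduce 224 to 7.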

II. Code Implementation

1. Import the required libraries

from chainer.functions import dropout, max_pooling_2d, relu, softmax
from chainer.initializers import constant, normal
from chainer.links import Linear, Convolution2D, DilatedConvolution2D
import chainer, sys
import numpy as np
from data.data_transform import resize
import chainer.links as L
import chainer.functions as F

2. Build the model

1. Define some standard modules

# Convolution + activation module
class Conv2DActiv(chainer.Chain):
    def __init__(self, in_channels, out_channels, ksize=None, stride=1, pad=0, nobias=False, initialW=None, initial_bias=None, activ=relu):
        # Allow the short form Conv2DActiv(out_channels, ksize): shift the
        # arguments and leave in_channels as None so it is inferred later.
        if ksize is None:
            out_channels, ksize, in_channels = in_channels, out_channels, None
        self.activ = activ
        super(Conv2DActiv, self).__init__()
        with self.init_scope():
            self.conv = Convolution2D(in_channels, out_channels, ksize, stride, pad, nobias, initialW, initial_bias)

    def forward(self, x):
        h = self.conv(x)
        return self.activ(h)

# Pooling module: 2x2 max pooling (the stride defaults to ksize)
def _max_pooling_2d(x):
    return max_pooling_2d(x, ksize=2)

2. Build the VGG network

vgg11, vgg13, vgg16 and vgg19 are all built by the same class; the layers parameter decides which network is constructed. The code is as follows:

class VGG(chainer.Chain):
    def __init__(self, n_class=None, layers=11, alpha=1, zeroInit=False, initial_bias=None):
        self.alpha = alpha
        if zeroInit:
            initialW = constant.Zero()
        else:
            initialW = normal.Normal(0.01)
        kwargs = {'initialW': initialW, 'initial_bias': initial_bias}
        self.layers = layers
        super(VGG, self).__init__()

        with self.init_scope():
            # Block 1
            self.conv1_1 = Conv2DActiv(None, 64//self.alpha, 3, 1, 1, **kwargs)
            if layers in [13,16,19]:
                self.conv1_2 = Conv2DActiv(None, 64//self.alpha, 3, 1, 1, **kwargs)
            self.pool1 = _max_pooling_2d

            # Block 2
            self.conv2_1 = Conv2DActiv(None, 128//self.alpha, 3, 1, 1, **kwargs)
            if layers in [13,16,19]:
                self.conv2_2 = Conv2DActiv(None, 128//self.alpha, 3, 1, 1, **kwargs)
            self.pool2 = _max_pooling_2d

            # Block 3
            self.conv3_1 = Conv2DActiv(None, 256//self.alpha, 3, 1, 1, **kwargs)
            self.conv3_2 = Conv2DActiv(None, 256//self.alpha, 3, 1, 1, **kwargs)
            if layers in [16,19]:
                self.conv3_3 = Conv2DActiv(None, 256//self.alpha, 3, 1, 1, **kwargs)
            if layers in [19]:
                self.conv3_4 = Conv2DActiv(None, 256//self.alpha, 3, 1, 1, **kwargs)
            self.pool3 = _max_pooling_2d

            # Block 4
            self.conv4_1 = Conv2DActiv(None, 512//self.alpha, 3, 1, 1, **kwargs)
            self.conv4_2 = Conv2DActiv(None, 512//self.alpha, 3, 1, 1, **kwargs)
            if layers in [16,19]:
                self.conv4_3 = Conv2DActiv(None, 512//self.alpha, 3, 1, 1, **kwargs)
            if layers in [19]:
                self.conv4_4 = Conv2DActiv(None, 512//self.alpha, 3, 1, 1, **kwargs)
            self.pool4 = _max_pooling_2d

            # Block 5
            self.conv5_1 = Conv2DActiv(None, 512//self.alpha, 3, 1, 1, **kwargs)
            self.conv5_2 = Conv2DActiv(None, 512//self.alpha, 3, 1, 1, **kwargs)
            if layers in [16,19]:
                self.conv5_3 = Conv2DActiv(None, 512//self.alpha, 3, 1, 1, **kwargs)
            if layers in [19]:
                self.conv5_4 = Conv2DActiv(None, 512//self.alpha, 3, 1, 1, **kwargs)
            self.pool5 = _max_pooling_2d

            # Fully connected layers
            self.fc6 = Linear(None, 4096//self.alpha, **kwargs)
            self.fc6_relu = relu
            self.fc6_dropout = dropout
            self.fc7 = Linear(None, 4096//self.alpha, **kwargs)
            self.fc7_relu = relu
            self.fc7_dropout = dropout
            self.fc8 = Linear(None, n_class, **kwargs)
            self.fc8_relu = relu
            self.prob = softmax

    def forward(self, x):
        x = self.conv1_1(x)
        if self.layers in [13,16,19]:
            x = self.conv1_2(x)
        x = self.pool1(x)

        x = self.conv2_1(x)
        if self.layers in [13,16,19]:
            x = self.conv2_2(x)
        x = self.pool2(x)

        x = self.conv3_1(x)
        x = self.conv3_2(x)
        if self.layers in [16,19]:
            x = self.conv3_3(x)
        if self.layers in [19]:
            x = self.conv3_4(x)
        x = self.pool3(x)

        x = self.conv4_1(x)
        x = self.conv4_2(x)
        if self.layers in [16,19]:
            x = self.conv4_3(x)
        if self.layers in [19]:
            x = self.conv4_4(x)
        x = self.pool4(x)

        x = self.conv5_1(x)
        x = self.conv5_2(x)
        if self.layers in [16,19]:
            x = self.conv5_3(x)
        if self.layers in [19]:
            x = self.conv5_4(x)
        x = self.pool5(x)

        x = self.fc6(x)
        x = self.fc6_relu(x)
        x = self.fc6_dropout(x)
        x = self.fc7(x)
        x = self.fc7_relu(x)
        x = self.fc7_dropout(x)
        x = self.fc8(x)
        # fc8_relu and prob are left disabled, so forward returns the raw fc8 scores.
        # x = self.fc8_relu(x)
        # x = self.prob(x)
        return x
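The `if layers in [...]` branches above amount to a small lookup table: how many convolutions each of the five blocks gets for a given depth. The sketch below restates that table in plain Python as a reading aid. `CONVS_PER_BLOCK` and `conv_layer_names` are hypothetical names introduced here and are not part of the class above.

```python
# Number of convolution layers in each of the five blocks, per variant,
# read off from the `if layers in [...]` branches in the VGG class.
CONVS_PER_BLOCK = {
    11: [1, 1, 2, 2, 2],
    13: [2, 2, 2, 2, 2],
    16: [2, 2, 3, 3, 3],
    19: [2, 2, 4, 4, 4],
}

def conv_layer_names(layers):
    """List the convolution attribute names that exist for a given depth."""
    names = []
    for block, n_conv in enumerate(CONVS_PER_BLOCK[layers], start=1):
        for i in range(1, n_conv + 1):
            names.append("conv%d_%d" % (block, i))
    return names

vgg11_names = conv_layer_names(11)

# The depth in the name counts weight layers: all convolutions plus fc6, fc7 and fc8.
depths = {k: len(conv_layer_names(k)) + 3 for k in CONVS_PER_BLOCK}
```

Counting the convolutions plus the three fully connected layers gives back 11, 13, 16 and 19, which is a quick way to check that the branches match the intended configurations.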

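The parameters need some explanation:

n_class: the number of classes

layers: selects the variant to build: 11, 13, 16 or 19 for vgg11, vgg13, vgg16 or vgg19

alpha: channel divisor. Every channel count is computed as the standard width // alpha, so increase it to suit the GPU memory of your own machine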
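To get a rough feel for how much alpha shrinks the model, the following plain-Python sketch counts weights and biases for a given setting. It rests on several assumptions that are not stated in the article: a 3-channel input whose side is a multiple of 32 (224 by default), 3x3 convolutions with bias, and an integer alpha. `count_parameters` is a hypothetical helper, not part of the framework.

```python
CONVS_PER_BLOCK = {
    11: [1, 1, 2, 2, 2],
    13: [2, 2, 2, 2, 2],
    16: [2, 2, 3, 3, 3],
    19: [2, 2, 4, 4, 4],
}
BASE_WIDTHS = [64, 128, 256, 512, 512]

def count_parameters(n_class, layers=11, alpha=1, in_channels=3, input_size=224):
    """Count the weights and biases of the VGG variant described above."""
    total = 0
    prev = in_channels
    for n_conv, base in zip(CONVS_PER_BLOCK[layers], BASE_WIDTHS):
        ch = base // alpha                      # same integer division as the class
        for _ in range(n_conv):
            total += prev * ch * 3 * 3 + ch     # 3x3 kernel weights plus bias
            prev = ch
    side = input_size // 32                     # five 2x2 poolings halve the size five times
    fc_width = 4096 // alpha
    total += prev * side * side * fc_width + fc_width   # fc6
    total += fc_width * fc_width + fc_width             # fc7
    total += fc_width * n_class + n_class               # fc8
    return total

full = count_parameters(n_class=14, layers=11, alpha=1)
reduced = count_parameters(n_class=14, layers=11, alpha=2)
ratio = full / reduced  # how many times smaller the alpha=2 model is
```

With `layers=16`, `alpha=1` and 1000 classes the function returns 138,357,544, the figure usually quoted for VGG16. Most layers have both their input and output widths divided by alpha, so the parameter count falls roughly with the square of alpha.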

3. Call it from the previously built classification framework

self.extractor = VGG(n_class=14, layers=11, alpha=1)
self.model = Classifier(self.extractor)
if self.gpu_devices >= 0:
    self.model.to_gpu()

III. Training Results

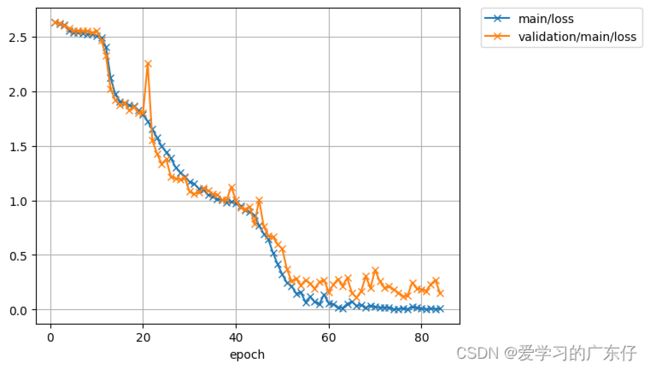

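1. vgg11 training results

The figure here shows that the results are quite reasonable. The figure below shows how the loss changes during training:

The final predictions of the model are shown in the figure:

In each file name, the first part is the predicted class and the second part is the ground-truth label. The two match one for one here, so the results look acceptable so far.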

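Summary

This article tested the VGG network and its variants of different depths and compared their results. To use it, simply replace the model in the image classification framework article.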