Installing a highly available k8s cluster on a LAN with kubeadm

Host list:

| IP | Hostname | Role | CPU | Memory |
| --- | --- | --- | --- | --- |
| 192.168.23.100 | k8smaster01 | master | 2 cores | 2G |
| 192.168.23.101 | k8smaster02 | master | 2 cores | 2G |
| 192.168.23.102 | k8smaster03 | master | 2 cores | 2G |

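1. Configure a local yum repository

Yum repository package:

Link: https://pan.baidu.com/s/1KAYWlw5Ky2ESUEZVsphQ0Q

Extraction code: 3ddo

Configure the local yum repository by copying yum.repo into the /etc/yum.repos.d/ directory.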

[root@k8smaster yum.repos.d]# more yum.repo

[soft]

name=base

baseurl=http://192.168.23.100/yum

gpgcheck=0

[root@k8smaster yum.repos.d]# scp yum.repo 192.168.23.102:/etc/yum.repos.d/

root@192.168.23.102's password:

yum.repo 100% 63 0.1KB/s 00:00

[root@k8smaster yum.repos.d]# scp yum.repo 192.168.23.101:/etc/yum.repos.d/

root@192.168.23.101's password:

yum.repo

2. Update /etc/hosts

[root@k8smaster yum.repos.d]# cat >> /etc/hosts << EOF

192.168.23.100 k8smaster01

192.168.23.101 k8smaster02

192.168.23.102 k8smaster03

> EOF

[root@k8smaster yum.repos.d]#
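Running the heredoc above a second time appends duplicate entries. The following is a minimal sketch of an idempotent variant. The helper name `add_host_entry` and its optional file argument are assumptions introduced here for illustration; they are not part of the original procedure.

```shell
# Hypothetical helper (not part of the original procedure): append "IP NAME"
# to a hosts file only when NAME is not already present on a non-comment line.
# The third argument defaults to /etc/hosts; pass another path to try it safely.
add_host_entry() {
    ip="$1"; name="$2"; file="${3:-/etc/hosts}"
    if [ -f "$file" ] && awk -v n="$name" '
        /^[ \t]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
        return 0    # entry already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For example, `add_host_entry 192.168.23.101 k8smaster02` could be run on every node without growing /etc/hosts on repeated runs.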

3. Install dependencies

yum install -y conntrack ntpdate ntp ipvsadm ipset iptables curl sysstat libseccomp wget vim net-tools git iproute lrzsz bash-completion tree bridge-utils unzip bind-utils gcc

4. Disable SELinux

setenforce 0 && sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

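5. Disable firewalld, switch to iptables, and start with an empty rule set

# Stop firewalld and disable it at boot

systemctl stop firewalld && systemctl disable firewalld

# Install iptables-services, start it, enable it at boot, flush the rules, and save the empty rule set as the default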

yum -y install iptables-services && systemctl start iptables && systemctl enable iptables && iptables -F && service iptables save

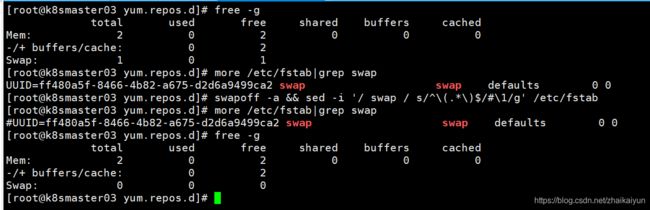

6. Disable swap

# Turn swap (virtual memory) off now and comment it out of /etc/fstab so it stays off after a reboot.

swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
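By default the kubelet refuses to start while swap is active, so it is worth confirming that the command above worked. A minimal sketch of such a check follows. The helper name `active_swap_count` is hypothetical, and the check assumes the usual /proc/swaps layout (one header line, then one line per active swap area).

```shell
# Hypothetical check: count active swap areas in a /proc/swaps-style file.
# The first line is a header, so it is skipped. Defaults to /proc/swaps.
active_swap_count() {
    awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "${1:-/proc/swaps}"
}
```

On a correctly prepared node, `active_swap_count` should print 0.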

7. Configure kernel parameters for k8s

cat > kubernetes.conf <<EOF

net.bridge.bridge-nf-call-iptables=1

# Enable bridge mode [important]

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

net.ipv4.tcp_tw_recycle=0

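# Avoid swapping; swap is used only when the system is about to run out of memory

vm.swappiness=0

# Do not check whether enough physical memory is available

vm.overcommit_memory=1

# Do not panic on OOM; let the OOM killer handle it

vm.panic_on_oom=0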

fs.inotify.max_user_instances=8192

fs.inotify.max_user_watches=1048576

fs.file-max=52706963

fs.nr_open=52706963

# Disable IPv6 [important]

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF

# Copy the tuning file into /etc/sysctl.d/ so it is applied at boot

cp kubernetes.conf /etc/sysctl.d/kubernetes.conf

# Reload manually so the settings take effect immediately

sysctl -p /etc/sysctl.d/kubernetes.conf
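`sysctl -p` can report errors for individual keys. For instance, on kernels where br_netfilter is a separate module, the net.bridge.* keys only exist once that module is loaded. The sketch below compares the file with the running kernel. Both helper names (`sysctl_pairs`, `check_sysctl_file`) are hypothetical and only illustrate one way to do the comparison.

```shell
# Hypothetical helper: print "key value" pairs from a sysctl.d-style file,
# skipping blank lines and lines that start with '#'.
sysctl_pairs() {
    awk -F= '
        /^[ \t]*(#|$)/ { next }
        {
            k = $1; v = $2
            gsub(/[ \t]/, "", k)              # keys contain no whitespace
            gsub(/^[ \t]+|[ \t]+$/, "", v)    # trim the value
            print k, v
        }' "$1"
}

# Hypothetical helper: report every key whose running value differs from the file.
check_sysctl_file() {
    sysctl_pairs "$1" | while read -r key want; do
        have=$(sysctl -n "$key" 2>/dev/null)
        [ "$have" = "$want" ] || echo "MISMATCH $key: want=$want have=${have:-<missing>}"
    done
}
```

Running `check_sysctl_file /etc/sysctl.d/kubernetes.conf` prints nothing when every value is in effect.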

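8. Set the system time zone

# Set the system time zone to Asia/Shanghai

timedatectl set-timezone Asia/Shanghai

# Write the current UTC time to the hardware clock

timedatectl set-local-rtc 0

# Restart services that depend on the system time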

systemctl restart rsyslog

systemctl restart crond

9. Stop services the system does not need

# Stop and disable the mail service

systemctl stop postfix && systemctl disable postfix

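10. Configure log storage

Since CentOS 7 the init system is systemd, so two logging systems run side by side: rsyslogd (the default) and systemd-journald.

systemd-journald is the better choice here, so we make it the only one that persists logs.

1) Create the directory that stores the logs

mkdir /var/log/journal

2) Create the directory for the configuration file

mkdir /etc/systemd/journald.conf.d

3) Create the configuration file

cat > /etc/systemd/journald.conf.d/99-prophet.conf <<EOF

[Journal]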

# Persist logs to disk

Storage=persistent

# Compress archived logs

Compress=yes

SyncIntervalSec=5m

RateLimitInterval=30s

RateLimitBurst=1000

# Use at most 10G of disk space

SystemMaxUse=10G

# Limit each journal file to 200M

SystemMaxFileSize=200M

# Keep logs for 2 weeks

MaxRetentionSec=2week

# Do not forward logs to syslog

ForwardToSyslog=no

EOF

4) Restart systemd-journald to apply the configuration

systemctl restart systemd-journald

11. Raise the open-file limit

echo "* soft nofile 65536" >> /etc/security/limits.conf

echo "* hard nofile 65536" >> /etc/security/limits.conf

12. Upgrade the Linux kernel to 4.4

[root@k8smaster yum.repos.d]# yum install kernel-lt.x86_64 -y    # installs 4.4.213-1.el7.elrepo

[root@k8smaster yum.repos.d]# awk -F\' '$1=="menuentry " {print $2}' /etc/grub2.cfg

CentOS Linux (4.4.213-1.el7.elrepo.x86_64) 7 (Core)

CentOS Linux, with Linux 3.10.0-123.el7.x86_64

CentOS Linux, with Linux 0-rescue-b7478dd50b1d41a5836a6a670b5cd8c1

[root@k8smaster yum.repos.d]# grub2-set-default 'CentOS Linux (4.4.213-1.el7.elrepo.x86_64) 7 (Core)'

Reboot so that the new default kernel takes effect, then confirm the running version:

[root@k8snode01 ~]# uname -a

Linux k8snode01 4.4.213-1.el7.elrepo.x86_64 #1 SMP Wed Feb 5 10:44:50 EST 2020 x86_64 x86_64 x86_64 GNU/Linux
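Once the node is running the new kernel, the version can also be checked in a script rather than by eye. This is a minimal sketch that relies on GNU `sort -V`. The helper names `version_ge` and `kernel_base_version` are hypothetical.

```shell
# Hypothetical helper: succeed if version $1 is greater than or equal to version $2.
# Relies on GNU sort -V (version sort) putting the smaller version first.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Hypothetical helper: strip the release suffix from a kernel release string,
# e.g. 4.4.213-1.el7.elrepo.x86_64 -> 4.4.213
kernel_base_version() {
    printf '%s\n' "${1%%-*}"
}
```

A possible use is `version_ge "$(kernel_base_version "$(uname -r)")" 4.4 || echo "kernel too old"`.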

13. Disable NUMA

NUMA is relevant here mainly because of its interaction with swap.

[root@k8smaster01 default]# cp /etc/default/grub{,.bak}

[root@k8smaster01 default]# vi /etc/default/grub

GRUB_TIMEOUT=5

GRUB_DISTRIBUTOR="$(sed 's, release .*$,,g' /etc/system-release)"

GRUB_DEFAULT=saved

GRUB_DISABLE_SUBMENU=true

GRUB_TERMINAL_OUTPUT="console"

GRUB_CMDLINE_LINUX="vconsole.keymap=us crashkernel=auto vconsole.font=latarcyrheb-sun16 rhgb quiet numa=off"

GRUB_DISABLE_RECOVERY="true"

[root@k8smaster01 default]# cp /boot/grub2/grub.cfg{,.bak}

[root@k8smaster01 default]# grub2-mkconfig -o /boot/grub2/grub.cfg # regenerate the grub2 configuration file

Generating grub configuration file ...

Found linux image: /boot/vmlinuz-4.4.213-1.el7.elrepo.x86_64

Found initrd image: /boot/initramfs-4.4.213-1.el7.elrepo.x86_64.img

Found linux image: /boot/vmlinuz-3.10.0-123.el7.x86_64

Found initrd image: /boot/initramfs-3.10.0-123.el7.x86_64.img

Found linux image: /boot/vmlinuz-0-rescue-b7478dd50b1d41a5836a6a670b5cd8c1

Found initrd image: /boot/initramfs-0-rescue-b7478dd50b1d41a5836a6a670b5cd8c1.img

done

[root@k8smaster01 default]#

14. Prerequisites for enabling IPVS in kube-proxy

modprobe br_netfilter # load the br_netfilter module

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules

bash /etc/sysconfig/modules/ipvs.modules

lsmod | grep -e ip_vs -e nf_conntrack # use lsmod to confirm that the modules are loaded
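The lsmod output has to be read by eye. A minimal sketch of a check that names the missing modules explicitly is shown below. The helper name `missing_modules` is hypothetical, and the check only sees loadable modules: anything compiled into the kernel does not appear in /proc/modules.

```shell
# Hypothetical check: print each requested kernel module that is not loaded.
# The first argument is a /proc/modules-style listing (module name in column 1);
# the remaining arguments are the module names to look for.
missing_modules() {
    listing="$1"; shift
    for mod in "$@"; do
        awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$listing" || echo "$mod"
    done
}
```

For this guide the call would be `missing_modules /proc/modules br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack`, which prints nothing when everything is loaded.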

15. Install Docker

Install the dependencies:

yum install yum-utils device-mapper-persistent-data lvm2 -y

yum install -y docker-ce # install Docker

Create the /etc/docker directory

[ ! -d /etc/docker ] && mkdir /etc/docker

Configure the daemon

cat > /etc/docker/daemon.json <<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

}

}

EOF

Edit the docker.service file

/usr/lib/systemd/system/docker.service

ExecStart=/usr/bin/dockerd -H fd:// --insecure-registry 0.0.0.0/0 -H unix:///var/run/docker.sock -H tcp://0.0.0.0:2375 --containerd=/run/containerd/containerd.sock

# Restart the Docker service

systemctl daemon-reload && systemctl restart docker && systemctl enable docker

16. Set up the private registry and load the images

docker run -d -p 5000:5000 --restart=always --name private-docker-registry --privileged=true -v /data/registry:/var/lib/registry 192.168.23.100:5000/registry:v2

flannel network image package

Link: https://pan.baidu.com/s/1-DYxDoU2X85aobaGFclKfA

Extraction code: nson

k8s base image package

Link: https://pan.baidu.com/s/17uV90VPXqoaezwccpTj2GQ

Extraction code: 13t3

Load the images

[root@k8smaster k8s_image]# more load_image.sh

#!/bin/bash

ls /home/zhaiky/k8s_image|grep -v load > /tmp/image-list.txt

cd /home/zhaiky/k8s_image

for i in $( cat /tmp/image-list.txt )

do

docker load -i $i

done

rm -rf /tmp/image-list.txt

Push the images to the private registry

docker push 192.168.23.100:5000/kube-apiserver:v1.15.1

docker push 192.168.23.100:5000/kube-proxy:v1.15.1

docker push 192.168.23.100:5000/kube-controller-manager:v1.15.1

docker push 192.168.23.100:5000/kube-scheduler:v1.15.1

docker push 192.168.23.100:5000/registry:v1

docker push 192.168.23.100:5000/coreos/flannel:v0.11.0-s390x

docker push 192.168.23.100:5000/coreos/flannel:v0.11.0-ppc64le

docker push 192.168.23.100:5000/coreos/flannel:v0.11.0-arm64

docker push 192.168.23.100:5000/coreos/flannel:v0.11.0-arm

docker push 192.168.23.100:5000/coreos/flannel:v0.11.0-amd64

docker push 192.168.23.100:5000/coredns:1.3.1

docker push 192.168.23.100:5000/etcd:3.3.10

docker push 192.168.23.100:5000/pause:3.1
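The push commands above differ only in the image name, so they can be driven from a list. The sketch below is one way to do that. The function name `push_images` and the `REGISTRY` and `DRY_RUN` variables are assumptions introduced for illustration, and it presumes the images are already tagged with the registry prefix, as the commands above imply.

```shell
# Hypothetical helper: push every named image to the private registry.
# Set DRY_RUN=1 to print the docker commands instead of running them.
REGISTRY="${REGISTRY:-192.168.23.100:5000}"

push_images() {
    for image in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "docker push $REGISTRY/$image"
        else
            # Stop at the first failed push so the problem is easy to spot.
            docker push "$REGISTRY/$image" || return 1
        fi
    done
}
```

A call such as `push_images kube-apiserver:v1.15.1 kube-proxy:v1.15.1 coredns:1.3.1 etcd:3.3.10 pause:3.1` would cover part of the list above; running it first with `DRY_RUN=1` shows what would be pushed.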

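17. Install HAProxy + Keepalived

A Kubernetes master node runs the following components:

kube-apiserver: the single entry point for all resource operations; it also provides authentication, authorization, access control, and API registration and discovery

kube-scheduler: schedules resources, placing Pods on suitable machines according to the configured scheduling policy

kube-controller-manager: maintains cluster state, for example failure detection, autoscaling, and rolling updates

etcd: a distributed key-value store developed by CoreOS on top of Raft; it can be used for service discovery, shared configuration, and consistency guarantees (such as database leader election and distributed locks)

kube-scheduler and kube-controller-manager can run as multiple replicas: leader election picks one working process and the others stay on standby.

kube-apiserver can run as multiple instances, but the other components need a single address to reach it. HAProxy + Keepalived provides that address.

The idea is to expose kube-apiserver behind a virtual IP managed by HAProxy + Keepalived, which gives both high availability and load balancing:

1) Keepalived provides the virtual IP (VIP) through which kube-apiserver is served

2) HAProxy listens on the Keepalived VIP

3) Nodes that run Keepalived and HAProxy are called LB (load balancer) nodes

4) Keepalived runs with one master and several backups, so at least two LB nodes are required

While running, Keepalived periodically checks the local HAProxy process. If it finds HAProxy unhealthy, it triggers a new master election and the VIP moves to the newly elected master, which keeps the VIP highly available.

All components (kubectl, apiserver, controller-manager, scheduler, and so on) reach kube-apiserver through the VIP on port 6444, where HAProxy listens. (Note: the default kube-apiserver port is 6443. To avoid a conflict, HAProxy is set to listen on 6444, and every other component sends its apiserver requests to that port.)

Create the HAProxy start script

Run this step on k8smaster01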

[root@k8smaster01 k8s]# mkdir -p /root/k8s/lb

[root@k8smaster01 k8s]# vi /root/k8s/lb/start-haproxy.sh

[root@k8smaster01 k8s]# cd lb/

[root@k8smaster01 lb]# chmod +x start-haproxy.sh

[root@k8smaster01 lb]# more start-haproxy.sh

#!/bin/bash

# Change these to your own master addresses

MasterIP1=192.168.23.100

MasterIP2=192.168.23.101

MasterIP3=192.168.23.102

# Default kube-apiserver port; no need to change it

MasterPort=6443

# The container exposes HAProxy on port 6444

docker run -d --restart=always --name HAProxy-K8S -p 6444:6444 \

-e MasterIP1=$MasterIP1 \

-e MasterIP2=$MasterIP2 \

-e MasterIP3=$MasterIP3 \

-e MasterPort=$MasterPort \

wise2c/haproxy-k8s

[root@k8smaster01 lb]#

Create the Keepalived start script

Run this step on k8smaster01

[root@k8smaster01 lb]# more start-keepalived.sh

#!/bin/bash

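# Change this to your own virtual IP address

VIRTUAL_IP=192.168.23.200

# Name of the network interface that carries the virtual IP

INTERFACE=ens33

# Netmask length of the virtual IP

NETMASK_BIT=24

# Port exposed by HAProxy, which forwards to kube-apiserver on port 6443

CHECK_PORT=6444

# Router ID

RID=10

# Virtual router ID

VRID=160

# IPv4 multicast address, default 224.0.0.18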

MCAST_GROUP=224.0.0.18

docker run -itd --restart=always --name=Keepalived-K8S \

--net=host --cap-add=NET_ADMIN \

-e VIRTUAL_IP=$VIRTUAL_IP \

-e INTERFACE=$INTERFACE \

-e CHECK_PORT=$CHECK_PORT \

-e RID=$RID \

-e VRID=$VRID \

-e NETMASK_BIT=$NETMASK_BIT \

-e MCAST_GROUP=$MCAST_GROUP \

wise2c/keepalived-k8s

[root@k8smaster01 lb]# chmod +x start-keepalived.sh

[root@k8smaster01 lb]#

Copy the scripts to the other masters

Create the working directory on k8smaster02 and k8smaster03

mkdir -p /root/k8s/lb

Copy the scripts from k8smaster01 to the other masters

[root@k8smaster01 lb]# scp *sh root@192.168.23.101:/root/k8s/lb/

root@192.168.23.101's password:

start-haproxy.sh 100% 463 0.5KB/s 00:00

start-keepalived.sh 100% 718 0.7KB/s 00:00

[root@k8smaster01 lb]# scp *sh root@192.168.23.102:/root/k8s/lb/

root@192.168.23.102's password:

start-haproxy.sh 100% 463 0.5KB/s 00:00

start-keepalived.sh 100% 718 0.7KB/s 00:00

[root@k8smaster01 lb]#

Start the containers on each of the three masters (run the scripts)

sh /root/k8s/lb/start-haproxy.sh && sh /root/k8s/lb/start-keepalived.sh

Verify the result

List the containers

[root@k8smaster01 zhaiky]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

fe02201d21da wise2c/keepalived-k8s "/usr/bin/keepalived…" 12 minutes ago Up 12 minutes Keepalived-K8S

ae6353789107 wise2c/haproxy-k8s "/docker-entrypoint.…" 12 minutes ago Up 12 minutes 0.0.0.0:6444->6444/tcp HAProxy-K8S

[root@k8smaster01 zhaiky]#

# Check the virtual IP bound to the network interface

2: ens33:

inet 192.168.141.151/24 brd 192.168.141.255 scope global ens33

inet 192.168.23.200/24 scope global secondary ens33

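Important: Keepalived probes port 6444, where HAProxy listens. If the probe fails, it treats the local HAProxy process as unhealthy and moves the VIP to another node. A failure of either the local Keepalived container or the local HAProxy container therefore moves the VIP to another node.

[root@k8smaster01 lb]# ip addr del 192.168.23.200/24 dev ens33 # if the VIP is not released, remove it manually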
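When testing failover it helps to know which node currently holds the VIP. The sketch below parses the one-line-per-address output of `ip -o -4 addr show`, where the fourth field is the address with its prefix length. The helper name `has_vip` is hypothetical.

```shell
# Hypothetical check: succeed if the given VIP appears in `ip -o -4 addr show`
# output supplied on stdin. Field 4 of each line has the form ADDRESS/PREFIX.
has_vip() {
    awk -v v="$1" '
        { split($4, a, "/"); if (a[1] == v) found = 1 }
        END { exit !found }'
}
```

Running `ip -o -4 addr show dev ens33 | has_vip 192.168.23.200 && echo "VIP is on $(hostname)"` on each master, before and after stopping the HAProxy container on the current holder, shows whether the VIP moved.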

bash-4.4# cd /etc/keepalived/

bash-4.4# more keepalived.conf

global_defs {

router_id 10

vrrp_version 2

vrrp_garp_master_delay 1

}

vrrp_script chk_haproxy {

script "/bin/busybox nc -v -w 2 -z 127.0.0.1 6444 2>&1 | grep open | grep 6444"

timeout 1

interval 1 # check every 1 second

fall 2 # require 2 failures for KO

rise 2 # require 2 successes for OK

}

vrrp_instance lb-vips {

state BACKUP

interface ens33

virtual_router_id 160

priority 100

advert_int 1

nopreempt

track_script {

chk_haproxy

}

authentication {

auth_type PASS

auth_pass blahblah

}

virtual_ipaddress {

192.168.23.200/24 dev ens33

}

}

bash-4.4#

root@fa6f8f258a8b:/usr/local/etc/haproxy# more haproxy.cfg

global

log 127.0.0.1 local0

log 127.0.0.1 local1 notice

maxconn 4096

#chroot /usr/share/haproxy

#user haproxy

#group haproxy

daemon

defaults

log global

mode http

option httplog

option dontlognull

retries 3

option redispatch

timeout connect 5000

timeout client 50000

timeout server 50000

frontend stats-front

bind *:8081

mode http

default_backend stats-back

frontend fe_k8s_6444

bind *:6444

mode tcp

timeout client 1h

log global

option tcplog

default_backend be_k8s_6443

acl is_websocket hdr(Upgrade) -i WebSocket

acl is_websocket hdr_beg(Host) -i ws

backend stats-back

mode http

balance roundrobin

stats uri /haproxy/stats

stats auth pxcstats:secret

backend be_k8s_6443

mode tcp

timeout queue 1h

timeout server 1h

timeout connect 1h

log global

balance roundrobin

server rancher01 192.168.23.100:6443

server rancher02 192.168.23.101:6443

server rancher03 192.168.23.102:6443

root@fa6f8f258a8b:/usr/local/etc/haproxy#

18. Install kubeadm, kubelet, and kubectl

yum install -y kubeadm-1.15.1 kubelet-1.15.1 kubectl-1.15.1

systemctl enable kubelet && systemctl start kubelet

19. Enable kubectl command completion

# Install and configure bash-completion

yum install -y bash-completion

echo 'source /usr/share/bash-completion/bash_completion' >> /etc/profile

source /etc/profile

echo "source <(kubectl completion bash)" >> ~/.bashrc

source ~/.bashrc

20. Initialize the master

The configuration package contains both kubeadm-config.yaml and kube-flannel.yml

Link: https://pan.baidu.com/s/1g0G7Ion0n6lERpluNjh_9A

Extraction code: 6pxt

kubeadm config print init-defaults > kubeadm-config.yaml

[root@k8smaster01 ~]# cp /home/zhaiky/kubeadm-config.yaml .

[root@k8smaster01 install]# more kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta2

bootstrapTokens:

- groups:

- system:bootstrappers:kubeadm:default-node-token

token: abcdef.0123456789abcdef

ttl: 24h0m0s

usages:

- signing

- authentication

kind: InitConfiguration

localAPIEndpoint:

advertiseAddress: 192.168.23.100

bindPort: 6443

nodeRegistration:

criSocket: /var/run/dockershim.sock

name: k8smaster01

taints:

- effect: NoSchedule

key: node-role.kubernetes.io/master

---

apiServer:

timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controlPlaneEndpoint: "192.168.23.200:6444"

controllerManager: {}

dns:

type: CoreDNS

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: 192.168.23.100:5000

kind: ClusterConfiguration

kubernetesVersion: v1.15.1

networking:

dnsDomain: cluster.local

podSubnet: 10.244.0.0/16

serviceSubnet: 10.96.0.0/12

scheduler: {}

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

featureGates:

SupportIPVSProxyMode: true

mode: ipvs

[root@k8smaster01 install]# kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log

Installation log

[root@k8smaster01 install]# more kubeadm-init.log

[init] Using Kubernetes version: v1.15.1

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8smaster01 localhost] and IPs [192.168.23.100 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8smaster01 localhost] and IPs [192.168.23.100 127.0.0.1 ::1]

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8smaster01 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.23.100 192.168.23.200]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "admin.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 27.013105 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.15" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

cbf3262f383a5fb7633929e254d1956b5f2f3f1afc542a5a205b14e8e760e2d5

[mark-control-plane] Marking the node k8smaster01 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8smaster01 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 192.168.23.200:6444 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:744391df4d7dd8ea7c05c40735c0af1fe33a18dd78bff962e9da5992a33f75b1 \

--control-plane --certificate-key cbf3262f383a5fb7633929e254d1956b5f2f3f1afc542a5a205b14e8e760e2d5

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.23.200:6444 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:744391df4d7dd8ea7c05c40735c0af1fe33a18dd78bff962e9da5992a33f75b1

[root@k8smaster01 install]#
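The log above notes that the uploaded certificates are deleted after two hours, and the bootstrap token in kubeadm-config.yaml has a 24-hour TTL, so the printed join command stops working for masters added later. The sketch below builds a fresh one from two kubeadm commands: `kubeadm init phase upload-certs --upload-certs` (named in the log above) and `kubeadm token create --print-join-command`. The function name is hypothetical, and the sketch assumes the certificate key is the last line of the upload-certs output, as it is in the log above.

```shell
# Hypothetical helper: print a fresh control-plane join command.
# Intended to run as root on an existing control-plane node.
print_control_plane_join() {
    # Re-upload the certificates; the new certificate key is taken from the last output line.
    cert_key=$(kubeadm init phase upload-certs --upload-certs | tail -n 1)
    [ -n "$cert_key" ] || return 1
    # Create a new bootstrap token and get the matching worker join command.
    join_cmd=$(kubeadm token create --print-join-command) || return 1
    # Adding these two flags turns the worker join into a control-plane join.
    echo "$join_cmd --control-plane --certificate-key $cert_key"
}
```

Without the two extra flags, the output of `kubeadm token create --print-join-command` alone is the command for joining a worker node.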

[root@k8smaster03 ~]# kubeadm join 192.168.23.200:6444 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:744391df4d7dd8ea7c05c40735c0af1fe33a18dd78bff962e9da5992a33f75b1 \

> --control-plane --certificate-key cbf3262f383a5fb7633929e254d1956b5f2f3f1afc542a5a205b14e8e760e2d5

[preflight] Running pre-flight checks

[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 19.03.5. Latest validated version: 18.09

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8smaster03 localhost] and IPs [192.168.23.102 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8smaster03 localhost] and IPs [192.168.23.102 127.0.0.1 ::1]

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8smaster03 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.23.102 192.168.23.200]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[certs] Using the existing "sa" key

[kubeconfig] Generating kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[check-etcd] Checking that the etcd cluster is healthy

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.15" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[etcd] Announced new etcd member joining to the existing etcd cluster

[etcd] Wrote Static Pod manifest for a local etcd member to "/etc/kubernetes/manifests/etcd.yaml"

[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[mark-control-plane] Marking the node k8smaster03 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8smaster03 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

[root@k8smaster01 install]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster01 NotReady master 5m48s v1.15.1

k8smaster02 NotReady master 4m16s v1.15.1

k8smaster03 NotReady master 3m42s v1.15.1

[root@k8smaster01 install]# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

[root@k8smaster01 install]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-75b6d67b6d-dqxwb 0/1 Pending 0 6m59s

coredns-75b6d67b6d-grqjp 0/1 Pending 0 6m59s

etcd-k8smaster01 1/1 Running 0 6m15s

etcd-k8smaster02 1/1 Running 0 4m14s

etcd-k8smaster03 1/1 Running 0 5m11s

kube-apiserver-k8smaster01 1/1 Running 0 6m3s

kube-apiserver-k8smaster02 1/1 Running 0 4m24s

kube-apiserver-k8smaster03 1/1 Running 0 5m12s

kube-controller-manager-k8smaster01 1/1 Running 0 6m5s

kube-controller-manager-k8smaster02 1/1 Running 0 4m32s

kube-controller-manager-k8smaster03 1/1 Running 0 5m12s

kube-proxy-jwzmp 1/1 Running 0 5m12s

kube-proxy-jzftf 1/1 Running 0 6m59s

kube-proxy-rwmmf 1/1 Running 0 5m46s

kube-scheduler-k8smaster01 1/1 Running 0 6m15s

kube-scheduler-k8smaster02 1/1 Running 0 4m31s

kube-scheduler-k8smaster03 1/1 Running 0 5m12s

[root@k8smaster01 install]#

21. Install the flannel network plugin

[root@k8smaster01 install]# kubectl create -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

[root@k8smaster01 install]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster01 Ready master 15m v1.15.1

k8smaster02 Ready master 14m v1.15.1

k8smaster03 Ready master 13m v1.15.1

[root@k8smaster01 install]# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-75b6d67b6d-dqxwb 1/1 Running 0 15m 10.244.0.3 k8smaster01

coredns-75b6d67b6d-grqjp 1/1 Running 0 15m 10.244.0.2 k8smaster01

etcd-k8smaster01 1/1 Running 0 14m 192.168.23.100 k8smaster01

etcd-k8smaster02 1/1 Running 0 12m 192.168.23.101 k8smaster02

etcd-k8smaster03 1/1 Running 0 13m 192.168.23.102 k8smaster03

kube-apiserver-k8smaster01 1/1 Running 0 14m 192.168.23.100 k8smaster01

kube-apiserver-k8smaster02 1/1 Running 0 13m 192.168.23.101 k8smaster02

kube-apiserver-k8smaster03 1/1 Running 0 13m 192.168.23.102 k8smaster03

kube-controller-manager-k8smaster01 1/1 Running 0 14m 192.168.23.100 k8smaster01

kube-controller-manager-k8smaster02 1/1 Running 0 13m 192.168.23.101 k8smaster02

kube-controller-manager-k8smaster03 1/1 Running 0 13m 192.168.23.102 k8smaster03

kube-flannel-ds-amd64-4gcxh 1/1 Running 0 61s 192.168.23.101 k8smaster02

kube-flannel-ds-amd64-s2css 1/1 Running 0 61s 192.168.23.102 k8smaster03

kube-flannel-ds-amd64-srml4 1/1 Running 0 61s 192.168.23.100 k8smaster01

kube-proxy-jwzmp 1/1 Running 0 13m 192.168.23.102 k8smaster03

kube-proxy-jzftf 1/1 Running 0 15m 192.168.23.100 k8smaster01

kube-proxy-rwmmf 1/1 Running 0 14m 192.168.23.101 k8smaster02

kube-scheduler-k8smaster01 1/1 Running 0 14m 192.168.23.100 k8smaster01

kube-scheduler-k8smaster02 1/1 Running 0 13m 192.168.23.101 k8smaster02

kube-scheduler-k8smaster03 1/1 Running 0 13m 192.168.23.102 k8smaster03

[root@k8smaster01 install]#
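The nodes stay NotReady and the coredns Pods stay Pending until a pod network is deployed, and they take a short while to recover after flannel is applied. A minimal sketch of a wait loop follows. Both function names are hypothetical, and the check deliberately treats any status other than exactly `Ready` (including `Ready,SchedulingDisabled`) as not ready.

```shell
# Hypothetical helper: from `kubectl get nodes --no-headers` output on stdin,
# print the name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Hypothetical helper: poll until all nodes are Ready.
# The optional first argument is the number of attempts (default 30, 10 s apart).
wait_for_nodes() {
    tries="${1:-30}"
    while [ "$tries" -gt 0 ]; do
        nodes=$(kubectl get nodes --no-headers) || return 1
        pending=$(printf '%s\n' "$nodes" | not_ready_nodes)
        [ -z "$pending" ] && return 0
        sleep 10
        tries=$((tries - 1))
    done
    echo "still not Ready: $pending" >&2
    return 1
}
```

Calling `wait_for_nodes` right after `kubectl create -f kube-flannel.yml` returns once every node reports Ready.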

22. Join k8s worker nodes to the cluster

kubeadm join 192.168.23.200:6444 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:744391df4d7dd8ea7c05c40735c0af1fe33a18dd78bff962e9da5992a33f75b1

23. Inspect the cluster

[root@k8smaster02 ~]# kubectl -n kube-system get cm kubeadm-config -oyaml # view the effective configuration

apiVersion: v1

data:

ClusterConfiguration: |

apiServer:

extraArgs:

authorization-mode: Node,RBAC

timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controlPlaneEndpoint: 192.168.23.200:6444

controllerManager: {}

dns:

type: CoreDNS

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: 192.168.23.100:5000

kind: ClusterConfiguration

kubernetesVersion: v1.15.1

networking:

dnsDomain: cluster.local

podSubnet: 10.244.0.0/16

serviceSubnet: 10.96.0.0/12

scheduler: {}

ClusterStatus: |

apiEndpoints:

k8smaster01:

advertiseAddress: 192.168.23.100

bindPort: 6443

k8smaster02:

advertiseAddress: 192.168.23.101

bindPort: 6443

k8smaster03:

advertiseAddress: 192.168.23.102

bindPort: 6443

apiVersion: kubeadm.k8s.io/v1beta2

kind: ClusterStatus

kind: ConfigMap

metadata:

creationTimestamp: "2020-03-01T10:24:55Z"

name: kubeadm-config

namespace: kube-system

resourceVersion: "607"

selfLink: /api/v1/namespaces/kube-system/configmaps/kubeadm-config

uid: 65ad345a-932c-4125-9001-ddb61ea6bbe8

[root@k8smaster02 ~]#

[root@k8smaster02 ~]# kubectl -n kube-system exec etcd-k8smaster01 -- etcdctl \

> --endpoints=https://192.168.23.100:2379 \

> --ca-file=/etc/kubernetes/pki/etcd/ca.crt \

> --cert-file=/etc/kubernetes/pki/etcd/server.crt \

> --key-file=/etc/kubernetes/pki/etcd/server.key cluster-health

member 903f08c56f6bda30 is healthy: got healthy result from https://192.168.23.101:2379

member b93b088d0d91193a is healthy: got healthy result from https://192.168.23.102:2379

member d1d41f113495431a is healthy: got healthy result from https://192.168.23.100:2379

cluster is healthy

[root@k8smaster02 ~]#

[root@k8smaster02 ~]# kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml

apiVersion: v1

kind: Endpoints

metadata:

annotations:

control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"k8smaster01_af5547bf-903c-4d14-9539-160db7150d8e","leaseDurationSeconds":15,"acquireTime":"2020-03-01T10:24:54Z","renewTime":"2020-03-01T10:52:58Z","leaderTransitions":0}'

creationTimestamp: "2020-03-01T10:24:54Z"

name: kube-controller-manager

namespace: kube-system

resourceVersion: "3267"

selfLink: /api/v1/namespaces/kube-system/endpoints/kube-controller-manager

uid: b60b5dc4-708e-4ed1-a5e0-262dcc1fb089

[root@k8smaster02 ~]#

[root@k8smaster02 ~]# kubectl get endpoints kube-scheduler --namespace=kube-system -o yaml

apiVersion: v1

kind: Endpoints

metadata:

annotations:

control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"k8smaster01_08468212-bdbd-448f-ba10-5c56f4ccb813","leaseDurationSeconds":15,"acquireTime":"2020-03-01T10:24:54Z","renewTime":"2020-03-01T10:56:53Z","leaderTransitions":0}'

creationTimestamp: "2020-03-01T10:24:54Z"

name: kube-scheduler

namespace: kube-system

resourceVersion: "3654"

selfLink: /api/v1/namespaces/kube-system/endpoints/kube-scheduler

uid: 63a62e3a-2649-417d-a852-ce178066b318

[root@k8smaster02 ~]#
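The current leader of kube-controller-manager and kube-scheduler is recorded in the `holderIdentity` field of the leader annotation shown above. A small sketch for pulling out just that field is given below. The helper name `leader_identity` is hypothetical, and it assumes the annotation is compact JSON on a single line, as in the output above.

```shell
# Hypothetical helper: extract the holderIdentity value from text on stdin
# that contains the leader-election annotation as compact JSON.
leader_identity() {
    sed -n 's/.*"holderIdentity":"\([^"]*\)".*/\1/p'
}
```

For example, `kubectl get endpoints kube-scheduler --namespace=kube-system -o yaml | leader_identity` prints the holder. Running it before and after stopping the scheduler on the current leader shows the election moving to another master.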