HBase 2.3.7 Installation

Hadoop and ZooKeeper must be installed first. See:

Hadoop-3.1.3 deployment (hunheidaode's blog, CSDN)

ZooKeeper three-machine cluster installation (hunheidaode's blog, CSDN)

1. Extract the archive

[root@master software]# tar -zxvf hbase-2.3.7-bin.tar.gz -C /opt/module/
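The later steps refer to /opt/module/hbase, while the archive unpacks to a versioned hbase-2.3.7 directory. A minimal sketch of the rename that bridges the two, assuming that is how the shorter path was obtained; the helper name normalize_hbase_dir is hypothetical and not part of the original steps:

```shell
# Hypothetical helper: give the unpacked HBase directory a version-free name.
# $1 = parent directory (this guide uses /opt/module)
normalize_hbase_dir() {
  parent="$1"
  if [ -d "$parent/hbase-2.3.7" ] && [ ! -e "$parent/hbase" ]; then
    mv "$parent/hbase-2.3.7" "$parent/hbase"
  fi
  # Succeed only when the version-free directory exists afterwards.
  [ -d "$parent/hbase" ]
}

# Example call on the master node (commented out so the block is safe to paste):
# normalize_hbase_dir /opt/module
```

A symbolic link would work just as well; the rename simply keeps the paths in the following steps short.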

2. Edit hbase-env.sh

[root@master conf]# vi hbase-env.sh

#!/usr/bin/env bash

#

#/**

# * Licensed to the Apache Software Foundation (ASF) under one

# * or more contributor license agreements. See the NOTICE file

# * distributed with this work for additional information

# * regarding copyright ownership. The ASF licenses this file

# * to you under the Apache License, Version 2.0 (the

# * "License"); you may not use this file except in compliance

# * with the License. You may obtain a copy of the License at

# *

# * http://www.apache.org/licenses/LICENSE-2.0

# *

# * Unless required by applicable law or agreed to in writing, software

# * distributed under the License is distributed on an "AS IS" BASIS,

# * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# * See the License for the specific language governing permissions and

# * limitations under the License.

# */

# Set environment variables here.

# This script sets variables multiple times over the course of starting an hbase process,

# so try to keep things idempotent unless you want to take an even deeper look

# into the startup scripts (bin/hbase, etc.)

# The java implementation to use. Java 1.8+ required.

export JAVA_HOME=/opt/jdk/jdk1.8.0_202

# Extra Java CLASSPATH elements. Optional.

# export HBASE_CLASSPATH=

# The maximum amount of heap to use. Default is left to JVM default.

# export HBASE_HEAPSIZE=1G

# Uncomment below if you intend to use off heap cache. For example, to allocate 8G of

# offheap, set the value to "8G".

# export HBASE_OFFHEAPSIZE=1G

# Extra Java runtime options.

# Default settings are applied according to the detected JVM version. Override these default

# settings by specifying a value here. For more details on possible settings,

# see http://hbase.apache.org/book.html#_jvm_tuning

# export HBASE_OPTS

# Uncomment one of the below three options to enable java garbage collection logging for the server-side processes.

# This enables basic gc logging to the .out file.

# export SERVER_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps"

# This enables basic gc logging to its own file.

# If FILE-PATH is not replaced, the log file(.gc) would still be generated in the HBASE_LOG_DIR .

# export SERVER_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -Xloggc:<FILE-PATH>"

# This enables basic GC logging to its own file with automatic log rolling. Only applies to jdk 1.6.0_34+ and 1.7.0_2+.

# If FILE-PATH is not replaced, the log file(.gc) would still be generated in the HBASE_LOG_DIR .

# export SERVER_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -Xloggc:<FILE-PATH> -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=1 -XX:GCLogFileSize=512M"

# Uncomment one of the below three options to enable java garbage collection logging for the client processes.

# This enables basic gc logging to the .out file.

# export CLIENT_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps"

# This enables basic gc logging to its own file.

# If FILE-PATH is not replaced, the log file(.gc) would still be generated in the HBASE_LOG_DIR .

# export CLIENT_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -Xloggc:<FILE-PATH>"

# This enables basic GC logging to its own file with automatic log rolling. Only applies to jdk 1.6.0_34+ and 1.7.0_2+.

# If FILE-PATH is not replaced, the log file(.gc) would still be generated in the HBASE_LOG_DIR .

# export CLIENT_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -Xloggc:<FILE-PATH> -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=1 -XX:GCLogFileSize=512M"

# See the package documentation for org.apache.hadoop.hbase.io.hfile for other configurations

# needed setting up off-heap block caching.

# Uncomment and adjust to enable JMX exporting

# See jmxremote.password and jmxremote.access in $JRE_HOME/lib/management to configure remote password access.

# More details at: http://java.sun.com/javase/6/docs/technotes/guides/management/agent.html

# NOTE: HBase provides an alternative JMX implementation to fix the random ports issue, please see JMX

# section in HBase Reference Guide for instructions.

# export HBASE_JMX_BASE="-Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.authenticate=false"

# export HBASE_MASTER_OPTS="$HBASE_MASTER_OPTS $HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10101"

# export HBASE_REGIONSERVER_OPTS="$HBASE_REGIONSERVER_OPTS $HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10102"

# export HBASE_THRIFT_OPTS="$HBASE_THRIFT_OPTS $HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10103"

# export HBASE_ZOOKEEPER_OPTS="$HBASE_ZOOKEEPER_OPTS $HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10104"

# export HBASE_REST_OPTS="$HBASE_REST_OPTS $HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10105"

# File naming hosts on which HRegionServers will run. $HBASE_HOME/conf/regionservers by default.

# export HBASE_REGIONSERVERS=${HBASE_HOME}/conf/regionservers

# Uncomment and adjust to keep all the Region Server pages mapped to be memory resident

#HBASE_REGIONSERVER_MLOCK=true

#HBASE_REGIONSERVER_UID="hbase"

# File naming hosts on which backup HMaster will run. $HBASE_HOME/conf/backup-masters by default.

# export HBASE_BACKUP_MASTERS=${HBASE_HOME}/conf/backup-masters

# Extra ssh options. Empty by default.

# export HBASE_SSH_OPTS="-o ConnectTimeout=1 -o SendEnv=HBASE_CONF_DIR"

# Where log files are stored. $HBASE_HOME/logs by default.

# export HBASE_LOG_DIR=${HBASE_HOME}/logs

# Enable remote JDWP debugging of major HBase processes. Meant for Core Developers

# export HBASE_MASTER_OPTS="$HBASE_MASTER_OPTS -Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=8070"

# export HBASE_REGIONSERVER_OPTS="$HBASE_REGIONSERVER_OPTS -Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=8071"

# export HBASE_THRIFT_OPTS="$HBASE_THRIFT_OPTS -Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=8072"

# export HBASE_ZOOKEEPER_OPTS="$HBASE_ZOOKEEPER_OPTS -Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=8073"

# export HBASE_REST_OPTS="$HBASE_REST_OPTS -Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=8074"

# A string representing this instance of hbase. $USER by default.

# export HBASE_IDENT_STRING=$USER

# The scheduling priority for daemon processes. See 'man nice'.

# export HBASE_NICENESS=10

# The directory where pid files are stored. /tmp by default.

# export HBASE_PID_DIR=/var/hadoop/pids

# Seconds to sleep between slave commands. Unset by default. This

# can be useful in large clusters, where, e.g., slave rsyncs can

# otherwise arrive faster than the master can service them.

# export HBASE_SLAVE_SLEEP=0.1

# Tell HBase whether it should manage it's own instance of ZooKeeper or not.

export HBASE_MANAGES_ZK=false

# The default log rolling policy is RFA, where the log file is rolled as per the size defined for the

# RFA appender. Please refer to the log4j.properties file to see more details on this appender.

# In case one needs to do log rolling on a date change, one should set the environment property

# HBASE_ROOT_LOGGER to ",DRFA".

# For example:

# HBASE_ROOT_LOGGER=INFO,DRFA

# The reason for changing default to RFA is to avoid the boundary case of filling out disk space as

# DRFA doesn't put any cap on the log size. Please refer to HBase-5655 for more context.

# Tell HBase whether it should include Hadoop's lib when start up,

# the default value is false,means that includes Hadoop's lib.

# export HBASE_DISABLE_HADOOP_CLASSPATH_LOOKUP="true"

# Override text processing tools for use by these launch scripts.

# export GREP="${GREP-grep}"

# export SED="${SED-sed}"
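Only two lines in the listing above are active exports: JAVA_HOME, and HBASE_MANAGES_ZK=false (because ZooKeeper runs as its own cluster here). A small sketch for confirming that both are uncommented in a given hbase-env.sh; check_hbase_env is a hypothetical helper, not something HBase ships:

```shell
# Hypothetical helper: confirm the two settings this guide changes are active
# (uncommented) in a given hbase-env.sh.
# $1 = path to hbase-env.sh
check_hbase_env() {
  env_file="$1"
  grep -Eq '^[[:space:]]*export[[:space:]]+JAVA_HOME=.+' "$env_file" || {
    echo "JAVA_HOME is not exported in $env_file" >&2
    return 1
  }
  grep -Eq '^[[:space:]]*export[[:space:]]+HBASE_MANAGES_ZK=false' "$env_file" || {
    echo "HBASE_MANAGES_ZK=false is not exported in $env_file" >&2
    return 1
  }
}

# Example call (commented out; the path assumes this guide's layout):
# check_hbase_env /opt/module/hbase/conf/hbase-env.sh
```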

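3. Edit hbase-site.xml

<configuration>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://master:8020/hbase</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>master,node1,node2</value>
  </property>
</configuration>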
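The hbase.rootdir value has to point at the same NameNode address that Hadoop declares as fs.defaultFS in core-site.xml. A rough sketch for reading one property out of a Hadoop-style XML file so the two values can be compared by eye; get_xml_property is a hypothetical helper and assumes each name and value element sits on a single line:

```shell
# Hypothetical helper: print the <value> that follows a given <name>
# in a Hadoop-style configuration file.
# $1 = XML file, $2 = property name
get_xml_property() {
  awk -v name="$2" '
    index($0, "<name>" name "</name>") { found = 1 }
    found && match($0, /<value>[^<]*<\/value>/) {
      # Strip the 7-character opening tag and the 8-character closing tag.
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }
  ' "$1"
}

# Example calls (commented out; paths assume this guide's layout):
# get_xml_property "$HBASE_HOME/conf/hbase-site.xml" hbase.rootdir
# get_xml_property "$HADOOP_HOME/etc/hadoop/core-site.xml" fs.defaultFS
```

This is plain text matching rather than real XML parsing, so it is only meant for simple hand-written configuration files like the one in this step.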

4. Edit the regionservers file

master

node1

node2
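The regionservers file is just one hostname per line. Instead of typing it in an editor, it could be generated; a minimal sketch, where write_regionservers is a hypothetical helper name:

```shell
# Hypothetical helper: write one RegionServer hostname per line.
# $1 = target file, remaining arguments = hostnames
write_regionservers() {
  target="$1"
  shift
  printf '%s\n' "$@" > "$target"
}

# Example call (commented out; the path assumes this guide's layout):
# write_regionservers /opt/module/hbase/conf/regionservers master node1 node2
```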

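5. Upload or distribute the hbase directory to node1 and node2 (this assumes the extracted hbase-2.3.7 directory has been renamed to hbase)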

[root@master module]# sudo xsync hbase/

For the distribution script, see:

Linux xsync command script (hunheidaode's blog, CSDN)
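The xsync script itself lives in the linked post and is not reproduced here. As a stand-in, and assuming it wraps rsync over SSH (the "sending incremental file list" lines in its output further down suggest this), here is a dry-run sketch that only prints the commands such a script would run; print_sync_plan is a hypothetical name:

```shell
# Hypothetical helper: print (not run) one rsync command per target host.
# $1 = absolute path to copy, remaining arguments = target hostnames
print_sync_plan() {
  src="$1"
  shift
  for host in "$@"; do
    # rsync -a preserves permissions and timestamps; -v lists transferred files.
    # Copying into the parent directory keeps the same path on every host.
    echo "rsync -av $src $host:$(dirname "$src")/"
  done
}

# Example call (commented out):
# print_sync_plan /opt/module/hbase node1 node2
```

Printing the commands first makes it easy to review the target paths before anything is copied.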

6. Add the environment variables:

my_env.sh looks like this:

#HADOOP_HOME

export HADOOP_HOME=/opt/module/hadoop-3.1.3

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

#HBASE_HOME

export HBASE_HOME=/opt/module/hbase

export PATH=$PATH:$HBASE_HOME/bin

[root@master module]# vi /etc/profile.d/my_env.sh

[root@master module]# source /etc/profile

[root@master module]# hbase

Usage: hbase [<options>] <command> [<args>]

Options:

--config DIR Configuration direction to use. Default: ./conf

--hosts HOSTS Override the list in 'regionservers' file

--auth-as-server Authenticate to ZooKeeper using servers configuration

--internal-classpath Skip attempting to use client facing jars (WARNING: unstable results between versions)

--help or -h Print this help message

Commands:

Some commands take arguments. Pass no args or -h for usage.

shell Run the HBase shell

hbck Run the HBase 'fsck' tool. Defaults read-only hbck1.

Pass '-j /path/to/HBCK2.jar' to run hbase-2.x HBCK2.

snapshot Tool for managing snapshots

wal Write-ahead-log analyzer

hfile Store file analyzer

zkcli Run the ZooKeeper shell

master Run an HBase HMaster node

regionserver Run an HBase HRegionServer node

zookeeper Run a ZooKeeper server

rest Run an HBase REST server

thrift Run the HBase Thrift server

thrift2 Run the HBase Thrift2 server

clean Run the HBase clean up script

classpath Dump hbase CLASSPATH

mapredcp Dump CLASSPATH entries required by mapreduce

pe Run PerformanceEvaluation

ltt Run LoadTestTool

canary Run the Canary tool

version Print the version

completebulkload Run BulkLoadHFiles tool

regionsplitter Run RegionSplitter tool

rowcounter Run RowCounter tool

cellcounter Run CellCounter tool

pre-upgrade Run Pre-Upgrade validator tool

hbtop Run HBTop tool

CLASSNAME Run the class named CLASSNAME
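If the bare hbase command is not found after sourcing the profile, the usual cause is that $HBASE_HOME/bin did not make it onto PATH. A small sketch for checking that; path_contains is a hypothetical helper:

```shell
# Hypothetical helper: succeed when a directory is one of the PATH entries.
# $1 = directory to look for
path_contains() {
  case ":$PATH:" in
    *":$1:"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Example call (commented out):
# path_contains "$HBASE_HOME/bin" || echo "HBASE_HOME/bin is missing from PATH"
```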

7. Distribute my_env.sh to the other machines, or create it on each one by hand; all three machines need these environment variables

[root@master module]# sudo xsync /etc/profile.d/my_env.sh

==================== master ====================

sending incremental file list

sent 47 bytes received 12 bytes 118.00 bytes/sec

total size is 213 speedup is 3.61

==================== node1 ====================

sending incremental file list

my_env.sh

sent 307 bytes received 41 bytes 696.00 bytes/sec

total size is 213 speedup is 0.61

==================== node2 ====================

sending incremental file list

my_env.sh

sent 307 bytes received 41 bytes 696.00 bytes/sec

total size is 213 speedup is 0.61

[root@master module]# ll

8. Start Hadoop

Start HDFS on master:

[root@master module]# ./jpsall.sh

====================== Cluster node status ====================

====================== master ====================

9794 QuorumPeerMain

8459 Jps

19407 JobHistoryServer

====================== node1 ====================

8153 Jps

31034 QuorumPeerMain

====================== node2 ====================

7025 Jps

30260 QuorumPeerMain

====================== Done ====================

[root@master module]# cd hadoop-3.1.3/

[root@master hadoop-3.1.3]# ./sbin/start-dfs.sh

WARNING: HADOOP_SECURE_DN_USER has been replaced by HDFS_DATANODE_SECURE_USER. Using value of HADOOP_SECURE_DN_USER.

Starting namenodes on [master]

Last login: Sun Dec 12 16:44:01 CST 2021 on pts/2

Starting datanodes

Last login: Sun Dec 12 17:06:09 CST 2021 on pts/2

Starting secondary namenodes [node2]

Last login: Sun Dec 12 17:06:11 CST 2021 on pts/2

[root@master hadoop-3.1.3]#

Start YARN on node1:

[root@node1 hadoop-3.1.3]# echo $JAVA_HOME

/opt/jdk/jdk1.8.0_202

[root@node1 hadoop-3.1.3]# sbin/stop-yarn.sh

Stopping nodemanagers

Last login: Sun Dec 12 16:20:02 CST 2021 on pts/2

Stopping resourcemanager

Last login: Sun Dec 12 16:44:10 CST 2021 on pts/2

[root@node1 hadoop-3.1.3]# ./sbin/start-yarn.sh

Starting resourcemanager

Last login: Sun Dec 12 16:44:12 CST 2021 on pts/2

Starting nodemanagers

Last login: Sun Dec 12 17:07:59 CST 2021 on pts/2

[root@node1 hadoop-3.1.3]#

9. Start the history server on master

[root@master hadoop-3.1.3]$ mapred --daemon start historyserver

10. Check the Hadoop processes:

11. Start ZooKeeper (the zk.sh script is covered in the earlier post)

[root@master module]# ./zk.sh start

---------- zookeeper master start ------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper-3.5.7/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

---------- zookeeper node1 start ------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper-3.5.7/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

---------- zookeeper node2 start ------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper-3.5.7/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@master module]# ./zk.sh status

---------- zookeeper master status ------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper-3.5.7/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost.

Mode: follower

---------- zookeeper node1 status ------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper-3.5.7/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost.

Mode: leader

---------- zookeeper node2 status ------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper-3.5.7/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost.

Mode: follower

[root@master module]#
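A healthy three-node ensemble reports exactly one leader, as in the status output above. A minimal sketch that checks this from the status text; has_single_leader is a hypothetical helper that reads the output on standard input:

```shell
# Hypothetical helper: read ZooKeeper status output on stdin and
# succeed only if exactly one node reports "Mode: leader".
has_single_leader() {
  # grep -c prints 0 and exits non-zero when nothing matches, hence "|| true".
  leaders=$(grep -c '^Mode: leader' || true)
  [ "$leaders" -eq 1 ]
}

# Example call with this guide's zk.sh wrapper (commented out):
# ./zk.sh status 2>&1 | has_single_leader && echo "quorum looks healthy"
```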

12. Check the processes: Hadoop and ZooKeeper are both running

13. Start HBase: change to the bin directory and run start-hbase.sh

[root@master module]# cd hbase/bin/

[root@master bin]# start-hbase.sh

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/module/hadoop-3.1.3/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/module/hbase/lib/client-facing-thirdparty/slf4j-log4j12-1.7.30.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

running master, logging to /opt/module/hbase/logs/hbase-root-master-master.out

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/module/hadoop-3.1.3/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/module/hbase/lib/client-facing-thirdparty/slf4j-log4j12-1.7.30.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

master: running regionserver, logging to /opt/module/hbase/logs/hbase-root-regionserver-master.out

node1: running regionserver, logging to /opt/module/hbase/logs/hbase-root-regionserver-node1.out

node2: running regionserver, logging to /opt/module/hbase/logs/hbase-root-regionserver-node2.out

[root@master bin]# cd ../../

[root@master module]# ./jpsall.sh

====================== Cluster node status ====================

====================== master ====================

12101 NodeManager

9416 NameNode

16473 QuorumPeerMain

18954 HMaster

19964 Jps

9613 DataNode

15357 JobHistoryServer

19199 HRegionServer

====================== node1 ====================

10673 ResourceManager

10851 NodeManager

16883 Jps

14506 QuorumPeerMain

8845 DataNode

16350 HRegionServer

====================== node2 ====================

7860 SecondaryNameNode

7738 DataNode

13036 QuorumPeerMain

15452 Jps

9598 NodeManager

14895 HRegionServer

====================== Done ====================

[root@master module]#
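In the listing above, master should show HMaster and every node should show HRegionServer and QuorumPeerMain. Rather than scanning the output by eye, a sketch like the following could flag anything missing; missing_daemons is a hypothetical helper that reads jps output on standard input:

```shell
# Hypothetical helper: read `jps` output on stdin and print every expected
# daemon name that is absent; succeeds when nothing is missing.
# Arguments = expected daemon names
missing_daemons() {
  listing=$(cat)
  rc=0
  for daemon in "$@"; do
    # jps prints "<pid> <name>", so compare against the second field.
    if ! printf '%s\n' "$listing" | awk -v d="$daemon" '$2 == d { ok = 1 } END { exit !ok }'; then
      echo "$daemon"
      rc=1
    fi
  done
  return "$rc"
}

# Example call on the master node (commented out):
# jps | missing_daemons HMaster HRegionServer QuorumPeerMain
```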

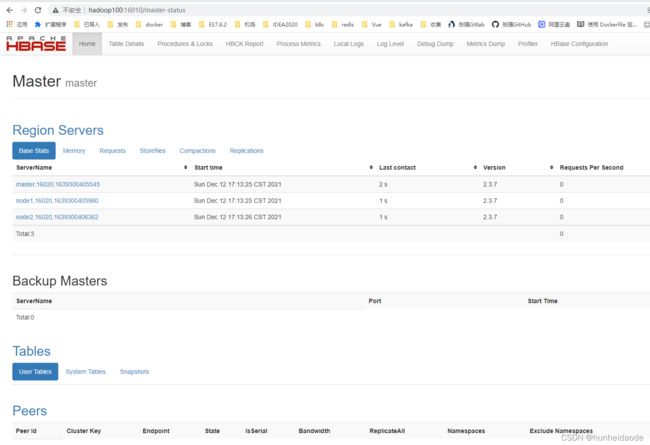

14. Web UI: http://hadoop100:16010/ (hadoop100 must be mapped in the Windows hosts file)
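The browser machine can only open this address if the hostname resolves there. A small sketch for checking whether a hosts file already maps a name; has_host_entry is a hypothetical helper. On Windows the file is normally C:\Windows\System32\drivers\etc\hosts, and the same check works against /etc/hosts on Linux:

```shell
# Hypothetical helper: succeed when a hosts file maps the given hostname.
# $1 = hosts file, $2 = hostname
has_host_entry() {
  awk -v n="$2" '
    /^[ \t]*#/ { next }                       # skip comment lines
    { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
    END { exit !found }
  ' "$1"
}

# Example call (commented out):
# has_host_entry /etc/hosts hadoop100 || echo "hadoop100 is not mapped"
```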

Test:

[root@master module]# hbase shell

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/module/hadoop-3.1.3/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/module/hbase/lib/client-facing-thirdparty/slf4j-log4j12-1.7.30.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

HBase Shell

Use "help" to get list of supported commands.

Use "exit" to quit this interactive shell.

For Reference, please visit: http://hbase.apache.org/2.0/book.html#shell

Version 2.3.7, r8b2f5141e900c851a2b351fccd54b13bcac5e2ed, Tue Oct 12 16:38:55 UTC 2021

Took 0.0009 seconds

hbase(main):001:0> create 'test', 'cf';

hbase(main):002:0* list 'test'

Created table test

Took 2.0871 seconds

TABLE

test

1 row(s)

Took 0.0440 seconds

=> ["test"]

hbase(main):003:0> describe 'test'

Table test is ENABLED

test

COLUMN FAMILIES DESCRIPTION

{NAME => 'cf', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', VERSIONS => '1', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', COMPRESSION => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

1 row(s)

Quota is disabled

Took 0.2884 seconds

hbase(main):004:0> put 'test', 'row1', 'cf:a', 'value1'

Took 0.1731 seconds

hbase(main):005:0> put 'test', 'row2', 'cf:b', 'value2'

Took 0.0151 seconds

hbase(main):006:0> put 'test', 'row3', 'cf:c', 'value3'

Took 0.0148 seconds

hbase(main):007:0> scan 'test'

ROW COLUMN+CELL

row1 column=cf:a, timestamp=2021-12-12T18:10:53.986, value=value1

row2 column=cf:b, timestamp=2021-12-12T18:10:58.310, value=value2

row3 column=cf:c, timestamp=2021-12-12T18:11:03.406, value=value3

3 row(s)

Took 0.1204 seconds

hbase(main):008:0> get 'test', 'row1'

COLUMN CELL

cf:a timestamp=2021-12-12T18:10:53.986, value=value1

1 row(s)

Took 0.0132 seconds

hbase(main):009:0>
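The same smoke test can be kept as a command file and replayed after a restart, since hbase shell accepts a path to a file of commands. A sketch under that assumption; write_smoke_test and the file path are hypothetical, and the disable and drop lines are an optional cleanup that is not part of the session above:

```shell
# Hypothetical helper: write the smoke-test commands to a file that
# `hbase shell` can execute.
# $1 = output file
write_smoke_test() {
  cat > "$1" <<'EOF'
create 'test', 'cf'
put 'test', 'row1', 'cf:a', 'value1'
scan 'test'
get 'test', 'row1'
disable 'test'
drop 'test'
exit
EOF
}

# Example usage (commented out; requires a running cluster):
# write_smoke_test /tmp/hbase_smoke.txt
# hbase shell /tmp/hbase_smoke.txt
```

The final exit line makes the shell return to the command prompt instead of staying interactive once the commands have run.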