Qt Software Development - Screen Recording and RTSP/RTMP Streaming Software Based on FFmpeg (Desktop and Camera Supported) (Part 1)

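Qt Software Development - Screen Recording and RTSP/RTMP Streaming Software Based on FFmpeg (Desktop and Camera Supported) (Part 1) https://xiaolong.blog.csdn.net/article/details/126954626

Qt Software Development - Screen Recording and RTSP/RTMP Streaming Software Based on FFmpeg (Desktop and Camera Supported) (Part 2) https://xiaolong.blog.csdn.net/article/details/126958188

Qt Software Development - Screen Recording and RTSP/RTMP Streaming Software Based on FFmpeg (Desktop and Camera Supported) (Part 3) https://xiaolong.blog.csdn.net/article/details/126959401

Qt Software Development - Screen Recording and RTSP/RTMP Streaming Software Based on FFmpeg (Desktop and Camera Supported) (Part 4) https://xiaolong.blog.csdn.net/article/details/126959869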

I. Preface

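Anyone working in audio/video development has probably heard of FFmpeg. It provides highly capable audio/video codec libraries and runs on many platforms.

There are plenty of FFmpeg articles and tutorials online. FFmpeg itself is mainly used to decode, encode, and otherwise process video and audio.

Its two main jobs are decoding and encoding. Decoding is used to build video and music players: decode a video file into frames, then render them for display, and you have the basic model of a player. Encoding is mainly used to record and save video: encode camera or screen frames and write them to a file, as dashcams and surveillance cameras do. You can also encode the video and push it to a server; live-streaming platforms, video monitoring in smart homes, and smart security cameras all work this way.

This article and the next few cover encoding. Using FFmpeg, we build a video recorder that records the camera or the desktop to local files and supports RTMP/RTSP push to a streaming media server for live broadcasting, for example to an NGINX server or to a Bilibili or Douyu live room. The example also shows how security cameras let you view their picture remotely and keep historical recordings for playback.

Over the next few articles the example is built up step by step to add camera recording, audio recording, desktop recording, and streaming. They contain little theory (there is plenty online) and focus on code and working features.

My development environment is listed below. Qt and the MinGW compiler come with the Qt installer, so installing Qt is enough to start developing; the FFmpeg libraries are added separately (see the .pro file at the end).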

FFmpeg version: 4.2.2

Qt version: 5.12.6

Compiler: MinGW 32-bit

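Decoding examples were covered in several earlier articles (with more to come):

Qt Software Development - Video Player Based on FFmpeg, Supporting Software and Hardware Decoding (Part 1)

https://xiaolong.blog.csdn.net/article/details/126832537

Qt Software Development - Video Player Based on FFmpeg, Supporting Software and Hardware Decoding (Part 2)

https://xiaolong.blog.csdn.net/article/details/126833434

Qt Software Development - Video Player Based on FFmpeg, Supporting Software and Hardware Decoding (Part 3)

https://xiaolong.blog.csdn.net/article/details/126836582

Qt Software Development - Video Player Based on FFmpeg, Supporting Software and Hardware Decoding (Part 4)

https://xiaolong.blog.csdn.net/article/details/126842988

Qt Software Development - Video Player Based on FFmpeg, Supporting Streaming URL Playback (Part 5)

https://xiaolong.blog.csdn.net/article/details/126915003

Qt Software Development - Video Player Based on FFmpeg, Supporting Software and Hardware Decoding - Complete Example (Part 6)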

https://blog.csdn.net/xiaolong1126626497/article/details/126916817

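II. Content Overview

FFmpeg's own examples already include an audio/video encoding case, and this article is based on it.

The encoding example shipped with FFmpeg is at ffmpeg-4.2.2\doc\examples\muxing.c. It produces a 10-second video whose picture and sound are generated by code.

The step-by-step plan for this series is as follows:

(1) Goal: encode camera frames and save them locally as an MP4 file. Camera frames are captured with Qt's QVideoProbe class, which runs on Windows, Android, Linux, iOS, and other platforms.

Feature: saves the camera picture only; no audio.

(2) Goal: capture the desktop, encode it, and save it locally as an MP4 file. Desktop frames are grabbed through Qt's QScreen on a timer, added to the encoding queue, and then encoded into a video file. Both full-screen and region recording are possible; you only need to prepare the image before passing it to the encoder.

Feature: saves the desktop picture only; no audio.

(3) Goal: extend the previous examples with microphone capture. Qt's QAudioInput collects PCM audio, which is encoded into the video so that picture and sound are recorded together.

Feature: records the video picture and microphone sound together.

(4) Goal: modify example (3) to add RTMP and RTSP push, encoding the video and microphone sound and pushing them to a streaming media server, such as a Bilibili or Douyu live room or a server you set up yourself, for live broadcasting. Once the stream is pushed, you can pull it with a player to watch. The earlier player articles (linked above) explain how to build a streaming media player with FFmpeg.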
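When pushing, the output container is chosen by FFmpeg muxer name rather than by file extension: RTMP servers expect FLV (muxer `flv`), while RTSP publishing uses FFmpeg's `rtsp` muxer. A minimal sketch of that mapping, assuming the address starts with its scheme; the helper `muxerForUrl` is hypothetical, and later parts of this series may choose the format differently:

```cpp
#include <string>

// Returns true if `s` begins with `prefix`.
static bool startsWith(const std::string &s, const std::string &prefix)
{
    return s.compare(0, prefix.size(), prefix) == 0;
}

// Hypothetical helper: map a push address to the FFmpeg muxer name that would
// be passed as format_name to avformat_alloc_output_context2().
// "flv" and "rtsp" are real FFmpeg muxer names; "" means unsupported scheme.
std::string muxerForUrl(const std::string &url)
{
    if (startsWith(url, "rtmp://")) return "flv";   // RTMP carries FLV-packaged audio/video
    if (startsWith(url, "rtsp://")) return "rtsp";  // FFmpeg's RTSP muxer (ANNOUNCE + RECORD)
    return "";
}
```

Matching on the scheme prefix is also stricter than searching the whole address for "rtmp" or "rtsp", which would accept something like `http://host/rtmp`.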

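If you want to set up your own streaming media server, see:

Setting up an RTMP streaming server with Nginx on Windows for live video

https://blog.csdn.net/xiaolong1126626497/article/details/106391149

Setting up an RTMP streaming server with Nginx on Linux for live video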

https://blog.csdn.net/xiaolong1126626497/article/details/10537889

三、设计说明

本篇文章要完成的目标:

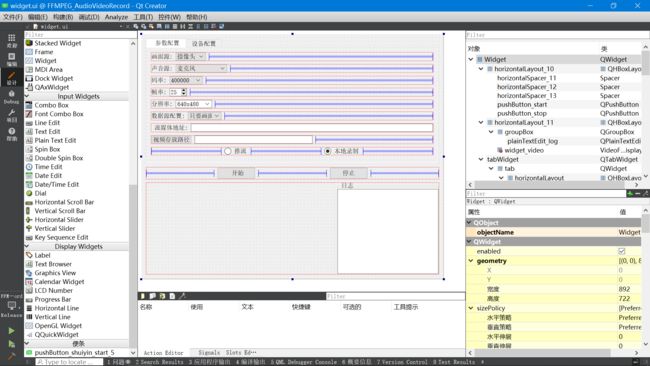

**界面上: **设计好UI界面,UI界面按照最终的成品进行设计(先设计好UI界面,再陆陆续续的完成功能开发)。

功能上: 将摄像头画面编码后保存为mp4格式视频,保存在本地。 摄像头的画面采集采用Qt的QVideoProbe类来捕获,windows、Android、Linux、iOS等平台都可以正常运行。

特点:只是保存摄像头画面,不录制音频。

设计说明:

要完成摄像头的采集和编码、需要有两个线程,通过子线程来完成耗时间的工作不卡UI。

A线程:采集摄像头的数据存放到缓冲区。

B线程:获取缓冲区的数据编码,保存为视频。

UI界面主要是完成画面和一些状态信息的展示。
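The hand-off between thread A and thread B is a single-slot producer/consumer: A overwrites the latest frame and B waits for a fresh one. A minimal standard-C++ sketch of that pattern, where the class `LatestFrame` is a hypothetical stand-in for the `QMutex`/`QWaitCondition` pair kept in `RecordConfig`. The predicate-guarded wait, plus a stop flag that is set under the same mutex, is what keeps B from sleeping forever when a stop request arrives just before it starts waiting:

```cpp
#include <condition_variable>
#include <mutex>
#include <vector>

// Hypothetical single-slot frame buffer shared by the capture and encode threads.
class LatestFrame
{
public:
    // Capture thread (A): publish a new frame and wake the encoder.
    void publish(const std::vector<unsigned char> &frame)
    {
        {
            std::lock_guard<std::mutex> lock(m_mutex);
            m_frame = frame;      // older, unconsumed frames are simply overwritten
            m_hasNew = true;
        }
        m_cond.notify_one();
    }

    // UI thread: request shutdown. Setting the flag under the mutex means the
    // encoder cannot miss it between checking the predicate and going to sleep.
    void stop()
    {
        {
            std::lock_guard<std::mutex> lock(m_mutex);
            m_stopped = true;
        }
        m_cond.notify_all();
    }

    // Encode thread (B): block until a new frame or stop(). Returns false on stop.
    bool take(std::vector<unsigned char> &out)
    {
        std::unique_lock<std::mutex> lock(m_mutex);
        m_cond.wait(lock, [this] { return m_hasNew || m_stopped; });
        if (m_stopped) return false;
        out = m_frame;
        m_hasNew = false;
        return true;
    }

private:
    std::mutex m_mutex;
    std::condition_variable m_cond;
    std::vector<unsigned char> m_frame;
    bool m_hasNew = false;
    bool m_stopped = false;
};
```

Because frames that arrive while B is still encoding are overwritten rather than queued, the recording drops frames under load instead of growing memory; a queue would trade that for extra latency.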

在工程里有一个结构体,用来保存整个编码的的一些参数:

//Configuration for video recording

struct RecordConfig

{

int Mode; // 0 = local recording, 1 = push stream

int ImageInptSrc; //image source: 0 = camera, 1 = desktop

int input_DataConf; // 0 = video only, 1 = video + audio

qint8 desktop_page; //desktop (screen) index

int video_bit_rate; //bit rate

int video_frame; //frame rate

int ImageWidth; //image width

int ImageHeight; //image height

QString VideoSavePath; //video save path

/*video*/

QMutex video_encode_mutex;

QWaitCondition video_WaitConditon;

QCameraInfo camera;

/*audio*/

QAudioDeviceInfo audio;

QMutex audio_encode_mutex;

QQueue<QByteArray> AudioDataQueue;

/*push address, e.g. rtmp or rtsp*/

QByteArray PushStreamAddr;

//RGB image buffer: captured data converted to RGB888, which must then be converted to YUV420P

unsigned char *rgb_buffer;

size_t grb24_size; //RGB data size: width x height x 3

//holds the converted YUV420P data

unsigned char *video_yuv420p_buff;

unsigned char *video_yuv420p_buff_temp;

size_t yuv420p_size; //YUV420P data size: width x height x 3 / 2

};
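The struct keeps RGB888 because `QImage` works in RGB, while the H.264 encoder is configured for `AV_PIX_FMT_YUV420P`. The `RGB24_TO_YUV420()` routine called later lives in `Color_conversion.cpp`, which this article does not list. A minimal sketch of such a conversion, assuming BT.601 limited-range coefficients and 2×2 averaging for chroma (the function name is hypothetical and the author's version may differ), also shows where the `w × h × 3` and `w × h × 3 / 2` sizes in the comments come from:

```cpp
#include <cassert>
#include <cstdint>

// Packed RGB24 (R,G,B per pixel) -> planar YUV420P, BT.601 limited range.
// Output layout: Y plane (w*h bytes), then U (w*h/4), then V (w*h/4).
void rgb24ToYuv420p(const std::uint8_t *rgb, int width, int height, std::uint8_t *yuv)
{
    // 4:2:0 halves both chroma dimensions, so both sizes must be even.
    assert(width % 2 == 0 && height % 2 == 0);
    std::uint8_t *yPlane = yuv;
    std::uint8_t *uPlane = yuv + width * height;
    std::uint8_t *vPlane = uPlane + (width / 2) * (height / 2);

    // One luma sample per pixel.
    for (int row = 0; row < height; ++row)
        for (int col = 0; col < width; ++col) {
            const std::uint8_t *p = rgb + (row * width + col) * 3;
            yPlane[row * width + col] = static_cast<std::uint8_t>(
                ((66 * p[0] + 129 * p[1] + 25 * p[2] + 128) >> 8) + 16);
        }

    // One U/V pair per 2x2 block, computed from the block's average colour.
    for (int row = 0; row < height; row += 2)
        for (int col = 0; col < width; col += 2) {
            int r = 0, g = 0, b = 0;
            for (int dy = 0; dy < 2; ++dy)
                for (int dx = 0; dx < 2; ++dx) {
                    const std::uint8_t *p = rgb + ((row + dy) * width + col + dx) * 3;
                    r += p[0]; g += p[1]; b += p[2];
                }
            r /= 4; g /= 4; b /= 4;
            const int i = (row / 2) * (width / 2) + col / 2;
            // 32896 = 128 (rounding) + 128 * 256 (offset): keeps the sum non-negative before the shift
            uPlane[i] = static_cast<std::uint8_t>((-38 * r - 74 * g + 112 * b + 32896) >> 8);
            vPlane[i] = static_cast<std::uint8_t>((112 * r - 94 * g - 18 * b + 32896) >> 8);
        }
}
```

For a 640×480 frame the input is 921,600 bytes and the output 460,800 bytes: a 307,200-byte Y plane followed by two 76,800-byte chroma planes.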

四、运行效果

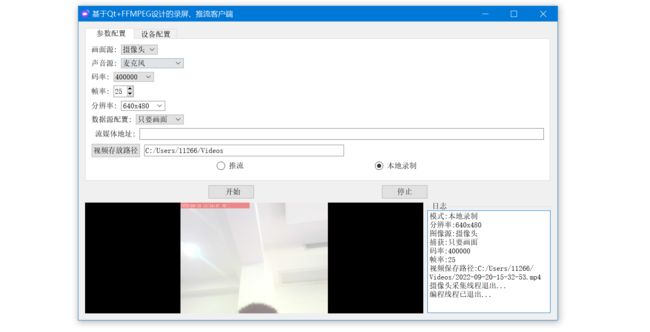

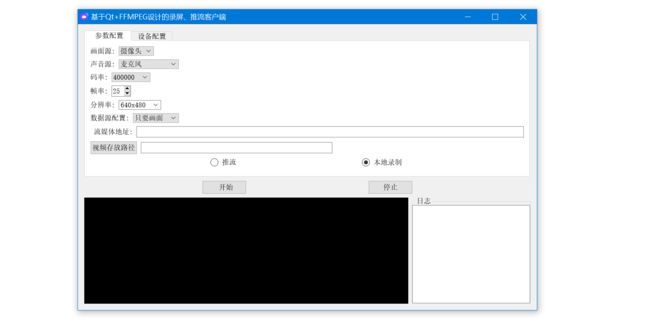

(1)这是打开之后的界面

(2)设备配置页面

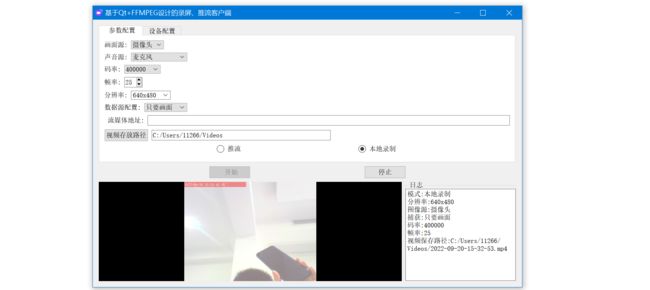

(3)配置好参数,点击开始按钮,开始录制视频,下面是录制中的效果。

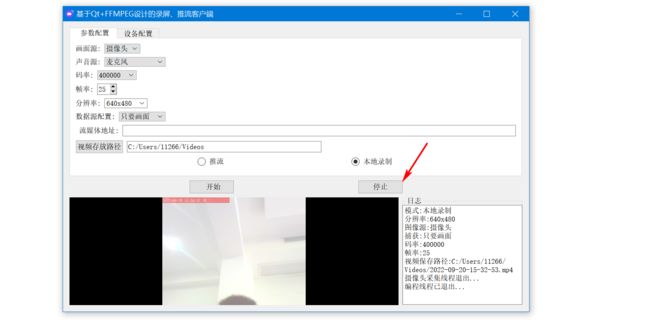

(4)如果想停止录制,点击停止按钮,就可以停止。下面是点击停止按钮后的效果。

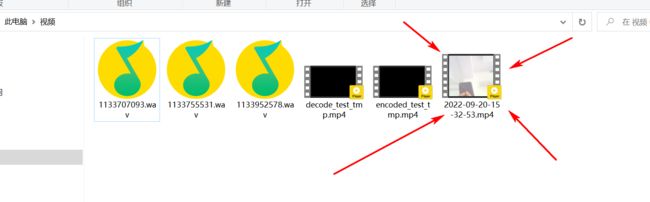

(5)在视频存放路径下可以看到录制好的视频。

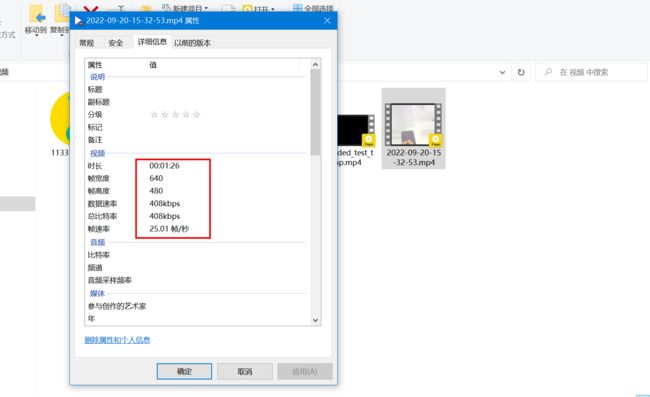

查看视频的参数:

五、源代码

5.1 配置参数.h文件

#ifndef CONFIG_H

#define CONFIG_H

#include …

5.2 FFmpeg encoding thread (.h)

#ifndef FFMPEG_ENCODETHREAD_H

#define FFMPEG_ENCODETHREAD_H

#include "Config.h"

//Wrapper around a single output AVStream

typedef struct OutputStream

{

AVStream *st;

AVCodecContext *enc;

/* pts of the next frame to be generated */

int64_t next_pts;

int samples_count;

AVFrame *frame;

AVFrame *tmp_frame;

struct SwsContext *sws_ctx;

struct SwrContext *swr_ctx;

}OutputStream;

class ffmpeg_EncodeThread: public QThread

{

Q_OBJECT

public:

ffmpeg_EncodeThread(RecordConfig *config);

bool m_run; //run state: false = exit, true = running

protected:

void run();

signals:

void LogSend(int err,QString text);

private:

struct RecordConfig *m_RecordConfig;

int StartEncode();

void ffmpeg_close_stream(OutputStream *ost);

void ffmpeg_add_stream(OutputStream *ost, AVFormatContext *oc,AVCodec **codec,enum AVCodecID codec_id);

void ffmpeg_open_video(AVCodec *codec, OutputStream *ost, AVDictionary *opt_arg);

AVFrame *ffmpeg_alloc_picture(enum AVPixelFormat pix_fmt, int width, int height);

int ffmpeg_write_video_frame(AVFormatContext *oc, OutputStream *ost);

AVFrame *get_video_frame(OutputStream *ost);

void fill_yuv_image(AVFrame *pict, int frame_index,int width, int height);

int ffmpeg_write_frame(AVFormatContext *fmt_ctx, const AVRational *time_base, AVStream *st, AVPacket *pkt);

};

#endif // FFMPEG_ENCODETHREAD_H

5.3 FFmpeg encoding thread (.cpp)

#include "ffmpeg_EncodeThread.h"

//Close the stream

void ffmpeg_EncodeThread::ffmpeg_close_stream(OutputStream *ost)

{

avcodec_free_context(&ost->enc);

av_frame_free(&ost->frame);

av_frame_free(&ost->tmp_frame);

sws_freeContext(ost->sws_ctx);

swr_free(&ost->swr_ctx);

}

//Add an output stream

void ffmpeg_EncodeThread::ffmpeg_add_stream(OutputStream *ost, AVFormatContext *oc,AVCodec **codec,enum AVCodecID codec_id)

{

AVCodecContext *c;

*codec = avcodec_find_encoder(codec_id);

ost->st = avformat_new_stream(oc,nullptr);

ost->st->id = oc->nb_streams-1;

c=avcodec_alloc_context3(*codec);

ost->enc = c;

switch ((*codec)->type)

{

case AVMEDIA_TYPE_AUDIO:

//set the sample format

c->sample_fmt = FFMPEG_AudioFormat;

c->bit_rate = AUDIO_BIT_RATE_SET; //set the bit rate

c->sample_rate = AUDIO_RATE_SET; //audio sample rate

//set the channel layout first, then derive the channel count from it

c->channel_layout = AUDIO_CHANNEL_SET; //AV_CH_LAYOUT_MONO = mono, AV_CH_LAYOUT_STEREO = stereo

c->channels=av_get_channel_layout_nb_channels(c->channel_layout); //number of channels

ost->st->time_base={1,c->sample_rate};

//c->strict_std_compliance = FF_COMPLIANCE_EXPERIMENTAL; //allow the experimental AAC encoder (added)

break;

case AVMEDIA_TYPE_VIDEO:

c->codec_id = codec_id;

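//Bit rate determines file size: for a given duration, the higher the bit rate, the larger the file.

c->bit_rate = m_RecordConfig->video_bit_rate; //bit rate in bit/s, e.g. 400000 (400 kbps) or 500000

/* Width and height must be multiples of 2. */

c->width = m_RecordConfig->ImageWidth;

c->height = m_RecordConfig->ImageHeight;

/* Time base: the fundamental unit of time (in seconds) in which timestamps are expressed; for a fixed frame rate it is 1/frame rate */

ost->st->time_base={1,m_RecordConfig->video_frame}; //time base derived from the frame rate

c->framerate = {m_RecordConfig->video_frame,1}; //set the frame rate

c->time_base = ost->st->time_base;

c->gop_size = 10; //keyframe interval: at most one intra frame every 10 frames

c->pix_fmt = AV_PIX_FMT_YUV420P; //fixed to YUV420P

c->max_b_frames = 0; //no B-frames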

if(c->codec_id == AV_CODEC_ID_MPEG1VIDEO)

{

c->mb_decision = 2;

}

//Preset: superfast (a libx264 private option: faster encoding, lower compression)

av_opt_set(c->priv_data,"preset", "superfast", 0);

break;

default:

break;

}

/* Some formats want stream headers to be separate */

if(oc->oformat->flags & AVFMT_GLOBALHEADER)c->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;

}

AVFrame *ffmpeg_EncodeThread::ffmpeg_alloc_picture(enum AVPixelFormat pix_fmt, int width, int height)

{

AVFrame *picture;

picture=av_frame_alloc();

picture->format = pix_fmt;

picture->width = width;

picture->height = height;

/* Allocate the buffers for the frame data */

av_frame_get_buffer(picture,32);

return picture;

}

void ffmpeg_EncodeThread::ffmpeg_open_video(AVCodec *codec, OutputStream *ost, AVDictionary *opt_arg)

{

AVCodecContext *c = ost->enc;

AVDictionary *opt = nullptr;

av_dict_copy(&opt, opt_arg, 0);

//Some H.264 encoder settings

c->qmin = 10;

c->qmax = 51;

//Optional Param

c->max_b_frames = 0;

#if 1

//The next two options affect encoding latency; if they are not set, the encoder buffers many frames by default

// Set H264 preset and tune

// av_dict_set(&opt, "preset", "fast", 0);

// av_dict_set(&opt, "tune", "zerolatency", 0);

#else

/**

* ultrafast,superfast, veryfast, faster, fast, medium

* slow, slower, veryslow, placebo.

Note: this is x264's encoding-speed option; setting it can reduce encoding latency

*/

av_opt_set(c->priv_data,"preset","superfast",0);

#endif

avcodec_open2(c, codec, &opt);

av_dict_free(&opt);

ost->frame = ffmpeg_alloc_picture(c->pix_fmt, c->width,c->height);

ost->tmp_frame = nullptr;

/* Copy the stream parameters to the muxer */

avcodec_parameters_from_context(ost->st->codecpar,c);

}

/*

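Prepare the image data

YUV422 size in memory = w * h * 2

YUV420 size in memory = width * height * 3 / 2

*/

void ffmpeg_EncodeThread::fill_yuv_image(AVFrame *pict, int frame_index,int width, int height)

{

unsigned int y_size=width*height;

m_RecordConfig->video_encode_mutex.lock();

//Wait for the capture thread to publish a new frame. m_run is re-checked under the mutex and the wait
//times out after 1000 ms, so a stop request issued just before wait() cannot block this thread forever

if(m_run) m_RecordConfig->video_WaitConditon.wait(&m_RecordConfig->video_encode_mutex,1000);

memcpy(m_RecordConfig->video_yuv420p_buff_temp,

m_RecordConfig->video_yuv420p_buff,

m_RecordConfig->yuv420p_size);

m_RecordConfig->video_encode_mutex.unlock();

//Copy the Y, U and V planes row by row: av_frame_get_buffer() may pad each row, so linesize can exceed the width

const unsigned char *src=m_RecordConfig->video_yuv420p_buff_temp;

for(int i=0;i<height;i++) memcpy(pict->data[0]+i*pict->linesize[0],src+i*width,width);

src+=y_size;

for(int i=0;i<height/2;i++) memcpy(pict->data[1]+i*pict->linesize[1],src+i*(width/2),width/2);

src+=y_size/4;

for(int i=0;i<height/2;i++) memcpy(pict->data[2]+i*pict->linesize[2],src+i*(width/2),width/2);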

}

//Get one video frame

AVFrame *ffmpeg_EncodeThread::get_video_frame(OutputStream *ost)

{

AVCodecContext *c = ost->enc;

/* Check whether more frames should be generated, i.e. whether recording should end */

// if(av_compare_ts(ost->next_pts, c->time_base,STREAM_DURATION, (AVRational){ 1, 1 }) >= 0)

// return nullptr;

/* When we pass a frame to the encoder, it may keep a reference to it

* internally; make sure we do not overwrite it here */

if (av_frame_make_writable(ost->frame) < 0) return nullptr;

//get the image

//DTS = decoding timestamp, PTS = presentation timestamp

fill_yuv_image(ost->frame, ost->next_pts, c->width, c->height);

ost->frame->pts = ost->next_pts++;

//place to add a watermark to the frame (the timestamp watermark is currently drawn in the capture thread)

return ost->frame;

}

int ffmpeg_EncodeThread::ffmpeg_write_frame(AVFormatContext *fmt_ctx, const AVRational *time_base, AVStream *st, AVPacket *pkt)

{

/* Rescale the output packet timestamps from the codec time base to the stream time base */

av_packet_rescale_ts(pkt, *time_base, st->time_base);

pkt->stream_index = st->index;

/* Write the compressed frame to the media file */

return av_interleaved_write_frame(fmt_ctx, pkt);

}

/*

* Encode one video frame and send it to the muxer

*/

int ffmpeg_EncodeThread::ffmpeg_write_video_frame(AVFormatContext *oc, OutputStream *ost)

{

int ret;

AVCodecContext *c;

AVFrame *frame;

int got_packet = 0;

AVPacket pkt={}; //data=nullptr, size=0, so the encoder allocates the output buffer itself

c=ost->enc;

//get one frame of data

frame = get_video_frame(ost);

if(frame==nullptr)

{

emit LogSend(-1,"Failed to build the video frame...\n");

return -1;

}

av_init_packet(&pkt);

//encode the image

ret=avcodec_encode_video2(c, &pkt, frame, &got_packet);

if(ret < 0)

{

emit LogSend(-1,"Error encoding the video frame...\n");

return ret;

}

if(got_packet)

{

ret=ffmpeg_write_frame(oc, &c->time_base, ost->st, &pkt);

}

else

{

ret = 0;

}

if(ret < 0)

{

emit LogSend(-1,"Error writing the video frame...\n");

return ret;

}

return 0;

}

//Start encoding

int ffmpeg_EncodeThread::StartEncode()

{

//basic variable definitions

int ret = -1;

OutputStream video_st;

AVOutputFormat *fmt=nullptr;

AVFormatContext *oc=nullptr;

AVCodec *video_codec=nullptr;

AVDictionary *opt = nullptr;

int have_video=0;

int encode_video=0;

memset(&video_st,0,sizeof(OutputStream));

//use the current time as the video file name

QDateTime dateTime(QDateTime::currentDateTime());

//file name format: yyyy-MM-dd-hh-mm-ss, e.g. 2020-03-05-16-25-04.mp4

QByteArray VideoSavePath="";

VideoSavePath+=m_RecordConfig->VideoSavePath; //video save directory

VideoSavePath+="/";

VideoSavePath+=dateTime.toString("yyyy-MM-dd-hh-mm-ss");

VideoSavePath+=".mp4";

//final output address

QByteArray ffmpegOutPath;

//local recording

if(m_RecordConfig->Mode==0)

{

ret=avformat_alloc_output_context2(&oc,nullptr,nullptr,VideoSavePath.data()); //output to a file

if(ret!=0)

{

emit LogSend(-1,tr("Cannot open address: %1\n").arg(VideoSavePath.data()));

return ret;

}

emit LogSend(0,tr("Video save path: %1\n").arg(VideoSavePath.data()));

//if the file already exists

if(QFile(VideoSavePath).exists())

{

//delete the old file

QFile::remove(VideoSavePath);

}

ffmpegOutPath=VideoSavePath;

}

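//push stream

else if(m_RecordConfig->Mode==1)

{

ffmpegOutPath=m_RecordConfig->PushStreamAddr;

//allocate the output context for the push address too: RTMP servers expect the FLV container, RTSP uses FFmpeg's rtsp muxer

const char *FormatType=ffmpegOutPath.startsWith("rtsp") ? "rtsp" : "flv";

ret=avformat_alloc_output_context2(&oc,nullptr,FormatType,ffmpegOutPath.data());

if(ret<0)

{

emit LogSend(-1,tr("Cannot open address: %1\n").arg(ffmpegOutPath.data()));

return ret;

}

}

//unknown mode: no output context to encode into

if(oc==nullptr) return -1;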

//set the codecs

fmt=oc->oformat;

fmt->video_codec=AV_CODEC_ID_H264; //video codec: H.264

fmt->audio_codec=AV_CODEC_ID_AAC; //audio codec: AAC

//video only

if(m_RecordConfig->input_DataConf==0)

{

have_video = 1;

encode_video = 1;

//configure the video stream

ffmpeg_add_stream(&video_st,oc,&video_codec,fmt->video_codec);

}

//video + audio (added in a later part)

else if(m_RecordConfig->input_DataConf==1)

{

}

//open the video encoder with the configured parameters

if (have_video)

{

ffmpeg_open_video(video_codec,&video_st,opt);

}

//print the stream's encoding information

av_dump_format(oc, 0, ffmpegOutPath.data(), 1);

//open the output file

if(!(fmt->flags & AVFMT_NOFILE))

{

ret = avio_open(&oc->pb, ffmpegOutPath.data(), AVIO_FLAG_WRITE);

if (ret < 0)

{

emit LogSend(-1,tr("Cannot open stream: %1\n").arg(ffmpegOutPath.data()));

return ret;

}

}

//write the stream header

ret=avformat_write_header(oc,&opt);

if(ret<0)

{

emit LogSend(-1,tr("Error opening the output file: %1\n").arg(ffmpegOutPath.data()));

return ret;

}

//reset the pts counter

video_st.next_pts=0;

//encoding loop

while(m_run)

{

//write a video frame

ret=ffmpeg_write_video_frame(oc,&video_st);

if(ret<0)

{

break;

}

}

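//flush the encoder: it may still hold delayed frames, so pass nullptr until it stops returning packets

if(have_video)

{

int got_packet=1;

while(got_packet)

{

AVPacket pkt={};

av_init_packet(&pkt);

if(avcodec_encode_video2(video_st.enc,&pkt,nullptr,&got_packet)<0) break;

if(got_packet) ffmpeg_write_frame(oc,&video_st.enc->time_base,video_st.st,&pkt);

}

}

av_write_trailer(oc);

/*close the streams*/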

if (have_video)

ffmpeg_close_stream(&video_st);

if (!(fmt->flags & AVFMT_NOFILE))

avio_closep(&oc->pb);

avformat_free_context(oc);

emit LogSend(0,"Encoding thread exited...\n");

return ret;

}

//Store the configuration

ffmpeg_EncodeThread::ffmpeg_EncodeThread(RecordConfig *config)

{

m_RecordConfig=config;

}

//Thread entry point

void ffmpeg_EncodeThread::run()

{

//start encoding

StartEncode();

}
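In `ffmpeg_write_frame()`, `av_packet_rescale_ts()` converts pts, dts and duration from the encoder time base (`{1, video_frame}`) to the time base the muxer chose for the stream in `avformat_write_header()`. The arithmetic behind it is a rounded rational multiply; a plain-C++ sketch of that step, where the `Rational` struct and `rescale` helper are hypothetical stand-ins for `AVRational`/`av_rescale_q()` without overflow or `AV_NOPTS_VALUE` handling:

```cpp
#include <cassert>
#include <cstdint>

// Same shape as FFmpeg's AVRational {num, den}.
struct Rational { std::int64_t num, den; };

// ts * from / to, rounded to nearest with ties away from zero
// (the rounding av_rescale_q() uses). No overflow protection.
std::int64_t rescale(std::int64_t ts, Rational from, Rational to)
{
    const std::int64_t n = ts * from.num * to.den;
    const std::int64_t d = from.den * to.num;
    assert(d > 0);
    return n >= 0 ? (n + d / 2) / d : -((-n + d / 2) / d);
}
```

For example, at 30 fps frame 2 becomes 67 in the millisecond (1/1000) time base used by FLV. The MP4 muxer typically picks a finer time base than `1/fps`, which is why packets cannot simply keep the encoder's frame counter as their timestamp.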

5.4 Video capture thread (.h)

#ifndef VIDEOGETTHREAD_H

#define VIDEOGETTHREAD_H

#include "Config.h"

class VideoGetThread: public QThread

{

Q_OBJECT

public:

VideoGetThread(RecordConfig *config);

public slots:

void slotOnProbeFrame(const QVideoFrame &frame);

protected:

void run();

signals:

void LogSend(int err,QString text);

void VideoDataOutput(QImage); //output signal

private:

int Camear_Init();

QCamera *camera;

QVideoProbe *m_pProbe;

QEventLoop *loop;

struct RecordConfig *m_RecordConfig;

};

#endif // VIDEOGETTHREAD_H

5.5 Video capture thread (.cpp)

#include "VideoGetThread.h"

VideoGetThread::VideoGetThread(struct RecordConfig *config)

{

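//global configuration

m_RecordConfig=config;

//start from a known state, since run() tests these pointers before releasing them

camera=nullptr;

m_pProbe=nullptr;

loop=nullptr;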

}

//Thread entry point

void VideoGetThread::run()

{

int ret=-1;

loop=new QEventLoop;

//initialize the camera and start capturing

ret=Camear_Init();

if(ret!=0)

{

emit LogSend(-1,"Camera initialization error...\n");

goto RETURN;

}

//run the event loop

loop->exec();

RETURN:

loop->exit();

//release resources on exit

delete loop;

loop=nullptr;

if(camera)

{

camera->stop();

}

if(camera)

{

delete camera;

camera=nullptr;

}

if(m_pProbe)

{

delete m_pProbe;

m_pProbe=nullptr;

}

emit LogSend(0,"Camera capture thread exited...\n");

}

//Camera initialization

int VideoGetThread::Camear_Init()

{

/*Create the camera object for the selected camera*/

camera = new QCamera(m_RecordConfig->camera);

if(camera==nullptr)return -1;

m_pProbe = new QVideoProbe;

if(m_pProbe == nullptr)return -1;

m_pProbe->setSource(camera); // Returns true, hopefully.

connect(m_pProbe, SIGNAL(videoFrameProbed(QVideoFrame)),this, SLOT(slotOnProbeFrame(QVideoFrame)), Qt::QueuedConnection);

/*Set the camera capture mode to video capture*/

//camera->setCaptureMode(QCamera::CaptureStillImage);

camera->setCaptureMode(QCamera::CaptureVideo);

/*Start the camera*/

camera->start();

/*Set the capture resolution (the frame rate is not set here)*/

QCameraViewfinderSettings settings;

// settings.setPixelFormat(QVideoFrame::Format_YUYV); //set the pixel format; Android only supports NV21

//set the camera resolution

settings.setResolution(QSize(m_RecordConfig->ImageWidth,m_RecordConfig->ImageHeight));

camera->setViewfinderSettings(settings);

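//read back the current settings

//QCameraViewfinderSettings get_set=camera->viewfinderSettings();

//qDebug()<<"Max frame rate supported:"<

//qDebug()<<"Pixel formats supported:"<

//qDebug()<<"Current resolution:"<

//list the resolutions, frame rates, etc. supported by the camera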

#if 0

int i=0;

QList<QCameraViewfinderSettings > ViewSets = camera->supportedViewfinderSettings();

foreach (QCameraViewfinderSettings ViewSet, ViewSets)

{

qDebug() << i++ <<" max rate = " << ViewSet.maximumFrameRate() << "min rate = "<< ViewSet.minimumFrameRate() << "resolution "<<ViewSet.resolution()<<ViewSet.pixelFormat();

}

/* Output on my laptop:

0 max rate = 30 min rate = 30 resolution QSize(1280, 720) Format_Jpeg

1 max rate = 30 min rate = 30 resolution QSize(320, 180) Format_Jpeg

2 max rate = 30 min rate = 30 resolution QSize(320, 240) Format_Jpeg

3 max rate = 30 min rate = 30 resolution QSize(352, 288) Format_Jpeg

4 max rate = 30 min rate = 30 resolution QSize(424, 240) Format_Jpeg

5 max rate = 30 min rate = 30 resolution QSize(640, 360) Format_Jpeg

6 max rate = 30 min rate = 30 resolution QSize(640, 480) Format_Jpeg

7 max rate = 30 min rate = 30 resolution QSize(848, 480) Format_Jpeg

8 max rate = 30 min rate = 30 resolution QSize(960, 540) Format_Jpeg

9 max rate = 10 min rate = 10 resolution QSize(1280, 720) Format_YUYV

10 max rate = 10 min rate = 10 resolution QSize(1280, 720) Format_BGR24

11 max rate = 30 min rate = 30 resolution QSize(320, 180) Format_YUYV

12 max rate = 30 min rate = 30 resolution QSize(320, 180) Format_BGR24

13 max rate = 30 min rate = 30 resolution QSize(320, 240) Format_YUYV

14 max rate = 30 min rate = 30 resolution QSize(320, 240) Format_BGR24

15 max rate = 30 min rate = 30 resolution QSize(352, 288) Format_YUYV

16 max rate = 30 min rate = 30 resolution QSize(352, 288) Format_BGR24

17 max rate = 30 min rate = 30 resolution QSize(424, 240) Format_YUYV

18 max rate = 30 min rate = 30 resolution QSize(424, 240) Format_BGR24

19 max rate = 30 min rate = 30 resolution QSize(640, 360) Format_YUYV

20 max rate = 30 min rate = 30 resolution QSize(640, 360) Format_BGR24

21 max rate = 30 min rate = 30 resolution QSize(640, 480) Format_YUYV

22 max rate = 30 min rate = 30 resolution QSize(640, 480) Format_BGR24

23 max rate = 20 min rate = 20 resolution QSize(848, 480) Format_YUYV

24 max rate = 20 min rate = 20 resolution QSize(848, 480) Format_BGR24

25 max rate = 15 min rate = 15 resolution QSize(960, 540) Format_YUYV

26 max rate = 15 min rate = 15 resolution QSize(960, 540) Format_BGR24

*/

#endif

return 0;

}

void VideoGetThread::slotOnProbeFrame(const QVideoFrame &frame)

{

QVideoFrame cloneFrame(frame);

//map the frame for CPU read access; skip it if mapping fails

if(!cloneFrame.map(QAbstractVideoBuffer::ReadOnly)) return;

if(cloneFrame.pixelFormat()==QVideoFrame::Format_NV21)

{

NV21_TO_RGB24(cloneFrame.bits(),m_RecordConfig->rgb_buffer,cloneFrame.width(),cloneFrame.height());

}

else if(cloneFrame.pixelFormat()==QVideoFrame::Format_YUYV)

{

yuyv_to_rgb(cloneFrame.bits(),m_RecordConfig->rgb_buffer,cloneFrame.width(),cloneFrame.height());

}

else if(cloneFrame.pixelFormat()==QVideoFrame::Format_RGB24)

{

memcpy(m_RecordConfig->rgb_buffer,cloneFrame.bits(),m_RecordConfig->grb24_size);

}

else if(cloneFrame.pixelFormat()==QVideoFrame::Format_BGR24)

{

memcpy(m_RecordConfig->rgb_buffer,cloneFrame.bits(),m_RecordConfig->grb24_size);

}

else if(cloneFrame.pixelFormat()==QVideoFrame::Format_Jpeg)

{

}

else

{

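qDebug()<<"Unsupported pixel format:"<<cloneFrame.pixelFormat()<<"(no conversion implemented yet)";

//skip this frame instead of encoding a stale buffer

cloneFrame.unmap();

return;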

}

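//wrap the RGB data in a QImage; bytesPerLine is passed explicitly because width*3 is not always
//a multiple of 4, which the constructor without it requires

QImage image(m_RecordConfig->rgb_buffer,

cloneFrame.width(),

cloneFrame.height(),

cloneFrame.width()*3,

QImage::Format_RGB888);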

if(cloneFrame.pixelFormat()==QVideoFrame::Format_BGR24)

{

image=image.rgbSwapped(); //BGR to RGB

image=image.mirrored(false, true);

}

if(cloneFrame.pixelFormat()==QVideoFrame::Format_Jpeg)

{

image.loadFromData((const uchar *)cloneFrame.bits(),cloneFrame.mappedBytes());

}

//draw a timestamp watermark

QDateTime dateTime(QDateTime::currentDateTime());

//e.g. 2020-03-05 16:25:04 Thu (the weekday name follows the system locale)

QString qStr="";

qStr+=dateTime.toString("yyyy-MM-dd hh:mm:ss ddd");

QPainter pp(&image);

QPen pen = QPen(Qt::white);

pp.setPen(pen);

pp.fillRect(QRectF(0,0,300,25),QBrush(QColor(255,0,0,100)));

pp.drawText(QPointF(0,20),qStr);

//extract the RGB data

//this per-pixel loop is slow and inefficient; to be optimized later

unsigned char *p=m_RecordConfig->rgb_buffer;

for(int i=0;i<image.height();i++)

{

for(int j=0;j<image.width();j++)

{

QRgb rgb=image.pixel(j,i);

*p++=qRed(rgb);

*p++=qGreen(rgb);

*p++=qBlue(rgb);

}

}

//convert into the shared YUV420P buffer

m_RecordConfig->video_encode_mutex.lock();

RGB24_TO_YUV420(m_RecordConfig->rgb_buffer,image.width(),image.height(),m_RecordConfig->video_yuv420p_buff);

m_RecordConfig->video_encode_mutex.unlock();

m_RecordConfig->video_WaitConditon.wakeAll();

//pass the image to the UI

emit VideoDataOutput(image); //emit the signal

cloneFrame.unmap();

}
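The supported-format list printed in `Camear_Init()` shows this webcam delivering `Format_YUYV`, `Format_BGR24` and `Format_Jpeg`, and `slotOnProbeFrame()` hands YUYV frames to `yuyv_to_rgb()`. That helper lives in `Color_conversion.cpp`, which the article does not list. For illustration only, a minimal sketch of a YUYV-to-RGB888 conversion, assuming BT.601 limited-range input and the widely used integer coefficients; the function names are hypothetical and the author's implementation may differ:

```cpp
#include <cassert>
#include <cstdint>

static std::uint8_t clamp255(int v)
{
    return static_cast<std::uint8_t>(v < 0 ? 0 : (v > 255 ? 255 : v));
}

// One Y/U/V sample (BT.601, luma 16..235) -> R,G,B written to rgb[0..2].
static void yuvToRgb(int y, int u, int v, std::uint8_t *rgb)
{
    const int c = y - 16, d = u - 128, e = v - 128;
    rgb[0] = clamp255((298 * c + 409 * e + 128) / 256);
    rgb[1] = clamp255((298 * c - 100 * d - 208 * e + 128) / 256);
    rgb[2] = clamp255((298 * c + 516 * d + 128) / 256);
}

// YUYV (YUY2): every 4 bytes Y0 U Y1 V describe two horizontally adjacent
// pixels sharing one U/V pair. Output is packed RGB888, 3 bytes per pixel.
void yuyvToRgb24(const std::uint8_t *yuyv, std::uint8_t *rgb, int width, int height)
{
    assert(width % 2 == 0);
    const int pairs = width / 2 * height;
    for (int i = 0; i < pairs; ++i, yuyv += 4, rgb += 6) {
        yuvToRgb(yuyv[0], yuyv[1], yuyv[3], rgb);      // first pixel uses Y0
        yuvToRgb(yuyv[2], yuyv[1], yuyv[3], rgb + 3);  // second pixel uses Y1
    }
}
```

Each 4-byte group covers two pixels, which is why a YUYV frame is `width × height × 2` bytes and why the width has to be even.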

5.6 Main window logic (.cpp)

#include "widget.h"

#include "ui_widget.h"

Widget::Widget(QWidget *parent)

: QWidget(parent)

, ui(new Ui::Widget)

{

ui->setupUi(this);

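this->setWindowTitle("Qt+FFmpeg screen recording and streaming client");

//refresh the microphone and camera device lists

on_pushButton_UpdateDevInfo_clicked();

//configuration struct; value-initialized with () so the buffer pointers start as nullptr

m_RecordConfig=new struct RecordConfig();

//camera capture thread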

m_VideoGetThread=new VideoGetThread(m_RecordConfig);

//FFmpeg encoding thread

m_ffmpeg_EncodeThread=new ffmpeg_EncodeThread(m_RecordConfig);

if(m_VideoGetThread==nullptr || m_ffmpeg_EncodeThread==nullptr)

{

slot_LogDisplay(0,"Failed to create the threads...\n");

ui->pushButton_start->setEnabled(false);

ui->pushButton_stop->setEnabled(false);

return;

}

//route camera frames to the UI

connect(m_VideoGetThread,SIGNAL(VideoDataOutput(QImage )),ui->widget_video,SLOT(slotSetOneFrame(QImage)));

//connect the log signal

connect(m_VideoGetThread,SIGNAL(LogSend(int,QString)),this,SLOT(slot_LogDisplay(int,QString)));

//connect the log signal

connect(m_ffmpeg_EncodeThread,SIGNAL(LogSend(int,QString)),this,SLOT(slot_LogDisplay(int,QString)));

}

Widget::~Widget()

{

delete ui;

}

//Start (push stream or save to file)

void Widget::on_pushButton_start_clicked()

{

//1. determine the mode

//local recording

if(ui->radioButton_save_stream->isChecked())

{

m_RecordConfig->Mode=0;

slot_LogDisplay(0,QString("Mode: local recording\n"));

}

//push stream

else if(ui->radioButton_send_stream->isChecked())

{

m_RecordConfig->Mode=1;

slot_LogDisplay(0,QString("Mode: push stream\n"));

}

//default mode: local recording

else

{

m_RecordConfig->Mode=0;

slot_LogDisplay(0,QString("Mode: local recording\n"));

}

//2. parse the resolution

QString input_size=ui->comboBox_WxH->currentText();

if(input_size.contains("x",Qt::CaseSensitive))

{

int input_w=input_size.section('x',0,0).toInt();

int input_h=input_size.section('x',1,1).toInt();

if(input_w<=0||input_h<=0)

{

QMessageBox::warning(this,"Notice","Please enter the resolution as WIDTHxHEIGHT!",QMessageBox::Ok,QMessageBox::Ok);

return;

}

else

{

//make sure width and height are multiples of 2

while(input_w%2!=0)

{

input_w++;

}

while(input_h%2!=0)

{

input_h++;

}

slot_LogDisplay(0,QString("Resolution: %1x%2\n").arg(input_w).arg(input_h));

m_RecordConfig->ImageWidth=input_w;

m_RecordConfig->ImageHeight=input_h;

}

}

else

{

QMessageBox::warning(this,"Notice","Please enter the resolution as WIDTHxHEIGHT!",QMessageBox::Ok,QMessageBox::Ok);

return;

}

//3. compute the RGB24 size and allocate the buffer

m_RecordConfig->grb24_size=m_RecordConfig->ImageWidth*m_RecordConfig->ImageHeight*3;

m_RecordConfig->rgb_buffer=(unsigned char *)malloc(m_RecordConfig->grb24_size);

if(m_RecordConfig->rgb_buffer==nullptr)

{

QMessageBox::warning(this,"Notice","Failed to allocate the RGB24 buffer!",QMessageBox::Ok,QMessageBox::Ok);

return;

}

//4. compute the YUV420P size and allocate the buffers

m_RecordConfig->yuv420p_size=m_RecordConfig->ImageWidth*m_RecordConfig->ImageHeight*3/2;

m_RecordConfig->video_yuv420p_buff=(unsigned char *)malloc(m_RecordConfig->yuv420p_size);

m_RecordConfig->video_yuv420p_buff_temp=(unsigned char *)malloc(m_RecordConfig->yuv420p_size);

if(m_RecordConfig->video_yuv420p_buff==nullptr ||

m_RecordConfig->video_yuv420p_buff_temp==nullptr)

{

QMessageBox::warning(this,"Notice","Failed to allocate the YUV420P buffers!",QMessageBox::Ok,QMessageBox::Ok);

return;

}

//5. configure the image source

//camera

if(ui->comboBox_video_input->currentIndex()==0)

{

//source: camera

m_RecordConfig->ImageInptSrc=0;

if(video_dev_list.size()<=0)

{

QMessageBox::warning(this,"Notice","No camera available!",QMessageBox::Ok,QMessageBox::Ok);

return;

}

//get the selected camera

m_RecordConfig->camera=video_dev_list.at(ui->comboBox_video_list->currentIndex());

slot_LogDisplay(0,QString("Image source: camera\n"));

}

//desktop

else if(ui->comboBox_video_input->currentIndex()==1)

{

//source: desktop

m_RecordConfig->ImageInptSrc=1;

//desktop (screen) index

m_RecordConfig->desktop_page=ui->spinBox_DesktopPage->value();

slot_LogDisplay(0,QString("Image source: desktop\n"));

}

//6. configure the audio source

//if video + audio is selected

if(ui->comboBox_data_conf->currentIndex()==1)

{

//video + audio required

m_RecordConfig->input_DataConf=1;

slot_LogDisplay(0,QString("Capture: video + audio\n"));

//microphone

if(ui->comboBox_audio_input->currentIndex()==0)

{

}

//speaker

else if(ui->comboBox_audio_input->currentIndex()==1)

{

}

//microphone + speaker

else if(ui->comboBox_audio_input->currentIndex()==2)

{

}

if(audio_dev_list.size()<=0)

{

QMessageBox::warning(this,"Notice","No audio device available!",QMessageBox::Ok,QMessageBox::Ok);

return;

}

}

else

{

//video only

m_RecordConfig->input_DataConf=0;

slot_LogDisplay(0,QString("Capture: video only\n"));

}

//7. configure the bit rate

m_RecordConfig->video_bit_rate=ui->comboBox_rate->currentText().toInt();

if(m_RecordConfig->video_bit_rate<1000)

{

QMessageBox::warning(this,"Notice","Invalid bit rate setting!",QMessageBox::Ok,QMessageBox::Ok);

return;

}

slot_LogDisplay(0,QString("Bit rate: %1\n").arg(m_RecordConfig->video_bit_rate));

//8. configure the frame rate

m_RecordConfig->video_frame=ui->spinBox_framCnt->value();

if(m_RecordConfig->video_frame<10)

{

QMessageBox::warning(this,"Notice","Invalid frame rate setting!",QMessageBox::Ok,QMessageBox::Ok);

return;

}

slot_LogDisplay(0,QString("Frame rate: %1\n").arg(m_RecordConfig->video_frame));

//9. push address

m_RecordConfig->PushStreamAddr=ui->lineEdit_PushStreamAddr->text().toUtf8();

//if push mode is selected

if(m_RecordConfig->Mode==1)

{

if(m_RecordConfig->PushStreamAddr.isEmpty())

{

QMessageBox::warning(this,"Notice","Push address not entered!",QMessageBox::Ok,QMessageBox::Ok);

return;

}

else

{

//check that the address starts with a supported scheme

if(m_RecordConfig->PushStreamAddr.startsWith("rtsp://")||

m_RecordConfig->PushStreamAddr.startsWith("rtmp://"))

{

//valid

slot_LogDisplay(0,QString("Push address: %1\n").arg(m_RecordConfig->PushStreamAddr.data()));

}

else

{

QMessageBox::warning(this,"Notice","Invalid push address format!",QMessageBox::Ok,QMessageBox::Ok);

return;

}

}

}

//10. video save directory

m_RecordConfig->VideoSavePath=ui->lineEdit_VideoSavePath->text();

//if recording locally

if(m_RecordConfig->Mode==0)

{

if(m_RecordConfig->VideoSavePath.isEmpty())

{

QMessageBox::warning(this,"Notice","No video save directory selected!",QMessageBox::Ok,QMessageBox::Ok);

return;

}

else

{

//if the directory does not exist

if(!QDir(m_RecordConfig->VideoSavePath).exists())

{

QMessageBox::warning(this,"Notice","The video save directory does not exist!",QMessageBox::Ok,QMessageBox::Ok);

return;

}

}

}

//11. start the capture thread

//if the image source is the camera

if(m_RecordConfig->ImageInptSrc==0)

{

//start the video capture thread

m_VideoGetThread->start();

}

//start the encoding thread

m_ffmpeg_EncodeThread->m_run=1;

m_ffmpeg_EncodeThread->start();

//disable Start to prevent repeated clicks

ui->pushButton_start->setEnabled(false);

}

//Stop (push stream or save to file)

void Widget::on_pushButton_stop_clicked()

{

//re-enable the Start button

ui->pushButton_start->setEnabled(true);

//stop the video capture thread

if(m_VideoGetThread->isRunning())

{

qDebug()<<"Video capture thread is running....";

m_VideoGetThread->quit();

m_VideoGetThread->wait();

}

//stop the encoding thread

if(m_ffmpeg_EncodeThread->isRunning())

{

qDebug()<<"Encoding thread is running....";

m_ffmpeg_EncodeThread->m_run=0;

m_RecordConfig->video_WaitConditon.wakeAll();

m_ffmpeg_EncodeThread->quit();

m_ffmpeg_EncodeThread->wait();

}

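//free the RGB24 and YUV buffers, resetting the pointers so a second Stop click does not free them twice

free(m_RecordConfig->video_yuv420p_buff);

m_RecordConfig->video_yuv420p_buff=nullptr;

free(m_RecordConfig->video_yuv420p_buff_temp);

m_RecordConfig->video_yuv420p_buff_temp=nullptr;

free(m_RecordConfig->rgb_buffer);

m_RecordConfig->rgb_buffer=nullptr;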

}

//Refresh the device lists

void Widget::on_pushButton_UpdateDevInfo_clicked()

{

QComboBox *comboBox_audio=ui->comboBox_audio_list;

QComboBox *comboBox_video=ui->comboBox_video_list;

/*1. get the sound card device list*/

audio_dev_list.clear();

comboBox_audio->clear();

foreach(const QAudioDeviceInfo &deviceInfo, QAudioDeviceInfo::availableDevices(QAudio::AudioOutput))

{

audio_dev_list.append(deviceInfo);

comboBox_audio->addItem(deviceInfo.deviceName());

}

/*2. get the camera list*/

video_dev_list.clear();

comboBox_video->clear();

video_dev_list=QCameraInfo::availableCameras();

for(int i=0;i<video_dev_list.size();i++)

{

comboBox_video->addItem(video_dev_list.at(i).deviceName());

}

//no microphone device

if(audio_dev_list.size()==0)

{

slot_LogDisplay(0,"No usable microphone device found.\n");

}

//no camera device

if(video_dev_list.size()==0)

{

slot_LogDisplay(0,"No usable camera found.\n");

}

}

//Log display

//int err: status value, for special handling

//QString text: log text

void Widget::slot_LogDisplay(int err,QString text)

{

ui->plainTextEdit_log->insertPlainText(text);

//scroll to the bottom

QScrollBar *scrollbar = ui->plainTextEdit_log->verticalScrollBar();

if(scrollbar)

{

scrollbar->setSliderPosition(scrollbar->maximum());

}

}

//Window close event

void Widget::closeEvent(QCloseEvent *event)

{

//stop the capture and encoding threads

if(!ui->pushButton_start->isEnabled())

{

on_pushButton_stop_clicked();

}

//release the remaining objects

delete m_VideoGetThread;

delete m_ffmpeg_EncodeThread;

delete m_RecordConfig;

event->accept(); //accept the event

}

//Image input source changed

void Widget::on_comboBox_video_input_activated(int index)

{

//camera

if(index==0)

{

//adjust the default resolution and bit rate

ui->comboBox_rate->setCurrentIndex(0);

ui->comboBox_WxH->setCurrentIndex(0);

}

//desktop

else if(index==1)

{

//adjust the default resolution and bit rate

ui->comboBox_rate->setCurrentIndex(1);

ui->comboBox_WxH->setCurrentIndex(1);

}

}

//Choose the video save directory

void Widget::on_pushButton_selectPath_clicked()

{

QString path_dir="./";

QString inpt_path=ui->lineEdit_VideoSavePath->text();

//the system's movies directory

QStringList path_list=QStandardPaths::standardLocations(QStandardPaths::MoviesLocation);

//if empty

if(inpt_path.isEmpty())

{

if(path_list.size()>0)

{

path_dir=path_list.at(0);

}

}

else

{

//if the path does not exist

if(!QDir(inpt_path).exists())

{

if(path_list.size()>0)

{

path_dir=path_list.at(0);

}

}

else

{

//use the path the user entered earlier

path_dir=inpt_path;

}

}

QString dir=QFileDialog::getExistingDirectory(this,"Select video save directory",path_dir,

QFileDialog::ShowDirsOnly| QFileDialog::DontResolveSymlinks);

//if not empty

if(!dir.isEmpty())

{

ui->lineEdit_VideoSavePath->setText(dir);

}

}

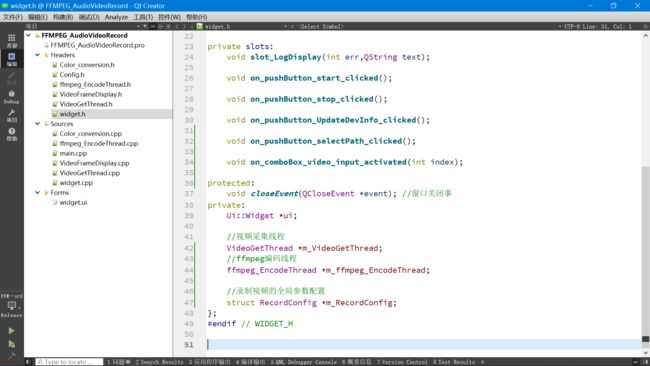
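`on_pushButton_start_clicked()` parses the `WIDTHxHEIGHT` text and bumps odd values up to the next even number with a `while` loop, because YUV420P needs even dimensions. The same rule as a standalone sketch in standard C++; the helper `parseEvenSize` is hypothetical, and `std::atoi` is more lenient than `QString::toInt()`, which returns 0 for text with trailing garbage:

```cpp
#include <cstdlib>
#include <string>

// Parse "WIDTHxHEIGHT" (e.g. "1280x720") and round each side up to an even
// number. Returns false if the separator is missing or a side is not positive.
bool parseEvenSize(const std::string &text, int &width, int &height)
{
    const std::size_t x = text.find('x');
    if (x == std::string::npos) return false;
    const int w = std::atoi(text.substr(0, x).c_str());
    const int h = std::atoi(text.substr(x + 1).c_str());
    if (w <= 0 || h <= 0) return false;
    width = (w + 1) & ~1;   // same result as: while (w % 2 != 0) w++;
    height = (h + 1) & ~1;
    return true;
}
```

Clearing the lowest bit after adding one leaves even values unchanged and rounds odd values up, so 641x481 becomes 642x482.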

5.7 Main window logic (.h)

#ifndef WIDGET_H

#define WIDGET_H

#include "Config.h"

#include "VideoGetThread.h"

#include "VideoFrameDisplay.h"

#include "ffmpeg_EncodeThread.h"

QT_BEGIN_NAMESPACE

namespace Ui { class Widget; }

QT_END_NAMESPACE

class Widget : public QWidget

{

Q_OBJECT

public:

Widget(QWidget *parent = nullptr);

~Widget();

QList<QAudioDeviceInfo> audio_dev_list;

QList<QCameraInfo> video_dev_list;

private slots:

void slot_LogDisplay(int err,QString text);

void on_pushButton_start_clicked();

void on_pushButton_stop_clicked();

void on_pushButton_UpdateDevInfo_clicked();

void on_pushButton_selectPath_clicked();

void on_comboBox_video_input_activated(int index);

protected:

void closeEvent(QCloseEvent *event); //window close event

private:

Ui::Widget *ui;

//video capture thread

VideoGetThread *m_VideoGetThread;

//FFmpeg encoding thread

ffmpeg_EncodeThread *m_ffmpeg_EncodeThread;

//global recording configuration

struct RecordConfig *m_RecordConfig;

};

#endif // WIDGET_H

5.8 .pro project file

QT += core gui

QT += multimedia

greaterThan(QT_MAJOR_VERSION, 4): QT += widgets

CONFIG += c++11

# The following define makes your compiler emit warnings if you use

# any Qt feature that has been marked deprecated (the exact warnings

# depend on your compiler). Please consult the documentation of the

# deprecated API in order to know how to port your code away from it.

DEFINES += QT_DEPRECATED_WARNINGS

# You can also make your code fail to compile if it uses deprecated APIs.

# In order to do so, uncomment the following line.

# You can also select to disable deprecated APIs only up to a certain version of Qt.

#DEFINES += QT_DISABLE_DEPRECATED_BEFORE=0x060000 # disables all the APIs deprecated before Qt 6.0.0

SOURCES += \

Color_conversion.cpp \

VideoFrameDisplay.cpp \

VideoGetThread.cpp \

ffmpeg_EncodeThread.cpp \

main.cpp \

widget.cpp

HEADERS += \

Color_conversion.h \

Config.h \

VideoFrameDisplay.h \

VideoGetThread.h \

ffmpeg_EncodeThread.h \

widget.h

FORMS += \

widget.ui

# Default rules for deployment.

qnx: target.path = /tmp/$${TARGET}/bin

else: unix:!android: target.path = /opt/$${TARGET}/bin

!isEmpty(target.path): INSTALLS += target

RC_ICONS=logo.ico

win32 {

message('Building the win32 version')

INCLUDEPATH+=C:/FFMPEG/ffmpeg_x86_4.2.2/include

LIBS+=C:/FFMPEG/ffmpeg_x86_4.2.2/bin/av*

LIBS+=C:/FFMPEG/ffmpeg_x86_4.2.2/bin/sw*

LIBS+=C:/FFMPEG/ffmpeg_x86_4.2.2/bin/pos*

}