自然语言处理NLP文本分类顶会论文阅读笔记(一)

笔记目录

- 关于Transformer

- 小样本学习

- BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

- SKEP: Sentiment Knowledge Enhanced Pre-training for Sentiment Analysis

- Leveraging Graph to Improve Abstractive Multi-Document Summarization

- Context-Guided BERT for Targeted Aspect-Based Sentiment Analysis

- Does syntax matter? A strong baseline for Aspect-based Sentiment Analysis with RoBERTa

-

- Perturbed Masking: Parameter-free Probing for Analyzing and Interpreting BERT

- COSFORMER : RETHINKING SOFTMAX IN ATTENTION

- Label Confusion Learning to Enhance Text Classification Models

- MASKER: Masked Keyword Regularization for Reliable Text Classification

-

- Convolutional Neural Networks for Sentence Classification

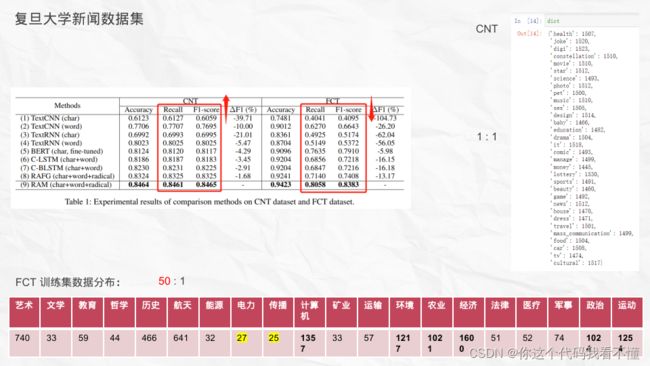

- Ideography Leads Us to the Field of Cognition: A Radical-Guided Associative Model for Chinese Text Classification

- ACT: an Attentive Convolutional Transformer for Efficient Text Classification

- Merging Statistical Feature via Adaptive Gate for Improved Text Classification

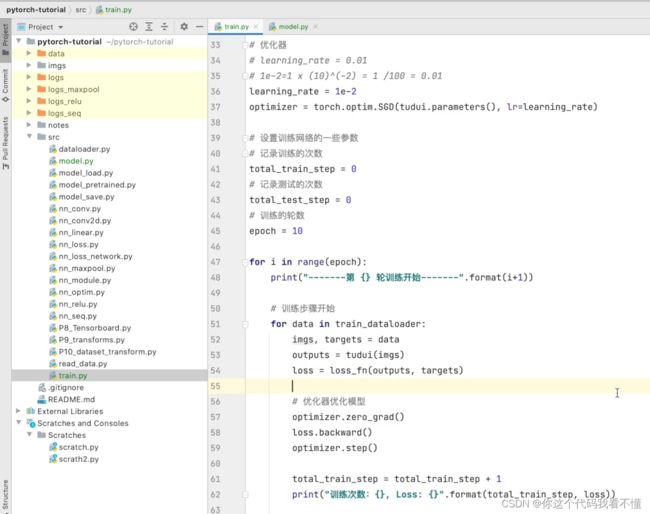

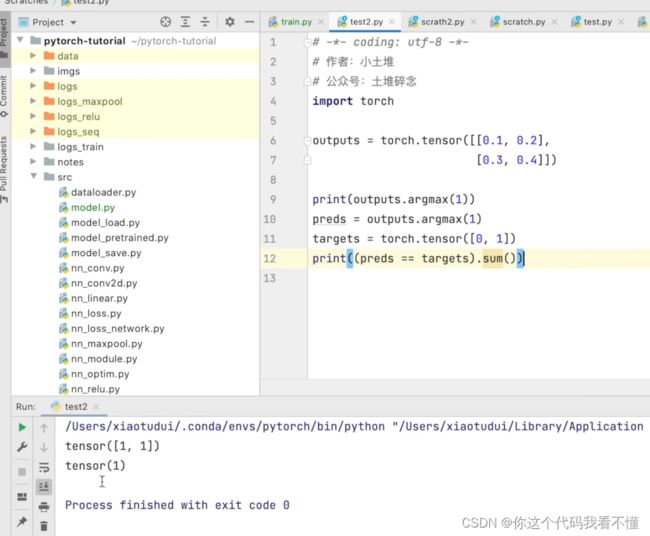

- Pytorch实战

-

- 初识Pytorch

- 初识词表

- 词嵌入

- TextCNN-Pytorch代码

- 简单CNN二分类Pytorch实现

- 杂记

-

- 自定义损失函数踩坑

- 批归一化

- Con1d和Conv2d

- 膨胀卷积

- 稀疏Attention

- Prompt Learning初探

- 固定bert参数的方法

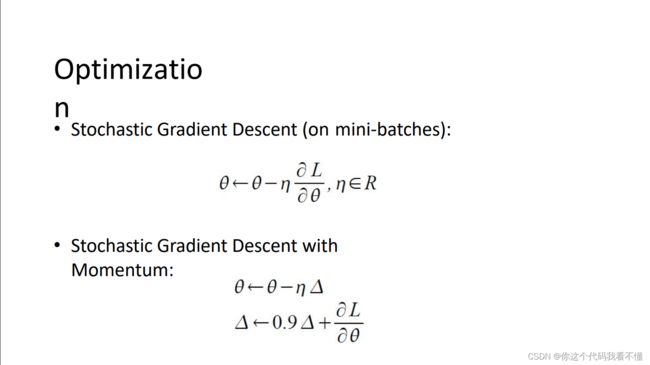

- 手动反向传播和优化器

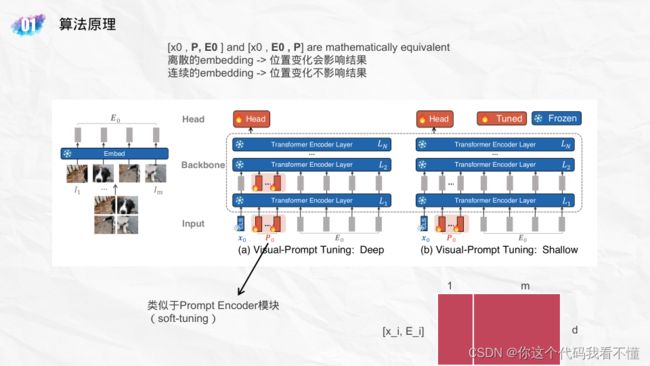

- Visual Prompt Tuning (VPT)

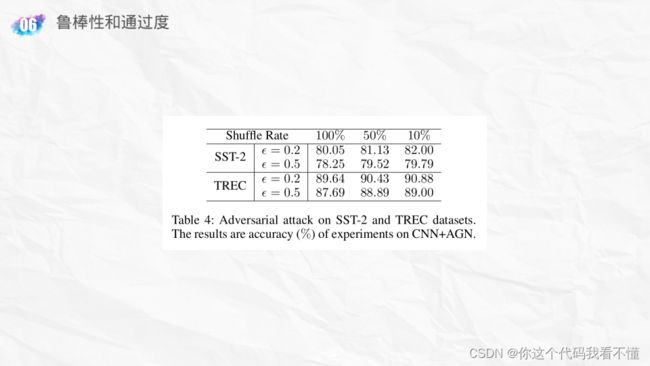

- Adversarial Multi-task Learning for Text Classification

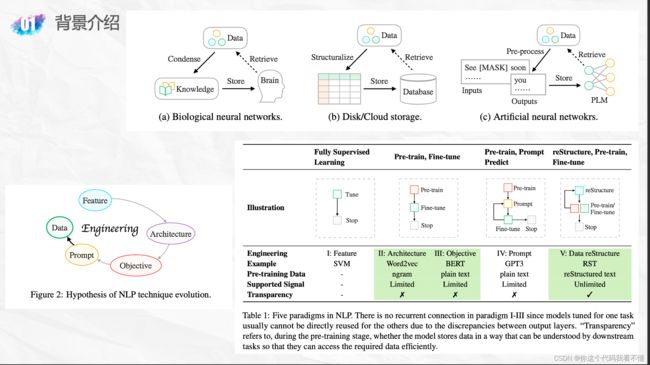

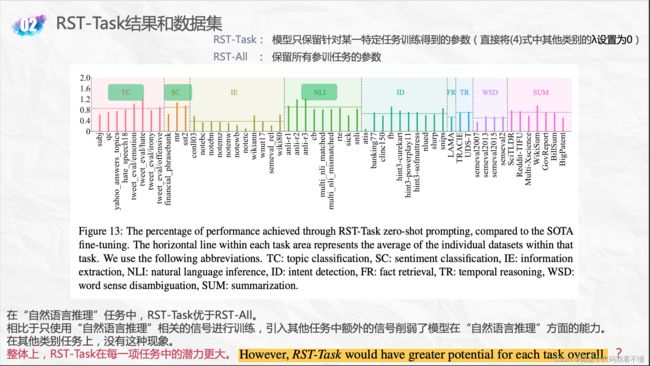

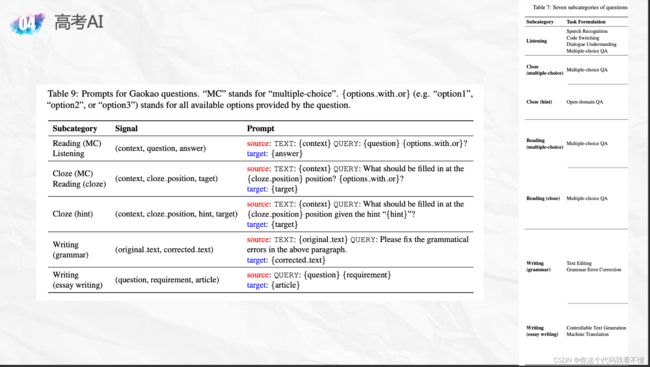

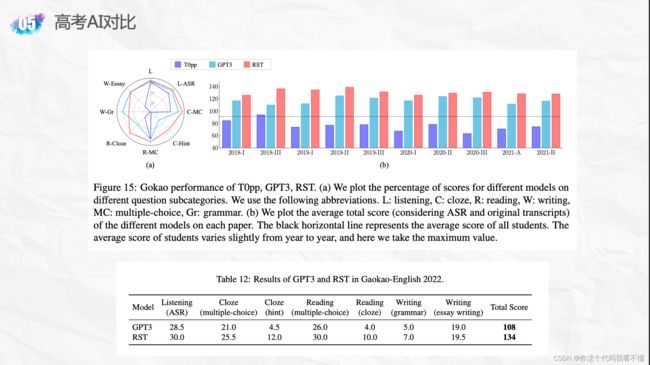

- reStructured Pre-training

- Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer「谷歌:T5」

关于Transformer

-

transformer在大的数据集上表现更好。

-> BERT模型,在大数据集上进行预训练得到语言模型结果。 -

vi-T是对顺序不敏感的,因此用固定的位置编码对输入进行补充。

-> 那么为什么Transformer会对位置信息不敏感呢?输入和输出不也是按照一定序列排好的吗?

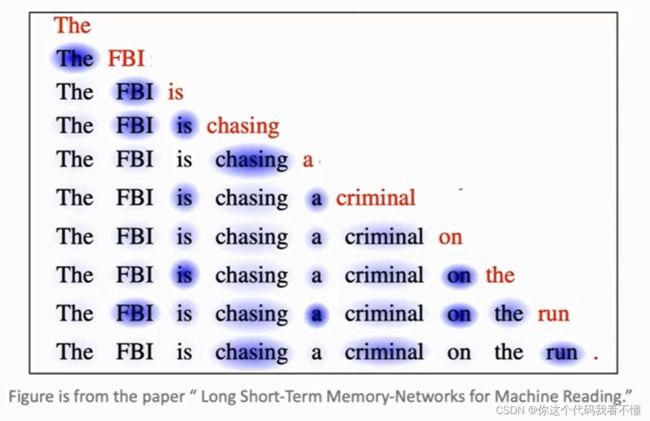

-> 回忆一下,encoder self-attention机制,在tokens序列中,后面的token包含有前面token的语义信息,而前面的token同样是包含有后面token的信息的,并不像simpleRNN一样是从左向右依次提取。那么这样将会导致序列提取出来的信息“包罗万象”,比如在“我爱你”这句话某一层的提取结果中,每一个位置上的token都会叠加其余位置上token的信息,经过多个自注意力层提取之后,原始输入“我爱你”和“你爱我”这两句话对应的特征序列理应是不容易区分开的,然而这两句话的现实涵义则是完全不同的。

-> 疑惑:RNN有长文本遗忘的问题,对于长文本,语句双向的涵义叠加起来看起来似乎合理,可以解决问题;但对于短文本,双向RNN会不会也有和Transformer同样的问题,即混淆序列中token的位置信息?

[token之间的相关性;K、Q (token*W) 之间的相似性] -

transformer N维序列的输入[x]对应N维序列的输出[c],RNN里边可以只保留最后一个状态向量 h i h_i hi,而transformer必须全部保留,因为参数不共享(多头自注意力机制那里也不共享参数)。

-> 猜测:考虑到句子中每个位置上不同单词出现的频数不同,因此不共享参数可能可以达到更好的效果(多头自注意力机制则更好理解了,如果共享了参数那么也没有其存在的必要了)。

- Transformer在训练阶段的解码器部分,为了防止自注意力偷窥到预测单词之后的序列,采用mask方法。

- 有个问题,mask掉的到底是什么?参考了知乎上的回答,虽然可以自圆其说,但整体理解上面还是有些模棱两可:

小样本学习

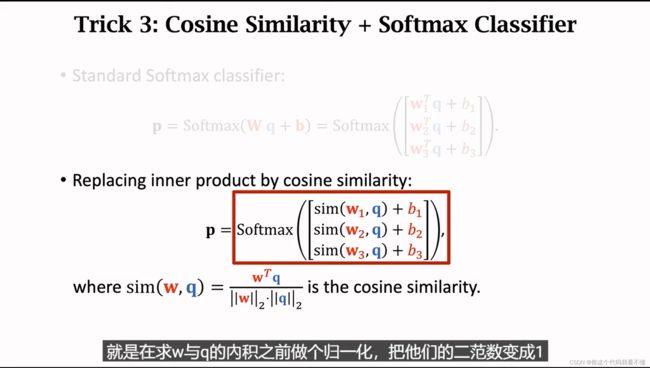

- 对Transformer求Q和K的相关度时,也可以做此改进。

- 从K、Q、V入手,看看是否能对Transformer模型改进。

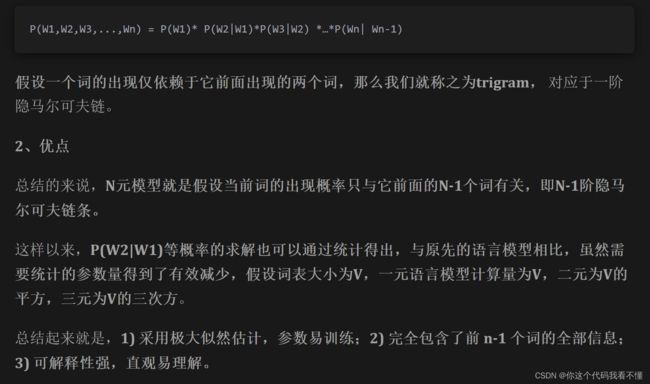

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

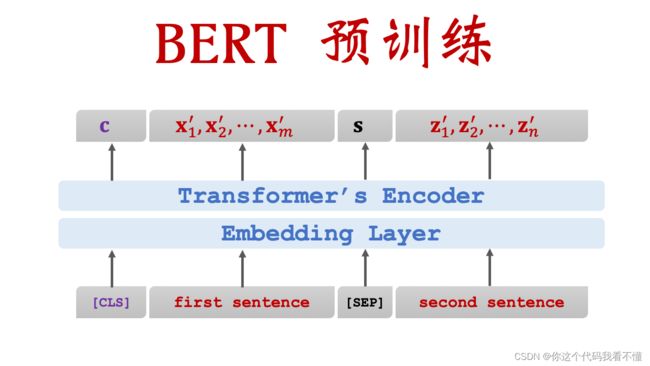

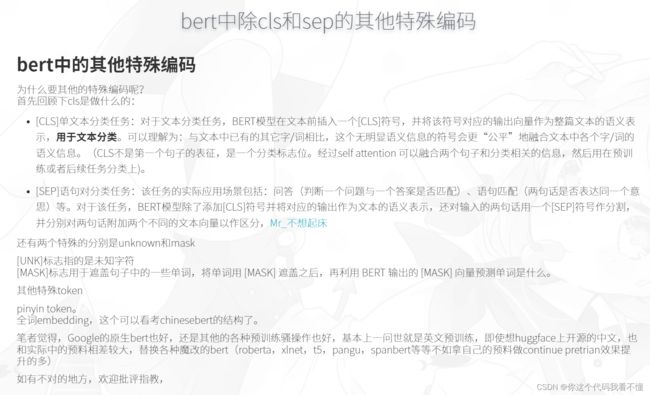

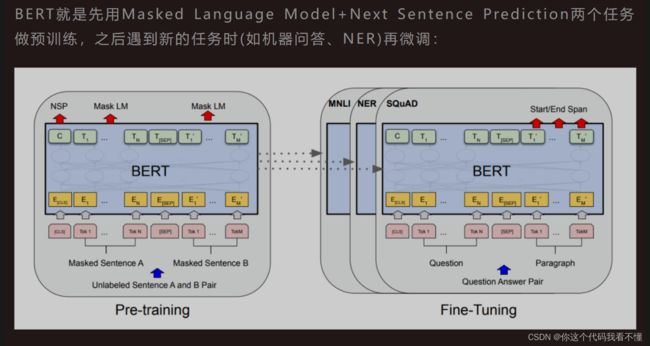

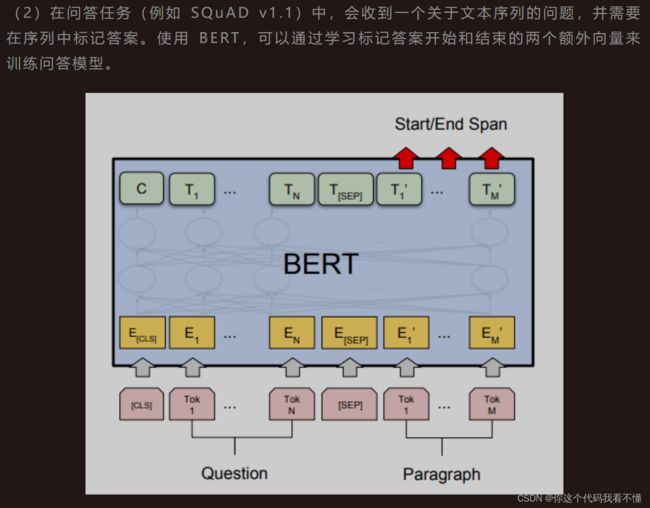

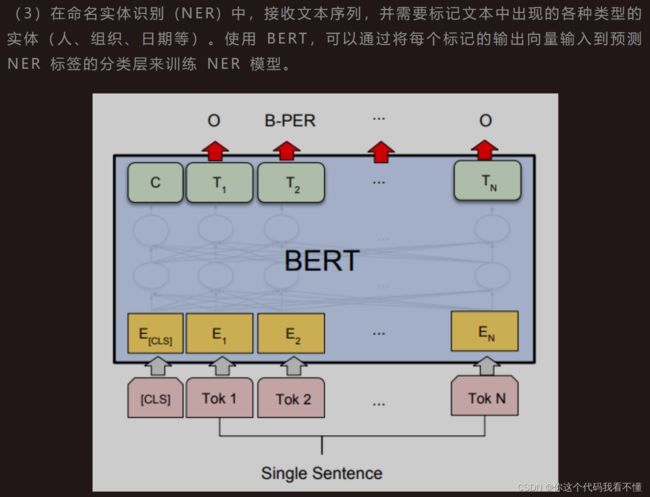

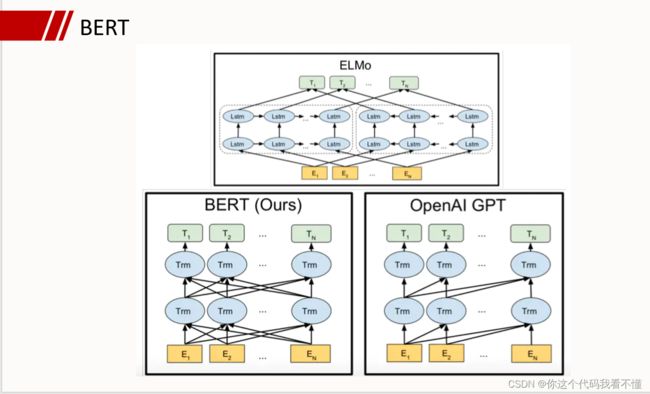

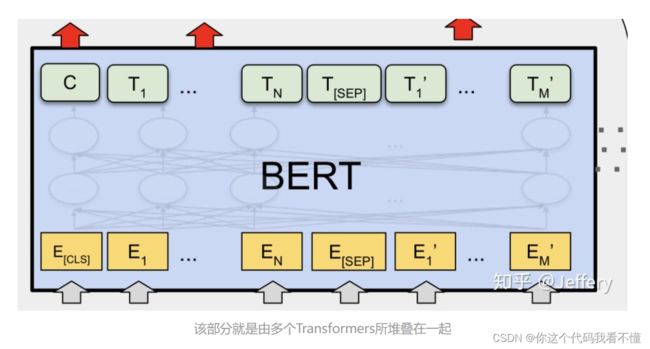

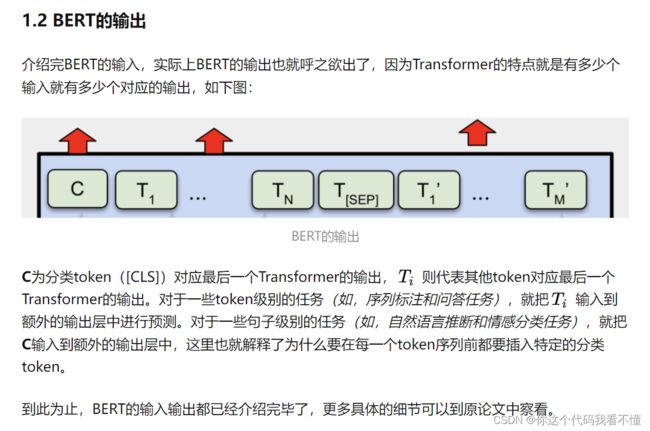

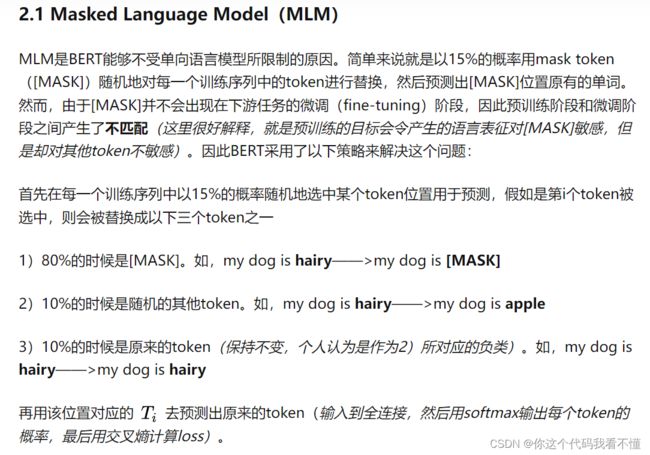

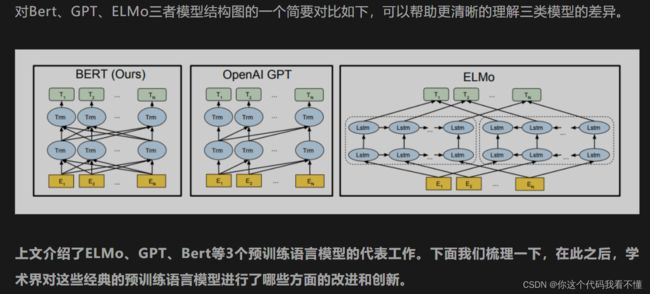

- Transformer的decoder部分为单向模型,因为文本生成需要从左向右依次预测下一个位置上的字母;而encoder部分则为双向模型,因为每一个位置上的token都整合了其余位置上所有token的信息。概括来说,BERT模型是深度堆叠的Transformer,并且只利用了其中encoder部分的模型。

https://zhuanlan.zhihu.com/p/98855346

https://www.cnblogs.com/gaowenxingxing/p/15005130.html,博客园

https://www.zhihu.com/question/425387974,知乎问答

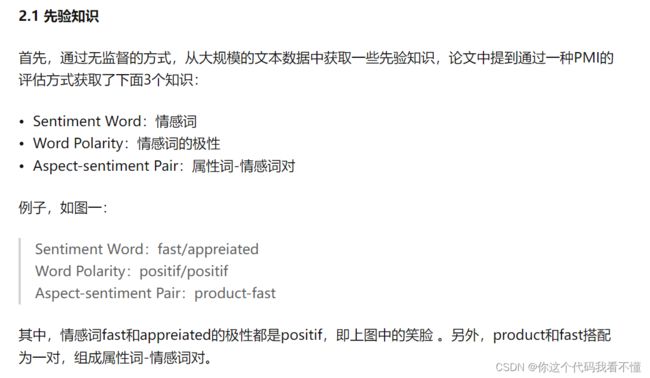

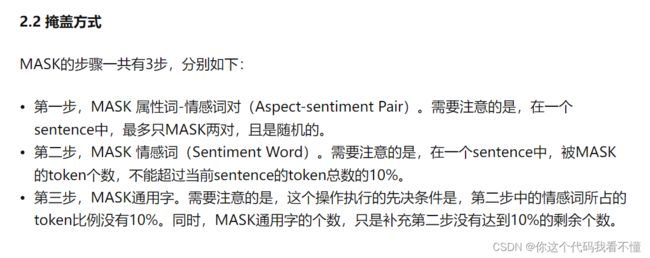

SKEP: Sentiment Knowledge Enhanced Pre-training for Sentiment Analysis

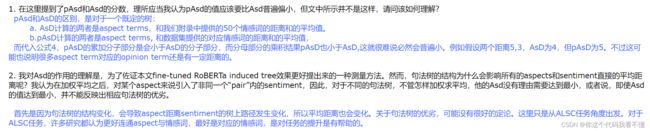

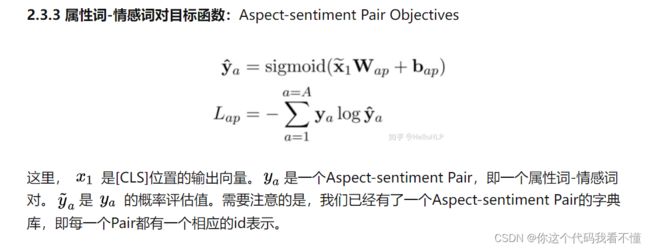

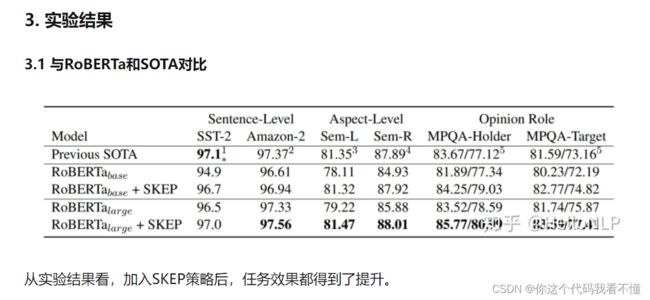

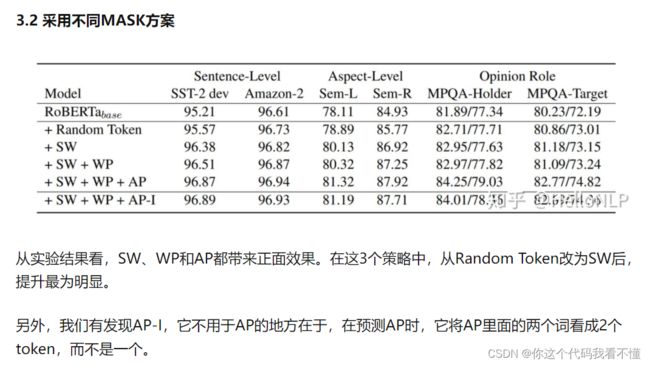

- 上述提到的情感词的极性,可以理解为给定词语蕴涵积极或消极的意义。那么如何理解以上三类先验知识呢?首先,为了让特征提取器对情感词更敏感,需要在预训练阶段接受情感词的特征信息。其次,模型知道一个词是不是情感词之后,还需要知道这个情感词的意义是积极的还是消极的。另外,假设一条影评“电影很好看,但是爆米花不好吃”,我们该如何做情感分析呢?文中引入了属性词-情感词对的说法,在这个例子中就是“电影-好看”、“爆米花-不好吃”,强化了属性与其对应的情感词之间的联系,而弱化了不相关的词间联系。通过消融实验发现这三种做法确实能够提高模型解释力。

- Reference:https://zhuanlan.zhihu.com/p/267837817

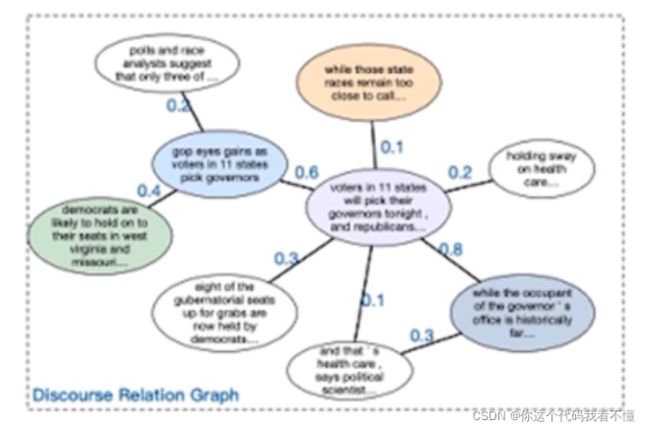

Leveraging Graph to Improve Abstractive Multi-Document Summarization

- key words: 文本生成和摘要、多文档输入、图神经网络

相关背景:

之前的许多模型诸如BERT,RoBERTa等等,均会限制输入的token数量不能超过512个。

利用本文提出的图模型,在BERT和预训练语言模型上进行改进,可以突破序列化结构对输入长度的限制,处理多文档的输入。

![]()

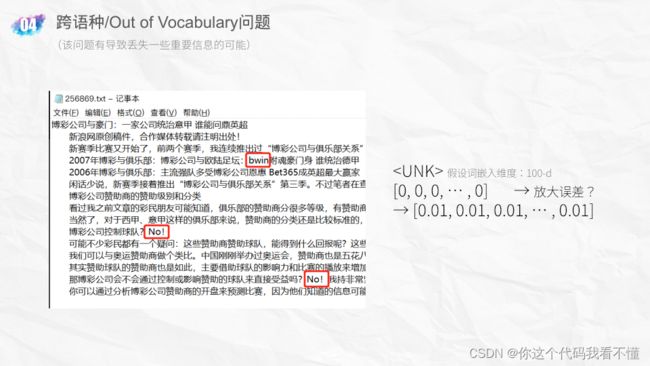

[CLS]: classifier, [SEP]: separator , [UNK]: unknow

- 本文的核心点在于使用图网络对段落间的相关度进行了测量,然后将这一权重作为全局注意力(global attention)进行计算,将预测出的第t个token与某一段中的第i个token之间的相关度作为局部注意力(local attention)进行计算,注意这个local attention通过global attention指导计算得到。

- 部分参考:https://blog.csdn.net/Suyebiubiu/article/details/106797601

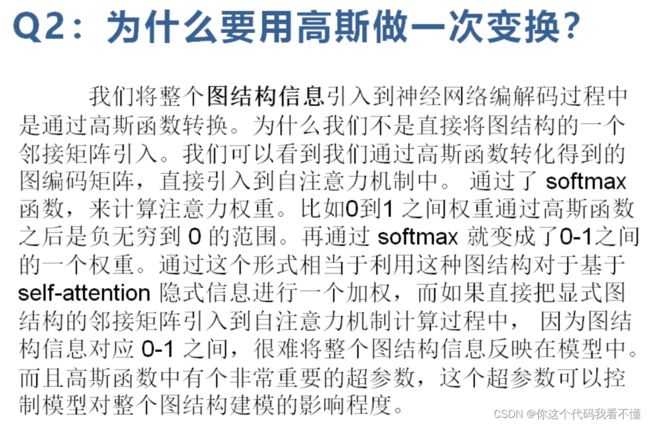

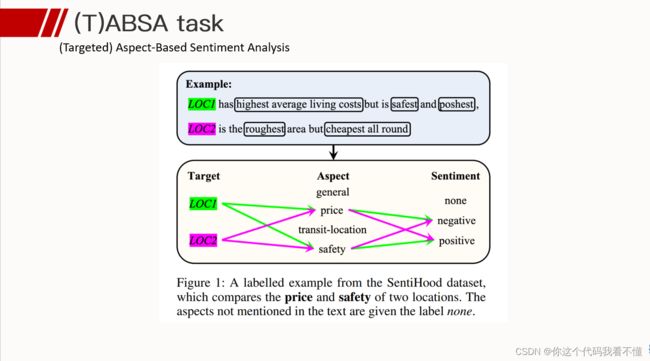

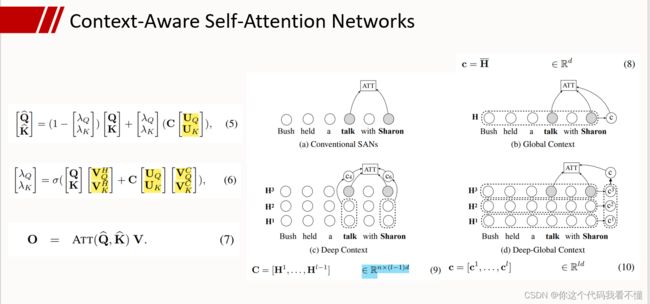

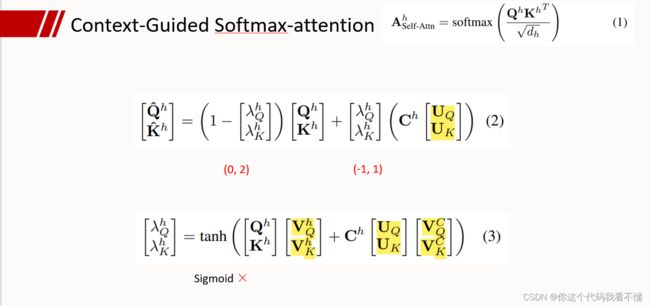

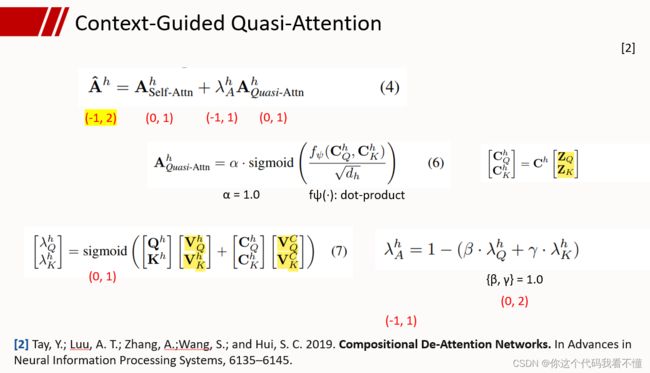

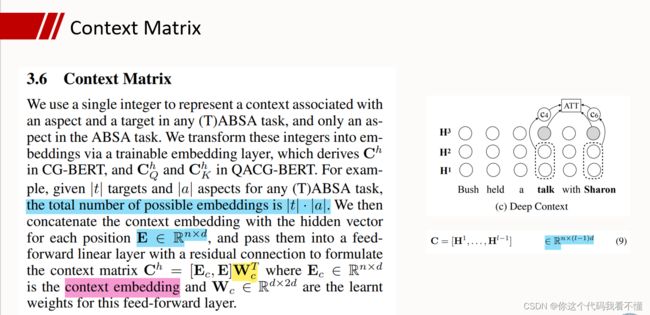

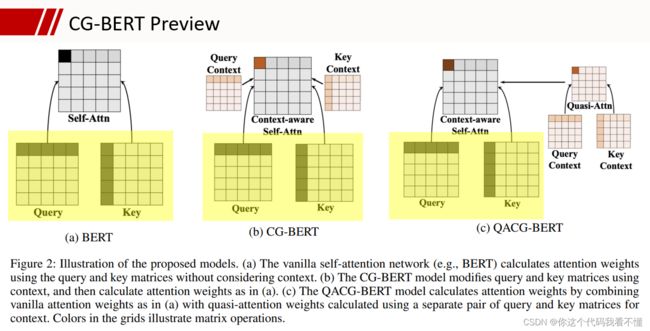

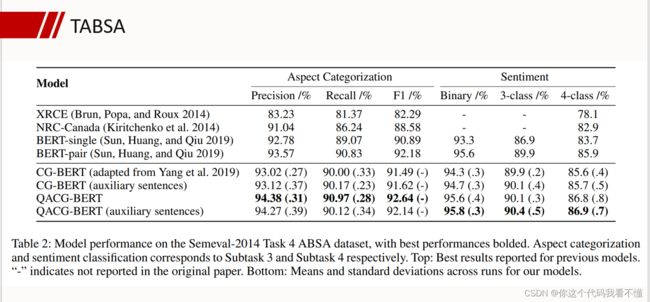

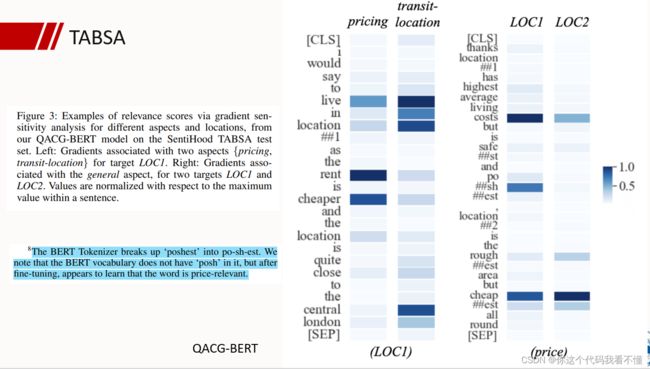

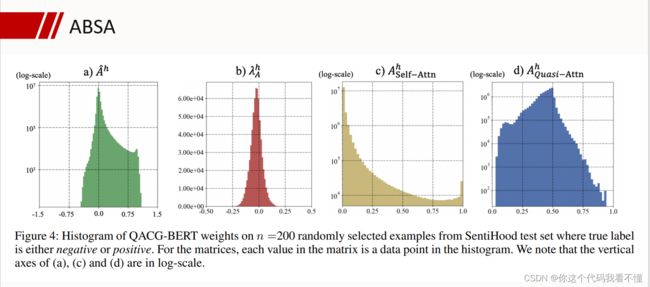

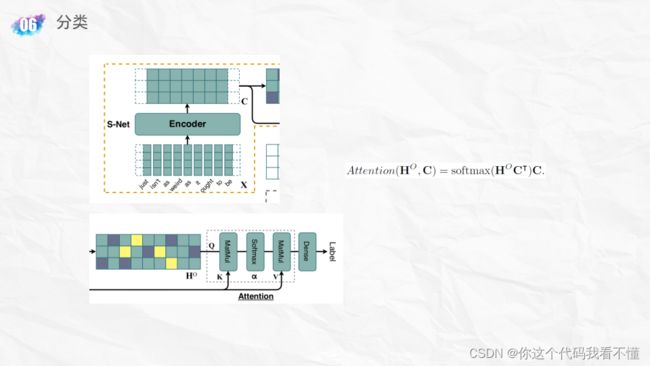

Context-Guided BERT for Targeted Aspect-Based Sentiment Analysis

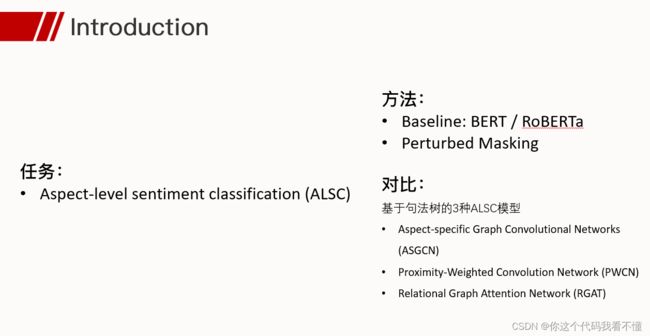

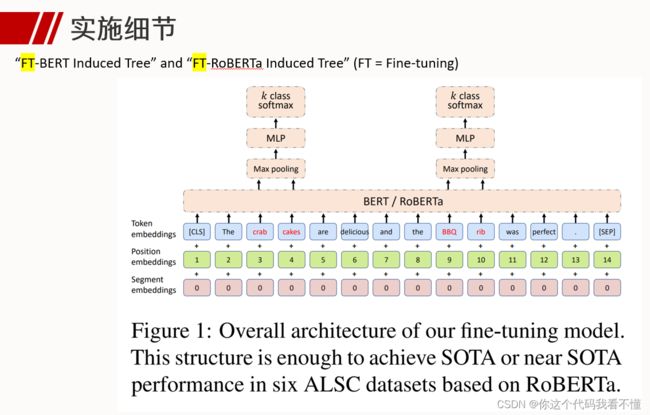

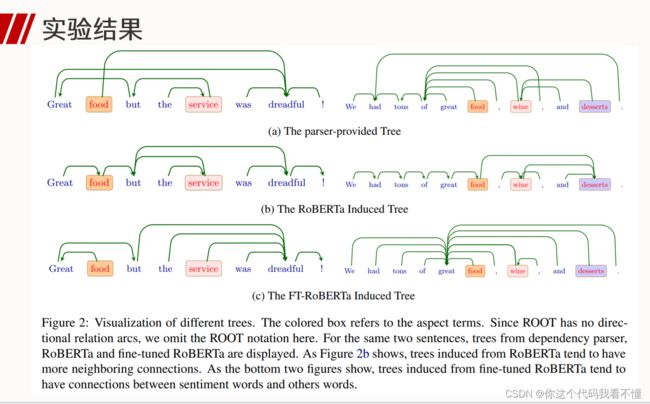

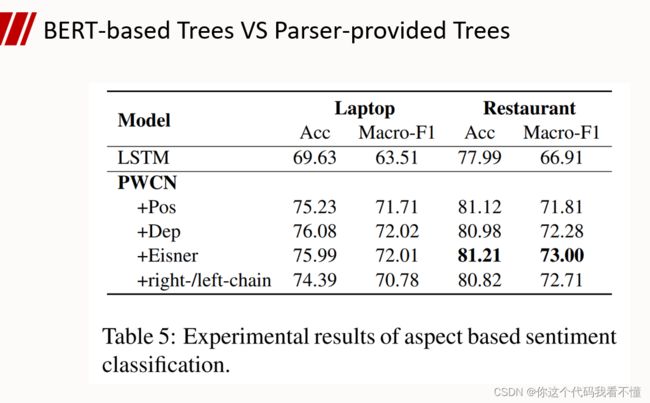

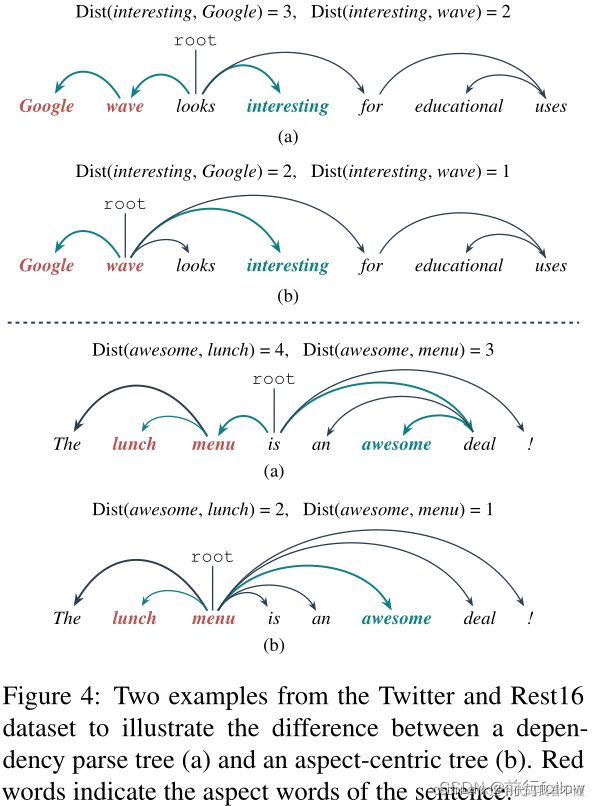

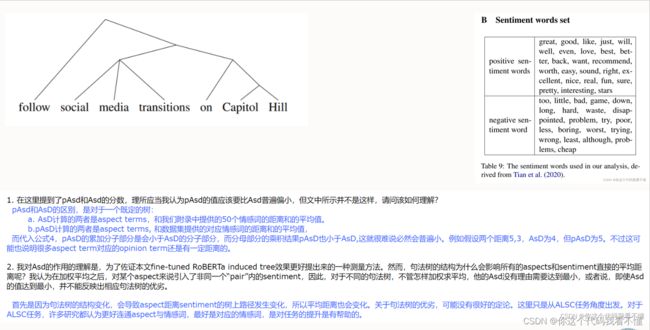

Does syntax matter? A strong baseline for Aspect-based Sentiment Analysis with RoBERTa

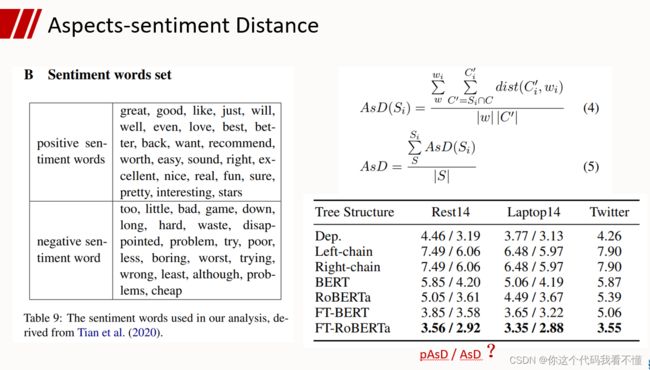

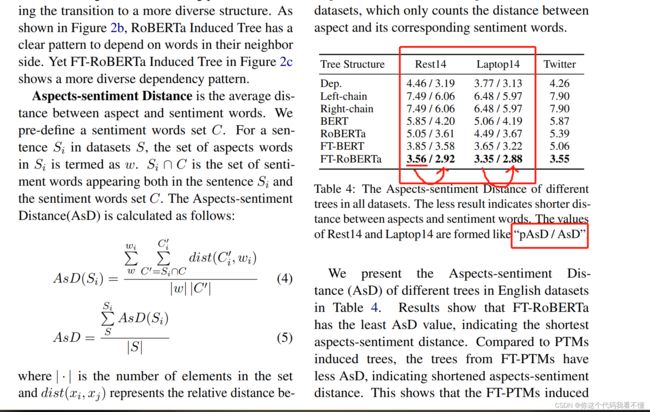

- pAsd的值为什么反而比Asd更大?

- 按照理解,Asd计算中,对某一个aspect来说引入了不属于他的“pair”内的sentiment的距离(在multi-aspect任务中),整体的加权平均,对于任何一种句法树来说,都没理由希望Asd的值最小,也就是说,在这里的Asd即使达到最小值并没有解释力。

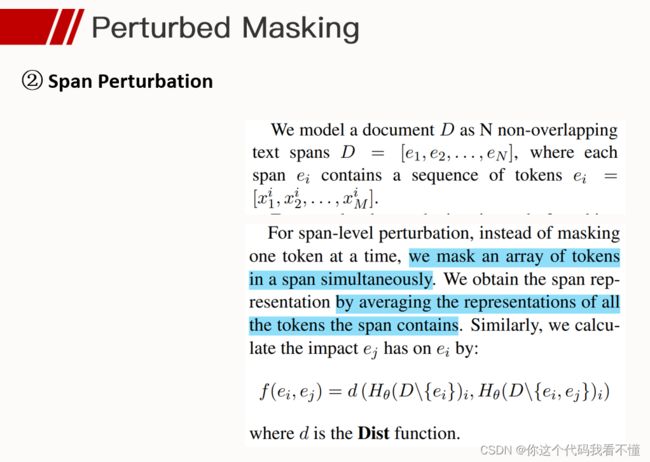

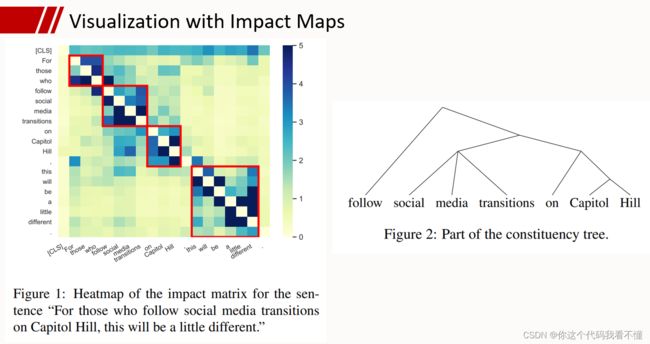

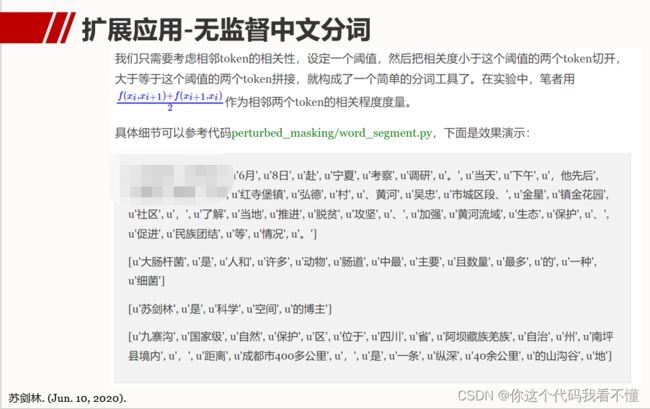

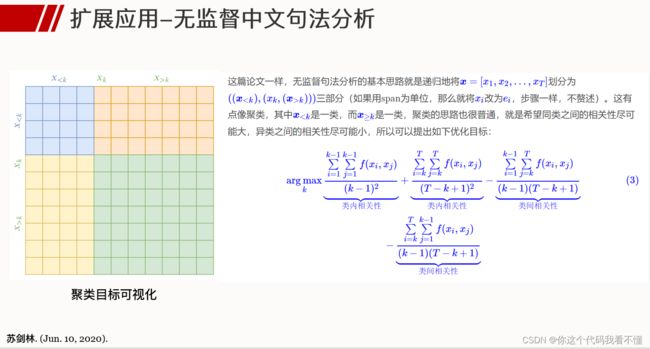

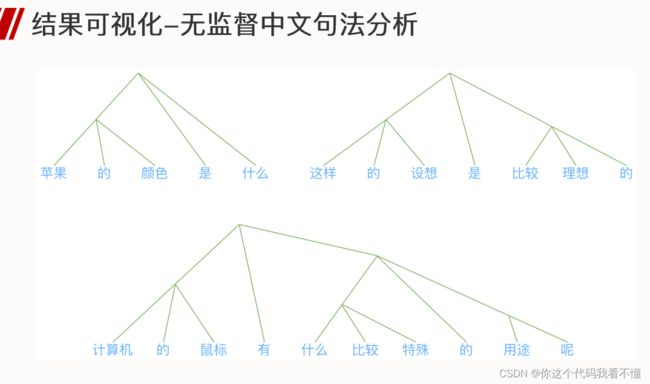

Perturbed Masking: Parameter-free Probing for Analyzing and Interpreting BERT

I n t r o d u c t i o n Introduction Introduction

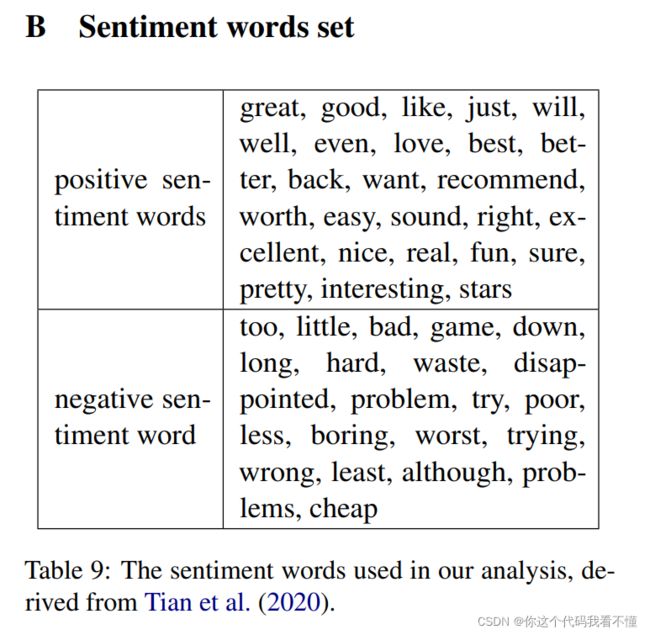

- 近些年,预训练语言模型 E L M o 、 B E R T 、 X L N e t ELMo、BERT、XLNet ELMo、BERT、XLNet在各种下游任务中都实现了SOTA。为了更深入的了解预训练语言模型,许多探针任务被设计出来。探针(probe)通常是一个简单的神经网络(具有少量的额外参数),其使用预训练语言模型输出的特征向量,并执行某些简单任务(需要标注数据)。通常来说,探针的表现可以间接的衡量预训练语言模型生成向量的表现。

- 基于探针的方法最大的缺点是需要引入额外的参数,这将使最终的结果难以解释,难以区分是预训练语言模型捕获了语义信息还是探针任务学习到了下游任务的知识,并将其编码至引入的额外参数中。

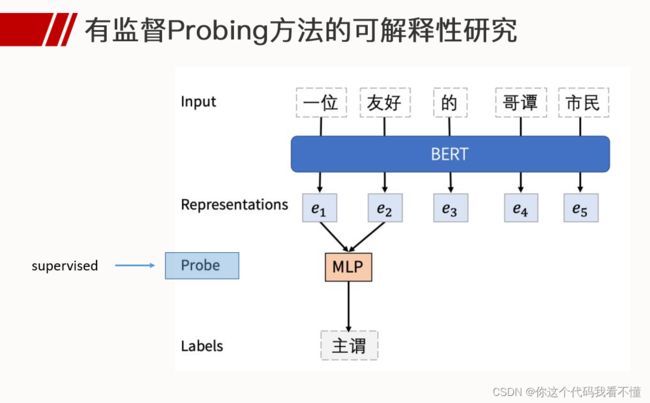

- 本文提出了一种称为Perturbed Masking的无参数探针,其能用于分析和解释预训练语言模型。

- Perturbed Masking通过改进 M L M MLM MLM(Masked Language Model)任务的目标函数来衡量单词 x j x_j xj对于预测 x i x_i xi的重要性。

推荐阅读:

https://www.pianshen.com/article/40441703264/

https://spaces.ac.cn/archives/7476

- 以下内容来自:https://mp.weixin.qq.com/s/7qbonGz2u9e6BQ444IJbWw

- 以下,https://mp.weixin.qq.com/s/cUk-bORVqqcYj-oQtDx21A

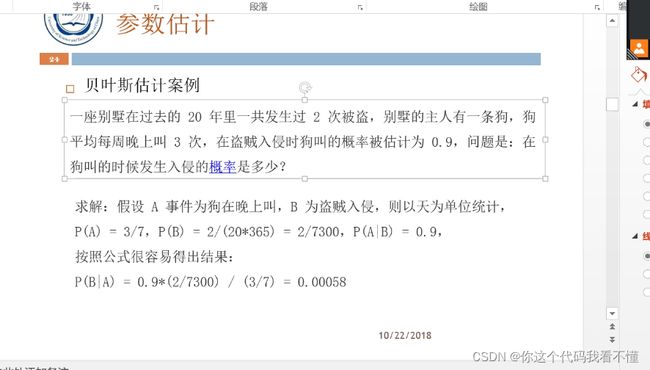

- p(A·B)

- 分层设置学习率,靠近输入的层提取底层的共性特征,靠近输出的层提取高级的(特定场景)专有特征。微调时,靠近输入层的学习率就应该比较小。这个想法其实对于CV领域的效果会更好。有人做实验,使用ImageNet数据集预训练的模型,用在汉字识别上,只训练最后的全连接层(frozen前面所有卷积层的参数),就取得了与前人相近的实验结果,也是这个道理。

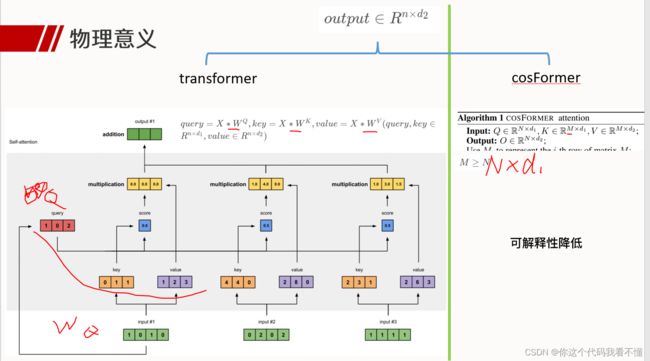

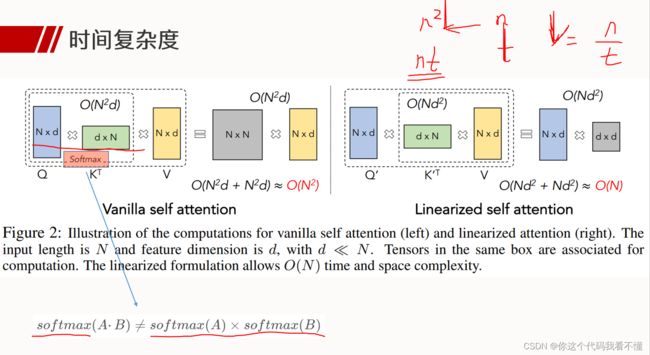

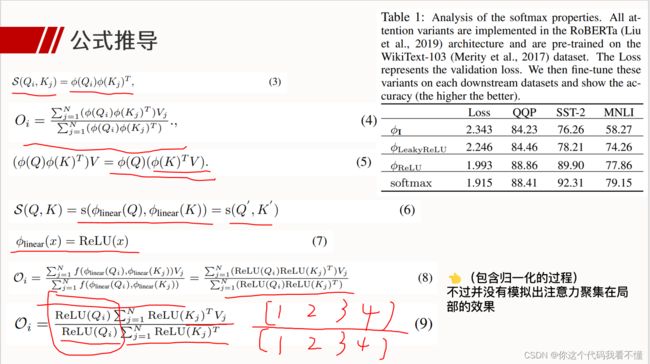

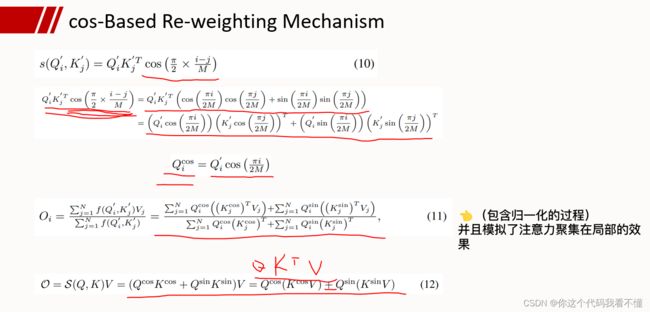

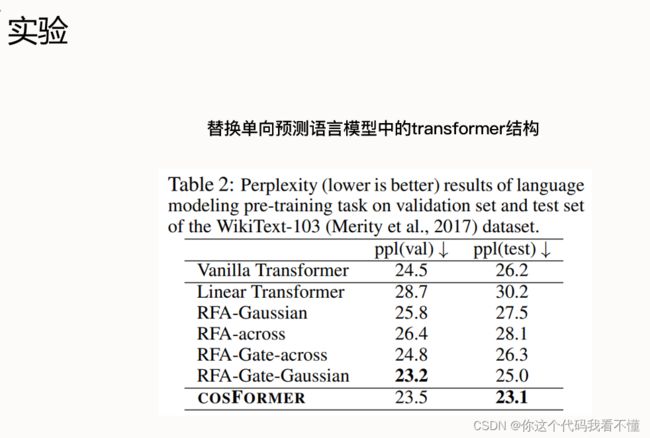

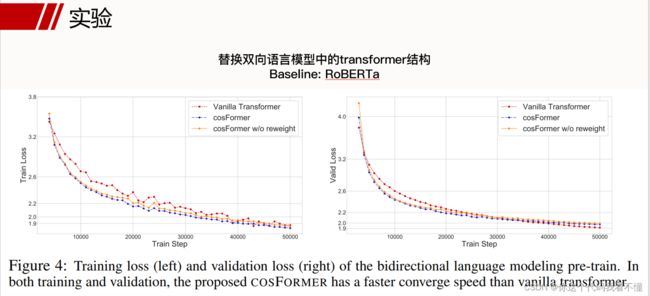

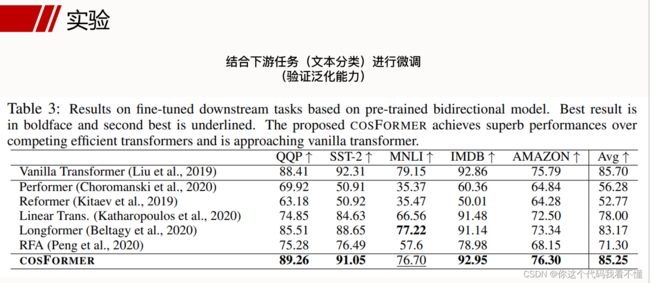

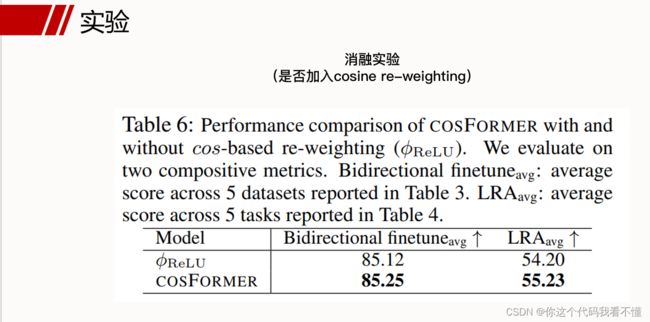

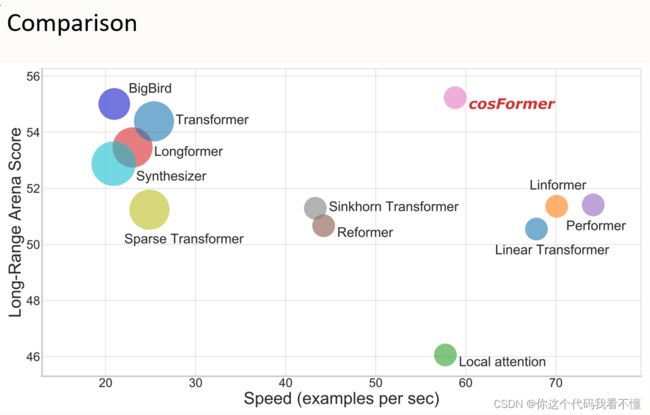

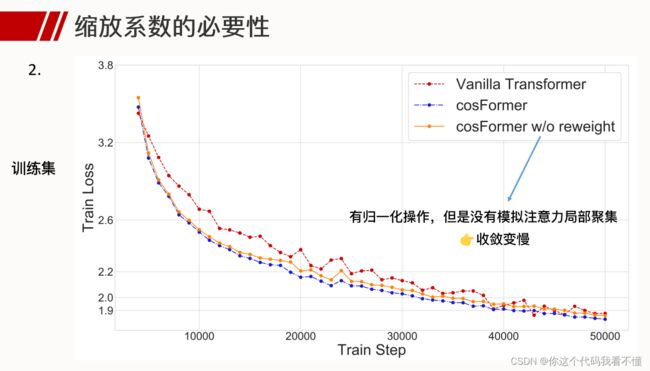

COSFORMER : RETHINKING SOFTMAX IN ATTENTION

-

推荐阅读:https://www.freesion.com/article/1548803712/

-

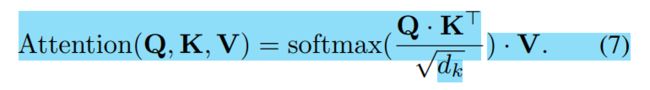

transformer:

q u e r y = X ∗ W Q , k e y = X ∗ W K , v a l u e = X ∗ W V ( q u e r y , k e y ∈ R n × d 1 , v a l u e ∈ R n × d 2 ) query = X * W^Q, key = X * W^K, value = X * W^V (query, key ∈ R^{n \times d_1}, value ∈ R^{n \times d_2}) query=X∗WQ,key=X∗WK,value=X∗WV(query,key∈Rn×d1,value∈Rn×d2) -

o u t p u t ∈ R n × d 2 output∈R^{n \times d_2} output∈Rn×d2

-

s o f t m a x ( A ⋅ B ) ≠ s o f t m a x ( A ) × s o f t m a x ( B ) softmax(A·B) \neq softmax(A) \times softmax(B) softmax(A⋅B)=softmax(A)×softmax(B)

在另一篇论文中,题出最终的注意力系数取{-1, 0, 1, 2}的情况,我也认为系数应该越丰富越好,但是本文中认为在相关矩阵中负数是一种冗余的数据,剔除掉之后实验效果更好。

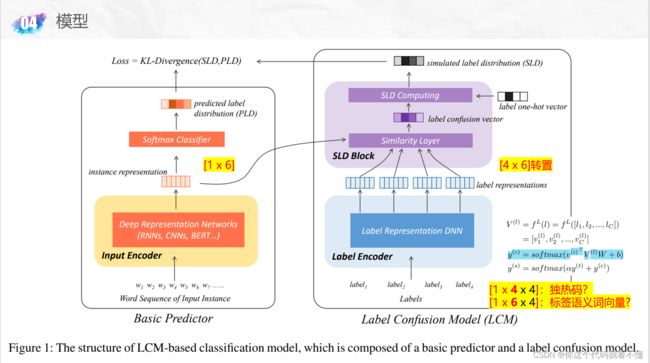

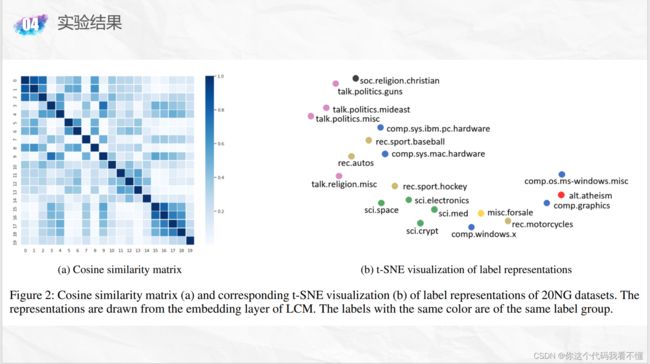

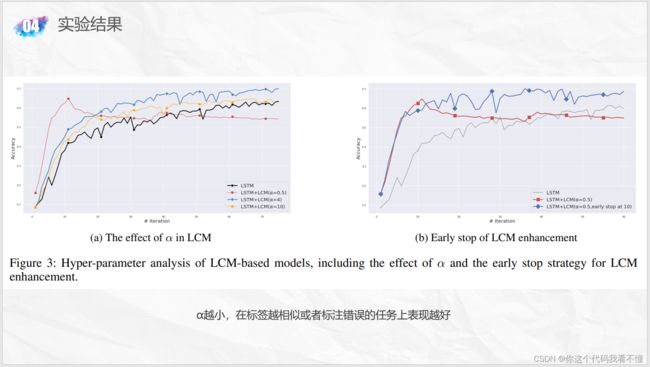

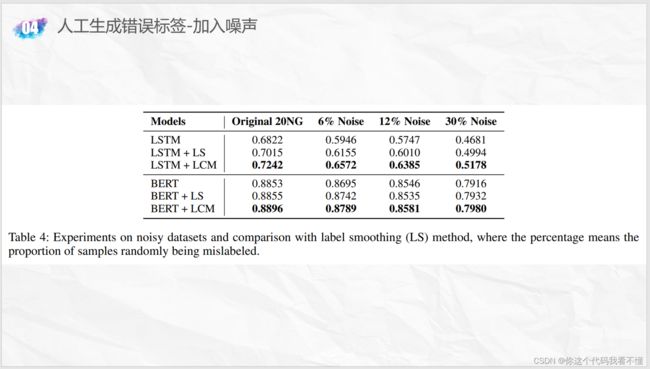

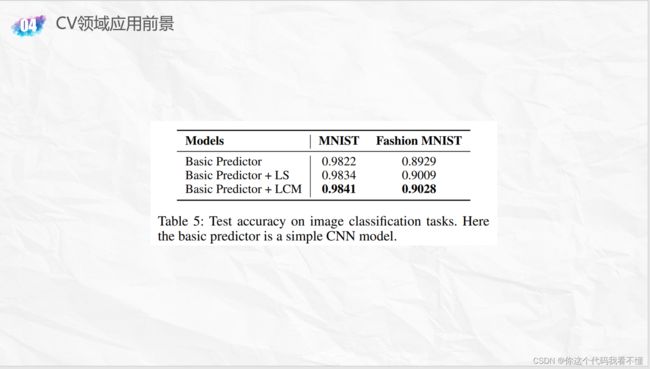

Label Confusion Learning to Enhance Text Classification Models

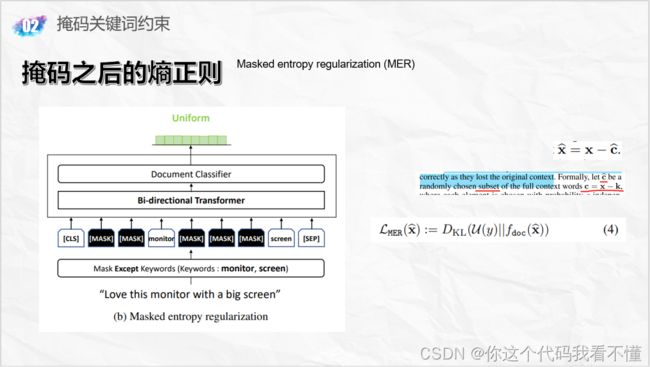

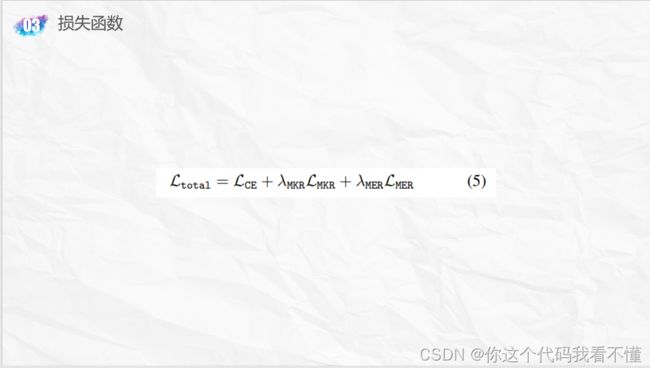

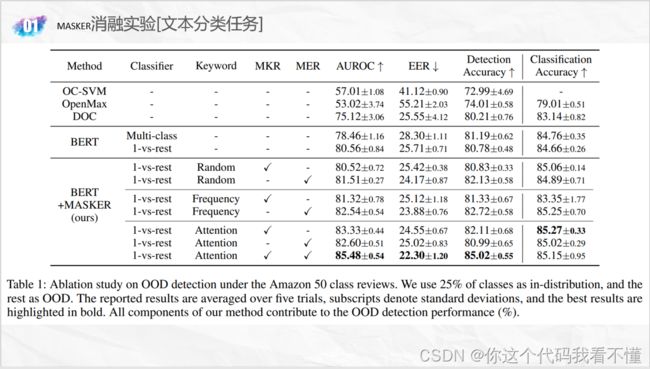

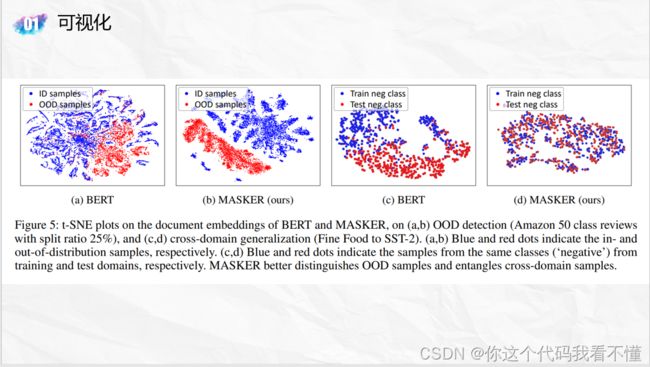

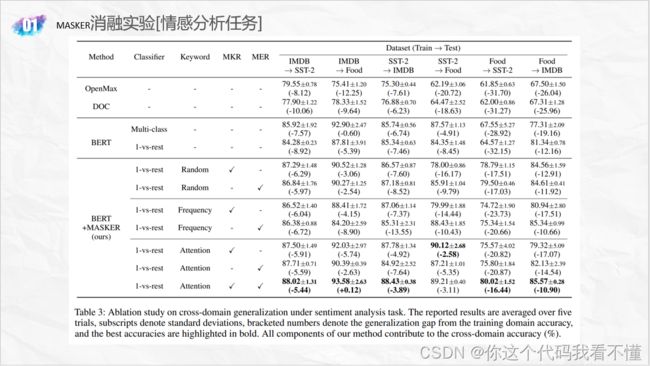

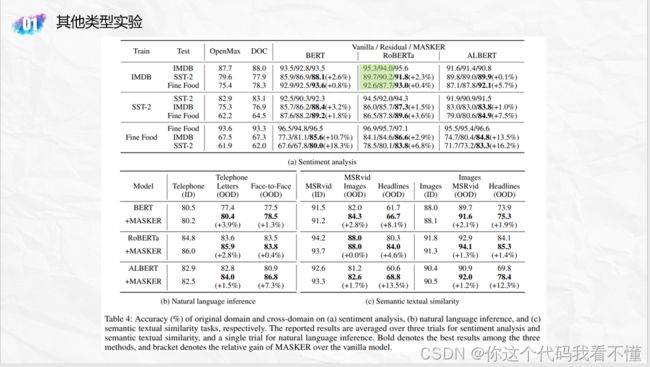

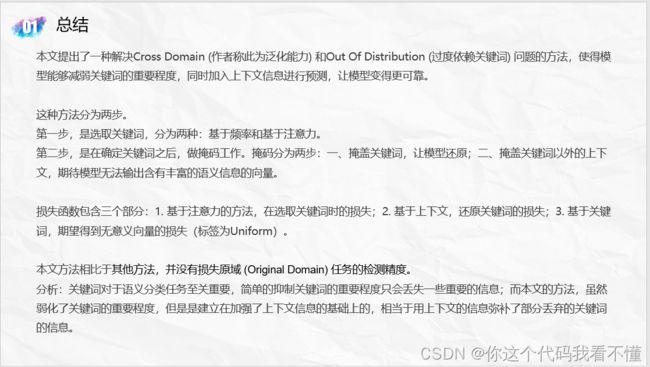

MASKER: Masked Keyword Regularization for Reliable Text Classification

Convolutional Neural Networks for Sentence Classification

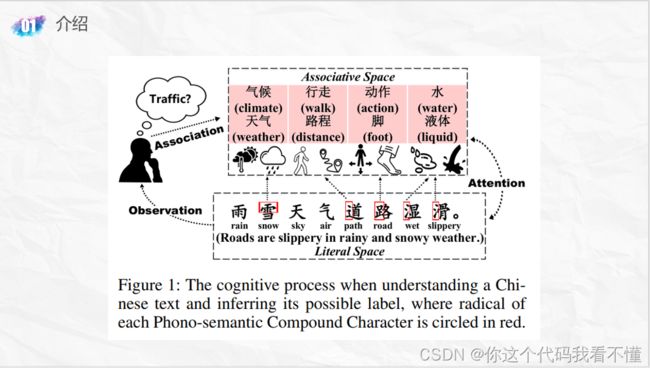

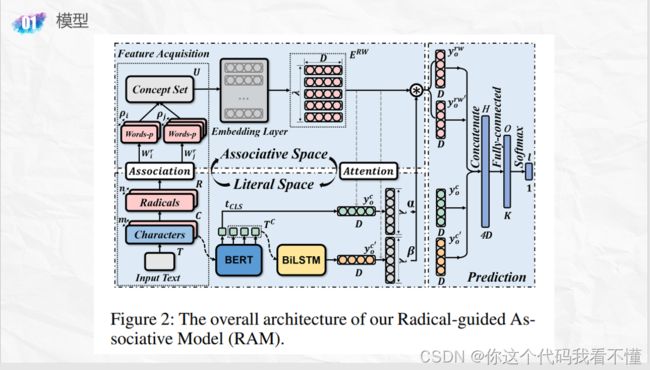

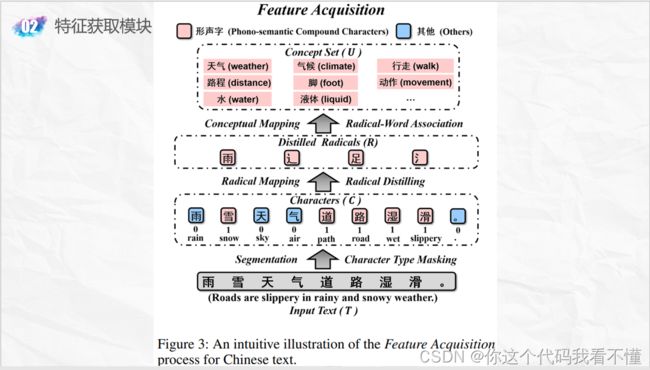

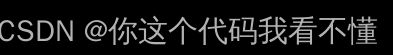

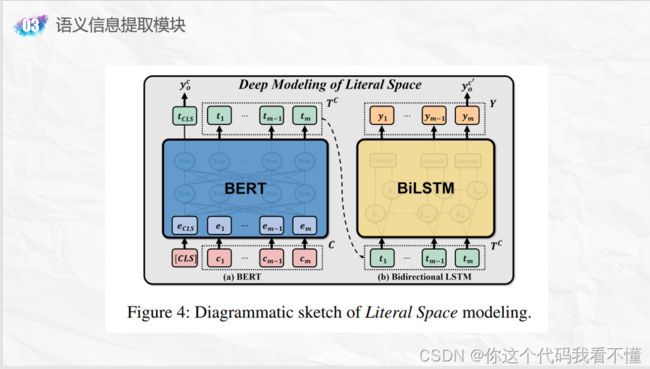

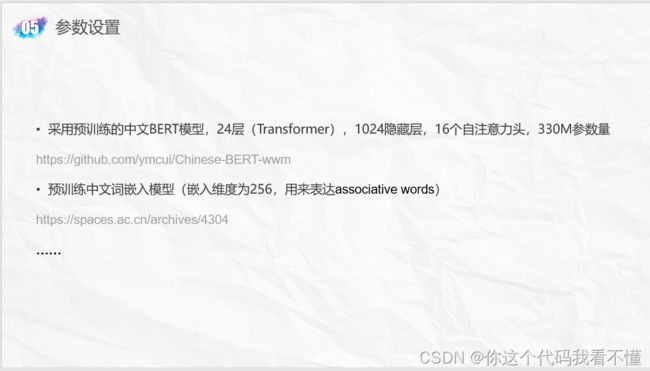

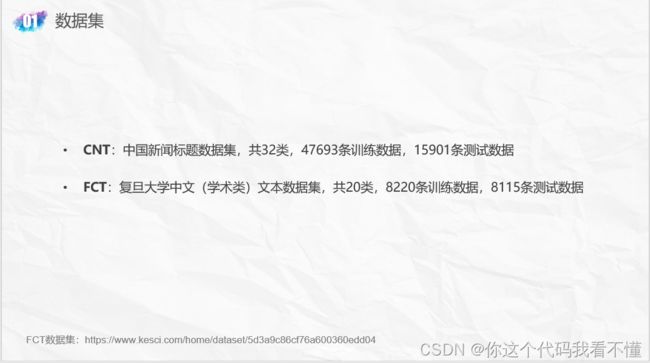

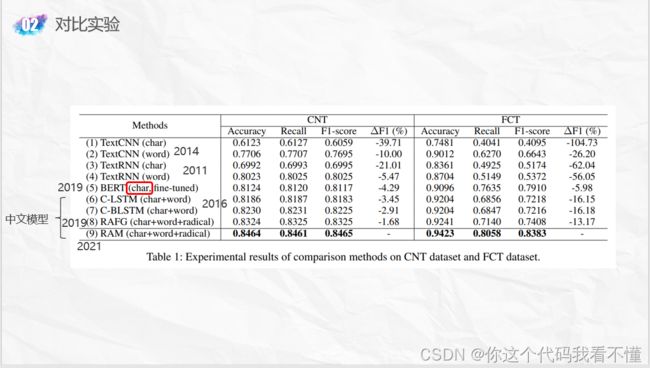

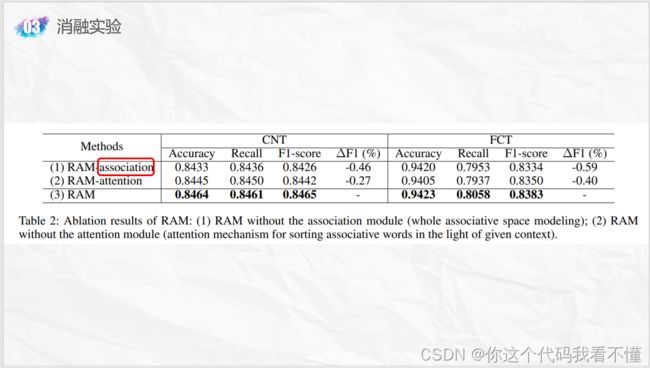

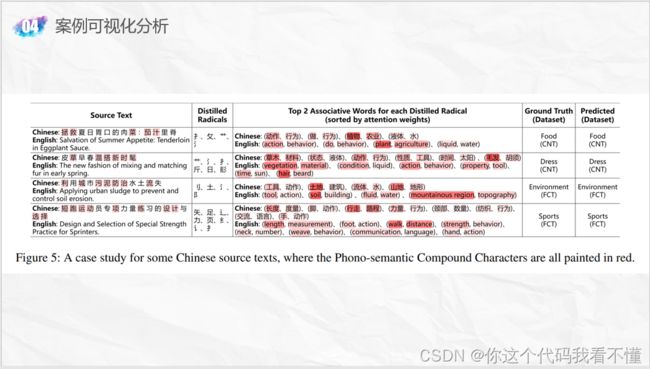

Ideography Leads Us to the Field of Cognition: A Radical-Guided Associative Model for Chinese Text Classification

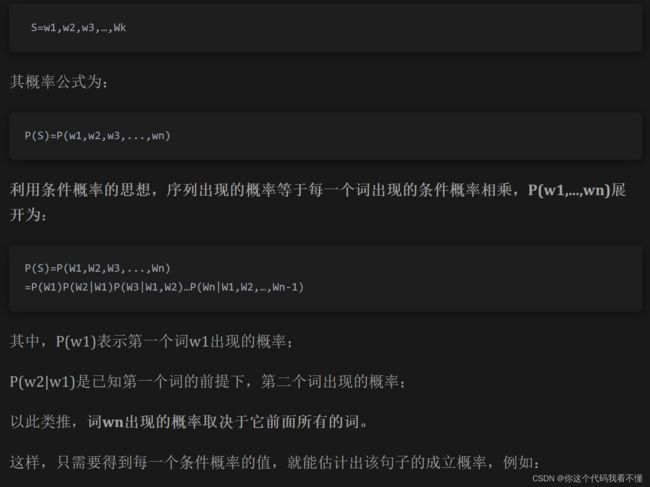

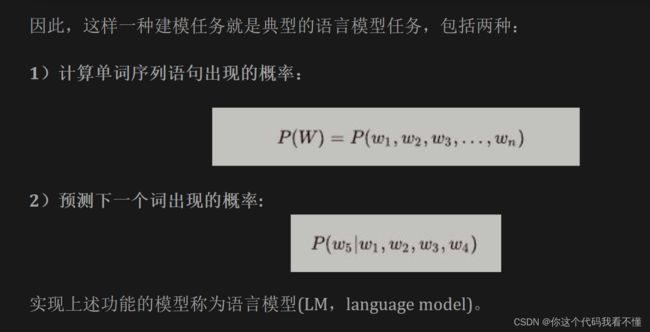

*自然语言处理中N-Gram模型介绍

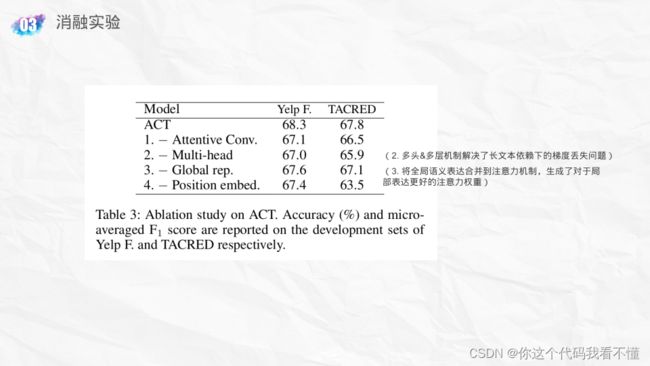

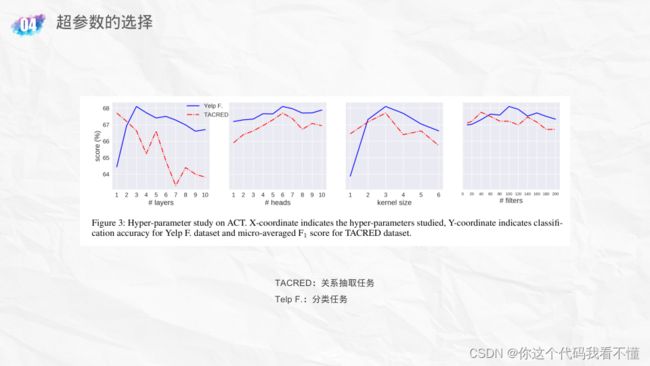

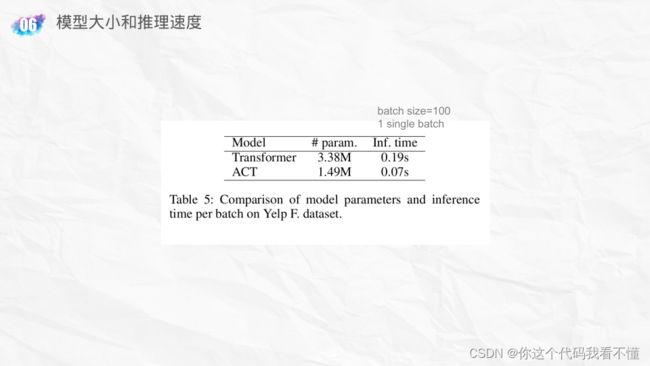

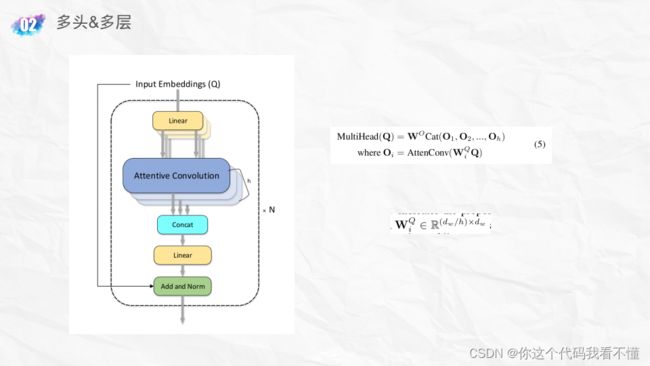

ACT: an Attentive Convolutional Transformer for Efficient Text Classification

关系抽取:抽取三元组(主体、关系、客体)

关系抽取:抽取三元组(主体、关系、客体)

由于需要构建知识图谱,所以在实体识别的基础上,我们需要构建一个模型来识别同一个句子中实体间的关系。关系抽取本身是一个分类问题。给定两个实体和两个实体共同出现的句子文本,判别两个实体之间的关系。

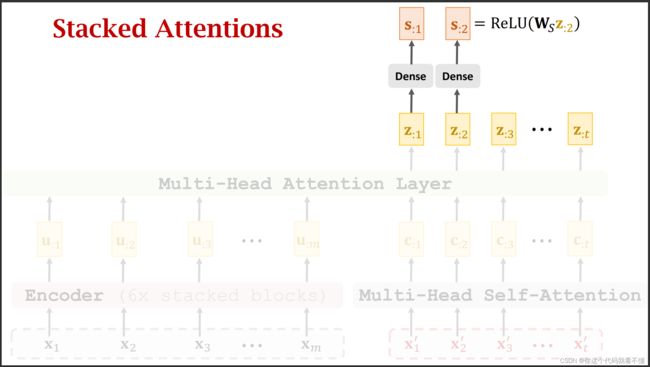

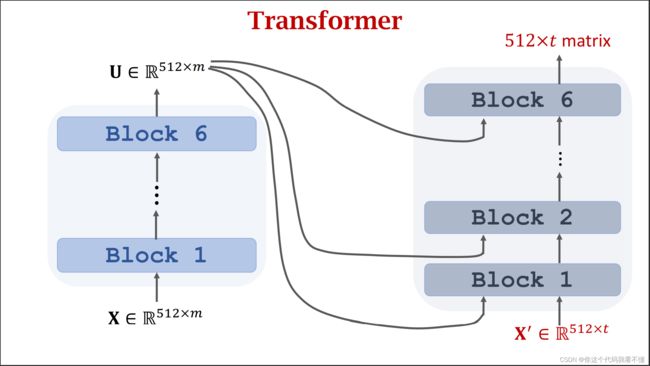

transformer的encoder和decoder也各有6层

transformer的encoder和decoder也各有6层

&多头

bert-12/24

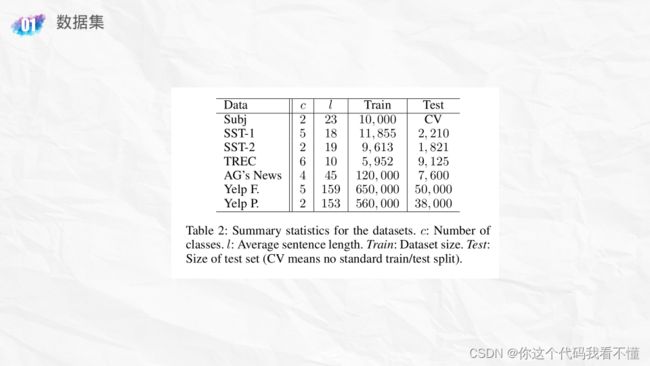

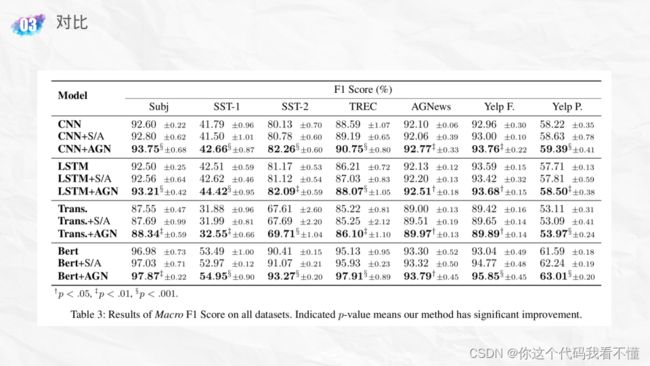

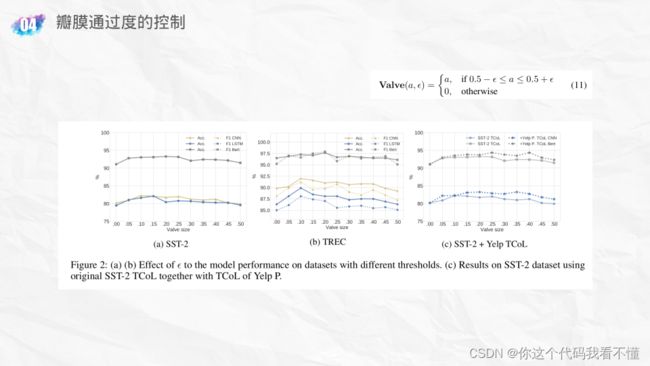

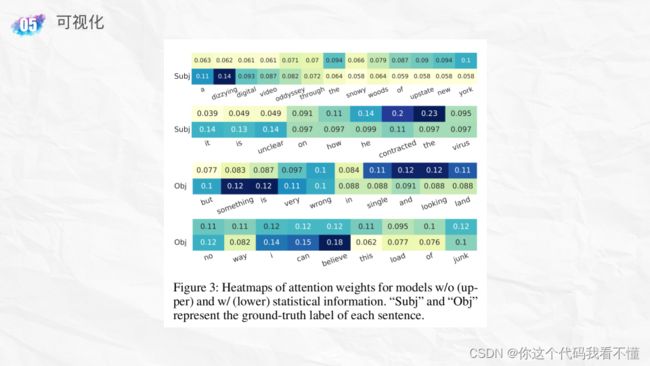

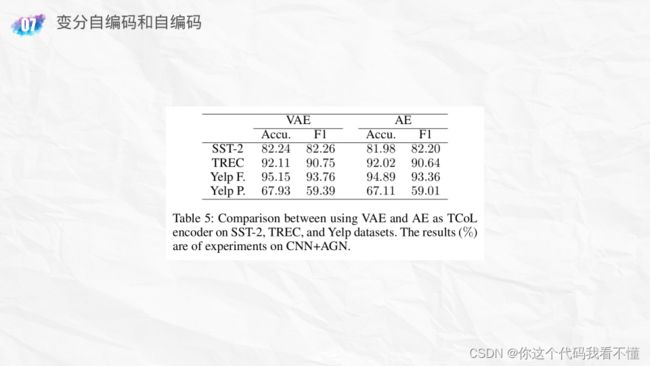

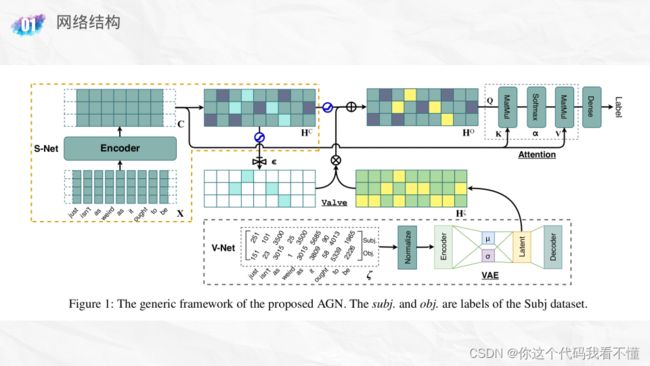

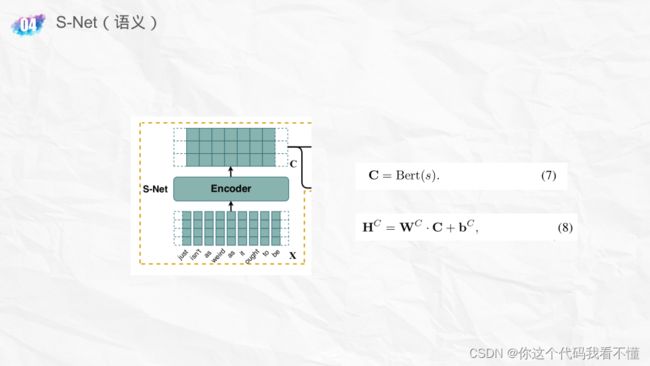

Merging Statistical Feature via Adaptive Gate for Improved Text Classification

![]() 如果单词 w 在所有标签上的频率很高或很低,那么我们可以假设 w 对分类任务的贡献有限。相反,如果一个词在特定的标签类中出现得更频繁,假设这个词是携带特殊信息的。

如果单词 w 在所有标签上的频率很高或很低,那么我们可以假设 w 对分类任务的贡献有限。相反,如果一个词在特定的标签类中出现得更频繁,假设这个词是携带特殊信息的。

TCoL字典 V 仅从训练集获得,防止信息泄露。

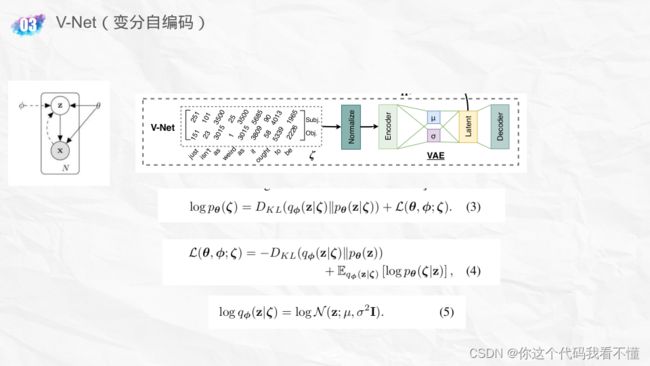

似然估计

观测数据是X,而X由隐变量Z产生,由Z->X是生成模型\theta,就是解码器;

观测数据是X,而X由隐变量Z产生,由Z->X是生成模型\theta,就是解码器;

而由x->z是识别模型\phi,类似于自编码器的编码器。

z为原因

p(z)先验概率

p(z|ζ)后验概率

p(ζ|z)似然估计

(K · Q · V)

Pytorch实战

初识Pytorch

初识词表

if os.path.exists(config.vocab_path):

vocab = pkl.load(open(config.vocab_path, 'rb')) # 语料库,词表

else:

vocab = build_vocab(config.train_path, tokenizer=tokenizer, max_size=MAX_VOCAB_SIZE, min_freq=1)

pkl.dump(vocab, open(config.vocab_path, 'wb'))

print(f"Vocab size: {len(vocab)}")

def build_vocab(file_path, tokenizer, max_size, min_freq):

vocab_dic = {}

with open(file_path, 'r', encoding='UTF-8') as f:

for line in tqdm(f):

lin = line.strip()

if not lin:

continue

content = lin.split('\t')[0]

for word in tokenizer(content):

vocab_dic[word] = vocab_dic.get(word, 0) + 1 # 字典赋值,构建词表

# get("key", 默认值),如果没有找到key,则返回默认值

vocab_list = sorted([_ for _ in vocab_dic.items() if _[1] >= min_freq], key=lambda x: x[1], reverse=True)[:max_size]

# 如果大于等于最小出现频次min_freq才放进vocab_list,并排好序,只取词表最大长度max_size之前的键值对

# reverse=True为降序

vocab_dic = {word_count[0]: idx for idx, word_count in enumerate(vocab_list)}

# 为词表vocab_list建立索引,并返回一个字典 {'iii': 0, 'sdf': 1} (丢弃了频次数据)

# 按照频次降序排列,霍夫曼树?

'''

tinydict = {'Name': 'Runoob', 'Age': 7}

tinydict2 = {'Sex': 'female' }

tinydict.update(tinydict2)

>>

tinydict : {'Name': 'Runoob', 'Age': 7, 'Sex': 'female'}

'''

vocab_dic.update({UNK: len(vocab_dic), PAD: len(vocab_dic) + 1})

# len(vocab_dic)

# {UNK: len(vocab_dic), PAD: len(vocab_dic) + 1}

# '', ''

return vocab_dic

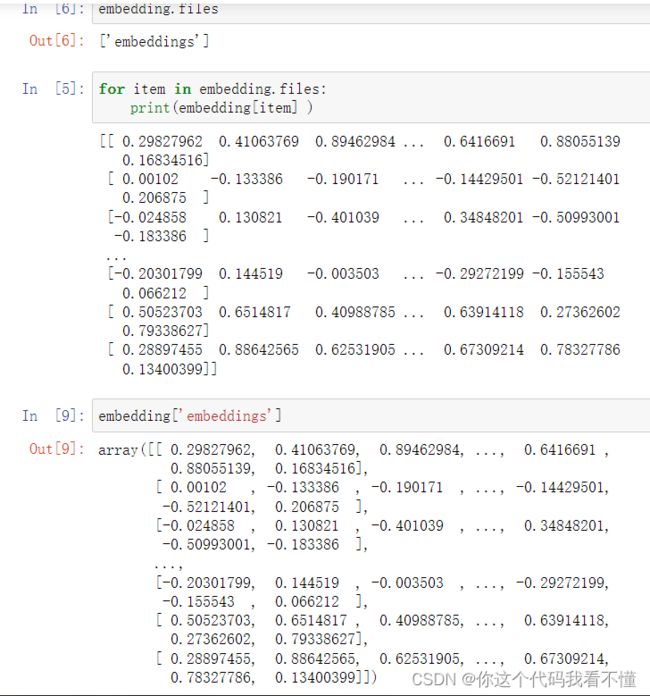

词嵌入

if config.embedding_pretrained is not None:

self.embedding = nn.Embedding.from_pretrained(config.embedding_pretrained, freeze=False)

# 词嵌入

else:

self.embedding = nn.Embedding(config.n_vocab, config.embed, padding_idx=config.n_vocab - 1)

out = self.embedding(x[0])

TextCNN-Pytorch代码

run.py

import time

import torch

import numpy as np

from train_eval import train, init_network, test

from importlib import import_module

import argparse

from tensorboardX import SummaryWriter

parser = argparse.ArgumentParser(description='Chinese Text Classification')

parser.add_argument('--model', default='TextCNN', type=str,

help='choose a model: TextCNN, TextRNN, FastText, TextRCNN, TextRNN_Att, DPCNN, Transformer')

parser.add_argument('--embedding', default='pre_trained', type=str, help='random or pre_trained')

parser.add_argument('--word', default=False, type=bool, help='True for word, False for char')

args = parser.parse_args()

if __name__ == '__main__':

dataset = 'THUCNews' # 数据集

# 搜狗新闻:embedding_SougouNews.npz, 腾讯:embedding_Tencent.npz, 随机初始化:random

embedding = 'embedding_SougouNews.npz'

if args.embedding == 'random':

embedding = 'random'

model_name = args.model # TextCNN, TextRNN,

if model_name == 'FastText':

from utils_fasttext import build_dataset, build_iterator, get_time_dif

embedding = 'random'

else:

from utils import build_dataset, build_iterator, get_time_dif

x = import_module('models.' + model_name) # 导入模块,相对路径

config = x.Config(dataset, embedding) # 传入参数,对应模型的Config类初始化

np.random.seed(1)

torch.manual_seed(1)

torch.cuda.manual_seed_all(1)

torch.backends.cudnn.deterministic = True # 保证每次结果一样

start_time = time.time()

print("Loading data...")

vocab, train_data, dev_data, test_data = build_dataset(config, args.word)

train_iter = build_iterator(train_data, config)

dev_iter = build_iterator(dev_data, config)

test_iter = build_iterator(test_data, config)

time_dif = get_time_dif(start_time)

print("Time usage:", time_dif)

# train

config.n_vocab = len(vocab)

model = x.Model(config).to(config.device)

writer = SummaryWriter(log_dir=config.log_path + '/' + time.strftime('%m-%d_%H.%M', time.localtime()))

if model_name != 'Transformer':

init_network(model)

print(model.parameters)

# torch.save(model, "saved\\cnn_model.pth")

# train(config, model, train_iter, dev_iter, test_iter, writer)

# train(config, model, train_iter, dev_iter, test_iter, writer)

# test(config, model, test_iter)

test(config, model, test_iter)

train_eval.py

# coding: UTF-8

import numpy as np

import torch

import torch.nn as nn

import torch.nn.functional as F

from sklearn import metrics

import time

from utils import get_time_dif

import pickle as pkl

from tensorboardX import SummaryWriter

import csv

# 自定义的函数

# 找字典里value = val的键值对,返回其key

def get_key(_dict_, val):

for key, value in _dict_.items():

if value == val:

return key

return 'Key Not Found'

# 权重初始化,默认xavier

def init_network(model, method='xavier', exclude='embedding', seed=123):

for name, w in model.named_parameters():

if exclude not in name:

if 'weight' in name:

if method == 'xavier':

nn.init.xavier_normal_(w)

elif method == 'kaiming':

nn.init.kaiming_normal_(w)

else:

nn.init.normal_(w)

elif 'bias' in name:

nn.init.constant_(w, 0)

else:

pass

def train(config, model, train_iter, dev_iter, test_iter, writer):

start_time = time.time()

model.train()

optimizer = torch.optim.Adam(model.parameters(), lr=config.learning_rate)

# 学习率指数衰减,每次epoch:学习率 = gamma * 学习率

# scheduler = torch.optim.lr_scheduler.ExponentialLR(optimizer, gamma=0.9)

total_batch = 0 # 记录进行到多少batch

dev_best_loss = float('inf')

last_improve = 0 # 记录上次验证集loss下降的batch数

flag = False # 记录是否很久没有效果提升

#writer = SummaryWriter(log_dir=config.log_path + '/' + time.strftime('%m-%d_%H.%M', time.localtime()))

for epoch in range(config.num_epochs):

print('Epoch [{}/{}]'.format(epoch + 1, config.num_epochs))

# scheduler.step() # 学习率衰减

for i, (trains, labels) in enumerate(train_iter):

#print (trains[0].shape)

outputs = model(trains)

model.zero_grad()

loss = F.cross_entropy(outputs, labels)

loss.backward()

optimizer.step()

if total_batch % 100 == 0:

# 每多少轮输出在训练集和验证集上的效果

true = labels.data.cpu()

predic = torch.max(outputs.data, 1)[1].cpu()

train_acc = metrics.accuracy_score(true, predic)

dev_acc, dev_loss = evaluate(config, model, dev_iter)

if dev_loss < dev_best_loss:

dev_best_loss = dev_loss

torch.save(model.state_dict(), config.save_path)

improve = '*'

last_improve = total_batch

else:

improve = ''

time_dif = get_time_dif(start_time)

msg = 'Iter: {0:>6}, Train Loss: {1:>5.2}, Train Acc: {2:>6.2%}, Val Loss: {3:>5.2}, Val Acc: {4:>6.2%}, Time: {5} {6}'

print(msg.format(total_batch, loss.item(), train_acc, dev_loss, dev_acc, time_dif, improve))

writer.add_scalar("loss/train", loss.item(), total_batch)

writer.add_scalar("loss/dev", dev_loss, total_batch)

writer.add_scalar("acc/train", train_acc, total_batch)

writer.add_scalar("acc/dev", dev_acc, total_batch)

model.train()

total_batch += 1

if total_batch - last_improve > config.require_improvement:

# 验证集loss超过1000batch没下降,结束训练

print("No optimization for a long time, auto-stopping...")

flag = True

break

if flag:

break

writer.close()

test(config, model, test_iter)

def test(config, model, test_iter):

# test

model.load_state_dict(torch.load(config.save_path))

model.eval()

start_time = time.time()

test_acc, test_loss, test_report, test_confusion = evaluate(config, model, test_iter, test=True)

msg = 'Test Loss: {0:>5.2}, Test Acc: {1:>6.2%}'

print(msg.format(test_loss, test_acc))

print("Precision, Recall and F1-Score...")

print(test_report)

print("Confusion Matrix...")

print(test_confusion)

time_dif = get_time_dif(start_time)

print("Time usage:", time_dif)

def evaluate(config, model, data_iter, test=False):

print(config.class_list)

model.eval()

loss_total = 0

predict_all = np.array([], dtype=int)

labels_all = np.array([], dtype=int)

vocab = pkl.load(open(config.vocab_path, 'rb'))

file = open("saved\\predict.csv", "w", newline='', encoding="utf-8-sig")

label_txt = open("THUCNews\\data\\test.txt", "r", encoding="utf-8")

lines = label_txt.readlines()

num = 0

with torch.no_grad():

for texts, labels in data_iter:

if len(texts[0]) < config.batch_size:

print("当前batch size不足,跳出")

break

# print(config.batch_size)

outputs = model(texts)

# print(outputs)

for row in range(config.batch_size):

# _str_ = ""

# print(row)

# print(config.pad_size)

# for column in range(config.pad_size):

# print(column)

# print(vocab)

# print(texts[0][row, column])

# _str_ = _str_ + get_key(vocab, texts[0][row, column])

# print(labels[row].item())

# print(outputs)

_str_ = lines[num]

num += 1

_str_ = _str_.strip('\n')

_str_ = _str_.replace(_str_[-1], "")

# print(_str_[-1])

# _str_ = _str_.replace("", "")

label = labels[row].item()

output = torch.argmax(outputs[row], -1).item()

# print(_str_)

# print(config.class_list[label])

# print(config.class_list[output])

# file.write(_str_ + "\t" + config.class_list[output] + "\t" + config.class_list[label] + "\n")

csv_file = csv.writer(file)

csv_file.writerow([_str_, config.class_list[output], config.class_list[label]])

# print("第{}行已记录".format(row))

loss = F.cross_entropy(outputs, labels)

loss_total += loss

print(labels)

labels = labels.data.cpu().numpy()

# print(labels)

predic = torch.max(outputs.data, 1)[1].cpu().numpy()

labels_all = np.append(labels_all, labels)

predict_all = np.append(predict_all, predic)

file.close()

label_txt.close()

acc = metrics.accuracy_score(labels_all, predict_all)

if test:

report = metrics.classification_report(labels_all, predict_all, target_names=config.class_list, digits=4)

confusion = metrics.confusion_matrix(labels_all, predict_all)

return acc, loss_total / len(data_iter), report, confusion

return acc, loss_total / len(data_iter)

utils.py

# coding: UTF-8

import os

import torch

import numpy as np

import pickle as pkl

from tqdm import tqdm

import time

from datetime import timedelta

MAX_VOCAB_SIZE = 10000 # 词表长度限制

UNK, PAD = '' , '' # 未知字,padding符号

# 中文

# char-level 词表较小

# word-level 词表会较大

'''

tqdm # python进度条函数

from tqdm import tqdm

import time

d = {'loss':0.2,'learn':0.8}

for i in tqdm(range(50),desc='进行中',ncols=10,postfix=d): #desc设置名称,ncols设置进度条长度.postfix以字典形式传入详细信息

time.sleep(0.1)

pass

'''

def build_vocab(file_path, tokenizer, max_size, min_freq):

vocab_dic = {}

with open(file_path, 'r', encoding='UTF-8') as f:

for line in tqdm(f):

lin = line.strip()

if not lin:

continue

content = lin.split('\t')[0]

for word in tokenizer(content):

vocab_dic[word] = vocab_dic.get(word, 0) + 1 # 字典赋值,构建词表

# get("key", 默认值),如果没有找到key,则返回默认值

vocab_list = sorted([_ for _ in vocab_dic.items() if _[1] >= min_freq], key=lambda x: x[1], reverse=True)[:max_size]

# 如果大于等于最小出现频次min_freq才放进vocab_list,并排好序,只取词表最大长度max_size之前的键值对

# reverse=True为降序

vocab_dic = {word_count[0]: idx for idx, word_count in enumerate(vocab_list)}

# 为词表vocab_list建立索引,并返回一个字典 {'iii': 0, 'sdf': 1} (丢弃了频次数据)

# 按照频次降序排列,霍夫曼树?

'''

tinydict = {'Name': 'Runoob', 'Age': 7}

tinydict2 = {'Sex': 'female' }

tinydict.update(tinydict2)

>>

tinydict : {'Name': 'Runoob', 'Age': 7, 'Sex': 'female'}

'''

vocab_dic.update({UNK: len(vocab_dic), PAD: len(vocab_dic) + 1})

# len(vocab_dic)

# {UNK: len(vocab_dic), PAD: len(vocab_dic) + 1}

# '', ''

return vocab_dic

def build_dataset(config, ues_word):

if ues_word:

tokenizer = lambda x: x.split(' ') # 以空格隔开,word-level

else:

tokenizer = lambda x: [y for y in x] # char-level # 构建列表tokenizer,该列表遍历了x

if os.path.exists(config.vocab_path):

vocab = pkl.load(open(config.vocab_path, 'rb')) # 语料库,词表

else:

vocab = build_vocab(config.train_path, tokenizer=tokenizer, max_size=MAX_VOCAB_SIZE, min_freq=1)

pkl.dump(vocab, open(config.vocab_path, 'wb'))

print(f"Vocab size: {len(vocab)}")

def load_dataset(path, pad_size=32): # default, pad_size = 32

contents = []

with open(path, 'r', encoding='UTF-8') as f:

for line in tqdm(f):

lin = line.strip()

if not lin:

continue

content, label = lin.split('\t')

words_line = []

token = tokenizer(content) # token 列表

seq_len = len(token)

if pad_size:

if len(token) < pad_size:

token.extend([vocab.get(PAD)] * (pad_size - len(token)))

'''

In[36]: ["hi"]*3

Out[36]: ['hi', 'hi', 'hi']

'''

else:

token = token[:pad_size] # 截断

seq_len = pad_size

# word to id

for word in token:

words_line.append(vocab.get(word, vocab.get(UNK)))

contents.append((words_line, int(label), seq_len))

return contents # [([...], 0, seq_len), ([...], 1, seq_len), ...]

train = load_dataset(config.train_path, config.pad_size)

dev = load_dataset(config.dev_path, config.pad_size)

test = load_dataset(config.test_path, config.pad_size)

return vocab, train, dev, test

class DatasetIterater(object):

def __init__(self, batches, batch_size, device): # batches

self.batch_size = batch_size

self.batches = batches

self.n_batches = len(batches) // batch_size

self.residue = False # 记录batch数量是否为整数,True:否,False:是

if len(batches) % self.n_batches != 0:

self.residue = True

self.index = 0

self.device = device

def _to_tensor(self, datas):

x = torch.LongTensor([_[0] for _ in datas]).to(self.device)

y = torch.LongTensor([_[1] for _ in datas]).to(self.device)

# pad前的长度(超过pad_size的设为pad_size)

seq_len = torch.LongTensor([_[2] for _ in datas]).to(self.device)

return (x, seq_len), y

def __next__(self):

if self.residue and self.index == self.n_batches:

batches = self.batches[self.index * self.batch_size: len(self.batches)]

self.index += 1

batches = self._to_tensor(batches)

return batches

elif self.index > self.n_batches:

self.index = 0

raise StopIteration

else:

batches = self.batches[self.index * self.batch_size: (self.index + 1) * self.batch_size]

self.index += 1

batches = self._to_tensor(batches)

return batches

def __iter__(self):

return self

def __len__(self):

if self.residue:

return self.n_batches + 1

else:

return self.n_batches

def build_iterator(dataset, config):

iter = DatasetIterater(dataset, config.batch_size, config.device)

return iter

def get_time_dif(start_time):

"""获取已使用时间"""

end_time = time.time()

time_dif = end_time - start_time

return timedelta(seconds=int(round(time_dif)))

if __name__ == "__main__":

# 如果执行python utils.py

# 则运行以下代码

'''提取预训练词向量'''

# 下面的目录、文件名按需更改。

train_dir = "./THUCNews/data/train.txt"

vocab_dir = "./THUCNews/data/vocab.pkl"

pretrain_dir = "./THUCNews/data/sgns.sogou.char"

emb_dim = 300

filename_trimmed_dir = "./THUCNews/data/embedding_SougouNews"

if os.path.exists(vocab_dir):

word_to_id = pkl.load(open(vocab_dir, 'rb'))

else:

# tokenizer = lambda x: x.split(' ') # 以词为单位构建词表(数据集中词之间以空格隔开)

tokenizer = lambda x: [y for y in x] # 以字为单位构建词表

word_to_id = build_vocab(train_dir, tokenizer=tokenizer, max_size=MAX_VOCAB_SIZE, min_freq=1)

pkl.dump(word_to_id, open(vocab_dir, 'wb'))

embeddings = np.random.rand(len(word_to_id), emb_dim)

f = open(pretrain_dir, "r", encoding='UTF-8')

for i, line in enumerate(f.readlines()):

# if i == 0: # 若第一行是标题,则跳过

# continue

lin = line.strip().split(" ")

if lin[0] in word_to_id:

idx = word_to_id[lin[0]]

emb = [float(x) for x in lin[1:301]]

embeddings[idx] = np.asarray(emb, dtype='float32')

f.close()

np.savez_compressed(filename_trimmed_dir, embeddings=embeddings)

models.TextCNN.py

# coding: UTF-8

import torch

import torch.nn as nn

import torch.nn.functional as F

import numpy as np

class Config(object):

"""配置参数"""

def __init__(self, dataset, embedding):

self.model_name = 'TextCNN'

self.train_path = dataset + '/data/train.txt' # 训练集

self.dev_path = dataset + '/data/dev.txt' # 验证集

self.test_path = dataset + '/data/test.txt' # 测试集

self.class_list = [x.strip() for x in open(

dataset + '/data/class.txt').readlines()] # 类别名单

self.vocab_path = dataset + '/data/vocab.pkl' # 词表 语料库

self.save_path = dataset + '/saved_dict/' + self.model_name + '.ckpt' # 模型训练结果

self.log_path = dataset + '/log/' + self.model_name

self.embedding_pretrained = torch.tensor(

np.load(dataset + '/data/' + embedding)["embeddings"].astype('float32'))\

if embedding != 'random' else None # 预训练词向量 # \ 续航符

self.device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu') # 设备

self.dropout = 0.5 # 随机失活

self.require_improvement = 1000 # 若超过1000batch效果还没提升,则提前结束训练

self.num_classes = len(self.class_list) # 类别数

self.n_vocab = 0 # 词表大小,在运行时赋值

self.num_epochs = 20 # epoch数

self.batch_size = 128 # mini-batch大小

self.pad_size = 32 # 每句话处理成的长度(短填长切)

self.learning_rate = 1e-3 # 学习率

self.embed = self.embedding_pretrained.size(1)\

if self.embedding_pretrained is not None else 300 # 字向量维度

self.filter_sizes = (2, 3, 4) # 卷积核尺寸

self.num_filters = 256 # 卷积核数量(channels数)

'''Convolutional Neural Networks for Sentence Classification'''

class Model(nn.Module):

def __init__(self, config):

super(Model, self).__init__()

if config.embedding_pretrained is not None:

self.embedding = nn.Embedding.from_pretrained(config.embedding_pretrained, freeze=False)

# 词嵌入

else:

self.embedding = nn.Embedding(config.n_vocab, config.embed, padding_idx=config.n_vocab - 1)

self.convs = nn.ModuleList(

[nn.Conv2d(1, config.num_filters, (k, config.embed)) for k in config.filter_sizes])

self.dropout = nn.Dropout(config.dropout)

self.fc = nn.Linear(config.num_filters * len(config.filter_sizes), config.num_classes)

def conv_and_pool(self, x, conv):

x = F.relu(conv(x)).squeeze(3)

x = F.max_pool1d(x, x.size(2)).squeeze(2)

return x

def forward(self, x):

# print("输入序列:")

# print(x[0])

#print (x[0].shape)

out = self.embedding(x[0])

# print("词嵌入:")

# print(out)

out = out.unsqueeze(1)

out = torch.cat([self.conv_and_pool(out, conv) for conv in self.convs], 1)

out = self.dropout(out)

out = self.fc(out)

return out

简单CNN二分类Pytorch实现

main.py

from torch.utils.data import DataLoader

from loadDatasets import *

from model import *

import torchvision

import torch

torch.set_default_tensor_type(torch.DoubleTensor)

torch.autograd.set_detect_anomaly = True

gpu_device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

if __name__ == '__main__':

batch_size = myModel.batch_size

# 加载数据集

train_data = myDataLoader('train.csv', 'datasets', transform=torchvision.transforms.ToTensor())

print("训练集数量{}".format(len(train_data)))

dev_data = myDataLoader('dev.csv', 'datasets', transform=torchvision.transforms.ToTensor())

print("验证集数量{}".format(len(dev_data)))

valid_batch_size = len(dev_data) // (len(train_data)/batch_size)

valid_batch_size = int(valid_batch_size)

train_loader = DataLoader(train_data, batch_size=batch_size, shuffle=True)

dev_loader = DataLoader(dev_data, batch_size=valid_batch_size, shuffle=True)

# 加载模型

cnn_model = myModel()

# 加载预训练参数

cnn_model.load_state_dict(torch.load("model\\cnn_model.pth"), strict=False)

cnn_model = cnn_model.to(gpu_device)

loss_fun = nn.CrossEntropyLoss()

loss_fun = loss_fun.to(gpu_device)

# 迭代训练

epochs = 2

optimizer = torch.optim.Adam(cnn_model.parameters(),

lr=1e-3,

betas=(0.9, 0.999),

eps=1e-08,

weight_decay=0,

amsgrad=False)

total_train_step = 0

# valid_size = 0

# valid_num = 0

for epoch in range(epochs):

print("===========第{}轮训练开始===========".format(epoch + 1))

for trainData, validData in zip(train_loader, dev_loader):

train_seq, train_label = trainData

valid_seq, valid_label = validData

batch_size_train = len(train_seq)

batch_size_valid = len(valid_seq)

# print(batch_size_current)

if batch_size_train < batch_size or batch_size_valid < valid_batch_size:

print("当前不足一个batch_size,停止训练")

break

train_seq = train_seq.to(gpu_device)

train_label = train_label.to(gpu_device)

valid_seq = valid_seq.to(gpu_device)

valid_label = valid_label.to(gpu_device)

# print(train_seq)

# print(train_seq.shape)

# print(train_label)

# print("调用train model")

cnn_model.from_type = "train"

train_output = cnn_model(train_seq)

train_output = train_output.to(gpu_device)

# print("调用valid model")

cnn_model.from_type = "valid"

cnn_model.valid_batch_size = valid_batch_size

# valid_output = cnn_model(valid_seq)

# valid_output = valid_output.to(gpu_device)

# print(valid_output)

# print(valid_label)

# print(valid_output.argmax(1))

# print("训练集")

# print(train_output)

# print(train_output.argmax(1))

# print(train_label)

loss = loss_fun(train_output, train_label)

optimizer.zero_grad()

loss.backward(retain_graph=True)

optimizer.step()

total_train_step += 1

# valid_size += valid_batch_size

# valid_num += (valid_output.argmax(1) == valid_label).sum()

cnn_channel = ""

if (train_output.argmax(1) == train_label).sum() / batch_size > 0.65:

if cnn_model.channel["cnn1"]["status"]:

cnn_model.channel["cnn1"]["prob"] *= 1.0005

cnn_channel = "cnn1"

else:

cnn_model.channel["cnn2"]["prob"] *= 1.0005

cnn_channel = "cnn2"

if total_train_step % 50 == 0:

print("训练次数{},当前损失值 --------- {}".format(total_train_step, loss))

accuracy_train = (train_output.argmax(1) == train_label).sum() / batch_size

print("batch train-accuracy {}%".format(accuracy_train * 100))

# accuracy_valid = valid_num / valid_size

# print("total valid-accuracy {}%".format(accuracy_valid * 100))

print("model total_num {}".format(cnn_model.total_num))

print("当前执行的通道 {}".format(cnn_channel))

prob1 = cnn_model.channel["cnn1"]["prob"] / (cnn_model.channel["cnn1"]["prob"] + cnn_model.channel["cnn2"]["prob"])

prob2 = cnn_model.channel["cnn2"]["prob"] / (cnn_model.channel["cnn1"]["prob"] + cnn_model.channel["cnn2"]["prob"])

print("通道1概率值 {} 通道2概率值{}".format(prob1, prob2))

# 保存模型

torch.save(cnn_model.state_dict(), "model\\cnn_model.pth")

test.py

# total test-accuracy 91.00260416666667% ×

# total test-accuracy 79.90767045454545%

# total test-accuracy 81.09197443181819%

# total test-accuracy 81.17365056818181% # 加入dropout

# total test-accuracy 77.64382102272727% # 加入通路奖励机制

# total test-accuracy 76.68185763888889% # 通路奖励

# total test-accuracy 76.3367259174312%

import torch

import torchvision

from torch.utils.data import DataLoader

from loadDatasets import myDataLoader

from model import myModel

import csv

torch.set_default_tensor_type(torch.DoubleTensor)

torch.autograd.set_detect_anomaly = True

test_batch_size = 1024

gpu_device = torch.device("cuda:0")

test_data = myDataLoader('test.csv', 'datasets', transform=torchvision.transforms.ToTensor())

print("测试集数量{}".format(len(test_data)))

test_loader = DataLoader(test_data, batch_size=test_batch_size, shuffle=True)

# 加载模型

cnn_model = myModel()

cnn_model.load_state_dict(torch.load("model\\cnn_model.pth"), strict=False)

cnn_model.eval()

cnn_model = cnn_model.to(gpu_device)

test_size = 0

test_num = 0

for testData in test_loader:

test_seq, test_label = testData

test_seq = test_seq.to(gpu_device)

test_label = test_label.to(gpu_device)

batch_size_test = len(test_seq)

# print(batch_size_current)

if batch_size_test < test_batch_size:

print("当前不足一个batch_size,停止训练")

break

cnn_model.from_type = "test"

# test_output = cnn_model(test_seq)

test_output = cnn_model.forward(test_seq)

test_output = test_output.to(gpu_device)

test_size += test_batch_size

test_num += (test_output.argmax(1) == test_label).sum()

result_csv = open("result\\result.csv", "a")

csv_write = csv.writer(result_csv)

csv_write.writerow(['概率分布', '预测值', '真实值'])

for predict, label in zip(test_output, test_label):

# predict.to(torch.device("cpu"))

# label.to(torch.device("cpu"))

# print(predict)

probability_distribution = "[" + str(predict[0].to(torch.float64).item()) + "," + str(predict[1].to(torch.float64).item()) + "]"

# print(str(predict[0].to(torch.float64).item()) + " " + str(predict[1].to(torch.float64).item()))

# print(label.item())

label = label.item()

predict_cls = predict.view(-1, 2).argmax(1)

# print(predict_cls.item())

predict_cls = predict_cls.item()

csv_write.writerow([probability_distribution, predict_cls, label])

result_csv.close()

accuracy_test = test_num / test_size

print("total test-accuracy {}%".format(accuracy_test * 100))

model.py

import random

import torch.nn as nn

import torch

torch.set_default_tensor_type(torch.DoubleTensor)

class myModel(nn.Module):

batch_size = 128

def __init__(self):

super().__init__()

self.from_type = ""

self.total_num = 0

self.cnn1_num = 0

self.cnn2_num = 0

self.current_batch_size = self.batch_size

self.valid_batch_size = self.batch_size

self.channel = {

"cnn1": {

"prob": 0.5,

"status": False

},

"cnn2": {

"prob": 0.5,

"status": False

}

}

self.cnn1 = nn.Sequential(

nn.Conv2d(1, 3, (1, 2), padding="same"), # out = 10

nn.ReLU(inplace=False),

nn.Conv2d(3, 5, (1, 3)), # out = 8

nn.ReLU(inplace=False),

nn.Conv2d(5, 7, (1, 5)), # out = 4

nn.ReLU(inplace=False),

nn.Flatten(),

)

self.cnn2 = nn.Sequential(

nn.Conv2d(1, 2, (1, 2), padding="same"), # out = 10

nn.ReLU(inplace=False),

nn.Conv2d(2, 4, (1, 3)), # out = 8

nn.ReLU(inplace=False),

nn.Conv2d(4, 7, (1, 5)), # out = 4

nn.ReLU(inplace=False),

nn.Flatten(),

)

self.fc = nn.Sequential(

nn.Linear(28, 14),

nn.Dropout(0.5),

nn.Linear(14, 7),

# nn.Dropout(0.5),

nn.ReLU(inplace=False),

nn.Linear(7, 2),

nn.Softmax()

)

# self.relu = nn.Relu()

def forward(self, x):

if self.from_type == "valid":

self.current_batch_size = self.valid_batch_size

else:

self.current_batch_size = self.batch_size

# print("model batch size = {}".format(self.current_batch_size))

# print(x)

x = x.to(torch.device("cuda:0"))

# random_num1 = random.random()

self.total_num = self.total_num + 1

self.channel["cnn1"]["status"] = False

self.channel["cnn2"]["status"] = False

self.channel["cnn1"]["prob"] = self.channel["cnn1"]["prob"] / (

self.channel["cnn1"]["prob"] + self.channel["cnn2"]["prob"])

self.channel["cnn2"]["prob"] = self.channel["cnn2"]["prob"] / (

self.channel["cnn1"]["prob"] + self.channel["cnn2"]["prob"])

# if random_num1 < 0.5:

# x1 = torch.relu(self.cnn1(x))

# else:

# x1 = torch.tanh(self.cnn1(x))

# random_num2 = random.random()

# if random_num2 < 0.5:

# x2 = torch.relu(self.cnn1(x))

# else:

# x2 = torch.sigmoid(self.cnn1(x))

x1 = torch.zeros([self.batch_size, 28])

x2 = torch.zeros([self.batch_size, 28])

x1 = x1.to(torch.device("cuda:0"))

x2 = x2.to(torch.device("cuda:0"))

random_num = random.random()

if random_num < self.channel["cnn1"]["prob"]:

x1 = torch.relu(self.cnn1(x))

# print(self.x1.clone() + torch.relu(self.cnn1(x)))

# print(x1.shape)

# print(torch.relu(self.cnn1(x)).shape)

self.channel["cnn1"]["status"] = True

else:

x2 = torch.relu(self.cnn2(x))

# print(self.x2.clone() + torch.relu(self.cnn2(x)))

# print(x2.shape)

# print(torch.relu(self.cnn2(x)).shape)

self.channel["cnn2"]["status"] = True

x = (x1 * self.channel["cnn1"]["prob"] + x2 * self.channel["cnn2"]["prob"])

# x = x.view(batch_size, -1, 28)

# print(x.shape)

x = self.fc(x)

# print(x.shape)

# print("共执行了{}次".format(self.total_num))

return x

loadDatasets.py

import os

import pandas as pd

import torch

from torch.utils.data import Dataset

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

class myDataLoader(Dataset):

def __init__(self, annotations_file, root_dir, transform=None, target_transform=None):

full_path = os.path.join(root_dir, annotations_file)

self.csv_data = pd.read_csv(full_path)

# csv_data.drop(labels=None,axis=0, index=0, columns=None, inplace=True)

del self.csv_data['Unnamed: 0']

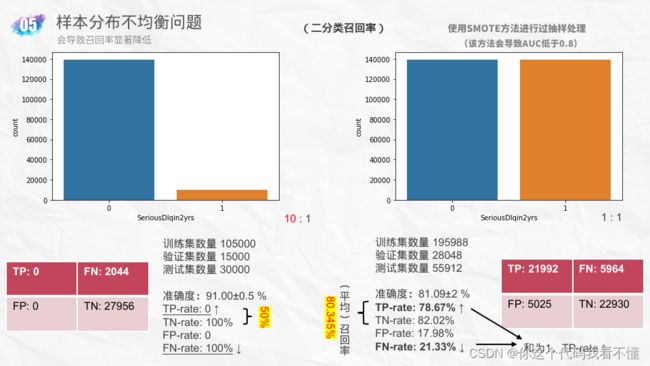

# Step7:样本不均衡问题

X = self.csv_data.drop('SeriousDlqin2yrs', axis=1)

y = self.csv_data['SeriousDlqin2yrs']

# sns.countplot(x='SeriousDlqin2yrs', data=self.csv_data)

# plt.show()

# 使用SMOTE方法进行过抽样处理

from imblearn.over_sampling import SMOTE # 过抽样处理库SMOTE

model_smote = SMOTE() # 建立SMOTE模型对象

X, y = model_smote.fit_resample(X, y) # 输入数据并作过抽样处理

self.csv_data = pd.concat([y, X], axis=1) # 按列合并数据框

# print(smote_resampled.head(5))

# groupby_data_smote = smote_resampled.groupby('SeriousDlqin2yrs').count() # 对label做分类汇总

# print(groupby_data_smote) # 打印输出经过SMOTE处理后的数据集样本分类分布

# sns.countplot(x='SeriousDlqin2yrs', data=smote_resampled)

# plt.show()

# 该方法导致AUC低于0.8

self.length = len(self.csv_data)

self.transform = transform

self.target_transform = target_transform

def __len__(self):

return self.length

def __getitem__(self, idx):

seq = self.csv_data.iloc[idx, 1:]

# 转换类型

seq = np.array(seq)

seq = torch.tensor(seq)

seq = seq.reshape(1, 1, 10)

label = self.csv_data.iloc[idx][0]

label = torch.tensor(label).long().item()

# label = torch.Tensor(label)

return seq, label

杂记

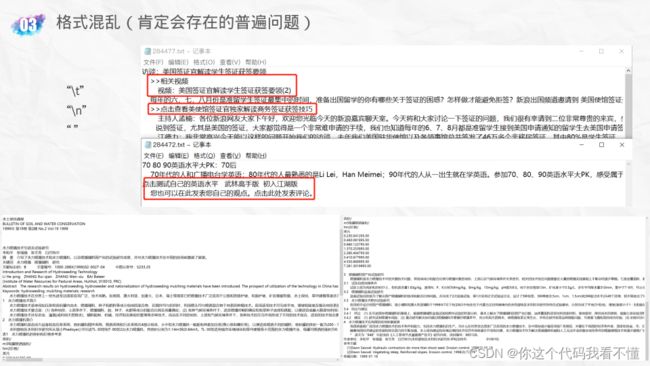

(爬虫,普遍问题)正文格式混乱,标点符号 特殊字符 ‘\t’ ‘\n’ ’ ‘,如果直接删除或者替换为’‘,怎么样才能不影响段落之间的关系?段落之间的’\n’怎么才能被识别出来?

(爬虫,普遍问题)正文格式混乱,标点符号 特殊字符 ‘\t’ ‘\n’ ’ ‘,如果直接删除或者替换为’‘,怎么样才能不影响段落之间的关系?段落之间的’\n’怎么才能被识别出来?

本来以为词嵌入之后向量内的数值和为1,并且

本来以为词嵌入之后向量内的数值和为1,并且

细想一下,概率平均分布的那个向量是在经过多层感知机和softmax分类之后得到的,而并不是这里的词向量。

![]()

冷门新闻

冷门新闻

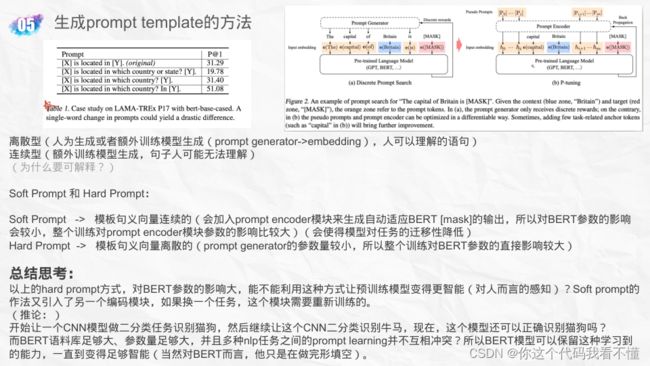

还是说soft prompt只是一种寻找prompt的方法,一旦训练好,便可一直使用,并且BERT模型的参数还是会在下游任务进行中得到微调的?(这种可能性比较小,因为引入的参数矩阵要适配下游任务,当然也可以将多个任务同时进行训练,感觉可能难以实现,但可以试试)

还是说soft prompt只是一种寻找prompt的方法,一旦训练好,便可一直使用,并且BERT模型的参数还是会在下游任务进行中得到微调的?(这种可能性比较小,因为引入的参数矩阵要适配下游任务,当然也可以将多个任务同时进行训练,感觉可能难以实现,但可以试试)

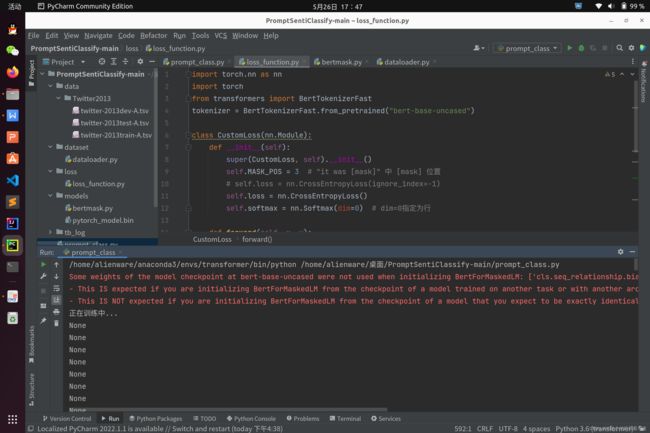

自定义损失函数踩坑

修改损失函数之后,发生了grad==none的情况(train_output.grad):

通过

通过grad_input = torch.autograd.grad(loss, [train_output], retain_graph=True)返回变量可以打印出来梯度,但此处应该只是计算出了中间变量梯度的值,并不会对反向传播起到作用:

虽然本项目的训练没有出现问题,最终损失值可以下降,但在另一个项目里边发生了损失不收敛的问题,所以目前无法确定是修改损失函数之后导致模型不收敛还是梯度没有反向传播回去(目前认为前者可能性更大一些,准备重新定义一个模型了)。

因为没有更多精力排查问题,所以现在暂时先避开可能导致出现这种问题的修改方式。建议对于模型输出的修改全部在model类的forward()中进行,尽量不要在损失函数中定义。

批归一化

num_features:特征的维度 (N,L) -> L ; (N,C,L) -> C:

class torch.nn.BatchNorm1d(num_features, eps=1e-05, momentum=0.1, affine=True) [source]

num_features:特征的维度 (N,C,X,Y) -> C:

class torch.nn.BatchNorm2d(num_features, eps=1e-05, momentum=0.1, affine=True)[source]

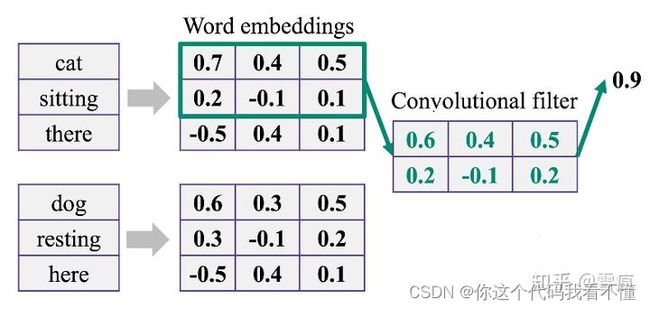

Con1d和Conv2d

Con1d和Conv2d的区别

图像的数据一般是三维的 W ∗ H ∗ C W*H*C W∗H∗C,文本的数据一般是二维的 L ∗ D L*D L∗D

C C C 代表图像的通道数, D D D 代表词向量的维度。

k e r n e l _ s i z e kernel\_size kernel_size:卷积核的尺寸

在Conv2D中,是一个二维的元组 w ∗ h w*h w∗h ,当然也可以传入整数,代表 w = = h w==h w==h ;

在Conv1D中,是整数 l l l 。

-

Conv2d:

如图,输入为 7 ∗ 7 ∗ 3 7*7*3 7∗7∗3的图片,卷积核大小为 3 ∗ 3 3*3 3∗3,卷积核个数为 2 2 2,参数量为 3 ∗ 3 ∗ 3 ∗ 2 3*3*3*2 3∗3∗3∗2 -

Conv1d:

如图,输入序列为 3 ∗ 3 3*3 3∗3的文本,卷积核大小为 2 2 2,个数为 1 1 1,参数量为 3 ∗ 2 ∗ 1 3*2*1 3∗2∗1 -

shape

[1,

2,

3,

4]

# [1, 2, 3, 4]

# torch.Size([4])

[[12,45],

[33,58],

[60,17],

[10,82]]

# torch.Size([4, 2])

torch.tensor([[12,45]]).shape

Out[31]: torch.Size([1, 2])

torch.tensor(

[[1],

[2],

[3],

[4]]).shape

Out[32]: torch.Size([4, 1])

torch.tensor(

[[[12,45],

[33,58],

[60,17],

[10,82]]]).shape

Out[34]: torch.Size([1, 4, 2])

膨胀卷积

这种效果类似于在卷积层之前添加了池化层,但膨胀卷积的作法可以在不增加参数量的情况下,保证输出维度不变。

稀疏Attention

-

膨胀注意力:

Atrous Self Attention就是启发于“膨胀卷积(Atrous Convolution)”,如下右图所示,它对相关性进行了约束,强行要求每个元素只跟它相对距离为k,2k,3k,…的元素关联,其中k>1是预先设定的超参数。

O ( n 2 / k ) O(n^2 / k) O(n2/k)

O ( n 2 / k ) O(n^2 / k) O(n2/k) -

Local Self Attention

显然Local Self Attention则要放弃全局关联,重新引入局部关联。具体来说也很简单,就是约束每个元素只与前后 k k k个元素以及自身有关联,如下图所示:

- Sparse Self Attention

Atrous Self Attention是带有一些洞的,而Local Self Attention正好填补了这些洞,所以一个简单的方式就是将Local Self Attention和Atrous Self Attention交替使用,两者累积起来,理论上也可以学习到全局关联性,也省了显存。

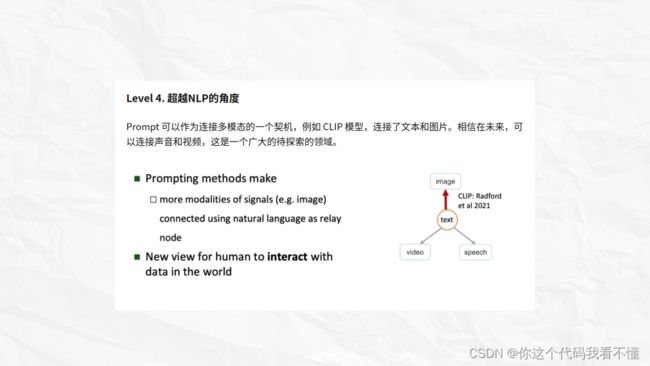

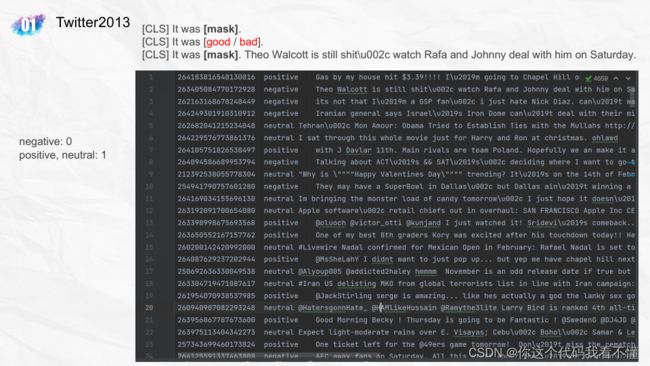

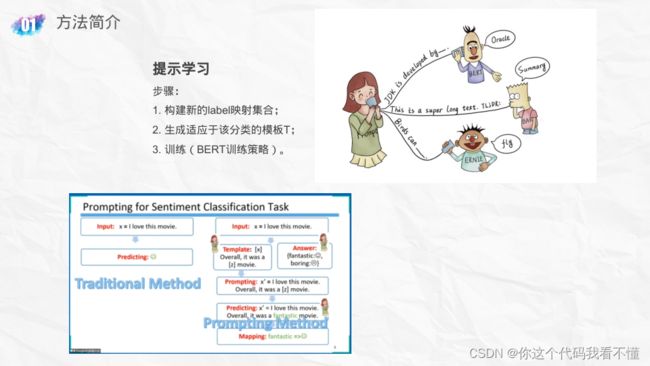

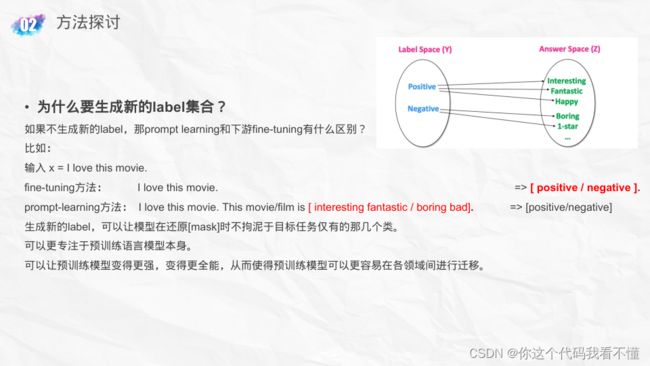

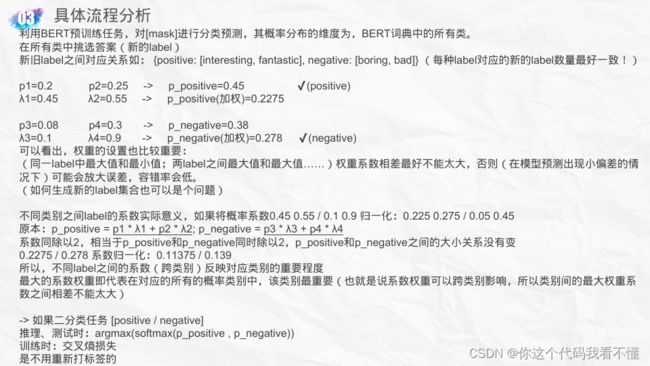

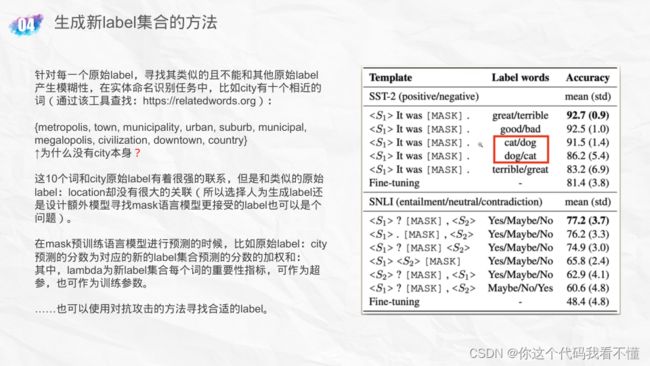

Prompt Learning初探

- PL VS 传统的下游任务微调法:

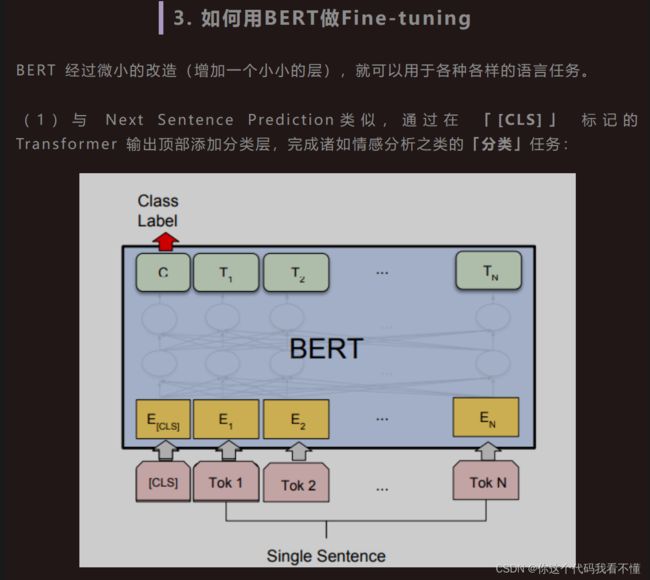

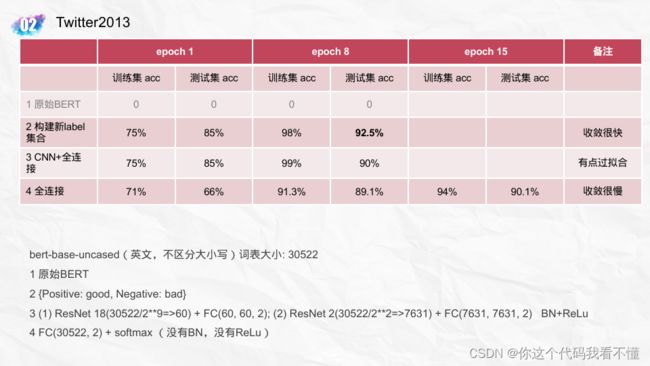

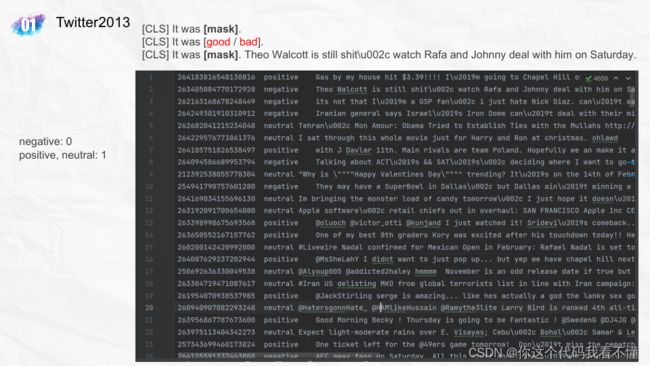

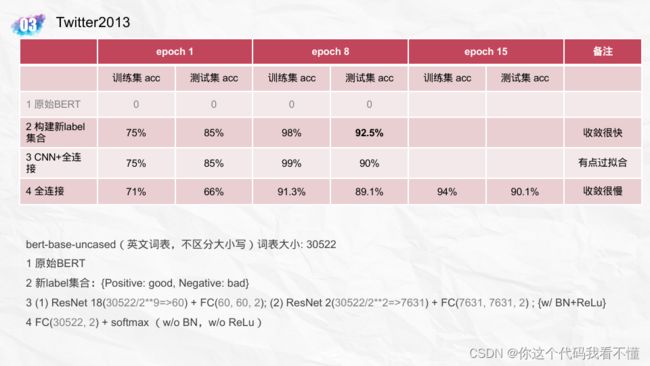

fine-tuning做分类的为[CLS]位置的输出,而prompt learning为[mask]位置的输出,易演顶针,鉴定为醍醐灌顶。

另外,既然本词表是uncased,那[mask]和[MASK],[CLS]和[cls]应该是一样的。

另外,既然本词表是uncased,那[mask]和[MASK],[CLS]和[cls]应该是一样的。

固定bert参数的方法

参数!直接给我冻结!!

手动反向传播和优化器

点击这里查看

l o s s = ( x ∗ w − y ) 2 loss=(x*w-y)^2 loss=(x∗w−y)2

g r a d w = 2 ∗ ( x ∗ w − y ) ∗ x grad_w=2*(x*w-y)*x gradw=2∗(x∗w−y)∗x

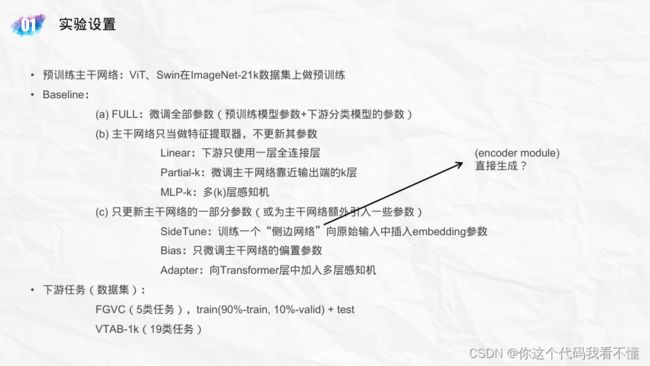

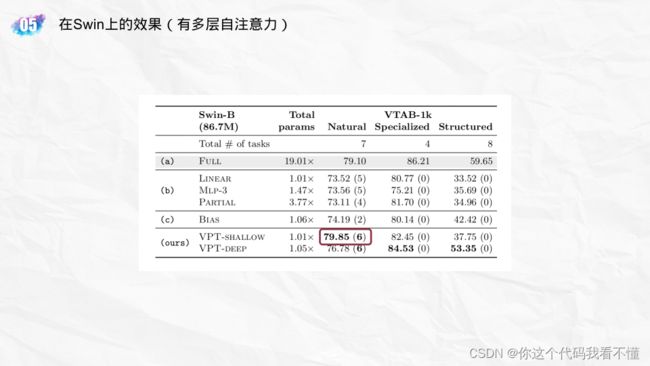

Visual Prompt Tuning (VPT)

- 固定住bert参数试一试

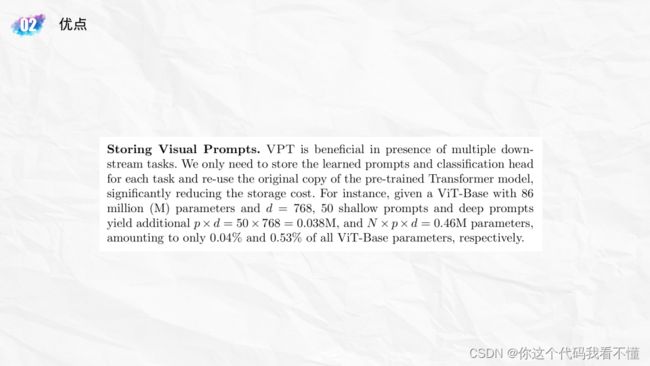

优点:新产生的参数量少

优点:新产生的参数量少

d一般为某数的平方(如果输入图像为正方形)

Swin多头?

Swin多头?

d_model / h

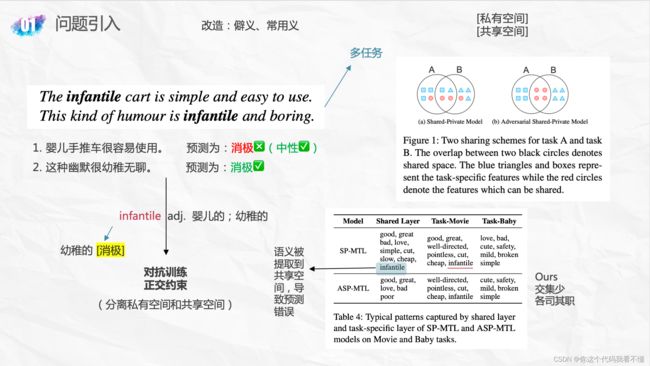

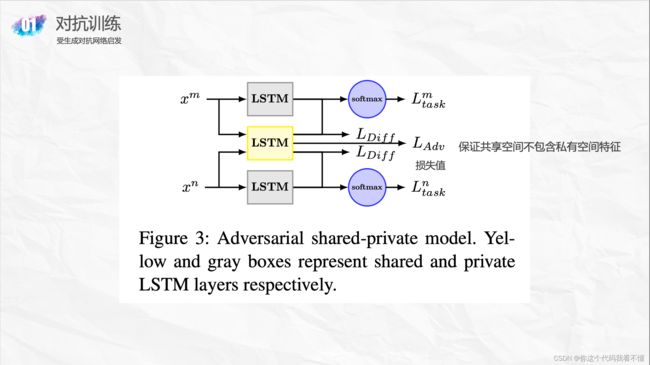

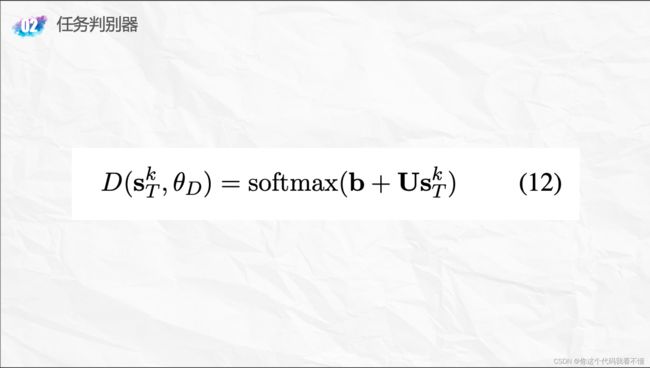

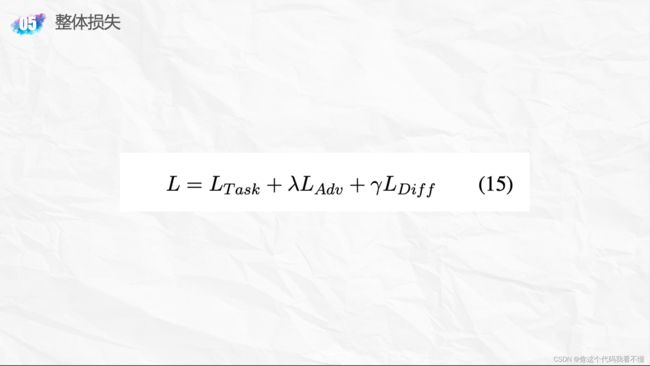

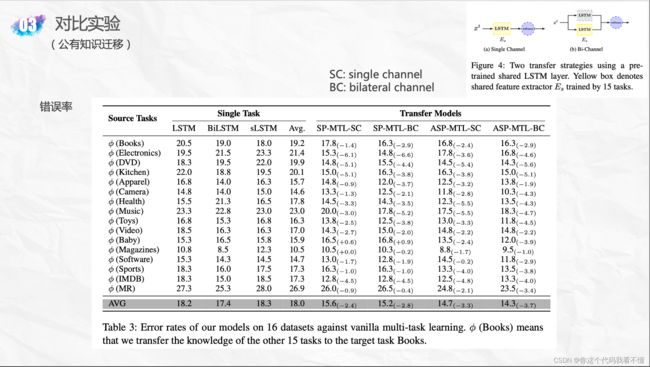

Adversarial Multi-task Learning for Text Classification

- 节省空间

reStructured Pre-training

Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer「谷歌:T5」

- 智慧的眼神?

( a + b ) m i n = a m i n + b m i n (a + b)_{min} = a_{min} + b_{min} (a+b)min=amin+bmin

Next

自然语言处理NLP文本分类顶会论文阅读笔记(二)

- 以防笔记丢失,先发布为妙(●’◡’●)周更ing…