Audio Capture via FFmpeg


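- Introduction to FFmpeg

- Capturing audio from the FFmpeg command line

- Capturing audio with the FFmpeg API

- Audio resampling

- Audio capture code

- The open_cap_device function

- The enum_dshow_acap_devices function

- The open_output_audio_file function

- The open_output_file function

- The init_audio_encoder function

- The audio_transcode function

- The decode_a_frame function

- The read_decode_convert_and_store function

- The decode_av_frame function

- The load_encode_and_write function

- The read_samples_from_fifo function

- The encode_av_frame function

- The flush_encoder function

- Capturing with other frameworks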

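Introduction to FFmpeg

FFmpeg is an open-source suite of programs for recording and converting digital audio and video and for turning them into streams. It is licensed under the LGPL or the GPL, depending on how it is built. It offers a complete solution for recording, converting, and streaming audio and video. It includes libavcodec, a leading audio/video codec library; to ensure high portability and codec quality, much of the code in libavcodec was written from scratch.

FFmpeg is developed on Linux, but it can also be compiled and run on other operating systems, including Windows and Mac OS X. The project was started by Fabrice Bellard and was maintained mainly by Michael Niedermayer from 2004 to 2015. Many FFmpeg developers come from the MPlayer project, and at the time of writing FFmpeg is also hosted on the MPlayer project's servers. The name comes from the MPEG video coding standards, and the leading "FF" stands for "Fast Forward".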

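Capturing audio from the FFmpeg command line

FFmpeg ships a ready-made program that can capture audio from the command line.

- First, enumerate the audio capture devices on the computer: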

>ffmpeg.exe -list_devices true -f dshow -i dummy

… …

[dshow @ 007bd020] DirectShow video devices (some may be both video and audio devices)

[dshow @ 007bd020] "USB Web Camera - HD"

[dshow @ 007bd020] Alternative name "@device_pnp_\\?\usb#vid_1bcf&pid_288e&mi_00#7&6c75a67&0&0000#{65e8773d-8f56-11d0-a3b9-00a0c9223196}\global"

[dshow @ 003cd000] DirectShow audio devices

[dshow @ 003cd000] "Microphone (Realtek High Defini"

[dshow @ 003cd000] Alternative name "@device_cm_{33D9A762-90C8-11D0-BD43-00A0C911CE86}\Microphone (Realtek High Defini"

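My machine has two capture devices: a camera for capturing video and a microphone for capturing audio, "Microphone (Realtek High Defini" (the name is truncated here because it is too long).

- Then select the microphone device and start capturing: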

>ffmpeg.exe -f dshow -i audio="Microphone (Realtek High Defini" d:\test.mp3

... ...

Guessed Channel Layout for Input Stream #0.0 : stereo

Input #0, dshow, from 'audio=Microphone (Realtek High Defini':

Duration: N/A, start: 38604.081000, bitrate: 1411 kb/s

Stream #0:0: Audio: pcm_s16le, 44100 Hz, stereo, s16, 1411 kb/s

Stream mapping:

Stream #0:0 -> #0:0 (pcm_s16le (native) -> mp3 (libmp3lame))

Press [q] to stop, [?] for help

Output #0, mp3, to 'd:\test.mp3':

Metadata:

TSSE : Lavf57.71.100

Stream #0:0: Audio: mp3 (libmp3lame), 44100 Hz, stereo, s16p

Metadata:

encoder : Lavc57.89.100 libmp3lame

size=102kB time=00:00:06.48 bitrate=128.7kbits/s speed=2.13x ← status of the recording in progress, updated in real time

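On Windows, FFmpeg captures through the DirectShow input device, encodes the samples with an MP3 encoder (libmp3lame in the log above), and writes the result to disk through its file writer.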
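
The 1411 kb/s reported for the input stream in the log above follows directly from the capture format (pcm_s16le, 44100 Hz, stereo). The sketch below reproduces that arithmetic; the function names are made up for illustration and are not part of FFmpeg.

```cpp
#include <cstdint>

// Raw PCM bit rate in bits per second: each second carries `sample_rate`
// sample frames, and each frame holds `channels` samples of
// `bits_per_sample` bits. (Hypothetical helper, not an FFmpeg function.)
constexpr std::int64_t pcm_bit_rate(int sample_rate, int channels, int bits_per_sample)
{
    return static_cast<std::int64_t>(sample_rate) * channels * bits_per_sample;
}

// Number of raw PCM bytes produced by `seconds` of capture.
constexpr std::int64_t pcm_bytes(int sample_rate, int channels, int bits_per_sample, int seconds)
{
    return pcm_bit_rate(sample_rate, channels, bits_per_sample) / 8 * seconds;
}
```

For 44100 Hz, 2 channels, and 16 bits per sample this gives 1,411,200 bit/s, which the log rounds to 1411 kb/s. The MP3 output in the same log runs at roughly 128 kbit/s, about a tenth of the raw rate.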

Capturing audio with the FFmpeg API

FFmpeg also provides a complete API for capturing audio.

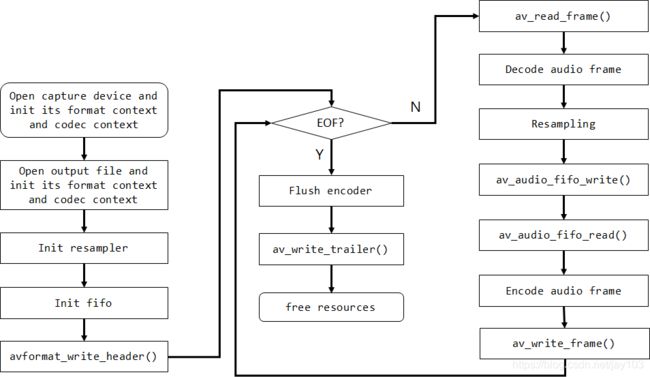

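The flowchart outlines how audio is captured and encoded with FFmpeg. The same flow can be used to encode MP3, AAC, FLAC, and any other audio format that FFmpeg supports.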

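Audio resampling

Audio resampling is the process of converting audio data that has already been sampled. It is needed, for example, when the input and output sample rates differ; FFmpeg's resampler is also used when the channel counts differ. The main steps of resampling are decimation and interpolation. Because decimation can introduce aliasing and interpolation can introduce images, an anti-aliasing filter is applied before decimation and an anti-imaging filter after interpolation. Both filters are implemented as low-pass filters.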
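
To make decimation and interpolation concrete, here is a deliberately crude sketch for integer factors only. It averages samples before decimating (a very rough anti-aliasing low-pass) and uses linear interpolation when upsampling. The function names are illustrative, and this is not how FFmpeg's resampler is implemented; real resamplers use far better filters.

```cpp
#include <cstddef>
#include <vector>

// Downsample by an integer factor. Each output sample is the mean of
// `factor` consecutive input samples; the averaging acts as a crude
// low-pass (anti-aliasing) filter applied before decimation.
std::vector<float> decimate(const std::vector<float>& in, std::size_t factor)
{
    std::vector<float> out;
    if (factor == 0) return out;
    for (std::size_t i = 0; i + factor <= in.size(); i += factor) {
        float sum = 0.0f;
        for (std::size_t k = 0; k < factor; ++k) sum += in[i + k];
        out.push_back(sum / static_cast<float>(factor));
    }
    return out;
}

// Upsample by an integer factor with linear interpolation between
// neighbouring input samples; the last input sample is copied as-is.
std::vector<float> interpolate(const std::vector<float>& in, std::size_t factor)
{
    std::vector<float> out;
    if (factor == 0 || in.empty()) return out;
    for (std::size_t i = 0; i + 1 < in.size(); ++i) {
        for (std::size_t k = 0; k < factor; ++k) {
            const float t = static_cast<float>(k) / static_cast<float>(factor);
            out.push_back(in[i] + (in[i + 1] - in[i]) * t);
        }
    }
    out.push_back(in.back());
    return out;
}
```

Decimating {1, 3, 5, 7} by 2 yields {2, 6}, and interpolating {0, 2} by 2 yields {0, 1, 2}.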

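FFmpeg exposes a resampling API through libswresample. The typical flow is to allocate and configure an SwrContext, initialize it, convert each block of samples, and free the context when done.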

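Audio capture code

Below is the outline code for the whole FFmpeg capture process. The implementations of the individual functions and the resource cleanup are omitted here.

The code in this article is based on FFmpeg 4.1.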

hr = open_cap_device(AVMEDIA_TYPE_AUDIO, &cap_fmt_ctx, &cap_codec_ctx);

GOTO_IF_FAILED(hr);

hr = open_output_audio_file(out_file, cap_codec_ctx, &out_fmt_ctx, &enc_ctx);

GOTO_IF_FAILED(hr);

hr = init_resampler(cap_codec_ctx, enc_ctx, &resample_ctx);

GOTO_IF_FAILED(hr);

hr = init_fifo(&fifo, enc_ctx);

GOTO_IF_FAILED(hr);

hr = avformat_write_header(out_fmt_ctx, NULL);

GOTO_IF_FAILED(hr);

while (_kbhit() == 0) { // Keep capturing audio until a key is pressed.

int finished = 0;

hr = audio_transcode( cap_fmt_ctx, cap_codec_ctx, out_fmt_ctx, enc_ctx,

fifo, resample_ctx, 0, &finished, false, true );

GOTO_IF_FAILED(hr);

if (finished)

break;

}

flush_encoder(out_fmt_ctx, enc_ctx, false, true); // same interleaved/init_pts values as the audio_transcode call above

hr = av_write_trailer(out_fmt_ctx);

GOTO_IF_FAILED(hr);
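
The error-handling macros used throughout the listings (GOTO_IF_FAILED, RETURN_IF_NULL, and so on) are not defined in the article. The sketch below shows one plausible set of definitions, inferred only from how the macros are used; the real ones may differ. It assumes that failure means a negative value, which holds for both FFmpeg error codes and Windows HRESULT values, and that GOTO_IF_FAILED jumps to a label named RESOURCE_FREE.

```cpp
// Hypothetical definitions; the article does not show the real ones.
// On Windows, FAILED/SUCCEEDED already exist with the same meaning.
#ifndef FAILED
#define FAILED(hr) ((hr) < 0)
#endif
#ifndef SUCCEEDED
#define SUCCEEDED(hr) ((hr) >= 0)
#endif

#define RETURN_IF_FAILED(hr) do { if (FAILED(hr)) return (hr); } while (0)
#define RETURN_IF_NULL(p)    do { if ((p) == nullptr) return -1; } while (0)
#define GOTO_LABEL_IF_FAILED(hr, label) do { if (FAILED(hr)) goto label; } while (0)
#define GOTO_IF_FAILED(hr)   GOTO_LABEL_IF_FAILED(hr, RESOURCE_FREE)

// Demo: stop at the first failing step, but always run the cleanup code.
int run_steps(int first, int second, bool* cleaned_up)
{
    RETURN_IF_NULL(cleaned_up);
    int hr = first;
    GOTO_IF_FAILED(hr);
    hr = second;
    GOTO_IF_FAILED(hr);
    hr = 0;
RESOURCE_FREE:
    *cleaned_up = true;  // stands in for releasing resources
    return hr;
}
```

The single cleanup label keeps each function to one exit path after resources have been acquired, which is the pattern the listings follow.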

The open_cap_device function

On Windows, FFmpeg captures through DirectShow devices. Here we first enumerate all the devices and then open the first one in the list.

int open_cap_device(

AVMediaType cap_type,

AVFormatContext **cap_fmt_ctx,

AVCodecContext **cap_codec_ctx,

AVDictionary** options = NULL)

{

RETURN_IF_NULL(cap_fmt_ctx);

RETURN_IF_NULL(cap_codec_ctx);

*cap_fmt_ctx = NULL;

*cap_codec_ctx = NULL;

int hr = -1;

std::string cap_device_name;

std::vector<std::wstring> cap_devices;

avdevice_register_all();

CoInitialize(NULL);

AVInputFormat* input_fmt = av_find_input_format("dshow");

GOTO_IF_NULL(input_fmt);

switch (cap_type) {

case AVMEDIA_TYPE_AUDIO:

cap_device_name = "audio=";

hr = enum_dshow_acap_devices(cap_devices);

break;

}

GOTO_IF_FAILED(hr);

cap_device_name += unicodeToUtf8(cap_devices[0].c_str());

hr = avformat_open_input(cap_fmt_ctx, cap_device_name.c_str(), input_fmt, options);

GOTO_IF_FAILED(hr);

hr = avformat_find_stream_info(*cap_fmt_ctx, NULL);

GOTO_IF_FAILED(hr);

for (unsigned int i = 0; i < (*cap_fmt_ctx)->nb_streams; i++) {

AVCodecParameters* codec_par = (*cap_fmt_ctx)->streams[i]->codecpar;

if (codec_par->codec_type == cap_type) {

av_dump_format(*cap_fmt_ctx, i, NULL, 0);

AVCodec* decoder = avcodec_find_decoder(codec_par->codec_id);

GOTO_IF_NULL(decoder);

*cap_codec_ctx = avcodec_alloc_context3(decoder);

GOTO_IF_NULL(*cap_codec_ctx);

/** initialize the stream parameters with demuxer information */

hr = avcodec_parameters_to_context(*cap_codec_ctx, codec_par);

GOTO_LABEL_IF_FAILED(hr, OnErr);

hr = avcodec_open2(*cap_codec_ctx, decoder, NULL);

GOTO_LABEL_IF_FAILED(hr, OnErr); // release both contexts if the decoder cannot be opened

break;

}

}

GOTO_IF_NULL(*cap_codec_ctx);

hr = 0;

RESOURCE_FREE:

CoUninitialize();

return hr;

OnErr:

avformat_close_input(cap_fmt_ctx); // a context opened with avformat_open_input must be closed, not just freed

*cap_fmt_ctx = NULL;

if (NULL != *cap_codec_ctx)

avcodec_free_context(cap_codec_ctx);

goto RESOURCE_FREE;

}
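
open_cap_device converts the wide-character device name with unicodeToUtf8, which the article does not show. On Windows it could be implemented with WideCharToMultiByte; the portable sketch below is a hypothetical stand-in (the name wide_to_utf8 is made up) that encodes the code points by hand and copes with both 16-bit and 32-bit wchar_t.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>

// Hypothetical stand-in for the article's unicodeToUtf8 helper.
// Handles 16-bit wchar_t (UTF-16, as on Windows) and 32-bit wchar_t.
std::string wide_to_utf8(const std::wstring& in)
{
    std::string out;
    for (std::size_t i = 0; i < in.size(); ++i) {
        std::uint32_t cp = static_cast<std::uint32_t>(in[i]);
        // Combine a UTF-16 surrogate pair into a single code point.
        if (cp >= 0xD800 && cp <= 0xDBFF && i + 1 < in.size()) {
            const std::uint32_t lo = static_cast<std::uint32_t>(in[i + 1]);
            if (lo >= 0xDC00 && lo <= 0xDFFF) {
                cp = 0x10000 + ((cp - 0xD800) << 10) + (lo - 0xDC00);
                ++i;
            }
        }
        if (cp < 0x80) {                       // 1 byte
            out += static_cast<char>(cp);
        } else if (cp < 0x800) {               // 2 bytes
            out += static_cast<char>(0xC0 | (cp >> 6));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        } else if (cp < 0x10000) {             // 3 bytes
            out += static_cast<char>(0xE0 | (cp >> 12));
            out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        } else {                               // 4 bytes
            out += static_cast<char>(0xF0 | (cp >> 18));
            out += static_cast<char>(0x80 | ((cp >> 12) & 0x3F));
            out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        }
    }
    return out;
}
```

The conversion matters because DirectShow returns friendly names as wide strings, while the device string passed to avformat_open_input is a narrow string; a non-ASCII device name would otherwise not match.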

The enum_dshow_acap_devices function

The following code uses the DirectShow API to enumerate the audio capture devices.

HRESULT enum_dshow_acap_devices(std::vector<std::wstring>& devices)

{

return enum_dshow_devices(CLSID_AudioInputDeviceCategory, devices);

}

HRESULT enum_dshow_devices(const IID& deviceCategory, std::vector<std::wstring>& devices)

{

HRESULT hr = E_FAIL;

CComPtr <ICreateDevEnum> pDevEnum =NULL;

CComPtr <IEnumMoniker> pClassEnum = NULL;

CComPtr<IMoniker> pMoniker =NULL;

ULONG cFetched = 0;

hr = CoCreateInstance(CLSID_SystemDeviceEnum, NULL, CLSCTX_INPROC, IID_ICreateDevEnum, (void**)&pDevEnum);

RETURN_IF_FAILED(hr);

hr = pDevEnum->CreateClassEnumerator(deviceCategory, &pClassEnum, 0);

RETURN_IF_FAILED(hr);

// If there are no enumerators for the requested type, then

// CreateClassEnumerator will succeed, but pClassEnum will be NULL.

RETURN_IF_NULL(pClassEnum);

while (S_OK == (pClassEnum->Next(1, &pMoniker, &cFetched))) {

CComPtr<IPropertyBag> pPropertyBag = NULL;

hr = pMoniker->BindToStorage(NULL, NULL, IID_IPropertyBag, (void**)&pPropertyBag);

pMoniker = NULL;

if (FAILED(hr))

continue;

CComVariant friendlyName;

friendlyName.vt = VT_BSTR;

hr = pPropertyBag->Read(L"FriendlyName", &friendlyName, NULL) ;

if (SUCCEEDED(hr)) {

std::wstring strFriendlyName(friendlyName.bstrVal);

devices.push_back(strFriendlyName);

}

}

RETURN_IF_TRUE(devices.empty(), E_FAIL);

return S_OK;

}

The open_output_audio_file function

Opens an output file and initializes the audio encoder.

int open_output_audio_file(

const char *file_name,

AVCodecContext *dec_ctx,

AVFormatContext **out_fmt_ctx,

AVCodecContext **enc_ctx)

{

RETURN_IF_NULL(enc_ctx);

int hr = -1;

AVCodecContext *codec_ctx = NULL;

hr = open_output_file(file_name, dec_ctx->codec_type, out_fmt_ctx, &codec_ctx);

RETURN_IF_FAILED(hr);

hr = init_audio_encoder(dec_ctx->sample_rate, *out_fmt_ctx, 0, codec_ctx);

GOTO_LABEL_IF_FAILED(hr, OnErr);

*enc_ctx = codec_ctx;

return 0;

OnErr:

avcodec_free_context(&codec_ctx);

avio_closep(&(*out_fmt_ctx)->pb);

avformat_free_context(*out_fmt_ctx);

*out_fmt_ctx = NULL;

*enc_ctx = NULL;

return hr;

}

The open_output_file function

Guesses the container format from the file extension and selects that format's default audio encoder.

int open_output_file(

const char *file_name,

AVMediaType stream_type,

AVFormatContext **out_fmt_ctx,

AVCodecContext **enc_ctx )

{

RETURN_IF_NULL(file_name);

RETURN_IF_NULL(out_fmt_ctx);

RETURN_IF_NULL(enc_ctx);

int hr = -1;

AVIOContext *output_io_ctx = NULL;

/** Open the output file to write to it. */

hr = avio_open(&output_io_ctx, file_name, AVIO_FLAG_WRITE);

RETURN_IF_FAILED(hr);

/** Create a new format context for the output container format. */

*out_fmt_ctx = avformat_alloc_context();

RETURN_IF_NULL(*out_fmt_ctx);

/** Associate the output file (pointer) with the container format context. */

(*out_fmt_ctx)->pb = output_io_ctx;

/** Guess the desired container format based on the file extension. */

(*out_fmt_ctx)->oformat = av_guess_format(NULL, file_name, NULL);

GOTO_LABEL_IF_NULL((*out_fmt_ctx)->oformat, OnErr);

char*& url = (*out_fmt_ctx)->url;

if (NULL == url)

url = av_strdup(file_name);

/** Find the encoder to be used by its name. */

AVCodecID out_codec_id = AV_CODEC_ID_NONE;

switch (stream_type) {

case AVMEDIA_TYPE_AUDIO:

out_codec_id = (*out_fmt_ctx)->oformat->audio_codec;

break;

}

int stream_idx = add_stream_and_alloc_enc(out_codec_id, *out_fmt_ctx, enc_ctx);

GOTO_LABEL_IF_FALSE(stream_idx >= 0, OnErr);

return 0;

OnErr:

avio_closep(&(*out_fmt_ctx)->pb);

avformat_free_context(*out_fmt_ctx);

*out_fmt_ctx = NULL;

*enc_ctx = NULL;

return hr;

}

The init_audio_encoder function

Initializes the basic audio parameters, such as the channel layout, sample rate, bit rate, and time base.

int init_audio_encoder(

int sample_rate,

const AVFormatContext* out_fmt_ctx,

unsigned int audio_stream_idx,

AVCodecContext *codec_ctx,

AVSampleFormat sample_fmt = AV_SAMPLE_FMT_NONE,

uint64_t channel_layout = AV_CH_FRONT_LEFT | AV_CH_FRONT_RIGHT,

int64_t bit_rate = 64000 )

{

int hr = -1;

RETURN_IF_NULL(codec_ctx);

RETURN_IF_NULL(out_fmt_ctx);

RETURN_IF_FALSE(audio_stream_idx < out_fmt_ctx->nb_streams);

/**

* Set the basic encoder parameters.

* The input file's sample rate is used to avoid a sample rate conversion.

*/

codec_ctx->channel_layout = channel_layout;

codec_ctx->channels = av_get_channel_layout_nb_channels(channel_layout);

codec_ctx->sample_rate = sample_rate;

codec_ctx->sample_fmt = (sample_fmt != AV_SAMPLE_FMT_NONE) ? sample_fmt : codec_ctx->codec->sample_fmts[0];

codec_ctx->bit_rate = bit_rate;

/** Allow the use of the experimental encoder */

codec_ctx->strict_std_compliance = FF_COMPLIANCE_EXPERIMENTAL;

codec_ctx->time_base.den = sample_rate;

codec_ctx->time_base.num = 1;

/**

* Some container formats (like MP4) require global headers to be present

* Mark the encoder so that it behaves accordingly.

*/

if (out_fmt_ctx->oformat->flags & AVFMT_GLOBALHEADER)

codec_ctx->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;

AVStream* stream = out_fmt_ctx->streams[audio_stream_idx];

stream->time_base = codec_ctx->time_base;

/** Open the encoder for the audio stream to use it later. */

hr = avcodec_open2(codec_ctx, codec_ctx->codec, NULL);

RETURN_IF_FAILED(hr);

hr = avcodec_parameters_from_context(stream->codecpar, codec_ctx);

RETURN_IF_FAILED(hr);

return 0;

}
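
In init_audio_encoder the channel count is derived from the channel layout, which is a bit mask with one bit per speaker position. Conceptually, av_get_channel_layout_nb_channels counts the set bits in that mask. The sketch below shows the idea without FFmpeg headers; the two constants are local copies whose values mirror AV_CH_FRONT_LEFT and AV_CH_FRONT_RIGHT, and count_channels is an illustrative name.

```cpp
#include <cstdint>

// Local copies of two channel-mask bits so that the sketch compiles
// without FFmpeg headers (values mirror AV_CH_FRONT_LEFT / AV_CH_FRONT_RIGHT).
constexpr std::uint64_t CH_FRONT_LEFT  = 0x1;
constexpr std::uint64_t CH_FRONT_RIGHT = 0x2;

// Count the set bits in a channel-layout mask: one bit per channel.
int count_channels(std::uint64_t layout)
{
    int n = 0;
    while (layout != 0) {
        layout &= layout - 1;  // clear the lowest set bit
        ++n;
    }
    return n;
}
```

With the default layout used above (front left plus front right), the count is 2, that is, stereo.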

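The audio_transcode function

In theory the captured data is raw PCM, which needs no decoding and could be encoded directly. However, because the FIFO and the resampler are needed anyway, and in order to share code with real transcoding, the logic is wrapped in the audio_transcode function, which is also called during capture.

Note: decoded audio cannot be fed to the encoder directly. It has to pass through a FIFO (first-in, first-out queue), because the frame sizes (samples per frame) of the decoder and the encoder often differ. (For video, the frame size is the same in the narrow sense, although the pixel format, such as RGB or YUV, may differ.)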

int audio_transcode(

AVFormatContext* in_fmt_ctx,

AVCodecContext* dec_ctx,

AVFormatContext* out_fmt_ctx,

AVCodecContext* enc_ctx,

AVAudioFifo* fifo,

SwrContext* resample_ctx,

int aud_stream_index,

int* finished,

bool interleaved = false,

bool init_pts = false)

{

int hr = -1;

audio_base_info out_aud_info(enc_ctx);

hr = decode_a_frame(in_fmt_ctx, dec_ctx, &out_aud_info, fifo, resample_ctx, aud_stream_index, finished);

RETURN_IF_FAILED(hr);

/**

* If we have enough samples for the encoder, we encode them.

* At the end of the file, we pass the remaining samples to the encoder.

*/

while (av_audio_fifo_size(fifo) >= enc_ctx->frame_size ||

(*finished && av_audio_fifo_size(fifo) > 0)) {

/**

* Take one frame worth of audio samples from the FIFO buffer,

* encode it and write it to the output file.

*/

hr = load_encode_and_write(fifo, out_fmt_ctx, enc_ctx, &out_aud_info, interleaved, init_pts);

RETURN_IF_FAILED(hr);

}

/**

* If we are at the end of the input file and have encoded

* all remaining samples, we can exit this loop and finish.

*/

if (*finished)

flush_encoder(out_fmt_ctx, enc_ctx, interleaved, init_pts);

return 0;

}
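
The role of the FIFO is easier to see with numbers. The sketch below uses a toy mono FIFO (a stand-in for AVAudioFifo, not its real API) and counts how many full encoder frames can be drained when the input arrives in blocks of a different size. All names are illustrative.

```cpp
#include <algorithm>
#include <cstddef>
#include <deque>
#include <vector>

// Toy mono sample FIFO; a stand-in for AVAudioFifo, not its real API.
class SampleFifo {
public:
    void write(const std::vector<short>& samples) {
        buf_.insert(buf_.end(), samples.begin(), samples.end());
    }
    // Remove and return up to max_samples samples, oldest first.
    std::vector<short> read(std::size_t max_samples) {
        const std::size_t n = std::min(max_samples, buf_.size());
        const auto end = buf_.begin() + static_cast<std::ptrdiff_t>(n);
        std::vector<short> out(buf_.begin(), end);
        buf_.erase(buf_.begin(), end);
        return out;
    }
    std::size_t size() const { return buf_.size(); }
private:
    std::deque<short> buf_;
};

// Feed `chunks` input blocks of `in_size` samples and count the full
// encoder frames of `frame_size` samples that can be drained, mirroring
// the "FIFO size >= frame_size" loop in audio_transcode.
std::size_t count_full_frames(std::size_t chunks, std::size_t in_size,
                              std::size_t frame_size, std::size_t* leftover)
{
    SampleFifo fifo;
    std::size_t frames = 0;
    for (std::size_t c = 0; c < chunks; ++c) {
        fifo.write(std::vector<short>(in_size, 0));
        while (frame_size > 0 && fifo.size() >= frame_size) {
            fifo.read(frame_size);
            ++frames;
        }
    }
    if (leftover != nullptr) *leftover = fifo.size();
    return frames;
}
```

Feeding five blocks of 1000 samples into an encoder that wants 1152 samples per frame (the MPEG-1 Layer III frame size) yields four full frames and leaves 392 samples in the FIFO. Those leftover samples are what the "finished and FIFO not empty" branch of the loop above hands to the encoder at the end.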

The decode_a_frame function

This is only the outer wrapper around decoding.

int decode_a_frame(

AVFormatContext* in_fmt_ctx,

AVCodecContext* dec_ctx,

audio_base_info* out_aud_info,

AVAudioFifo* fifo,

SwrContext* resample_ctx,

int audio_stream_index,

int* finished)

{

int hr = AVERROR_EXIT;

/* Make sure that there is one frame worth of samples in the FIFO

* buffer so that the encoder can do its work.

* Since the decoder's and the encoder's frame size may differ, we

* need a FIFO buffer to store as many frames worth of input samples

* that they make up at least one frame worth of output samples. */

while (av_audio_fifo_size(fifo) < out_aud_info->frame_size) {

/* Decode one frame worth of audio samples, convert it to the

* output sample format and put it into the FIFO buffer. */

hr = read_decode_convert_and_store(fifo, in_fmt_ctx, dec_ctx, out_aud_info,

resample_ctx, audio_stream_index, finished);

RETURN_IF_FAILED(hr);

if (*finished)

break;

}

return hr;

}

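The read_decode_convert_and_store function

Another wrapper layer: it reads and decodes one frame, converts it, and stores the result in the FIFO.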

int read_decode_convert_and_store(

AVAudioFifo *fifo,

AVFormatContext *in_fmt_ctx,

AVCodecContext *dec_ctx,

audio_base_info* out_aud_info,

SwrContext *resampler_ctx,

int audio_stream_index,

int *finished)

{

RETURN_IF_NULL(finished);

/** Temporary storage of the input samples of the frame read from the file. */

std::vector<AVFrame*> decoded_frames;

int hr = AVERROR_EXIT;

/** Decode one frame worth of audio samples. */

hr = decode_av_frame(in_fmt_ctx, dec_ctx, audio_stream_index, decoded_frames, finished);

/**

* If we are at the end of the file and there are no more samples

* in the decoder which are delayed, we are actually finished.

* This must not be treated as an error.

*/

if (*finished && decoded_frames.empty()) {

hr = 0;

goto RESOURCE_FREE;

}

if (FAILED(hr) && decoded_frames.empty())

GOTO_IF_FAILED(hr);

/** If there is decoded data, convert and store it */

for (size_t i = 0; i < decoded_frames.size(); ++i) {

AVFrame* frame = decoded_frames[i];

hr = resample_and_store(frame, dec_ctx->sample_rate, out_aud_info, resampler_ctx, fifo);

GOTO_IF_FAILED(hr);

}

hr = 0;

RESOURCE_FREE:

for (size_t i = 0; i < decoded_frames.size(); ++i)

av_frame_free(&decoded_frames[i]);

return hr;

}

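The decode_av_frame function

At last, the actual decoding function, although it too is just the final wrapper around FFmpeg's own calls.

Note: the legacy avcodec_decode_audio4 is no longer used here. The code uses avcodec_send_packet and avcodec_receive_frame instead; see the official documentation for details.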

int decode_av_frame(

AVFormatContext *in_fmt_ctx,

AVCodecContext *dec_ctx,

int stream_index, // -1 means any stream

std::vector<AVFrame*>& frames,

int *finished)

{

RETURN_IF_NULL(in_fmt_ctx);

RETURN_IF_NULL(dec_ctx);

RETURN_IF_NULL(finished);

*finished = 0;

AVFrame *frame = NULL;

/** Packet used for temporary storage. */

AVPacket in_pkt;

int hr = -1;

init_packet(&in_pkt);

while (true) {

/** Read one frame from the input file into a temporary packet. */

hr = av_read_frame(in_fmt_ctx, &in_pkt);

if (FAILED(hr)) {

/** If we are at the end of the file, flush the decoder below. */

if (hr == AVERROR_EOF)

*finished = 1;

else

GOTO_IF_FAILED(hr); // any other read error: bail out instead of sending an empty packet

}

else if ((stream_index >= 0) && (in_pkt.stream_index != stream_index))

continue;

else

av_packet_rescale_ts(&in_pkt,

in_fmt_ctx->streams[in_pkt.stream_index]->time_base,

dec_ctx->time_base);

hr = avcodec_send_packet(dec_ctx, *finished ? NULL : &in_pkt);

if (SUCCEEDED(hr) || (hr == AVERROR(EAGAIN))) {

while (true) {

/** Initialize temporary storage for one input frame. */

frame = av_frame_alloc();

GOTO_IF_NULL(frame);

hr = avcodec_receive_frame(dec_ctx, frame);

if (SUCCEEDED(hr))

frames.push_back(frame);

else if (hr == AVERROR_EOF) {

*finished = 1;

break;

}

else if (hr == AVERROR(EAGAIN)) // need more packets

break;

else

GOTO_IF_FAILED(hr);

}

}

else if (hr == AVERROR_EOF)

*finished = 1;

else

GOTO_IF_FAILED(hr);

if (*finished || !frames.empty())

break;

}

hr = 0;

RESOURCE_FREE:

// free resources

return hr;

}

The load_encode_and_write function

Yet another wrapper: it reads one frame's worth of samples from the FIFO, encodes them, and writes the result to the file.

int load_encode_and_write(

AVAudioFifo* fifo,

AVFormatContext* out_fmt_ctx,

AVCodecContext* enc_ctx,

audio_base_info* out_aud_info,

bool interleaved,

bool init_pts = true )

{

int hr = -1;

/** Temporary storage of the output samples of the frame written to the file. */

AVFrame *output_frame = NULL;

hr = read_samples_from_fifo(fifo, out_aud_info, &output_frame);

GOTO_IF_FAILED(hr);

/** Encode one frame worth of audio samples. */

int data_written = 0;

hr = encode_av_frame(output_frame, out_fmt_ctx, enc_ctx,

&data_written, interleaved, init_pts);

GOTO_IF_FAILED(hr);

hr = 0;

RESOURCE_FREE:

if (NULL != output_frame)

av_frame_free(&output_frame);

return hr;

}

The read_samples_from_fifo function

As the name suggests, this reads up to one frame's worth of samples from the FIFO.

int read_samples_from_fifo(AVAudioFifo* fifo, audio_base_info* out_aud_info, AVFrame** output_frame)

{

RETURN_IF_NULL(fifo);

RETURN_IF_NULL(out_aud_info);

RETURN_IF_NULL(output_frame);

int hr = -1;

/**

* Use the maximum number of possible samples per frame.

* If there is less than the maximum possible frame size in the FIFO

* buffer use this number. Otherwise, use the maximum possible frame size

*/

int fifo_size = av_audio_fifo_size(fifo);

const int frame_size = FFMIN(fifo_size, out_aud_info->frame_size);

/** Initialize temporary storage for one output frame. */

hr = init_audio_frame(output_frame, out_aud_info);

RETURN_IF_FAILED(hr);

/**

* Read as many samples from the FIFO buffer as required to fill the frame.

* The samples are stored in the frame temporarily.

*/

int samples_read = av_audio_fifo_read(fifo, (void**)((*output_frame)->data), frame_size);

RETURN_IF_FALSE(samples_read == frame_size);

return 0;

}

The encode_av_frame function

And here is the encoding function.

int encode_av_frame(

AVFrame *frame,

AVFormatContext *out_fmt_ctx,

AVCodecContext *enc_ctx,

int* data_written,

bool interleaved,

bool init_pts)

{

// frame can be NULL which means to flush

RETURN_IF_NULL(out_fmt_ctx);

RETURN_IF_NULL(enc_ctx);

RETURN_IF_NULL(data_written);

*data_written = 0;

int hr = -1;

if (NULL != frame && init_pts) {

if (enc_ctx->codec_type == AVMEDIA_TYPE_AUDIO) {

/** Set a timestamp based on the sample rate for the container. */

frame->pts = g_ttl_a_samples;

g_ttl_a_samples += frame->nb_samples;

}

}

int stream_idx = 0;

for (unsigned int i = 0; i < out_fmt_ctx->nb_streams; ++i) {

if (out_fmt_ctx->streams[i]->codecpar->codec_type == enc_ctx->codec_type) {

stream_idx = i;

break;

}

}

std::vector<AVPacket*> packets;

AVPacket* output_packet = NULL;

hr = avcodec_send_frame(enc_ctx, frame);

if (SUCCEEDED(hr) || (hr == AVERROR(EAGAIN))) {

while (true) {

/** Packet used for temporary storage. */

output_packet = new AVPacket();

init_packet(output_packet);

hr = avcodec_receive_packet(enc_ctx, output_packet);

if (SUCCEEDED(hr)) {

output_packet->stream_index = stream_idx;

packets.push_back(output_packet);

}

else if (hr == AVERROR(EAGAIN)) // need more input frames

break;

else if (hr == AVERROR_EOF)

break;

else

GOTO_IF_FAILED(hr);

}

}

else if (hr != AVERROR_EOF)

GOTO_IF_FAILED(hr);

for (size_t i = 0; i < packets.size(); ++i) {

// set pts based on stream time base.

AVRational stream_tb = get_stream_time_base(out_fmt_ctx, enc_ctx->codec_type);

AVPacket* packet = packets[i];

switch (enc_ctx->codec_type) {

case AVMEDIA_TYPE_AUDIO:

av_packet_rescale_ts(packet, enc_ctx->time_base, stream_tb);

break;

}

/** Write one frame from the temporary packet to the output file. */

if (interleaved)

hr = av_interleaved_write_frame(out_fmt_ctx, packet);

else

hr = av_write_frame(out_fmt_ctx, packet);

GOTO_IF_FAILED(hr);

*data_written = 1;

}

hr = 0;

RESOURCE_FREE:

// free resources

return hr;

}
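
encode_av_frame stamps each frame with the running sample count (a timestamp in units of 1/sample_rate) and later rescales the packet timestamps into the stream time base. The sketch below reproduces that arithmetic without FFmpeg. Rational and rescale_ts are simplified stand-ins for AVRational and the rescaling done by av_packet_rescale_ts; they assume non-negative timestamps, positive time bases, and no 64-bit overflow. The target time bases in the usage note are chosen for illustration only.

```cpp
#include <cstdint>

struct Rational { int num; int den; };  // local stand-in for AVRational

// Convert a timestamp from time base `from` to time base `to`, rounding
// to the nearest integer. Simplified: assumes ts >= 0, positive time
// bases, and no 64-bit overflow.
std::int64_t rescale_ts(std::int64_t ts, Rational from, Rational to)
{
    const std::int64_t num = static_cast<std::int64_t>(from.num) * to.den;
    const std::int64_t den = static_cast<std::int64_t>(from.den) * to.num;
    return (ts * num + den / 2) / den;
}

// PTS of frame `index` in the encoder time base 1/sample_rate: the total
// number of samples sent before it, as g_ttl_a_samples tracks above.
std::int64_t frame_pts(std::int64_t index, int samples_per_frame)
{
    return index * samples_per_frame;
}
```

With 1152 samples per frame at 44100 Hz, the fourth frame (index 3) gets a PTS of 3456 in the encoder time base. One second of audio (44100 samples) rescaled to a millisecond time base becomes 1000, and 1152 samples rescaled to a 1/90000 time base become 2351.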

The flush_encoder function

Finally, the cleanup: drain whatever the encoder is still holding.

int flush_encoder(

AVFormatContext* format_ctx,

AVCodecContext* codec_ctx,

bool interleaved,

bool init_pts)

{

if (!(codec_ctx->codec->capabilities & AV_CODEC_CAP_DELAY))

return 0;

int data_written = 0;

/** Flush the encoder as it may have delayed frames. */

do {

int hr = encode_av_frame(NULL, format_ctx, codec_ctx, &data_written, interleaved, init_pts);

RETURN_IF_FAILED(hr);

} while (data_written);

return 0;

}
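
Why the flush is needed can be shown with a toy encoder that holds back one frame, which is the kind of behaviour AV_CODEC_CAP_DELAY signals. The class below is a stand-in for the avcodec_send_frame / avcodec_receive_packet pair, not FFmpeg's API; passing a null frame plays the role of the NULL frame that encode_av_frame sends when flushing. All names are illustrative.

```cpp
#include <deque>
#include <vector>

// Toy encoder with one frame of delay; a stand-in for the
// avcodec_send_frame / avcodec_receive_packet pair, not FFmpeg's API.
class DelayedEncoder {
public:
    // Pass a frame to encode it, or nullptr to signal end of stream.
    void send(const int* frame) {
        if (has_held_) ready_.push_back(held_);  // release the held frame
        has_held_ = (frame != nullptr);
        if (frame != nullptr) held_ = *frame;
    }
    // Fetch the next finished packet; returns false when none is ready.
    bool receive(int* packet) {
        if (ready_.empty()) return false;
        *packet = ready_.front();
        ready_.pop_front();
        return true;
    }
private:
    bool has_held_ = false;
    int held_ = 0;
    std::deque<int> ready_;
};

// Encode all frames; without the final flush the held-back packet is lost.
std::vector<int> encode_all(const std::vector<int>& frames, bool flush)
{
    DelayedEncoder enc;
    std::vector<int> packets;
    int p = 0;
    for (int f : frames) {
        enc.send(&f);
        while (enc.receive(&p)) packets.push_back(p);
    }
    if (flush) {
        enc.send(nullptr);  // end of stream: drain what is left
        while (enc.receive(&p)) packets.push_back(p);
    }
    return packets;
}
```

Encoding frames {1, 2, 3} without the flush produces only packets {1, 2}; with the flush the last packet is recovered and the output is {1, 2, 3}.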

Capturing with other frameworks

Please refer to the corresponding articles.

- Waveform API

- DirectShow

- Media Foundation
