Kaggle Titanic Survival Prediction Competition | Beginner's Guide

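Problem description

The sinking of the Titanic is one of the most infamous shipwrecks in history. On April 15, 1912, during her maiden voyage, the Titanic sank after colliding with an iceberg, killing 1502 of the 2224 passengers and crew. This sensational tragedy shocked the international community and led to better safety regulations for ships.

One of the reasons the shipwreck caused such loss of life was that there were not enough lifeboats for the passengers and crew. Although some luck was involved in surviving the sinking, some groups of people were more likely to survive than others, such as women, children, and the upper class.

In this challenge, you are asked to analyze what sorts of people were likely to survive. In particular, you are asked to apply machine learning tools to predict which passengers survived the disaster.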

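Data source: https://www.kaggle.com/c/titanic/data

Exploring the data

First, let's see what the data looks like.

pandas is a widely used Python data-processing package; it reads a CSV file into a DataFrame. The data comes in two parts, a training set and a test set: the training set has 891 rows and 12 columns, and the test set has 418 rows and 11 columns (no Survived column).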
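As a minimal sketch of reading a CSV into a DataFrame and checking its shape, the snippet below parses a tiny in-memory sample instead of the real file. The three rows and their values are made up for illustration; with the competition data you would pass the path of train.csv to `pd.read_csv` instead.

```python
import io

import pandas as pd

# Hypothetical three-row sample laid out like train.csv (values are made up).
sample_csv = io.StringIO(
    "PassengerId,Survived,Pclass,Name,Sex,Age\n"
    '1,0,3,"Doe, Mr. John",male,22\n'
    '2,1,1,"Roe, Mrs. Jane",female,38\n'
    '3,1,3,"Poe, Miss. Ann",female,\n'
)

df_sample = pd.read_csv(sample_csv)

# shape is (number of rows, number of columns)
n_rows, n_cols = df_sample.shape
print(n_rows, n_cols)
```

The empty last field in the third row is read as NaN, which is how the missing ages in the real data show up as well.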

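The columns have the following meanings:

| Column | Meaning |
|---|---|
| PassengerId | Passenger ID |
| Survived | Survival (0 = no, 1 = yes); training set only |
| Pclass | Ticket class (1, 2, or 3) |
| Name | Name |
| Sex | Sex |
| Age | Age |
| SibSp | Number of siblings/spouses aboard |
| Parch | Number of parents/children aboard |
| Ticket | Ticket number |
| Fare | Fare |
| Cabin | Cabin number |
| Embarked | Port of embarkation (C = Cherbourg, Q = Queenstown, S = Southampton) |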

1.数据初步认识

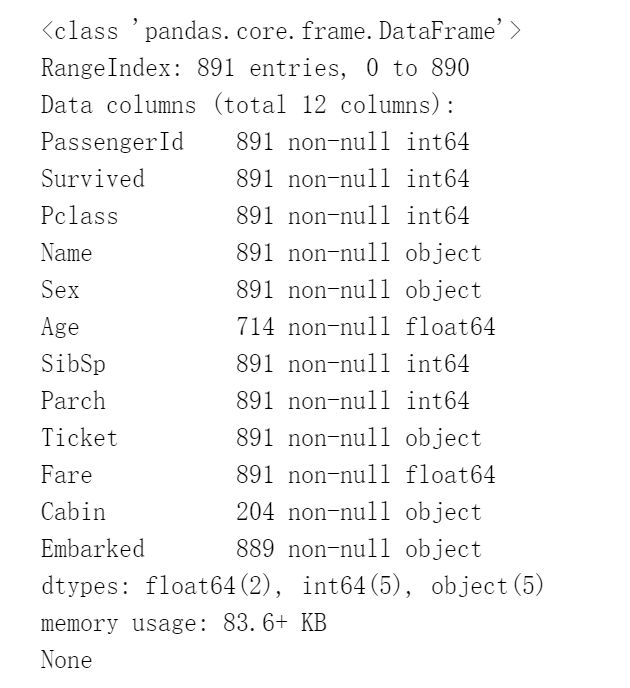

print(df_train.info())

上面的数据告诉我们训练集共有891名乘客,但是他们有些属性不全,比如:

- Age属性只有714名乘客有记录

- Cabin则只有204名乘客有记录
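The missing-value counts can also be computed directly rather than read off the info() output. The sketch below uses a small made-up frame (`df_demo` is a hypothetical name, not part of the original code); the same two lines apply to `df_train`.

```python
import numpy as np
import pandas as pd

# Made-up frame with gaps in Age and Cabin, standing in for df_train.
df_demo = pd.DataFrame({
    "Age": [22.0, np.nan, 26.0, np.nan],
    "Cabin": [np.nan, "C85", np.nan, np.nan],
    "Sex": ["male", "female", "female", "male"],
})

# Count missing values per column, then keep only columns that have any.
missing = df_demo.isnull().sum()
missing = missing[missing > 0]
print(missing)
```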

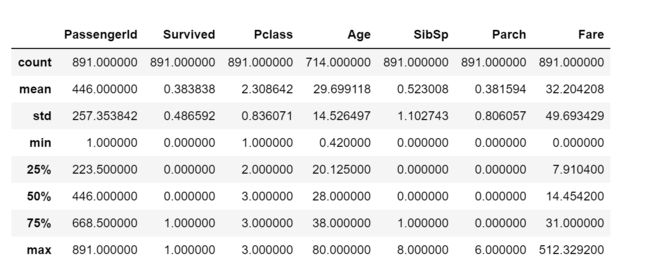

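The describe() method in pandas computes summary statistics for each column. By default it covers only the numeric columns; text attributes such as Name and categorical attributes such as Embarked do not appear in its output.

df_train.describe()

From this output, about 38.4% of the passengers in the training set survived (the mean of Survived), and the average passenger age is 29.7.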
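The survival percentage is simply the mean of the 0/1 Survived column. A minimal sketch, assuming the training set's 342 survivors among 891 rows (the split that matches the mean reported by describe()):

```python
import pandas as pd

# 1 = survived, 0 = did not survive; 342 + 549 = 891 training rows (assumed split).
survived = pd.Series([1] * 342 + [0] * 549)

# The mean of a 0/1 column is the fraction of ones.
survival_rate = survived.mean()
print(round(survival_rate, 4))
```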

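2. Preliminary data analysis

Each passenger has many attributes. How do we know which are more useful, and how should we use them? Understanding the data is essential, so we look at how single attributes and combinations of attributes relate to the final Survived label.

Below we use statistics and plots to examine these relationships, focusing on the following: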

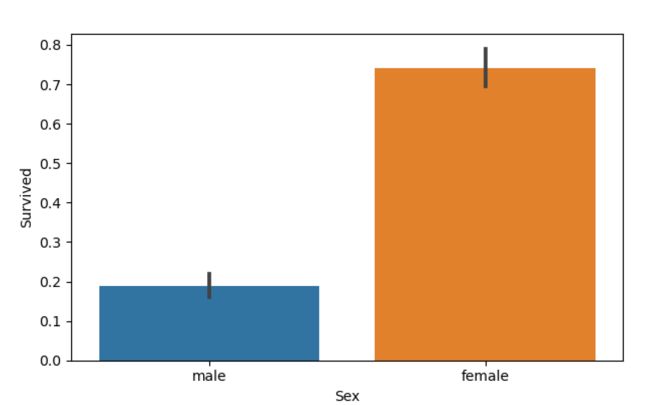

- 1.性别与幸存率的关系

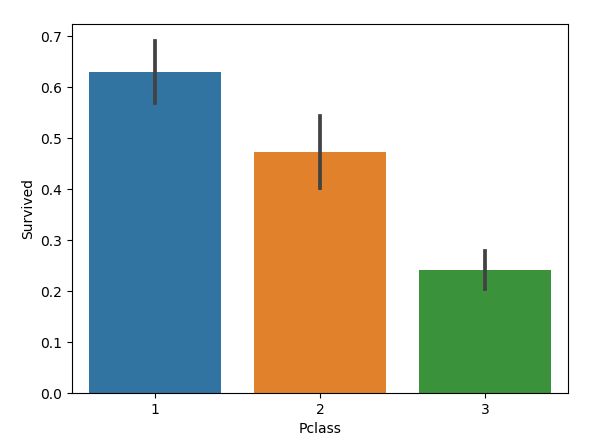

- 2.乘客社会等级与幸存率的关系

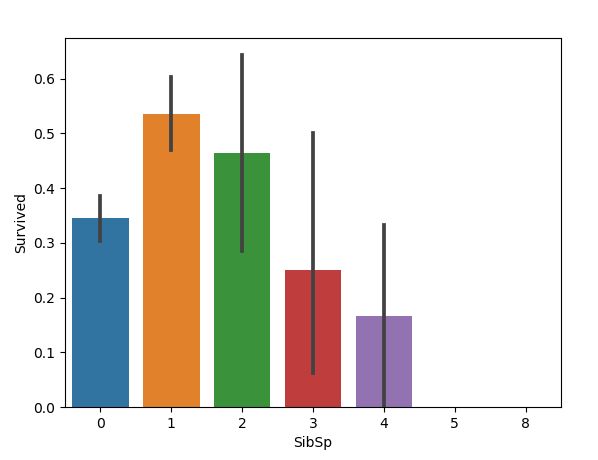

- 3.配偶及兄弟姐妹数与幸存率的关系

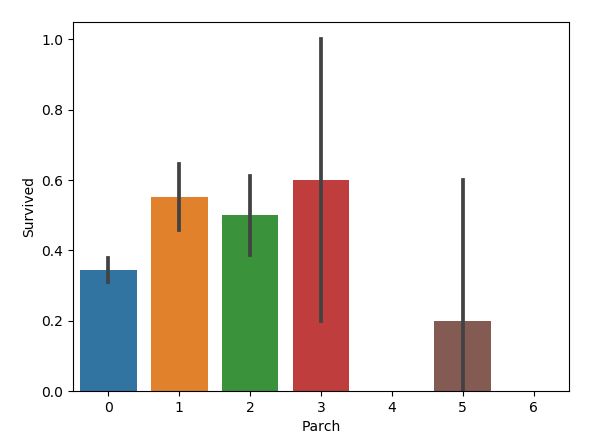

- 4.父母及子女数与幸存率的关系

- 5.年龄与幸存率的关系

- 6.Embarked登港港口与幸存率的关系

- 7.称呼与幸存率的关系

- 8.家庭人数与幸存率的关系

- 9.不同船舱的乘客与幸存率的关系

2.1 Sex and survival rate
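The source shows no code for this subsection. By analogy with the subsections that follow, the quantity of interest is the mean of Survived within each Sex group. The sketch below computes it on a made-up six-row frame (`toy` is a hypothetical name); on the real data, `sns.barplot(x='Sex', y='Survived', data=train)` would draw the same per-group means, since barplot plots the mean by default.

```python
import pandas as pd

# Made-up rows for illustration only; not the real Titanic data.
toy = pd.DataFrame({
    "Sex": ["male", "male", "male", "female", "female", "female"],
    "Survived": [0, 0, 1, 1, 1, 0],
})

# Survival rate per group = mean of the 0/1 Survived column within each group.
rate_by_sex = toy.groupby("Sex")["Survived"].mean()
print(rate_by_sex)
```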

2.乘客社会等级与幸存率的关系

sns.barplot(x='Pclass', y='Survived', data=train)

3.配偶及兄弟姐妹数与幸存率的关系

sns.barplot(x='SibSp', y='Survived', data=train)

sns.barplot(x='Parch', y='Survived', data=train)

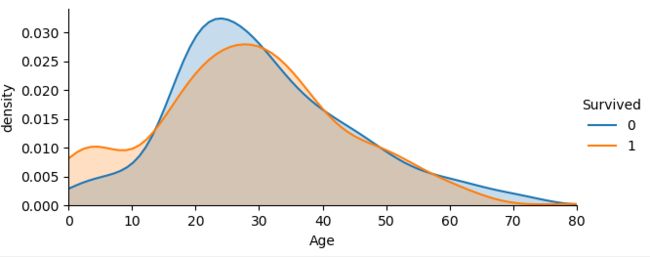

5.年龄与幸存率的关系

facet = sns.FacetGrid(train, hue="Survived",aspect=2)

facet.map(sns.kdeplot, 'Age', fill=True)  # fill replaces the deprecated shade argument (seaborn 0.11+)

facet.set(xlim=(0, train['Age'].max()))

facet.add_legend()

plt.xlabel('Age')

plt.ylabel('density')

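The density plots for survivors and non-survivors differ clearly below about age 15, where the area between the two curves is large. In the other age ranges the difference is small and can be attributed to chance. It is therefore worth separating out the youngest ages as their own group.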
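One way to separate out the youngest ages is a binary indicator feature. This is only a sketch: the column name `IsChild` is hypothetical, and the cutoff of 15 is taken from the reading of the density plot above rather than from any code in the original.

```python
import numpy as np
import pandas as pd

# Made-up ages, including one missing value.
ages = pd.DataFrame({"Age": [4.0, 15.0, 30.0, np.nan, 62.0]})

# 1 if the passenger is younger than 15, else 0.
# A comparison with NaN is False, so missing ages end up as 0 here.
ages["IsChild"] = (ages["Age"] < 15).astype(int)
print(ages)
```

Because missing ages silently fall into the 0 group, it is usually better to fill in Age before deriving a feature like this.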

2.6 Port of embarkation (Embarked) and survival rate

Port of embarkation (Embarked):

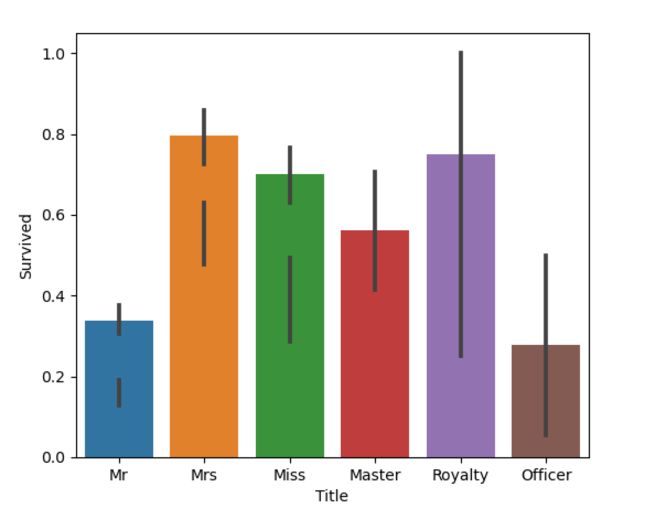

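2.7 Title and survival rate

We define the following title categories:

- Officer: military officers, doctors, and clergy (Capt, Col, Major, Dr, Rev)

- Royalty: nobility and royalty

- Mr: adult man

- Mrs: married woman

- Miss: unmarried woman or girl

- Master: young boy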

all_data = pd.concat([train, test], ignore_index=True)

all_data['Title'] = all_data['Name'].apply(lambda x:x.split(',')[1].split('.')[0].strip())

Title_Dict = {}

Title_Dict.update(dict.fromkeys(['Capt', 'Col', 'Major', 'Dr', 'Rev'], 'Officer'))

Title_Dict.update(dict.fromkeys(['Don', 'Sir', 'the Countess', 'Dona', 'Lady'], 'Royalty'))

Title_Dict.update(dict.fromkeys(['Mme', 'Ms', 'Mrs'], 'Mrs'))

Title_Dict.update(dict.fromkeys(['Mlle', 'Miss'], 'Miss'))

Title_Dict.update(dict.fromkeys(['Mr'], 'Mr'))

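# Jonkheer is a Dutch honorific of nobility; the complete listing below maps it to 'Royalty' instead
Title_Dict.update(dict.fromkeys(['Master','Jonkheer'], 'Master'))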

all_data['Title'] = all_data['Title'].map(Title_Dict)

sns.barplot(x="Title", y="Survived", data=all_data)

plt.show()

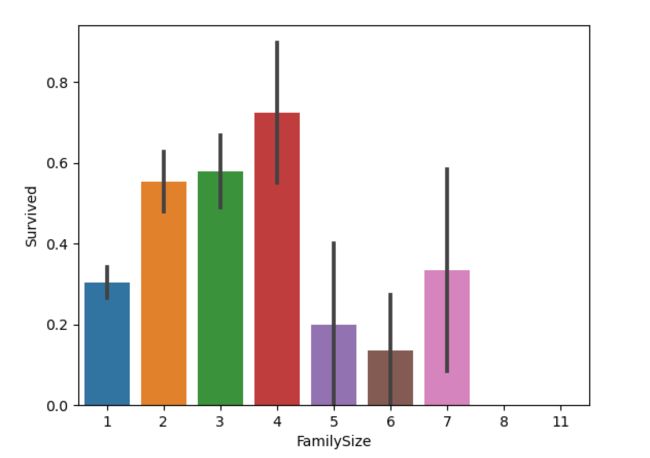
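To see what the title extraction does on a single name, here is a minimal sketch in plain Python. The helper name `extract_title` and the shortened mapping are illustrative; the two names follow the "Surname, Title. Given names" format of the Name column.

```python
def extract_title(name):
    """Return the text between the first comma and the following period."""
    return name.split(',')[1].split('.')[0].strip()


# Shortened, illustrative version of the title mapping.
title_groups = {"Mr": "Mr", "Mrs": "Mrs", "Miss": "Miss", "the Countess": "Royalty"}

names = [
    "Braund, Mr. Owen Harris",
    "Rothes, the Countess. of (Lucy Noel Martha Dyer-Edwards)",
]

titles = [extract_title(n) for n in names]
groups = [title_groups.get(t) for t in titles]
print(titles)
print(groups)
```

A title that is missing from the mapping yields None here, and NaN when `Series.map` is used with a dict, so it is worth checking that every extracted title is covered.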

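2.8 Family size and survival rate

Here we add a FamilySize feature, equal to parents/children + siblings/spouses + 1, that is, FamilySize = Parch + SibSp + 1. FamilySize can then be grouped into three classes (FamilyLabel); the code here only builds and plots FamilySize: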

all_data['FamilySize']=all_data['SibSp']+all_data['Parch']+1

sns.barplot(x="FamilySize", y="Survived", data=all_data)
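The grouping of FamilySize into three classes is not shown in the source. A minimal sketch with `pd.cut` follows; the bin edges (1, 2 to 4, 5 and above) and the label names are assumptions for illustration, not the author's values, and would normally be chosen from the bar plot.

```python
import numpy as np
import pandas as pd

# Made-up family sizes for illustration.
sizes = pd.Series([1, 2, 4, 5, 11], name="FamilySize")

# Assumed bins: (0, 1] -> alone, (1, 4] -> small, (4, inf) -> large.
family_label = pd.cut(
    sizes,
    bins=[0, 1, 4, np.inf],
    labels=["alone", "small", "large"],
)
print(family_label.tolist())
```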

Data exploration

import warnings

warnings.filterwarnings(action="ignore")

import matplotlib.pyplot as plt

import seaborn as sns

plt.figure(figsize=[12, 10])

plt.subplot(3,3,1)

# seaborn 0.12+ requires x and y to be passed as keyword arguments
sns.barplot(x='Pclass', y='Survived', data=train)
plt.subplot(3,3,2)
sns.barplot(x='SibSp', y='Survived', data=train)
plt.subplot(3,3,3)
sns.barplot(x='Parch', y='Survived', data=train)
plt.subplot(3,3,4)
sns.barplot(x='Sex', y='Survived', data=train)
plt.subplot(3,3,5)
sns.barplot(x='Ticket', y='Survived', data=train)
plt.subplot(3,3,6)
sns.barplot(x='Cabin', y='Survived', data=train)
plt.subplot(3,3,7)
sns.barplot(x='Embarked', y='Survived', data=train)
plt.subplot(3,3,8)
# histplot replaces the deprecated distplot; it draws a plain histogram by default
sns.histplot(train[train.Survived==1].Age, color='green')
sns.histplot(train[train.Survived==0].Age, color='orange')
plt.subplot(3,3,9)
sns.histplot(train[train.Survived==1].Fare, color='green')
sns.histplot(train[train.Survived==0].Fare, color='orange')

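Data preparation

# Drop the columns the model will not use
train.drop(['PassengerId','Name','Ticket','SibSp','Parch','Cabin'],axis=1,inplace=True)
test.drop(['PassengerId','Name','Ticket','SibSp','Parch','Cabin'],axis=1,inplace=True)
titanic=pd.concat([train, test], sort=False)
# get_dummies applies one-hot encoding: each categorical column becomes one indicator column per value,
# e.g. the single Sex feature becomes two features, Sex_female and Sex_male
titanic=pd.get_dummies(titanic)
train=titanic[:len_train].copy()  # the first len_train rows are the training set; copy avoids SettingWithCopyWarning
test=titanic[len_train:].copy()  # the remaining rows are the test set
# Convert the target to int (concatenating with the unlabeled test rows made it float)
train['Survived']=train['Survived'].astype('int')
train.Survived.dtype
xtrain=train.drop("Survived",axis=1)
ytrain=train['Survived']
xtest=test.drop("Survived", axis=1)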
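To make the effect of one-hot encoding concrete, the sketch below runs `pd.get_dummies` on a made-up two-row frame (`demo` is a hypothetical name). Numeric columns pass through unchanged, while each text column is replaced by one indicator column per distinct value.

```python
import pandas as pd

# Made-up rows for illustration only.
demo = pd.DataFrame({
    "Sex": ["male", "female"],
    "Embarked": ["S", "C"],
    "Fare": [7.25, 71.28],
})

encoded = pd.get_dummies(demo)
print(sorted(encoded.columns))
```

Encoding the training and test sets together, as the code above does with `titanic`, keeps the two sets on the same indicator columns even when a category appears in only one of them.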

Model building and training

Random forest

RF=RandomForestClassifier(random_state=1)

PRF=[{'n_estimators':[10,100],'max_depth':[3,6],'criterion':['gini','entropy']}]

GSRF=GridSearchCV(estimator=RF, param_grid=PRF, scoring='accuracy',cv=2)

scores_rf=cross_val_score(GSRF,xtrain,ytrain,scoring='accuracy',cv=5)

np.mean(scores_rf)
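Passing a GridSearchCV object to cross_val_score gives nested cross-validation: the inner search picks hyperparameters on each outer training fold, and the outer folds estimate how well the tuned model generalizes. The sketch below shows the same pattern on synthetic data from `make_classification`, with a smaller grid chosen only to keep it fast; it is an illustration, not a reproduction of the Titanic result.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, cross_val_score

# Synthetic stand-in for xtrain / ytrain.
X, y = make_classification(n_samples=200, n_features=8, random_state=1)

# Inner loop: 2-fold grid search over a small, illustrative grid.
inner_search = GridSearchCV(
    estimator=RandomForestClassifier(random_state=1),
    param_grid={"n_estimators": [10, 50], "max_depth": [3, 6]},
    scoring="accuracy",
    cv=2,
)

# Outer loop: 5-fold CV; the search is re-run inside every outer fold.
outer_scores = cross_val_score(inner_search, X, y, scoring="accuracy", cv=5)
print(outer_scores.mean())
```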

SVC

svc=make_pipeline(StandardScaler(),SVC(random_state=1))

r=[0.0001,0.001,0.1,1,10,50,100]

PSVM=[{'svc__C':r, 'svc__kernel':['linear']},

{'svc__C':r, 'svc__gamma':r, 'svc__kernel':['rbf']}]

GSSVM=GridSearchCV(estimator=svc, param_grid=PSVM, scoring='accuracy', cv=2)

scores_svm=cross_val_score(GSSVM, xtrain.astype(float), ytrain,scoring='accuracy', cv=5)

np.mean(scores_svm)
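The `svc__` prefix in the parameter grid comes from how make_pipeline names its steps: each step is named after its class in lowercase, and a step's parameters are addressed as `<step name>__<parameter>`. A short sketch to confirm the names:

```python
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

pipe = make_pipeline(StandardScaler(), SVC(random_state=1))

# Step names are generated automatically from the class names.
step_names = [name for name, _ in pipe.steps]
print(step_names)

# Parameters of a step are exposed as "<step name>__<parameter>".
params = pipe.get_params()
print("svc__C" in params, "svc__gamma" in params, "svc__kernel" in params)
```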

Complete implementation

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import numpy as np

from sklearn.model_selection import cross_val_score

from sklearn.ensemble import RandomForestClassifier

from sklearn.svm import SVC

from sklearn.preprocessing import StandardScaler

from sklearn.pipeline import make_pipeline

from sklearn.model_selection import GridSearchCV

import warnings

warnings.filterwarnings(action="ignore")

train=pd.read_csv("input/train.csv")

test=pd.read_csv("input/test.csv")

test2=pd.read_csv("input/test.csv")

titanic=pd.concat([train, test], sort=False)

len_train=train.shape[0]  # number of rows in the training set
print(titanic)
print(titanic.dtypes.sort_values())  # column dtypes, sorted
titanic.select_dtypes(include='int').tail()
titanic.select_dtypes(include='object').head()  # inspect the columns of one dtype
titanic.select_dtypes(include= 'float').head()
# Show only the columns that have missing values; without the > 0 filter,
# complete columns would also be listed with a count of 0
print(titanic.isnull().sum()[titanic.isnull().sum()>0])
train.Fare=train.Fare.fillna(train.Fare.mean())  # fill missing Fare with the training-set mean
test.Fare=test.Fare.fillna(train.Fare.mean())
train.Cabin=train.Cabin.fillna("unknown")  # fill missing Cabin with a placeholder
test.Cabin=test.Cabin.fillna("unknown")
train.Embarked=train.Embarked.fillna(train.Embarked.mode()[0])  # mode() gives the most frequent value
test.Embarked=test.Embarked.fillna(train.Embarked.mode()[0])
train['title']=train.Name.apply(lambda x: x.split('.')[0].split(',')[1].strip())
test['title']=test.Name.apply(lambda x: x.split('.')[0].split(',')[1].strip())
# To fill in missing ages, first extract the title from each name to infer the passenger's rough status,
# then fill each missing age with the mean age of the passengers who share that title.

newtitles={

"Capt": "Officer",

"Col": "Officer",

"Major": "Officer",

"Jonkheer": "Royalty",

"Don": "Royalty",

"Sir" : "Royalty",

"Dr": "Officer",

"Rev": "Officer",

"the Countess":"Royalty",

"Dona": "Royalty",

"Mme": "Mrs",

"Mlle": "Miss",

"Ms": "Mrs",

"Mr" : "Mr",

"Mrs" : "Mrs",

"Miss" : "Miss",

"Master" : "Master",

"Lady" : "Royalty"}

train['title']=train.title.map(newtitles)

test['title']=test.title.map(newtitles)

train.groupby(['title','Sex']).Age.mean()

def newage(cols):
    # Fill a missing age with a fixed mean age for the passenger's (title, Sex) group;
    # the constants presumably come from the groupby mean computed above
    title=cols['title']
    Sex=cols['Sex']
    Age=cols['Age']
    if pd.isnull(Age):
        if title=='Master' and Sex=="male":
            return 4.57
        elif title=='Miss' and Sex=='female':
            return 21.8
        elif title=='Mr' and Sex=='male':
            return 32.37
        elif title=='Mrs' and Sex=='female':
            return 35.72
        elif title=='Officer' and Sex=='female':
            return 49
        elif title=='Officer' and Sex=='male':
            return 46.56
        elif title=='Royalty' and Sex=='female':
            return 40.50
        else:
            return 42.33
    else:
        return Age

train.Age = train[['title', 'Sex', 'Age']].apply(newage, axis=1)
test.Age = test[['title', 'Sex', 'Age']].apply(newage, axis=1)
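As an alternative to hard-coding the group means, the same idea can be sketched with groupby and transform, which computes each group's mean and aligns it back to the rows. This is a swapped-in technique shown on a made-up frame (`people` is a hypothetical name), not the code the listing uses.

```python
import numpy as np
import pandas as pd

# Made-up rows for illustration only.
people = pd.DataFrame({
    "title": ["Mr", "Mr", "Mr", "Miss", "Miss", "Miss"],
    "Sex": ["male", "male", "male", "female", "female", "female"],
    "Age": [30.0, 34.0, np.nan, 20.0, np.nan, 24.0],
})

# Mean age of each (title, Sex) group, aligned to the original rows;
# missing ages are ignored when the mean is computed.
group_mean = people.groupby(["title", "Sex"])["Age"].transform("mean")

# Only the missing ages are replaced.
people["Age"] = people["Age"].fillna(group_mean)
print(people)
```

For the test set, the means would still need to come from the training data, for example by merging in the training-set group means, so that no test information leaks into the features.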

## Data preparation
# Drop the features the model will not use
train.drop(['PassengerId','Name','Ticket','SibSp','Parch','Cabin'],axis=1,inplace=True)
test.drop(['PassengerId','Name','Ticket','SibSp','Parch','Cabin'],axis=1,inplace=True)
titanic=pd.concat([train, test], sort=False)
# get_dummies applies one-hot encoding: each categorical column becomes one indicator column per value,
# e.g. the single Sex feature becomes two features, Sex_female and Sex_male
titanic=pd.get_dummies(titanic)
train=titanic[:len_train].copy()  # the first len_train rows are the training set; copy avoids SettingWithCopyWarning
test=titanic[len_train:].copy()  # the remaining rows are the test set
# Convert the target to int (concatenating with the unlabeled test rows made it float)
train['Survived']=train['Survived'].astype('int')
train.Survived.dtype
xtrain=train.drop("Survived",axis=1)
ytrain=train['Survived']
xtest=test.drop("Survived", axis=1)

RF=RandomForestClassifier(random_state=1)

PRF=[{'n_estimators':[10,100],'max_depth':[3,6],'criterion':['gini','entropy']}]

GSRF=GridSearchCV(estimator=RF, param_grid=PRF, scoring='accuracy',cv=2)

scores_rf=cross_val_score(GSRF,xtrain,ytrain,scoring='accuracy',cv=5)

np.mean(scores_rf)

svc=make_pipeline(StandardScaler(),SVC(random_state=1))

r=[0.0001,0.001,0.1,1,10,50,100]

PSVM=[{'svc__C':r, 'svc__kernel':['linear']},

{'svc__C':r, 'svc__gamma':r, 'svc__kernel':['rbf']}]

GSSVM=GridSearchCV(estimator=svc, param_grid=PSVM, scoring='accuracy', cv=2)

scores_svm=cross_val_score(GSSVM, xtrain.astype(float), ytrain,scoring='accuracy', cv=5)

np.mean(scores_svm)

model=GSSVM.fit(xtrain, ytrain)

pred=model.predict(xtest)

output=pd.DataFrame({'PassengerId':test2['PassengerId'],'Survived':pred})

output.to_csv('submission.csv', index=False)