Vue 3 + video + canvas face recognition

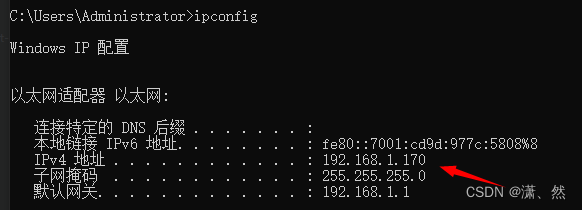

Note: during debugging the camera can only be opened on a local address (localhost); a deployed site must be served over HTTPS.
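Browsers expose `navigator.mediaDevices.getUserMedia` only in a secure context, which is why localhost works while a plain HTTP deployment does not. Below is a minimal sketch of a guard that could run before opening the camera. The helper name `canOpenCamera` is an assumption made for illustration, and it takes the window object as a parameter so it can also be exercised outside a browser.

```javascript
// Hypothetical helper (not part of the original example).
// Returns true only when the page is a secure context (HTTPS or localhost)
// and the browser exposes getUserMedia.
function canOpenCamera(win) {
  const mediaDevices = win?.navigator?.mediaDevices;
  return Boolean(
    win?.isSecureContext && typeof mediaDevices?.getUserMedia === "function"
  );
}

// Possible use in a component:
// if (!canOpenCamera(window)) { /* show a hint instead of the video */ }
```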

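This example takes the photo when the user clicks manually.

With a small change to the commented-out code, it can also capture the face in real time.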
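One possible way to move from manual to real-time capture is to react to the tracking.js `track` event, whose `event.data` holds the detected rectangles (the same `x`, `y`, `width`, `height` fields used in the commented-out code of the full example). The sketch below is only an illustration: the helper name `pickLargestFace` and the `minSize` threshold are assumptions, not part of the original code.

```javascript
// Hypothetical helper: choose the largest detected rectangle that is at least
// minSize pixels wide and tall, or return null when no face is big enough.
function pickLargestFace(rects, minSize = 80) {
  let best = null;
  for (const rect of rects) {
    if (rect.width < minSize || rect.height < minSize) continue;
    if (best === null || rect.width * rect.height > best.width * best.height) {
      best = rect;
    }
  }
  return best;
}

// Possible wiring inside init(), assuming the tracker, src and
// screenshotAndUpload from the full example:
// tracker.on("track", (event) => {
//   if (!src.value && pickLargestFace(event.data)) screenshotAndUpload();
// });
```

Filtering by size is a cheap way to ignore small false positives and faces that are too far from the camera.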

Example repository on Gitee

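Making the video circular

Set this property so the video fills its box without distortion (the circle itself comes from equal width and height plus border-radius: 50%, as in the full example's styles):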

object-fit: cover;

The face moves in the opposite direction horizontally

Use transform: scaleX(-1) to mirror the picture

video,img{

transform: scaleX(-1);

}
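A CSS transform only changes how the element is displayed; the pixels that `drawImage` copies into a canvas are still unmirrored. If the saved image should match the mirrored preview, one option is to flip the canvas context before drawing. This is a sketch under that assumption, and `drawMirrored` is a hypothetical helper name.

```javascript
// Hypothetical helper: draw `source` horizontally flipped onto the canvas
// that owns `ctx`. The coordinates are given in normal (unflipped) space.
function drawMirrored(ctx, source, x, y, width, height) {
  ctx.save();
  // Move the origin to the right edge, then flip the x axis.
  ctx.translate(ctx.canvas.width, 0);
  ctx.scale(-1, 1);
  ctx.drawImage(source, x, y, width, height);
  ctx.restore();
}

// Possible use with the example's canvas:
// drawMirrored(ctx, video.value, -50, 0, 400, 300);
```

When the image is flipped in the canvas like this, the `scaleX(-1)` rule should apply to the video only, otherwise the displayed result is flipped twice.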

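The captured image is stretched

The code below assumes a 320×240 (4:3) camera frame while the canvas is square, so the frame is drawn at 4:3 width and shifted left to keep it centered: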

ctx?.drawImage(

video.value,

-50,

0,

screenshotCanvas.value.width * (320 / 240),

screenshotCanvas.value.height

);

// Where the -50 comes from (canvas width 300): (300 - 400) / 2 = -50

(screenshotCanvas.value.width - screenshotCanvas.value.width * (320 / 240)) / 2

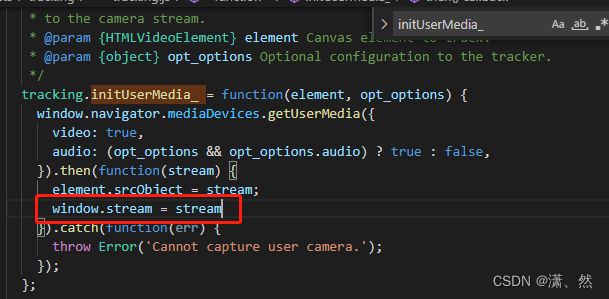
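The offset calculation above can be generalized so that the 320/240 ratio is not hard-coded. The following is a minimal sketch, assuming the real frame size is read from the video element's `videoWidth` and `videoHeight`; `coverRect` is a hypothetical helper name that mimics `object-fit: cover` for `drawImage`.

```javascript
// Hypothetical helper: compute where to draw a source of srcWidth x srcHeight
// so that it covers a dstWidth x dstHeight canvas, centered, without stretching.
function coverRect(srcWidth, srcHeight, dstWidth, dstHeight) {
  // Scale by the larger ratio so both canvas dimensions are fully covered.
  const scale = Math.max(dstWidth / srcWidth, dstHeight / srcHeight);
  const width = srcWidth * scale;
  const height = srcHeight * scale;
  return {
    x: (dstWidth - width) / 2,
    y: (dstHeight - height) / 2,
    width,
    height,
  };
}

// For a 320x240 frame on a 300x300 canvas this gives x = -50, width = 400.
// Possible use:
// const r = coverRect(video.value.videoWidth, video.value.videoHeight, 300, 300);
// ctx.drawImage(video.value, r.x, r.y, r.width, r.height);
```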

Turning off the camera

Keep a reference to the stream when the camera is opened:

window.stream = stream

Stop every track when the component is unmounted:

onUnmounted(() => {

window.mediaStreamTrack?.stop();

window.stream?.getTracks()?.forEach((track) => track?.stop());

});
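The cleanup above can also be wrapped in a small function that does not depend on a global. This is a sketch only: `stopStream` is a hypothetical helper name, and it relies solely on the standard `MediaStream.getTracks()` and `MediaStreamTrack.stop()` methods.

```javascript
// Hypothetical helper: stop all tracks of a MediaStream and report how many
// were stopped. Safe to call with null or undefined.
function stopStream(stream) {
  if (!stream) return 0;
  const tracks = stream.getTracks();
  tracks.forEach((track) => track.stop());
  return tracks.length;
}

// Possible use in a component, assuming the stream was saved when opened:
// onUnmounted(() => stopStream(window.stream));
```

Once every track is stopped, the browser releases the camera and its indicator light turns off.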

Converting base64 to a File

// Convert a base64 data URL to a Blob

export const dataURLtoBlob = (dataurl) => {

var arr = dataurl.split(","),

mime = arr[0].match(/:(.*?);/)[1],

bstr = atob(arr[1]),

n = bstr.length,

u8arr = new Uint8Array(n);

while (n--) {

u8arr[n] = bstr.charCodeAt(n);

}

return new Blob([u8arr], { type: mime });

};

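// Convert a Blob to a File

export const blobToFile = (theBlob, fileName) => {

// Assigning name to a Blob does not make it a File, so wrap it in a real File

return new File([theBlob], fileName, {

type: theBlob.type,

lastModified: Date.now(),

});

};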

const blob = dataURLtoBlob(base64Img);

const file = blobToFile(blob, "face");
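Once the image is a Blob or File, it is typically sent as multipart form data. The sketch below shows one way this might look. The field name `file`, the default file name and the URL `/api/face/verify` are all placeholders assumed for illustration; they do not come from the original project.

```javascript
// Hypothetical helper: wrap the captured image in multipart form data.
// The field name "file" is an assumption; use whatever the backend expects.
function buildFaceForm(blob, fileName = "face.jpg") {
  const form = new FormData();
  // The third argument sets the file name that the server sees.
  form.append("file", blob, fileName);
  return form;
}

// Hypothetical upload; the URL is a placeholder, not a real endpoint.
async function uploadFace(blob, url = "/api/face/verify") {
  const response = await fetch(url, {
    method: "POST",
    body: buildFaceForm(blob),
  });
  if (!response.ok) {
    throw new Error(`Upload failed with status ${response.status}`);
  }
  return response;
}
```

Leaving the `Content-Type` header unset lets the browser add the multipart boundary itself.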

iVCam: using a phone as the computer's camera

The example code is as follows

Gitee repository

<template>

<div>

<div class="video-box">

<img :src="src" v-show="src" />

<video

v-show="!src"

ref="video"

id="video"

width="300"

height="300"

preload

autoplay

loop

muted

></video>

<canvas ref="canvas" id="canvas" width="300" height="300"></canvas>

</div>

<canvas

ref="screenshotCanvas"

id="screenshotCanvas"

width="300"

height="300"

></canvas>

</div>

<div @click="screenshotAndUpload">Capture</div>

</template>

<script setup>

import { ref, reactive, onMounted } from "vue";

import * as tracking from "./assets/tracking/tracking-min.js";

import "./assets/tracking/face-min.js";

const video = ref(null);

const screenshotCanvas = ref(null);

const src = ref("");

onMounted(() => {

init();

});

// Initial setup

function init() {

// Standard tracking.js setup

let tracker = new window.tracking.ObjectTracker("face");

tracker.setInitialScale(4);

tracker.setStepSize(2);

tracker.setEdgesDensity(0.1);

window.tracking.track("#video", tracker, {

camera: true,

});

// When a face is detected, draw its position (not needed in this example)

// tracker.on("track", function(event) {

// context.clearRect(0, 0, canvas.width, canvas.height);

// event.data.forEach(function(rect) {

// context.strokeStyle = "#0764B7";

// context.strokeRect(rect.x, rect.y, rect.width, rect.height);

// });

// });

}

// Capture and upload the image

function screenshotAndUpload() {

// Draw the current frame and convert it to base64

let ctx = screenshotCanvas.value?.getContext("2d");

ctx?.clearRect(

0,

0,

screenshotCanvas.value.width,

screenshotCanvas.value.height

);

ctx?.drawImage(

video.value,

-50,

0,

screenshotCanvas.value.width * (320 / 240),

screenshotCanvas.value.height

);

const base64Img = screenshotCanvas.value?.toDataURL("image/jpeg");

src.value = `${base64Img}`;

// TODO: send base64Img to the API

// setTimeout(() => {

// // On failure, show the video again

// src.value = "";

// }, 2000);

}

</script>

<style scoped>

/* The screenshot canvas does not need to be shown, so hide it */

#screenshotCanvas {

display: none;

}

.video-box {

position: relative;

width: 300px;

height: 300px;

}

video,

img {

width: 300px;

height: 300px;

border-radius: 50%;

object-fit: cover;

border: #000000 5px solid;

box-sizing: border-box;

transform: scaleX(-1);

}

video,

canvas {

position: absolute;

top: 0;

left: 0;

}

</style>

Native example (without tracking.js)

<template>

<button @click="openMedia">Open</button>

<button @click="closeMedia">Close</button>

<button @click="drawMedia">Capture</button>

<!-- The camera feed must be shown in a video tag -->

<video

ref="video"

preload

autoplay

loop

muted

></video>

<!-- The captured photo is shown in a canvas tag -->

<canvas ref="canvas" width="300" height="300"></canvas>

</template>

<script setup>

import { ref, onMounted } from "vue";

const video = ref(null);

const canvas = ref(null);

const imgSrc = ref("");

onMounted(() => {

openMedia();

console.info('video',video.value);

console.log('video',video.value.width);

console.log('video',video.value.height);

});

function openMedia() {

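// Older browsers (and insecure pages) may not expose mediaDevices at all, so bail out early

if (!navigator.mediaDevices?.getUserMedia) {

console.log("getUserMedia is not available in this browser or context");

return;

}

// Standard API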

navigator.mediaDevices

.getUserMedia({

video: true,

})

.then((stream) => {

// Camera opened successfully

video.value.srcObject = stream;

video.value?.play();

})

.catch((err) => {

console.log("err", err);

});

}

function closeMedia() {

video.value?.srcObject?.getTracks().forEach((track) => track.stop());

}

function drawMedia() {

console.log(canvas.value.width, canvas.value.width * (320 / 240));

const ctx = canvas.value?.getContext("2d");

ctx?.clearRect(0, 0, canvas.value.width, canvas.value.height);

ctx.drawImage(

video.value,

(canvas.value.width - canvas.value.width * (320 / 240)) / 2,

0,

canvas.value.width * (320 / 240),

canvas.value.height

);

// Get the image as a base64 data URL

const image = canvas.value.toDataURL("image/png");

imgSrc.value = image;

}

</script>

<style scoped>

video {

object-fit: cover;

transform: scaleX(-1);

}

canvas {

transform: scaleX(-1);

}

</style>
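The `catch` branch in `openMedia` only logs the error. A possible refinement is to map the error's `name` to a readable hint. This is a sketch only: `describeCameraError` is a hypothetical helper, the message texts are made up, and only the standard error names that `getUserMedia` can reject with are relied on.

```javascript
// Hypothetical helper: turn a getUserMedia rejection into a short message.
function describeCameraError(err) {
  switch (err?.name) {
    case "NotAllowedError":
      return "Camera permission was denied.";
    case "NotFoundError":
      return "No camera was found on this device.";
    case "NotReadableError":
      return "The camera is already in use or cannot be read.";
    case "OverconstrainedError":
      return "No camera satisfies the requested constraints.";
    case "SecurityError":
      return "Camera access is blocked in this context.";
    default:
      return "The camera could not be opened.";
  }
}

// Possible use:
// .catch((err) => console.log(describeCameraError(err), err));
```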