nn-UNet使用记录--开箱即用

nnunet项目官方地址

MIC-DKFZ/nnUNet (github.com)

使用nnunet之前,建议先阅读两篇论文

nnU-Net: Self-adapting Framework for U-Net-Based Medical Image Segmentation

nnU-Net: a self-configuring method for deep learning-based biomedical image segmentation

本文使用vscode-remote ssh远程配置nnunet包,然后使用KiTS19肾脏肿瘤分割数据集进行实现。

1.服务器环境

import torch

print(torch.__version__) # torch版本查询

print(torch.version.cuda) # cuda版本查询

print(torch.backends.cudnn.version()) # cudnn版本查询

print(torch.cuda.get_device_name(0)) #设备名

1.8.0 11.1 8805

NVIDIA A100-SXM4-40GB

2.nnunet安装

一步到位

pip install nnunet

3.数据准备

以KiTS19为例

原始数据集下载方法:

- github官网下载 – neheller/kits19: The official repository of the 2019 Kidney and Kidney Tumor Segmentation Challenge (github.com)

- 百度飞桨的公共数据集 – Kits19肾脏肿瘤分割 - 飞桨AI Studio (baidu.com)

找数据集的时候我校验过,百度飞桨和github上的数据集是一样的。

github官网下载比较慢,可使用wget命令直接从百度飞桨的数据集地址下载,网速非常快。

原始数据如下图所示,使用nnunet要求结构化的数据集,使用前进行一个简单处理

root@worker04:~/data# tree data/KiTS19/origin

data/KiTS19/origin

|-- case_00000

| |-- imaging.nii.gz

| `-- segmentation.nii.gz

|-- case_00001

| |-- imaging.nii.gz

| `-- segmentation.nii.gz

|-- case_00002

| |-- imaging.nii.gz

| `-- segmentation.nii.gz

|-- case_00003

| |-- imaging.nii.gz

| `-- segmentation.nii.gz

......

下面是我根据nnunet中的dataset_conversion/Task040_KiTS.py修改的代码

import os

import json

import shutil

def save_json(obj, file, indent=4, sort_keys=True):

with open(file, 'w') as f:

json.dump(obj, f, sort_keys=sort_keys, indent=indent)

def maybe_mkdir_p(directory):

directory = os.path.abspath(directory)

splits = directory.split("/")[1:]

for i in range(0, len(splits)):

if not os.path.isdir(os.path.join("/", *splits[:i+1])):

try:

os.mkdir(os.path.join("/", *splits[:i+1]))

except FileExistsError:

# this can sometimes happen when two jobs try to create the same directory at the same time,

# especially on network drives.

print("WARNING: Folder %s already existed and does not need to be created" % directory)

def subdirs(folder, join=True, prefix=None, suffix=None, sort=True):

if join:

l = os.path.join

else:

l = lambda x, y: y

res = [l(folder, i) for i in os.listdir(folder) if os.path.isdir(os.path.join(folder, i))

and (prefix is None or i.startswith(prefix))

and (suffix is None or i.endswith(suffix))]

if sort:

res.sort()

return res

base = "../../../data/KiTS19/origin" # 原始文件路径

out = "../../../nnUNet_raw_data_base/nnUNet_raw_data/Task040_KiTS" # 结构化数据集目录

cases = subdirs(base, join=False)

maybe_mkdir_p(out)

maybe_mkdir_p(os.path.join(out, "imagesTr"))

maybe_mkdir_p(os.path.join(out, "imagesTs"))

maybe_mkdir_p(os.path.join(out, "labelsTr"))

for c in cases:

case_id = int(c.split("_")[-1])

if case_id < 210:

shutil.copy(os.path.join(base, c, "imaging.nii.gz"), os.path.join(out, "imagesTr", c + "_0000.nii.gz"))

shutil.copy(os.path.join(base, c, "segmentation.nii.gz"), os.path.join(out, "labelsTr", c + ".nii.gz"))

else:

shutil.copy(os.path.join(base, c, "imaging.nii.gz"), os.path.join(out, "imagesTs", c + "_0000.nii.gz"))

json_dict = {}

json_dict['name'] = "KiTS"

json_dict['description'] = "kidney and kidney tumor segmentation"

json_dict['tensorImageSize'] = "4D"

json_dict['reference'] = "KiTS data for nnunet"

json_dict['licence'] = ""

json_dict['release'] = "0.0"

json_dict['modality'] = {

"0": "CT",

}

json_dict['labels'] = {

"0": "background",

"1": "Kidney",

"2": "Tumor"

}

json_dict['numTraining'] = len(cases) # 应该是210例

json_dict['numTest'] = 0

json_dict['training'] = [{'image': "./imagesTr/%s.nii.gz" % i, "label": "./labelsTr/%s.nii.gz" % i} for i in cases]

这里只是对数据集进行一个拷贝和重命名,不对原始数据进行修改。

运行代码后,整理好的数据集结构如下:

nnUNet_raw_data_base/nnUNet_raw_data/Task040_KiTS

├── dataset.json

├── imagesTr

│ ├── case_00000_0000.nii.gz

│ ├── case_00001_0000.nii.gz

│ ├── ...

├── imagesTs

│ ├── case_00210_0000.nii.gz

│ ├── case_00211_0000.nii.gz

│ ├── ...

├── labelsTr

│ ├── case_00000.nii.gz

│ ├── case_00001.nii.gz

│ ├── ...

dataset.json文件保存了训练集图像、训练集标签、测试集图像信息

{

"description": "kidney and kidney tumor segmentation",

"labels": {

"0": "background",

"1": "Kidney",

"2": "Tumor"

},

"licence": "",

"modality": {

"0": "CT"

},

"name": "KiTS",

"numTest": 0,

"numTraining": 210,

"reference": "KiTS data for nnunet",

"release": "0.0",

"tensorImageSize": "4D",

"test": [],

"training": [

{

"image": "./imagesTr/case_00000.nii.gz",

"label": "./labelsTr/case_00000.nii.gz"

},

{

"image": "./imagesTr/case_00001.nii.gz",

"label": "./labelsTr/case_00001.nii.gz"

},

......

]

}

4.添加路径

提前准备三个文件夹,分别存放数据集、预处理数据和训练结果

- nnUNet_raw_data_base

- nnUNet_raw_data

- Task005_Prostate

- Task040_KiTS

- nnUNet_raw_data

- nnUNet_preprocessed

- nnUNet_trained_models

提前准备好数据集,放在nnUNet_raw_data_base/nnUNet_raw_data下面。 三个文件夹的路径一定要记清楚,nnunet的数据都在三个文件夹里面了

5.设置环境变量

以下两种方式都可

1.修改主目录中的 .bashrc 文件,通过文本编辑器或者vim修改

-

vim ~/.bashrc

-

将以下代码添加到 .bashrc 文件的底部

修改成上一步建立的三个文件夹的路径

export nnUNet_raw_data_base="/media/fabian/nnUNet_raw_data_base"

export nnUNet_preprocessed="/media/fabian/nnUNet_preprocessed"

export RESULTS_FOLDER="/media/fabian/nnUNet_trained_models"

2.临时设置环境变量

直接在终端输入上面的三行代码,但每次关闭终端,变量会丢失,需要重新设置

成功设置后,键入echo $RESULTS_FOLDER 等验证路径

![]()

6.数据预处理

nnUNet_plan_and_preprocess -t XXX --verify_dataset_integrity

XXX是任务ID,verify_dataset_integrity是对结构化数据集做一个校验,第一次进行预处理的时候最好还是加上。

nnUNet_preprocessed文件夹下

|-- Task005_Prostate

| |-- dataset.json

| |-- dataset_properties.pkl

| |-- gt_segmentations

| |-- nnUNetData_plans_v2.1_2D_stage0

| |-- nnUNetData_plans_v2.1_stage0

| |-- nnUNetPlansv2.1_plans_2D.pkl

| |-- nnUNetPlansv2.1_plans_3D.pkl

| `-- splits_final.pkl

`-- Task040_KiTS

|-- dataset.json

|-- dataset_properties.pkl

|-- gt_segmentations

|-- nnUNetData_plans_v2.1_2D_stage0

|-- nnUNetData_plans_v2.1_stage0

|-- nnUNetPlansv2.1_plans_2D.pkl

|-- nnUNetPlansv2.1_plans_3D.pkl

`-- splits_final.pkl

这里Task040_KiTS的原始数据在预处理之前,我按照冠军论文里的方法做了一个重采样,因此没有stage1。另外,我丢掉了两个错误病例,15号和37号,这样一共有

210-2=208个病例。我一开始也没有做重采样,直接扔到nnunet里面,结果发现nnunet的自动重采样不是那么靠谱,采样后的尺寸太大了。

这里生成的文件都可以打开来看看,对预处理方法和训练过程有一个了解

-

dataset.json就是第3步数据准备生成的

-

daset_properties为数据的 size, spacing, origin, classes, size_after_cropping 等属性

-

gt_segmentations为标签

-

nnUNetData_plans_v2.1_2D_stage0和nnUNetData_plans_v2.1_stage0是预处理后的数据集

-

splits_final.pkl是五折交叉验证划分的结果,一共208个病人,42为一折

-

nnUNetPlansv2.1_plans_3D.pkl

-

plan_path = "../../../nnUNet_preprocessed/Task040_KiTS/nnUNetPlansv2.1_plans_3D.pkl" with open(plan_path,'rb') as f: plans = pickle.load(f) print(plans['plans_per_stage'][0]){'batch_size': 12, 'num_pool_per_axis': [4, 5, 5], 'patch_size': array([ 80, 160, 160]), 'median_patient_size_in_voxels': array([128, 247, 247]), 'current_spacing': array([3.22000003, 1.62 , 1.62 ]), 'original_spacing': array([3.22000003, 1.62 , 1.62 ]), 'do_dummy_2D_data_aug': False, 'pool_op_kernel_sizes': [[2, 2, 2], [2, 2, 2], [2, 2, 2], [2, 2, 2], [1, 2, 2]], 'conv_kernel_sizes': [[3, 3, 3], [3, 3, 3], [3, 3, 3], [3, 3, 3], [3, 3, 3], [3, 3, 3]]} ------------------------------------------------------------------------------------------- batch_size默认为2,如果GPU内存足够,可以设置大一点 patch_size默认为[96,160,160]参考官方教程中的edit_plans_files.md文件

-

准备工作做好之后就可以开始训练了

7.模型训练

nnUNet_train CONFIGURATION TRAINER_CLASS_NAME TASK_NAME_OR_ID FOLD # 格式

nnUNet_train 3d_fullres nnUNetTrainerV2 40 2

训练开始后,训练日志和训练结果记录在nnUNet_trained_models/nnUNet/3d_fullres/Task040_KiTS文件夹下

Task040_KiTS/

|-- nnUNetTrainerV2__nnUNetPlansv2.1

| |-- fold_2

| | |-- debug.json

| | |-- model_best.model

| | |-- model_best.model.pkl

| | |-- model_latest.model

| | |-- model_latest.model.pkl

| | |-- progress.png

| | `-- training_log_2022_4_27_09_06_59.txt

| `-- plans.pkl

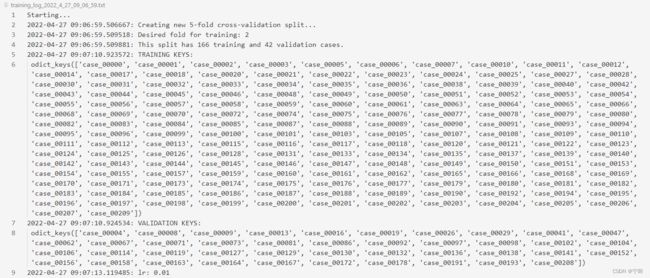

training_log_2022_4_27_09_06_59.txt

batch_size=12,patch_size=(80,160,160)时,GPU使用情况

部分训练结果

2022-04-28 00:40:45.481503:

epoch: 59

2022-04-28 00:55:54.086779: train loss : -0.7443

2022-04-28 00:56:32.347733: validation loss: -0.7275

2022-04-28 00:56:32.349458: Average global foreground Dice: [0.9517, 0.9154]

2022-04-28 00:56:32.349963: (interpret this as an estimate for the Dice of the different classes. This is not exact.)

2022-04-28 00:56:33.177943: lr: 0.009458

2022-04-28 00:56:33.213631: saving checkpoint...

2022-04-28 00:56:33.665359: done, saving took 0.49 seconds

2022-04-28 00:56:33.680583: This epoch took 948.198634 s

2022-04-28 00:56:33.681018:

epoch: 60

2022-04-28 01:11:42.852178: train loss : -0.7372

2022-04-28 01:12:21.286995: validation loss: -0.7232

2022-04-28 01:12:21.288569: Average global foreground Dice: [0.9528, 0.8968]

2022-04-28 01:12:21.289036: (interpret this as an estimate for the Dice of the different classes. This is not exact.)

2022-04-28 01:12:22.120225: lr: 0.009449

2022-04-28 01:12:22.120799: This epoch took 948.439400 s

2022-04-28 01:12:22.121174:

epoch: 61

2022-04-28 01:27:31.129683: train loss : -0.7454

2022-04-28 01:28:09.405324: validation loss: -0.7357

2022-04-28 01:28:09.406955: Average global foreground Dice: [0.9532, 0.9338]

2022-04-28 01:28:09.407326: (interpret this as an estimate for the Dice of the different classes. This is not exact.)

2022-04-28 01:28:10.347231: lr: 0.00944

2022-04-28 01:28:10.373067: saving checkpoint...

2022-04-28 01:28:10.841225: done, saving took 0.49 seconds

2022-04-28 01:28:10.855461: This epoch took 948.733870 s

......

训练结果出乎意料的好,没想到我把数据集重采样之后效果提高了这么多

nnunet包容易上手,但调参不方便,也不能修改网络结构导入自己的模型,接下来想在实验室的服务器上使用nnunet代码,方便以后阅读源码、调参以及导入自己的模型。

生物医学工程专业,研一小硕一枚,接触深度学习和图像分割半年。人生第一次写博客,记录一下自己的学习过程,若有错误,请多指教,与君共勉~~