Why convolution layers are built without a bias

We often see this in model definitions:

nn.Conv2d(1, 3, 3, 2, bias=False)

That is, the convolution is created without a bias. The reason is that it is followed by:

nn.BatchNorm2d(3)

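Question: why is the bias unnecessary once BN follows the convolution?

Because the bias cancels out inside the BN computation, and BN's own x*γ+β already contains β, which plays the role of a bias.

Let's look at the BN formula: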

tmp = (x - mean) / sqrt(var + eps)

result = tmp * gamma + beta
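The two lines above can be checked against `nn.BatchNorm2d` directly. The following is a minimal sketch, assuming the layer is in training mode (the default for a freshly built module) so that `mean` and `var` are per-channel batch statistics with the biased variance. The input shape and seed are arbitrary choices for this demo.

```python
import torch
from torch import nn

torch.manual_seed(0)

x = torch.rand(2, 3, 4, 4)  # (N, C, H, W), arbitrary demo input
bn = nn.BatchNorm2d(3)      # training mode by default; gamma=1, beta=0 at init

# Per-channel statistics over the batch and spatial dimensions.
mean = x.mean(dim=(0, 2, 3), keepdim=True)
var = x.var(dim=(0, 2, 3), unbiased=False, keepdim=True)

# The two formula lines from the text, broadcast over channels.
tmp = (x - mean) / torch.sqrt(var + bn.eps)
gamma = bn.weight.view(1, -1, 1, 1)
beta = bn.bias.view(1, -1, 1, 1)
result = tmp * gamma + beta

# Compare the hand-written formula with the library layer.
matches = torch.allclose(result, bn(x), atol=1e-5)
print(matches)
```

If the hand-written formula agrees with the layer, `matches` is `True`.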

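In the BN formula, tmp is all we need to look at. Suppose the convolution has a bias:

Numerator: the bias is a single constant per output channel and BN computes mean per channel, so x - mean becomes (x + bias) - (mean + bias), which is exactly what it was without the bias.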

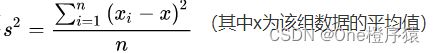

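Denominator: look at the formula for var, the mean of the squared deviations (Xi - X)^2.

The Xi - X term here behaves just like x - mean in the numerator, so the bias cancels out again.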
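The cancellation can also be written out explicitly. The notation here is introduced only for illustration: $x_i$ are the bias-free convolution outputs of one channel, $b$ is that channel's bias, and $y_i = x_i + b$.

```latex
\begin{aligned}
\mu_y &= \frac{1}{n}\sum_{i=1}^{n}(x_i + b) = \mu_x + b,\\
\sigma_y^2 &= \frac{1}{n}\sum_{i=1}^{n}\bigl((x_i + b) - (\mu_x + b)\bigr)^2
            = \frac{1}{n}\sum_{i=1}^{n}(x_i - \mu_x)^2 = \sigma_x^2,\\
\frac{y_i - \mu_y}{\sqrt{\sigma_y^2 + \epsilon}}
      &= \frac{x_i - \mu_x}{\sqrt{\sigma_x^2 + \epsilon}}.
\end{aligned}
```

So tmp is identical with and without $b$.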

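Conclusion: the bias has no effect on either the numerator or the denominator, and since BN already has beta, the convolution bias is entirely unnecessary.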
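Before involving any real layers, the arithmetic alone can be checked on a handful of numbers. This is a small sketch in plain Python; `batch_norm_1d` and the value of `bias` are hypothetical helpers made up for this demo, not part of PyTorch.

```python
import math


def batch_norm_1d(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize a list of floats with their own mean and biased variance."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    return [(v - mean) / math.sqrt(var + eps) * gamma + beta for v in values]


x = [0.4921, 0.9009, 0.3182, 0.4952]
bias = 0.7  # hypothetical conv bias, chosen only for this demo

without_bias = batch_norm_1d(x)
with_bias = batch_norm_1d([v + bias for v in x])

# Largest element-wise gap between the two normalized lists.
max_diff = max(abs(a - b) for a, b in zip(without_bias, with_bias))
print(max_diff)
```

The printed gap should be at or near zero, limited only by floating-point rounding.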

Talk is cheap, so let's verify this ourselves with PyTorch.

First, with the convolution set to bias=True:

import torch
from torch import nn

seed = 996
torch.manual_seed(seed)
torch.cuda.manual_seed(seed)
torch.cuda.manual_seed_all(seed)  # if you are using multi-GPU.


class bn_test(nn.Module):
    def __init__(self):
        super().__init__()
        self.con = nn.Conv2d(1, 3, 3, 2, bias=True)
        # self.con = nn.Conv2d(1, 3, 3, 2, bias=False)
        self.bn = nn.BatchNorm2d(3)
        self.ln = nn.LayerNorm(3)  # not used in forward
        self.bn_weight = None
        self.bn_bias = None
        self.con_ret = None

    def weights_init(self):
        # Set BN's gamma and beta to fixed values so both runs start identically.
        self.bn_weight = self.bn.weight.data.fill_(0.2)
        self.bn_bias = self.bn.bias.data.fill_(0.2)

    def forward(self, x):
        n1 = self.con(x)
        self.con_ret = n1  # keep the Conv2d output for computing BN by hand later
        n2 = self.bn(n1)
        return n2


m = bn_test()
m.weights_init()

input_ = [[[[0.4921, 0.9009, 0.3182, 0.4952, 0.5094],
            [0.4669, 0.7450, 0.2349, 0.5456, 0.0777],
            [0.6964, 0.0136, 0.5799, 0.9792, 0.5952],
            [0.3436, 0.1260, 0.2499, 0.6607, 0.7675],
            [0.2015, 0.1603, 0.9990, 0.3167, 0.6987]]],
          [[[0.9373, 0.5039, 0.4647, 0.6227, 0.8541],
            [0.4705, 0.2498, 0.8934, 0.5444, 0.4341],
            [0.0048, 0.9343, 0.7926, 0.4487, 0.0061],
            [0.8925, 0.4320, 0.1741, 0.2669, 0.6039],
            [0.4823, 0.8444, 0.9143, 0.0682, 0.7055]]]]

data = torch.tensor(input_)
ret = m(data)  # BN output computed by torch

print(ret)

Now with the convolution set to bias=False:

import torch
from torch import nn

seed = 996
torch.manual_seed(seed)
torch.cuda.manual_seed(seed)
torch.cuda.manual_seed_all(seed)  # if you are using multi-GPU.


class bn_test(nn.Module):
    def __init__(self):
        super().__init__()
        # self.con = nn.Conv2d(1, 3, 3, 2, bias=True)
        self.con = nn.Conv2d(1, 3, 3, 2, bias=False)
        self.bn = nn.BatchNorm2d(3)
        self.ln = nn.LayerNorm(3)  # not used in forward
        self.bn_weight = None
        self.bn_bias = None
        self.con_ret = None

    def weights_init(self):
        # Set BN's gamma and beta to fixed values so both runs start identically.
        self.bn_weight = self.bn.weight.data.fill_(0.2)
        self.bn_bias = self.bn.bias.data.fill_(0.2)

    def forward(self, x):
        n1 = self.con(x)
        self.con_ret = n1  # keep the Conv2d output for computing BN by hand later
        n2 = self.bn(n1)
        return n2


m = bn_test()
m.weights_init()

input_ = [[[[0.4921, 0.9009, 0.3182, 0.4952, 0.5094],
            [0.4669, 0.7450, 0.2349, 0.5456, 0.0777],
            [0.6964, 0.0136, 0.5799, 0.9792, 0.5952],
            [0.3436, 0.1260, 0.2499, 0.6607, 0.7675],
            [0.2015, 0.1603, 0.9990, 0.3167, 0.6987]]],
          [[[0.9373, 0.5039, 0.4647, 0.6227, 0.8541],
            [0.4705, 0.2498, 0.8934, 0.5444, 0.4341],
            [0.0048, 0.9343, 0.7926, 0.4487, 0.0061],
            [0.8925, 0.4320, 0.1741, 0.2669, 0.6039],
            [0.4823, 0.8444, 0.9143, 0.0682, 0.7055]]]]

data = torch.tensor(input_)
ret = m(data)  # BN output computed by torch

print(ret)

As you can see, both runs produce the same result:

tensor([[[[ 0.4979,  0.1907],
          [ 0.0466,  0.4834]],

         [[ 0.4861,  0.1936],
          [-0.0964,  0.4781]],

         [[ 0.4720,  0.0446],
          [ 0.1254,  0.2910]]],


        [[[-0.1349,  0.0844],
          [ 0.2358,  0.1962]],

         [[-0.0635,  0.2445],
          [ 0.1627,  0.1948]],

         [[ 0.1201,  0.1994],
          [-0.1426,  0.4901]]]], grad_fn=
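Rather than comparing two printed tensors by eye, the same experiment can be run in one script and checked numerically. The sketch below is not the script above: it copies the kernel from one convolution to the other so that the bias is the only difference, feeds both through one BN layer in training mode, and uses a random input. The variable names and the tolerance are choices made for this demo.

```python
import torch
from torch import nn

torch.manual_seed(996)

conv_with_bias = nn.Conv2d(1, 3, 3, 2, bias=True)
conv_no_bias = nn.Conv2d(1, 3, 3, 2, bias=False)

# Share the kernel so the bias is the only difference between the two layers.
with torch.no_grad():
    conv_no_bias.weight.copy_(conv_with_bias.weight)

bn = nn.BatchNorm2d(3)  # freshly built modules are in training mode
nn.init.constant_(bn.weight, 0.2)  # gamma, as in the experiment above
nn.init.constant_(bn.bias, 0.2)    # beta, as in the experiment above

x = torch.rand(2, 1, 5, 5)  # same shape as the input used above

out_with_bias = bn(conv_with_bias(x))
out_no_bias = bn(conv_no_bias(x))

# The raw conv outputs differ by the bias, but the BN outputs should not.
conv_outputs_differ = not torch.allclose(conv_with_bias(x), conv_no_bias(x))
same = torch.allclose(out_with_bias, out_no_bias, atol=1e-5)
print(conv_outputs_differ, same)
```

Here `same` being `True` while `conv_outputs_differ` is also `True` shows that the bias changes the convolution output but not the BN output.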