Kubernetes Velero: Tips and Tricks

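Have you ever run into situations like these while operating a Kubernetes cluster?

A newcomer deletes a namespace with one click. Every resource in it is lost, and everything has to be redeployed piece by piece.

You build a new cluster and want its environment to match the old one as closely as possible. The only option is to export YAML files from the old cluster and frantically `kubectl apply` them in the new one.

Each of those maddening moments is followed by hours of tedious grunt work.

Many open-source tools for backing up cluster resource objects already exist. Putting them to work gets more done with less effort, so you no longer have to pull miserable overtime.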

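Comparing cluster backup approaches

etcd backup

Backing up etcd backs up the whole Kubernetes cluster, but such a backup is global. It can bring the cluster back to its state at a given moment, but it cannot restore one specific resource object. Backup and restore are usually done with snapshots.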

```bash
#!/usr/bin/env bash
# Backup: save a dated etcd v3 snapshot, then prune old ones
date

CACERT="/opt/kubernetes/ssl/ca.pem"
CERT="/opt/kubernetes/ssl/server.pem"
KEY="/opt/kubernetes/ssl/server-key.pem"
ENDPOINTS="192.168.1.36:2379"

ETCDCTL_API=3 etcdctl \
  --cacert="${CACERT}" --cert="${CERT}" --key="${KEY}" \
  --endpoints="${ENDPOINTS}" \
  snapshot save "/data/etcd_backup_dir/etcd-snapshot-$(date +%Y%m%d).db"

# Keep 30 days of backups (quote the pattern so the shell does not expand it)
find /data/etcd_backup_dir/ -name '*.db' -mtime +30 -exec rm -f {} \;
```

```bash
# Restore
ETCDCTL_API=3 etcdctl snapshot restore /data/etcd_backup_dir/etcd-snapshot-20191222.db \
  --name etcd-0 \
  --initial-cluster "etcd-0=https://192.168.1.36:2380,etcd-1=https://192.168.1.37:2380,etcd-2=https://192.168.1.38:2380" \
  --initial-cluster-token etcd-cluster \
  --initial-advertise-peer-urls https://192.168.1.36:2380 \
  --data-dir=/var/lib/etcd/default.etcd
```
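For a multi-member cluster, `--initial-cluster` must list every member as `name=peerURL`, separated by commas. Each member runs the restore with the same list but with its own `--name` and `--initial-advertise-peer-urls`. A minimal Go sketch of assembling those arguments; the `member` type and `initialCluster` function are hypothetical, and the names and addresses are the ones from the command above.

```go
package main

import (
	"fmt"
	"strings"
)

// member is a hypothetical description of one etcd member.
type member struct {
	Name    string
	PeerURL string
}

// initialCluster joins members into the "name=peerURL,..." format
// accepted by `etcdctl snapshot restore --initial-cluster`.
func initialCluster(members []member) string {
	parts := make([]string, 0, len(members))
	for _, m := range members {
		parts = append(parts, m.Name+"="+m.PeerURL)
	}
	return strings.Join(parts, ",")
}

func main() {
	members := []member{
		{"etcd-0", "https://192.168.1.36:2380"},
		{"etcd-1", "https://192.168.1.37:2380"},
		{"etcd-2", "https://192.168.1.38:2380"},
	}
	// Every member gets the same --initial-cluster value, but its own
	// --name and --initial-advertise-peer-urls.
	for _, m := range members {
		fmt.Printf("--name %s --initial-advertise-peer-urls %s --initial-cluster %q\n",
			m.Name, m.PeerURL, initialCluster(members))
	}
}
```

Printing the flags rather than running `etcdctl` keeps the sketch free of side effects; each printed line corresponds to the restore run on one host.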

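Resource object backup

Finer-grained backups, down to individual resource objects, are very useful when a namespace or deployment has been deleted by mistake, and when migrating a cluster.

Several tools now provide this capability, for example Velero, PX-Backup, and Kasten.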

Velero:

Velero is an open source tool to safely backup and restore, perform disaster recovery, and migrate Kubernetes cluster resources and persistent volumes.

PX-Backup:

Built from the ground up for Kubernetes, PX-Backup delivers enterprise-grade application and data protection with fast recovery at the click of a button

Kasten:

Purpose-built for Kubernetes, Kasten K10 provides enterprise operations teams an easy-to-use, scalable, and secure system for backup/restore, disaster recovery, and mobility of Kubernetes applications.

Velero

Velero lets you:

- Take backups of your cluster and restore in case of loss.

- Migrate cluster resources to other clusters.

- Replicate your production cluster to development and testing clusters.

The official description lists these three features; in short, Velero does backup, restore, and migration.

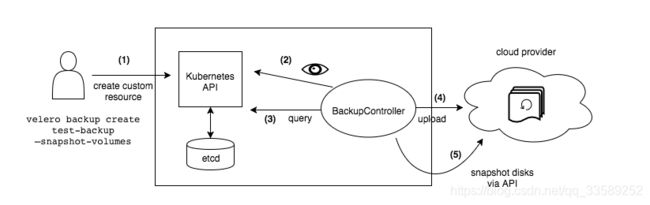

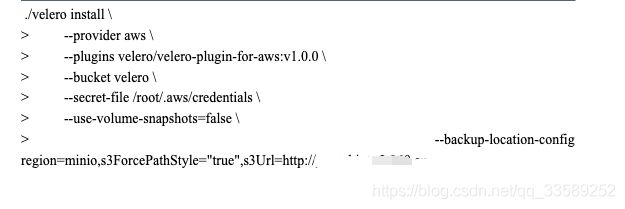

安装

可以通过命令行的方式安装,helm,yaml很多方法,举个例子:

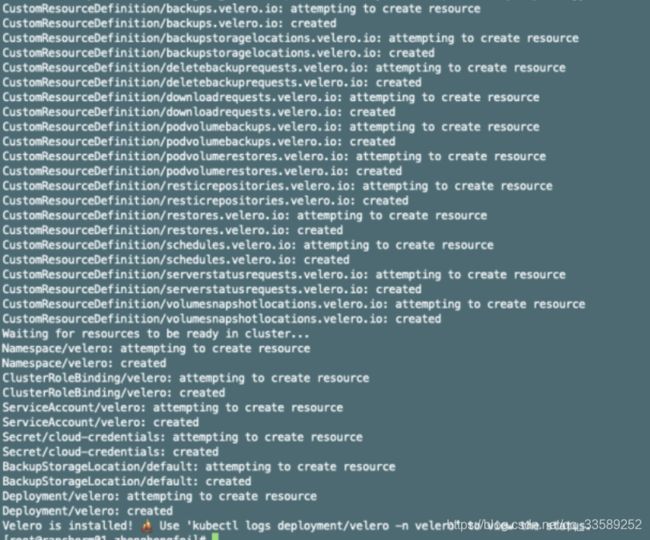

可以看到创建了很多crd,并最终在velero namespace下将应用跑起来了。其实从crd的命名上就可以看出他大概有哪些用途了。

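Scheduled backups

For operators, guaranteeing the stability of the cluster they provide is essential, and that calls for scheduled backups.

From the command line you can create a schedule that specifies which namespaces to back up, how long to keep each backup, and how often to run: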

```
Examples:
  # Create a backup every 6 hours
  velero create schedule NAME --schedule="0 */6 * * *"

  # Create a backup every 6 hours with the @every notation
  velero create schedule NAME --schedule="@every 6h"

  # Create a daily backup of the web namespace
  velero create schedule NAME --schedule="@every 24h" --include-namespaces web

  # Create a weekly backup, each living for 90 days (2160 hours)
  velero create schedule NAME --schedule="@every 168h" --ttl 2160h0m0s
```

```
velero create schedule 360cloud --schedule="@every 24h" --ttl 2160h0m0s
Schedule "360cloud" created successfully.
[root@xxxxx ~]# kubectl get schedules --all-namespaces
NAMESPACE   NAME       AGE
velero      360cloud   40s
[root@xxxxx ~]# kubectl get schedules -n velero 360cloud -o yaml
apiVersion: velero.io/v1
kind: Schedule
metadata:
  generation: 3
  name: 360cloud
  namespace: velero
  resourceVersion: "18164238"
  selfLink: /apis/velero.io/v1/namespaces/velero/schedules/360cloud
  uid: 7c04af34-1529-4b48-a3d1-d2f5e98de328
spec:
  schedule: '@every 24h'
  template:
    hooks: {}
    includedNamespaces:
    - '*'
    ttl: 2160h0m0s
status:
  lastBackup: "2021-03-07T08:18:49Z"
  phase: Enabled
```

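Backup for cluster migration

The resources we want to migrate may not be covered by a scheduled backup, or there may be a schedule but we want the latest data. In either case, simply take a one-off backup and use it for the migration.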

```
velero backup create test01 --include-namespaces default
Backup request "test01" submitted successfully.
Run `velero backup describe test01` or `velero backup logs test01` for more details.
[root@xxxxx ~]# velero backup describe test01
Name:         test01
Namespace:    velero
Labels:       velero.io/storage-location=default
Annotations:  velero.io/source-cluster-k8s-gitversion=v1.19.7
              velero.io/source-cluster-k8s-major-version=1
              velero.io/source-cluster-k8s-minor-version=19

Phase:  InProgress

Errors:    0
Warnings:  0

Namespaces:
  Included:  default
  Excluded:  <none>

Resources:
  Included:        *
  Excluded:        <none>
  Cluster-scoped:  auto

Label selector:  <none>

Storage Location:  default

Velero-Native Snapshot PVs:  auto

TTL:  720h0m0s

Hooks:  <none>

Backup Format Version:  1.1.0

Started:    2021-03-07 16:44:52 +0800 CST
Completed:  <n/a>

Expiration:  2021-04-06 16:44:52 +0800 CST

Velero-Native Snapshots: <none included>
```

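After the backup, `velero backup describe` and `velero backup logs` show more detailed information.

Running `velero restore create` in another cluster then restores the data there, provided that cluster's Velero is configured with the same backup storage location.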

```
[root@xxxxx ~]# velero restore create --from-backup test01
Restore request "test01-20210307164809" submitted successfully.
Run `velero restore describe test01-20210307164809` or `velero restore logs test01-20210307164809` for more details.
[root@xxxxx ~]# kubectl get pod
NAME                     READY   STATUS              RESTARTS   AGE
nginx-6799fc88d8-4bnfg   0/1     ContainerCreating   0          6s
nginx-6799fc88d8-cq82j   0/1     ContainerCreating   0          6s
nginx-6799fc88d8-f6qsx   0/1     ContainerCreating   0          6s
nginx-6799fc88d8-gq2xt   0/1     ContainerCreating   0          6s
nginx-6799fc88d8-j5fc7   0/1     ContainerCreating   0          6s
nginx-6799fc88d8-kvvx6   0/1     ContainerCreating   0          5s
nginx-6799fc88d8-pccc4   0/1     ContainerCreating   0          5s
nginx-6799fc88d8-q2fnt   0/1     ContainerCreating   0          4s
nginx-6799fc88d8-r9dqn   0/1     ContainerCreating   0          4s
nginx-6799fc88d8-zqv6v   0/1     ContainerCreating   0          4s
```

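PVC backup and migration

For Amazon EBS Volumes, Azure Managed Disks, and Google Persistent Disks, Velero can take snapshots of PVs as part of a backup.

Other storage types, such as the Ceph RBD volume used below, can be handled by volume snapshotter plugins or, as here, by Velero's restic integration, which is enabled at install time: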

```bash
# other install flags (--provider, --bucket, --plugins, ...) omitted
velero install --use-restic
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    backup.velero.io/backup-volumes: mypvc
  name: rbd-test
spec:
  containers:
  - name: web-server
    image: nginx
    volumeMounts:
    - name: mypvc
      mountPath: /var/lib/www/html
  volumes:
  - name: mypvc
    persistentVolumeClaim:
      claimName: rbd-pvc-zhf
      readOnly: false
```

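Pod annotations choose which of a pod's volumes are backed up, in either an opt-in or an opt-out style. The pod above uses opt-in: `backup.velero.io/backup-volumes` lists the names of the volumes to back up, separated by commas. In opt-out mode (enabled with `--default-volumes-to-restic`, available since Velero 1.5), every volume is backed up except those listed in `backup.velero.io/backup-volumes-excludes`.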
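A simplified model of the two selection modes, written as a Go sketch. The annotation keys are Velero's; everything else (`volumesToBackup`, `splitList`, and the `defaultAll` switch standing in for `--default-volumes-to-restic`) is hypothetical. Velero's real opt-out logic also skips some volume types, such as hostPath, Secret, and ConfigMap volumes, which this sketch ignores.

```go
package main

import (
	"fmt"
	"strings"
)

const (
	optInAnnotation  = "backup.velero.io/backup-volumes"
	optOutAnnotation = "backup.velero.io/backup-volumes-excludes"
)

// splitList parses a comma-separated annotation value, ignoring blanks.
func splitList(v string) map[string]bool {
	set := map[string]bool{}
	for _, s := range strings.Split(v, ",") {
		if s = strings.TrimSpace(s); s != "" {
			set[s] = true
		}
	}
	return set
}

// volumesToBackup: with opt-in (defaultAll=false) only volumes named in the
// opt-in annotation are selected; with opt-out (defaultAll=true) every volume
// is selected except those named in the exclude annotation.
func volumesToBackup(annotations map[string]string, volumes []string, defaultAll bool) []string {
	var out []string
	if defaultAll {
		excluded := splitList(annotations[optOutAnnotation])
		for _, v := range volumes {
			if !excluded[v] {
				out = append(out, v)
			}
		}
		return out
	}
	included := splitList(annotations[optInAnnotation])
	for _, v := range volumes {
		if included[v] {
			out = append(out, v)
		}
	}
	return out
}

func main() {
	ann := map[string]string{optInAnnotation: "mypvc"}
	fmt.Println(volumesToBackup(ann, []string{"mypvc", "cache"}, false)) // [mypvc]
}
```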

```
velero backup create testpvc05 --snapshot-volumes=true --include-namespaces default
Backup request "testpvc05" submitted successfully.
Run `velero backup describe testpvc05` or `velero backup logs testpvc05` for more details.
[root@xxxx ceph]# velero backup describe testpvc05
Name:         testpvc05
Namespace:    velero
Labels:       velero.io/storage-location=default
Annotations:  velero.io/source-cluster-k8s-gitversion=v1.19.7
              velero.io/source-cluster-k8s-major-version=1
              velero.io/source-cluster-k8s-minor-version=19

Phase:  Completed

Errors:    0
Warnings:  0

Namespaces:
  Included:  default
  Excluded:  <none>

Resources:
  Included:        *
  Excluded:        <none>
  Cluster-scoped:  auto

Label selector:  <none>

Storage Location:  default

Velero-Native Snapshot PVs:  true

TTL:  720h0m0s

Hooks:  <none>

Backup Format Version:  1.1.0

Started:    2021-03-10 15:11:26 +0800 CST
Completed:  2021-03-10 15:11:36 +0800 CST

Expiration:  2021-04-09 15:11:26 +0800 CST

Total items to be backed up:  92
Items backed up:              92

Velero-Native Snapshots: <none included>

Restic Backups (specify --details for more information):
  Completed:  1
```

Delete the pod and the PVC:

```
[root@xxxxxx ceph]# kubectl delete pod rbd-test
pod "rbd-test" deleted
[root@xxxxxx ceph]# kubectl delete pvc rbd-pvc-zhf
persistentvolumeclaim "rbd-pvc-zhf" deleted
```

Restore the resource objects:

```
[root@xxxxx ceph]# velero restore create testpvc05 --restore-volumes=true --from-backup testpvc05
Restore request "testpvc05" submitted successfully.
Run `velero restore describe testpvc05` or `velero restore logs testpvc05` for more details.
[root@xxxxxx ceph]# kubectl get pod
NAME                     READY   STATUS     RESTARTS   AGE
nginx-6799fc88d8-4bnfg   1/1     Running    0          2d22h
rbd-test                 0/1     Init:0/1   0          6s
```

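The restored pod first sits in `Init:0/1` because Velero injects an init container that waits until restic has restored the volume data. Once the pod is running, the data is back: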

```
[root@xxxxxx ceph]# kubectl exec rbd-test -- ls -l /var/lib/www/html
total 20
drwx------ 2 root root 16384 Mar 10 06:31 lost+found
-rw-r--r-- 1 root root    13 Mar 10 07:11 zheng.txt
[root@xxxxxx ceph]# kubectl exec rbd-test -- cat /var/lib/www/html/zheng.txt
zhenghongfei
```

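Hooks

Velero can run commands inside a container of a pod during a backup: a pre hook before the pod is backed up and a post hook afterwards.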

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: nginx-example
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
      annotations:
        pre.hook.backup.velero.io/container: fsfreeze
        pre.hook.backup.velero.io/command: '["/sbin/fsfreeze", "--freeze", "/var/log/nginx"]'
        post.hook.backup.velero.io/container: fsfreeze
        post.hook.backup.velero.io/command: '["/sbin/fsfreeze", "--unfreeze", "/var/log/nginx"]'
    # pod spec omitted: it must include a container named "fsfreeze"
    # that mounts /var/log/nginx
```

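This example uses pre and post hooks to freeze the file system. Freezing it helps ensure that all pending disk I/O has completed before the snapshot is taken.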
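The command annotations hold a JSON array, so each element becomes one argument and no shell is involved; pipes or redirects would need an explicit `/bin/sh -c`. The Go sketch below models that parsing under the assumption that a value starting with `[` is decoded as a JSON array and anything else is run as a single command. `parseHookCommand` is hypothetical, not Velero's own function.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// parseHookCommand turns a hook command annotation into an argv slice.
// A value that looks like a JSON array is decoded as one; anything else
// (or an array that fails to decode) is treated as a single command.
func parseHookCommand(value string) []string {
	if strings.HasPrefix(strings.TrimSpace(value), "[") {
		var argv []string
		if err := json.Unmarshal([]byte(value), &argv); err == nil {
			return argv
		}
	}
	return []string{value}
}

func main() {
	argv := parseHookCommand(`["/sbin/fsfreeze", "--freeze", "/var/log/nginx"]`)
	fmt.Println(len(argv), argv) // 3 [/sbin/fsfreeze --freeze /var/log/nginx]
}
```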

Hooks can also be used this way to back up MySQL or other data, but this is only advisable for small amounts of data, with backup and restore done per pod.

Exploring how backup is implemented

First, find the resource objects that need to be backed up:

```go
collector := &itemCollector{
	log:                   log,
	backupRequest:         backupRequest,
	discoveryHelper:       kb.discoveryHelper,
	dynamicFactory:        kb.dynamicFactory,
	cohabitatingResources: cohabitatingResources(),
	dir:                   tempDir,
}

items := collector.getAllItems()
```
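Which namespaces the collector walks is decided by the backup's include and exclude lists: `includedNamespaces: ['*']` in the schedule shown earlier covers every namespace, while `--include-namespaces default` restricts `test01` to one. The Go sketch below models that matching under two assumptions: an exclusion always wins, and an empty include list or `*` matches everything. The `includesExcludes` type is hypothetical, loosely modeled on the idea rather than copied from Velero.

```go
package main

import "fmt"

// includesExcludes is a hypothetical model of a backup's include/exclude lists.
type includesExcludes struct {
	includes map[string]bool
	excludes map[string]bool
}

func newIncludesExcludes(includes, excludes []string) includesExcludes {
	ie := includesExcludes{includes: map[string]bool{}, excludes: map[string]bool{}}
	for _, s := range includes {
		ie.includes[s] = true
	}
	for _, s := range excludes {
		ie.excludes[s] = true
	}
	return ie
}

// shouldInclude: exclusions win; an empty include list or "*" matches everything.
func (ie includesExcludes) shouldInclude(name string) bool {
	if ie.excludes["*"] || ie.excludes[name] {
		return false
	}
	return len(ie.includes) == 0 || ie.includes["*"] || ie.includes[name]
}

func main() {
	all := newIncludesExcludes([]string{"*"}, nil)               // like schedule 360cloud
	onlyDefault := newIncludesExcludes([]string{"default"}, nil) // like backup test01
	for _, ns := range []string{"default", "kube-system"} {
		fmt.Println(ns, all.shouldInclude(ns), onlyDefault.shouldInclude(ns))
	}
	// default true true
	// kube-system true false
}
```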

Each collected item is then backed up by `backupItem`:

```go
func (kb *kubernetesBackupper) backupItem(log logrus.FieldLogger, gr schema.GroupResource, itemBackupper *itemBackupper, unstructured *unstructured.Unstructured, preferredGVR schema.GroupVersionResource) bool {
	backedUpItem, err := itemBackupper.backupItem(log, unstructured, gr, preferredGVR)
	if aggregate, ok := err.(kubeerrs.Aggregate); ok {
		log.WithField("name", unstructured.GetName()).Infof("%d errors encountered backup up item", len(aggregate.Errors()))
		// log each error separately so we get error location info in the log, and an
		// accurate count of errors
		for _, err = range aggregate.Errors() {
			log.WithError(err).WithField("name", unstructured.GetName()).Error("Error backing up item")
		}

		return false
	}
	if err != nil {
		log.WithError(err).WithField("name", unstructured.GetName()).Error("Error backing up item")
		return false
	}
	return backedUpItem
}
```

Additional items (related resources that an item pulls into the backup) are fetched through a dynamic client:

```go
client, err := ib.dynamicFactory.ClientForGroupVersionResource(gvr.GroupVersion(), resource, additionalItem.Namespace)
if err != nil {
	return nil, err
}

item, err := client.Get(additionalItem.Name, metav1.GetOptions{})
```

Finally, each item is written into the backup tarball as a JSON file:

```go
log.Debugf("Resource %s/%s, version= %s, preferredVersion=%s", groupResource.String(), name, version, preferredVersion)
if version == preferredVersion {
	if namespace != "" {
		filePath = filepath.Join(velerov1api.ResourcesDir, groupResource.String(), velerov1api.NamespaceScopedDir, namespace, name+".json")
	} else {
		filePath = filepath.Join(velerov1api.ResourcesDir, groupResource.String(), velerov1api.ClusterScopedDir, name+".json")
	}

	hdr = &tar.Header{
		Name:     filePath,
		Size:     int64(len(itemBytes)),
		Typeflag: tar.TypeReg,
		Mode:     0755,
		ModTime:  time.Now(),
	}

	if err := ib.tarWriter.WriteHeader(hdr); err != nil {
		return false, errors.WithStack(err)
	}

	if _, err := ib.tarWriter.Write(itemBytes); err != nil {
		return false, errors.WithStack(err)
	}
}
```

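Other backup tools

PX-Backup is a paid product; if you are willing to pay, it offers more capabilities.

Kanister focuses more on backing up and restoring application data, such as etcd snapshots and MongoDB.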

References:

https://github.com/vmware-tanzu/velero

https://portworx.com/

https://www.kasten.io/

https://github.com/kanisterio/kanister

https://duyanghao.github.io/kubernetes-ha-and-bur/

https://blog.kubernauts.io/backup-and-restore-of-kubernetes-applications-using-heptios-velero-with-restic-and-rook-ceph-as-2e8df15b1487